Deploying microservices on k8s

I. Install the NFS service

Resources are limited, so the VM that hosts the master node also acts as the NFS server.

1. Check whether NFS is installed

rpm -qa nfs-utils rpcbind

If it is not installed, install it:

On the NFS server, install two packages: yum install -y nfs-utils rpcbind

On the NFS client machines (here, all worker nodes), install: yum install -y nfs-utils

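2. On the NFS server, create the shared directory /data/nfs and one data directory for each MySQL database. You can choose other locations if you like.

nfs: the NFS shared directory

auth_center_mysql: data files of the auth center MySQL

user_center_mysql: data files of the user center MySQL

log_center_mysql: data files of the log center MySQL

nacos_mysql: data files of the Nacos MySQL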

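3. Edit /etc/exports (vim /etc/exports) and add the entries below. Subdirectories are not shared through a parent export (nohide is not supported), so each directory is exported on its own line.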

/data/nfs *(insecure,rw,async,no_root_squash)

/data/auth_center_mysql *(insecure,rw,async,no_root_squash)

/data/user_center_mysql *(insecure,rw,async,no_root_squash)

/data/log_center_mysql *(insecure,rw,async,no_root_squash)

/data/nacos_mysql *(insecure,rw,async,no_root_squash)

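The options mean:

- *: any client host may mount the directory
- insecure: accept requests that come from client ports above 1024
- rw: read-write access
- async: the server may reply before changes reach disk. This is faster, but data can be lost if the server crashes.
- no_root_squash: root on a client keeps root privileges on the share instead of being mapped to an anonymous user

After editing the file, apply the configuration immediately with: exportfs -r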
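The five export entries above all follow one pattern, so they can be generated instead of typed by hand. Below is a minimal Python sketch. It assumes every share lives under /data and uses the same client spec and options as above. The helper name `exports_entries` is hypothetical.

```python
# Hypothetical helper that builds the /etc/exports entries listed above.
# Assumption: every share lives under /data and uses the same client spec and options.
OPTIONS = "insecure,rw,async,no_root_squash"
SHARES = ["nfs", "auth_center_mysql", "user_center_mysql",
          "log_center_mysql", "nacos_mysql"]


def exports_entries(shares, base="/data", clients="*", options=OPTIONS):
    """Return one /etc/exports line per share, e.g. '/data/nfs *(rw,...)'.

    There is deliberately no space between the client spec and '(':
    a space there makes exportfs treat the options as a separate,
    world-wide entry.
    """
    return [f"{base}/{name} {clients}({options})" for name in shares]


if __name__ == "__main__":
    # Review the output, append it to /etc/exports, then run: exportfs -r
    print("\n".join(exports_entries(SHARES)))
```

The missing space before the parenthesis is the detail most worth keeping if you adapt this to specific client IPs instead of `*`.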

4. Start the RPC service

service rpcbind start

5. List the ports that the NFS services registered with the RPC server

rpcinfo -p localhost

6. Start the NFS service

service nfs start (to restart: service nfs restart)

7. Check that the entries in /etc/exports were loaded

showmount -e localhost

8. Deploy NFS in k8s

1) Download the nacos-k8s repository

On the master node, run the following in the /root directory:

git clone https://github.com/nacos-group/nacos-k8s.git

2) When the download finishes, cd into nacos-k8s

3) Create the RBAC roles. Pay attention to the namespace, and choose one of the two options below.

- Create them in the default namespace

kubectl create -f deploy/nfs/rbac.yaml

If your current k8s namespace is not default, run the following script before deploying RBAC, instead of the command above:

NS=$(kubectl config get-contexts|grep -e "^\*" |awk '{print $5}')

NAMESPACE=${NS:-default}

sed -i'' "s/namespace:.*/namespace: $NAMESPACE/g" ./deploy/nfs/rbac.yaml

- Create them in a specific namespace (msp in my case)

Change every occurrence of namespace: default in rbac.yaml to namespace: msp.
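Both options come down to the same text substitution on rbac.yaml. Below is a minimal Python equivalent of the sed expression `s/namespace:.*/namespace: $NAMESPACE/g`, for cases where sed is not at hand. The function name `set_namespace` is hypothetical.

```python
import re


def set_namespace(manifest: str, namespace: str) -> str:
    """Python equivalent of: sed "s/namespace:.*/namespace: $NAMESPACE/g".

    '.' does not match newlines, so each match ends at the end of its line.
    Whatever precedes 'namespace:' on that line (indentation, '- ') is kept.
    A lambda is used so the replacement text is inserted literally.
    """
    return re.sub(r"namespace:.*", lambda _m: f"namespace: {namespace}", manifest)
```

Usage: `Path("deploy/nfs/rbac.yaml").write_text(set_namespace(Path("deploy/nfs/rbac.yaml").read_text(), "msp"))` (with `from pathlib import Path`). Like the sed command, it rewrites every matching line, so check the result before applying it. The comment line `# replace with namespace where provisioner is deployed` has no colon after "namespace", so it is left alone.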

Contents of the YAML file:

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: msp
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: msp
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

4) Create the ServiceAccount and deploy the NFS-Client Provisioner

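In the YAML file, set the NFS server address and shared directory, and set the namespace to msp. You can also change the nfs-client-provisioner image; the default image is used here. The NFS server address is 192.168.126.128 and the shared directory is /data/nfs.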

Contents of ./deploy/nfs/deployment.yaml:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: msp
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
  namespace: msp
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccount: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 192.168.126.128
            - name: NFS_PATH
              value: /data/nfs
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.126.128
            path: /data/nfs

Apply the YAML file:

kubectl create -f deploy/nfs/deployment.yaml

5) Create the NFS StorageClass

kubectl create -f deploy/nfs/class.yaml

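The YAML file, with a namespace specified. A StorageClass is cluster-scoped, so Kubernetes ignores the namespace field.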

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
  namespace: msp
provisioner: fuseim.pri/ifs
parameters:
  archiveOnDelete: "false"
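When a PVC that uses managed-nfs-storage is provisioned, the provisioner creates a subdirectory under NFS_PATH. It helps to know where a given pod's data will end up on the NFS server. Two naming conventions apply, and both are stated here as assumptions:

- nfs-client-provisioner names the directory `${namespace}-${pvcName}-${pvName}`.
- A StatefulSet names each claim `<template>-<statefulset>-<ordinal>`.

Together they match the directory /data/nfs/msp-data-nacos-0-pvc-094ae124-4f0c-4296-879e-b1b9bd1b37ca that appears later in these notes. The sketch below predicts that path. The function names are hypothetical.

```python
def statefulset_pvc_name(template: str, statefulset: str, ordinal: int) -> str:
    """PVC name derived from a volumeClaimTemplate: <template>-<statefulset>-<ordinal>."""
    return f"{template}-{statefulset}-{ordinal}"


def nfs_subdir(namespace: str, pvc_name: str, pv_name: str,
               root: str = "/data/nfs") -> str:
    """Directory the NFS client provisioner is assumed to create: ${ns}-${pvc}-${pv}."""
    return f"{root}/{namespace}-{pvc_name}-{pv_name}"
```

The PV name (`pvc-<uid>`) is generated at binding time, so the last part of the path is only known after the claim is bound (`kubectl get pvc -n msp` shows it in the VOLUME column).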

6) Verify that NFS was deployed successfully

kubectl get pod -l app=nfs-client-provisioner -n msp

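7) If something goes wrong, delete the resources using the same YAML files and create them again.

Run the following in the nacos-k8s directory: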

# RBAC roles

kubectl delete -f deploy/nfs/rbac.yaml

kubectl create -f deploy/nfs/rbac.yaml

# StorageClass

kubectl delete -f deploy/nfs/class.yaml

kubectl create -f deploy/nfs/class.yaml

# ServiceAccount and NFS client provisioner

kubectl delete -f deploy/nfs/deployment.yaml

kubectl create -f deploy/nfs/deployment.yaml

II. Deploy standalone MySQL

Use Kuboard to deploy four databases: db-auth-center, db-user-center, db-log-center and db-nacos.

1. Build the MySQL images (MySQL 5.7)

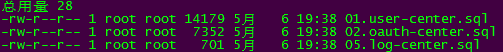

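1) Upload the SQL files to the master node

Create a sql directory and upload the scripts that initialize each database into it.

2) In the current directory, create a MySQL configuration file. It prevents garbled (mis-encoded) characters when using MySQL.

vim user-center-my.cnf, with the following contents: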

[mysqld]

init_connect='SET collation_connection = utf8_unicode_ci'

init_connect='SET NAMES utf8'

character-set-server=utf8

collation-server=utf8_unicode_ci

skip-character-set-client-handshake

3) Create the Dockerfile

vim dockerfile_user-center, with the following contents:

FROM mysql:5.7.26

ADD user-center-my.cnf /etc/mysql/conf.d/my.cnf

ADD 01.user-center.sql /docker-entrypoint-initdb.d/01.user-center.sql

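EXPOSE 3306

4) Build the image. The image is stored in the msp project of the private Harbor registry. Note that the command ends with a space and a period; the period is the build context.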

docker build -f dockerfile_user-center -t 192.168.126.131:80/msp/user-center-mysql:V1 .

Wait a moment; pulling the base mysql image can be slow.

Push the image to the registry:

docker push 192.168.126.131:80/msp/user-center-mysql:V1

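5) Repeat steps 2), 3) and 4) to build the auth-center-mysql and log-center-mysql images.

Log in to Harbor and check the images in the registry.

2. Deploy the MySQL databases with Kuboard

Note: each database Service gets its own port in this setup (3306, 3106, 3206, 3406). Strictly speaking, ports only need to be unique within a single Service, because every ClusterIP Service has its own virtual IP and DNS name. Distinct ports just make the services easier to tell apart.

1) Log in to Kuboard and open the cluster and namespace.

2) Under the common operations, choose Create Workload. The auth center is used as the example: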

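| Field | Value | Notes |
| --- | --- | --- |
| Workload type | StatefulSet | |
| Workload tier | Persistence layer | |
| Workload name | auth-center-mysql | |
| Description | Auth center database | |
| Replicas | 1 | Must be 1. With 2 replicas both pods mount the same NFS path, which fails with [ERROR] InnoDB: Unable to lock ./ibdata1 error: 11 |
| Container name | auth-center-mysql | |
| Image | msp/auth-center-mysql:V1 | Create the registry credential first and select it, then enter the image path and tag |
| Pull policy | IfNotPresent | |
| Environment variable | MYSQL_ROOT_PASSWORD=root | See the official mysql image |
| Container port | TCP/3306 | containerPort: the port MySQL listens on inside the pod. It need not match the EXPOSE in the Dockerfile. Applications connect through the Service port, not this one |
| Service name | db-auth-center | Defaults to the workload name and cannot be changed here; create a Service separately to choose another name |
| Service type | ClusterIP (in-cluster access) | |
| Service port | port: 3306, targetPort: 3306 | port is the port the Service exposes inside the cluster; targetPort is the port on the pods the Service selects |
| Volume name | auth-center-mysql-nfs | No underscores: use only lowercase letters, digits and hyphens |
| Volume type | NFS | |
| NFS server | 192.168.126.128 | |
| NFS path | /data/auth_center_mysql | |
| Access | Read-write | |
| Mount path in container | /var/lib/mysql | |
| Subpath within the volume | | |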

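Basic information, configured as shown in the figure:

Container (working container) settings, as shown in the figure:

Storage mount settings, as shown in the figure:

Advanced settings

Service/application routing: the name defaults to the workload name. To use a different Service name, create the Service separately and make its selector match the pods' labels.

Click Save, then Apply; the workload is created successfully.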

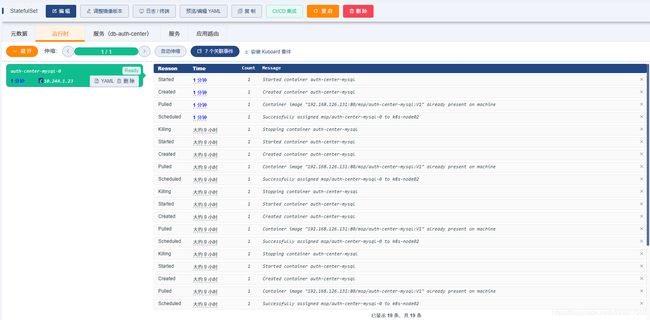

3) Verify that the database was created

Click Bash under the container to open a terminal, then run:

mysql -uroot -proot

> show databases;

> use oauth-center;

> show tables;

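For some reason, only in this container does mysql -uroot -proot fail with the output below. The first line is only a warning about passing a password on the command line. Logging in without a password works: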

mysql: [Warning] Using a password on the command line interface can be insecure.

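ERROR 1045 (28000): Access denied for user 'root'@'localhost' (using password: YES)

A possible cause: the official mysql image applies MYSQL_ROOT_PASSWORD only when it initializes an empty data directory. If /data/auth_center_mysql already held data from an earlier run, the existing root credentials are kept.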

4) Create the user center and log center workloads following the same steps. User center:

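| Field | Value | Notes |
| --- | --- | --- |
| Workload type | StatefulSet | |
| Workload tier | Persistence layer | |
| Workload name | user-center-mysql | |
| Description | User center database | |
| Replicas | 1 | Must be 1 |
| Container name | user-center-mysql | |
| Image | msp/user-center-mysql:V1 | |
| Pull policy | IfNotPresent | |
| Environment variable | MYSQL_ROOT_PASSWORD=root | See the official mysql image |
| Container port | TCP/3306 | containerPort: the port MySQL listens on inside the pod. It need not match the EXPOSE in the Dockerfile. Applications connect through the Service port, not this one |
| Service name | db-user-center | |
| Service type | ClusterIP (in-cluster access) | |
| Service port | port: 3106, targetPort: 3306 | port is the port the Service exposes inside the cluster; targetPort is the port on the pods the Service selects |
| Volume name | user-center-mysql-nfs | No underscores: use only lowercase letters, digits and hyphens |
| Volume type | NFS | |
| NFS server | 192.168.126.128 | |
| NFS path | /data/user_center_mysql | |
| Access | Read-write | |
| Mount path in container | /var/lib/mysql | |
| Subpath within the volume | | |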

Log center:

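| Field | Value | Notes |
| --- | --- | --- |
| Workload type | StatefulSet | |
| Workload tier | Persistence layer | |
| Workload name | log-center-mysql | |
| Description | Log center database | |
| Replicas | 1 | Must be 1 |
| Container name | log-center-mysql | |
| Image | msp/log-center-mysql:V1 | |
| Pull policy | IfNotPresent | |
| Environment variable | MYSQL_ROOT_PASSWORD=root | See the official mysql image |
| Container port | TCP/3306 | containerPort: the port MySQL listens on inside the pod. It need not match the EXPOSE in the Dockerfile. Applications connect through the Service port, not this one |
| Service name | db-log-center | |
| Service type | ClusterIP (in-cluster access) | |
| Service port | port: 3206, targetPort: 3306 | port is the port the Service exposes inside the cluster; targetPort is the port on the pods the Service selects |
| Volume name | log-center-mysql-nfs | No underscores: use only lowercase letters, digits and hyphens |
| Volume type | NFS | |
| NFS server | 192.168.126.128 | |
| NFS path | /data/log_center_mysql | |
| Access | Read-write | |
| Mount path in container | /var/lib/mysql | |
| Subpath within the volume | | |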
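Applications reach each database through its Service. The port in a JDBC URL is therefore the Service `port`, not the `targetPort`/containerPort (3306). Below is a minimal sketch built from the ports in the tables above. The dictionary and function names are hypothetical. The query string is the one the user center's datasource configuration uses later in these notes.

```python
# Service name -> (port, targetPort), as entered in Kuboard above.
DB_SERVICES = {
    "db-auth-center": (3306, 3306),
    "db-user-center": (3106, 3306),
    "db-log-center": (3206, 3306),
}

PARAMS = ("useUnicode=true&characterEncoding=utf-8"
          "&allowMultiQueries=true&useSSL=false")


def jdbc_url(service: str, database: str) -> str:
    """JDBC URL that targets the Service port; clients never use targetPort directly."""
    port, _target_port = DB_SERVICES[service]
    return f"jdbc:mysql://{service}:{port}/{database}?{PARAMS}"
```

For example, `jdbc_url("db-user-center", "user-center")` yields the same URL as the user center's datasource configuration. If that URL used 3306 instead, the connection would go to a port the Service does not expose.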

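5) Create the Nacos database workload

- Create the script files

Create nacos-mysql.sql (vim nacos-mysql.sql) with the contents of the official MySQL script:

https://github.com/alibaba/nacos/blob/develop/distribution/conf/nacos-mysql.sql

Create the configuration file nacos-mysql-my.cnf (vim nacos-mysql-my.cnf) with the following contents: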

[mysqld]

init_connect='SET collation_connection = utf8_unicode_ci'

init_connect='SET NAMES utf8'

character-set-server=utf8

collation-server=utf8_unicode_ci

skip-character-set-client-handshake

- Create the Dockerfile: vim dockerfile_nacos_mysql

FROM mysql:5.7.26

ADD nacos-mysql-my.cnf /etc/mysql/conf.d/my.cnf

ADD nacos-mysql.sql /docker-entrypoint-initdb.d/nacos-mysql.sql

EXPOSE 3306

- Build the image

docker build -f dockerfile_nacos_mysql -t 192.168.126.131:80/msp/nacos_mysql:5.7.26 .

- Push the image

docker push 192.168.126.131:80/msp/nacos_mysql:5.7.26

- Create the nacos-mysql workload in Kuboard

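| Field | Value | Notes |
| --- | --- | --- |
| Workload type | StatefulSet | |
| Workload tier | Persistence layer | |
| Workload name | nacos-mysql | |
| Description | Nacos database | |
| Replicas | 1 | Must be 1 |
| Container name | nacos-mysql | |
| Image | msp/nacos_mysql:5.7.26 | |
| Pull policy | IfNotPresent | |
| Environment variable: root password | MYSQL_ROOT_PASSWORD=root | See the official mysql image |
| Database created at startup | MYSQL_DATABASE=nacos_config | See the official mysql image |
| User created at startup | MYSQL_USER=nacos | See the official mysql image |
| Password of that user | MYSQL_PASSWORD=nacos | See the official mysql image |
| Container port | TCP/3306 | containerPort: the port of the MySQL container inside the pod; corresponds to the port declared by EXPOSE in the Dockerfile |
| Service type | ClusterIP (in-cluster access) | |
| Service port | port: 3406, targetPort: 3306 | port is the port the Service exposes inside the cluster; targetPort is the port on the pods the Service selects |
| Volume name | nacos-mysql-nfs | No underscores: use only lowercase letters, digits and hyphens |
| Volume type | NFS | |
| NFS server | 192.168.126.128 | |
| NFS path | /data/nacos_mysql | |
| Access | Read-write | |
| Mount path in container | /var/lib/mysql | |
| Subpath within the volume | | |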

III. Deploy Redis

Create the redis workload:

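| Field | Value | Notes |
| --- | --- | --- |
| Workload type | StatefulSet | |
| Workload tier | Middle tier | |
| Workload name | redis | |
| Description | Redis cache | |
| Replicas | 1 | Must be 1 |
| Container name | redis | |
| Image | redis:4.0.14 | Pulled from hub.docker.com |
| Pull policy | IfNotPresent | |
| Container port | TCP/6379 | containerPort: the port of the Redis container inside the pod; corresponds to the port declared by EXPOSE in the Dockerfile |
| Service type | ClusterIP (in-cluster access) | |
| Service port | port: 6379, targetPort: 6379 | port is the port the Service exposes inside the cluster; targetPort is the port on the pods the Service selects |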

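IV. Deploy the Nacos cluster

1. Build a private nacos-peer-finder-plugin image

1) Build the image locally

Using the official nacos-k8s package, extract it, go into /nacos-k8s/plugin and run the build command: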

docker build -f Dockerfile -t 192.168.126.131:80/msp/nacos-peer-finder-plugin:V1.0 .

2) Push the image

docker push 192.168.126.131:80/msp/nacos-peer-finder-plugin:V1.0

2. Build the Nacos image

Reference: https://www.pianshen.com/article/71541656180/

1) Download the sources for building the Nacos image

- Clone the image sources

git clone https://github.com/nacos-group/nacos-docker.git

- Check the default nacos-server version

In /nacos-docker/build, run cat Dockerfile; the version is 2.0.2.

2) Build the Nacos image

In /nacos-docker/build, run:

docker build -f Dockerfile -t 192.168.126.131:80/msp/nacos-server:V2.0.2 .

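This step downloads and installs a lot of packages. To shorten the build, you can download the matching nacos-server tarball locally and modify the Dockerfile to use it, as shown in the figure:

3) Push the image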

docker push 192.168.126.131:80/msp/nacos-server:V2.0.2

3. Deploy Nacos

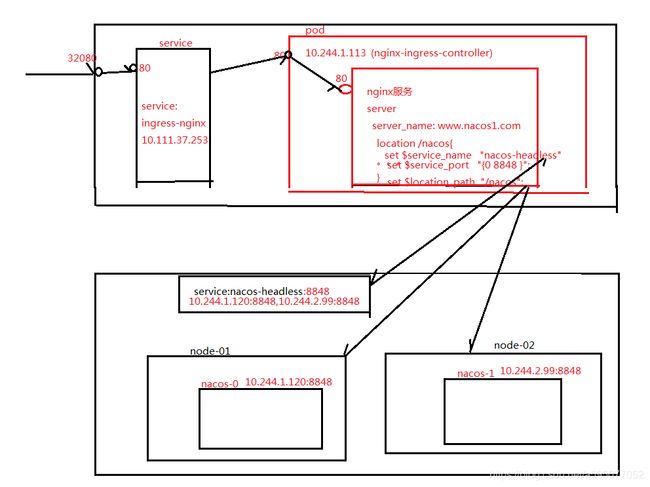

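Deploy with Kuboard, using a headless Service. With a headless Service, every pod is registered in CoreDNS. Clients that resolve the name get each pod's address (its DNS name) instead of the Service's virtual IP, which would otherwise route requests through iptables or IPVS. Clients can therefore apply their own load-balancing rules. Deploying nginx-ingress-controller adds load balancing across the nodes.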
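With a headless Service, each StatefulSet pod gets a stable DNS name of the form `<pod>.<service>.<namespace>.svc.<cluster-domain>`. The Nacos logs later in these notes show exactly such names (nacos-0.nacos-headless.msp.svc.cluster.local:8848). The peer-finder init container writes the cluster members into cluster.conf.

The sketch below only reproduces the naming scheme. It is not the plugin's code, and the one-member-per-line layout of `cluster_conf` is an assumption. It also shows why SERVICE_NAME, the namespace and DOMAIN_NAME must match the real Service: they are all part of the name.

```python
from typing import List


def peer_addresses(statefulset: str, service: str, namespace: str,
                   replicas: int, port: int = 8848,
                   domain: str = "cluster.local") -> List[str]:
    """<pod>.<headless service>.<namespace>.svc.<domain>:<port> for each ordinal."""
    return [f"{statefulset}-{i}.{service}.{namespace}.svc.{domain}:{port}"
            for i in range(replicas)]


def cluster_conf(addresses: List[str]) -> str:
    """Assumed cluster.conf layout: one member address per line."""
    return "\n".join(addresses) + "\n"
```

Usage: `print(cluster_conf(peer_addresses("nacos", "nacos-headless", "msp", 2)))` lists the two members this deployment should end up with.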

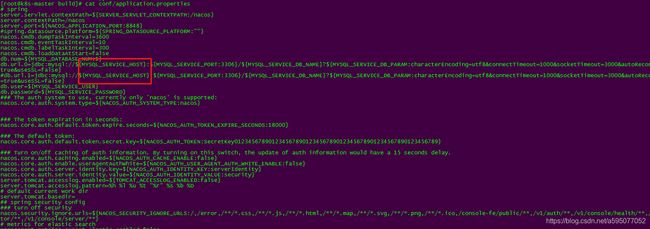

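Deploy with nacos-pvc-nfs.yaml from the nacos-k8s package. Note that this file does not set the database host (MYSQL_SERVICE_HOST). I initially left it out, and startup failed with No DataSource set. The port must also be the port exposed by the MySQL Service configured earlier.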

MYSQL_SERVICE_HOST is used in /nacos-docker/build/conf/application.properties, the configuration baked into the Nacos image.

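The custom msp namespace is used here as well. The contents of nacos-pvc-nfs.yaml were pasted into Kuboard for deployment, and the resulting YAML is below. It runs 2 Nacos replicas because the machines lack the resources for more. Note that the value of the SERVICE_NAME environment variable must equal the name of the Service. It does not refer to the workload's serviceName: property.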

---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  annotations:
    k8s.kuboard.cn/displayName: nacos集群
  creationTimestamp: '2021-07-02T14:19:03Z'
  generation: 15
  labels:
    k8s.kuboard.cn/layer: cloud
    k8s.kuboard.cn/name: nacos
  name: nacos
  namespace: msp
  resourceVersion: '620141'
  selfLink: /apis/apps/v1/namespaces/msp/statefulsets/nacos
  uid: ec40757f-6689-4e88-97a1-b88b7d8fea35
spec:
  podManagementPolicy: OrderedReady
  replicas: 2
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: nacos
  serviceName: nacos-headless
  template:
    metadata:
      annotations:
        kubectl.kubernetes.io/restartedAt: '2021-07-05T12:15:45+08:00'
        pod.alpha.kubernetes.io/initialized: 'true'
      labels:
        app: nacos
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: app
                    operator: In
                    values:
                      - nacos
              topologyKey: kubernetes.io/hostname
      containers:
        - env:
            - name: NACOS_REPLICAS
              value: '2'
            - name: SERVICE_NAME
              value: nacos-headless
            - name: DOMAIN_NAME
              value: cluster.local
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.namespace
            - name: MYSQL_SERVICE_DB_NAME
              valueFrom:
                configMapKeyRef:
                  key: mysql.db.name
                  name: nacos-cm
            - name: MYSQL_SERVICE_PORT
              valueFrom:
                configMapKeyRef:
                  key: mysql.port
                  name: nacos-cm
            - name: MYSQL_SERVICE_USER
              valueFrom:
                configMapKeyRef:
                  key: mysql.user
                  name: nacos-cm
            - name: MYSQL_SERVICE_PASSWORD
              valueFrom:
                configMapKeyRef:
                  key: mysql.password
                  name: nacos-cm
            - name: NACOS_SERVER_PORT
              value: '8848'
            - name: NACOS_APPLICATION_PORT
              value: '8848'
            - name: PREFER_HOST_MODE
              value: hostname
            - name: MYSQL_SERVICE_HOST
              valueFrom:
                configMapKeyRef:
                  key: mysql.host
                  name: nacos-cm
          image: '192.168.126.131:80/msp/nacos-server:V2.0.2'
          imagePullPolicy: IfNotPresent
          name: nacos
          ports:
            - containerPort: 8848
              name: client-port
              protocol: TCP
            - containerPort: 9848
              name: client-rpc
              protocol: TCP
            - containerPort: 9849
              name: raft-rpc
              protocol: TCP
            - containerPort: 7848
              name: old-raft-rpc
              protocol: TCP
          resources:
            requests:
              cpu: 500m
              memory: 2Gi
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /home/nacos/plugins/peer-finder
              name: data
              subPath: peer-finder
            - mountPath: /home/nacos/data
              name: data
              subPath: data
            - mountPath: /home/nacos/logs
              name: data
              subPath: logs
      dnsPolicy: ClusterFirst
      initContainers:
        - image: '192.168.126.131:80/msp/nacos-peer-finder-plugin:V1.0'
          imagePullPolicy: IfNotPresent
          name: peer-finder-plugin-install
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /home/nacos/plugins/peer-finder
              name: data
              subPath: peer-finder
      restartPolicy: Always
      schedulerName: default-scheduler
      serviceAccount: nfs-client-provisioner
      serviceAccountName: nfs-client-provisioner
      terminationGracePeriodSeconds: 30
  updateStrategy:
    rollingUpdate:
      partition: 0
    type: RollingUpdate
  volumeClaimTemplates:
    - metadata:
        annotations:
          volume.beta.kubernetes.io/storage-class: managed-nfs-storage
        name: data
      spec:
        accessModes:
          - ReadWriteMany
        resources:
          requests:
            storage: 20Gi
        volumeMode: Filesystem
      status:
        phase: Pending
status:
  collisionCount: 0
  currentReplicas: 2
  currentRevision: nacos-8d5c8996f
  observedGeneration: 15
  readyReplicas: 2
  replicas: 2
  updateRevision: nacos-8d5c8996f
  updatedReplicas: 2

---
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: '2021-07-02T15:06:32Z'
  labels:
    k8s.kuboard.cn/layer: cloud
    k8s.kuboard.cn/name: nacos-headless
  name: nacos-headless
  namespace: msp
  resourceVersion: '504739'
  selfLink: /api/v1/namespaces/msp/services/nacos-headless
  uid: 9dada13c-6565-494c-b0c4-7ea50182aeca
spec:
  clusterIP: None
  ports:
    - name: server
      port: 8848
      protocol: TCP
      targetPort: 8848
    - name: client-rpc
      port: 9848
      protocol: TCP
      targetPort: 9848
    - name: raft-rpc
      port: 9849
      protocol: TCP
      targetPort: 9849
    - name: old-raft-rpc
      port: 7848
      protocol: TCP
      targetPort: 7848
  selector:
    app: nacos
  sessionAffinity: None
  type: ClusterIP

---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  creationTimestamp: '2021-07-03T05:50:23Z'
  generation: 5
  labels:
    k8s.kuboard.cn/layer: cloud
    k8s.kuboard.cn/name: nacos
  name: nacos
  namespace: msp
  resourceVersion: '598033'
  selfLink: /apis/networking.k8s.io/v1beta1/namespaces/msp/ingresses/nacos
  uid: 4c10562d-4da9-4420-9a63-714fd3004f51
spec:
  rules:
    - host: www.nacos1.com
      http:
        paths:
          - backend:
              serviceName: nacos-headless
              servicePort: 8848
            path: /nacos
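The Ingress rule above only matches requests whose Host header is www.nacos1.com. Any other request falls through to the controller's default server and gets a 404. That is why a hosts-file entry is needed and why access by plain IP fails.

Below is a toy Python model of that name-based selection. It is not nginx's implementation. The rule table mirrors the Ingress above, and the path is treated as a prefix match, which is an assumption about how this controller version handles a path without pathType.

```python
from typing import Dict, List, Tuple, Union

Backend = Tuple[str, int]

# host -> [(path prefix, (service, port))], mirroring the Ingress rule above.
RULES: Dict[str, List[Tuple[str, Backend]]] = {
    "www.nacos1.com": [("/nacos", ("nacos-headless", 8848))],
}


def route(host_header: str, path: str) -> Union[Backend, int]:
    """Return the backend for a request, or 404 when only the default server matches."""
    host = host_header.split(":", 1)[0].lower()  # drop an optional :port (e.g. :32080)
    for prefix, backend in RULES.get(host, []):
        if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
            return backend
    return 404
```

For example, `route("192.168.126.130:32080", "/nacos")` returns 404 while `route("www.nacos1.com:32080", "/nacos")` reaches nacos-headless. This is the same difference as between browsing by IP and browsing by host name.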

The ConfigMap referenced above (nacos-cm). nacos-mysql is the name of the MySQL Service:

---
apiVersion: v1
data:
  mysql.db.name: nacos_config
  mysql.host: nacos-mysql
  mysql.password: nacos
  mysql.port: '3406'
  mysql.user: nacos
kind: ConfigMap
metadata:
  creationTimestamp: '2021-06-30T14:34:07Z'
  name: nacos-cm
  namespace: msp
  resourceVersion: '382833'
  selfLink: /api/v1/namespaces/msp/configmaps/nacos-cm
  uid: 9ce8685b-4529-47c9-b066-d7b1aef432ae

Nacos fails to start: no data source

Invocation of init method failed; nested exception is ErrCode:500, ErrMsg:Nacos Server did not start because dumpservice bean construction failure :

No DataSource set
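A ClusterIP is a virtual address, so ping is not a reliable test; in iptables mode it does not answer ICMP at all. A more direct check is whether a TCP connection to the Service name and Service port succeeds. Below is a minimal sketch that uses only the Python standard library. It assumes python3 is available wherever you run it (inside the Nacos container that is not guaranteed). The function name is hypothetical.

```python
import socket


def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port can be opened within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers DNS failure, connection refused and timeouts
        return False
```

Usage: `tcp_reachable("nacos-mysql", 3406)` checks the host and port from the nacos-cm ConfigMap. False with a working DNS name usually means the port is wrong, for example the containerPort 3306 instead of the Service port.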

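1) Open a bash shell in the pod via Kuboard and make sure you can ping the database Service IP or Service name.

If ping fails, kube-proxy needs to run in IPVS mode; in iptables mode a ClusterIP does not answer ping at all. For how to check and change this, see: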

https://www.cnblogs.com/deny/p/13880589.html?ivk_sa=1024320u

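- Modify kube-proxy

On the master, run: kubectl edit cm kube-proxy -n kube-system (in a high-availability setup, run it on the first master)

Set mode: "ipvs". Find the kind: KubeProxyConfiguration entry; the mode field is the second line below it.

- 2. Load the IPVS kernel modules (run on every node)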

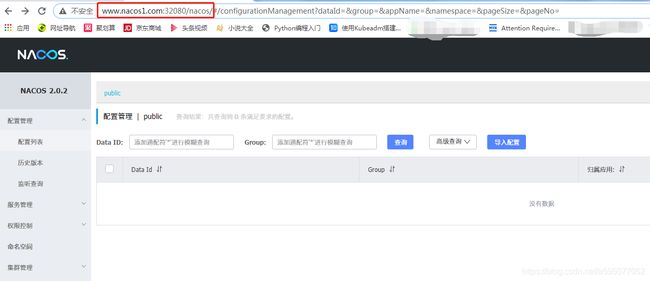

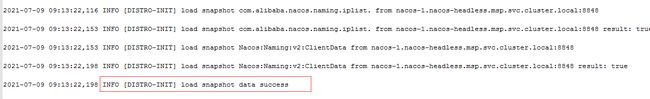

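cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

Delete the kube-proxy pods so they are recreated with the new mode:

kubectl get pod -n kube-system | grep kube-proxy |awk '{system("kubectl delete pod "$1" -n kube-system")}'

Check the logs. Look up the pod name with kubectl get pod -n kube-system:

kubectl logs -n kube-system kube-proxy-ff74q

2) Open a bash shell in the pod and run printenv to confirm that MYSQL_SERVICE_HOST=nacos-mysql.

3) The database port was configured incorrectly. It must be the port of the database Service, not the container port.

4) Exception: com.alibaba.nacos.api.exception.NacosException: java.net.UnknownHostException: jmenv.tbsite.net

For the fix, see https://github.com/nacos-group/nacos-k8s/issues/144. The cause is that /home/nacos/conf/cluster.conf is not created when the Nacos container starts, because the init container cannot generate it. According to that issue, on k8s 1.13 and later you should set publishNotReadyAddresses: true on the Service instead of using the tolerate-unready-endpoints annotation. That setting did not help in my case.

On impulse I wiped everything under the NFS directory. After that, the pods complained that the persisted files generated for their volumes were missing. I then deleted all the corresponding resources on Kuboard's storage page and restarted the Nacos workload. When I opened the pod again and checked the startup log, the error was finally gone.

4. Add an ingress-nginx proxy in front of the Nacos cluster for access from outside the cluster

Reference: https://www.cnblogs.com/caibao666/p/11600224.html. NodePort mode is used here.

1) Deploy nginx-ingress-controller

https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml

The image can be changed to registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:0.25.1. Make sure the controller version (0.25.1) is compatible with your k8s version; mine is v1.15.1.

You can change the namespace of every resource in the YAML file to your own; the default is ingress-nginx. To run as a DaemonSet, make three more changes:

- Change the kind to DaemonSet
- Delete the replicas setting
- Add hostNetwork: true

kubectl apply -f mandatory.yaml

kubectl get pod -n msp

kubectl describe pod nginx-ingress-controller-5gpls -n ingress-nginx

kubectl logs nginx-ingress-controller-5gpls -n ingress-nginx

kubectl delete -f mandatory.yaml

These steps take a long time; be patient.

Posts online say to change it to 33, but I could not find where 33 comes from: neither id nor cat /etc/passwd shows it. Changing it to 0 (root) still gave a permission error.

kubectl apply -f mandatory.yaml

kubectl get pod -n ingress-nginx

Set fixed NodePorts: 80 → 32080 and 443 → 32443. By default they are assigned automatically. The namespace can be changed to your own:

kubectl apply -f service-nodeport.yaml

Check that it was created:

kubectl get svc -n msp

With this approach, traffic enters through port 32080 on the k8s nodes, which is bound to the Service of the nginx-ingress-controller pods. nginx-ingress-controller runs nginx internally. Once an Ingress is created, its routing rules are written into nginx.conf inside the nginx-ingress-controller pods, as shown in the figure:

5. How a request from an external browser to Nacos is handled

1) Edit the hosts file on the local Windows machine and add 192.168.126.130 www.nacos1.com. Here www.nacos1.com is the host specified in the Ingress, and 192.168.126.130 is a k8s node address that the Windows machine can reach.

The host name is required for the following reason. For each Ingress, the nginx inside the nginx-ingress-controller pod gets a server block with the matching server name. Its configuration also contains a default server, and all of these listen on ports 80 and 443. A request must therefore carry the domain name so that nginx picks the right server block. The default server configuration cannot handle /nacos and returns 404.

Accessing it by IP gives the following 404 error:

A successful request looks like this:

2) Rough request flow

1. Build the Sentinel image

1) Get the jar

wget https://github.com/alibaba/Sentinel/releases/download/1.8.1/sentinel-dashboard-1.8.1.jar

2) Create a Dockerfile with the following contents:

# Base image; pulled from the remote registry if it is not present locally
FROM openjdk:8-jdk-alpine
# Image maintainer
MAINTAINER chenhj
# Create a mount point in the container (several are allowed, e.g. VOLUME ["/tmp"])
VOLUME /tmp
# Copy the jar from the host into the image
ADD sentinel-dashboard-1.8.1.jar sentinel-dashboard.jar
# Declares the port the container listens on; it does not actually publish it
EXPOSE 8380
# Run java to start the application
CMD java ${JAVA_OPTS} -jar sentinel-dashboard.jar

3) Build the image

docker build -f Dockerfile -t 192.168.126.131:80/msp/sentinel:1.8.1 .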
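4) Push the image

docker push 192.168.126.131:80/msp/sentinel:1.8.1

2. Write sentinel.yaml

3. Write sentinel-statefulset.yaml

4. Deploy

kubectl apply -f sentinel.yaml

kubectl apply -f sentinel-statefulset.yaml

Checking the pod shows that pulling the image failed:

kubectl get pod -n msp -o wide

kubectl describe pod sentinel-0 -n msp

The registry requires a login. Fix: create a secret.

kubectl create secret docker-registry -n msp registry-key --docker-server=192.168.126.131:80 --docker-username=admin --docker-password=Harbor12345

Delete the deployment:

kubectl delete -f sentinel-statefulset.yaml

Edit sentinel-statefulset.yaml and add the secret to use when pulling images (in the pod template's spec):

imagePullSecrets:
  - name: registry-key

After redeploying, open any k8s node, e.g. http://k8s-master:31380. 31380 is the host port that the Service binds on each node. The username and password are both sentinel.

Stateless services are deployed as Deployments.

1. Configure bootstrap.yml

It mainly specifies the shared configuration, the file extension of the application configuration, the Sentinel dashboard address for flow control, the monitoring metrics and the application name.

2. Configure the shared configuration common.yaml

3. Application configuration user-center.yaml

4. Build the user-center image

1) Dockerfile

With both /dev/./urandom and /dev/urandom, startup failed with an error like the one below, and I could not tell why:

Error: Could not find or load main class java $JAVA_OPTS -Djava.security.egd=file:.dev.urandom

The likely cause is the exec-form (JSON array) ENTRYPOINT. Docker passes each array element to the JVM as one argument, and no shell runs to expand $JAVA_OPTS. The element "java $JAVA_OPTS -Djava.security.egd=file:/dev/./urandom" is the first argument that does not start with "-", so java takes the whole string as the main class name and prints it with "/" shown as ".".

2) mvn clean

3) mvn package

4) docker build

5) docker push

5. Create common.yaml and user-center.yaml in Nacos

6. User center deployment YAML

7. Startup errors

1) Ignore the empty nacos configuration and get it based on dataId[user-center] & group[DEFAULT_GROUP]

At startup the configuration files were not found. The reason: common.yaml and user-center.yaml had been created in Nacos outside the namespace specified in the application's bootstrap.yml. Clone the configurations in Nacos into that namespace.

2) When the application sends heartbeats to the Nacos registry, Nacos returns Distro protocol is not initialized:

[user-center:10.244.2.180:7000] [,] 2021-07-07 12:01:43.666 ERROR 1 [main] com.alibaba.nacos.client.naming request: /nacos/v1/ns/instance failed, servers: [nacos-0.nacos-headless.msp.svc.cluster.local:8848], code: 503, msg: server is DOWNnow, detailed error message: Optional[Distro protocol is not initialized]

This means the Distro module used by the Nacos registry failed to initialize. Check the log file in the Nacos mount directory, i.e. /data/nfs/msp-data-nacos-0-pvc-094ae124-4f0c-4296-879e-b1b9bd1b37ca/logs/protocol-distro.log. It contains several key exceptions:

2021-07-09 09:12:22,110 ERROR [DISTRO-INIT] load snapshot com.alibaba.nacos.naming.iplist. from nacos-1.nacos-headless.msp.svc.cluster.local:8848 failed.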
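com.alibaba.nacos.core.distributed.distro.exception.DistroException: [DISTRO-EXCEPTION]Get snapshot from nacos-1.nacos-headless.msp.svc.cluster.local:8848 failed.
Caused by: java.io.IOException: failed to req API: http://nacos-1.nacos-headless.msp.svc.cluster.local:8848/nacos/v1/ns/distro/datums. code: 500 msg: org.apache.http.conn.HttpHostConnectException: Connect to nacos-1.nacos-headless.msp.svc.cluster.local:8848 [nacos-1.nacos-headless.msp.svc.cluster.local/10.244.2.205] failed: Connection refused (Connection refused)

com.alibaba.nacos.core.distributed.distro.exception.DistroException: [DISTRO-EXCEPTION][DISTRO-FAILED] Get distro snapshot failed!
Caused by: com.alibaba.nacos.api.exception.NacosException: No rpc client related to member: Member{ip='nacos-1.nacos-headless.msp.svc.cluster.local', port=8848, state=UP, extendInfo={raftPort=7848}}

WARN data storage DistroDataStorageImpl has not finished initial step, do not send verify data

Meanwhile, the Nacos pod log reported nacos Have not found myself in list yet. Searching online turned up related problems such as "at most 110 pods per node; once every node reaches 110 pods, nothing more can be scheduled", for example: https://blog.csdn.net/warrah/article/details/106488733

That made me suspect the k8s nodes were nearly out of resources: each node has only about 4 GB of memory, and far too much was deployed. I stopped many containers unrelated to Nacos and checked the nodes' resource usage, and sure enough that was the problem. The figure below shows usage after many containers had already been stopped; memory is painfully tight:

Current cluster status (many workloads are already stopped). This machine clearly cannot run the whole platform, and service monitoring is out of reach too. The VMs consume too many resources, and the computer would need 32 GB of memory:

After restarting Nacos, everything worked:

3) Heartbeats fail with 400

failed to req API:/api//nacos/v1/ns/instance/beat code:400

Restarting the user center application made registration work again.

4) On login, the user center cannot connect to its database

The cause is that this database does not accept username/password login. As a workaround, the user center's database script was run in the auth center database. Steps:

kubectl cp user-center.sql auth-center-mysql-0:/ -n msp

kubectl exec -it auth-center-mysql-0 -n msp -- /bin/bash

mysql -uroot -proot

\. user-center.sql

Deployment is similar to the user center.

Deployment is similar to the user center.

The back-office application consists mainly of static files under /src/main/view/static in the project. It is served by nginx, so its image is built on an nginx base image.

Project directory structure:

1. Create the script entry-point.sh in the same directory as the Dockerfile

2. Write the Dockerfile

Problems

1. At startup: standard_init_linux.go:211: exec user process caused "no such file or directory"

I entered the container with docker run -it -p 8080:80 192.168.126.131:5000/msp/back-center:v1.0.2 /bin/bash and ran sh entry-point.sh, which printed the error below:

Yet the file was definitely in the directory.

In the end, recreating entry-point.sh on Linux and rebuilding the image fixed it. The file had been written on Windows and contained special characters, most likely Windows line endings (\r\n), which the container environment cannot handle; Linux uses \n.

2. Requests return 403

The nginx container's log shows:

2021/07/13 05:25:49 [error] 12#12: *11 open() "/usr/share/nginx/html/assets/libs/v5.3.0/ol.js" failed (13: Permission denied), client: 10.244.1.76, server: localhost, request: "GET /assets/libs/v5.3.0/ol.js HTTP/1.1", host: "back-center.msp.cluster.com:32080", referrer: "http://back-center.msp.cluster.com:32080/"
10.244.1.76 - - [13/Jul/2021:05:25:49 +0000] "GET /assets/libs/v5.3.0/ol.js HTTP/1.1" 403 555 "http://back-center.msp.cluster.com:32080/" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.93 Safari/537.36" "10.244.1.1"

It turned out that the container starts as root, while nginx.conf specifies the nginx user. See https://blog.csdn.net/onlysunnyboy/article/details/75270533

Fix: when the nginx container starts, it runs entry-point.sh. The script replaces user nginx; with user root; in /etc/nginx/nginx.conf.

1. After the computer was shut down abnormally, the k8s nodes installed on the VMs stopped working.

1) Commands tried:

kubectl get pod

systemctl status kubelet

docker ps -a

docker start $(docker ps -a | awk '{ print $1}' | tail -n +2)

None of the first four methods in https://ghostwritten.blog.csdn.net/article/details/111186072 worked. In the end I had to use method 5 and redeploy the cluster, and many previously deployed applications were lost.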
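The entry-point.sh failure fits the CRLF explanation. With Windows line endings, the shebang line reads `#!/bin/sh\r`, and the kernel then looks for an interpreter literally named `/bin/sh\r`. That produces "no such file or directory" even though the script itself exists. Below is a minimal sketch that normalizes the file to LF before the image is built. The function name is hypothetical; dos2unix or an editor's line-ending setting does the same job.

```python
from pathlib import Path


def to_unix_line_endings(path: str) -> bool:
    """Replace CRLF with LF in `path`; return True if the file was changed."""
    p = Path(path)
    data = p.read_bytes()
    fixed = data.replace(b"\r\n", b"\n")
    if fixed != data:
        p.write_bytes(fixed)
        return True
    return False
```

Running it on entry-point.sh before `docker build` avoids recreating the file by hand. In a Git repository, a `.gitattributes` line such as `*.sh text eol=lf` prevents the problem from coming back on Windows checkouts.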

V. Deploy Sentinel

---
apiVersion: v1
kind: Service
metadata:
  name: sentinel
  namespace: msp
  labels:
    app: sentinel
spec:
  publishNotReadyAddresses: true
  ports:
    - port: 8380
      name: server
      targetPort: 8380
  clusterIP: None
  selector:
    app: sentinel
---
# Service for access from outside the cluster
apiVersion: v1
kind: Service
metadata:
  name: sentinel-svc
  namespace: msp
  labels:
    app: sentinel
spec:
  publishNotReadyAddresses: true
  ports:
    - name: http
      protocol: "TCP"
      port: 8380
      targetPort: 8380
      nodePort: 31380
  type: NodePort
  selector:
    app: sentinel
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: sentinel
  namespace: msp
spec:
  serviceName: sentinel
  replicas: 1
  template:
    metadata:
      namespace: msp
      labels:
        app: sentinel
      annotations:
        pod.alpha.kubernetes.io/initialized: "true"
    spec:
      containers:
        - name: sentinel
          imagePullPolicy: IfNotPresent
          image: 192.168.126.131:80/msp/sentinel:1.8.1
          resources:
            requests:
              memory: "512Mi"
              cpu: "200m"
          ports:
            - containerPort: 8380
              name: client
          env:
            - name: TZ
              value: Asia/Shanghai
            - name: JAVA_OPTS
              value: "-Dserver.port=8380 -Dcsp.sentinel.dashboard.server=localhost:8380 -Dproject.name=sentinel-dashboard -Djava.security.egd=file:/dev/./urandom -Dcsp.sentinel.api.port=8700"
  selector:
    matchLabels:
      app: sentinel

VI. Deploy the user center

spring:
  cloud:
    nacos:
      config:
        # DataIds of the shared configurations; separate multiple entries with commas
        # Later entries take precedence
        # The .yaml suffix is required; only yaml/properties are supported
        shared-dataids: common.yaml ### shared configuration
        refreshable-dataids: common.yaml ### shared configuration that can be refreshed
        server-addr: nacos-0.nacos-headless.msp.svc.cluster.local:8848 ### Nacos server address
        file-extension: yaml ### dataId extension
        namespace: 6594ff18-07f3-4bb8-8add-72a35235e3ac # namespace, i.e. the environment
    sentinel:
      transport:
        # Address of the Sentinel dashboard
        dashboard: sentinel:8380
      eager: true
  application:
    name: user-center
# metrics
management:
  endpoints:
    web:
      exposure:
        include: "*"
  endpoint:
    chaosmonkey:
      enabled: true
    health:
      show-details: always
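The first startup error recorded in these notes mentions dataId[user-center]. That makes more sense once you know which dataIds the client looks up for the application's own configuration. As I understand Spring Cloud Alibaba's Nacos config client, it tries three names:

- the bare prefix (spring.application.name unless spring.cloud.nacos.config.prefix is set)
- `<prefix>.<file-extension>`
- `<prefix>-<profile>.<file-extension>` for each active profile

These are looked up in the configured namespace and group, in addition to the shared dataIds. Treat the exact lookup order as an assumption and check it against the version you use. The helper name is hypothetical.

```python
from typing import List, Optional


def app_data_ids(app_name: str, file_extension: str = "yaml",
                 profile: Optional[str] = None,
                 prefix: Optional[str] = None) -> List[str]:
    """dataIds assumed to be queried for the application's own configuration."""
    base = prefix or app_name  # the prefix defaults to spring.application.name
    ids = [base, f"{base}.{file_extension}"]
    if profile:
        ids.append(f"{base}-{profile}.{file_extension}")
    return ids
```

With the bootstrap.yml above and no active profile, this gives `["user-center", "user-center.yaml"]`, plus the shared `common.yaml`. All of them are resolved inside namespace 6594ff18-07f3-4bb8-8add-72a35235e3ac, which is why configurations created in a different Nacos namespace are not found.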

spring:
  cloud:
    nacos:
      # Service registry
      discovery:
        server-addr: nacos-0.nacos-headless.msp.svc.cluster.local:8848
        namespace: 6594ff18-07f3-4bb8-8add-72a35235e3ac # namespace, i.e. the environment
      # Configuration center
      config:
        # DataIds of the shared configurations; separate multiple entries with commas
        # Later entries take precedence
        # The .yaml suffix is required; only yaml/properties are supported
        shared-dataids: common.yaml ### shared configuration
        refreshable-dataids: common.yaml ### shared configuration that can be refreshed
        server-addr: nacos-0.nacos-headless.msp.svc.cluster.local:8848 ### Nacos config center address
        file-extension: yaml ### dataId extension
        namespace: 6594ff18-07f3-4bb8-8add-72a35235e3ac # namespace, i.e. the environment
    sentinel:
      transport:
        # Address of the Sentinel dashboard
        dashboard: sentinel:8380
      eager: true
  jackson:
    mapper:
      ALLOW_EXPLICIT_PROPERTY_RENAMING: true
    deserialization:
      READ_DATE_TIMESTAMPS_AS_NANOSECONDS: false
    serialization:
      WRITE_DATE_TIMESTAMPS_AS_NANOSECONDS: false
      WRITE_DATES_AS_TIMESTAMPS: true

# Port configuration
server:
  port: 7000 # fixed port
  # port: ${randomServerPort.value[7000,7005]} # random port
spring:
  datasource:
    # JDBC settings (the driver class is inferred from "mysql" in the URL; the data source type is detected automatically)
    url: jdbc:mysql://db-user-center:3106/user-center?useUnicode=true&characterEncoding=utf-8&allowMultiQueries=true&useSSL=false
    username: root
    password: root
    driver-class-name: com.mysql.cj.jdbc.Driver
    # Connection pool settings (usually only initialSize, minIdle and maxActive need changing)
    initial-size: 1
    max-active: 20
    min-idle: 1
    # Maximum time to wait for a connection, in milliseconds
    max-wait: 60000
    # Enable PSCache and set its size per connection
    pool-prepared-statements: true
    max-pool-prepared-statement-per-connection-size: 20
    validation-query: SELECT 'x'
    test-on-borrow: false
    test-on-return: false
    test-while-idle: true
    # Interval between runs that detect and close idle connections, in milliseconds
    time-between-eviction-runs-millis: 60000
    # Minimum time a connection stays in the pool before it can be evicted, in milliseconds
    min-evictable-idle-time-millis: 300000
    filters: stat,wall
    # WebStatFilter settings; see the Druid wiki page 配置_配置WebStatFilter
    # Whether to enable WebStatFilter (default: true)
    web-stat-filter.enabled: true
    web-stat-filter.url-pattern: /*
    web-stat-filter.exclusions: "*.js , *.gif ,*.jpg ,*.png ,*.css ,*.ico , /druid/*"
    web-stat-filter.session-stat-max-count: 1000
    web-stat-filter.profile-enable: true
    # StatViewServlet settings
    # StatViewServlet displays Druid statistics: 1. an HTML page with monitoring data; 2. a JSON API for monitoring data
    # Whether to enable StatViewServlet (default: true)
    stat-view-servlet.enabled: true
    # The built-in monitoring page is served under url-pattern; with the setting below its home page is /druid/index.html, for example:
    # http://110.76.43.235:9000/druid/index.html
    # http://110.76.43.235:8080/mini-web/druid/index.html
    stat-view-servlet.url-pattern: /druid/*
    # Allow the statistics to be reset
    stat-view-servlet.reset-enable: true
    stat-view-servlet.login-username: admin
    stat-view-servlet.login-password: admin
    # The data StatViewServlet shows is sensitive (it exposes the system's internals); to restrict access, configure allow and deny
    # deny takes precedence over allow: an address in deny is rejected even if it is also in allow; if allow is unset or empty, all access is allowed
    # Format:

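# Base image; pulled from the remote registry if it is not present locally
FROM openjdk:8-jdk-alpine
# Image maintainer
MAINTAINER chenhj
# Create a mount point in the container (several are allowed, e.g. VOLUME ["/tmp"])
VOLUME /tmp
# Declares the port the container listens on; it does not actually publish it
EXPOSE 8080
# Build argument
ARG JAR_FILE
# Copy the local file into the image
COPY ${JAR_FILE} app.jar
# Command run when the container starts; if a CMD is present, its arguments are appended to ENTRYPOINT.
# sh -c is used so that $JAVA_OPTS is expanded; in exec (JSON array) form no shell runs
# and each element is passed to java verbatim.
ENTRYPOINT ["sh", "-c", "exec java $JAVA_OPTS -Djava.security.egd=file:/dev/./urandom -jar /app.jar"]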
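Consider an ENTRYPOINT in JSON-array (exec) form such as `[ "java", "-Djava.security.egd=file:/dev/./urandom", "java $JAVA_OPTS -Djava.security.egd=file:/dev/./urandom", "-jar", "/app.jar" ]`. It produces exactly the "Could not find or load main class java $JAVA_OPTS ..." error recorded in these notes. Docker passes each element verbatim, and no shell splits words or expands variables.

The sketch below compares the two forms. It uses Python's `shlex` and `string.Template` only as an approximation of what `sh -c` does. `launcher_target` is a hypothetical, simplified model of how the java launcher picks what to run, not the launcher's real parsing.

```python
import shlex
from string import Template

# The exec-form argument list quoted above: every element reaches java unchanged.
EXEC_FORM = ["java", "-Djava.security.egd=file:/dev/./urandom",
             "java $JAVA_OPTS -Djava.security.egd=file:/dev/./urandom",
             "-jar", "/app.jar"]


def shell_form_argv(command, env):
    """Approximate what `sh -c command` hands to exec: expand $VARS, then split words.

    Only variables present in `env` are expanded (unknown ones are left as-is).
    """
    return shlex.split(Template(command).safe_substitute(env))


def launcher_target(argv):
    """Simplified launcher rule: '-jar X' runs jar X; otherwise the first
    argument that does not start with '-' is taken as the main class name."""
    args = iter(argv[1:])
    for arg in args:
        if arg == "-jar":
            return ("jar", next(args))
        if not arg.startswith("-"):
            return ("class", arg)
    return None


if __name__ == "__main__":
    print(launcher_target(EXEC_FORM))
    # ('class', 'java $JAVA_OPTS -Djava.security.egd=file:/dev/./urandom')
    argv = shell_form_argv(
        "java $JAVA_OPTS -Djava.security.egd=file:/dev/./urandom -jar /app.jar",
        {"JAVA_OPTS": "-Xmx512m"})
    print(launcher_target(argv))  # ('jar', '/app.jar')
```

The first result is the string java reported as a missing main class. The second is what a shell-based ENTRYPOINT such as `["sh", "-c", "exec java $JAVA_OPTS ... -jar /app.jar"]` leads to. The `exec` in that form makes java replace the shell as PID 1, so it receives stop signals directly.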

---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    k8s.kuboard.cn/displayName: 用户中心   # "User Center"
  labels:
    k8s.kuboard.cn/layer: svc
    k8s.kuboard.cn/name: user-center
  name: user-center
  namespace: msp
spec:
  progressDeadlineSeconds: 600
  replicas: 0   # 0 runs no pods; set to 1 or more to start the service
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s.kuboard.cn/layer: svc
      k8s.kuboard.cn/name: user-center
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      annotations:
        kubectl.kubernetes.io/restartedAt: '2021-07-12T15:54:38+08:00'
      labels:
        k8s.kuboard.cn/layer: svc
        k8s.kuboard.cn/name: user-center
    spec:
      containers:
        - env:
            - name: spring.datasource.druid.core.url
              value: >-
                jdbc:mysql://db-user-center:3106/user-center?useUnicode=true&characterEncoding=utf-8&allowMultiQueries=true&useSSL=false
            - name: spring.datasource.druid.log.url
              value: >-
                jdbc:mysql://db-log-center:3206/log-center?useUnicode=true&characterEncoding=utf-8&allowMultiQueries=true&useSSL=false
            - name: spring.redis.host
              value: redis
          image: '192.168.126.131:80/msp/user-center:v1.0.3'
          imagePullPolicy: IfNotPresent
          name: user-center
          ports:
            - containerPort: 7000
              protocol: TCP
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      imagePullSecrets:
        - name: harbor-msp
      restartPolicy: Always
      schedulerName: default-scheduler
      terminationGracePeriodSeconds: 30

---

apiVersion: v1
kind: Service
metadata:
  labels:
    k8s.kuboard.cn/layer: svc
    k8s.kuboard.cn/name: user-center
  name: user-center
  namespace: msp
spec:
  clusterIP: 10.100.21.141
  ports:
    - name: ww7t3p
      port: 7000
      protocol: TCP
      targetPort: 7000
  selector:
    k8s.kuboard.cn/layer: svc
    k8s.kuboard.cn/name: user-center
  sessionAffinity: None
  type: ClusterIP

---

# networking.k8s.io/v1beta1 Ingress is served only up to Kubernetes 1.21; clusters on 1.22 or later need networking.k8s.io/v1
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  labels:
    k8s.kuboard.cn/layer: svc
    k8s.kuboard.cn/name: user-center
  name: user-center
  namespace: msp
spec:
  rules:
    - host: svc.user-center.msp.cluster.kuboard.cn
      http:
        paths:
          - backend:
              serviceName: user-center
              servicePort: ww7t3p
            path: /
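The Deployment above overrides Spring properties through environment variables whose names contain dots, such as `spring.datasource.druid.core.url`. Spring Boot's relaxed binding also accepts an upper-case form: dots become underscores and dashes are removed. That form is handy because a POSIX shell cannot `export` a name containing a dot. The small helper below derives it; it is hypothetical and not part of the original setup.

```shell
#!/bin/sh
# Hypothetical helper: convert a Spring Boot property name to the
# environment-variable form used by relaxed binding
# (remove dashes, dots -> underscores, upper-case).
to_spring_env() {
  printf '%s\n' "$1" | sed -e 's/-//g' -e 's/\./_/g' | tr '[:lower:]' '[:upper:]'
}

to_spring_env spring.datasource.druid.core.url   # SPRING_DATASOURCE_DRUID_CORE_URL
to_spring_env spring.redis.host                  # SPRING_REDIS_HOST
```

In the Deployment, the Redis entry would then read `- name: SPRING_REDIS_HOST` with `value: redis`.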

VII. Deploy the authentication center

VIII. Deploy the gateway

IX. Deploy the back-office web application

#!/bin/sh

echo "GATEWAY_API_URL => ${GATEWAY_API_URL}"

sed -i "s#base_server.*#base_server: '${GATEWAY_API_URL}',#g" /usr/share/nginx/html/module/config.js

sed -i "s#user.*nginx;#user root;#g" /etc/nginx/nginx.conf

echo "args follow:"

cat /usr/share/nginx/html/module/config.js

cat /etc/nginx/nginx.conf

echo "start nginx"

nginx -g "daemon off;"

FROM nginx:1.17.1

LABEL maintainer="kuboard.cn"

ADD ./entry-point.sh /entry-point.sh

RUN chmod +x /entry-point.sh && rm -rf /usr/share/nginx/html

# Default values for the environment variables, so the sed commands in entry-point.sh do not fail

ENV GATEWAY_API_URL http://gateway_api_url_not_set/

ENV CLOUD_EUREKA_URL http://cloud_eureka_url_not_set/

ADD ./src/main/view/static /usr/share/nginx/html

EXPOSE 80

CMD ["/entry-point.sh"]

GATEWAY_API_URL http://gateway_api_url_not_set/

CLOUD_EUREKA_URL http://cloud_eureka_url_not_set/

: No such file or directorynginx/html/module/config.js

: No such file or directorynginx/html/module/config.js

cat: '/usr/share/nginx/html/module/config.js'$'\r': No such file or directory

New state of 'nil' is invalid.
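The `$'\r'` in the `cat` error shows that entry-point.sh was saved with Windows (CRLF) line endings. Every line ends in a carriage return, so the path handed to `sed` and `cat` is really `config.js\r`. The carriage return also explains the mangled `: No such file or directorynginx/...` lines: the terminal jumps back to column 0 and the tail of the message overwrites its beginning. Converting the script to LF endings before `docker build` avoids this.

Below is a minimal sketch using plain `tr`. The function names are hypothetical, and `dos2unix` does the same job if it is installed.

```shell
#!/bin/sh
# Remove every carriage return from file $1 in place (CRLF -> LF).
# Writing back with cat keeps the file's permissions, e.g. its executable bit.
strip_crlf() {
  tmp=$(mktemp) || return 1
  tr -d '\r' < "$1" > "$tmp" && cat "$tmp" > "$1"
  rm -f "$tmp"
}

# Succeed if file $1 still contains a carriage return.
has_crlf() {
  grep -q "$(printf '\r')" "$1"
}

# Typical use before building the image:
#   has_crlf entry-point.sh && strip_crlf entry-point.sh
```

Alternatively, the conversion can happen inside the image, for example with `RUN sed -i 's/\r$//' /entry-point.sh` before the `chmod`. This relies on GNU sed, which the Debian-based nginx image provides.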

#!/bin/sh

echo "GATEWAY_API_URL => ${GATEWAY_API_URL}"

echo "CLOUD_EUREKA_URL => ${CLOUD_EUREKA_URL}"

sed -i "s#base_server.*#base_server: '${GATEWAY_API_URL}',#g" /usr/share/nginx/html/module/config.js

sed -i "s#eureka_server.*#eureka_server: '${CLOUD_EUREKA_URL}',#g" /usr/share/nginx/html/module/config.js

sed -i "s#user.*nginx;#user root;#g" /etc/nginx/nginx.conf

echo "args follow:"

cat /usr/share/nginx/html/module/config.js

cat /etc/nginx/nginx.conf

echo "start nginx"

nginx -g "daemon off;"
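Before baking the script into an image, the `config.js` substitutions can be rehearsed on a throwaway file. The file content and both URLs below are hypothetical stand-ins for `module/config.js`. The `sed` expressions are the ones from entry-point.sh and must end with the `#g` delimiter. Written as `$g`, the shell expands the unset variable `g` to nothing, and sed then rejects the unterminated `s` command.

```shell
#!/bin/sh
# Hypothetical values standing in for the Deployment's environment variables.
GATEWAY_API_URL=http://gateway.example/
CLOUD_EUREKA_URL=http://eureka.example/

# Hypothetical stand-in for /usr/share/nginx/html/module/config.js.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
var config = {
  base_server: 'http://localhost:9000/',
  eureka_server: 'http://localhost:8761/',
}
EOF

# Same expressions as entry-point.sh, printed instead of edited in place
# (avoids the GNU-only "sed -i" so the rehearsal runs anywhere).
result=$(sed -e "s#base_server.*#base_server: '${GATEWAY_API_URL}',#g" \
             -e "s#eureka_server.*#eureka_server: '${CLOUD_EUREKA_URL}',#g" "$cfg")
rm -f "$cfg"
echo "$result"
```

A URL that itself contains `#` would clash with the `#` delimiter these sed commands use.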

Appendix: Problems encountered while using k8s

The connection to the server 192.168.126.128:6443 was refused - did you specify the right host or port?

Jul 09 16:58:35 k8s-master kubelet[21332]: E0709 16:58:35.679351 21332 kubelet.go:2248] node "k8s-master" not found

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

37b2c619ec03 2c4adeb21b4f "etcd --advertise-cl…" About a minute ago Exited (2) About a minute ago k8s_etcd_etcd-k8s-master_kube-system_d4ce83c21891db7e73e807ef995ca360_129

2536430e10b3 68c3eb07bfc3 "kube-apiserver --ad…" 4 minutes ago Exited (255) 4 minutes ago k8s_kube-apiserver_kube-apiserver-k8s-master_kube-system_4d8a5ffa907226d52ec5ad126ef212fb_137

7e259c79f9a6 b0b3c4c404da "kube-scheduler --bi…" 39 minutes ago Up 39 minutes k8s_kube-scheduler_kube-scheduler-k8s-master_kube-system_ecae9d12d3610192347be3d1aa5aa552_119

2f22d8c90df4 d75082f1d121 "kube-controller-man…" 39 minutes ago Up 39 minutes k8s_kube-controller-manager_kube-controller-manager-k8s-master_kube-system_5a1fa432561d9745fe013857ccb566c1_119

5d501d1d078d k8s.gcr.io/pause:3.1 "/pause" 39 minutes ago Up 39 minutes k8s_POD_kube-controller-manager-k8s-master_kube-system_5a1fa432561d9745fe013857ccb566c1_105

cfb01af4e5e8 k8s.gcr.io/pause:3.1 "/pause" 39 minutes ago Up 39 minutes k8s_POD_kube-scheduler-k8s-master_kube-system_ecae9d12d3610192347be3d1aa5aa552_88

83bdb7cba419 k8s.gcr.io/pause:3.1 "/pause" 39 minutes ago Up 39 minutes k8s_POD_kube-apiserver-k8s-master_kube-system_4d8a5ffa907226d52ec5ad126ef212fb_86

c5f742d2064e k8s.gcr.io/pause:3.1 "/pause" 39 minutes ago Up 39 minutes k8s_POD_etcd-k8s-master_kube-system_d4ce83c21891db7e73e807ef995ca360_72

2628f7f03f85 d75082f1d121 "kube-controller-man…" 41 minutes ago Exited (2) 41 minutes ago k8s_kube-controller-manager_kube-controller-manager-k8s-master_kube-system_5a1fa432561d9745fe013857ccb566c1_118

4b5bf17b2663 b0b3c4c404da "kube-scheduler --bi…" 41 minutes ago Exited (2) 41 minutes ago k8s_kube-scheduler_kube-scheduler-k8s-master_kube-system_ecae9d12d3610192347be3d1aa5aa552_118

0cc77025e7d9 k8s.gcr.io/pause:3.1 "/pause" 41 minutes ago Exited (0) 40 minutes ago k8s_POD_kube-scheduler-k8s-master_kube-system_ecae9d12d3610192347be3d1aa5aa552_87

425af1d425ac

37b2c619ec03

7e259c79f9a6

2f22d8c90df4

5d501d1d078d

cfb01af4e5e8

83bdb7cba419

c5f742d2064e

Error response from daemon: cannot join network of a non running container: 124351d81c42b393ea7cda5b859cb3c971f5dcf020ef7b1834b06777fda1a0ab

Error response from daemon: cannot join network of a non running container: 0cc77025e7d954264a4a27c5be58e43a434de5dc850c0ec59b6616e8ed91fae0

0cc77025e7d9

124351d81c42

Error response from daemon: cannot join network of a non running container: bfe9cb4f37a1df6d413b1458c2105f5f8e76a711c0ac613b5a698641247cd390

Error response from daemon: cannot join network of a non running container: b3792df16c18a5f5f6ad6aece0c9e92b4a277c9d7a2f9e8e45d0aaf6e82c169e

Error response from daemon: cannot join network of a non running container: b48f01632705e20548d4b83d283323a69aa9770e31dcde56c480dc0bd63ceb3b

b3792df16c18

bfe9cb4f37a1

b48f01632705

Error response from daemon: cannot join network of a non running container: 1402ff8e40bf602e96cf28b74b0fb4d80a3d1f272076260be211eee07324cbaf

Error response from daemon: cannot join network of a non running container: 1402ff8e40bf602e96cf28b74b0fb4d80a3d1f272076260be211eee07324cbaf

Error response from daemon: cannot join network of a non running container: d9b5c42c62ca11fbf1c5a80035ef508f5e33ea3320b8a5eb3b91b48a887ed60e

1402ff8e40bf

d9b5c42c62ca

348e331bb616

63032652160b

Error: failed to start containers: 2628f7f03f85, 4b5bf17b2663, 62ef0183bbdf, d2f5aa7e04de, 594be1b1fa38, 17373fd45907, 6c84555eda5d, 567b4db33d92
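Containers that the kubelet creates through Docker are named `k8s_<container>_<pod>_<namespace>_<pod-uid>_<attempt>`. The name `POD` marks the pause (sandbox) container, and the last field is the restart attempt. Read that way, the listing above shows etcd (attempt 129) and kube-apiserver (attempt 137) both exited after many restarts. That is consistent with the refused connection to port 6443. The parser below is hypothetical and meant only for reading such listings.

```shell
#!/bin/sh
# Split a kubelet-created Docker container name into its parts.
# Pod, namespace and container names cannot contain "_", so a valid
# name has exactly six "_"-separated fields.
parse_k8s_name() {
  echo "$1" | awk -F_ '$1 == "k8s" && NF == 6 {
    printf "container=%s pod=%s namespace=%s attempt=%s\n", $2, $3, $4, $6
  }'
}

parse_k8s_name k8s_etcd_etcd-k8s-master_kube-system_d4ce83c21891db7e73e807ef995ca360_129
parse_k8s_name k8s_kube-apiserver_kube-apiserver-k8s-master_kube-system_4d8a5ffa907226d52ec5ad126ef212fb_137
```

To apply it to a whole host, feed it names from Docker: `docker ps -a --format '{{.Names}}' | while read -r n; do parse_k8s_name "$n"; done`.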