iOS Video Player (Swift Edition)


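- 1. Video playback with a third-party framework
  - Video playback with ijkplayer
- 2. Video playback with the built-in iOS APIs
  - Playing video with AVPlayer
    - Introduction to AVPlayer
    - AVPlayer playback flow
    - AVPlayer caveats
    - AVPlayer video playback: Objective-C code
    - AVPlayer video playback: Swift code
- 3. Decoding and playing video yourself with FFmpeg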

1. Video playback with a third-party framework

Video playback with ijkplayer

- Click here to download ijkplayer

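2. Video playback with the built-in iOS APIs

Playing video with AVPlayer

Click here to download this post's demo: kylVideoPlayer

Introduction to AVPlayer

In iOS development there are two common ways to play video: MPMoviePlayerController (which requires importing MediaPlayer.framework and has been deprecated since iOS 9) and AVPlayer. For the differences between the two, see the blog post "Differences between AVPlayer and MPMoviePlayerController". In short, MPMoviePlayerController is easier to use but less capable, while AVPlayer takes a little more work but is considerably more powerful.

- AVPlayer on its own cannot display video: you have to create an AVPlayerLayer for the player and add that layer to a view's layer hierarchy before the video appears on screen.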

AVPlayer playback flow

- The core code for playing a video with AVPlayer is as follows:

NSURL *videoUrl = [NSURL URLWithString:@"http://www.jxvdy.com/file/upload/201405/05/18-24-58-42-627.mp4"];

self.playerItem = [AVPlayerItem playerItemWithURL:videoUrl];

[self.playerItem addObserver:self forKeyPath:@"status" options:NSKeyValueObservingOptionNew context:nil];// observe the status property

[self.playerItem addObserver:self forKeyPath:@"loadedTimeRanges" options:NSKeyValueObservingOptionNew context:nil];// observe the loadedTimeRanges property

self.player = [AVPlayer playerWithPlayerItem:self.playerItem];

[[NSNotificationCenter defaultCenter] addObserver:self selector:@selector(moviePlayDidEnd:) name:AVPlayerItemDidPlayToEndTimeNotification object:self.playerItem];

-

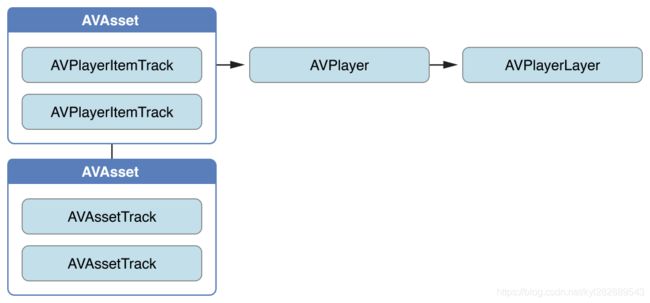

即先将在线视频链接存放在videoUrl中,然后初始化playerItem,playerItem是管理资源的对象,他们的关系如下:

-

然后监听playerItem的status和loadedTimeRange,

当status等于AVPlayerStatusReadyToPlay时代表视频已经可以播放了,我们就可以调用play方法播放了。

loadedTimeRange属性代表已经缓冲的进度,监听此属性可以在UI中更新缓冲进度,也是很有用的一个属性。 -
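The buffering arithmetic can be sketched in plain Swift. This is a minimal model that assumes the loaded ranges have already been converted to seconds; in real code you would read `playerItem.loadedTimeRanges`, take each element's `timeRangeValue`, and call `CMTimeGetSeconds` on its `start` and `duration`. `TimeRangeSeconds` and `bufferedFraction` are hypothetical names, not AVFoundation API.

```swift
// Pure-Swift model of the buffering math; no AVFoundation types are used.
// `TimeRangeSeconds` is a hypothetical stand-in for a CMTimeRange converted to seconds.
struct TimeRangeSeconds {
    let start: Double
    let duration: Double
    var end: Double { start + duration }
}

/// Fraction (0...1) of the media that has been buffered, based on the first loaded
/// range, mirroring the start + duration calculation in availableDuration.
func bufferedFraction(loadedRanges: [TimeRangeSeconds], totalDuration: Double) -> Double {
    // Before the item is ready its duration converts to NaN; report no progress then.
    guard let first = loadedRanges.first, totalDuration.isFinite, totalDuration > 0 else {
        return 0
    }
    return min(max(first.end / totalDuration, 0), 1)
}
```

The guard matters because `AVPlayerItem.duration` is reported as indefinite until the asset's duration has loaded (and stays indefinite for live streams), and `CMTimeGetSeconds` turns an indefinite time into NaN.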

Finally, register a notification that fires when the video finishes playing, and implement the KVO callback:

- (void)observeValueForKeyPath:(NSString *)keyPath ofObject:(id)object change:(NSDictionary *)change context:(void *)context {

AVPlayerItem *playerItem = (AVPlayerItem *)object;

if ([keyPath isEqualToString:@"status"]) {

if ([playerItem status] == AVPlayerItemStatusReadyToPlay) {

NSLog(@"AVPlayerItemStatusReadyToPlay");

self.stateButton.enabled = YES;

CMTime duration = self.playerItem.duration;// total length of the video

CGFloat totalSecond = CMTimeGetSeconds(duration);// convert to seconds (floating-point value / timescale)

_totalTime = [self convertTime:totalSecond];// format as a playback time string

[self customVideoSlider:duration];// customize the UISlider appearance

NSLog(@"movie total duration:%f",CMTimeGetSeconds(duration));

[self monitoringPlayback:self.playerItem];// start observing playback progress

} else if ([playerItem status] == AVPlayerItemStatusFailed) {

NSLog(@"AVPlayerItemStatusFailed");

}

} else if ([keyPath isEqualToString:@"loadedTimeRanges"]) {

NSTimeInterval timeInterval = [self availableDuration];// compute buffered progress

NSLog(@"Time Interval:%f",timeInterval);

CMTime duration = self.playerItem.duration;

CGFloat totalDuration = CMTimeGetSeconds(duration);

[self.videoProgress setProgress:timeInterval / totalDuration animated:YES];

}

}

- (NSTimeInterval)availableDuration {

NSArray *loadedTimeRanges = [[self.playerView.player currentItem] loadedTimeRanges];

CMTimeRange timeRange = [loadedTimeRanges.firstObject CMTimeRangeValue];// get the first buffered range

float startSeconds = CMTimeGetSeconds(timeRange.start);

float durationSeconds = CMTimeGetSeconds(timeRange.duration);

NSTimeInterval result = startSeconds + durationSeconds;// end of the buffered range = total buffered position

return result;

}

- (NSString *)convertTime:(CGFloat)second{

NSDate *d = [NSDate dateWithTimeIntervalSince1970:second];

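NSDateFormatter *formatter = [[NSDateFormatter alloc] init];

[formatter setTimeZone:[NSTimeZone timeZoneForSecondsFromGMT:0]];// format in UTC; otherwise the local time-zone offset is added to the hours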

if (second/3600 >= 1) {

[formatter setDateFormat:@"HH:mm:ss"];

} else {

[formatter setDateFormat:@"mm:ss"];

}

NSString *showtimeNew = [formatter stringFromDate:d];

return showtimeNew;

}

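The code above mainly responds to the status and loadedTimeRanges properties. When status becomes AVPlayerItemStatusReadyToPlay, the video can be played, so this is where we read information such as the video's duration and set the play button's enabled property to YES; tapping the button then calls play to start playback. At the end of the ready-to-play branch there is also a monitoringPlayback method: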

/*

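monitoringPlayback observes the playback state once per second.

- (id)addPeriodicTimeObserverForInterval:(CMTime)interval queue:(dispatch_queue_t)queue usingBlock:(void (^)(CMTime time))block;

- This method is the key. interval is how often the block is called; here it is once per second. queue is the dispatch queue the block runs on; passing NULL means the main queue. The block is a good place to update the UI, for example the current time shown next to the progress bar.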

*/

- (void)monitoringPlayback:(AVPlayerItem *)playerItem {

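__weak typeof(self) weakSelf = self;// the player retains this block, so capture self weakly to avoid a retain cycle

self.playbackTimeObserver = [self.playerView.player addPeriodicTimeObserverForInterval:CMTimeMake(1, 1) queue:NULL usingBlock:^(CMTime time) {

__strong typeof(weakSelf) strongSelf = weakSelf;

if (!strongSelf) return;

CGFloat currentSecond = CMTimeGetSeconds(playerItem.currentTime);// current playback position in seconds

[strongSelf updateVideoSlider:currentSecond];

NSString *timeString = [strongSelf convertTime:currentSecond];

strongSelf.timeLabel.text = [NSString stringWithFormat:@"%@/%@",timeString,strongSelf->_totalTime];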

}];

}
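The time strings above are produced by turning a number of seconds into an `NSDate` since 1970 and formatting it, which can pick up the device's time-zone offset. A hedged alternative is plain integer arithmetic. The sketch below uses hypothetical helper names (`formatPlaybackTime`, `timeLabelText`) that are not part of the demo, and it switches to `h:mm:ss` once the position reaches one hour.

```swift
/// Formats a playback position as "mm:ss", or "h:mm:ss" from one hour on.
/// Integer arithmetic cannot be shifted by a time zone, unlike a date-based formatter.
func formatPlaybackTime(_ seconds: Double) -> String {
    // NaN/infinite (e.g. an indefinite duration) and negative values render as zero.
    let total = seconds.isFinite ? max(0, Int(seconds)) : 0
    let h = total / 3600
    let m = (total % 3600) / 60
    let s = total % 60
    func pad(_ n: Int) -> String { n < 10 ? "0\(n)" : "\(n)" }
    return h > 0 ? "\(h):\(pad(m)):\(pad(s))" : "\(pad(m)):\(pad(s))"
}

/// Builds the "current/total" text shown in the time label.
func timeLabelText(current: Double, total: Double) -> String {
    "\(formatPlaybackTime(current))/\(formatPlaybackTime(total))"
}
```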

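AVPlayer caveats

- Distinguish between these two enum types:

/* AVPlayerItemStatus is the status of the current media item

(roughly: whether this URL or video file can be played successfully or has failed) */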

typedef NS_ENUM(NSInteger, AVPlayerItemStatus) {

AVPlayerItemStatusUnknown,

AVPlayerItemStatusReadyToPlay,

AVPlayerItemStatusFailed

};

/*

AVPlayerStatus is the status of the player itself

*/

typedef NS_ENUM(NSInteger, AVPlayerStatus) {

AVPlayerStatusUnknown,

AVPlayerStatusReadyToPlay,

AVPlayerStatusFailed

};
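Because both enums are `NSInteger`-backed with identical raw values, Objective-C will compile a comparison of an item's status against an `AVPlayerStatus` constant (possibly with a warning), and it happens to behave correctly. In Swift the two are distinct types (`AVPlayerItem.Status` and `AVPlayer.Status`), so mixing them is a compile-time error. The pure-Swift sketch below mirrors that with two hypothetical enums and a hypothetical `canStartPlayback` helper that waits for the item and treats either failure as fatal; this is one reasonable policy, not an AVFoundation rule.

```swift
// Hypothetical mirrors of the two SDK enums; raw values match the declarations above.
enum ItemStatus: Int { case unknown = 0, readyToPlay, failed }    // like AVPlayerItemStatus
enum PlayerStatus: Int { case unknown = 0, readyToPlay, failed }  // like AVPlayerStatus

/// Hypothetical policy: start only once the item is ready; a failure of either wins.
func canStartPlayback(item: ItemStatus, player: PlayerStatus) -> Bool {
    if item == .failed || player == .failed { return false }
    return item == .readyToPlay
}

// `ItemStatus.readyToPlay == PlayerStatus.readyToPlay` would not compile:
// the types differ even though the raw values are equal.
```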

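- The CMTime struct

CMTimeMake(1, 1) represents 1 second, so passing it as the interval calls the block once per second.

More generally, CMTimeMake(a, b) represents a/b seconds (a is the value, b is the timescale), so as an interval it calls the block every a/b seconds.

For a more detailed explanation of CMTime, see the blog post "Understanding CMTime in Video Composition".

- To jump to a new position when the user drags the slider, use the AVPlayer object's seekToTime: method.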
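To make the value/timescale idea concrete, here is a hypothetical pure-Swift stand-in for `CMTime` (`RationalTime`) plus a hypothetical `seekTarget` helper that maps a slider position to a seek time. It also shows why dividing the integer `value` by the integer `timescale` truncates (1/2 becomes 0), whereas a floating-point division, which is what `CMTimeGetSeconds` does, gives 0.5.

```swift
// Hypothetical, simplified stand-in for CoreMedia's CMTime: a rational number of seconds.
struct RationalTime {
    let value: Int64      // like CMTime.value
    let timescale: Int32  // like CMTime.timescale (units per second)

    /// Same idea as CMTimeGetSeconds: divide as floating point, not as integers.
    var seconds: Double { Double(value) / Double(timescale) }

    init(value: Int64, timescale: Int32) {
        self.value = value
        self.timescale = timescale
    }

    /// Like CMTimeMakeWithSeconds; 600 is a commonly used timescale for video.
    init(seconds: Double, preferredTimescale: Int32 = 600) {
        self.value = Int64((seconds * Double(preferredTimescale)).rounded())
        self.timescale = preferredTimescale
    }
}

/// Maps a slider value in 0...1 to a seek target, clamped to the asset duration.
func seekTarget(sliderValue: Double, durationSeconds: Double) -> RationalTime {
    let fraction = min(max(sliderValue, 0), 1)
    return RationalTime(seconds: fraction * durationSeconds)
}
```

With the real types, the equivalent call would be along the lines of `player.seek(to: CMTime(seconds: target, preferredTimescale: 600))`; `seek(to:toleranceBefore:toleranceAfter:)` with `.zero` tolerances trades seeking speed for frame accuracy.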

AVPlayer video playback: Objective-C code

There are two main classes:

VideoView, AVViewController

- VideoView implementation:

- Header file

#import <UIKit/UIKit.h>

#import <AVFoundation/AVFoundation.h>

@protocol VideoSomeDelegate <NSObject>

@required

- (void)flushCurrentTime:(NSString *)timeString sliderValue:(float)sliderValue;

@end

@interface VideoView : UIView

@property (nonatomic ,strong) NSString *playerUrl;

@property (nonatomic ,readonly) AVPlayerItem *item;

@property (nonatomic ,readonly) AVPlayerLayer *playerLayer;

@property (nonatomic ,readonly) AVPlayer *player;

@property (nonatomic ,weak) id <VideoSomeDelegate> someDelegate;

- (id)initWithUrl:(NSString *)path delegate:(id<VideoSomeDelegate>)delegate;

@end

@interface VideoView (Guester)

- (void)addSwipeView;

@end

- Implementation file:

#import "VideoView.h"

#import <AVFoundation/AVFoundation.h>

#import <MediaPlayer/MPVolumeView.h>

typedef enum {

ChangeNone,

ChangeVoice,

ChangeLigth,

ChangeCMTime

}Change;

@interface VideoView ()

@property (nonatomic ,readwrite) AVPlayerItem *item;

@property (nonatomic ,readwrite) AVPlayerLayer *playerLayer;

@property (nonatomic ,readwrite) AVPlayer *player;

@property (nonatomic ,strong) id timeObser;

@property (nonatomic ,assign) float videoLength;

@property (nonatomic ,assign) Change changeKind;

@property (nonatomic ,assign) CGPoint lastPoint;

//Gesture

@property (nonatomic ,strong) UIPanGestureRecognizer *panGesture;

@property (nonatomic ,strong) MPVolumeView *volumeView;

@property (nonatomic ,weak) UISlider *volumeSlider;

@property (nonatomic ,strong) UIView *darkView;

@end

@implementation VideoView

- (id)initWithUrl:(NSString *)path delegate:(id<VideoSomeDelegate>)delegate {

if (self = [super init]) {

_playerUrl = path;

_someDelegate = delegate;

[self setBackgroundColor:[UIColor blackColor]];

[self setUpPlayer];

[self addSwipeView];

}

return self;

}

- (void)setUpPlayer {

NSURL *url = [NSURL URLWithString:_playerUrl];

_item = [[AVPlayerItem alloc] initWithURL:url];

_player = [AVPlayer playerWithPlayerItem:_item];

_playerLayer = [AVPlayerLayer playerLayerWithPlayer:_player];

_playerLayer.videoGravity = AVLayerVideoGravityResizeAspect;

[self.layer addSublayer:_playerLayer];

[self addVideoKVO];

[self addVideoTimerObserver];

[self addVideoNotic];

}

#pragma mark - KVO

- (void)addVideoKVO

{

//KVO

[_item addObserver:self forKeyPath:@"status" options:NSKeyValueObservingOptionNew context:nil];

[_item addObserver:self forKeyPath:@"loadedTimeRanges" options:NSKeyValueObservingOptionNew context:nil];

[_item addObserver:self forKeyPath:@"playbackBufferEmpty" options:NSKeyValueObservingOptionNew context:nil];

}

- (void)removeVideoKVO {

[_item removeObserver:self forKeyPath:@"status"];

[_item removeObserver:self forKeyPath:@"loadedTimeRanges"];

[_item removeObserver:self forKeyPath:@"playbackBufferEmpty"];

}

- (void)observeValueForKeyPath:(nullable NSString *)keyPath ofObject:(nullable id)object change:(nullable NSDictionary<NSString*, id> *)change context:(nullable void *)context {

if ([keyPath isEqualToString:@"status"]) {

AVPlayerItemStatus status = _item.status;

switch (status) {

case AVPlayerItemStatusReadyToPlay:

{

[_player play];

_videoLength = floor(_item.asset.duration.value * 1.0/ _item.asset.duration.timescale);

}

break;

case AVPlayerItemStatusUnknown:

{

NSLog(@"AVPlayerItemStatusUnknown");

}

break;

case AVPlayerItemStatusFailed:

{

NSLog(@"AVPlayerItemStatusFailed");

NSLog(@"%@",_item.error);

}

break;

default:

break;

}

} else if ([keyPath isEqualToString:@"loadedTimeRanges"]) {

} else if ([keyPath isEqualToString:@"playbackBufferEmpty"]) {

}

}

#pragma mark - Notic

- (void)addVideoNotic {

//Notification

[[NSNotificationCenter defaultCenter] addObserver:self selector:@selector(movieToEnd:) name:AVPlayerItemDidPlayToEndTimeNotification object:_item];// scope to this view's item; nil would also deliver other players' notifications

[[NSNotificationCenter defaultCenter] addObserver:self selector:@selector(movieJumped:) name:AVPlayerItemTimeJumpedNotification object:_item];

[[NSNotificationCenter defaultCenter] addObserver:self selector:@selector(movieStalle:) name:AVPlayerItemPlaybackStalledNotification object:_item];

[[NSNotificationCenter defaultCenter] addObserver:self selector:@selector(backGroundPauseMoive) name:UIApplicationDidEnterBackgroundNotification object:nil];

}

- (void)removeVideoNotic {

[[NSNotificationCenter defaultCenter] removeObserver:self name:AVPlayerItemDidPlayToEndTimeNotification object:nil];

[[NSNotificationCenter defaultCenter] removeObserver:self name:AVPlayerItemPlaybackStalledNotification object:nil];

[[NSNotificationCenter defaultCenter] removeObserver:self name:AVPlayerItemTimeJumpedNotification object:nil];

[[NSNotificationCenter defaultCenter] removeObserver:self];

}

- (void)movieToEnd:(NSNotification *)notic {

NSLog(@"%@",NSStringFromSelector(_cmd));

}

- (void)movieJumped:(NSNotification *)notic {

NSLog(@"%@",NSStringFromSelector(_cmd));

}

- (void)movieStalle:(NSNotification *)notic {

NSLog(@"%@",NSStringFromSelector(_cmd));

}

- (void)backGroundPauseMoive {

NSLog(@"%@",NSStringFromSelector(_cmd));

}

#pragma mark - TimerObserver

- (void)addVideoTimerObserver {

__weak typeof (self)self_ = self;

_timeObser = [_player addPeriodicTimeObserverForInterval:CMTimeMake(1, 1) queue:NULL usingBlock:^(CMTime time) {

float currentTimeValue = time.value*1.0/time.timescale/self_.videoLength;

NSString *currentString = [self_ getStringFromCMTime:time];

if ([self_.someDelegate respondsToSelector:@selector(flushCurrentTime:sliderValue:)]) {

[self_.someDelegate flushCurrentTime:currentString sliderValue:currentTimeValue];

} else {

NSLog(@"no response");

}

NSLog(@"%@",self_.someDelegate);

}];

}

- (void)removeVideoTimerObserver {

NSLog(@"%@",NSStringFromSelector(_cmd));

[_player removeTimeObserver:_timeObser];

}

#pragma mark - Utils

- (NSString *)getStringFromCMTime:(CMTime)time

{

float currentTimeValue = (CGFloat)time.value/time.timescale;// current playback position in seconds

NSDate * currentDate = [NSDate dateWithTimeIntervalSince1970:currentTimeValue];

NSCalendar *calendar = [[NSCalendar alloc] initWithCalendarIdentifier:NSCalendarIdentifierGregorian];

calendar.timeZone = [NSTimeZone timeZoneForSecondsFromGMT:0];// use UTC so the local time-zone offset is not added to the hours

NSInteger unitFlags = NSCalendarUnitHour | NSCalendarUnitMinute | NSCalendarUnitSecond ;

NSDateComponents *components = [calendar components:unitFlags fromDate:currentDate];

if (currentTimeValue >= 3600 )

{

return [NSString stringWithFormat:@"%ld:%02ld:%02ld",(long)components.hour,(long)components.minute,(long)components.second];

}

else

{

return [NSString stringWithFormat:@"%02ld:%02ld",(long)components.minute,(long)components.second];

}

}

- (NSString *)getVideoLengthFromTimeLength:(float)timeLength

{

NSDate * date = [NSDate dateWithTimeIntervalSince1970:timeLength];

NSCalendar *calendar = [[NSCalendar alloc] initWithCalendarIdentifier:NSCalendarIdentifierGregorian];

calendar.timeZone = [NSTimeZone timeZoneForSecondsFromGMT:0];// use UTC so the local time-zone offset is not added to the hours

NSInteger unitFlags = NSCalendarUnitHour | NSCalendarUnitMinute | NSCalendarUnitSecond ;

NSDateComponents *components = [calendar components:unitFlags fromDate:date];

if (timeLength >= 3600 )

{

return [NSString stringWithFormat:@"%ld:%02ld:%02ld",(long)components.hour,(long)components.minute,(long)components.second];

}

else

{

return [NSString stringWithFormat:@"%02ld:%02ld",(long)components.minute,(long)components.second];

}

}

- (void)layoutSubviews {

[super layoutSubviews];

_playerLayer.frame = self.bounds;

}

#pragma mark - release

- (void)dealloc {

NSLog(@"%@",NSStringFromSelector(_cmd));

[self removeVideoTimerObserver];

[self removeVideoNotic];

[self removeVideoKVO];

}

@end

#pragma mark - VideoView (Guester)

@implementation VideoView (Guester)

- (void)addSwipeView {

_panGesture = [[UIPanGestureRecognizer alloc] initWithTarget:self action:@selector(swipeAction:)];

[self addGestureRecognizer:_panGesture];

[self setUpDarkView];

}

- (void)setUpDarkView {

_darkView = [[UIView alloc] init];

[_darkView setTranslatesAutoresizingMaskIntoConstraints:NO];

[_darkView setBackgroundColor:[UIColor blackColor]];

_darkView.alpha = 0.0;

[self addSubview:_darkView];

NSMutableArray *darkArray = [NSMutableArray array];

[darkArray addObjectsFromArray:[NSLayoutConstraint constraintsWithVisualFormat:@"H:|[_darkView]|" options:0 metrics:nil views:NSDictionaryOfVariableBindings(_darkView)]];

[darkArray addObjectsFromArray:[NSLayoutConstraint constraintsWithVisualFormat:@"V:|[_darkView]|" options:0 metrics:nil views:NSDictionaryOfVariableBindings(_darkView)]];

[self addConstraints:darkArray];

}

- (void)swipeAction:(UIPanGestureRecognizer *)gesture {

switch (gesture.state) {

case UIGestureRecognizerStateBegan:

{

_changeKind = ChangeNone;

_lastPoint = [gesture locationInView:self];

}

break;

case UIGestureRecognizerStateChanged:

{

[self getChangeKindValue:[gesture locationInView:self]];

}

break;

case UIGestureRecognizerStateEnded:

{

if (_changeKind == ChangeCMTime) {

[self changeEndForCMTime:[gesture locationInView:self]];

}

_changeKind = ChangeNone;

_lastPoint = CGPointZero;

}

default:

break;

}

}

- (void)getChangeKindValue:(CGPoint)pointNow {

switch (_changeKind) {

case ChangeNone:

{

[self changeForNone:pointNow];

}

break;

case ChangeCMTime:

{

[self changeForCMTime:pointNow];

}

break;

case ChangeLigth:

{

[self changeForLigth:pointNow];

}

break;

case ChangeVoice:

{

[self changeForVoice:pointNow];

}

break;

default:

break;

}

}

- (void)changeForNone:(CGPoint) pointNow {

if (fabs(pointNow.x - _lastPoint.x) > fabs(pointNow.y - _lastPoint.y)) {

_changeKind = ChangeCMTime;

} else {

float halfWight = self.bounds.size.width / 2;

if (_lastPoint.x < halfWight) {

_changeKind = ChangeLigth;

} else {

_changeKind = ChangeVoice;

}

_lastPoint = pointNow;

}

}

- (void)changeForCMTime:(CGPoint) pointNow {

float number = fabs(pointNow.x - _lastPoint.x);

if (pointNow.x > _lastPoint.x && number > 10) {

float currentTime = CMTimeGetSeconds(_player.currentTime);// floating-point seconds; integer value / timescale would truncate

float tobeTime = currentTime + number*0.5;

NSLog(@"forwart to changeTo time:%f",tobeTime);

} else if (pointNow.x < _lastPoint.x && number > 10) {

float currentTime = CMTimeGetSeconds(_player.currentTime);

float tobeTime = currentTime - number*0.5;

NSLog(@"back to time:%f",tobeTime);

}

}

- (void)changeForLigth:(CGPoint) pointNow {

float number = fabs(pointNow.y - _lastPoint.y);

if (pointNow.y > _lastPoint.y && number > 10) {

_lastPoint = pointNow;

[self minLigth];

} else if (pointNow.y < _lastPoint.y && number > 10) {

_lastPoint = pointNow;

[self upperLigth];

}

}

- (void)changeForVoice:(CGPoint)pointNow {

float number = fabs(pointNow.y - _lastPoint.y);

if (pointNow.y > _lastPoint.y && number > 10) {

_lastPoint = pointNow;

[self minVolume];

} else if (pointNow.y < _lastPoint.y && number > 10) {

_lastPoint = pointNow;

[self upperVolume];

}

}

- (void)changeEndForCMTime:(CGPoint)pointNow {

if (pointNow.x > _lastPoint.x ) {

NSLog(@"end for CMTime Upper");

float length = fabs(pointNow.x - _lastPoint.x);

[self upperCMTime:length];

} else {

NSLog(@"end for CMTime min");

float length = fabs(pointNow.x - _lastPoint.x);

[self mineCMTime:length];

}

}

- (void)upperLigth {

if (_darkView.alpha >= 0.1) {

_darkView.alpha = _darkView.alpha - 0.1;

}

}

- (void)minLigth {

if (_darkView.alpha <= 1.0) {

_darkView.alpha = _darkView.alpha + 0.1;

}

}

- (void)upperVolume {

if (self.volumeSlider.value <= 1.0) {

self.volumeSlider.value = self.volumeSlider.value + 0.1 ;

}

}

- (void)minVolume {

if (self.volumeSlider.value >= 0.0) {

self.volumeSlider.value = self.volumeSlider.value - 0.1 ;

}

}

#pragma mark -CMTIME

- (void)upperCMTime:(float)length {

float currentTime = CMTimeGetSeconds(_player.currentTime);// floating-point seconds; integer value / timescale would truncate

float tobeTime = currentTime + length*0.5;

if (tobeTime > _videoLength) {

[_player seekToTime:_item.asset.duration];

} else {

[_player seekToTime:CMTimeMakeWithSeconds(tobeTime, 600)];

}

}

- (void)mineCMTime:(float)length {

float currentTime = CMTimeGetSeconds(_player.currentTime);

float tobeTime = currentTime - length*0.5;

if (tobeTime <= 0) {

[_player seekToTime:kCMTimeZero];

} else {

[_player seekToTime:CMTimeMakeWithSeconds(tobeTime, 600)];

}

}

- (MPVolumeView *)volumeView {

if (_volumeView == nil) {

_volumeView = [[MPVolumeView alloc] init];

_volumeView.hidden = YES;

[self addSubview:_volumeView];

}

return _volumeView;

}

- (UISlider *)volumeSlider {

if (_volumeSlider== nil) {

NSLog(@"%@",[self.volumeView subviews]);

for (UIView *subView in [self.volumeView subviews]) {

if ([subView.class.description isEqualToString:@"MPVolumeSlider"]) {

_volumeSlider = (UISlider*)subView;

break;

}

}

}

return _volumeSlider;

}

@end
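The pan-gesture category above boils down to three small decisions, modeled here in plain Swift so they can be checked without UIKit. `Point`, `PanKind`, `classifyPan`, `seekSeconds` and `step` are hypothetical names. The 0.5 seconds-per-point factor, the 0.1 step and the clamping of the seek target come from the Objective-C code; clamping the brightness and volume steps to 0...1 is an added assumption.

```swift
// Hypothetical pure-Swift model of the pan-gesture decisions in VideoView (Guester).
struct Point { var x: Double; var y: Double }

// Mirrors ChangeCMTime / ChangeLigth / ChangeVoice.
enum PanKind { case seek, brightness, volume }

/// Mostly horizontal movement seeks; vertical movement adjusts brightness
/// on the left half of the view and volume on the right half.
func classifyPan(from start: Point, to now: Point, viewWidth: Double) -> PanKind {
    if abs(now.x - start.x) > abs(now.y - start.y) { return .seek }
    return start.x < viewWidth / 2 ? .brightness : .volume
}

/// Seek target after a horizontal swipe of `dx` points (negative = backwards),
/// at 0.5 s per point, clamped to 0...duration.
func seekSeconds(current: Double, dx: Double, duration: Double) -> Double {
    min(max(current + dx * 0.5, 0), duration)
}

/// One brightness or volume step of 0.1, clamped to 0...1.
func step(_ value: Double, up: Bool) -> Double {
    min(max(value + (up ? 0.1 : -0.1), 0), 1)
}
```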

- AVViewController implementation:

#import <UIKit/UIKit.h>

@interface AVViewController : UIViewController

@end

#import "AVViewController.h"

#import "VideoView.h"

@interface AVViewController () <VideoSomeDelegate>

@property (nonatomic ,strong) VideoView *videoView;

@property (nonatomic ,strong) NSMutableArray<NSLayoutConstraint *> *array;

@property (nonatomic ,strong) UISlider *videoSlider;

@property (nonatomic ,strong) NSMutableArray<NSLayoutConstraint *> *sliderArray;

@end

@implementation AVViewController

- (void)viewDidLoad {

[super viewDidLoad];

[self.view setBackgroundColor:[UIColor whiteColor]];

[self initVideoView];

}

- (void)initVideoView {

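//NSString *path = [[NSBundle mainBundle] pathForResource:@"some" ofType:@"mp4"];// plays a local file instead; local playback also requires changing VideoView.m, e.g. creating the URL with fileURLWithPath: rather than URLWithString: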

NSString *path = @"http://static.tripbe.com/videofiles/20121214/9533522808.f4v.mp4";

_videoView = [[VideoView alloc] initWithUrl:path delegate:self];

_videoView.someDelegate = self;

[_videoView setTranslatesAutoresizingMaskIntoConstraints:NO];

[self.view addSubview:_videoView];

[self initVideoSlider];

if (self.traitCollection.verticalSizeClass == UIUserInterfaceSizeClassCompact) {

[self installLandspace];

} else {

[self installVertical];

}

}

- (void)installVertical {

if (_array != nil) {

[self.view removeConstraints:_array];

[_array removeAllObjects];

[self.view removeConstraints:_sliderArray];

[_sliderArray removeAllObjects];

} else {

_array = [NSMutableArray array];

_sliderArray = [NSMutableArray array];

}

id topGuide = self.topLayoutGuide;

NSDictionary *dic = @{

@"top":@100,@"height":@180,@"edge":@20,@"space":@80};

[_array addObjectsFromArray:[NSLayoutConstraint constraintsWithVisualFormat:@"H:|[_videoView]|" options:0 metrics:nil views:NSDictionaryOfVariableBindings(_videoView)]];

[_array addObjectsFromArray:[NSLayoutConstraint constraintsWithVisualFormat:@"H:|-(edge)-[_videoSlider]-(edge)-|" options:0 metrics:dic views:NSDictionaryOfVariableBindings(_videoSlider)]];

[_array addObjectsFromArray:[NSLayoutConstraint constraintsWithVisualFormat:@"V:|[topGuide]-(top)-[_videoView(==height)]-(space)-[_videoSlider]" options:0 metrics:dic views:NSDictionaryOfVariableBindings(_videoView,topGuide,_videoSlider)]];

[self.view addConstraints:_array];

}

- (void)installLandspace {

if (_array != nil) {

[self.view removeConstraints:_array];

[_array removeAllObjects];

[self.view removeConstraints:_sliderArray];

[_sliderArray removeAllObjects];

} else {

_array = [NSMutableArray array];

_sliderArray = [NSMutableArray array];

}

id topGuide = self.topLayoutGuide;

NSDictionary *dic = @{

@"edge":@20,@"space":@30};

[_array addObjectsFromArray:[NSLayoutConstraint constraintsWithVisualFormat:@"H:|[_videoView]|" options:0 metrics:nil views:NSDictionaryOfVariableBindings(_videoView)]];

[_array addObjectsFromArray:[NSLayoutConstraint constraintsWithVisualFormat:@"V:|[topGuide][_videoView]|" options:0 metrics:nil views:NSDictionaryOfVariableBindings(_videoView,topGuide)]];

[self.view addConstraints:_array];

[_sliderArray addObjectsFromArray:[NSLayoutConstraint constraintsWithVisualFormat:@"H:|-(edge)-[_videoSlider]-(edge)-|" options:0 metrics:dic views:NSDictionaryOfVariableBindings(_videoSlider)]];

[_sliderArray addObjectsFromArray:[NSLayoutConstraint constraintsWithVisualFormat:@"V:[_videoSlider]-(space)-|" options:0 metrics:dic views:NSDictionaryOfVariableBindings(_videoSlider)]];

[self.view addConstraints:_sliderArray];

}

- (void)initVideoSlider {

_videoSlider = [[UISlider alloc] init];

[_videoSlider setTranslatesAutoresizingMaskIntoConstraints:NO];

[_videoSlider setThumbImage:[UIImage imageNamed:@"sliderButton"] forState:UIControlStateNormal];

[self.view addSubview:_videoSlider];

}

- (void)willTransitionToTraitCollection:(UITraitCollection *)newCollection withTransitionCoordinator:(id <UIViewControllerTransitionCoordinator>)coordinator {

[super willTransitionToTraitCollection:newCollection withTransitionCoordinator:coordinator];

[coordinator animateAlongsideTransition:^(id <UIViewControllerTransitionCoordinatorContext> context) {

if (newCollection.verticalSizeClass == UIUserInterfaceSizeClassCompact) {

[self installLandspace];

} else {

[self installVertical];

}

[self.view setNeedsLayout];

} completion:nil];

}

- (void)didReceiveMemoryWarning {

[super didReceiveMemoryWarning];

}

#pragma mark -

- (void)flushCurrentTime:(NSString *)timeString sliderValue:(float)sliderValue {

_videoSlider.value = sliderValue;

}

@end
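The Objective-C code above registers for AVPlayerItem notifications through the notification center, and the `object` argument decides which posters it hears from: `nil` delivers the notification from every player item in the app, while a specific item limits delivery to that item. The sketch below demonstrates this with a private `NotificationCenter` and hypothetical stand-ins (`DemoItem`, `.demoDidPlayToEnd`, `EndWatcher`); with AVFoundation the real name would be `.AVPlayerItemDidPlayToEndTime` and the object the `AVPlayerItem` you created.

```swift
import Foundation

// Hypothetical stand-ins: DemoItem plays the role of an AVPlayerItem (also an NSObject),
// and .demoDidPlayToEnd the role of the did-play-to-end notification.
final class DemoItem: NSObject {}

extension Notification.Name {
    static let demoDidPlayToEnd = Notification.Name("DemoDidPlayToEnd")
}

/// Counts end-of-playback notifications, optionally only those posted by `item`.
final class EndWatcher {
    private(set) var count = 0
    private let center: NotificationCenter
    private var token: NSObjectProtocol?

    init(center: NotificationCenter, item: DemoItem?) {
        self.center = center
        // object: nil  -> notifications with this name from any sender are delivered
        // object: item -> only notifications whose object is this item are delivered
        // queue: nil   -> the block runs synchronously on the posting thread
        token = center.addObserver(forName: .demoDidPlayToEnd, object: item, queue: nil) { [weak self] _ in
            self?.count += 1
        }
    }

    deinit {
        if let token = token { center.removeObserver(token) }
    }
}
```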

AVPlayer video playback: Swift code

There is only one main file:

//

// kylKYLPlayer.swift

// kylVideoKYLPlayer

//

// Created by yulu kong on 2019/8/10.

// Copyright © 2019 yulu kong. All rights reserved.

//

import UIKit

import Foundation

import AVFoundation

import CoreGraphics

// MARK: - types

/// Video fill mode options for `KYLPlayer.fillMode`.

///

/// - resize: Stretch to fill.

/// - resizeAspectFill: Preserve aspect ratio, filling bounds.

/// - resizeAspectFit: Preserve aspect ratio, fill within bounds.

public enum KYLPlayerFillMode {

case resize

case resizeAspectFill

case resizeAspectFit // default

public var avFoundationType: String {

get {

switch self {

case .resize:

return AVLayerVideoGravity.resize.rawValue

case .resizeAspectFill:

return AVLayerVideoGravity.resizeAspectFill.rawValue

case .resizeAspectFit:

return AVLayerVideoGravity.resizeAspect.rawValue

}

}

}

}

/// Asset playback states.

public enum PlaybackState: Int, CustomStringConvertible {

case stopped = 0

case playing

case paused

case failed

public var description: String {

get {

switch self {

case .stopped:

return "Stopped"

case .playing:

return "Playing"

case .failed:

return "Failed"

case .paused:

return "Paused"

}

}

}

}

/// Asset buffering states.

public enum BufferingState: Int, CustomStringConvertible {

case unknown = 0

case ready

case delayed

public var description: String {

get {

switch self {

case .unknown:

return "Unknown"

case .ready:

return "Ready"

case .delayed:

return "Delayed"

}

}

}

}

// MARK: - KYLPlayerDelegate

/// KYLPlayer delegate protocol

public protocol KYLPlayerDelegate: NSObjectProtocol {

func KYLPlayerReady(_ KYLPlayer: KYLPlayer)

func KYLPlayerPlaybackStateDidChange(_ KYLPlayer: KYLPlayer)

func KYLPlayerBufferingStateDidChange(_ KYLPlayer: KYLPlayer)

// This is the time in seconds that the video has been buffered.

// If implementing a UIProgressView, use this value / KYLPlayer.maximumDuration to set progress.

func KYLPlayerBufferTimeDidChange(_ bufferTime: Double)

}

/// KYLPlayer playback protocol

public protocol KYLPlayerPlaybackDelegate: NSObjectProtocol {

func KYLPlayerCurrentTimeDidChange(_ KYLPlayer: KYLPlayer)

func KYLPlayerPlaybackWillStartFromBeginning(_ KYLPlayer: KYLPlayer)

func KYLPlayerPlaybackDidEnd(_ KYLPlayer: KYLPlayer)

func KYLPlayerPlaybackWillLoop(_ KYLPlayer: KYLPlayer)

}

// MARK: - KYLPlayer

/// ▶️ KYLPlayer, simple way to play and stream media

open class KYLPlayer: UIViewController {

/// KYLPlayer delegate.

open weak var KYLPlayerDelegate: KYLPlayerDelegate?

/// Playback delegate.

open weak var playbackDelegate: KYLPlayerPlaybackDelegate?

// configuration

/// Local or remote URL for the file asset to be played.

///

/// - Parameter url: URL of the asset.

open var url: URL? {

didSet {

setup(url: url)

}

}

/// Determines if the video should autoplay when a url is set

///

/// - Parameter bool: defaults to true

open var autoplay: Bool = true

/// For setting up with AVAsset instead of URL

/// Note: Resets URL (cannot set both)

open var asset: AVAsset? {

get {

return _asset }

set {

_ = newValue.map {

setupAsset($0) } }

}

/// Mutes audio playback when true.

open var muted: Bool {

get {

return self._avKYLPlayer.isMuted

}

set {

self._avKYLPlayer.isMuted = newValue

}

}

/// Volume for the KYLPlayer, ranging from 0.0 to 1.0 on a linear scale.

open var volume: Float {

get {

return self._avKYLPlayer.volume

}

set {

self._avKYLPlayer.volume = newValue

}

}

/// Specifies how the video is displayed within a KYLPlayer layer’s bounds.

/// The default value is `AVLayerVideoGravityResizeAspect`. See the `KYLPlayerFillMode` enum.

open var fillMode: String {

get {

return self._KYLPlayerView.fillMode

}

set {

self._KYLPlayerView.fillMode = newValue

}

}

/// Pauses playback automatically when resigning active.

open var playbackPausesWhenResigningActive: Bool = true

/// Pauses playback automatically when backgrounded.

open var playbackPausesWhenBackgrounded: Bool = true

/// Resumes playback when became active.

open var playbackResumesWhenBecameActive: Bool = true

/// Resumes playback when entering foreground.

open var playbackResumesWhenEnteringForeground: Bool = true

// state

/// Playback automatically loops continuously when true.

open var playbackLoops: Bool {

get {

return self._avKYLPlayer.actionAtItemEnd == .none

}

set {

if newValue {

self._avKYLPlayer.actionAtItemEnd = .none

} else {

self._avKYLPlayer.actionAtItemEnd = .pause

}

}

}

/// Playback freezes on the last frame at the end when true.

open var playbackFreezesAtEnd: Bool = false

/// Current playback state of the KYLPlayer.

open var playbackState: PlaybackState = .stopped {

didSet {

if playbackState != oldValue || !playbackEdgeTriggered {

self.KYLPlayerDelegate?.KYLPlayerPlaybackStateDidChange(self)

}

}

}

/// Current buffering state of the KYLPlayer.

open var bufferingState: BufferingState = .unknown {

didSet {

if bufferingState != oldValue || !playbackEdgeTriggered {

self.KYLPlayerDelegate?.KYLPlayerBufferingStateDidChange(self)

}

}

}

/// Playback buffering size in seconds.

open var bufferSize: Double = 10

/// When true, delegate callbacks fire only when the playback or buffering state actually changes (edge-triggered); when false, they fire on every assignment.

open var playbackEdgeTriggered: Bool = true

/// Maximum duration of playback.

open var maximumDuration: TimeInterval {

get {

if let KYLPlayerItem = self._KYLPlayerItem {

return CMTimeGetSeconds(KYLPlayerItem.duration)

} else {

return CMTimeGetSeconds(CMTime.indefinite)

}

}

}

/// Media playback's current time.

open var currentTime: TimeInterval {

get {

if let KYLPlayerItem = self._KYLPlayerItem {

return CMTimeGetSeconds(KYLPlayerItem.currentTime())

} else {

return CMTimeGetSeconds(CMTime.indefinite)

}

}

}

/// The natural dimensions of the media.

open var naturalSize: CGSize {

get {

if let KYLPlayerItem = self._KYLPlayerItem,

let track = KYLPlayerItem.asset.tracks(withMediaType: .video).first {

let size = track.naturalSize.applying(track.preferredTransform)

return CGSize(width: abs(size.width), height: abs(size.height))

} else {

return CGSize.zero

}

}

}

/// KYLPlayer view's initial background color.

open var layerBackgroundColor: UIColor? {

get {

guard let backgroundColor = self._KYLPlayerView.playerLayer.backgroundColor

else {

return nil

}

return UIColor(cgColor: backgroundColor)

}

set {

self._KYLPlayerView.playerLayer.backgroundColor = newValue?.cgColor

}

}

// MARK: - private instance vars

internal var _asset: AVAsset? {

didSet {

if let _ = self._asset {

// self.setupKYLPlayerItem(nil)

}

}

}

internal var _avKYLPlayer: AVPlayer

internal var _KYLPlayerItem: AVPlayerItem?

internal var _timeObserver: Any?

internal var _KYLPlayerView: KYLPlayerView = KYLPlayerView(frame: .zero)

internal var _seekTimeRequested: CMTime?

internal var _lastBufferTime: Double = 0

// Boolean that determines whether the user or calling code has triggered autoplay manually.

internal var _hasAutoplayActivated: Bool = true

// MARK: - object lifecycle

public convenience init() {

self.init(nibName: nil, bundle: nil)

}

public required init?(coder aDecoder: NSCoder) {

self._avKYLPlayer = AVPlayer()

self._avKYLPlayer.actionAtItemEnd = .pause

self._timeObserver = nil

super.init(coder: aDecoder)

}

public override init(nibName nibNameOrNil: String?, bundle nibBundleOrNil: Bundle?) {

self._avKYLPlayer = AVPlayer()

self._avKYLPlayer.actionAtItemEnd = .pause

self._timeObserver = nil

super.init(nibName: nibNameOrNil, bundle: nibBundleOrNil)

}

deinit {

self._avKYLPlayer.pause()

self.setupKYLPlayerItem(nil)

self.removeKYLPlayerObservers()

self.KYLPlayerDelegate = nil

self.removeApplicationObservers()

self.playbackDelegate = nil

self.removeKYLPlayerLayerObservers()

self._KYLPlayerView.player = nil

}

// MARK: - view lifecycle

open override func loadView() {

self._KYLPlayerView.playerLayer.isHidden = true

self.view = self._KYLPlayerView

}

open override func viewDidLoad() {

super.viewDidLoad()

if let url = url {

setup(url: url)

} else if let asset = asset {

setupAsset(asset)

}

self.addKYLPlayerLayerObservers()

self.addKYLPlayerObservers()

self.addApplicationObservers()

}

open override func viewDidDisappear(_ animated: Bool) {

super.viewDidDisappear(animated)

if self.playbackState == .playing {

self.pause()

}

}

// MARK: - Playback funcs

/// Begins playback of the media from the beginning.

open func playFromBeginning() {

self.playbackDelegate?.KYLPlayerPlaybackWillStartFromBeginning(self)

self._avKYLPlayer.seek(to: CMTime.zero)

self.playFromCurrentTime()

}

/// Begins playback of the media from the current time.

open func playFromCurrentTime() {

if !autoplay {

//external call to this method with auto play off. activate it before calling play

_hasAutoplayActivated = true

}

play()

}

fileprivate func play() {

if autoplay || _hasAutoplayActivated {

self.playbackState = .playing

self._avKYLPlayer.play()

}

}

/// Pauses playback of the media.

open func pause() {

if self.playbackState != .playing {

return

}

self._avKYLPlayer.pause()

self.playbackState = .paused

}

/// Stops playback of the media.

open func stop() {

if self.playbackState == .stopped {

return

}

self._avKYLPlayer.pause()

self.playbackState = .stopped

self.playbackDelegate?.KYLPlayerPlaybackDidEnd(self)

}

/// Updates playback to the specified time.

///

/// - Parameters:

/// - time: The time to seek to.

/// - completionHandler: Called when the seek completes; the Bool is false if the seek was interrupted.

open func seek(to time: CMTime, completionHandler: ((Bool) -> Swift.Void)? = nil) {

if let KYLPlayerItem = self._KYLPlayerItem {

return KYLPlayerItem.seek(to: time, completionHandler: completionHandler)

} else {

_seekTimeRequested = time

}

}

/// Updates the playback time to the specified time bound.

///

/// - Parameters:

/// - time: The time to switch to move the playback.

/// - toleranceBefore: The tolerance allowed before time.

/// - toleranceAfter: The tolerance allowed after time.

/// - completionHandler: call block handler after seeking

open func seekToTime(to time: CMTime, toleranceBefore: CMTime, toleranceAfter: CMTime, completionHandler: ((Bool) -> Swift.Void)? = nil) {

if let KYLPlayerItem = self._KYLPlayerItem {

return KYLPlayerItem.seek(to: time, toleranceBefore: toleranceBefore, toleranceAfter: toleranceAfter, completionHandler: completionHandler)

}

}

    /// Captures a snapshot of the current KYLPlayer view.
    ///
    /// - Returns: A UIImage of the KYLPlayer view.
    open func takeSnapshot() -> UIImage {
        UIGraphicsBeginImageContextWithOptions(self._KYLPlayerView.frame.size, false, UIScreen.main.scale)
        // Balance the Begin call on every exit path.
        defer { UIGraphicsEndImageContext() }
        self._KYLPlayerView.drawHierarchy(in: self._KYLPlayerView.bounds, afterScreenUpdates: true)
        // Avoid a crash if no image could be produced (e.g. a zero-sized view).
        return UIGraphicsGetImageFromCurrentImageContext() ?? UIImage()
    }

    /// Returns the AVPlayerLayer backing the player view, for consumers
    /// such as Picture in Picture.
    open func KYLPlayerLayer() -> AVPlayerLayer? {
        return self._KYLPlayerView.playerLayer
    }
}

// MARK: - loading funcs

extension KYLPlayer {

    fileprivate func setup(url: URL?) {
        guard isViewLoaded else { return }

        // Ensure everything is reset beforehand.
        if self.playbackState == .playing {
            self.pause()
        }

        // Reset the autoplay flag since a new URL is set.
        _hasAutoplayActivated = false
        if autoplay {
            playbackState = .playing
        } else {
            playbackState = .stopped
        }

        // self.setupKYLPlayerItem(nil)

        if let url = url {
            let asset = AVURLAsset(url: url, options: .none)
            self.setupAsset(asset)
        }
    }

    fileprivate func setupAsset(_ asset: AVAsset) {
        guard isViewLoaded else { return }

        if self.playbackState == .playing {
            self.pause()
        }

        self.bufferingState = .unknown
        self._asset = asset

        let keys = [KYLPlayerTracksKey, KYLPlayerPlayableKey, KYLPlayerDurationKey]
        self._asset?.loadValuesAsynchronously(forKeys: keys, completionHandler: { () -> Void in
            // The completion handler may run on a background queue, but the code below
            // updates playback state, registers KVO and replaces the AVPlayer's item,
            // so hop to the main queue first.
            self.executeClosureOnMainQueueIfNecessary {
                for key in keys {
                    var error: NSError? = nil
                    let status = self._asset?.statusOfValue(forKey: key, error: &error)
                    if status == .failed {
                        self.playbackState = .failed
                        return
                    }
                }

                if let asset = self._asset {
                    if !asset.isPlayable {
                        self.playbackState = .failed
                        return
                    }
                    let KYLPlayerItem = AVPlayerItem(asset: asset)
                    self.setupKYLPlayerItem(KYLPlayerItem)
                }
            }
        })
    }

    fileprivate func setupKYLPlayerItem(_ KYLPlayerItem: AVPlayerItem?) {
        self._KYLPlayerItem?.removeObserver(self, forKeyPath: KYLPlayerFullBufferKey, context: &KYLPlayerItemObserverContext)
        self._KYLPlayerItem?.removeObserver(self, forKeyPath: KYLPlayerEmptyBufferKey, context: &KYLPlayerItemObserverContext)
        self._KYLPlayerItem?.removeObserver(self, forKeyPath: KYLPlayerKeepUpKey, context: &KYLPlayerItemObserverContext)
        self._KYLPlayerItem?.removeObserver(self, forKeyPath: KYLPlayerStatusKey, context: &KYLPlayerItemObserverContext)
        self._KYLPlayerItem?.removeObserver(self, forKeyPath: KYLPlayerLoadedTimeRangesKey, context: &KYLPlayerItemObserverContext)

        if let currentKYLPlayerItem = self._KYLPlayerItem {
            NotificationCenter.default.removeObserver(self, name: .AVPlayerItemDidPlayToEndTime, object: currentKYLPlayerItem)
            NotificationCenter.default.removeObserver(self, name: .AVPlayerItemFailedToPlayToEndTime, object: currentKYLPlayerItem)
            NotificationCenter.default.removeObserver(self, name: .AVPlayerItemPlaybackStalled, object: currentKYLPlayerItem)
            NotificationCenter.default.removeObserver(self, name: .AVPlayerItemTimeJumped, object: currentKYLPlayerItem)
            NotificationCenter.default.removeObserver(self, name: .AVPlayerItemNewAccessLogEntry, object: currentKYLPlayerItem)
            NotificationCenter.default.removeObserver(self, name: .AVPlayerItemNewErrorLogEntry, object: currentKYLPlayerItem)
        }

        self._KYLPlayerItem = KYLPlayerItem

        if let seek = _seekTimeRequested, self._KYLPlayerItem != nil {
            _seekTimeRequested = nil
            self.seek(to: seek)
        }

        self._KYLPlayerItem?.addObserver(self, forKeyPath: KYLPlayerEmptyBufferKey, options: [.new, .old], context: &KYLPlayerItemObserverContext)
        self._KYLPlayerItem?.addObserver(self, forKeyPath: KYLPlayerFullBufferKey, options: [.new, .old], context: &KYLPlayerItemObserverContext)
        self._KYLPlayerItem?.addObserver(self, forKeyPath: KYLPlayerKeepUpKey, options: [.new, .old], context: &KYLPlayerItemObserverContext)
        self._KYLPlayerItem?.addObserver(self, forKeyPath: KYLPlayerStatusKey, options: [.new, .old], context: &KYLPlayerItemObserverContext)
        self._KYLPlayerItem?.addObserver(self, forKeyPath: KYLPlayerLoadedTimeRangesKey, options: [.new, .old], context: &KYLPlayerItemObserverContext)

        if let updatedKYLPlayerItem = self._KYLPlayerItem {
            NotificationCenter.default.addObserver(self, selector: #selector(KYLPlayerItemDidPlayToEndTime(_:)), name: .AVPlayerItemDidPlayToEndTime, object: updatedKYLPlayerItem)
            NotificationCenter.default.addObserver(self, selector: #selector(KYLPlayerItemFailedToPlayToEndTime(_:)), name: .AVPlayerItemFailedToPlayToEndTime, object: updatedKYLPlayerItem)
            NotificationCenter.default.addObserver(self, selector: #selector(KYLPlayerItemPlaybackStalled(_:)), name: .AVPlayerItemPlaybackStalled, object: updatedKYLPlayerItem)
            NotificationCenter.default.addObserver(self, selector: #selector(KYLPlayerItemTimeJumped(_:)), name: .AVPlayerItemTimeJumped, object: updatedKYLPlayerItem)
            NotificationCenter.default.addObserver(self, selector: #selector(KYLPlayerItemNewAccessLogEntry(_:)), name: .AVPlayerItemNewAccessLogEntry, object: updatedKYLPlayerItem)
            NotificationCenter.default.addObserver(self, selector: #selector(KYLPlayerItemNewErrorLogEntry(_:)), name: .AVPlayerItemNewErrorLogEntry, object: updatedKYLPlayerItem)
        }

        self._avKYLPlayer.replaceCurrentItem(with: self._KYLPlayerItem)

        // Update the new KYLPlayerItem settings.
        if self.playbackLoops {
            self._avKYLPlayer.actionAtItemEnd = .none
        } else {
            self._avKYLPlayer.actionAtItemEnd = .pause
        }
    }
}

// MARK: - NSNotifications

extension KYLPlayer {

    // MARK: - AVPlayerItem

    @objc internal func KYLPlayerItemDidPlayToEndTime(_ aNotification: Notification) {
        if self.playbackLoops {
            self.playbackDelegate?.KYLPlayerPlaybackWillLoop(self)
            self._avKYLPlayer.seek(to: CMTime.zero)
        } else {
            if self.playbackFreezesAtEnd {
                self.stop()
            } else {
                self._avKYLPlayer.seek(to: CMTime.zero, completionHandler: { _ in
                    self.stop()
                })
            }
        }
    }

    @objc internal func KYLPlayerItemFailedToPlayToEndTime(_ aNotification: Notification) {
        print("\(#function), \(bufferingState), \(bufferSize), \(playbackState)")
        self.playbackState = .failed
    }

    @objc internal func KYLPlayerItemPlaybackStalled(_ aNotification: Notification) {
        print("\(#function), \(bufferingState), \(bufferSize), \(playbackState)")
    }

    @objc internal func KYLPlayerItemTimeJumped(_ aNotification: Notification) {
        print("\(#function), \(bufferingState), \(bufferSize), \(playbackState)")
    }

    @objc internal func KYLPlayerItemNewAccessLogEntry(_ aNotification: Notification) {
        print("\(#function), \(bufferingState), \(bufferSize), \(playbackState)")
    }

    @objc internal func KYLPlayerItemNewErrorLogEntry(_ aNotification: Notification) {
        print("\(#function), \(bufferingState), \(bufferSize), \(playbackState)")
        self.playbackState = .failed
    }

    // MARK: - UIApplication

    internal func addApplicationObservers() {
        NotificationCenter.default.addObserver(self, selector: #selector(handleApplicationWillResignActive(_:)), name: UIApplication.willResignActiveNotification, object: UIApplication.shared)
        NotificationCenter.default.addObserver(self, selector: #selector(handleApplicationDidBecomeActive(_:)), name: UIApplication.didBecomeActiveNotification, object: UIApplication.shared)
        NotificationCenter.default.addObserver(self, selector: #selector(handleApplicationDidEnterBackground(_:)), name: UIApplication.didEnterBackgroundNotification, object: UIApplication.shared)
        NotificationCenter.default.addObserver(self, selector: #selector(handleApplicationWillEnterForeground(_:)), name: UIApplication.willEnterForegroundNotification, object: UIApplication.shared)
    }

    internal func removeApplicationObservers() {
        // Note: this removes every NotificationCenter registration of self,
        // including the AVPlayerItem observers added in setupKYLPlayerItem(_:).
        NotificationCenter.default.removeObserver(self)
    }

    // MARK: - handlers

    @objc internal func handleApplicationWillResignActive(_ aNotification: Notification) {
        if self.playbackState == .playing && self.playbackPausesWhenResigningActive {
            self.pause()
        }
    }

    @objc internal func handleApplicationDidBecomeActive(_ aNotification: Notification) {
        if self.playbackState != .playing && self.playbackResumesWhenBecameActive {
            self.play()
        }
    }

    @objc internal func handleApplicationDidEnterBackground(_ aNotification: Notification) {
        if self.playbackState == .playing && self.playbackPausesWhenBackgrounded {
            self.pause()
        }
    }

    @objc internal func handleApplicationWillEnterForeground(_ aNotification: Notification) {
        if self.playbackState != .playing && self.playbackResumesWhenEnteringForeground {
            self.play()
        }
    }
}

// MARK: - KVO

// KVO contexts
private var KYLPlayerObserverContext = 0
private var KYLPlayerItemObserverContext = 0
private var KYLPlayerLayerObserverContext = 0

// AVAsset keys loaded asynchronously in setupAsset(_:)
private let KYLPlayerTracksKey = "tracks"
private let KYLPlayerPlayableKey = "playable"
private let KYLPlayerDurationKey = "duration"

// AVPlayer KVO key
private let KYLPlayerRateKey = "rate"

// AVPlayerItem KVO keys
private let KYLPlayerStatusKey = "status"
private let KYLPlayerEmptyBufferKey = "playbackBufferEmpty"
private let KYLPlayerFullBufferKey = "playbackBufferFull"
private let KYLPlayerKeepUpKey = "playbackLikelyToKeepUp"
private let KYLPlayerLoadedTimeRangesKey = "loadedTimeRanges"

// AVPlayerLayer KVO key
private let KYLPlayerReadyForDisplayKey = "readyForDisplay"

extension KYLPlayer {

    // MARK: - AVPlayerLayer observers

    internal func addKYLPlayerLayerObservers() {
        self._KYLPlayerView.layer.addObserver(self, forKeyPath: KYLPlayerReadyForDisplayKey, options: [.new, .old], context: &KYLPlayerLayerObserverContext)
    }

    internal func removeKYLPlayerLayerObservers() {
        self._KYLPlayerView.layer.removeObserver(self, forKeyPath: KYLPlayerReadyForDisplayKey, context: &KYLPlayerLayerObserverContext)
    }

    // MARK: - AVPlayer observers

    internal func addKYLPlayerObservers() {
        // Report the current time to the delegate roughly every 1/100 s during playback.
        self._timeObserver = self._avKYLPlayer.addPeriodicTimeObserver(forInterval: CMTimeMake(value: 1, timescale: 100), queue: DispatchQueue.main, using: { [weak self] _ in
            guard let strongSelf = self else {
                return
            }
            strongSelf.playbackDelegate?.KYLPlayerCurrentTimeDidChange(strongSelf)
        })
        self._avKYLPlayer.addObserver(self, forKeyPath: KYLPlayerRateKey, options: [.new, .old], context: &KYLPlayerObserverContext)
    }

    internal func removeKYLPlayerObservers() {
        if let observer = self._timeObserver {
            self._avKYLPlayer.removeTimeObserver(observer)
            // Forget the token so it cannot be removed twice.
            self._timeObserver = nil
        }
        self._avKYLPlayer.removeObserver(self, forKeyPath: KYLPlayerRateKey, context: &KYLPlayerObserverContext)
    }

    // MARK: - KVO handling

    override open func observeValue(forKeyPath keyPath: String?, of object: Any?, change: [NSKeyValueChangeKey: Any]?, context: UnsafeMutableRawPointer?) {
        //print("\(#function), keyPath=\(String(describing: keyPath)), obj = \(String(describing: object)), change =\(String(describing: change))")

        if context == &KYLPlayerItemObserverContext {
            // Handle each key path on its own. The item status is read only from
            // "status": the buffer keys deliver Bool values whose 0/1 would otherwise
            // be mistaken for AVPlayerItem.Status raw values.
            if keyPath == KYLPlayerStatusKey {
                if let status = change?[NSKeyValueChangeKey.newKey] as? NSNumber {
                    switch status.intValue {
                    case AVPlayerItem.Status.readyToPlay.rawValue:
                        self._KYLPlayerView.playerLayer.player = self._avKYLPlayer
                        self._KYLPlayerView.playerLayer.isHidden = false
                    case AVPlayerItem.Status.failed.rawValue:
                        self.playbackState = PlaybackState.failed
                    default:
                        break
                    }
                }
            } else if keyPath == KYLPlayerKeepUpKey {
                if let item = self._KYLPlayerItem, item.isPlaybackLikelyToKeepUp {
                    self.bufferingState = .ready
                    if self.playbackState == .playing {
                        self.playFromCurrentTime()
                    }
                }
            } else if keyPath == KYLPlayerEmptyBufferKey {
                if let item = self._KYLPlayerItem {
                    print("isPlaybackBufferEmpty = \(item.isPlaybackBufferEmpty)")
                    if item.isPlaybackBufferEmpty {
                        self.bufferingState = .delayed
                    } else {
                        self.bufferingState = .ready
                    }
                }
            } else if keyPath == KYLPlayerFullBufferKey {
                if let item = self._KYLPlayerItem {
                    print("isPlaybackBufferFull = \(item.isPlaybackBufferFull)")
                }
            } else if keyPath == KYLPlayerLoadedTimeRangesKey {
                if let item = self._KYLPlayerItem {
                    self.bufferingState = .ready
                    let timeRanges = item.loadedTimeRanges
                    if let timeRange = timeRanges.first?.timeRangeValue {
                        let bufferedTime = CMTimeGetSeconds(CMTimeAdd(timeRange.start, timeRange.duration))
                        if _lastBufferTime != bufferedTime {
                            self.executeClosureOnMainQueueIfNecessary {
                                self.KYLPlayerDelegate?.KYLPlayerBufferTimeDidChange(bufferedTime)
                            }
                            _lastBufferTime = bufferedTime
                        }
                    }

                    let currentTime = CMTimeGetSeconds(item.currentTime())
                    if ((_lastBufferTime - currentTime) >= self.bufferSize ||
                        _lastBufferTime == maximumDuration ||
                        timeRanges.first == nil)
                        && self.playbackState == .playing
                    {
                        self.play()
                    }
                }
            }
        } else if context == &KYLPlayerLayerObserverContext {
            if self._KYLPlayerView.playerLayer.isReadyForDisplay {
                self.executeClosureOnMainQueueIfNecessary {
                    self.KYLPlayerDelegate?.KYLPlayerReady(self)
                }
            }
        } else if context == &KYLPlayerObserverContext {
            // KYLPlayerRateKey: registered in addKYLPlayerObservers(), currently not handled.
        } else {
            // Not one of our contexts: let the superclass handle it.
            super.observeValue(forKeyPath: keyPath, of: object, change: change, context: context)
        }
    }
}

// MARK: - queues

extension KYLPlayer {

    internal func executeClosureOnMainQueueIfNecessary(withClosure closure: @escaping () -> Void) {
        if Thread.isMainThread {
            closure()
        } else {
            DispatchQueue.main.async(execute: closure)
        }
    }
}

// MARK: - KYLPlayerView

internal class KYLPlayerView: UIView {

    // MARK: - properties

    override class var layerClass: AnyClass {
        return AVPlayerLayer.self
    }

    var playerLayer: AVPlayerLayer {
        return self.layer as! AVPlayerLayer
    }

    var player: AVPlayer? {
        get { return self.playerLayer.player }
        set { self.playerLayer.player = newValue }
    }

    var fillMode: String {
        get { return self.playerLayer.videoGravity.rawValue }
        set { self.playerLayer.videoGravity = AVLayerVideoGravity(rawValue: newValue) }
    }

    // MARK: - object lifecycle

    override init(frame: CGRect) {
        super.init(frame: frame)
        self.playerLayer.backgroundColor = UIColor.black.cgColor
        // Set the video scaling through videoGravity (the fillMode property above).
        // CALayer's own fillMode is an animation-timing setting and does not scale video.
        self.fillMode = KYLPlayerFillMode.resizeAspectFit.avFoundationType
    }

    required init?(coder aDecoder: NSCoder) {
        super.init(coder: aDecoder)
        self.playerLayer.backgroundColor = UIColor.black.cgColor
        self.fillMode = KYLPlayerFillMode.resizeAspectFit.avFoundationType
    }

    deinit {
        self.player?.pause()
        self.player = nil
    }
}
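The `loadedTimeRanges` branch of `observeValue` turns the first loaded time range into a buffered-up-to time and resumes playback once enough media sits ahead of the playhead. A minimal sketch of that arithmetic in plain Swift follows, assuming times in seconds as `Double` rather than `CMTime` so it runs without AVFoundation. `LoadedRange`, `bufferedEnd(of:)` and `shouldResume(...)` are hypothetical names; `bufferSize` and `maximumDuration` stand in for the KYLPlayer properties of the same names, assumed here to be in seconds.

```swift
// Hypothetical stand-in for CMTimeRange: plain seconds instead of CMTime,
// so the logic can run without AVFoundation.
struct LoadedRange {
    let start: Double
    let duration: Double
}

/// Buffered-up-to time of the first loaded range (start + duration), the same
/// quantity the KVO handler computes with CMTimeAdd and CMTimeGetSeconds.
/// Returns nil when no range has been reported yet.
func bufferedEnd(of ranges: [LoadedRange]) -> Double? {
    guard let first = ranges.first else { return nil }
    return first.start + first.duration
}

/// Mirrors the resume condition in the loadedTimeRanges branch: resume when at
/// least `bufferSize` seconds are buffered ahead of the playhead, when the buffer
/// already reaches `maximumDuration`, or when no range is reported, and only if
/// the player is supposed to be playing.
func shouldResume(ranges: [LoadedRange],
                  lastBufferTime: Double,
                  currentTime: Double,
                  bufferSize: Double,
                  maximumDuration: Double,
                  isPlaying: Bool) -> Bool {
    let enoughAhead = (lastBufferTime - currentTime) >= bufferSize
    let reachedEnd = lastBufferTime == maximumDuration
    return (enoughAhead || reachedEnd || ranges.isEmpty) && isPlaying
}

// Usage: 12.5 s buffered, playhead at 5 s, 10 s required ahead.
let ranges = [LoadedRange(start: 0, duration: 12.5)]
let end = bufferedEnd(of: ranges) ?? 0
print(end)  // 12.5
print(shouldResume(ranges: ranges, lastBufferTime: end, currentTime: 5,
                   bufferSize: 10, maximumDuration: 60, isPlaying: true))  // false (only 7.5 s ahead)
```

Like the handler it models, the sketch only looks at the first range; a stream with several disjoint ranges (for example after a seek) would need the range that contains the playhead instead.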
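KYLPlayer splits starting playback into a public entry point and a gated `play()`: internal callers (the KVO and app-lifecycle handlers) can only start playback when `autoplay` is on or `playFromCurrentTime()` has been called since the last URL was set, because `setup(url:)` resets `_hasAutoplayActivated`. The sketch below models only that gate in plain Swift, with hypothetical names (`AutoplayGate`, `PlaybackStateSketch`) and a counter standing in for `AVPlayer.play()`; it illustrates the logic and is not part of KYLPlayer.

```swift
/// Hypothetical playback states for this sketch (not KYLPlayer's PlaybackState).
enum PlaybackStateSketch { case stopped, playing, paused, failed }

/// Hypothetical model of KYLPlayer's autoplay gate, without AVFoundation.
final class AutoplayGate {
    let autoplay: Bool
    private(set) var hasAutoplayActivated = false
    private(set) var playbackState: PlaybackStateSketch = .stopped
    /// Counts the calls that would reach AVPlayer.play().
    private(set) var playCalls = 0

    init(autoplay: Bool) {
        self.autoplay = autoplay
    }

    /// Like setup(url:): a new URL resets the activation flag.
    func loadNewURL() {
        hasAutoplayActivated = false
        playbackState = autoplay ? .playing : .stopped
    }

    /// Like playFromCurrentTime(): an explicit request activates playback
    /// when autoplay is off, then goes through the gate.
    func playFromCurrentTime() {
        if !autoplay {
            hasAutoplayActivated = true
        }
        play()
    }

    /// Like the fileprivate play(): ignored until autoplay is on or activated.
    func play() {
        guard autoplay || hasAutoplayActivated else { return }
        playbackState = .playing
        playCalls += 1
    }
}

let gate = AutoplayGate(autoplay: false)
gate.loadNewURL()
gate.play()                 // ignored: autoplay is off and not activated yet
gate.playFromCurrentTime()  // explicit request: activates the gate and plays
print(gate.playbackState, gate.playCalls)  // playing 1
```

The design keeps automatic restarts (buffer recovered, app returned to the foreground) from overriding a caller who disabled autoplay and has not asked to play yet.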
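The four `handleApplication` handlers pause when the app resigns active or enters the background while playing, and resume on the way back, each behind its own flag (`playbackPausesWhenResigningActive`, `playbackResumesWhenBecameActive`, `playbackPausesWhenBackgrounded`, `playbackResumesWhenEnteringForeground`). The sketch below expresses that decision as a pure function so the combinations are easy to check. `LifecyclePolicy`, `AppEvent`, `PlayerCommand` and `command(for:isPlaying:policy:)` are hypothetical, and the default flag values are assumptions, not KYLPlayer's actual defaults.

```swift
/// Hypothetical mirror of KYLPlayer's four lifecycle flags.
/// The defaults here are assumptions for the example.
struct LifecyclePolicy {
    var pausesWhenResigningActive = true
    var resumesWhenBecameActive = true
    var pausesWhenBackgrounded = true
    var resumesWhenEnteringForeground = true
}

enum AppEvent { case willResignActive, didBecomeActive, didEnterBackground, willEnterForeground }
enum PlayerCommand { case pause, play, ignore }

/// Decides what the matching handleApplication handler would do.
func command(for event: AppEvent, isPlaying: Bool, policy: LifecyclePolicy) -> PlayerCommand {
    switch event {
    case .willResignActive:
        return (isPlaying && policy.pausesWhenResigningActive) ? .pause : .ignore
    case .didBecomeActive:
        return (!isPlaying && policy.resumesWhenBecameActive) ? .play : .ignore
    case .didEnterBackground:
        return (isPlaying && policy.pausesWhenBackgrounded) ? .pause : .ignore
    case .willEnterForeground:
        return (!isPlaying && policy.resumesWhenEnteringForeground) ? .play : .ignore
    }
}

let defaults = LifecyclePolicy()
print(command(for: .willResignActive, isPlaying: true, policy: defaults))   // pause
print(command(for: .didBecomeActive, isPlaying: false, policy: defaults))   // play

var keepPaused = LifecyclePolicy()
keepPaused.resumesWhenBecameActive = false
print(command(for: .didBecomeActive, isPlaying: false, policy: keepPaused)) // ignore
```

A `.play` result corresponds to the handler calling `play()`, which in KYLPlayer still has no effect unless autoplay is enabled or has been activated.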
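KVO delivers `playbackLikelyToKeepUp` and `playbackBufferEmpty` changes as Boolean `NSNumber`s whose `intValue` is 0 or 1, the same integers as `AVPlayerItem.Status.unknown` and `.readyToPlay`. The item status should therefore only be interpreted for the `status` key path. A minimal routing sketch under that rule follows; it uses plain Swift values in place of the `change` dictionary, and `ItemStatus`, `ItemEvent` and `route(keyPath:newValue:)` are hypothetical names (only the raw values 0, 1 and 2 are taken from `AVPlayerItem.Status`).

```swift
/// Raw values match AVPlayerItem.Status (unknown = 0, readyToPlay = 1, failed = 2).
enum ItemStatus: Int { case unknown = 0, readyToPlay = 1, failed = 2 }

/// What the observer should do for one KVO change (hypothetical names).
enum ItemEvent {
    case attachLayer    // status became readyToPlay: set playerLayer.player and unhide it
    case markFailed     // status became failed
    case bufferReady    // likely to keep up, or buffer no longer empty
    case bufferDelayed  // buffer empty
    case ignored
}

/// Routes a change by key path. `newValue` stands in for change?[.newKey]:
/// an Int for "status" and a Bool for the two buffer keys.
func route(keyPath: String, newValue: Any?) -> ItemEvent {
    switch keyPath {
    case "status":
        guard let raw = newValue as? Int, let status = ItemStatus(rawValue: raw) else { return .ignored }
        switch status {
        case .readyToPlay: return .attachLayer
        case .failed: return .markFailed
        case .unknown: return .ignored
        }
    case "playbackLikelyToKeepUp":
        return (newValue as? Bool) == true ? .bufferReady : .ignored
    case "playbackBufferEmpty":
        guard let isEmpty = newValue as? Bool else { return .ignored }
        return isEmpty ? .bufferDelayed : .bufferReady
    default:
        return .ignored
    }
}

// A keep-up value of true encodes as 1, which is also readyToPlay's raw value,
// so it must never reach the status switch.
print(ItemStatus(rawValue: 1)!)                                  // readyToPlay
print(route(keyPath: "playbackLikelyToKeepUp", newValue: true))  // bufferReady
```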