分布式推荐系统中,通信消耗优化方案

Distributed Mean Estimation with Limited Communication

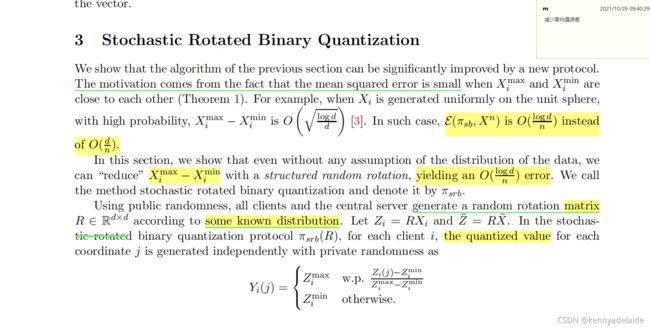

在分布式推荐系统中,矩阵分解MF 之后,在传输数据的时候,随着数据维度的增大,则通信资源的消耗IO流的消耗也增大,降低通信消耗对算法运行的时间有着明显的提升,本文通过以上论文中的部分描述,实现了基于量化函数的相关优化点。同时本文的工作也参考了论文:

DS-ADMM++: A Novel Distributed Quantized ADMM to Speed up Differentially Private Matrix

Factorization

这两篇文章的算法实现比较简单,

说那么多直接上代码:

import sys import time import numpy as np import random import pyopencl # parallel calculation. import numba # invoking CUDA api. from numba import vectorize from numba import cuda ''' @name: kenny adelaide @email;[email protected] @description: quantization protocol πsb '''

class DS_ADMM_MF:

def mean_GLOBLE_V(self,matrix):

'''

calkculating the mean vector via column.

'''

return np.array(matrix).sum() / np.array(matrix).shape[0]

def generate_normal_distribution_matrix(self,shape=[100, 100]):

'''

this is a random init function for a matrix , just for a new normal distribution and zero mean value.

'''

matrix = np.array(np.random.normal(0, 1, size=shape[0] * shape[1])).reshape(shape[0], shape[1])

return matrix

def calculate_Z_subscript_i(self,matrix, X_subscript_i):

'''

this is a function for calculating Z-subscript(i) = R*X-subscript(i). i had denoted the any client machine.

result discription: matrix's dimension is d*d, hence, returning value is a vector.

'''

X_subscript_i = np.array(X_subscript_i).reshape(X_subscript_i.shape[0], 1)

return np.dot(matrix.T, X_subscript_i).T

def stochastic_torated_binary_quantization(self,matrix, X_subscript_i):

'''

matrix is a init-normal-distribution and zero-mean-value variable,

X is input variable.

attention: matrix has denoted R, that is a global random rotation matrix.

'''

# 1.0 first, to calculate X-subscript(i)'s max and min value, attention: X-subscript(i) is a vector.

# invoking stochastic_torated_binary_quantization method and return Z-subscript(i).

Z_subscript_i = self.calculate_Z_subscript_i(matrix, X_subscript_i)

# print(Z_subscript_i)

max_min_values = []

max_min_values.append(np.max(Z_subscript_i))

max_min_values.append(np.min(Z_subscript_i))

Y_subscript_i_j = np.zeros(shape=Z_subscript_i.shape)

# 2.0, calculate the quantized vector.

for index, val in enumerate(Z_subscript_i):

Y_subscript_i_j[index] = (Z_subscript_i[index] - max_min_values[1]) / (max_min_values[0] - max_min_values[1])

return Y_subscript_i_j, max_min_values

def number_of_certain_probability(self,sequence, probability):

'''

this is a method to calculate some two value with probs.

sequence stroes two numbers.

'''

# result = []

#

# for index, val in enumerate(probability[0]):

# probs = [val, 1 - val]

# x = random.uniform(0, 1)

# cumulative_prob = 0.0

# for item, item_prob in zip(sequence, probs):

# cumulative_prob += item_prob

# if x <= cumulative_prob:

# result.append(sequence[1])

# else:

# result.append(sequence[0])

#

# return result[0:int(len(np.array(result)) / 2)]

result = []

for index, val in enumerate(probability[0]):

probs = [val, 1 - val]

nums = np.random.choice(sequence, size=1, p=probs)

result.append(nums)

return result

def mean(self,matrix):

matrix = np.array(matrix)

vector = []

for i in range(0, matrix.shape[1]):

vector.append(np.sum(matrix[:, i])/ matrix.shape[0])

return vector

def error(self,d,n, Z_subscript_i_max, Z_subscript_i_min):

'''

this is a certion for the result of estimation.

'''

for i in range(1,n):

pass

if __name__ == "__main__":

model = DS_ADMM_MF()

matrix = model.generate_normal_distribution_matrix(shape=[10, 10])

X = np.array([[1, 2, 3, 4, 5, 3, 2, 1, 2, 3], [2, 3, 4, 4, 7, 3, 1, 9, 0, 2], [4, 1, 1, 6, 7, 3, 0, 6, 8, 4]])

Y = []

for _, val in enumerate(X):

Y_subscript_i_j, max_min_values = model.stochastic_torated_binary_quantization(matrix, X[_])

Y_subscript_i_j = model.number_of_certain_probability(max_min_values, Y_subscript_i_j)

Y.append(Y_subscript_i_j)

result = model.mean(Y)

_X = np.dot(np.matrix(matrix).T, np.matrix(result).T).T

print(_X)

在以上的代码中,没有实现有关算法的标准衡量,可以参考论文自行实现。

以上是最主要的部分,算法的实现不难,难点在于对于公式的理解。 w.p 是以某种概率生成指定的数值。 该算法结合sgd 针对用户评分矩阵实现分布式计算有着良好的效果。