1. What is Ingress

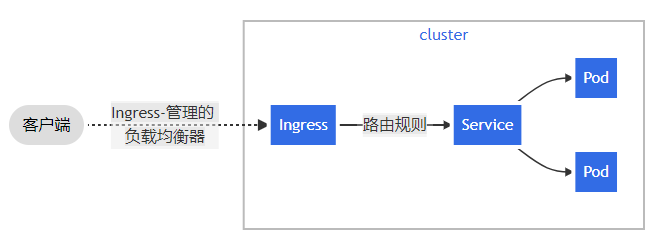

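Ingress exposes HTTP and HTTPS routes from outside the Kubernetes cluster to Services inside it. Traffic routing is controlled by rules defined on the Ingress resource.

An Ingress can be configured to give Services externally reachable URLs, load-balance traffic, terminate SSL/TLS, and offer name-based virtual hosting.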

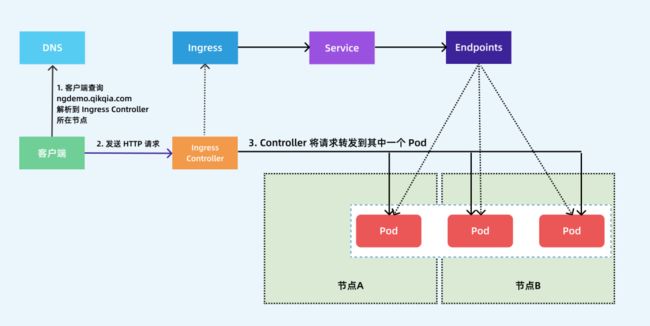

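In detail, Ingress works as follows:

Step 1: The ingress controller talks to the Kubernetes API and dynamically watches for changes to the Ingress rules in the cluster. It reads those rules and, as they specify, forwards traffic to the corresponding Service in the cluster.

Step 2: Each Ingress rule states which domain name maps to which Service in the cluster. From these rules and the nginx configuration template built into the ingress controller, the controller generates the matching nginx configuration.

Step 3: The ingress controller Pod runs an nginx instance. The controller writes the generated configuration into that nginx's configuration file and reloads nginx so the change takes effect. This is how domain-based routing and dynamic updates are achieved.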
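Step 2 can be pictured with a small sketch. The function below is hypothetical and only illustrates the idea of turning one "domain name to Service" rule into an nginx server block. The real controller's template is far more elaborate, and the controller forwards to Pod endpoints rather than to the Service name used here for readability.

```shell
# Hypothetical sketch: render one "host -> service:port" rule as an
# nginx-style server block. Layout and names are illustrative only.
render_server_block() {
  host=$1
  service=$2
  port=$3
  cat <<EOF
server {
    listen 80;
    server_name ${host};
    location / {
        proxy_pass http://${service}:${port};
    }
}
EOF
}

# Example rule: app1.test.com is served by nginx-app1-svc on port 80.
render_server_block app1.test.com nginx-app1-svc 80
```

Each time a rule changes, a generator of this kind would be re-run and nginx reloaded, which is the cycle described in step 3.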

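Problems that Ingress solves

1) Dynamic service configuration

With the traditional approach, adding a new service may require adding a reverse proxy at the traffic entry point that points to the new Kubernetes Service. With Ingress, you only need to configure the Service and its rule; once the Service is up, it is registered with Ingress automatically, with no extra steps.

2) Fewer unnecessary exposed ports

Anyone who has set up Kubernetes knows that the first step is usually to turn off the firewall, mainly because many Services are exposed through NodePort. That punches many holes in the host, which is neither secure nor elegant. Ingress avoids this: apart from the Ingress controller's own Service, which may need to be exposed, no other Service has to use NodePort.

Original article: https://blog.csdn.net/weixin_44729138/article/details/105978555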

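2. Deploying the Ingress controller

For an Ingress resource to work, the cluster must have an Ingress controller running.

Unlike other types of controllers, which run as part of the kube-controller-manager binary, Ingress controllers are not started automatically with the cluster. You can choose the Ingress controller implementation that best fits your cluster.

Kubernetes as a project currently supports and maintains the AWS, GCE, and nginx Ingress controllers.

There are two nginx-based Ingress controllers, from different maintainers: ingress-nginx from the Kubernetes community and nginx-ingress from NGINX, Inc.

Based on GitHub activity and the number of followers, the Kubernetes community's ingress-nginx is chosen here for deployment and testing.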

2.1 Prepare the Docker images for the Ingress controller deployment (version v1.0.0)

docker pull willdockerhub/ingress-nginx-controller:v1.0.0

docker tag willdockerhub/ingress-nginx-controller:v1.0.0 192.168.1.110/base/ingress-nginx-controller:v1.0.0

docker push 192.168.1.110/base/ingress-nginx-controller:v1.0.0

docker pull jettech/kube-webhook-certgen:v1.0.0

docker tag jettech/kube-webhook-certgen:v1.0.0 192.168.1.110/base/ingress-nginx_kube-webhook-certgen:v1.0.0

docker push 192.168.1.110/base/ingress-nginx_kube-webhook-certgen:v1.0.0

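2.2 Modify ingress-controller-deploy.yaml

1. The official file creates the controller Service with type LoadBalancer; change it to NodePort and add the corresponding ports.

2. Replace the images in the file with the ones in the local Harbor registry.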

# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  #type: LoadBalancer
  type: NodePort
  externalTrafficPolicy: Local
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
      nodePort: 40080
      appProtocol: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
      nodePort: 40443
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
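One thing worth checking before applying this Service: 40080 and 40443 lie outside the default NodePort range of 30000-32767, so this setup presumably runs an API server started with a wider `--service-node-port-range`. The helper below is a hypothetical sketch (the function name and the example wider range are assumptions, not part of the original setup) for checking a port against a range.

```shell
# Hypothetical helper: report whether a port lies inside a NodePort range.
# Usage: nodeport_in_range PORT [MIN] [MAX]
# MIN and MAX default to the Kubernetes default range 30000-32767.
nodeport_in_range() {
  port=$1
  min=${2:-30000}
  max=${3:-32767}
  if [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]; then
    echo "in-range"
  else
    echo "out-of-range"
  fi
}

# Check the two nodePort values used by the controller Service.
for p in 40080 40443; do
  echo "$p: $(nodeport_in_range "$p")"
done
```

If the cluster still uses the default range, the API server rejects the Service, so either widen the range or pick ports inside it.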

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
---
# Source: ingress-nginx/templates/controller-serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
automountServiceAccountToken: true
---
# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:

---

# Source: ingress-nginx/templates/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---
# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
---
# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-service-webhook.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  type: ClusterIP
  ports:
    - name: https-webhook
      port: 443
      targetPort: webhook
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  #type: LoadBalancer
  type: NodePort
  externalTrafficPolicy: Local
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
      nodePort: 40080
      appProtocol: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
      nodePort: 40443
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      dnsPolicy: ClusterFirst
      containers:
        - name: controller
          image: 192.168.1.110/base/ingress-nginx-controller:v1.0.0
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller
            - --election-id=ingress-controller-leader
            - --controller-class=k8s.io/ingress-nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission

---

# Source: ingress-nginx/templates/controller-ingressclass.yaml
# We don't support namespaced ingressClass yet
# So a ClusterRole and a ClusterRoleBinding is required
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: nginx
  namespace: ingress-nginx
spec:
  controller: k8s.io/ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
# before changing this value, check the required kubernetes version
# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    matchPolicy: Equivalent
    rules:
      - apiGroups:
          - networking.k8s.io
        apiVersions:
          - v1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions:
      - v1
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /networking/v1/ingresses

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - ''
    resources:
      - secrets
    verbs:
      - get
      - create
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-create
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-create
      labels:
        helm.sh/chart: ingress-nginx-4.0.1
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 1.0.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: create
          image: 192.168.1.110/base/ingress-nginx_kube-webhook-certgen:v1.0.0
          imagePullPolicy: IfNotPresent
          args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
            - --namespace=$(POD_NAMESPACE)
            - --secret-name=ingress-nginx-admission
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-patch
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-patch
      labels:
        helm.sh/chart: ingress-nginx-4.0.1
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 1.0.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: patch
          image: 192.168.1.110/base/ingress-nginx_kube-webhook-certgen:v1.0.0
          imagePullPolicy: IfNotPresent
          args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=$(POD_NAMESPACE)
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000

2.3 Create the ingress controller

kubectl apply -f ingress-controller-deploy-v1.0.0.yml

2.4 Check that the resources were created

root@k8-deploy:~/k8s-yaml/ingress# kubectl get svc,pod -n ingress-nginx -o wide
NAME                                         TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)                      AGE   SELECTOR
service/ingress-nginx-controller             NodePort    10.0.2.50     <none>        80:40080/TCP,443:40443/TCP   21h   app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx
service/ingress-nginx-controller-admission   ClusterIP   10.0.135.64   <none>        443/TCP                      21h   app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

NAME                                            READY   STATUS      RESTARTS   AGE   IP              NODE           NOMINATED NODE   READINESS GATES
pod/ingress-nginx-admission-create-mr6qt        0/1     Completed   0          21h   10.100.112.44   192.168.2.19   <none>           <none>
pod/ingress-nginx-admission-patch-sxrl6         0/1     Completed   1          21h   10.100.112.45   192.168.2.19   <none>           <none>
pod/ingress-nginx-controller-556976fb4d-dljbp   1/1     Running     0          21h   10.100.224.73   192.168.2.18   <none>           <none>

# Check the ingress-nginx-controller version

# kubectl exec -it ingress-nginx-controller-556976fb4d-dljbp -n ingress-nginx -- /nginx-ingress-controller --version

-------------------------------------------------------------------------------

NGINX Ingress controller

Release: v1.0.0

Build: 041eb167c7bfccb1d1653f194924b0c5fd885e10

Repository: https://github.com/kubernetes/ingress-nginx

nginx version: nginx/1.20.1

-------------------------------------------------------------------------------

# Check that the nodes are listening on the ingress controller's NodePorts 40080 and 40443

root@k8-deploy:~/k8s-yaml/ingress# for i in 17 18 19;do echo node:192.168.1.$i;ssh 192.168.2.$i -C "netstat -ntlp |grep -E '40080|40443'";echo;done

node:192.168.1.17

tcp 0 0 0.0.0.0:40443 0.0.0.0:* LISTEN 3452053/kube-proxy

tcp 0 0 0.0.0.0:40080 0.0.0.0:* LISTEN 3452053/kube-proxy

node:192.168.1.18

tcp 0 0 0.0.0.0:40080 0.0.0.0:* LISTEN 1364498/kube-proxy

tcp 0 0 0.0.0.0:40443 0.0.0.0:* LISTEN 1364498/kube-proxy

node:192.168.1.19

tcp 0 0 0.0.0.0:40443 0.0.0.0:* LISTEN 251137/kube-proxy

tcp 0 0 0.0.0.0:40080 0.0.0.0:* LISTEN 251137/kube-proxy

2.5 Configure haproxy (edge node) to proxy Ingress

# cat /etc/haproxy/haproxy.cfg
...
frontend ingress
    bind 192.168.2.110:80
    mode tcp
    log global
    default_backend ingress-servers

frontend ingress-https
    bind 192.168.2.110:443
    mode tcp
    log global
    default_backend ingress-servers-https

backend ingress-servers
    balance source
    server websrv1 192.168.2.17:40080 check maxconn 2000
    server websrv2 192.168.2.18:40080 check maxconn 2000
    server websrv3 192.168.2.19:40080 check maxconn 2000

backend ingress-servers-https
    balance source
    server websrv1 192.168.2.17:40443 check maxconn 2000
    server websrv2 192.168.2.18:40443 check maxconn 2000
    server websrv3 192.168.2.19:40443 check maxconn 2000
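The two backends in this haproxy configuration differ only in name and port, so they could be generated from the node list instead of being typed by hand. The following is a minimal sketch; `gen_backend` is a hypothetical helper, not something the original setup uses.

```shell
# Hypothetical helper: print an haproxy backend section for a list of nodes.
# Usage: gen_backend NAME PORT NODE_IP...
gen_backend() {
  name=$1
  port=$2
  shift 2
  echo "backend $name"
  echo "    balance source"
  n=1
  for ip in "$@"; do
    echo "    server websrv$n $ip:$port check maxconn 2000"
    n=$((n + 1))
  done
}

# Reproduce the HTTP and HTTPS backends for the three nodes.
gen_backend ingress-servers 40080 192.168.2.17 192.168.2.18 192.168.2.19
gen_backend ingress-servers-https 40443 192.168.2.17 192.168.2.18 192.168.2.19
```

Whichever way the file is produced, running `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the syntax before haproxy is restarted.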

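3 Testing multiple name-based virtual hosts

A client reaches one of the Pods through the Ingress Controller as follows. The client first resolves the domain name through DNS and obtains the IP of the node where the Ingress Controller runs. It then sends an HTTP request to the Ingress Controller, which matches the domain name against the rules in the Ingress object, finds the corresponding Service object, obtains the associated Endpoints list, and forwards the request to one of the Pods.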

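3.1 Prepare two domain names and images

For ease of testing, the domain names are added to the hosts file:

192.168.2.110 app1.test.com app2.test.com

The expected result is that requests to different domain names return different content.

The two images are built as follows.

The base image is the nginx image built earlier (its code deployment directory was changed to /usr/share/nginx/html/).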

nginx-app1# ll

-rw-r--r-- 1 root root 83 11月 11 13:54 Dockerfile

-rw-r--r-- 1 root root 23 11月 11 13:54 index.html

# cat Dockerfile

FROM 192.168.1.110/web/nginx-set-config:v1

COPY index.html /usr/share/nginx/html/

# cat index.html

nginx test html : app1

# docker build -t 192.168.1.110/web/nginx-set-config-app1:v1 .

# docker push 192.168.1.110/web/nginx-set-config-app1:v1

## ==========================

nginx-app2# ll

-rw-r--r-- 1 root root 83 11月 11 13:56 Dockerfile

-rw-r--r-- 1 root root 23 11月 11 13:56 index.html

# cat Dockerfile

FROM 192.168.1.110/web/nginx-set-config:v1

COPY index.html /usr/share/nginx/html/

# cat index.html

nginx test html : app2

# docker build -t 192.168.1.110/web/nginx-set-config-app2:v1 .

# docker push 192.168.1.110/web/nginx-set-config-app2:v1

3.2 Write the YAML files for the two Services

root@k8-deploy:~/k8s-yaml/ingress# cat nginx-app1-svc.yml
apiVersion: v1
kind: Service
metadata:
  name: nginx-app1-svc
  namespace: yan-test
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 80
      nodePort: 40003
      protocol: TCP
  selector:
    app: nginx-app1
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app1-deployment
  namespace: yan-test
  labels:
    app: nginx-app1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-app1
  template:
    metadata:
      labels:
        app: nginx-app1
    spec:
      containers:
        - name: nginx-app1-ct
          image: 192.168.1.110/web/nginx-set-config-app1:v1
          ports:
            - containerPort: 80

## =========================

root@k8-deploy:~/k8s-yaml/ingress# cat nginx-app2-svc.yml
apiVersion: v1
kind: Service
metadata:
  name: nginx-app2-svc
  namespace: yan-test
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 80
      nodePort: 40004
      protocol: TCP
  selector:
    app: nginx-app2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app2-deployment
  namespace: yan-test
  labels:
    app: nginx-app2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-app2
  template:
    metadata:
      labels:
        app: nginx-app2
    spec:
      containers:
        - name: nginx-app2-ct
          image: 192.168.1.110/web/nginx-set-config-app2:v1
          ports:
            - containerPort: 80

3.3 Create the Services

# kubectl apply -f nginx-app1-svc.yml

# kubectl apply -f nginx-app2-svc.yml

# kubectl get svc -n yan-test
NAME             TYPE       CLUSTER-IP   EXTERNAL-IP   PORT(S)        AGE
nginx-app1-svc   NodePort   10.0.7.177   <none>        80:40003/TCP   23h
nginx-app2-svc   NodePort   10.0.63.21   <none>        80:40004/TCP   23h

3.4 Write the Ingress manifest that maps the two domain names to the two Services

# cat ingress-muti-host-nginx.yml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-web
  namespace: yan-test
  annotations:
    kubernetes.io/ingress.class: "nginx" ## selects the Ingress controller class
    nginx.ingress.kubernetes.io/use-regex: "true" ## allows regular expressions in the paths defined under rules
    nginx.ingress.kubernetes.io/proxy-connect-timeout: "600" ## timeout in seconds for connecting to the backend, default 5
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600" ## timeout in seconds for sending the request to the backend, default 60
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600" ## timeout in seconds for reading the backend's response, default 60
    nginx.ingress.kubernetes.io/proxy-body-size: "10m" ## maximum size of a client request body such as a file upload, default 1m
    #nginx.ingress.kubernetes.io/rewrite-target: / ## URL rewrite
    #nginx.ingress.kubernetes.io/app-root: /index.html
spec:
  rules:
    - host: app1.test.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-app1-svc
                port:
                  number: 80
    - host: app2.test.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-app2-svc
                port:
                  number: 80

3.5 Create the Ingress rules

# kubectl apply -f ingress-muti-host-nginx.yml

# kubectl get ingress -n yan-test
NAME        CLASS    HOSTS                         ADDRESS     PORTS   AGE
nginx-web   <none>   app1.test.com,app2.test.com   10.0.2.50   80      21h

3.6 Test access by domain name

root@k8-deploy:~# curl app1.test.com

nginx test html : app1

root@k8-deploy:~# curl app2.test.com

nginx test html : app2
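The host-based dispatch that these two curl calls exercise can be pictured with a small sketch. The host and Service names are the ones from this test; the function itself is hypothetical and only mimics the lookup, including the fallback for a host that matches no rule.

```shell
# Hypothetical sketch of host-based rule matching for the nginx-web Ingress.
# Prints the Service that a request for the given host would be sent to.
route_by_host() {
  case "$1" in
    app1.test.com) echo "nginx-app1-svc" ;;
    app2.test.com) echo "nginx-app2-svc" ;;
    *)             echo "no matching rule" ;;
  esac
}

route_by_host app1.test.com   # nginx-app1-svc
route_by_host app2.test.com   # nginx-app2-svc
route_by_host other.test.com  # no matching rule
```

Because the decision depends only on the requested host name, both domains can share the single entry point at 192.168.2.110.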

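4 Forwarding multiple URLs under a single domain name

The expected result is that tomcat.test.com returns the default Tomcat page and tomcat.test.com/app1/index.html returns the content of the app1 project.

4.1 Build the tomcat image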

tomcat-app1# ll

总用量 20

drwxr-xr-x 3 root root 4096 11月 12 13:42 ./

drwxr-xr-x 6 root root 4096 11月 11 13:56 ../

drwxr-xr-x 2 root root 4096 11月 10 16:24 app1/

-rw-r--r-- 1 root root 164 11月 10 16:24 app1.tgz

-rw-r--r-- 1 root root 96 11月 10 16:23 Dockerfile

root@k8-deploy:~/k8s-yaml/ingress/images/tomcat-app1# cat Dockerfile

FROM 192.168.1.110/web/alpine-jdk-8u192-tomcat-8.5.70:v20211020-1014

ADD app1.tgz /opt/webapps

root@k8-deploy:~/k8s-yaml/ingress/images/tomcat-app1# cat app1/index.html

tomcat app1 html

# docker build -t 192.168.1.110/web/tomcat-app1:v1 .

# docker push 192.168.1.110/web/tomcat-app1:v1

4.2 Write the YAML for the tomcat Service

# cat tomcat-app1-svc.yml
apiVersion: v1
kind: Service
metadata:
  name: tomcat-app1-svc
  namespace: yan-test
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 8080
      nodePort: 40001
      protocol: TCP
  selector:
    app: tomcat-app1
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-app1-deployment
  namespace: yan-test
  labels:
    app: tomcat-app1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat-app1
  template:
    metadata:
      labels:
        app: tomcat-app1
    spec:
      containers:
        - name: tomcat-app1-ct
          image: 192.168.1.110/web/tomcat-app1:v1
          ports:
            - containerPort: 8080

4.3 Create the tomcat Service and check it

# kubectl apply -f tomcat-app1-svc.yml

# kubectl get svc,pod -n yan-test -o wide
NAME                      TYPE       CLUSTER-IP    EXTERNAL-IP   PORT(S)        AGE   SELECTOR
service/tomcat-app1-svc   NodePort   10.0.144.64   <none>        80:40001/TCP   24h   app=tomcat-app1

NAME                                          READY   STATUS    RESTARTS   AGE   IP              NODE           NOMINATED NODE   READINESS GATES
pod/tomcat-app1-deployment-754855ff99-jfqwz   1/1     Running   0          24h   10.100.224.72   192.168.2.18   <none>           <none>

4.4 Write the Ingress YAML

# cat ingress-muti-path-tomcat.yml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tomcat-ingress
  namespace: yan-test
  annotations:
    kubernetes.io/ingress.class: "nginx" ## selects the Ingress controller class
    nginx.ingress.kubernetes.io/use-regex: "true" ## allows regular expressions in the paths defined under rules
    nginx.ingress.kubernetes.io/proxy-connect-timeout: "600" ## timeout in seconds for connecting to the backend, default 5
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600" ## timeout in seconds for sending the request to the backend, default 60
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600" ## timeout in seconds for reading the backend's response, default 60
    nginx.ingress.kubernetes.io/proxy-body-size: "10m" ## maximum size of a client request body such as a file upload, default 1m
    #nginx.ingress.kubernetes.io/rewrite-target: / ## URL rewrite
    #nginx.ingress.kubernetes.io/app-root: /index.html
spec:
  rules:
    - host: tomcat.test.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: tomcat-app1-svc
                port:
                  number: 80
          - path: /app1
            pathType: Prefix
            backend:
              service:
                name: tomcat-app1-svc
                port:
                  number: 80

4.5 Create the tomcat Ingress rules

# kubectl apply -f ingress-muti-path-tomcat.yml

# kubectl get ingress -n yan-test
NAME             CLASS    HOSTS             ADDRESS     PORTS   AGE
tomcat-ingress   <none>   tomcat.test.com   10.0.2.50   80      26m

4.6 Verify with the domain name and different URLs

# curl tomcat.test.com

Apache Tomcat/8.5.70

...

# curl tomcat.test.com/app1/index.html

tomcat app1 html
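Which of the two path rules handles a given URL can be sketched as follows. This is a hypothetical illustration of `pathType: Prefix` as the Kubernetes Ingress API describes it (matching per path element, longest match wins); it does not model the regex behaviour that the use-regex annotation enables. In this test both rules point to tomcat-app1-svc, so the choice of rule does not change the backend.

```shell
# Hypothetical sketch of Prefix matching for the rules "/" and "/app1".
# Listing /app1 first emulates "longest matching path wins".
match_prefix_rule() {
  case "$1" in
    /app1|/app1/*) echo "/app1" ;;
    /*)            echo "/" ;;
  esac
}

match_prefix_rule /app1/index.html  # /app1
match_prefix_rule /                 # /
match_prefix_rule /app10            # /  (app10 is a different path element)
```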

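5 Testing Ingress with HTTPS

5.1 Prepare the SSL certificate

The domain name used for the certificate must have completed ICP filing. A free SSL certificate from Alibaba Cloud is used here.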

root@k8-deploy:~/k8s-yaml/ingress/cert# ll

-rw-r--r-- 1 root root 1679 11月 16 15:02 6611016_ingress.t.faxuan.net.key

-rw-r--r-- 1 root root 4153 11月 16 15:02 6611016_ingress.t.faxuan.net_nginx.zip

-rw-r--r-- 1 root root 3813 11月 16 15:02 6611016_ingress.t.faxuan.net.pem

5.2 Import the SSL certificate into Kubernetes as a Secret

root@k8-deploy:~/k8s-yaml/ingress/cert# kubectl create secret tls ingress.t.faxuan.net-secret \

> --key=./6611016_ingress.t.faxuan.net.key \

> --cert=./6611016_ingress.t.faxuan.net.pem \

> -n yan-test

secret/ingress.t.faxuan.net-secret created

root@k8-deploy:~/k8s-yaml/ingress/cert# kubectl get secrets -n yan-test

NAME TYPE DATA AGE

default-token-8fd2j kubernetes.io/service-account-token 3 68m

ingress.t.faxuan.net-secret kubernetes.io/tls 2 5s

5.3 Write the YAML for the Ingress HTTPS rules

The backend is the nginx-app1-svc Service created earlier.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: myapp
  namespace: yan-test
  annotations:
    nginx.ingress.kubernetes.io/force-ssl-redirect: "true"
    nginx.ingress.kubernetes.io/proxy-body-size: "20m"
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - ingress.t.faxuan.net
      secretName: ingress.t.faxuan.net-secret
  rules:
    - host: ingress.t.faxuan.net
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-app1-svc
                port:
                  number: 80

5.4 Create the Ingress rules from the YAML file

kubectl apply -f ingress-https-single.yml

root@k8-deploy:~/k8s-yaml/ingress# kubectl get ingress -n yan-test
NAME    CLASS   HOSTS                  ADDRESS       PORTS     AGE
myapp   nginx   ingress.t.faxuan.net   10.0.233.49   80, 443   17m

Error encountered:

Error from server (InternalError): error when creating "ingress-https-single.yml": Internal error occurred: failed calling webhook "validate.nginx.ingress.kubernetes.io": the server rejected our request for an unknown reason

Two ways to resolve it:

1. Use a YAML file in the v1beta1 Ingress format.

2. Delete the ingress-nginx-admission ValidatingWebhookConfiguration resource (this also turns off admission validation of Ingress objects):

kubectl delete -A ValidatingWebhookConfiguration ingress-nginx-admission

Reference: https://knowledge.broadcom.com/external/article/227955/installation-reported-during-nginx-setup.html

5.5 haproxy proxy configuration

listen ingress-443
    bind *:443
    mode tcp
    server k8s1 192.168.2.17:40443 check inter 3s fall 3 rise 5
    server k8s2 192.168.2.18:40443 check inter 3s fall 3 rise 5
    server k8s3 192.168.2.19:40443 check inter 3s fall 3 rise 5

# Restart haproxy

systemctl restart haproxy