PaddlePaddle 2.0 PaddleDetection: A Transfer Learning Tutorial for Bottled Liquor Defect Detection

Preface

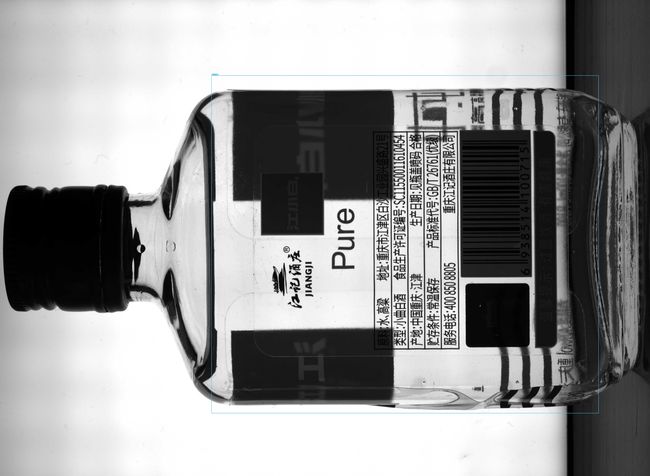

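During the production of bottled liquor, factors such as raw-material quality (the bottles) and processing (filling) can introduce various defects that degrade product quality. A production line typically has three to five inspection stations, each checking for different defect types. Because the defects are diverse and some are too small to notice easily, manufacturers often have to invest heavily in manual inspection. Efficient, reliable automated inspection can cut a large share of that labor cost and create economic value.

PaddleDetection provides a general-purpose solution for this kind of industrial quality-inspection scenario. This article is a tutorial on using PaddleDetection with PaddlePaddle 2.0.0rc.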

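PaddleDetection model zoo and dependency installation

- PaddleDetection documentation; this article mainly draws on the following modules

Tutorials

- Installation instructions

- Quick start

- Training and evaluation workflow

- Data preprocessing and custom datasets

- Design and overview of the configuration module

- Detailed configuration and parameter examples

- IPython Notebook demo

- Transfer learning tutorial

Model zoo

- Model zoo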

!git clone https://gitee.com/paddlepaddle/PaddleDetection.git

# Official mirror hosted in China

Cloning into 'PaddleDetection'...

remote: Enumerating objects: 8784, done.

remote: Counting objects: 100% (8784/8784), done.

remote: Compressing objects: 100% (4820/4820), done.

remote: Total 8784 (delta 6342), reused 5425 (delta 3850), pack-reused 0

Receiving objects: 100% (8784/8784), 51.41 MiB | 4.19 MiB/s, done.

Resolving deltas: 100% (6342/6342), done.

Checking connectivity... done.

# Mirror of the Chinese fonts

!git clone https://gitee.com/mirrors/noto-cjk.git

# Install the COCO API

# !git clone https://github.com/cocodataset/cocoapi.git

# For faster downloads, a mirror hosted in China can be used instead

!git clone https://gitee.com/firefox1200/cocoapi.git

!cd cocoapi/PythonAPI&&make install

!pip install pycocotools -i https://mirror.baidu.com/pypi/simple

!pip install -r PaddleDetection/requirements.txt -i https://mirror.baidu.com/pypi/simple

!pip install scikit-image -i https://mirror.baidu.com/pypi/simple

!pip install kmeans -i https://mirror.baidu.com/pypi/simple

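Dataset information

数智重庆.全球产业赋能创新大赛【赛场一】 (Tianchi competition, Track 1)

Data preparation

- There are several training and test sets; at the time of writing they can still be downloaded directly from the server

- If the dataset URLs stop working, the archives can also be unzipped from the dataset mounted in the project; adjust the extraction paths as needed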

!wget http://tianchi-competition.oss-cn-hangzhou.aliyuncs.com/231763/round1/chongqing1_round1_train1_20191223.zip

!wget http://tianchi-competition.oss-cn-hangzhou.aliyuncs.com/231763/round1/chongqing1_round1_testA_20191223.zip

!wget http://tianchi-competition.oss-cn-hangzhou.aliyuncs.com/231763/round2/chongqing1_round2_train_20200213.zip

!wget http://tianchi-competition.oss-cn-hangzhou.aliyuncs.com/231763/round1/chongqing1_round1_testB_20200210.zip --password=GXrhrvhEp92O*s2A

# Unzip the datasets

!unzip chongqing1_round1_train1_20191223.zip -d ./data

!unzip chongqing1_round1_testA_20191223.zip -d ./data

!unzip chongqing1_round2_train_20200213.zip -d ./data

!unzip -P GXrhrvhEp92O*s2A chongqing1_round1_testB_20200210.zip -d ./data

# !unzip chongqing1_round2_image_info.zip -d ./data

!mkdir PaddleDetection/dataset/coco/annotations

!mkdir PaddleDetection/dataset/coco/train2017

!mkdir PaddleDetection/dataset/coco/val2017

!mkdir PaddleDetection/dataset/coco/test2017

!cp data/chongqing1_round1_testA_20191223/images/*.jpg -d PaddleDetection/dataset/coco/test2017

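EDA

- A simple exploratory analysis of the dataset is provided here for reference

- For the full analysis, see: Simple analysis and visualization of the data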

import os
import sys
import zipfile
import json
import random
import shutil
import urllib.request

import cv2
import kmeans
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns
from matplotlib.font_manager import FontProperties
from tqdm import tqdm

myfont = FontProperties(fname=r"/home/aistudio/noto-cjk/NotoSerifSC-Light.otf", size=12)

plt.rcParams['figure.figsize'] = (12, 12)

plt.rcParams['font.family']= myfont.get_family()

plt.rcParams['font.sans-serif'] = myfont.get_name()

plt.rcParams['axes.unicode_minus'] = False

def generate_anno_eda(dataset_path):
    with open(os.path.join(dataset_path, 'annotations.json')) as f:
        anno = json.load(f)
    print('Label categories:', anno['categories'])
    print('Number of categories:', len(anno['categories']))
    print('Number of training images:', len(anno['images']))
    print('Number of training annotations:', len(anno['annotations']))

    # Count images per (height, width)
    total = []
    for img in anno['images']:
        hw = (img['height'], img['width'])
        total.append(hw)
    unique = set(total)
    for k in unique:
        print('Images of size (height, width) = (%d, %d):' % k, total.count(k))

    ids = []
    images_id = []
    for i in anno['annotations']:
        ids.append(i['id'])
        images_id.append(i['image_id'])
    print('Number of training images:', len(anno['images']))
    print('Unique annotation ids:', len(set(ids)))
    print('Unique image_ids:', len(set(images_id)))

    # Build the category id-to-name dictionary and count annotations per category
    category_dic = dict([(i['id'], i['name']) for i in anno['categories']])
    counts_label = dict([(i['name'], 0) for i in anno['categories']])
    for i in anno['annotations']:
        counts_label[category_dic[i['category_id']]] += 1

    label_list = counts_label.keys()  # label of each slice
    print('Label list:', label_list)
    size = counts_label.values()  # size of each slice
    color = ['#FFB6C1', '#D8BFD8', '#9400D3', '#483D8B', '#4169E1', '#00FFFF', '#B1FFF0', '#ADFF2F', '#EEE8AA', '#FFA500', '#FF6347']  # colour of each slice
    # explode = [0.05, 0, 0]  # offset of each slice
    patches, l_text, p_text = plt.pie(size, labels=label_list, colors=color, labeldistance=1.1, autopct="%1.1f%%", shadow=False, startangle=90, pctdistance=0.6, textprops={'fontproperties': myfont})
    plt.axis("equal")  # equal axis scales so that the pie is round
    plt.legend(prop=myfont)
    plt.show()

generate_anno_eda('data/chongqing1_round1_train1_20191223')

Label categories: [{'supercategory': '瓶盖破损', 'id': 1, 'name': '瓶盖破损'}, {'supercategory': '喷码正常', 'id': 9, 'name': '喷码正常'}, {'supercategory': '瓶盖断点', 'id': 5, 'name': '瓶盖断点'}, {'supercategory': '瓶盖坏边', 'id': 3, 'name': '瓶盖坏边'}, {'supercategory': '瓶盖打旋', 'id': 4, 'name': '瓶盖打旋'}, {'supercategory': '背景', 'id': 0, 'name': '背景'}, {'supercategory': '瓶盖变形', 'id': 2, 'name': '瓶盖变形'}, {'supercategory': '标贴气泡', 'id': 8, 'name': '标贴气泡'}, {'supercategory': '标贴歪斜', 'id': 6, 'name': '标贴歪斜'}, {'supercategory': '喷码异常', 'id': 10, 'name': '喷码异常'}, {'supercategory': '标贴起皱', 'id': 7, 'name': '标贴起皱'}]

Number of categories: 11

Number of training images: 4516

Number of training annotations: 6945

Images of size (height, width) = (492, 658): 4105

Images of size (height, width) = (3000, 4096): 411

Number of training images: 4516

Unique annotation ids: 2011

Unique image_ids: 4516

Label list: dict_keys(['瓶盖破损', '喷码正常', '瓶盖断点', '瓶盖坏边', '瓶盖打旋', '背景', '瓶盖变形', '标贴气泡', '标贴歪斜', '喷码异常', '标贴起皱'])

[Figure: pie chart of annotation counts per category, output_19_1.png]

generate_anno_eda('data/chongqing1_round2_train_20200213')

Label categories: [{'supercategory': '瓶盖破损', 'id': 1, 'name': '瓶盖破损'}, {'supercategory': '喷码正常', 'id': 9, 'name': '喷码正常'}, {'supercategory': '瓶盖断点', 'id': 5, 'name': '瓶盖断点'}, {'supercategory': '瓶盖坏边', 'id': 3, 'name': '瓶盖坏边'}, {'supercategory': '瓶盖打旋', 'id': 4, 'name': '瓶盖打旋'}, {'supercategory': '瓶身破损', 'id': 12, 'name': '瓶身破损'}, {'supercategory': '背景', 'id': 0, 'name': '背景'}, {'supercategory': '瓶盖变形', 'id': 2, 'name': '瓶盖变形'}, {'supercategory': '瓶身气泡', 'id': 13, 'name': '瓶身气泡'}, {'supercategory': '标贴气泡', 'id': 8, 'name': '标贴气泡'}, {'supercategory': '标贴歪斜', 'id': 6, 'name': '标贴歪斜'}, {'supercategory': '喷码异常', 'id': 10, 'name': '喷码异常'}, {'supercategory': '酒液杂质', 'id': 11, 'name': '酒液杂质'}, {'supercategory': '标贴起皱', 'id': 7, 'name': '标贴起皱'}]

Number of categories: 14

Number of training images: 2668

Number of training annotations: 3658

Images of size (height, width) = (3000, 4096): 2668

Number of training images: 2668

Unique annotation ids: 3658

Unique image_ids: 2668

Label list: dict_keys(['瓶盖破损', '喷码正常', '瓶盖断点', '瓶盖坏边', '瓶盖打旋', '瓶身破损', '背景', '瓶盖变形', '瓶身气泡', '标贴气泡', '标贴歪斜', '喷码异常', '酒液杂质', '标贴起皱'])

[Figure: pie chart of annotation counts per category, output_20_1.png]

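Data cleaning

- The annotations are not in standard COCO format, so an extra data-cleaning step is needed

- See the simple data-cleaning demo for reference

- PaddleDetection cannot currently parse Chinese characters in the annotation file and raises an error at the infer stage (training is not affected). Readers may want to consider whether the Chinese labels should also be replaced at this stage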
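One possible answer to the question raised in the last bullet, offered only as a sketch: rewrite the category names to ASCII while cleaning and keep a lookup table back to the original labels. The helper `asciify_categories` and the `defect_<id>` naming scheme are hypothetical and are not part of PaddleDetection or of the competition data.

```python
import copy


def asciify_categories(coco, prefix="defect_"):
    """Return a copy of a COCO-style dict whose category names are ASCII.

    Also returns a mapping from the new ASCII name to the original label,
    so that predictions can be translated back later.
    """
    result = copy.deepcopy(coco)  # leave the caller's dict untouched
    name_map = {}
    for cat in result["categories"]:
        ascii_name = "{}{}".format(prefix, cat["id"])  # hypothetical naming scheme
        name_map[ascii_name] = cat["name"]
        cat["name"] = ascii_name
        cat["supercategory"] = ascii_name
    return result, name_map


sample = {
    "categories": [{"supercategory": "瓶盖破损", "id": 1, "name": "瓶盖破损"}],
    "images": [],
    "annotations": [],
}
washed, names = asciify_categories(sample)
print(washed["categories"])
```

Because only `name` and `supercategory` change, the `category_id` values used by the annotations stay valid.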

def generate_washed_anno(dataset_path):
    with open(os.path.join(dataset_path, 'annotations.json')) as f:
        json_file = json.load(f)
    print('Total images:', len(json_file['images']))
    print('Total annotations:', len(json_file['annotations']))

    # ids of images that carry at least one background annotation
    bg_imgs = set()
    for c in json_file['annotations']:
        if c['category_id'] == 0:
            bg_imgs.add(c['image_id'])
    print('Images with a background annotation:', len(bg_imgs))

    # ids of images whose only annotation is background
    bg_only_imgs = set()
    for img_id in bg_imgs:
        co = 0
        for c in json_file['annotations']:
            if c['image_id'] == img_id:
                co += 1
        if co == 1:
            bg_only_imgs.add(img_id)
    print('Images with only a background annotation:', len(bg_only_imgs))

    # Delete images that have exactly one annotation and that annotation is background
    images_to_be_deleted = []
    for img in json_file['images']:
        if img['id'] in bg_only_imgs:
            images_to_be_deleted.append(img)
    print('Images to delete:', len(images_to_be_deleted))
    for img in images_to_be_deleted:
        json_file['images'].remove(img)
    print('Images after cleaning:', len(json_file['images']))

    # Delete every background annotation
    ann_to_be_deleted = []
    for c in json_file['annotations']:
        if c['category_id'] == 0:
            ann_to_be_deleted.append(c)
    print('Annotations to delete:', len(ann_to_be_deleted))
    for ann in ann_to_be_deleted:
        json_file['annotations'].remove(ann)
    print('Annotations after cleaning:', len(json_file['annotations']))

    # Remove the background category itself
    bg_cate = {'supercategory': '背景', 'id': 0, 'name': '背景'}
    json_file['categories'].remove(bg_cate)

    # Renumber the annotation ids consecutively
    for idx in range(len(json_file['annotations'])):
        json_file['annotations'][idx]['id'] = idx

    with open(os.path.join(dataset_path, 'annotations_washed.json'), 'w') as f:
        json.dump(json_file, f, ensure_ascii=False)
    print('Cleaned annotation file written.')

generate_washed_anno('data/chongqing1_round1_train1_20191223')

Total images: 4516

Total annotations: 6945

Images with a background annotation: 1168

Images with only a background annotation: 1168

Images to delete: 1168

Images after cleaning: 3348

Annotations to delete: 1170

Annotations after cleaning: 5775

Cleaned annotation file written.

generate_washed_anno('data/chongqing1_round2_train_20200213')

Total images: 2668

Total annotations: 3658

Images with a background annotation: 985

Images with only a background annotation: 985

Images to delete: 985

Images after cleaning: 1683

Annotations to delete: 985

Annotations after cleaning: 2673

Cleaned annotation file written.
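After cleaning, it can be worth confirming that the annotation file is internally consistent before it is merged or split. The following is a minimal sketch of such a check; the function name `check_coco_consistency` and the toy dictionary are illustrative assumptions, and only the standard COCO keys (`images`, `annotations`, `categories`) seen in this dataset are relied upon.

```python
def check_coco_consistency(coco):
    """Count common inconsistencies in a COCO-style annotation dict."""
    image_ids = {img["id"] for img in coco["images"]}
    category_ids = {cat["id"] for cat in coco["categories"]}
    ann_ids = [ann["id"] for ann in coco["annotations"]]
    annotated_images = {ann["image_id"] for ann in coco["annotations"]}
    return {
        # annotations that point at an image that no longer exists
        "orphan_annotations": sum(
            1 for ann in coco["annotations"] if ann["image_id"] not in image_ids
        ),
        # annotations whose category was removed (for example background)
        "unknown_categories": sum(
            1 for ann in coco["annotations"] if ann["category_id"] not in category_ids
        ),
        # repeated annotation ids
        "duplicate_annotation_ids": len(ann_ids) - len(set(ann_ids)),
        # images left without any annotation
        "images_without_annotations": len(image_ids - annotated_images),
    }


toy = {
    "images": [{"id": 1}, {"id": 2}],
    "categories": [{"id": 1, "name": "a"}],
    "annotations": [
        {"id": 0, "image_id": 1, "category_id": 1},
        {"id": 0, "image_id": 3, "category_id": 0},
    ],
}
report = check_coco_consistency(toy)
print(report)
```

For a cleaned file, the first three counters would be expected to be zero.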

Merging the training sets and their annotations

!mkdir data/chongqing_train_merge

!mkdir data/chongqing_train_merge/images

!mv data/chongqing1_round1_train1_20191223/images/*.jpg data/chongqing_train_merge/images/

!mv data/chongqing1_round2_train_20200213/images/*.jpg data/chongqing_train_merge/images/

with open(os.path.join('data/chongqing1_round1_train1_20191223', 'annotations.json')) as f:
    round1_full = json.load(f)
with open(os.path.join('data/chongqing1_round1_train1_20191223', 'annotations_washed.json')) as f:
    round1 = json.load(f)
with open(os.path.join('data/chongqing1_round2_train_20200213', 'annotations_washed.json')) as f:
    round2 = json.load(f)

data_0 = {}
data_0['images'] = []
data_0['info'] = round1['info']
data_0['license'] = ['AIC IVI Created']
# The round-2 dataset adds 3 defect classes to those of round 1
data_0['categories'] = round2['categories']

t1 = round1['images']
t2 = round1['annotations']
# Offset the round-2 ids by the sizes of the uncleaned round-1 set so they cannot collide
for item in round2['images']:
    item['id'] += len(round1_full['images'])
    t1.append(item)
for ann in round2['annotations']:
    ann['image_id'] += len(round1_full['images'])
    ann['id'] += len(round1_full['annotations'])
    t2.append(ann)
data_0['images'] = t1
data_0['annotations'] = t2
# print(data_0)

# Save to a new JSON file so that the data is easy to inspect
with open('data/chongqing_train_merge/annotations_washed.json', 'w') as f:
    json.dump(data_0, f, indent=4)  # indent=4 for readable output

# Add a segmentation field
def add_seg(json_anno):
    new_json_anno = []
    for c_ann in json_anno:
        c_category_id = c_ann['category_id']
        if not c_category_id:
            continue  # skip background (category id 0)
        bbox = c_ann['bbox']  # bbox is [x, y, w, h]
        c_ann['segmentation'] = []
        seg = []
        # left_top
        seg.append(bbox[0])
        seg.append(bbox[1])
        # left_bottom
        seg.append(bbox[0])
        seg.append(bbox[1] + bbox[3])
        # right_bottom
        seg.append(bbox[0] + bbox[2])
        seg.append(bbox[1] + bbox[3])
        # right_top
        seg.append(bbox[0] + bbox[2])
        seg.append(bbox[1])
        c_ann['segmentation'].append(seg)
        new_json_anno.append(c_ann)
    return new_json_anno

json_file = 'data/chongqing_train_merge/annotations_washed.json'
with open(json_file) as f:
    a = json.load(f)
a['annotations'] = add_seg(a['annotations'])

with open("data/chongqing_train_merge/new_ann_file.json", "w") as f:
    json.dump(a, f, ensure_ascii=False)

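Splitting into training and validation sets

- The simplest approach is used here: images are sampled at random so that roughly 4 out of every 5 go to the training set and the rest go to the validation set

- See also the mmdetection write-up on cleaning the data, splitting it into training and test sets, and generating the corresponding annotations.json files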

def generate_coco_dataset(dataset_path, coco_path, split_ratio):
    with open(os.path.join(dataset_path, 'new_ann_file.json')) as f:
        merge = json.load(f)

    data_0 = {}
    data_0['images'] = []
    data_0['info'] = merge['info']
    data_0['license'] = ['AIC IVI Created']
    data_0['categories'] = merge['categories']

    t1 = []  # training images
    t2 = []  # training annotations
    t3 = []  # validation images
    t4 = []  # validation annotations

    # Randomly pick split_ratio of the image indices for training
    index = range(0, len(merge['images']))
    slide = int(split_ratio * len(merge['images']))
    train_index = set(random.sample(index, slide))

    for i, l in enumerate(merge['images']):
        if i in train_index:
            t1.append(l)
            for ann in merge['annotations']:
                if l['id'] == ann['image_id']:
                    t2.append(ann)
            shutil.copy(os.path.join(dataset_path, 'images', l['file_name']), os.path.join(coco_path, 'train2017'))
    data_0['images'] = t1
    data_0['annotations'] = t2
    # Save to a new JSON file so that the data is easy to inspect
    with open(os.path.join(coco_path, 'annotations', 'instances_train2017.json'), 'w') as f:
        json.dump(data_0, f, indent=4)  # indent=4 for readable output

    for i, l in enumerate(merge['images']):
        if i not in train_index:
            t3.append(l)
            for ann in merge['annotations']:
                if l['id'] == ann['image_id']:
                    t4.append(ann)
            shutil.copy(os.path.join(dataset_path, 'images', l['file_name']), os.path.join(coco_path, 'val2017'))
    data_0['images'] = t3
    data_0['annotations'] = t4
    with open(os.path.join(coco_path, 'annotations', 'instances_val2017.json'), 'w') as f:
        json.dump(data_0, f, indent=4)

generate_coco_dataset('data/chongqing_train_merge', 'PaddleDetection/dataset/coco', 0.8)
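The split above draws a fresh random sample on every run, so the training and validation sets change between runs. If a repeatable split is wanted, one option is to seed a private random generator. The sketch below shows the idea on plain image ids; the helper name `split_image_ids` and the `seed` parameter are assumptions added for illustration and do not appear in the original code.

```python
import random


def split_image_ids(image_ids, train_ratio=0.8, seed=0):
    """Split ids into (train, val) sets reproducibly for a given seed."""
    rng = random.Random(seed)       # private generator, global state untouched
    ids = sorted(image_ids)         # fixed starting order regardless of input order
    rng.shuffle(ids)
    cut = int(train_ratio * len(ids))
    return set(ids[:cut]), set(ids[cut:])


train_ids, val_ids = split_image_ids(range(10), train_ratio=0.8, seed=42)
print(len(train_ids), len(val_ids))
```

Returning sets also keeps the later `in` membership tests cheap when the image list is long.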

Generating a classification training dataset

import os
import json
import numpy as np
from tqdm import tqdm
import cv2

def get_annotations(datadir, mode="train"):
    """Collect the defect annotations of one split."""
    if mode == "train":
        ann_file = 'instances_train2017.json'
    else:
        ann_file = 'instances_val2017.json'
    with open(os.path.join(datadir, 'annotations', ann_file)) as f:
        json_file = json.load(f)
    records = []
    for objs in json_file['annotations']:
        box = []
        label = []
        bbox = objs['bbox']
        x1 = int(bbox[0])
        y1 = int(bbox[1])
        x2 = int(bbox[0] + bbox[2])
        y2 = int(bbox[1] + bbox[3])
        box.append([x1, y1, x2, y2])
        # Classification labels start at 0, so subtract 1 from the original category id
        objs['category_id'] = objs['category_id'] - 1
        label.append(objs['category_id'])
        for img in json_file['images']:
            if img['id'] == objs['image_id']:
                img_file = img['file_name']
                # The defect (annotation) id is unique, so crops never share a name
                fid = objs['id']
                voc_rec = {
                    'im_file': img_file,
                    'im_id': fid,
                    'gt_class': label,
                    'gt_bbox': box
                }
                records.append(voc_rec)
    return records

def generate_data(datadir, save_dir, records, mode="train"):
    """Crop every annotated defect and write a '<file> <label>' list."""
    im_out = []
    if mode == "train":
        images_dir = 'train2017'
    else:
        images_dir = 'val2017'
    for record in tqdm(records):
        img = cv2.imread(os.path.join(datadir, images_dir, record["im_file"]))
        # img = imageio.imread(os.path.join(datadir, images_dir, record["im_file"]))
        ffile = record["im_file"][:-4]
        fid = record["im_id"]
        box = record["gt_bbox"][0]
        fl = record["gt_class"][0]
        im = img[box[1]: box[3], box[0]: box[2]]
        fname = '{}/{}/{}_{}.jpg'.format(save_dir, mode, str(ffile), str(fid))
        cv2.imwrite(fname, im)
        outname = '{}/{}_{}.jpg'.format(mode, str(ffile), str(fid))
        im_out.append("{} {}".format(outname, fl))
    with open("{}/{}_list.txt".format(save_dir, mode), "w") as f:
        f.write("\n".join(im_out))

Dataset statistics

Mean and standard deviation of the RGB channels

# First move all test images into the coco test dataset directory

!mv data/chongqing1_round1_testB_20200210/images/*.jpg PaddleDetection/dataset/coco/test2017/

!mv data/chongqing1_round1_testA_20191223/images/*.jpg PaddleDetection/dataset/coco/test2017/

"""
Compute the mean and standard deviation of the RGB channels
"""
def compute(path):
    file_names = os.listdir(path)
    per_image_Rmean = []
    per_image_Gmean = []
    per_image_Bmean = []
    per_image_Rstd = []
    per_image_Gstd = []
    per_image_Bstd = []
    for file_name in file_names:
        img = cv2.imread(os.path.join(path, file_name), 1)
        # Note: cv2.imread returns channels in BGR order, so index 0 below is
        # actually the blue channel even though the variable is named R.
        # Reverse the returned lists if true RGB order is required.
        per_image_Rmean.append(np.mean(img[:, :, 0]))
        per_image_Gmean.append(np.mean(img[:, :, 1]))
        per_image_Bmean.append(np.mean(img[:, :, 2]))
        per_image_Rstd.append(np.std(img[:, :, 0]))
        per_image_Gstd.append(np.std(img[:, :, 1]))
        per_image_Bstd.append(np.std(img[:, :, 2]))
    # Average the per-image statistics and scale to [0, 1]
    R_mean = np.mean(per_image_Rmean) / 255.0
    G_mean = np.mean(per_image_Gmean) / 255.0
    B_mean = np.mean(per_image_Bmean) / 255.0
    R_std = np.mean(per_image_Rstd) / 255.0
    G_std = np.mean(per_image_Gstd) / 255.0
    B_std = np.mean(per_image_Bstd) / 255.0
    image_mean = [R_mean, G_mean, B_mean]
    image_std = [R_std, G_std, B_std]
    return image_mean, image_std

path = 'PaddleDetection/dataset/coco/test2017'
image_mean, image_std = compute(path)
print(image_mean, image_std)

# [0.30180695179185196, 0.21959776452510077, 0.17572622616932373] [0.31354065666185776, 0.23364904437622586, 0.2054456915069016]

[0.30180695179185196, 0.21959776452510077, 0.17572622616932373] [0.31354065666185776, 0.23364904437622588, 0.20544569150690165]
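One detail worth checking when reusing these numbers: `cv2.imread` returns pixels in BGR order, while the reader configuration later decodes images with `to_rgb: true`. The sketch below shows one way to compute the same per-image-averaged statistics in RGB order. It works on in-memory arrays so that it can run without the dataset; `rgb_mean_std` is a hypothetical helper, not part of the original notebook.

```python
import numpy as np


def rgb_mean_std(bgr_images):
    """Average per-image channel mean/std, returned in RGB order on a 0-1 scale.

    bgr_images: iterable of HxWx3 uint8 arrays in BGR order (as cv2.imread returns).
    """
    means, stds = [], []
    for img in bgr_images:
        rgb = img[:, :, ::-1].astype(np.float64) / 255.0  # BGR -> RGB, scale to [0, 1]
        pixels = rgb.reshape(-1, 3)
        means.append(pixels.mean(axis=0))
        stds.append(pixels.std(axis=0))
    return np.mean(means, axis=0).tolist(), np.mean(stds, axis=0).tolist()


demo = np.zeros((2, 2, 3), dtype=np.uint8)
demo[:, :, 0] = 255  # channel 0 is blue in BGR order
mean, std = rgb_mean_std([demo])
print(mean, std)
```

In the demo, the pure-blue image yields a mean whose last (blue) entry is 1.0, which confirms the channel order of the result.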

K-means clustering for anchor boxes

- If you plan to use a YOLO-series model, the K-means clustering result can guide the anchor settings

def iou(box, clusters):

"""

Calculates the Intersection over Union (IoU) between a box and k clusters.

:param box: tuple or array, shifted to the origin (i. e. width and height)

:param clusters: numpy array of shape (k, 2) where k is the number of clusters

:return: numpy array of shape (k,) where k is the number of clusters

"""

x = np.minimum(clusters[:, 0], box[0])

y = np.minimum(clusters[:, 1], box[1])

if np.count_nonzero(x == 0) > 0 or np.count_nonzero(y == 0) > 0:

raise ValueError("Box has no area")

intersection = x * y

box_area = box[0] * box[1]

cluster_area = clusters[:, 0] * clusters[:, 1]

iou_ = intersection / (box_area + cluster_area - intersection)

return iou_

def avg_iou(boxes, clusters):

"""

Calculates the average Intersection over Union (IoU) between a numpy array of boxes and k clusters.

:param boxes: numpy array of shape (r, 2), where r is the number of rows

:param clusters: numpy array of shape (k, 2) where k is the number of clusters

:return: average IoU as a single float

"""

return np.mean([np.max(iou(boxes[i], clusters)) for i in range(boxes.shape[0])])

def translate_boxes(boxes):

"""

Translates all the boxes to the origin.

:param boxes: numpy array of shape (r, 4)

:return: numpy array of shape (r, 2)

"""

new_boxes = boxes.copy()

for row in range(new_boxes.shape[0]):

new_boxes[row][2] = np.abs(new_boxes[row][2] - new_boxes[row][0])

new_boxes[row][3] = np.abs(new_boxes[row][3] - new_boxes[row][1])

return np.delete(new_boxes, [0, 1], axis=1)

def kmeans(boxes, k, dist=np.median):

"""

Calculates k-means clustering with the Intersection over Union (IoU) metric.

:param boxes: numpy array of shape (r, 2), where r is the number of rows

:param k: number of clusters

:param dist: distance function

:return: numpy array of shape (k, 2)

"""

rows = boxes.shape[0]

distances = np.empty((rows, k))

last_clusters = np.zeros((rows,))

np.random.seed()

# the Forgy method will fail if the whole array contains the same rows

clusters = boxes[np.random.choice(rows, k, replace=False)]

while True:

for row in range(rows):

distances[row] = 1 - iou(boxes[row], clusters)

nearest_clusters = np.argmin(distances, axis=1)

if (last_clusters == nearest_clusters).all():

break

for cluster in range(k):

clusters[cluster] = dist(boxes[nearest_clusters == cluster], axis=0)

last_clusters = nearest_clusters

return clusters

CLUSTERS = 9

def load_dataset(path):

dataset = []

with open(os.path.join(path, 'annotations', 'instances_train2017.json')) as f:

json_file = json.load(f)

for item in json_file['images']:

height = item['height']

width = item['width']

for objs in json_file['annotations']:

if(objs['image_id'] == item['id']):

bbox = objs['bbox']

xmin = int(bbox[0]) / width

ymin = int(bbox[1]) / height

xmax = int(bbox[0] + bbox[2]) / width

ymax = int(bbox[1] + bbox[3]) / height

xmin = np.float64(xmin)

ymin = np.float64(ymin)

xmax = np.float64(xmax)

ymax = np.float64(ymax)

if xmax == xmin or ymax == ymin:

print(item['file_name'])

dataset.append([xmax - xmin, ymax - ymin])

f.close()

return np.array(dataset)

#print(__file__)

data = load_dataset('PaddleDetection/dataset/coco/')

out = kmeans(data, k=CLUSTERS)*608

#clusters = [[10,13],[16,30],[33,23],[30,61],[62,45],[59,119],[116,90],[156,198],[373,326]]

#out= np.array(clusters)/416.0

print(out)

print("Accuracy: {:.2f}%".format(avg_iou(data, out/608) * 100))

print("Boxes:\n {}-{}".format(out[:, 0], out[:, 1]))

ratios = np.around(out[:, 0] / out[:, 1], decimals=2).tolist()

print("Ratios:\n {}".format(sorted(ratios)))

[[ 57.2887538 44.48780488]

[329.87234043 133.46341463]

[ 23.10030395 56.84552846]

[329.6796875 337.44 ]

[332.6443769 148.29268293]

[ 7.8671875 11.552 ]

[145.99392097 49.43089431]

[ 4.3046875 5.67466667]

[ 16.63221884 21.00813008]]

Accuracy: 64.98%

Boxes:

[ 57.2887538 329.87234043 23.10030395 329.6796875 332.6443769

7.8671875 145.99392097 4.3046875 16.63221884]-[ 44.48780488 133.46341463 56.84552846 337.44 148.29268293

11.552 49.43089431 5.67466667 21.00813008]

Ratios:

[0.41, 0.68, 0.76, 0.79, 0.98, 1.29, 2.24, 2.47, 2.95]
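The clusters come out in arbitrary order and as floats, whereas anchor lists for YOLO-style heads are usually written as integer (width, height) pairs ordered from small to large. A small post-processing sketch, using the cluster values printed above as input; `format_anchors` is a hypothetical helper, and sorting by area is one common convention rather than a requirement stated in the source.

```python
import numpy as np


def format_anchors(clusters):
    """Round clustered (w, h) pairs to integers and sort them by area."""
    rounded = np.rint(np.asarray(clusters, dtype=np.float64)).astype(int)
    rounded = np.maximum(rounded, 1)  # guard against zero-sized anchors
    order = np.argsort(rounded[:, 0] * rounded[:, 1], kind="stable")
    return rounded[order].tolist()


clusters = [
    [57.2887538, 44.48780488],
    [329.87234043, 133.46341463],
    [23.10030395, 56.84552846],
    [329.6796875, 337.44],
    [332.6443769, 148.29268293],
    [7.8671875, 11.552],
    [145.99392097, 49.43089431],
    [4.3046875, 5.67466667],
    [16.63221884, 21.00813008],
]
anchors = format_anchors(clusters)
print(anchors)
```

The resulting list could then be copied into the anchor setting of a YOLO head configuration, keeping in mind that it is scaled to the 608-pixel input size used in the clustering call.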

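Transfer learning

Transfer learning uses existing knowledge to learn new knowledge. Examples include initializing a detection model from an ImageNet classification model, or initializing a PascalVOC detection model from a detection model trained on COCO.

In transfer learning the dataset changes, so the number of classes differs from COCO/VOC. When an open-source PaddlePaddle model is loaded, the weights tied to the number of classes (for example the fc layer of the classification branch) then have mismatched dimensions. In addition, if a more complex architecture is needed, the open-source model structure has to be adjusted and the corresponding weights loaded selectively. The detection library therefore needs a way to name parameter fields so that weights which cannot be matched are skipped at load time.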

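Transfer learning with PaddleDetection

Loading a pretrained model

When an open-source model such as a COCO-pretrained one is loaded for a dataset with a different number of classes, the class-dependent weights (for example the fc layer of the classification branch) have mismatched dimensions, and a modified architecture likewise requires selective loading. Weights that cannot be matched therefore have to be skipped when the model is loaded.

For transfer learning, the pretrained model is loaded selectively, and the following two approaches are supported:

Load the pretrained weights directly (recommended)

Parameters whose shapes differ between the model and the pretrained model are ignored automatically, for example: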

export CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7

python -u tools/train.py -c configs/faster_rcnn_r50_1x.yml \

-o pretrain_weights=https://paddlemodels.bj.bcebos.com/object_detection/faster_rcnn_r50_1x.tar
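The idea of ignoring shape-mismatched parameters can be pictured with plain dictionaries. The sketch below is not PaddleDetection's loading code; `filter_by_shape`, the parameter names, and the shapes are all made up for illustration.

```python
import numpy as np


def filter_by_shape(model_shapes, pretrained):
    """Keep only pretrained arrays whose name and shape match the model."""
    kept, skipped = {}, []
    for name, value in pretrained.items():
        if name in model_shapes and tuple(model_shapes[name]) == value.shape:
            kept[name] = value
        else:
            skipped.append(name)  # missing in the model or shape differs
    return kept, skipped


# Hypothetical shapes: the class-dependent layer differs, the backbone layer does not
model_shapes = {"conv1_weights": (64, 3, 7, 7), "cls_score_w": (1024, 14)}
pretrained = {
    "conv1_weights": np.zeros((64, 3, 7, 7)),
    "cls_score_w": np.zeros((1024, 81)),
}
kept, skipped = filter_by_shape(model_shapes, pretrained)
print(sorted(kept), skipped)
```

The backbone weights are reused as they are, while the class-dependent layer keeps its fresh initialization and is learned on the new dataset.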

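Using finetune_exclude_pretrained_params to control which parameter names are ignored

The names of the parameters to ignore can also be given explicitly; any parameter name can be added to finetune_exclude_pretrained_params. This can be done in either of the following ways:

- Set the finetune_exclude_pretrained_params field in the YAML configuration file (see the configuration file for reference)

- Set finetune_exclude_pretrained_params in the launch arguments of train.py. For example: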

export CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7

python -u tools/train.py -c configs/faster_rcnn_r50_1x.yml \

-o pretrain_weights=https://paddlemodels.bj.bcebos.com/object_detection/faster_rcnn_r50_1x.tar \

finetune_exclude_pretrained_params=['cls_score','bbox_pred']

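- Notes:

- The pretrain_weights path is the link to the open-source Faster RCNN model trained on the COCO dataset; the full list of model links is in MODEL_ZOO

- finetune_exclude_pretrained_params holds parameter fields; a parameter whose name matches one of these fields (wildcard-style matching) is skipped when the model is loaded.

If you want to fine-tune on your own data without changing the model structure, only the parameters tied to the number of classes need to be ignored. The fields to ignore for each model type are listed in the table below:

| Model type | Parameter fields to ignore |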

|---|---|

| Faster RCNN | cls_score, bbox_pred |

| Cascade RCNN | cls_score, bbox_pred |

| Mask RCNN | cls_score, bbox_pred, mask_fcn_logits |

| Cascade-Mask RCNN | cls_score, bbox_pred, mask_fcn_logits |

| RetinaNet | retnet_cls_pred_fpn |

| SSD | ^conv2d_ |

| YOLOv3 | yolo_output |
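To make the effect of these fields concrete, the sketch below filters a list of parameter names against a list of patterns. It uses regular-expression search as a stand-in for the wildcard-style matching described above; this is an assumption for illustration, not PaddleDetection's implementation, and the parameter names are invented.

```python
import re


def select_ignored(param_names, exclude_patterns):
    """Return the parameter names that match any of the exclude patterns."""
    return [
        name
        for name in param_names
        if any(re.search(pattern, name) for pattern in exclude_patterns)
    ]


# Hypothetical parameter names
names = [
    "res2a_branch2a_weights",
    "cls_score_w",
    "cls_score_b",
    "bbox_pred_w",
    "conv2d_0.w_0",
]
rcnn_ignored = select_ignored(names, ["cls_score", "bbox_pred"])
ssd_ignored = select_ignored(names, ["^conv2d_"])
print(rcnn_ignored)
print(ssd_ignored)
```

With the Faster RCNN fields only the classification and box-regression heads are skipped, so the backbone weights are still loaded from the pretrained model.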

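Example: transfer learning

Find the pretrained model you want in the model zoo and obtain its download link

Faster & Mask R-CNN

| Backbone | Network type | Images per GPU | LR schedule | Inference time (fps) | Box AP | Mask AP | Download |

|---|---|---|---|---|---|---|---|

| ResNet101-vd-FPN | CascadeClsAware Faster | 2 | 1x | - | 44.7(softnms) | - | download link |

Reference configuration files

configs/faster_fpn_reader.yml

Note that anno_path is set to None in TestReader; otherwise the annotation file, which contains Chinese characters, is loaded and inference fails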

TrainReader:

inputs_def:

fields: ['image', 'im_info', 'im_id', 'gt_bbox', 'gt_class', 'is_crowd']

dataset:

!COCODataSet

image_dir: train2017

anno_path: annotations/instances_train2017.json

dataset_dir: dataset/coco

sample_transforms:

- !DecodeImage

to_rgb: true

- !RandomFlipImage

prob: 0.5

- !NormalizeImage

is_channel_first: false

is_scale: true

mean: [0.30180695179185196, 0.21959776452510077, 0.17572622616932373]

std: [0.31354065666185776, 0.23364904437622586, 0.2054456915069016]

- !ResizeImage

target_size: 800

max_size: 1333

interp: 1

use_cv2: true

- !Permute

to_bgr: false

channel_first: true

batch_transforms:

- !PadBatch

pad_to_stride: 32

use_padded_im_info: false

batch_size: 1

shuffle: true

worker_num: 2

use_process: false

EvalReader:

inputs_def:

fields: ['image', 'im_info', 'im_id', 'im_shape']

# for voc

#fields: ['image', 'im_info', 'im_id', 'im_shape', 'gt_bbox', 'gt_class', 'is_difficult']

dataset:

!COCODataSet

image_dir: val2017

anno_path: annotations/instances_val2017.json

dataset_dir: dataset/coco

sample_transforms:

- !DecodeImage

to_rgb: true

with_mixup: false

- !NormalizeImage

is_channel_first: false

is_scale: true

mean: [0.30180695179185196, 0.21959776452510077, 0.17572622616932373]

std: [0.31354065666185776, 0.23364904437622586, 0.2054456915069016]

- !ResizeImage

interp: 1

max_size: 1333

target_size: 800

use_cv2: true

- !Permute

channel_first: true

to_bgr: false

batch_transforms:

- !PadBatch

pad_to_stride: 32

use_padded_im_info: true

batch_size: 1

shuffle: false

drop_empty: false

worker_num: 2

TestReader:

inputs_def:

# set image_shape if needed

fields: ['image', 'im_info', 'im_id', 'im_shape']

dataset:

!ImageFolder

anno_path: None

sample_transforms:

- !DecodeImage

to_rgb: true

with_mixup: false

- !NormalizeImage

is_channel_first: false

is_scale: true

mean: [0.30180695179185196, 0.21959776452510077, 0.17572622616932373]

std: [0.31354065666185776, 0.23364904437622586, 0.2054456915069016]

- !ResizeImage

interp: 1

max_size: 1333

target_size: 800

use_cv2: true

- !Permute

channel_first: true

to_bgr: false

batch_transforms:

- !PadBatch

pad_to_stride: 32

use_padded_im_info: true

batch_size: 1

shuffle: false

configs/cascade_rcnn_cls_aware_r101_vd_fpn_1x_softnms.yml

architecture: CascadeRCNNClsAware

max_iters: 90000

snapshot_iter: 10000

use_gpu: true

log_iter: 200

save_dir: output

pretrain_weights: https://paddle-imagenet-models-name.bj.bcebos.com/ResNet101_vd_pretrained.tar

weights: output/cascade_rcnn_cls_aware_r101_vd_fpn_1x_softnms/model_final

metric: COCO

num_classes: 11

CascadeRCNNClsAware:

backbone: ResNet

fpn: FPN

rpn_head: FPNRPNHead

roi_extractor: FPNRoIAlign

bbox_head: CascadeBBoxHead

bbox_assigner: CascadeBBoxAssigner

ResNet:

norm_type: bn

depth: 101

feature_maps: [2, 3, 4, 5]

freeze_at: 2

variant: d

FPN:

min_level: 2

max_level: 6

num_chan: 256

spatial_scale: [0.03125, 0.0625, 0.125, 0.25]

FPNRPNHead:

anchor_generator:

anchor_sizes: [32, 64, 128, 256, 512]

aspect_ratios: [0.5, 1.0, 2.0]

stride: [16.0, 16.0]

variance: [1.0, 1.0, 1.0, 1.0]

anchor_start_size: 32

min_level: 2

max_level: 6

num_chan: 256

rpn_target_assign:

rpn_batch_size_per_im: 256

rpn_fg_fraction: 0.5

rpn_positive_overlap: 0.7

rpn_negative_overlap: 0.3

rpn_straddle_thresh: 0.0

train_proposal:

min_size: 0.0

nms_thresh: 0.7

pre_nms_top_n: 2000

post_nms_top_n: 2000

test_proposal:

min_size: 0.0

nms_thresh: 0.7

pre_nms_top_n: 1000

post_nms_top_n: 1000

FPNRoIAlign:

canconical_level: 4

canonical_size: 224

min_level: 2

max_level: 5

box_resolution: 14

sampling_ratio: 2

CascadeBBoxAssigner:

batch_size_per_im: 512

bbox_reg_weights: [10, 20, 30]

bg_thresh_lo: [0.0, 0.0, 0.0]

bg_thresh_hi: [0.5, 0.6, 0.7]

fg_thresh: [0.5, 0.6, 0.7]

fg_fraction: 0.25

class_aware: True

CascadeBBoxHead:

head: CascadeTwoFCHead

nms: MultiClassSoftNMS

CascadeTwoFCHead:

mlp_dim: 1024

MultiClassSoftNMS:

score_threshold: 0.01

keep_top_k: 300

softnms_sigma: 0.5

LearningRate:

base_lr: 0.0005

schedulers:

- !PiecewiseDecay

gamma: 0.1

milestones: [60000, 80000]

- !LinearWarmup

start_factor: 0.0

steps: 2000

OptimizerBuilder:

optimizer:

momentum: 0.9

type: Momentum

regularizer:

factor: 0.0001

type: L2

_READER_: 'faster_fpn_reader.yml'

TrainReader:

batch_size: 4

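Check the NumPy version again before training

If the error below appears, the NumPy version is too new. Installing the dependencies earlier upgraded NumPy to a release that does not work in this PaddlePaddle 2.0.0rc environment: as the traceback shows, pycocotools passes a numpy.float64 sample count to np.linspace, which that NumPy release rejects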

2021-01-05 09:31:37,618-INFO: Start evaluate...

Loading and preparing results...

DONE (t=2.99s)

creating index...

index created!

Traceback (most recent call last):

File "tools/train.py", line 399, in <module>

main()

File "tools/train.py", line 320, in main

cfg['EvalReader']['dataset'])

File "/home/aistudio/PaddleDetection/ppdet/utils/eval_utils.py", line 241, in eval_results

save_only=save_only)

File "/home/aistudio/PaddleDetection/ppdet/utils/coco_eval.py", line 102, in bbox_eval

map_stats = cocoapi_eval(outfile, 'bbox', coco_gt=coco_gt)

File "/home/aistudio/PaddleDetection/ppdet/utils/coco_eval.py", line 244, in cocoapi_eval

coco_eval = COCOeval(coco_gt, coco_dt, style)

File "/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/pycocotools-2.0-py3.7-linux-x86_64.egg/pycocotools/cocoeval.py", line 75, in __init__

self.params = Params(iouType=iouType) # parameters

File "/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/pycocotools-2.0-py3.7-linux-x86_64.egg/pycocotools/cocoeval.py", line 527, in __init__

self.setDetParams()

File "/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/pycocotools-2.0-py3.7-linux-x86_64.egg/pycocotools/cocoeval.py", line 506, in setDetParams

self.iouThrs = np.linspace(.5, 0.95, np.round((0.95 - .5) / .05) + 1, endpoint=True)

File "<__array_function__ internals>", line 6, in linspace

File "/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/numpy/core/function_base.py", line 113, in linspace

num = operator.index(num)

TypeError: 'numpy.float64' object cannot be interpreted as an integer

terminate called without an active exception

--------------------------------------

C++ Traceback (most recent call last):

--------------------------------------

0 paddle::framework::SignalHandle(char const*, int)

1 paddle::platform::GetCurrentTraceBackString[abi:cxx11]()

----------------------

Error Message Summary:

----------------------

FatalError: `Process abort signal` is detected by the operating system.

[TimeInfo: *** Aborted at 1609810302 (unix time) try "date -d @1609810302" if you are using GNU date ***]

[SignalInfo: *** SIGABRT (@0x3e800005683) received by PID 22147 (TID 0x7ff15d3e1700) from PID 22147 ***]

Aborted (core dumped)

# Pin the NumPy version

!pip install -U numpy==1.17.0

Looking in indexes: https://mirror.baidu.com/pypi/simple/

Collecting numpy==1.17.0

Downloading https://mirror.baidu.com/pypi/packages/05/4b/55cfbfd3e5e85016eeef9f21c0ec809d978706a0d60b62cc28aeec8c792f/numpy-1.17.0-cp37-cp37m-manylinux1_x86_64.whl (20.3MB)

ERROR: xarray 0.16.2 has requirement pandas>=0.25, but you'll have pandas 0.23.4 which is incompatible.

ERROR: parl 1.3.2 has requirement pyarrow==0.13.0, but you'll have pyarrow 2.0.0 which is incompatible.

Installing collected packages: numpy

Found existing installation: numpy 1.19.4

Uninstalling numpy-1.19.4:

Successfully uninstalled numpy-1.19.4

Successfully installed numpy-1.17.0
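The cause of the TypeError can be seen in isolation: the expression in the traceback computes the number of samples with `np.round`, which yields a `numpy.float64`, and `np.linspace` expects an integer count. The sketch below reproduces the same thresholds with an explicit integer conversion. It is shown only to illustrate the cause; the tutorial itself resolves the problem by pinning NumPy, and no change to pycocotools is implied.

```python
import numpy as np

# Same arithmetic as in the traceback: the result is a numpy.float64
steps = np.round((0.95 - 0.5) / 0.05) + 1

# Converting the count to int makes the call valid regardless of NumPy version
iou_thrs = np.linspace(0.5, 0.95, int(steps), endpoint=True)
print(type(steps).__name__, len(iou_thrs))
```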

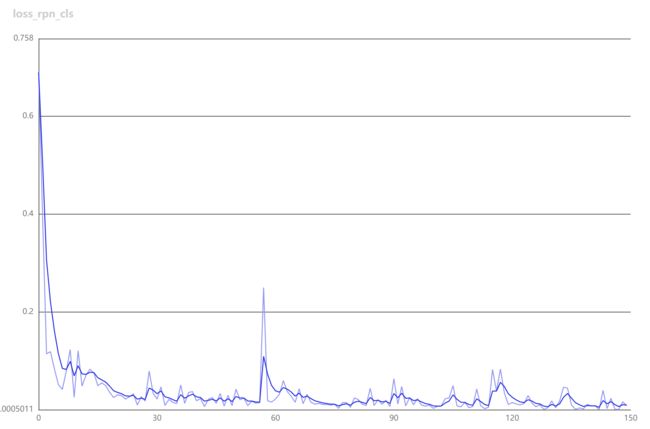

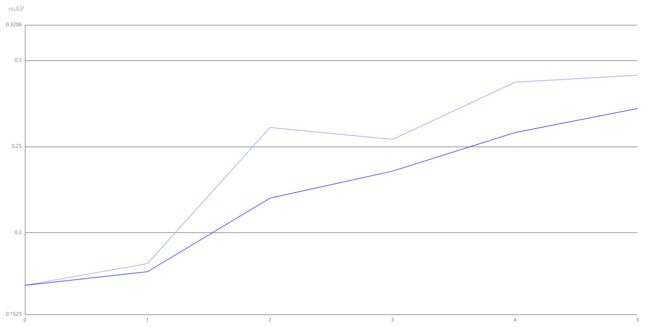

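# Start training with VisualDL enabled. Only 30,000 iterations were run here, which took about 6 hours; readers can run the full schedule, which should improve mAP further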

!cd PaddleDetection && python -u tools/train.py -c configs/cascade_rcnn_cls_aware_r101_vd_fpn_1x_softnms.yml \

-o pretrain_weights=https://paddlemodels.bj.bcebos.com/object_detection/cascade_rcnn_cls_aware_r101_vd_fpn_1x_softnms.tar \

finetune_exclude_pretrained_params=['cls_score','bbox_pred'] \

--eval -o use_gpu=true --use_vdl=True --vdl_log_dir=vdl_dir/scalar

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.

/home/aistudio/PaddleDetection/ppdet/utils/voc_utils.py:70: DeprecationWarning: invalid escape sequence \.

elif re.match('test\.txt', fname):

/home/aistudio/PaddleDetection/ppdet/utils/voc_utils.py:68: DeprecationWarning: invalid escape sequence \.

if re.match('trainval\.txt', fname):

/home/aistudio/PaddleDetection/ppdet/core/workspace.py:118: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

isinstance(merge_dct[k], collections.Mapping)):

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/math_op_patch.py:297: UserWarning: /home/aistudio/PaddleDetection/ppdet/modeling/backbones/fpn.py:108

The behavior of expression A + B has been unified with elementwise_add(X, Y, axis=-1) from Paddle 2.0. If your code works well in the older versions but crashes in this version, try to use elementwise_add(X, Y, axis=0) instead of A + B. This transitional warning will be dropped in the future.

op_type, op_type, EXPRESSION_MAP[method_name]))

2021-01-05 20:57:14,933-INFO: If regularizer of a Parameter has been set by 'fluid.ParamAttr' or 'fluid.WeightNormParamAttr' already. The Regularization[L2Decay, regularization_coeff=0.000100] in Optimizer will not take effect, and it will only be applied to other Parameters!

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/math_op_patch.py:297: UserWarning: /home/aistudio/PaddleDetection/ppdet/modeling/roi_heads/cascade_head.py:245

The behavior of expression A + B has been unified with elementwise_add(X, Y, axis=-1) from Paddle 2.0. If your code works well in the older versions but crashes in this version, try to use elementwise_add(X, Y, axis=0) instead of A + B. This transitional warning will be dropped in the future.

op_type, op_type, EXPRESSION_MAP[method_name]))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/math_op_patch.py:297: UserWarning: /home/aistudio/PaddleDetection/ppdet/modeling/roi_heads/cascade_head.py:249

The behavior of expression A + B has been unified with elementwise_add(X, Y, axis=-1) from Paddle 2.0. If your code works well in the older versions but crashes in this version, try to use elementwise_add(X, Y, axis=0) instead of A + B. This transitional warning will be dropped in the future.

op_type, op_type, EXPRESSION_MAP[method_name]))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/math_op_patch.py:297: UserWarning: /home/aistudio/PaddleDetection/ppdet/modeling/roi_heads/cascade_head.py:255

The behavior of expression A / B has been unified with elementwise_div(X, Y, axis=-1) from Paddle 2.0. If your code works well in the older versions but crashes in this version, try to use elementwise_div(X, Y, axis=0) instead of A / B. This transitional warning will be dropped in the future.

op_type, op_type, EXPRESSION_MAP[method_name]))

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/layers/nn.py:13362: DeprecationWarning: inspect.getargspec() is deprecated since Python 3.0, use inspect.signature() or inspect.getfullargspec()

args = inspect.getargspec(self._func)

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/matplotlib/__init__.py:107: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

from collections import MutableMapping

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/matplotlib/colors.py:53: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

from collections import Sized

loading annotations into memory...

Done (t=0.01s)

creating index...

index created!

W0105 20:57:17.060937 1092 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.0, Runtime API Version: 10.1

W0105 20:57:17.135076 1092 device_context.cc:330] device: 0, cuDNN Version: 7.6.

2021-01-05 20:57:21,404-INFO: Downloading ResNet101_vd_pretrained.tar from https://paddle-imagenet-models-name.bj.bcebos.com/ResNet101_vd_pretrained.tar

100%|████████████████████████████████| 175040/175040 [00:02<00:00, 61135.79KB/s]

2021-01-05 20:57:24,826-INFO: Decompressing /home/aistudio/.cache/paddle/weights/ResNet101_vd_pretrained.tar...

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/fluid/io.py:2216: UserWarning: This list is not set, Because of Paramerter not found in program. There are: fc_0.b_0 fc_0.w_0

format(" ".join(unused_para_list)))

loading annotations into memory...

Done (t=0.04s)

creating index...

index created!

/home/aistudio/PaddleDetection/ppdet/data/reader.py:89: DeprecationWarning: Using or importing the ABCs from 'collections' instead of from 'collections.abc' is deprecated, and in 3.8 it will stop working

if isinstance(item, collections.Sequence) and len(item) == 0:

2021-01-05 20:57:27,623-INFO: iter: 0, lr: 0.000000, 'loss_cls_0': '2.453082', 'loss_loc_0': '0.000000', 'loss_cls_1': '1.257472', 'loss_loc_1': '0.000003', 'loss_cls_2': '0.568658', 'loss_loc_2': '0.000003', 'loss_rpn_cls': '0.689106', 'loss_rpn_bbox': '0.037696', 'loss': '5.006020', eta: 0:44:10, batch_cost: 0.08836 sec, ips: 45.26822 images/sec

2021-01-05 21:00:12,238-INFO: iter: 200, lr: 0.000050, 'loss_cls_0': '0.140615', 'loss_loc_0': '0.000022', 'loss_cls_1': '0.083600', 'loss_loc_1': '0.000143', 'loss_cls_2': '0.040301', 'loss_loc_2': '0.000162', 'loss_rpn_cls': '0.641392', 'loss_rpn_bbox': '0.098699', 'loss': '1.053611', eta: 6:50:00, batch_cost: 0.82552 sec, ips: 4.84544 images/sec

2021-01-05 21:03:09,020-INFO: iter: 400, lr: 0.000100, 'loss_cls_0': '0.030720', 'loss_loc_0': '0.001567', 'loss_cls_1': '0.022762', 'loss_loc_1': '0.000066', 'loss_cls_2': '0.014929', 'loss_loc_2': '0.000068', 'loss_rpn_cls': '0.180909', 'loss_rpn_bbox': '0.056140', 'loss': '0.312434', eta: 7:16:10, batch_cost: 0.88413 sec, ips: 4.52421 images/sec

2021-01-05 21:06:02,715-INFO: iter: 600, lr: 0.000150, 'loss_cls_0': '0.024992', 'loss_loc_0': '0.003722', 'loss_cls_1': '0.019258', 'loss_loc_1': '0.001492', 'loss_cls_2': '0.011919', 'loss_loc_2': '0.000084', 'loss_rpn_cls': '0.112500', 'loss_rpn_bbox': '0.043896', 'loss': '0.217339', eta: 7:06:32, batch_cost: 0.87050 sec, ips: 4.59509 images/sec

2021-01-05 21:08:59,673-INFO: iter: 800, lr: 0.000200, 'loss_cls_0': '0.026494', 'loss_loc_0': '0.007774', 'loss_cls_1': '0.016447', 'loss_loc_1': '0.002729', 'loss_cls_2': '0.010184', 'loss_loc_2': '0.000176', 'loss_rpn_cls': '0.094266', 'loss_rpn_bbox': '0.042120', 'loss': '0.224630', eta: 7:10:24, batch_cost: 0.88441 sec, ips: 4.52277 images/sec

2021-01-05 21:11:34,220-INFO: iter: 1000, lr: 0.000250, 'loss_cls_0': '0.030684', 'loss_loc_0': '0.009519', 'loss_cls_1': '0.017671', 'loss_loc_1': '0.004104', 'loss_cls_2': '0.010640', 'loss_loc_2': '0.000898', 'loss_rpn_cls': '0.076730', 'loss_rpn_bbox': '0.035291', 'loss': '0.190934', eta: 6:11:27, batch_cost: 0.76854 sec, ips: 5.20470 images/sec

2021-01-05 21:14:13,594-INFO: iter: 1200, lr: 0.000300, 'loss_cls_0': '0.026984', 'loss_loc_0': '0.008838', 'loss_cls_1': '0.016142', 'loss_loc_1': '0.003650', 'loss_cls_2': '0.009341', 'loss_loc_2': '0.000609', 'loss_rpn_cls': '0.068042', 'loss_rpn_bbox': '0.037983', 'loss': '0.184763', eta: 6:24:34, batch_cost: 0.80121 sec, ips: 4.99242 images/sec

2021-01-05 21:16:57,206-INFO: iter: 1400, lr: 0.000350, 'loss_cls_0': '0.036046', 'loss_loc_0': '0.013957', 'loss_cls_1': '0.019316', 'loss_loc_1': '0.006035', 'loss_cls_2': '0.010939', 'loss_loc_2': '0.001333', 'loss_rpn_cls': '0.061063', 'loss_rpn_bbox': '0.034824', 'loss': '0.199633', eta: 6:27:57, batch_cost: 0.81388 sec, ips: 4.91471 images/sec

2021-01-05 21:19:33,242-INFO: iter: 1600, lr: 0.000400, 'loss_cls_0': '0.032256', 'loss_loc_0': '0.014501', 'loss_cls_1': '0.020583', 'loss_loc_1': '0.007055', 'loss_cls_2': '0.011019', 'loss_loc_2': '0.001614', 'loss_rpn_cls': '0.062161', 'loss_rpn_bbox': '0.033353', 'loss': '0.190411', eta: 6:11:19, batch_cost: 0.78450 sec, ips: 5.09879 images/sec

2021-01-05 21:22:23,286-INFO: iter: 1800, lr: 0.000450, 'loss_cls_0': '0.032514', 'loss_loc_0': '0.018158', 'loss_cls_1': '0.018047', 'loss_loc_1': '0.009745', 'loss_cls_2': '0.010193', 'loss_loc_2': '0.002037', 'loss_rpn_cls': '0.053048', 'loss_rpn_bbox': '0.035240', 'loss': '0.196530', eta: 6:39:22, batch_cost: 0.84975 sec, ips: 4.70728 images/sec

2021-01-05 21:24:59,835-INFO: iter: 2000, lr: 0.000500, 'loss_cls_0': '0.043271', 'loss_loc_0': '0.021829', 'loss_cls_1': '0.021004', 'loss_loc_1': '0.010664', 'loss_cls_2': '0.012062', 'loss_loc_2': '0.002792', 'loss_rpn_cls': '0.048725', 'loss_rpn_bbox': '0.031871', 'loss': '0.202684', eta: 6:05:18, batch_cost: 0.78281 sec, ips: 5.10982 images/sec

2021-01-05 21:27:41,834-INFO: iter: 2200, lr: 0.000500, 'loss_cls_0': '0.041003', 'loss_loc_0': '0.021591', 'loss_cls_1': '0.020952', 'loss_loc_1': '0.011292', 'loss_cls_2': '0.011224', 'loss_loc_2': '0.002525', 'loss_rpn_cls': '0.043570', 'loss_rpn_bbox': '0.028753', 'loss': '0.197997', eta: 6:15:16, batch_cost: 0.80994 sec, ips: 4.93861 images/sec

2021-01-05 21:30:27,211-INFO: iter: 2400, lr: 0.000500, 'loss_cls_0': '0.043053', 'loss_loc_0': '0.024386', 'loss_cls_1': '0.019802', 'loss_loc_1': '0.013453', 'loss_cls_2': '0.010550', 'loss_loc_2': '0.003711', 'loss_rpn_cls': '0.037526', 'loss_rpn_bbox': '0.031488', 'loss': '0.199871', eta: 6:20:28, batch_cost: 0.82711 sec, ips: 4.83613 images/sec

2021-01-05 21:32:59,545-INFO: iter: 2600, lr: 0.000500, 'loss_cls_0': '0.053189', 'loss_loc_0': '0.029826', 'loss_cls_1': '0.024508', 'loss_loc_1': '0.013675', 'loss_cls_2': '0.012944', 'loss_loc_2': '0.004038', 'loss_rpn_cls': '0.038670', 'loss_rpn_bbox': '0.031219', 'loss': '0.233869', eta: 5:47:50, batch_cost: 0.76170 sec, ips: 5.25140 images/sec

2021-01-05 21:35:42,920-INFO: iter: 2800, lr: 0.000500, 'loss_cls_0': '0.047952', 'loss_loc_0': '0.026338', 'loss_cls_1': '0.021230', 'loss_loc_1': '0.014309', 'loss_cls_2': '0.011081', 'loss_loc_2': '0.003688', 'loss_rpn_cls': '0.043751', 'loss_rpn_bbox': '0.031890', 'loss': '0.208616', eta: 6:07:34, batch_cost: 0.81082 sec, ips: 4.93326 images/sec

2021-01-05 21:38:28,805-INFO: iter: 3000, lr: 0.000500, 'loss_cls_0': '0.053722', 'loss_loc_0': '0.022755', 'loss_cls_1': '0.022325', 'loss_loc_1': '0.012091', 'loss_cls_2': '0.012037', 'loss_loc_2': '0.003324', 'loss_rpn_cls': '0.045868', 'loss_rpn_bbox': '0.034881', 'loss': '0.226761', eta: 6:15:58, batch_cost: 0.83551 sec, ips: 4.78747 images/sec

2021-01-05 21:41:21,359-INFO: iter: 3200, lr: 0.000500, 'loss_cls_0': '0.047701', 'loss_loc_0': '0.024353', 'loss_cls_1': '0.017759', 'loss_loc_1': '0.011699', 'loss_cls_2': '0.009760', 'loss_loc_2': '0.002277', 'loss_rpn_cls': '0.038088', 'loss_rpn_bbox': '0.030854', 'loss': '0.197340', eta: 6:22:42, batch_cost: 0.85680 sec, ips: 4.66851 images/sec

2021-01-05 21:44:13,735-INFO: iter: 3400, lr: 0.000500, 'loss_cls_0': '0.050315', 'loss_loc_0': '0.030603', 'loss_cls_1': '0.020508', 'loss_loc_1': '0.015659', 'loss_cls_2': '0.010529', 'loss_loc_2': '0.004211', 'loss_rpn_cls': '0.032069', 'loss_rpn_bbox': '0.027820', 'loss': '0.197400', eta: 6:21:24, batch_cost: 0.86034 sec, ips: 4.64934 images/sec

2021-01-05 21:46:56,238-INFO: iter: 3600, lr: 0.000500, 'loss_cls_0': '0.049907', 'loss_loc_0': '0.034421', 'loss_cls_1': '0.020692', 'loss_loc_1': '0.017621', 'loss_cls_2': '0.010607', 'loss_loc_2': '0.004791', 'loss_rpn_cls': '0.029823', 'loss_rpn_bbox': '0.023192', 'loss': '0.210793', eta: 6:00:39, batch_cost: 0.81969 sec, ips: 4.87987 images/sec

2021-01-05 21:49:36,743-INFO: iter: 3800, lr: 0.000500, 'loss_cls_0': '0.057379', 'loss_loc_0': '0.037542', 'loss_cls_1': '0.023396', 'loss_loc_1': '0.019874', 'loss_cls_2': '0.010865', 'loss_loc_2': '0.005763', 'loss_rpn_cls': '0.031163', 'loss_rpn_bbox': '0.025450', 'loss': '0.222307', eta: 5:50:30, batch_cost: 0.80270 sec, ips: 4.98318 images/sec

2021-01-05 21:52:21,032-INFO: iter: 4000, lr: 0.000500, 'loss_cls_0': '0.056744', 'loss_loc_0': '0.039169', 'loss_cls_1': '0.023172', 'loss_loc_1': '0.021154', 'loss_cls_2': '0.010635', 'loss_loc_2': '0.005901', 'loss_rpn_cls': '0.028357', 'loss_rpn_bbox': '0.024548', 'loss': '0.224867', eta: 5:55:45, batch_cost: 0.82098 sec, ips: 4.87224 images/sec

2021-01-05 21:55:07,256-INFO: iter: 4200, lr: 0.000500, 'loss_cls_0': '0.055949', 'loss_loc_0': '0.038298', 'loss_cls_1': '0.024296', 'loss_loc_1': '0.024089', 'loss_cls_2': '0.010945', 'loss_loc_2': '0.007038', 'loss_rpn_cls': '0.027430', 'loss_rpn_bbox': '0.025825', 'loss': '0.228521', eta: 5:56:45, batch_cost: 0.82967 sec, ips: 4.82118 images/sec

2021-01-05 21:57:53,794-INFO: iter: 4400, lr: 0.000500, 'loss_cls_0': '0.058873', 'loss_loc_0': '0.039653', 'loss_cls_1': '0.027211', 'loss_loc_1': '0.028895', 'loss_cls_2': '0.011239', 'loss_loc_2': '0.008330', 'loss_rpn_cls': '0.023555', 'loss_rpn_bbox': '0.023535', 'loss': '0.239780', eta: 5:56:02, batch_cost: 0.83445 sec, ips: 4.79355 images/sec

2021-01-05 22:00:44,533-INFO: iter: 4600, lr: 0.000500, 'loss_cls_0': '0.061847', 'loss_loc_0': '0.042509', 'loss_cls_1': '0.030613', 'loss_loc_1': '0.035833', 'loss_cls_2': '0.012384', 'loss_loc_2': '0.009080', 'loss_rpn_cls': '0.022916', 'loss_rpn_bbox': '0.023494', 'loss': '0.259332', eta: 6:01:26, batch_cost: 0.85381 sec, ips: 4.68490 images/sec

2021-01-05 22:03:24,879-INFO: iter: 4800, lr: 0.000500, 'loss_cls_0': '0.063371', 'loss_loc_0': '0.040130', 'loss_cls_1': '0.030213', 'loss_loc_1': '0.033407', 'loss_cls_2': '0.012849', 'loss_loc_2': '0.009641', 'loss_rpn_cls': '0.022259', 'loss_rpn_bbox': '0.024018', 'loss': '0.239246', eta: 5:35:08, batch_cost: 0.79796 sec, ips: 5.01281 images/sec

2021-01-05 22:06:15,639-INFO: iter: 5000, lr: 0.000500, 'loss_cls_0': '0.051159', 'loss_loc_0': '0.036952', 'loss_cls_1': '0.026734', 'loss_loc_1': '0.032490', 'loss_cls_2': '0.011440', 'loss_loc_2': '0.010483', 'loss_rpn_cls': '0.023847', 'loss_rpn_bbox': '0.023062', 'loss': '0.228851', eta: 5:56:24, batch_cost: 0.85540 sec, ips: 4.67618 images/sec

2021-01-05 22:06:15,639-INFO: Save model to output/cascade_rcnn_cls_aware_r101_vd_fpn_1x_softnms/5000.

2021-01-05 22:06:37,550-INFO: Test iter 0

2021-01-05 22:06:46,237-INFO: Test iter 100

2021-01-05 22:06:54,671-INFO: Test iter 200

2021-01-05 22:07:03,071-INFO: Test iter 300

2021-01-05 22:07:11,466-INFO: Test iter 400

2021-01-05 22:07:19,898-INFO: Test iter 500

2021-01-05 22:07:29,319-INFO: Test iter 600

2021-01-05 22:08:14,591-INFO: Test iter 700

2021-01-05 22:08:57,124-INFO: Test iter 800

2021-01-05 22:09:40,921-INFO: Test iter 900

2021-01-05 22:10:23,589-INFO: Test iter 1000

2021-01-05 22:10:26,063-INFO: Test finish iter 1007

2021-01-05 22:10:26,063-INFO: Total number of images: 1007, inference time: 4.399583983754978 fps.

loading annotations into memory...

Done (t=0.01s)

creating index...

index created!

2021-01-05 22:10:27,767-INFO: Start evaluate...

Loading and preparing results...

DONE (t=0.95s)

creating index...

index created!

:6: DeprecationWarning: object of type cannot be safely interpreted as an integer.

Running per image evaluation...

Evaluate annotation type *bbox*

DONE (t=2.70s).

Accumulating evaluation results...

DONE (t=1.07s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.169

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.319

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.158

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.052

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.091

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.160

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.209

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.269

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.284

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.177

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.205

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.273

2021-01-05 22:10:32,699-INFO: Save model to output/cascade_rcnn_cls_aware_r101_vd_fpn_1x_softnms/best_model.

2021-01-05 22:10:52,459-INFO: Best test box ap: 0.16946797762716523, in iter: 5000
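The `lr` column in the log above rises by 0.000050 every 200 iterations until it reaches 0.000500 at iteration 2000, then stays constant. A minimal sketch of a linear warmup that reproduces those logged values follows. The base rate of 0.0005, the 2000 warmup steps, and the start factor of 0 are inferred from the log, not read from the config file, and `warmup_lr` is a hypothetical helper, not a PaddleDetection function.

```python
def warmup_lr(iteration, base_lr=0.0005, warmup_steps=2000, start_factor=0.0):
    """Linear warmup to base_lr, then constant (any later decay is ignored)."""
    if iteration >= warmup_steps:
        return base_lr
    alpha = iteration / warmup_steps
    # Interpolate the multiplier from start_factor up to 1.0.
    factor = start_factor * (1.0 - alpha) + alpha
    return base_lr * factor


# Print in the same "iter / lr" shape as the training log for easy comparison.
for it in (0, 200, 400, 1000, 2000, 5000):
    print(f"iter: {it}, lr: {warmup_lr(it):.6f}")
```

Comparing the printed values with the log is a quick way to confirm that a learning-rate override actually took effect.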

2021-01-05 22:13:26,510-INFO: iter: 5200, lr: 0.000500, 'loss_cls_0': '0.054198', 'loss_loc_0': '0.041386', 'loss_cls_1': '0.029086', 'loss_loc_1': '0.035541', 'loss_cls_2': '0.012477', 'loss_loc_2': '0.012471', 'loss_rpn_cls': '0.019937', 'loss_rpn_bbox': '0.024147', 'loss': '0.253841', eta: 14:51:18, batch_cost: 2.15637 sec, ips: 1.85497 images/sec

2021-01-05 22:16:09,620-INFO: iter: 5400, lr: 0.000500, 'loss_cls_0': '0.065278', 'loss_loc_0': '0.044940', 'loss_cls_1': '0.032158', 'loss_loc_1': '0.040234', 'loss_cls_2': '0.013711', 'loss_loc_2': '0.013033', 'loss_rpn_cls': '0.020986', 'loss_rpn_bbox': '0.022511', 'loss': '0.261794', eta: 5:32:45, batch_cost: 0.81162 sec, ips: 4.92840 images/sec

2021-01-05 22:18:45,766-INFO: iter: 5600, lr: 0.000500, 'loss_cls_0': '0.057673', 'loss_loc_0': '0.040482', 'loss_cls_1': '0.030845', 'loss_loc_1': '0.041537', 'loss_cls_2': '0.013042', 'loss_loc_2': '0.014510', 'loss_rpn_cls': '0.018892', 'loss_rpn_bbox': '0.021275', 'loss': '0.248344', eta: 5:19:06, batch_cost: 0.78468 sec, ips: 5.09760 images/sec

2021-01-05 22:21:27,442-INFO: iter: 5800, lr: 0.000500, 'loss_cls_0': '0.062059', 'loss_loc_0': '0.043988', 'loss_cls_1': '0.034767', 'loss_loc_1': '0.046085', 'loss_cls_2': '0.014820', 'loss_loc_2': '0.016614', 'loss_rpn_cls': '0.020042', 'loss_rpn_bbox': '0.024015', 'loss': '0.276166', eta: 5:26:05, batch_cost: 0.80849 sec, ips: 4.94749 images/sec

2021-01-05 22:24:12,662-INFO: iter: 6000, lr: 0.000500, 'loss_cls_0': '0.062730', 'loss_loc_0': '0.041818', 'loss_cls_1': '0.035358', 'loss_loc_1': '0.045083', 'loss_cls_2': '0.014346', 'loss_loc_2': '0.017037', 'loss_rpn_cls': '0.019274', 'loss_rpn_bbox': '0.020919', 'loss': '0.270917', eta: 5:30:29, batch_cost: 0.82622 sec, ips: 4.84132 images/sec

2021-01-05 22:26:58,390-INFO: iter: 6200, lr: 0.000500, 'loss_cls_0': '0.060976', 'loss_loc_0': '0.044309', 'loss_cls_1': '0.034077', 'loss_loc_1': '0.045225', 'loss_cls_2': '0.015392', 'loss_loc_2': '0.018923', 'loss_rpn_cls': '0.019982', 'loss_rpn_bbox': '0.021688', 'loss': '0.274153', eta: 5:28:36, batch_cost: 0.82844 sec, ips: 4.82833 images/sec

2021-01-05 22:29:46,424-INFO: iter: 6400, lr: 0.000500, 'loss_cls_0': '0.053250', 'loss_loc_0': '0.039203', 'loss_cls_1': '0.032501', 'loss_loc_1': '0.041819', 'loss_cls_2': '0.014239', 'loss_loc_2': '0.016649', 'loss_rpn_cls': '0.019580', 'loss_rpn_bbox': '0.022341', 'loss': '0.252671', eta: 5:30:26, batch_cost: 0.84010 sec, ips: 4.76133 images/sec

2021-01-05 22:32:26,026-INFO: iter: 6600, lr: 0.000500, 'loss_cls_0': '0.063607', 'loss_loc_0': '0.043990', 'loss_cls_1': '0.037503', 'loss_loc_1': '0.050593', 'loss_cls_2': '0.016669', 'loss_loc_2': '0.022681', 'loss_rpn_cls': '0.016060', 'loss_rpn_bbox': '0.021256', 'loss': '0.286975', eta: 5:11:12, batch_cost: 0.79799 sec, ips: 5.01261 images/sec

2021-01-05 22:35:11,505-INFO: iter: 6800, lr: 0.000500, 'loss_cls_0': '0.064951', 'loss_loc_0': '0.046994', 'loss_cls_1': '0.038398', 'loss_loc_1': '0.056318', 'loss_cls_2': '0.018047', 'loss_loc_2': '0.025666', 'loss_rpn_cls': '0.016604', 'loss_rpn_bbox': '0.019919', 'loss': '0.296968', eta: 5:19:16, batch_cost: 0.82569 sec, ips: 4.84442 images/sec

2021-01-05 22:37:53,025-INFO: iter: 7000, lr: 0.000500, 'loss_cls_0': '0.073776', 'loss_loc_0': '0.041866', 'loss_cls_1': '0.033220', 'loss_loc_1': '0.039030', 'loss_cls_2': '0.016223', 'loss_loc_2': '0.014920', 'loss_rpn_cls': '0.023379', 'loss_rpn_bbox': '0.029821', 'loss': '0.302102', eta: 5:10:15, batch_cost: 0.80936 sec, ips: 4.94215 images/sec

2021-01-05 22:40:32,263-INFO: iter: 7200, lr: 0.000500, 'loss_cls_0': '0.079483', 'loss_loc_0': '0.041692', 'loss_cls_1': '0.032414', 'loss_loc_1': '0.038257', 'loss_cls_2': '0.016636', 'loss_loc_2': '0.020715', 'loss_rpn_cls': '0.020567', 'loss_rpn_bbox': '0.025319', 'loss': '0.290566', eta: 5:01:12, batch_cost: 0.79264 sec, ips: 5.04640 images/sec

2021-01-05 22:43:18,123-INFO: iter: 7400, lr: 0.000500, 'loss_cls_0': '0.062858', 'loss_loc_0': '0.043024', 'loss_cls_1': '0.033079', 'loss_loc_1': '0.045922', 'loss_cls_2': '0.016507', 'loss_loc_2': '0.021034', 'loss_rpn_cls': '0.016285', 'loss_rpn_bbox': '0.020960', 'loss': '0.286527', eta: 5:13:41, batch_cost: 0.83280 sec, ips: 4.80310 images/sec

2021-01-05 22:46:00,214-INFO: iter: 7600, lr: 0.000500, 'loss_cls_0': '0.066365', 'loss_loc_0': '0.048007', 'loss_cls_1': '0.039830', 'loss_loc_1': '0.050740', 'loss_cls_2': '0.018847', 'loss_loc_2': '0.024220', 'loss_rpn_cls': '0.018933', 'loss_rpn_bbox': '0.018931', 'loss': '0.316787', eta: 5:02:38, batch_cost: 0.81063 sec, ips: 4.93446 images/sec

2021-01-05 22:48:36,822-INFO: iter: 7800, lr: 0.000500, 'loss_cls_0': '0.063402', 'loss_loc_0': '0.043144', 'loss_cls_1': '0.036604', 'loss_loc_1': '0.050970', 'loss_cls_2': '0.017042', 'loss_loc_2': '0.025316', 'loss_rpn_cls': '0.018096', 'loss_rpn_bbox': '0.019829', 'loss': '0.291184', eta: 4:48:02, batch_cost: 0.77849 sec, ips: 5.13817 images/sec

2021-01-05 22:51:24,624-INFO: iter: 8000, lr: 0.000500, 'loss_cls_0': '0.065256', 'loss_loc_0': '0.046761', 'loss_cls_1': '0.037826', 'loss_loc_1': '0.051794', 'loss_cls_2': '0.019198', 'loss_loc_2': '0.026519', 'loss_rpn_cls': '0.017717', 'loss_rpn_bbox': '0.019827', 'loss': '0.298254', eta: 5:09:19, batch_cost: 0.84360 sec, ips: 4.74156 images/sec

2021-01-05 22:54:04,868-INFO: iter: 8200, lr: 0.000500, 'loss_cls_0': '0.064572', 'loss_loc_0': '0.045726', 'loss_cls_1': '0.040799', 'loss_loc_1': '0.060172', 'loss_cls_2': '0.020329', 'loss_loc_2': '0.033177', 'loss_rpn_cls': '0.015725', 'loss_rpn_bbox': '0.016582', 'loss': '0.308055', eta: 4:51:08, batch_cost: 0.80133 sec, ips: 4.99171 images/sec

2021-01-05 22:56:49,353-INFO: iter: 8400, lr: 0.000500, 'loss_cls_0': '0.067996', 'loss_loc_0': '0.046675', 'loss_cls_1': '0.042896', 'loss_loc_1': '0.062049', 'loss_cls_2': '0.022289', 'loss_loc_2': '0.033982', 'loss_rpn_cls': '0.014479', 'loss_rpn_bbox': '0.019303', 'loss': '0.331155', eta: 4:56:00, batch_cost: 0.82223 sec, ips: 4.86483 images/sec

2021-01-05 22:59:22,608-INFO: iter: 8600, lr: 0.000500, 'loss_cls_0': '0.062519', 'loss_loc_0': '0.044342', 'loss_cls_1': '0.037895', 'loss_loc_1': '0.058148', 'loss_cls_2': '0.020016', 'loss_loc_2': '0.033121', 'loss_rpn_cls': '0.015533', 'loss_rpn_bbox': '0.018067', 'loss': '0.309335', eta: 4:33:20, batch_cost: 0.76639 sec, ips: 5.21930 images/sec

2021-01-05 23:02:04,273-INFO: iter: 8800, lr: 0.000500, 'loss_cls_0': '0.064332', 'loss_loc_0': '0.046233', 'loss_cls_1': '0.041988', 'loss_loc_1': '0.058609', 'loss_cls_2': '0.021166', 'loss_loc_2': '0.032041', 'loss_rpn_cls': '0.014322', 'loss_rpn_bbox': '0.020238', 'loss': '0.321560', eta: 4:45:40, batch_cost: 0.80850 sec, ips: 4.94743 images/sec

2021-01-05 23:04:50,075-INFO: iter: 9000, lr: 0.000500, 'loss_cls_0': '0.062631', 'loss_loc_0': '0.045678', 'loss_cls_1': '0.040696', 'loss_loc_1': '0.062479', 'loss_cls_2': '0.020717', 'loss_loc_2': '0.033202', 'loss_rpn_cls': '0.014819', 'loss_rpn_bbox': '0.020391', 'loss': '0.306398', eta: 4:50:03, batch_cost: 0.82875 sec, ips: 4.82657 images/sec

2021-01-05 23:07:35,760-INFO: iter: 9200, lr: 0.000500, 'loss_cls_0': '0.065762', 'loss_loc_0': '0.048450', 'loss_cls_1': '0.042558', 'loss_loc_1': '0.064597', 'loss_cls_2': '0.022751', 'loss_loc_2': '0.037364', 'loss_rpn_cls': '0.014959', 'loss_rpn_bbox': '0.019045', 'loss': '0.333959', eta: 4:47:09, batch_cost: 0.82836 sec, ips: 4.82884 images/sec

2021-01-05 23:10:19,010-INFO: iter: 9400, lr: 0.000500, 'loss_cls_0': '0.067505', 'loss_loc_0': '0.049369', 'loss_cls_1': '0.044104', 'loss_loc_1': '0.068064', 'loss_cls_2': '0.023161', 'loss_loc_2': '0.037313', 'loss_rpn_cls': '0.013166', 'loss_rpn_bbox': '0.015955', 'loss': '0.344013', eta: 4:40:12, batch_cost: 0.81612 sec, ips: 4.90126 images/sec

2021-01-05 23:12:54,915-INFO: iter: 9600, lr: 0.000500, 'loss_cls_0': '0.063142', 'loss_loc_0': '0.048759', 'loss_cls_1': '0.043983', 'loss_loc_1': '0.066892', 'loss_cls_2': '0.021171', 'loss_loc_2': '0.035976', 'loss_rpn_cls': '0.013273', 'loss_rpn_bbox': '0.020711', 'loss': '0.335024', eta: 4:25:13, batch_cost: 0.78009 sec, ips: 5.12763 images/sec

2021-01-05 23:15:43,873-INFO: iter: 9800, lr: 0.000500, 'loss_cls_0': '0.062264', 'loss_loc_0': '0.046465', 'loss_cls_1': '0.040825', 'loss_loc_1': '0.061097', 'loss_cls_2': '0.021357', 'loss_loc_2': '0.034278', 'loss_rpn_cls': '0.018188', 'loss_rpn_bbox': '0.022862', 'loss': '0.337288', eta: 4:44:13, batch_cost: 0.84422 sec, ips: 4.73809 images/sec

2021-01-05 23:18:12,544-INFO: iter: 10000, lr: 0.000500, 'loss_cls_0': '0.068592', 'loss_loc_0': '0.045686', 'loss_cls_1': '0.041851', 'loss_loc_1': '0.056290', 'loss_cls_2': '0.021169', 'loss_loc_2': '0.034735', 'loss_rpn_cls': '0.024130', 'loss_rpn_bbox': '0.022144', 'loss': '0.348690', eta: 4:07:15, batch_cost: 0.74179 sec, ips: 5.39233 images/sec

2021-01-05 23:18:12,545-INFO: Save model to output/cascade_rcnn_cls_aware_r101_vd_fpn_1x_softnms/10000.

2021-01-05 23:18:32,878-INFO: Test iter 0

2021-01-05 23:18:42,559-INFO: Test iter 100

2021-01-05 23:18:51,977-INFO: Test iter 200

2021-01-05 23:19:01,319-INFO: Test iter 300

2021-01-05 23:19:10,298-INFO: Test iter 400

2021-01-05 23:19:19,694-INFO: Test iter 500

2021-01-05 23:19:30,167-INFO: Test iter 600

2021-01-05 23:20:12,973-INFO: Test iter 700

2021-01-05 23:20:56,846-INFO: Test iter 800

2021-01-05 23:21:41,043-INFO: Test iter 900

2021-01-05 23:22:24,846-INFO: Test iter 1000

2021-01-05 23:22:27,424-INFO: Test finish iter 1007

2021-01-05 23:22:27,424-INFO: Total number of images: 1007, inference time: 4.291148035091142 fps.

loading annotations into memory...

Done (t=0.01s)

creating index...

index created!

2021-01-05 23:22:30,228-INFO: Start evaluate...

Loading and preparing results...

DONE (t=1.66s)

creating index...

index created!

Running per image evaluation...

Evaluate annotation type *bbox*

DONE (t=4.36s).

Accumulating evaluation results...

DONE (t=1.82s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.182

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.349

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.179

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.109

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.122

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.153

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.215

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.283

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.293

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.249

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.235

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.247

2021-01-05 23:22:38,453-INFO: Save model to output/cascade_rcnn_cls_aware_r101_vd_fpn_1x_softnms/best_model.

2021-01-05 23:23:14,508-INFO: Best test box ap: 0.18210595131337717, in iter: 10000
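The training lines above follow a regular pattern, so they can be turned into numbers for plotting or comparison when VisualDL is not at hand. The following is a minimal sketch using only the standard library. The regular expressions are written against the line format shown in this log and are an assumption about its stability across versions. `parse_train_line` and `parse_best_ap` are hypothetical helper names.

```python
import re

# Patterns derived from the log lines shown in this notebook (an assumption).
LINE_RE = re.compile(r"iter: (\d+), lr: ([\d.]+), (.*), eta: ")
PAIR_RE = re.compile(r"'(\w+)': '([\d.]+)'")
BEST_RE = re.compile(r"Best test box ap: ([\d.]+), in iter: (\d+)")


def parse_train_line(line):
    """Return a dict with iter, lr and every loss term, or None if no match."""
    m = LINE_RE.search(line)
    if m is None:
        return None
    record = {"iter": int(m.group(1)), "lr": float(m.group(2))}
    for key, value in PAIR_RE.findall(m.group(3)):
        record[key] = float(value)
    return record


def parse_best_ap(line):
    """Return (iteration, box AP) from a 'Best test box ap' line, or None."""
    m = BEST_RE.search(line)
    if m is None:
        return None
    return int(m.group(2)), float(m.group(1))


# Two lines copied from the log above.
train_line = (
    "2021-01-05 22:06:15,639-INFO: iter: 5000, lr: 0.000500, "
    "'loss_cls_0': '0.051159', 'loss_loc_0': '0.036952', "
    "'loss_cls_1': '0.026734', 'loss_loc_1': '0.032490', "
    "'loss_cls_2': '0.011440', 'loss_loc_2': '0.010483', "
    "'loss_rpn_cls': '0.023847', 'loss_rpn_bbox': '0.023062', "
    "'loss': '0.228851', eta: 5:56:24, batch_cost: 0.85540 sec, "
    "ips: 4.67618 images/sec"
)
best_line = ("2021-01-05 23:23:14,508-INFO: Best test box ap: "
             "0.18210595131337717, in iter: 10000")

record = parse_train_line(train_line)
best = parse_best_ap(best_line)
print(record["iter"], record["loss"])
print(best)
```

Applied to a saved copy of the full log, the same helpers give one record per 200 iterations and one (iteration, AP) pair per evaluation.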

2021-01-05 23:25:50,456-INFO: iter: 10200, lr: 0.000500, 'loss_cls_0': '0.067151', 'loss_loc_0': '0.044671', 'loss_cls_1': '0.035398', 'loss_loc_1': '0.045706', 'loss_cls_2': '0.018710', 'loss_loc_2': '0.026910', 'loss_rpn_cls': '0.021107', 'loss_rpn_bbox': '0.023018', 'loss': '0.306695', eta: 12:35:50, batch_cost: 2.29043 sec, ips: 1.74640 images/sec

2021-01-05 23:28:37,358-INFO: iter: 10400, lr: 0.000500, 'loss_cls_0': '0.062583', 'loss_loc_0': '0.044021', 'loss_cls_1': '0.038340', 'loss_loc_1': '0.060625', 'loss_cls_2': '0.019099', 'loss_loc_2': '0.034739', 'loss_rpn_cls': '0.017975', 'loss_rpn_bbox': '0.019496', 'loss': '0.312906', eta: 4:32:48, batch_cost: 0.83514 sec, ips: 4.78961 images/sec

2021-01-05 23:31:20,758-INFO: iter: 10600, lr: 0.000500, 'loss_cls_0': '0.067678', 'loss_loc_0': '0.046534', 'loss_cls_1': '0.039705', 'loss_loc_1': '0.063273', 'loss_cls_2': '0.020378', 'loss_loc_2': '0.034880', 'loss_rpn_cls': '0.016508', 'loss_rpn_bbox': '0.019341', 'loss': '0.338241', eta: 4:24:12, batch_cost: 0.81712 sec, ips: 4.89527 images/sec

2021-01-05 23:34:14,119-INFO: iter: 10800, lr: 0.000500, 'loss_cls_0': '0.064181', 'loss_loc_0': '0.048763', 'loss_cls_1': '0.040945', 'loss_loc_1': '0.063098', 'loss_cls_2': '0.022183', 'loss_loc_2': '0.041388', 'loss_rpn_cls': '0.014780', 'loss_rpn_bbox': '0.018421', 'loss': '0.335949', eta: 4:34:48, batch_cost: 0.85880 sec, ips: 4.65767 images/sec

2021-01-05 23:36:52,551-INFO: iter: 11000, lr: 0.000500, 'loss_cls_0': '0.064664', 'loss_loc_0': '0.048685', 'loss_cls_1': '0.042219', 'loss_loc_1': '0.072353', 'loss_cls_2': '0.021658', 'loss_loc_2': '0.040372', 'loss_rpn_cls': '0.016160', 'loss_rpn_bbox': '0.016732', 'loss': '0.329565', eta: 4:13:21, batch_cost: 0.80006 sec, ips: 4.99962 images/sec

2021-01-05 23:39:39,306-INFO: iter: 11200, lr: 0.000500, 'loss_cls_0': '0.069030', 'loss_loc_0': '0.052823', 'loss_cls_1': '0.043170', 'loss_loc_1': '0.069212', 'loss_cls_2': '0.022755', 'loss_loc_2': '0.042808', 'loss_rpn_cls': '0.014426', 'loss_rpn_bbox': '0.015931', 'loss': '0.363643', eta: 4:21:04, batch_cost: 0.83319 sec, ips: 4.80080 images/sec

2021-01-05 23:42:23,095-INFO: iter: 11400, lr: 0.000500, 'loss_cls_0': '0.064709', 'loss_loc_0': '0.043366', 'loss_cls_1': '0.038690', 'loss_loc_1': '0.051932', 'loss_cls_2': '0.020410', 'loss_loc_2': '0.026836', 'loss_rpn_cls': '0.032153', 'loss_rpn_bbox': '0.026254', 'loss': '0.333374', eta: 4:14:10, batch_cost: 0.81990 sec, ips: 4.87863 images/sec

2021-01-05 23:44:59,455-INFO: iter: 11600, lr: 0.000500, 'loss_cls_0': '0.069346', 'loss_loc_0': '0.049519', 'loss_cls_1': '0.042725', 'loss_loc_1': '0.065981', 'loss_cls_2': '0.021750', 'loss_loc_2': '0.035443', 'loss_rpn_cls': '0.020221', 'loss_rpn_bbox': '0.020773', 'loss': '0.334121', eta: 3:59:38, batch_cost: 0.78146 sec, ips: 5.11865 images/sec

2021-01-05 23:47:43,903-INFO: iter: 11800, lr: 0.000500, 'loss_cls_0': '0.067058', 'loss_loc_0': '0.047519', 'loss_cls_1': '0.042720', 'loss_loc_1': '0.066260', 'loss_cls_2': '0.021873', 'loss_loc_2': '0.040856', 'loss_rpn_cls': '0.015860', 'loss_rpn_bbox': '0.020680', 'loss': '0.340207', eta: 4:09:25, batch_cost: 0.82229 sec, ips: 4.86446 images/sec

2021-01-05 23:50:37,185-INFO: iter: 12000, lr: 0.000500, 'loss_cls_0': '0.062646', 'loss_loc_0': '0.049676', 'loss_cls_1': '0.044488', 'loss_loc_1': '0.070180', 'loss_cls_2': '0.022801', 'loss_loc_2': '0.040405', 'loss_rpn_cls': '0.016876', 'loss_rpn_bbox': '0.021076', 'loss': '0.346093', eta: 4:19:48, batch_cost: 0.86605 sec, ips: 4.61869 images/sec

2021-01-05 23:53:25,579-INFO: iter: 12200, lr: 0.000500, 'loss_cls_0': '0.063689', 'loss_loc_0': '0.050207', 'loss_cls_1': '0.042780', 'loss_loc_1': '0.073127', 'loss_cls_2': '0.022725', 'loss_loc_2': '0.044891', 'loss_rpn_cls': '0.017205', 'loss_rpn_bbox': '0.019897', 'loss': '0.359676', eta: 4:09:58, batch_cost: 0.84262 sec, ips: 4.74712 images/sec

2021-01-05 23:56:05,269-INFO: iter: 12400, lr: 0.000500, 'loss_cls_0': '0.067415', 'loss_loc_0': '0.045460', 'loss_cls_1': '0.046120', 'loss_loc_1': '0.070760', 'loss_cls_2': '0.025582', 'loss_loc_2': '0.044430', 'loss_rpn_cls': '0.016760', 'loss_rpn_bbox': '0.019427', 'loss': '0.360593', eta: 3:51:16, batch_cost: 0.78842 sec, ips: 5.07343 images/sec

2021-01-05 23:58:56,848-INFO: iter: 12600, lr: 0.000500, 'loss_cls_0': '0.061238', 'loss_loc_0': '0.022800', 'loss_cls_1': '0.029173', 'loss_loc_1': '0.010960', 'loss_cls_2': '0.016174', 'loss_loc_2': '0.005824', 'loss_rpn_cls': '0.078040', 'loss_rpn_bbox': '0.035954', 'loss': '0.332935', eta: 4:11:37, batch_cost: 0.86767 sec, ips: 4.61006 images/sec

2021-01-06 00:01:35,524-INFO: iter: 12800, lr: 0.000500, 'loss_cls_0': '0.064901', 'loss_loc_0': '0.027350', 'loss_cls_1': '0.022874', 'loss_loc_1': '0.012730', 'loss_cls_2': '0.012261', 'loss_loc_2': '0.005126', 'loss_rpn_cls': '0.042158', 'loss_rpn_bbox': '0.030900', 'loss': '0.223862', eta: 3:46:49, batch_cost: 0.79125 sec, ips: 5.05532 images/sec

2021-01-06 00:04:26,768-INFO: iter: 13000, lr: 0.000500, 'loss_cls_0': '0.064326', 'loss_loc_0': '0.040274', 'loss_cls_1': '0.025074', 'loss_loc_1': '0.024208', 'loss_cls_2': '0.013382', 'loss_loc_2': '0.010759', 'loss_rpn_cls': '0.024951', 'loss_rpn_bbox': '0.022420', 'loss': '0.240248', eta: 4:03:20, batch_cost: 0.85883 sec, ips: 4.65750 images/sec

2021-01-06 00:07:10,062-INFO: iter: 13200, lr: 0.000500, 'loss_cls_0': '0.072644', 'loss_loc_0': '0.050717', 'loss_cls_1': '0.031190', 'loss_loc_1': '0.038650', 'loss_cls_2': '0.018082', 'loss_loc_2': '0.026189', 'loss_rpn_cls': '0.016587', 'loss_rpn_bbox': '0.019350', 'loss': '0.296472', eta: 3:48:29, batch_cost: 0.81602 sec, ips: 4.90182 images/sec

2021-01-06 00:09:47,111-INFO: iter: 13400, lr: 0.000500, 'loss_cls_0': '0.072115', 'loss_loc_0': '0.050292', 'loss_cls_1': '0.035664', 'loss_loc_1': '0.053717', 'loss_cls_2': '0.020093', 'loss_loc_2': '0.035742', 'loss_rpn_cls': '0.015603', 'loss_rpn_bbox': '0.020776', 'loss': '0.326792', eta: 3:37:13, batch_cost: 0.78515 sec, ips: 5.09457 images/sec

2021-01-06 00:12:34,818-INFO: iter: 13600, lr: 0.000500, 'loss_cls_0': '0.070923', 'loss_loc_0': '0.049481', 'loss_cls_1': '0.036676', 'loss_loc_1': '0.060173', 'loss_cls_2': '0.021140', 'loss_loc_2': '0.036982', 'loss_rpn_cls': '0.016696', 'loss_rpn_bbox': '0.018729', 'loss': '0.329280', eta: 3:49:18, batch_cost: 0.83893 sec, ips: 4.76799 images/sec

2021-01-06 00:15:23,952-INFO: iter: 13800, lr: 0.000500, 'loss_cls_0': '0.066262', 'loss_loc_0': '0.049082', 'loss_cls_1': '0.039771', 'loss_loc_1': '0.056706', 'loss_cls_2': '0.021089', 'loss_loc_2': '0.036825', 'loss_rpn_cls': '0.014515', 'loss_rpn_bbox': '0.018457', 'loss': '0.332186', eta: 3:48:17, batch_cost: 0.84553 sec, ips: 4.73075 images/sec

2021-01-06 00:18:08,104-INFO: iter: 14000, lr: 0.000500, 'loss_cls_0': '0.072276', 'loss_loc_0': '0.052077', 'loss_cls_1': '0.044840', 'loss_loc_1': '0.070187', 'loss_cls_2': '0.025765', 'loss_loc_2': '0.045588', 'loss_rpn_cls': '0.016084', 'loss_rpn_bbox': '0.018939', 'loss': '0.375673', eta: 3:38:54, batch_cost: 0.82090 sec, ips: 4.87269 images/sec

2021-01-06 00:21:08,665-INFO: iter: 14200, lr: 0.000500, 'loss_cls_0': '0.076326', 'loss_loc_0': '0.052754', 'loss_cls_1': '0.044431', 'loss_loc_1': '0.069688', 'loss_cls_2': '0.025825', 'loss_loc_2': '0.044177', 'loss_rpn_cls': '0.015096', 'loss_rpn_bbox': '0.017612', 'loss': '0.382994', eta: 3:57:35, batch_cost: 0.90225 sec, ips: 4.43336 images/sec

2021-01-06 00:24:06,606-INFO: iter: 14400, lr: 0.000500, 'loss_cls_0': '0.072874', 'loss_loc_0': '0.050279', 'loss_cls_1': '0.046469', 'loss_loc_1': '0.071076', 'loss_cls_2': '0.025007', 'loss_loc_2': '0.046304', 'loss_rpn_cls': '0.011439', 'loss_rpn_bbox': '0.015747', 'loss': '0.367624', eta: 3:51:26, batch_cost: 0.89013 sec, ips: 4.49373 images/sec

2021-01-06 00:26:59,892-INFO: iter: 14600, lr: 0.000500, 'loss_cls_0': '0.066495', 'loss_loc_0': '0.051622', 'loss_cls_1': '0.042681', 'loss_loc_1': '0.072888', 'loss_cls_2': '0.024463', 'loss_loc_2': '0.048027', 'loss_rpn_cls': '0.012560', 'loss_rpn_bbox': '0.018051', 'loss': '0.343523', eta: 3:42:23, batch_cost: 0.86643 sec, ips: 4.61664 images/sec

2021-01-06 00:29:51,288-INFO: iter: 14800, lr: 0.000500, 'loss_cls_0': '0.071515', 'loss_loc_0': '0.053212', 'loss_cls_1': '0.043183', 'loss_loc_1': '0.072248', 'loss_cls_2': '0.024531', 'loss_loc_2': '0.047225', 'loss_rpn_cls': '0.013225', 'loss_rpn_bbox': '0.016982', 'loss': '0.348721', eta: 3:37:07, batch_cost: 0.85709 sec, ips: 4.66697 images/sec

2021-01-06 00:32:36,621-INFO: iter: 15000, lr: 0.000500, 'loss_cls_0': '0.070571', 'loss_loc_0': '0.049246', 'loss_cls_1': '0.045589', 'loss_loc_1': '0.072676', 'loss_cls_2': '0.025107', 'loss_loc_2': '0.048486', 'loss_rpn_cls': '0.013074', 'loss_rpn_bbox': '0.015932', 'loss': '0.379738', eta: 3:26:14, batch_cost: 0.82495 sec, ips: 4.84878 images/sec

2021-01-06 00:32:36,621-INFO: Save model to output/cascade_rcnn_cls_aware_r101_vd_fpn_1x_softnms/15000.

2021-01-06 00:32:56,975-INFO: Test iter 0

2021-01-06 00:33:05,701-INFO: Test iter 100

2021-01-06 00:33:14,292-INFO: Test iter 200

2021-01-06 00:33:22,707-INFO: Test iter 300

2021-01-06 00:33:31,037-INFO: Test iter 400

2021-01-06 00:33:39,479-INFO: Test iter 500

2021-01-06 00:33:49,083-INFO: Test iter 600

2021-01-06 00:34:32,669-INFO: Test iter 700

2021-01-06 00:35:16,346-INFO: Test iter 800

2021-01-06 00:36:01,073-INFO: Test iter 900

2021-01-06 00:36:46,427-INFO: Test iter 1000

2021-01-06 00:36:49,627-INFO: Test finish iter 1007

2021-01-06 00:36:49,627-INFO: Total number of images: 1007, inference time: 4.3263168012490025 fps.

loading annotations into memory...

Done (t=0.01s)

creating index...

index created!

2021-01-06 00:36:50,417-INFO: Start evaluate...

Loading and preparing results...

DONE (t=0.42s)

creating index...

index created!

Running per image evaluation...

Evaluate annotation type *bbox*

DONE (t=1.97s).

Accumulating evaluation results...

DONE (t=0.66s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.261

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.462

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.256

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.189

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.185

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.237

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.287

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.363

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.370

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.315

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.296

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.348

2021-01-06 00:36:53,609-INFO: Save model to output/cascade_rcnn_cls_aware_r101_vd_fpn_1x_softnms/best_model.

2021-01-06 00:37:29,535-INFO: Best test box ap: 0.2611445159639011, in iter: 15000
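
The training lines above are easier to compare once the iteration number and total loss are pulled out of the raw text. The following is a minimal sketch of one way to do that, assuming the log lines keep the exact format shown in this output. The names `LINE_RE` and `parse_train_log` are hypothetical helpers written for this illustration and are not part of PaddleDetection, and the sample line is abbreviated from the iter 15000 entry above.

```python
import re

# Assumed format, taken from the log lines in this output:
#   ...-INFO: iter: 15000, lr: 0.000500, ..., 'loss': '0.379738', eta: ...
# The pattern only matches the total 'loss' key, not 'loss_cls_0' and similar keys.
LINE_RE = re.compile(r"iter: (\d+), lr: ([\d.]+),.*'loss': '([\d.]+)'")


def parse_train_log(lines):
    """Return (iteration, learning_rate, total_loss) tuples for training lines.

    Lines that do not match (for example 'Test iter 0') are skipped.
    """
    records = []
    for line in lines:
        match = LINE_RE.search(line)
        if match:
            records.append(
                (int(match.group(1)), float(match.group(2)), float(match.group(3)))
            )
    return records


# Abbreviated sample lines copied from the log above.
sample = [
    "2021-01-06 00:32:36,621-INFO: iter: 15000, lr: 0.000500, "
    "'loss_cls_0': '0.070571', 'loss_rpn_bbox': '0.015932', "
    "'loss': '0.379738', eta: 3:26:14, batch_cost: 0.82495 sec",
    "2021-01-06 00:32:56,975-INFO: Test iter 0",
]
records = parse_train_log(sample)
print(records)
```

If the notebook output is saved to a text file, passing the open file object to `parse_train_log` should give one tuple per logged training step, which can then be plotted or scanned for the loss trend.
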

2021-01-06 00:40:04,188-INFO: iter: 15200, lr: 0.000500, 'loss_cls_0': '0.066876', 'loss_loc_0': '0.051639', 'loss_cls_1': '0.047556', 'loss_loc_1': '0.076481', 'loss_cls_2': '0.026373', 'loss_loc_2': '0.048205', 'loss_rpn_cls': '0.012475', 'loss_rpn_bbox': '0.016148', 'loss': '0.350458', eta: 9:11:52, batch_cost: 2.23731 sec, ips: 1.78786 images/sec

2021-01-06 00:42:46,245-INFO: iter: 15400, lr: 0.000500, 'loss_cls_0': '0.066060', 'loss_loc_0': '0.052031', 'loss_cls_1': '0.044632', 'loss_loc_1': '0.082567', 'loss_cls_2': '0.025446', 'loss_loc_2': '0.058891', 'loss_rpn_cls': '0.011484', 'loss_rpn_bbox': '0.015583', 'loss': '0.365930', eta: 3:17:26, batch_cost: 0.81138 sec, ips: 4.92989 images/sec

2021-01-06 00:45:37,729-INFO: iter: 15600, lr: 0.000500, 'loss_cls_0': '0.072315', 'loss_loc_0': '0.055202', 'loss_cls_1': '0.045002', 'loss_loc_1': '0.076404', 'loss_cls_2': '0.025505', 'loss_loc_2': '0.053287', 'loss_rpn_cls': '0.013081', 'loss_rpn_bbox': '0.016834', 'loss': '0.384814', eta: 3:26:00, batch_cost: 0.85834 sec, ips: 4.66018 images/sec

2021-01-06 00:48:23,624-INFO: iter: 15800, lr: 0.000500, 'loss_cls_0': '0.070336', 'loss_loc_0': '0.050973', 'loss_cls_1': '0.046747', 'loss_loc_1': '0.074327', 'loss_cls_2': '0.027804', 'loss_loc_2': '0.049670', 'loss_rpn_cls': '0.014746', 'loss_rpn_bbox': '0.017956', 'loss': '0.368038', eta: 3:16:18, batch_cost: 0.82950 sec, ips: 4.82218 images/sec

2021-01-06 00:51:11,615-INFO: iter: 16000, lr: 0.000500, 'loss_cls_0': '0.071403', 'loss_loc_0': '0.053685', 'loss_cls_1': '0.047917', 'loss_loc_1': '0.079114', 'loss_cls_2': '0.025545', 'loss_loc_2': '0.057177', 'loss_rpn_cls': '0.012593', 'loss_rpn_bbox': '0.015095', 'loss': '0.373875', eta: 3:16:01, batch_cost: 0.84012 sec, ips: 4.76123 images/sec

2021-01-06 00:54:04,151-INFO: iter: 16200, lr: 0.000500, 'loss_cls_0': '0.072183', 'loss_loc_0': '0.052932', 'loss_cls_1': '0.050209', 'loss_loc_1': '0.086099', 'loss_cls_2': '0.026967', 'loss_loc_2': '0.061982', 'loss_rpn_cls': '0.011397', 'loss_rpn_bbox': '0.017264', 'loss': '0.392682', eta: 3:18:21, batch_cost: 0.86246 sec, ips: 4.63791 images/sec

2021-01-06 00:56:49,088-INFO: iter: 16400, lr: 0.000500, 'loss_cls_0': '0.068158', 'loss_loc_0': '0.050173', 'loss_cls_1': '0.049182', 'loss_loc_1': '0.082593', 'loss_cls_2': '0.026114', 'loss_loc_2': '0.057491', 'loss_rpn_cls': '0.012618', 'loss_rpn_bbox': '0.016772', 'loss': '0.389515', eta: 3:06:54, batch_cost: 0.82457 sec, ips: 4.85099 images/sec

2021-01-06 00:59:49,505-INFO: iter: 16600, lr: 0.000500, 'loss_cls_0': '0.071238', 'loss_loc_0': '0.054461', 'loss_cls_1': '0.046717', 'loss_loc_1': '0.080054', 'loss_cls_2': '0.025314', 'loss_loc_2': '0.055334', 'loss_rpn_cls': '0.012206', 'loss_rpn_bbox': '0.016646', 'loss': '0.388371', eta: 3:21:28, batch_cost: 0.90211 sec, ips: 4.43407 images/sec

2021-01-06 01:02:51,205-INFO: iter: 16800, lr: 0.000500, 'loss_cls_0': '0.075484', 'loss_loc_0': '0.053586', 'loss_cls_1': '0.047874', 'loss_loc_1': '0.073687', 'loss_cls_2': '0.027131', 'loss_loc_2': '0.050229', 'loss_rpn_cls': '0.018113', 'loss_rpn_bbox': '0.023147', 'loss': '0.399621', eta: 3:19:57, batch_cost: 0.90888 sec, ips: 4.40101 images/sec

2021-01-06 01:05:45,816-INFO: iter: 17000, lr: 0.000500, 'loss_cls_0': '0.070221', 'loss_loc_0': '0.049896', 'loss_cls_1': '0.047129', 'loss_loc_1': '0.077793', 'loss_cls_2': '0.026747', 'loss_loc_2': '0.055582', 'loss_rpn_cls': '0.014799', 'loss_rpn_bbox': '0.017906', 'loss': '0.388288', eta: 3:09:03, batch_cost: 0.87256 sec, ips: 4.58419 images/sec

2021-01-06 01:08:47,833-INFO: iter: 17200, lr: 0.000500, 'loss_cls_0': '0.069130', 'loss_loc_0': '0.046456', 'loss_cls_1': '0.038361', 'loss_loc_1': '0.063351', 'loss_cls_2': '0.021034', 'loss_loc_2': '0.042539', 'loss_rpn_cls': '0.023127', 'loss_rpn_bbox': '0.022972', 'loss': '0.354758', eta: 3:13:27, batch_cost: 0.90684 sec, ips: 4.41090 images/sec

2021-01-06 01:11:47,992-INFO: iter: 17400, lr: 0.000500, 'loss_cls_0': '0.071986', 'loss_loc_0': '0.049988', 'loss_cls_1': '0.040766', 'loss_loc_1': '0.069570', 'loss_cls_2': '0.022108', 'loss_loc_2': '0.045157', 'loss_rpn_cls': '0.019879', 'loss_rpn_bbox': '0.021197', 'loss': '0.354816', eta: 3:09:47, batch_cost: 0.90374 sec, ips: 4.42607 images/sec

2021-01-06 01:14:39,452-INFO: iter: 17600, lr: 0.000500, 'loss_cls_0': '0.077550', 'loss_loc_0': '0.051988', 'loss_cls_1': '0.047692', 'loss_loc_1': '0.078635', 'loss_cls_2': '0.025124', 'loss_loc_2': '0.053583', 'loss_rpn_cls': '0.018500', 'loss_rpn_bbox': '0.019003', 'loss': '0.388664', eta: 2:57:18, batch_cost: 0.85794 sec, ips: 4.66232 images/sec

2021-01-06 01:17:37,300-INFO: iter: 17800, lr: 0.000500, 'loss_cls_0': '0.068284', 'loss_loc_0': '0.052294', 'loss_cls_1': '0.049155', 'loss_loc_1': '0.081252', 'loss_cls_2': '0.026631', 'loss_loc_2': '0.060193', 'loss_rpn_cls': '0.014038', 'loss_rpn_bbox': '0.018192', 'loss': '0.393549', eta: 2:59:26, batch_cost: 0.88249 sec, ips: 4.53262 images/sec