推荐系统(十六)推荐系统中的attention机制

契机

推荐系统中存在各种各样的序列特征,比如手机APP中各个场景下用户的点击行为序列,曝光序列,加入购物车序列等等,如何建模这些序列与target_item之间的关系成为最近大家都在研究的问题。传统处理序列特征的RNN和LSTM模型,由于耗时较多,慢慢不太适用于对耗时要求越来越高的推荐系统。最近attention机制的崛起恰巧能够解决耗时问题,且效果也比较好,所以本文会讲一些自己对推荐系统中的attention机制的理解,不对的地方请大家指出。

target-attention

原理

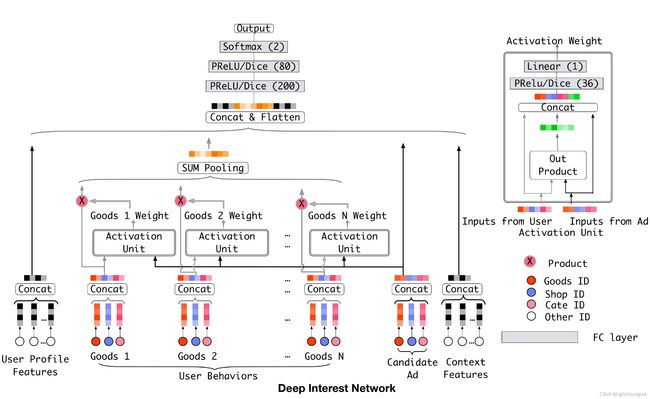

attention机制在推荐系统中第一次广泛应用应该是阿里DIN模型,该模型用attention方式建立了用户历史点击序列与当前待打分item之间的联系,较为成功地解决了序列特征的建模问题,由于整体原理比较简单,在这里不过多讲述。

代码实现

DIN模型作者提供的代码不是特别好理解,组内同事按照自己的理解,改进了下target-attention的实现方法,更符合attention本来 Q , K , V Q,K,V Q,K,V实现机制,代码如下所示,可以看出,target-attention和传统attention之间的区别在于多了一个 W W W,将原先的 K K K转换为 K t r a n s f o r m K_{transform} Ktransform,这样做的好处在于模型并不是强依赖于 K K K,而依赖于 K K K的一个高维抽象表示。传统attention机制实现代码可以参见这里。

# coding=utf-8

import tensorflow as tf

import numpy as np

def target_attention(Q, K, V):

""" target_attention implementation

:param Q:

:param K:

:param V:

:return: target_attention tensor

"""

k1, k2 = K.get_shape().as_list()[-1], Q.get_shape().as_list()[-1]

W = tf.get_variable("w", shape=[k1, k2], initializer=tf.keras.initializers.he_normal())

K_transform = tf.tensordot(K, W, axes=1)

d_k = tf.cast((k1 + k2) / 2, dtype=tf.float32)

logit = tf.matmul(K_transform, tf.expand_dims(Q, axis=-1)) / tf.sqrt(d_k)

weight = tf.nn.softmax(tf.squeeze(logit, axis=-1), axis=-1)

attention = tf.matmul(tf.expand_dims(weight, axis=1), V)

return tf.squeeze(attention, axis=1)

if __name__ == '__main__':

seq_len = 3

embedding_size = 4

seq_tensor = tf.placeholder(dtype=tf.float32, shape=(None, seq_len, embedding_size)) # 序列特征

target_tensor = tf.placeholder(dtype=tf.float32, shape=(None, embedding_size)) # target_item

t_attn = target_attention(target_tensor, seq_tensor, seq_tensor)

feed_dict = {

target_tensor: np.array([

[3.0, 4.0, 5.0, 6.0]

]),

seq_tensor: np.array([[

[1.0, 2.0, 3.0, 4.0],

[5.0, 6.0, 7.0, 8.0],

[9.0, 10.0, 11.0, 12.0]

]])

}

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

t_attn_out = sess.run(t_attn, feed_dict=feed_dict)

print(t_attn_out)

通常情况下,推荐系统中attention的 K K K和 V V V都为用户的点击序列,序列由item_id组成, Q Q Q为target_item_id;但也有许多变种,比如 Q Q Q为item_id + item_side_info,期望通过增加side_info来增加模型稳定性;或者 Q Q Q为点击序列的均值, K K K和 V V V为曝光未点击序列,期望利用点击序列过滤掉曝光未点击序列中用户感兴趣的item。

对于推荐系统中的target_attention,debug和case排查一直都是比较难解决的问题。个人建议是把 Q Q Q和 K K K组合计算后的权重(即上述代码的weight)打印出来,分析一下均值、方差以及直方图分布,就能在一定程度上发现问题。举个例子,如果发现所有权重的均值都差不多,说明attention压根就没起作用,要么是序列特征出问题了,要么是target_item构造的emb有问题,这样由果推因定位问题的速度还是比较快的。

self-attention

self-attention顾名思义,就是自己学习自己的attention权重,旨在增强序列内部各元素之间的联系,更像是一种可学习的归一化操作,具体代码如下所示:

import tensorflow as tf

def attention(Q, K, scaled_=True):

""" attention implementation

:param Q:

:param K:

:param scaled_: whether scaling logit by sqrt{dim of K}

:return: attention result

"""

logit = tf.matmul(Q, K, transpose_b=True) # [batch_size, sequence_length, sequence_length]

if scaled_:

d_k = tf.cast(tf.shape(K)[-1], dtype=tf.float32)

logit = tf.divide(logit, tf.sqrt(d_k)) # [batch_size, sequence_length, sequence_length]

weight = tf.nn.softmax(logit, dim=-1) # [batch_size, sequence_length, sequence_length]

return weight

def self_attention(Q, K, V):

""" self_attention implementation

:param V:

:param K:

:param Q:

:return: self attention result

"""

weight = attention(Q, K) # [batch_size, sequence_length, sequence_length]

s_attn = tf.matmul(weight, V) # [batch_size, sequence_length, n_classes]

return s_attn

排查self-attention的问题所用的手段,个人理解和target-attention比较类似,观察下weight的分布情况,进而由果推因。

multi-head attention

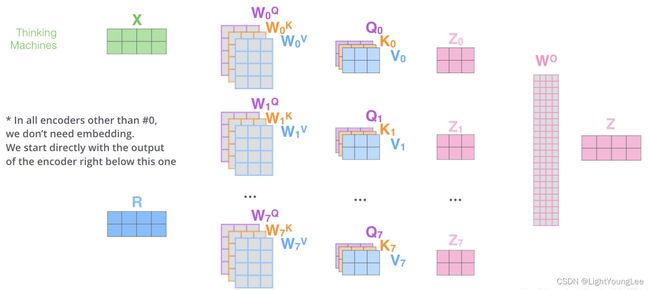

multi-head attention是在self-attention上做进一步拓展,具体原理如下图所示,

代码参考这里,如下所示。

class MultiHeadAttention(tf.keras.layers.Layer):

def __init__(self, d_model, num_heads):

super(MultiHeadAttention, self).__init__()

self.num_heads = num_heads

self.d_model = d_model

assert d_model % self.num_heads == 0

self.depth = d_model // self.num_heads

self.wq = tf.keras.layers.Dense(d_model)

self.wk = tf.keras.layers.Dense(d_model)

self.wv = tf.keras.layers.Dense(d_model)

self.dense = tf.keras.layers.Dense(d_model)

def split_heads(self, x, batch_size):

"""

分拆最后一个维度到 (num_heads, depth).

转置结果使得形状为 (batch_size, num_heads, seq_len, depth)

"""

x = tf.reshape(x, (batch_size, -1, self.num_heads, self.depth))

return tf.transpose(x, perm=[0, 2, 1, 3])

def call(self, v, k, q):

batch_size = tf.shape(q)[0]

# 第一步

q = self.wq(q) # (batch_size, seq_len, d_model)

k = self.wk(k) # (batch_size, seq_len, d_model)

v = self.wv(v) # (batch_size, seq_len, d_model)

# 第二步

q = self.split_heads(q, batch_size) # (batch_size, num_heads, seq_len_q, depth)

k = self.split_heads(k, batch_size) # (batch_size, num_heads, seq_len_k, depth)

v = self.split_heads(v, batch_size) # (batch_size, num_heads, seq_len_v, depth)

# 第三步

scaled_attention = self_attention(q, k, v) # (batch_size, num_heads, seq_len_q, depth)

scaled_attention = tf.transpose(scaled_attention, perm=[0, 2, 1, 3]) # (batch_size, seq_len_q, num_heads, depth)

# 第四步

concat_attention = tf.reshape(scaled_attention, (batch_size, -1, self.d_model)) # (batch_size, seq_len_q, d_model)

# 第五步

m_attention = self.dense(concat_attention) # (batch_size, seq_len_q, d_model)

return m_attention

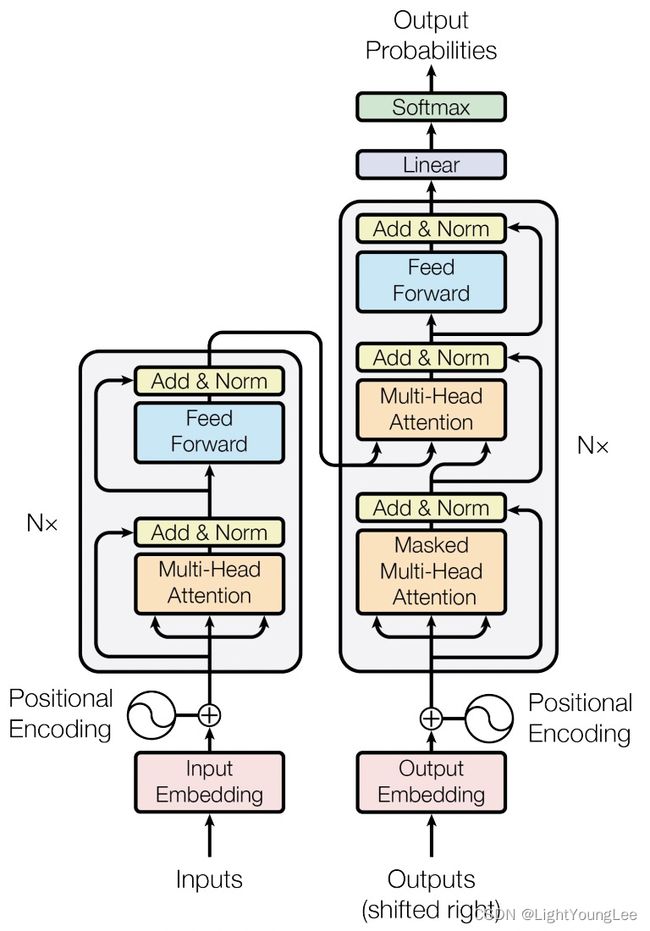

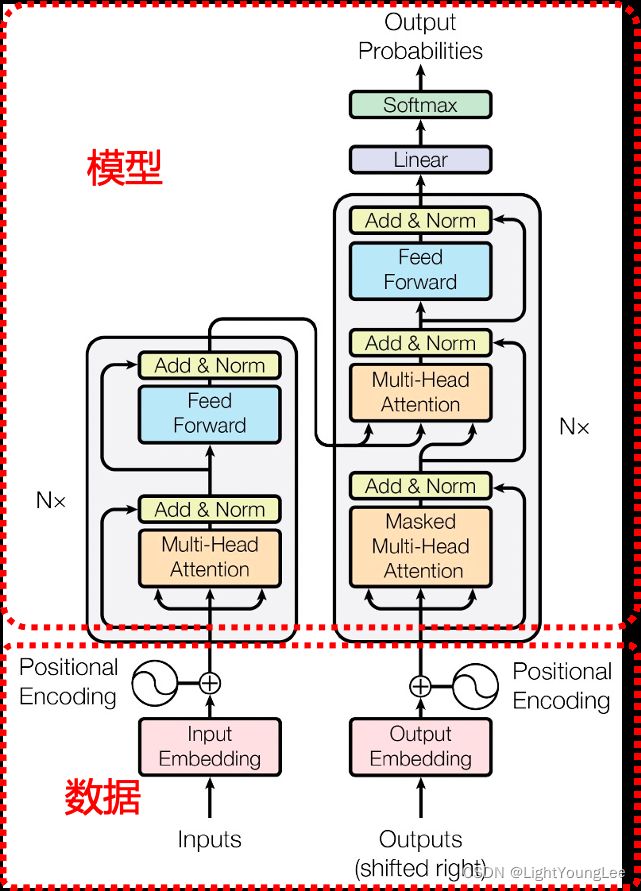

transformer

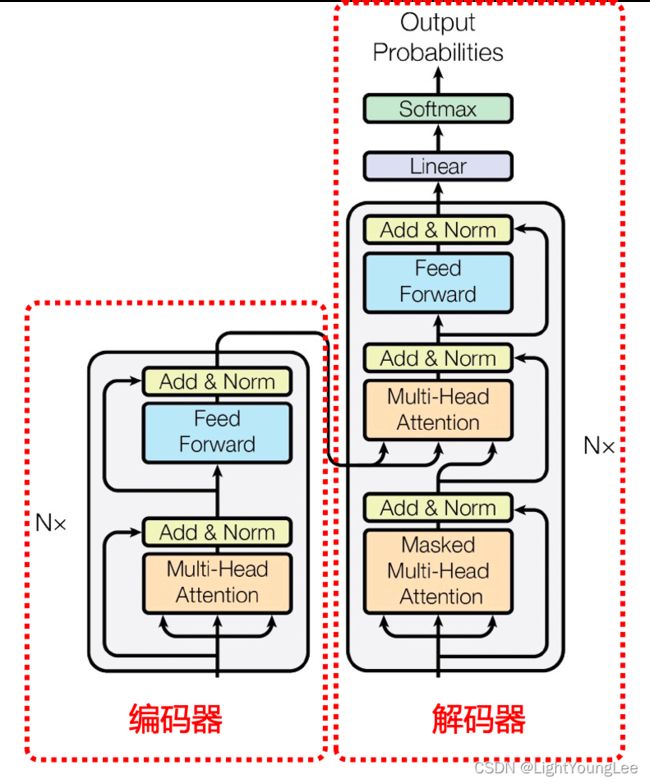

transformer总体结构如下图所示,

整体流程分为两块:数据和模型。

输入数据是positional encoding,核心是对embedding进行再编码,这里不赘述。模型分为编码器和解码器,下面会具体讲述这两个部件。

编码器

编码器(Encoder)的任务是把输入position encoding转化为较为高阶的表示。它由N个EncodeLayer组成,具体示意图和代码如下,后续会逐步解析。

class Encoder(tf.keras.layers.Layer):

def __init__(self, num_layers, d_model, num_heads, dff, input_vocab_size,

maximum_position_encoding, rate=0.1):

super(Encoder, self).__init__()

self.d_model = d_model

self.num_layers = num_layers

self.embedding = tf.keras.layers.Embedding(input_vocab_size, d_model)

self.pos_encoding = positional_encoding(maximum_position_encoding,

self.d_model)

self.enc_layers = [EncoderLayer(d_model, num_heads, dff, rate)

for _ in range(num_layers)]

self.dropout = tf.keras.layers.Dropout(rate)

def call(self, x, training, mask):

seq_len = tf.shape(x)[1]

# 将嵌入和位置编码相加。

x = self.embedding(x) # (batch_size, input_seq_len, d_model)

x *= tf.math.sqrt(tf.cast(self.d_model, tf.float32))

x += self.pos_encoding[:, :seq_len, :]

x = self.dropout(x, training=training)

for i in range(self.num_layers):

x = self.enc_layers[i](x, training, mask)

return x # (batch_size, input_seq_len, d_model)

从代码可以看出,当前EncoderLayer输入为上一个EncodeLayer的输出,符合seq2seq模型中编码器的定义。

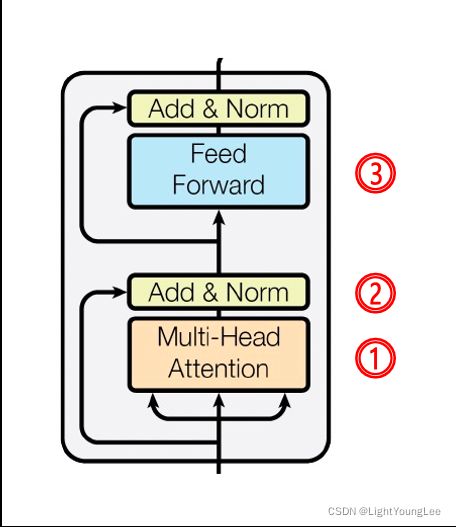

EncodeLayer

EncodeLayer由3个部分组成:masked-multi-head attention、残差网络和前向反馈网络,具体示意图和代码如下,后续会逐步解析。

class EncoderLayer(tf.keras.layers.Layer):

def __init__(self, d_model, num_heads, dff, rate=0.1):

super(EncoderLayer, self).__init__()

self.mha = MultiHeadAttention(d_model, num_heads)

self.ffn = point_wise_feed_forward_network(d_model, dff)

self.layernorm1 = tf.keras.layers.LayerNormalization(epsilon=1e-6)

self.layernorm2 = tf.keras.layers.LayerNormalization(epsilon=1e-6)

self.dropout1 = tf.keras.layers.Dropout(rate)

self.dropout2 = tf.keras.layers.Dropout(rate)

def call(self, x, training, mask):

attn_output, _ = self.mha(x, x, x, mask) # (batch_size, input_seq_len, d_model)

attn_output = self.dropout1(attn_output, training=training)

out1 = self.layernorm1(x + attn_output) # (batch_size, input_seq_len, d_model)

ffn_output = self.ffn(out1) # (batch_size, input_seq_len, d_model)

ffn_output = self.dropout2(ffn_output, training=training)

out2 = self.layernorm2(out1 + ffn_output) # (batch_size, input_seq_len, d_model)

return out2

masked-multi-head attention

第一部分是masked-multi-head attention,它multi-head attention的区别在于,计算第k个位置上attention_score时,把后面的输入信息都置为0。具体代码如下所示:

def create_look_ahead_mask(size):

mask = 1 - tf.linalg.band_part(tf.ones((size, size)), -1, 0)

return mask # (seq_len, seq_len)

残差网络

第二部分是残差网络,指类似resnet的残差结构 + layer_norm,这里不再赘述。

前向反馈网络

第三部分是前向反馈网络,即为DNN全连接网络。

def point_wise_feed_forward_network(d_model, dff):

return tf.keras.Sequential([

tf.keras.layers.Dense(dff, activation='relu'), # (batch_size, seq_len, dff)

tf.keras.layers.Dense(d_model) # (batch_size, seq_len, d_model)

])

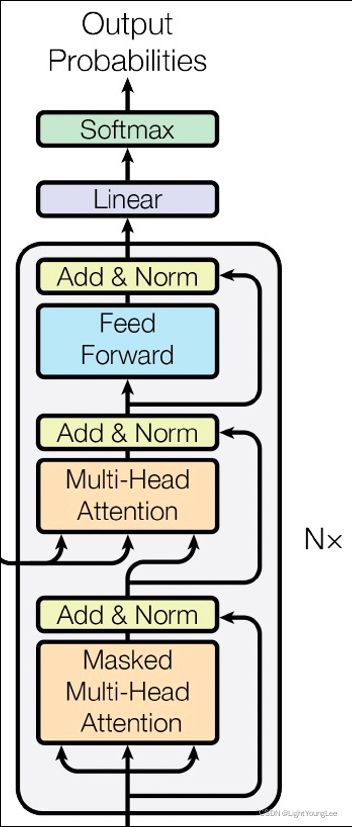

解码器

解码器(Decoder)的原理比Encoder相对复杂些,由N个DecodeLayer组成,具体示意图和代码如下所示,然后逐层去解析。

class Decoder(tf.keras.layers.Layer):

def __init__(self, num_layers, d_model, num_heads, dff, target_vocab_size,

maximum_position_encoding, rate=0.1):

super(Decoder, self).__init__()

self.d_model = d_model

self.num_layers = num_layers

self.embedding = tf.keras.layers.Embedding(target_vocab_size, d_model)

self.pos_encoding = positional_encoding(maximum_position_encoding, d_model)

self.dec_layers = [DecoderLayer(d_model, num_heads, dff, rate)

for _ in range(num_layers)]

self.dropout = tf.keras.layers.Dropout(rate)

def call(self, x, enc_output, training,

look_ahead_mask, padding_mask):

seq_len = tf.shape(x)[1]

attention_weights = {

}

x = self.embedding(x) # (batch_size, target_seq_len, d_model)

x *= tf.math.sqrt(tf.cast(self.d_model, tf.float32))

x += self.pos_encoding[:, :seq_len, :]

x = self.dropout(x, training=training)

for i in range(self.num_layers):

x, block1, block2 = self.dec_layers[i](x, enc_output, training,

look_ahead_mask, padding_mask)

attention_weights['decoder_layer{}_block1'.format(i + 1)] = block1

attention_weights['decoder_layer{}_block2'.format(i + 1)] = block2

# x.shape == (batch_size, target_seq_len, d_model)

return x, attention_weights

从上面代码可以看出,Decoder的整体流程是先经过一个DecoderLayer,然后之后的每个DecoderLayer的输入Q为上一个DecoderLayer的输出,K和V为最后一个Encoder的输出。

DecoderLayer

DecoderLayer的基本组成部分和Encoder大体类似,这里直接上代码:

class DecoderLayer(tf.keras.layers.Layer):

def __init__(self, d_model, num_heads, dff, rate=0.1):

super(DecoderLayer, self).__init__()

self.mha1 = MultiHeadAttention(d_model, num_heads)

self.mha2 = MultiHeadAttention(d_model, num_heads)

self.ffn = point_wise_feed_forward_network(d_model, dff)

self.layernorm1 = tf.keras.layers.LayerNormalization(epsilon=1e-6)

self.layernorm2 = tf.keras.layers.LayerNormalization(epsilon=1e-6)

self.layernorm3 = tf.keras.layers.LayerNormalization(epsilon=1e-6)

self.dropout1 = tf.keras.layers.Dropout(rate)

self.dropout2 = tf.keras.layers.Dropout(rate)

self.dropout3 = tf.keras.layers.Dropout(rate)

def call(self, x, enc_output, training,

look_ahead_mask, padding_mask):

# enc_output.shape == (batch_size, input_seq_len, d_model)

attn1, attn_weights_block1 = self.mha1(x, x, x, look_ahead_mask) # (batch_size, target_seq_len, d_model)

attn1 = self.dropout1(attn1, training=training)

out1 = self.layernorm1(attn1 + x)

attn2, attn_weights_block2 = self.mha2(

enc_output, enc_output, out1, padding_mask) # (batch_size, target_seq_len, d_model)

attn2 = self.dropout2(attn2, training=training)

out2 = self.layernorm2(attn2 + out1) # (batch_size, target_seq_len, d_model)

ffn_output = self.ffn(out2) # (batch_size, target_seq_len, d_model)

ffn_output = self.dropout3(ffn_output, training=training)

out3 = self.layernorm3(ffn_output + out2) # (batch_size, target_seq_len, d_model)

return out3, attn_weights_block1, attn_weights_block2

从Decoder这一侧可以看出,transformer模型依然符合传统意义上的seq2seq模型的架构,只是每个部件由attention代替。

参考链接

- 阿里DIN模型

- attention应用

- tf官方讲解transformer