Python Natural Language Processing in Action | Study notes on the machine learning algorithms used in NLP

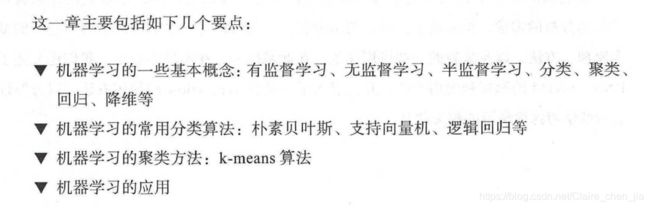

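These are my study notes on Chapter 9, "Machine Learning Algorithms Used in NLP", of 《Python自然语言处理实战:核心技术与算法》 (Python Natural Language Processing in Action: Core Techniques and Algorithms) by Tu Ming and co-authors.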

- Text classification: Chinese spam email classification

- Text clustering in practice: clustering Douban Books data with K-means

- Summary

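Text classification: Chinese spam email classification

- Building the feature extractors (preparation; saved as feature_extractors.py, the module name the main script imports)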

"""
@author: liushuchun
"""
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.feature_extraction.text import TfidfTransformer
from sklearn.feature_extraction.text import TfidfVectorizer


def bow_extractor(corpus, ngram_range=(1, 1)):
    # Bag-of-words counts built from whitespace-separated text.
    vectorizer = CountVectorizer(min_df=1, ngram_range=ngram_range)
    features = vectorizer.fit_transform(corpus)
    return vectorizer, features


def tfidf_transformer(bow_matrix):
    # Re-weight an existing count matrix with smoothed, L2-normalized TF-IDF.
    transformer = TfidfTransformer(norm='l2',
                                   smooth_idf=True,
                                   use_idf=True)
    tfidf_matrix = transformer.fit_transform(bow_matrix)
    return transformer, tfidf_matrix


def tfidf_extractor(corpus, ngram_range=(1, 1)):
    # Counting and TF-IDF weighting in one step, straight from the text.
    vectorizer = TfidfVectorizer(min_df=1,
                                 norm='l2',
                                 smooth_idf=True,
                                 use_idf=True,
                                 ngram_range=ngram_range)
    features = vectorizer.fit_transform(corpus)
    return vectorizer, features
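
To make the TF-IDF settings used here concrete (`norm='l2'`, `smooth_idf=True`, `use_idf=True`), below is a minimal pure-Python sketch of the weighting that scikit-learn documents for them: idf(t) = ln((1 + n) / (1 + df(t))) + 1, followed by L2 normalization of each document row. The helper name `tfidf_rows` and the toy count matrix are my own illustration and do not come from the book.

```python
import math


def tfidf_rows(count_rows):
    """count_rows: one list of raw term counts per document (equal lengths)."""
    n_docs = len(count_rows)
    n_terms = len(count_rows[0])
    # document frequency: how many documents contain each term
    df = [sum(1 for row in count_rows if row[j] > 0) for j in range(n_terms)]
    # smoothed idf, as if one extra document contained every term
    idf = [math.log((1 + n_docs) / (1 + d)) + 1.0 for d in df]
    rows = []
    for row in count_rows:
        weighted = [tf * w for tf, w in zip(row, idf)]
        norm = math.sqrt(sum(v * v for v in weighted))
        # L2-normalize so every non-empty document has unit length
        rows.append([v / norm if norm else 0.0 for v in weighted])
    return idf, rows


# toy counts: 3 documents x 3 terms (hypothetical data)
counts = [
    [1, 1, 0],
    [1, 0, 2],
    [1, 0, 0],
]
idf, rows = tfidf_rows(counts)
print(idf)
print(rows)
```

A term that appears in every document ends up with an idf of exactly 1, so it is down-weighted relative to rarer terms but never zeroed out.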

- Data normalization (preparation; saved as normalization.py, the module name the main script imports)

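"""
@author: liushuchun
"""
import re
import string

import jieba

# Load the stop words. Each line is stripped because readlines() keeps the
# trailing newline, and an entry such as "的\n" would never match a token.
with open("dict/stop_words.utf8", encoding="utf8") as f:
    stopword_list = {line.strip() for line in f}


def tokenize_text(text):
    tokens = jieba.cut(text)
    # strip whitespace and drop tokens that become empty
    tokens = [token.strip() for token in tokens if token.strip()]
    return tokens


def remove_special_characters(text):
    tokens = tokenize_text(text)
    # string.punctuation covers ASCII punctuation only; full-width Chinese
    # punctuation passes through this step unchanged.
    pattern = re.compile('[{}]'.format(re.escape(string.punctuation)))
    filtered_tokens = filter(None, [pattern.sub('', token) for token in tokens])
    filtered_text = ' '.join(filtered_tokens)
    return filtered_text


def remove_stopwords(text):
    tokens = tokenize_text(text)
    filtered_tokens = [token for token in tokens if token not in stopword_list]
    # Join with spaces so the vectorizers still see jieba's word boundaries;
    # joining with '' glues the tokens back into one unsegmented string.
    filtered_text = ' '.join(filtered_tokens)
    return filtered_text


def normalize_corpus(corpus, tokenize=False):
    normalized_corpus = []
    for text in corpus:
        text = remove_special_characters(text)
        text = remove_stopwords(text)
        # append each document once: as a token list if requested, else as text
        if tokenize:
            text = tokenize_text(text)
        normalized_corpus.append(text)
    return normalized_corpus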
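
One detail worth checking in the stop-word step: lines returned by `readlines()` keep their trailing newline, so a membership test against them only succeeds once each entry has been stripped. The sketch below shows the difference on a tiny in-memory file; the three stop words and the token list are made up for illustration, and `io.StringIO` merely stands in for `dict/stop_words.utf8`.

```python
import io

# stands in for the stop-word file (hypothetical contents)
raw = io.StringIO("的\n了\n是\n")
lines = raw.readlines()

with_newlines = lines                          # entries still end in "\n"
stripped = {line.strip() for line in lines}    # clean entries, fast lookup

tokens = ["今天", "的", "天气", "是", "晴天"]

kept_unstripped = [t for t in tokens if t not in with_newlines]
kept_stripped = [t for t in tokens if t not in stripped]

print(kept_unstripped)  # nothing is filtered out
print(kept_stripped)    # the stop words are gone
```

Using a set rather than a list also makes each lookup constant-time, which adds up over a corpus of several thousand emails.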

- Full email classification pipeline (run only this script; place the two files above in the same directory)

"""
author: liushuchun
"""
import numpy as np
from sklearn import metrics
from sklearn.model_selection import train_test_split


def get_data():
    '''
    Load the data.
    :return: text data and the corresponding labels
    '''
    with open("data/ham_data.txt", encoding="utf8") as ham_f, \
            open("data/spam_data.txt", encoding="utf8") as spam_f:
        ham_data = ham_f.readlines()
        spam_data = spam_f.readlines()

    # ham (legitimate mail) is labelled 1, spam is labelled 0
    ham_label = np.ones(len(ham_data)).tolist()
    spam_label = np.zeros(len(spam_data)).tolist()

    corpus = ham_data + spam_data
    labels = ham_label + spam_label
    return corpus, labels


def prepare_datasets(corpus, labels, test_data_proportion=0.3):
    '''
    :param corpus: text data
    :param labels: label data
    :param test_data_proportion: fraction of the data held out for testing
    :return: training data, test data, training labels, test labels
    '''
    train_X, test_X, train_Y, test_Y = train_test_split(corpus, labels,
                                                        test_size=test_data_proportion,
                                                        random_state=42)
    return train_X, test_X, train_Y, test_Y


def remove_empty_docs(corpus, labels):
    # drop documents that are empty or whitespace-only, keeping labels aligned
    filtered_corpus = []
    filtered_labels = []
    for doc, label in zip(corpus, labels):
        if doc.strip():
            filtered_corpus.append(doc)
            filtered_labels.append(label)
    return filtered_corpus, filtered_labels


def get_metrics(true_labels, predicted_labels):
    # average='weighted' weights each class's score by its number of true samples
    print('Accuracy:', np.round(
        metrics.accuracy_score(true_labels,
                               predicted_labels),
        2))
    print('Precision:', np.round(
        metrics.precision_score(true_labels,
                                predicted_labels,
                                average='weighted'),
        2))
    print('Recall:', np.round(
        metrics.recall_score(true_labels,
                             predicted_labels,
                             average='weighted'),
        2))
    print('F1 score:', np.round(
        metrics.f1_score(true_labels,
                         predicted_labels,
                         average='weighted'),
        2))


def train_predict_evaluate_model(classifier,
                                 train_features, train_labels,
                                 test_features, test_labels):
    # build model
    classifier.fit(train_features, train_labels)
    # predict using model
    predictions = classifier.predict(test_features)
    # evaluate model prediction performance
    get_metrics(true_labels=test_labels,
                predicted_labels=predictions)
    return predictions

def main():
    corpus, labels = get_data()  # load the dataset
    print("Total number of documents:", len(labels))

    corpus, labels = remove_empty_docs(corpus, labels)

    print('A sample document:', corpus[10])
    print('Its label:', labels[10])
    # index 0 = spam, index 1 = ham, matching the labels from get_data()
    label_name_map = ["spam", "ham"]
    print('Actual types:', label_name_map[int(labels[10])], label_name_map[int(labels[5900])])

    # split the data into training and test sets
    train_corpus, test_corpus, train_labels, test_labels = prepare_datasets(corpus,
                                                                            labels,
                                                                            test_data_proportion=0.3)

    from normalization import normalize_corpus

    # normalize the text
    norm_train_corpus = normalize_corpus(train_corpus)
    norm_test_corpus = normalize_corpus(test_corpus)

    from feature_extractors import bow_extractor, tfidf_extractor
    import gensim
    import jieba

    # bag-of-words features
    bow_vectorizer, bow_train_features = bow_extractor(norm_train_corpus)
    bow_test_features = bow_vectorizer.transform(norm_test_corpus)

    # tf-idf features
    tfidf_vectorizer, tfidf_train_features = tfidf_extractor(norm_train_corpus)
    tfidf_test_features = tfidf_vectorizer.transform(norm_test_corpus)

    # tokenize documents
    tokenized_train = [jieba.lcut(text)
                       for text in norm_train_corpus]
    print(tokenized_train[2:10])
    tokenized_test = [jieba.lcut(text)
                      for text in norm_test_corpus]

    # build the word2vec model (the classifiers below do not use it)
    # gensim >= 4.0 calls this parameter vector_size; older releases call it size
    model = gensim.models.Word2Vec(tokenized_train,
                                   vector_size=500,
                                   window=100,
                                   min_count=30,
                                   sample=1e-3)

    from sklearn.naive_bayes import MultinomialNB
    from sklearn.linear_model import SGDClassifier
    from sklearn.linear_model import LogisticRegression

    mnb = MultinomialNB()
    # hinge loss makes SGDClassifier a linear SVM
    svm = SGDClassifier(loss='hinge', n_iter_no_change=100)
    lr = LogisticRegression()

    # multinomial naive Bayes on bag-of-words features
    print("Naive Bayes classifier on bag-of-words features")
    mnb_bow_predictions = train_predict_evaluate_model(classifier=mnb,
                                                       train_features=bow_train_features,
                                                       train_labels=train_labels,
                                                       test_features=bow_test_features,
                                                       test_labels=test_labels)

    # logistic regression on bag-of-words features
    print("Logistic regression on bag-of-words features")
    lr_bow_predictions = train_predict_evaluate_model(classifier=lr,
                                                      train_features=bow_train_features,
                                                      train_labels=train_labels,
                                                      test_features=bow_test_features,
                                                      test_labels=test_labels)

    # support vector machine on bag-of-words features
    print("Support vector machine on bag-of-words features")
    svm_bow_predictions = train_predict_evaluate_model(classifier=svm,
                                                       train_features=bow_train_features,
                                                       train_labels=train_labels,
                                                       test_features=bow_test_features,
                                                       test_labels=test_labels)

    # multinomial naive Bayes on tf-idf features
    print("Naive Bayes classifier on tf-idf features")
    mnb_tfidf_predictions = train_predict_evaluate_model(classifier=mnb,
                                                         train_features=tfidf_train_features,
                                                         train_labels=train_labels,
                                                         test_features=tfidf_test_features,
                                                         test_labels=test_labels)

    # logistic regression on tf-idf features
    print("Logistic regression on tf-idf features")
    lr_tfidf_predictions = train_predict_evaluate_model(classifier=lr,
                                                        train_features=tfidf_train_features,
                                                        train_labels=train_labels,
                                                        test_features=tfidf_test_features,
                                                        test_labels=test_labels)

    # support vector machine on tf-idf features
    print("Support vector machine on tf-idf features")
    svm_tfidf_predictions = train_predict_evaluate_model(classifier=svm,
                                                         train_features=tfidf_train_features,
                                                         train_labels=train_labels,
                                                         test_features=tfidf_test_features,
                                                         test_labels=test_labels)

    import re

    # show up to four spam emails that the tf-idf SVM classified correctly
    num = 0
    for document, label, predicted_label in zip(test_corpus, test_labels, svm_tfidf_predictions):
        if label == 0 and predicted_label == 0:
            print('Email type:', label_name_map[int(label)])
            print('Predicted email type:', label_name_map[int(predicted_label)])
            print('Text:-')
            print(re.sub('\n', ' ', document))
            num += 1
            if num == 4:
                break

    # show up to four legitimate emails that were misclassified as spam
    num = 0
    for document, label, predicted_label in zip(test_corpus, test_labels, svm_tfidf_predictions):
        if label == 1 and predicted_label == 0:
            print('Email type:', label_name_map[int(label)])
            print('Predicted email type:', label_name_map[int(predicted_label)])
            print('Text:-')
            print(re.sub('\n', ' ', document))
            num += 1
            if num == 4:
                break


if __name__ == "__main__":
    main()
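
The evaluation in `get_metrics` relies on `average='weighted'`, which computes precision, recall and F1 per class and then averages them weighted by each class's support (its number of true samples). As a rough pure-Python sketch of that averaging, with a helper name (`weighted_scores`) and toy labels that are my own and not part of the book's code:

```python
def weighted_scores(true_labels, predicted_labels):
    """Support-weighted precision, recall and F1 over the classes in true_labels."""
    classes = sorted(set(true_labels))
    total = len(true_labels)
    precision = recall = f1 = 0.0
    for c in classes:
        pairs = list(zip(true_labels, predicted_labels))
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        support = tp + fn
        p_c = tp / (tp + fp) if tp + fp else 0.0
        r_c = tp / support if support else 0.0
        f_c = 2 * p_c * r_c / (p_c + r_c) if p_c + r_c else 0.0
        weight = support / total
        precision += weight * p_c
        recall += weight * r_c
        f1 += weight * f_c
    return precision, recall, f1


# toy labels: 1 = ham, 0 = spam (hypothetical data)
y_true = [1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1]
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
precision, recall, f1 = weighted_scores(y_true, y_pred)
print(accuracy, precision, recall, f1)
```

One consequence of this weighting is that weighted recall always equals accuracy, so the two printed values only differ by rounding; the per-class numbers are what tell spam errors apart from ham errors.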

Text clustering in practice: clustering Douban Books data with K-means

- Crawling Douban Books data

import ssl
import bs4
import re
import requests
import csv
import codecs
import time
from urllib import request, error

# note: this context disables TLS certificate verification for urlopen()
context = ssl._create_unverified_context()


class DouBanSpider:
    def __init__(self):
        self.userAgent = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.115 Safari/537.36"
        self.headers = {"User-Agent": self.userAgent}

    # fetch the page that lists Douban's book category tags
    def getBookCategroies(self):
        try:
            url = "https://book.douban.com/tag/?view=type&icn=index-sorttags-all"
            # send the browser User-Agent defined in __init__
            req = request.Request(url, headers=self.headers)
            response = request.urlopen(req, context=context)
            content = response.read().decode("utf-8")
            return content
        except error.HTTPError as identifier:
            # identifier.code is an int, so format it instead of concatenating
            print("errorCode: {} errorReason: {}".format(identifier.code, identifier.reason))
            return None

    # collect the text of every tag link
    def getCategroiesContent(self):
        content = self.getBookCategroies()
        if not content:
            print("Failed to fetch the page...")
            return None
        soup = bs4.BeautifulSoup(content, "lxml")
        categroyMatch = re.compile(r"^/tag/*")
        categroies = []
        for categroy in soup.find_all("a", {"href": categroyMatch}):
            if categroy:
                categroies.append(categroy.string)
        return categroies

    # build the link for every tag
    def getCategroyLink(self):
        # fall back to an empty list when the tag page could not be fetched
        categroies = self.getCategroiesContent() or []
        categroyLinks = []
        for item in categroies:
            link = "https://book.douban.com/tag/" + str(item)
            categroyLinks.append(link)
        return categroyLinks

    def getBookInfo(self, categroyLinks):
        self.setCsvTitle()
        categroies = categroyLinks
        try:
            for link in categroies:
                print("Crawling: " + link)
                bookList = []
                response = requests.get(link, headers=self.headers)
                soup = bs4.BeautifulSoup(response.text, 'lxml')
                bookCategroy = soup.h1.string
                for book in soup.find_all("li", {"class": "subject-item"}):
                    bookSoup = bs4.BeautifulSoup(str(book), "lxml")
                    bookTitle = bookSoup.h2.a["title"]
                    bookAuthor = bookSoup.find("div", {"class": "pub"})
                    bookComment = bookSoup.find("span", {"class": "pl"})
                    bookContent = bookSoup.li.p
                    # keep only entries where every field was found
                    if bookTitle and bookAuthor and bookComment and bookContent:
                        # get_text() also works when a tag has nested children,
                        # where .string would be None
                        bookList.append([bookTitle.strip(), bookCategroy.strip(),
                                         bookAuthor.get_text(strip=True),
                                         bookComment.get_text(strip=True),
                                         bookContent.get_text(strip=True)])
                self.saveBookInfo(bookList)
                time.sleep(3)  # pause between category pages
            print("Crawling finished....")
        except requests.RequestException as identifier:
            # requests raises its own exception types, not urllib's HTTPError
            print("Request failed: {}".format(identifier))
            return None

    def setCsvTitle(self):
        # start a fresh file (utf-8-sig writes a BOM at the start of the file)
        # so the header row is written once per run
        with open("data/data.csv", 'w', encoding='utf-8-sig', newline='') as csvFile:
            writer = csv.writer(csvFile)
            writer.writerow(['title', 'tag', 'info', 'comments', 'content'])

    def saveBookInfo(self, bookList):
        # append rows as plain UTF-8 so no second BOM lands mid-file
        with open("data/data.csv", 'a', encoding='utf-8', newline='') as csvFile:
            writer = csv.writer(csvFile)
            for book in bookList:
                writer.writerow(book)

    def start(self):
        categroyLink = self.getCategroyLink()
        self.getBookInfo(categroyLink)


douBanSpider = DouBanSpider()
douBanSpider.start()
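
The tag names scraped from Douban are mostly Chinese, and `getCategroyLink` appends them to the base URL as they are. If the links are ever passed to `urllib` instead of `requests`, they would need to be percent-encoded first. A small sketch of that, assuming a hypothetical helper `build_tag_link` (the crawler above does not define it):

```python
from urllib.parse import quote, unquote

BASE = "https://book.douban.com/tag/"


def build_tag_link(tag):
    # safe="" also encodes "/" so a tag name cannot add a path segment
    return BASE + quote(str(tag), safe="")


link = build_tag_link("小说")
print(link)           # ASCII-only URL
print(unquote(link))  # decodes back to the readable form
```

Encoding is reversible, so the readable tag can always be recovered with `unquote` for logging or for the `tag` column of the CSV.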

- Data normalization (a separate normalization.py for the clustering script)

"""
@author: liushuchun
"""
import re
import string

import jieba

# Load the stop words. Each line is stripped because readlines() keeps the
# trailing newline, and an entry such as "的\n" would never match a token.
with open("dict/stop_words.utf8", encoding="utf8") as f:
    stopword_list = {line.strip() for line in f}


def tokenize_text(text):
    tokens = jieba.lcut(text)
    tokens = [token.strip() for token in tokens]
    return tokens


def remove_special_characters(text):
    tokens = tokenize_text(text)
    pattern = re.compile('[{}]'.format(re.escape(string.punctuation)))
    filtered_tokens = filter(None, [pattern.sub('', token) for token in tokens])
    filtered_text = ' '.join(filtered_tokens)
    return filtered_text


def remove_stopwords(text):
    tokens = tokenize_text(text)
    filtered_tokens = [token for token in tokens if token not in stopword_list]
    filtered_text = ''.join(filtered_tokens)
    return filtered_text


def normalize_corpus(corpus):
    # For clustering, only segment with jieba and join the tokens with spaces;
    # the two remove_* helpers above are not called here.
    normalized_corpus = []
    for text in corpus:
        text = " ".join(jieba.lcut(text))
        normalized_corpus.append(text)
    return normalized_corpus
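
Joining the jieba tokens with spaces matters because scikit-learn's vectorizers do not segment Chinese themselves: their default `token_pattern` is the regular expression `(?u)\b\w\w+\b`, which simply grabs runs of two or more word characters. The sketch below applies that same pattern with the standard `re` module to show the effect; the example sentence is my own, and the segmented form is written by hand rather than produced by jieba.

```python
import re

# the default token_pattern of CountVectorizer / TfidfVectorizer
TOKEN_PATTERN = r"(?u)\b\w\w+\b"


def default_tokens(text):
    return re.findall(TOKEN_PATTERN, text)


unsegmented = "今天天气很好"
segmented = "今天 天气 很 好"   # hand-written stand-in for jieba output

print(default_tokens(unsegmented))  # the whole sentence is one token
print(default_tokens(segmented))    # word-level tokens
```

Note that the pattern also drops single-character tokens such as 很 and 好; passing a custom `token_pattern` to the vectorizer would be one way to keep them, if they turn out to matter.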

- Full text clustering pipeline (run only this script; place the two files above in the same directory)

```python

"""
@author: liushuchun
"""
import pandas as pd
import numpy as np
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer


def build_feature_matrix(documents, feature_type='frequency',
                         ngram_range=(1, 1), min_df=0.0, max_df=1.0):
    feature_type = feature_type.lower().strip()

    if feature_type == 'binary':
        vectorizer = CountVectorizer(binary=True,
                                     max_df=max_df, ngram_range=ngram_range)
    elif feature_type == 'frequency':
        vectorizer = CountVectorizer(binary=False, min_df=min_df,
                                     max_df=max_df, ngram_range=ngram_range)
    elif feature_type == 'tfidf':
        # note: this branch uses the defaults, so the ngram_range, min_df and
        # max_df arguments passed by the caller are ignored for tf-idf
        vectorizer = TfidfVectorizer()
    else:
        raise ValueError("Wrong feature type entered. Possible values: 'binary', 'frequency', 'tfidf'")

    feature_matrix = vectorizer.fit_transform(documents).astype(float)
    return vectorizer, feature_matrix


book_data = pd.read_csv('data/data.csv')  # read the crawled data
print(book_data.head())

book_titles = book_data['title'].tolist()
book_content = book_data['content'].tolist()

print('Title:', book_titles[0])
print('Content:', book_content[0][:10])

from normalization import normalize_corpus

# normalize corpus
norm_book_content = normalize_corpus(book_content)

# extract tf-idf features
vectorizer, feature_matrix = build_feature_matrix(norm_book_content,
                                                  feature_type='tfidf',
                                                  min_df=0.2, max_df=0.90,
                                                  ngram_range=(1, 2))
# check the shape: (number of documents, number of features)
print(feature_matrix.shape)

# get the feature names
# (get_feature_names() was removed in scikit-learn 1.2; releases before 1.0
# only provide get_feature_names())
feature_names = vectorizer.get_feature_names_out()

# print a few features
print(feature_names[:10])

from sklearn.cluster import KMeans


def k_means(feature_matrix, num_clusters=10):
    km = KMeans(n_clusters=num_clusters,
                max_iter=10000)
    km.fit(feature_matrix)
    clusters = km.labels_
    return km, clusters


num_clusters = 10
km_obj, clusters = k_means(feature_matrix=feature_matrix,
                           num_clusters=num_clusters)
book_data['Cluster'] = clusters

from collections import Counter

# count the books in each cluster
c = Counter(clusters)
print(c.items())

def get_cluster_data(clustering_obj, book_data,
                     feature_names, num_clusters,
                     topn_features=10):
    cluster_details = {}
    # for each cluster center, feature indices sorted by descending weight
    ordered_centroids = clustering_obj.cluster_centers_.argsort()[:, ::-1]
    # collect the key features and the books of each cluster
    for cluster_num in range(num_clusters):
        cluster_details[cluster_num] = {}
        cluster_details[cluster_num]['cluster_num'] = cluster_num
        key_features = [feature_names[index]
                        for index
                        in ordered_centroids[cluster_num, :topn_features]]
        cluster_details[cluster_num]['key_features'] = key_features

        books = book_data[book_data['Cluster'] == cluster_num]['title'].values.tolist()
        cluster_details[cluster_num]['books'] = books

    return cluster_details


def print_cluster_data(cluster_data):
    # print cluster details
    for cluster_num, cluster_details in cluster_data.items():
        print('Cluster {} details:'.format(cluster_num))
        print('-' * 20)
        print('Key features:', cluster_details['key_features'])
        print('book in this cluster:')
        print(', '.join(cluster_details['books']))
        print('=' * 40)

import matplotlib.pyplot as plt
from sklearn.manifold import MDS
from sklearn.metrics.pairwise import cosine_similarity
import random
from matplotlib.font_manager import FontProperties


def plot_clusters(num_clusters, feature_matrix,
                  cluster_data, book_data,
                  plot_size=(16, 8)):
    # generate random color for clusters
    def generate_random_color():
        color = '#%06x' % random.randint(0, 0xFFFFFF)
        return color

    # define markers for clusters
    markers = ['o', 'v', '^', '<', '>', '8', 's', 'p', '*', 'h', 'H', 'D', 'd']
    # build cosine distance matrix
    cosine_distance = 1 - cosine_similarity(feature_matrix)
    # dimensionality reduction using MDS
    mds = MDS(n_components=2, dissimilarity="precomputed",
              random_state=1)
    # get coordinates of clusters in new low-dimensional space
    plot_positions = mds.fit_transform(cosine_distance)
    x_pos, y_pos = plot_positions[:, 0], plot_positions[:, 1]
    # build cluster plotting data
    cluster_color_map = {}
    cluster_name_map = {}
    # cluster_data is a dict, so iterate over it directly (a dict cannot be sliced)
    for cluster_num, cluster_details in cluster_data.items():
        # assign cluster features to unique label
        cluster_color_map[cluster_num] = generate_random_color()
        cluster_name_map[cluster_num] = ', '.join(cluster_details['key_features'][:5]).strip()
    # map each unique cluster label with its coordinates and books
    cluster_plot_frame = pd.DataFrame({'x': x_pos,
                                       'y': y_pos,
                                       'label': book_data['Cluster'].values.tolist(),
                                       'title': book_data['title'].values.tolist()
                                       })
    grouped_plot_frame = cluster_plot_frame.groupby('label')
    # set plot figure size and axes
    fig, ax = plt.subplots(figsize=plot_size)
    ax.margins(0.05)
    # plot each cluster using co-ordinates and book titles
    for cluster_num, cluster_frame in grouped_plot_frame:
        marker = markers[cluster_num] if cluster_num < len(markers) \
            else np.random.choice(markers, size=1)[0]
        ax.plot(cluster_frame['x'], cluster_frame['y'],
                marker=marker, linestyle='', ms=12,
                label=cluster_name_map[cluster_num],
                color=cluster_color_map[cluster_num], mec='none')
    ax.set_aspect('auto')
    # hide ticks and tick labels; tick_params expects booleans, not 'off'
    ax.tick_params(axis='x', which='both', bottom=False, top=False,
                   labelbottom=False)
    ax.tick_params(axis='y', which='both', left=False, right=False,
                   labelleft=False)
    fontP = FontProperties()
    fontP.set_size('small')
    ax.legend(loc='upper center', bbox_to_anchor=(0.5, -0.01), fancybox=True,
              shadow=True, ncol=5, numpoints=1, prop=fontP)
    # add the book titles as text labels
    # (.ix was removed in pandas 1.0; .iloc selects rows by position)
    for index in range(len(cluster_plot_frame)):
        row = cluster_plot_frame.iloc[index]
        ax.text(row['x'], row['y'], row['title'], size=8)
    # show the plot
    plt.show()

cluster_data = get_cluster_data(clustering_obj=km_obj,
                                book_data=book_data,
                                feature_names=feature_names,
                                num_clusters=num_clusters,
                                topn_features=5)

print_cluster_data(cluster_data)

plot_clusters(num_clusters=num_clusters,
              feature_matrix=feature_matrix,
              cluster_data=cluster_data,
              book_data=book_data,
              plot_size=(16, 8))

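from sklearn.cluster import AffinityPropagation


def affinity_propagation(feature_matrix):
    # document-by-document dot products of the tf-idf rows
    sim = feature_matrix @ feature_matrix.T
    # toarray() returns a plain ndarray; todense() returns np.matrix,
    # which recent scikit-learn releases reject
    sim = sim.toarray()
    ap = AffinityPropagation()
    ap.fit(sim)
    clusters = ap.labels_
    return ap, clusters


# get clusters using affinity propagation
ap_obj, clusters = affinity_propagation(feature_matrix=feature_matrix)
book_data['Cluster'] = clusters

# get the total number of books per cluster
c = Counter(clusters)
print(c.items())

# get total clusters
total_clusters = len(c)
print('Total Clusters:', total_clusters)

# Caveat: ap_obj was fit on the document-by-document matrix, so its
# cluster_centers_ are rows of that matrix. The "key features" looked up
# below are therefore indexed by document position rather than by term,
# and the lookup may fail if there are more documents than features.
cluster_data = get_cluster_data(clustering_obj=ap_obj,
                                book_data=book_data,
                                feature_names=feature_names,
                                num_clusters=total_clusters,
                                topn_features=5)

print_cluster_data(cluster_data)

plot_clusters(num_clusters=total_clusters,
              feature_matrix=feature_matrix,
              cluster_data=cluster_data,
              book_data=book_data,
              plot_size=(16, 8))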

from scipy.cluster.hierarchy import ward, dendrogram


def ward_hierarchical_clustering(feature_matrix):
    cosine_distance = 1 - cosine_similarity(feature_matrix)
    # note: ward() treats a 2-D array as observation vectors, so each row of
    # the distance matrix is used as that document's feature vector here
    linkage_matrix = ward(cosine_distance)
    return linkage_matrix


def plot_hierarchical_clusters(linkage_matrix, book_data, figure_size=(8, 12)):
    # set size
    fig, ax = plt.subplots(figsize=figure_size)
    book_titles = book_data['title'].values.tolist()
    # plot dendrogram (it draws on the current axes and returns a dict)
    dendrogram_data = dendrogram(linkage_matrix, orientation="left", labels=book_titles)
    # hide the x-axis ticks; tick_params expects booleans, not 'off'
    plt.tick_params(axis='x',
                    which='both',
                    bottom=False,
                    top=False,
                    labelbottom=False)
    plt.tight_layout()
    plt.savefig('ward_hierachical_clusters.png', dpi=200)


# build ward's linkage matrix
linkage_matrix = ward_hierarchical_clustering(feature_matrix)

# plot the dendrogram
plot_hierarchical_clusters(linkage_matrix=linkage_matrix,
                           book_data=book_data,
                           figure_size=(8, 10))
```
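
The "key features" of a K-means cluster come from `cluster_centers_.argsort()[:, ::-1]`: each centroid is a vector of feature weights, and sorting its indices by descending weight gives the terms that characterize the cluster. As a dependency-free sketch of that one step, using a hypothetical helper `top_features` and made-up centroids and feature names:

```python
def top_features(centroid, feature_names, topn=3):
    # indices of the centroid sorted by descending weight,
    # the pure-Python counterpart of argsort()[::-1]
    order = sorted(range(len(centroid)), key=lambda i: centroid[i], reverse=True)
    return [feature_names[i] for i in order[:topn]]


# hypothetical vocabulary and two cluster centers
feature_names = ["history", "love", "python", "travel"]
centroids = [
    [0.05, 0.10, 0.80, 0.02],
    [0.60, 0.30, 0.01, 0.40],
]

key_features = [top_features(c, feature_names, topn=2) for c in centroids]
print(key_features)
```

This only gives meaningful terms when the centroid lives in the same space as the feature names, which is the case for K-means fitted on the tf-idf matrix.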