Docker container network configuration

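Namespace creation in the Linux kernel

The ip netns command

The ip netns command handles all operations on Network Namespaces. It is part of the iproute package, which most distributions install by default; install it yourself if it is missing.

Note: changing network configuration with ip netns requires root privileges (for example via sudo).

Run ip netns help to list the available subcommands: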

[root@localhost ~]# ip netns help

Usage: ip netns list

ip netns add NAME

ip netns attach NAME PID

ip netns set NAME NETNSID

ip [-all] netns delete [NAME]

ip netns identify [PID]

ip netns pids NAME

ip [-all] netns exec [NAME] cmd ...

ip netns monitor

ip netns list-id

NETNSID := auto | POSITIVE-INT

By default, a Linux system has no named Network Namespace, so ip netns list prints nothing.

Creating a Network Namespace

Create a namespace named na:

[root@localhost ~]# ip netns list

[root@localhost ~]# ip netns add na

[root@localhost ~]# ip netns list

na

A newly created Network Namespace appears under the /var/run/netns/ directory. If a namespace with the same name already exists, the command fails with Cannot create namespace file "/var/run/netns/na": File exists.

[root@localhost ~]# ip netns add na

Cannot create namespace file "/var/run/netns/na": File exists

Each Network Namespace has its own independent network interfaces, routing table, ARP table, iptables rules, and other network-related resources.
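Because ip netns add fails when the name is already taken, a script may want to check first. The following is a minimal sketch, not a definitive implementation: the helper names netns_exists and ensure_netns and the NETNS_DIR variable are hypothetical, and the check relies only on the /var/run/netns/ directory mentioned above.

```shell
#!/bin/sh
# NETNS_DIR is an assumption for illustration; it defaults to the
# directory where ip netns keeps named namespaces.
NETNS_DIR="${NETNS_DIR:-/var/run/netns}"

# Hypothetical helper: succeed if a namespace file with this name exists.
netns_exists() {
    [ -e "$NETNS_DIR/$1" ]
}

# Hypothetical helper: create the namespace only if it is missing.
# The ip netns add call requires root privileges.
ensure_netns() {
    if netns_exists "$1"; then
        echo "namespace $1 already exists"
    else
        ip netns add "$1"
    fi
}
```

Calling ensure_netns na twice would create na on the first call and only print a message on the second, instead of failing with File exists.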

Deleting a Network Namespace

[root@localhost ~]# ip netns list

na1

na

[root@localhost ~]# ip netns del na1

[root@localhost ~]# ip netns list

na

Operating on a Network Namespace

The ip netns exec subcommand runs a command inside the given Network Namespace.

View the interfaces of the newly created Network Namespace:

[root@localhost ~]# ip netns exec na ip addr

1: lo: mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

As shown, a new Network Namespace contains a lo loopback interface by default, and that interface is down. Pinging the loopback address at this point fails with Network is unreachable:

[root@localhost ~]# ip netns exec na ping 127.0.0.1

connect: Network is unreachable

Bring up the lo loopback interface with the following command:

[root@localhost ~]# ip netns exec na ip link set lo up

[root@localhost ~]# ip netns exec na ip addr

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

[root@localhost ~]# ip netns exec na ping 127.0.0.1

PING 127.0.0.1 (127.0.0.1) 56(84) bytes of data.

64 bytes from 127.0.0.1: icmp_seq=1 ttl=64 time=0.018 ms

64 bytes from 127.0.0.1: icmp_seq=2 ttl=64 time=0.019 ms

64 bytes from 127.0.0.1: icmp_seq=3 ttl=64 time=0.052 ms

^C

--- 127.0.0.1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 55ms

rtt min/avg/max/mdev = 0.018/0.029/0.052/0.017 ms

[root@localhost ~]#

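Moving devices

Devices (such as veth) can be moved between Network Namespaces. Because a device can belong to only one Network Namespace at a time, it is no longer visible in its original Network Namespace after the move.

veth devices are movable, whereas many other devices (such as lo, vxlan, ppp, and bridge) cannot be moved.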

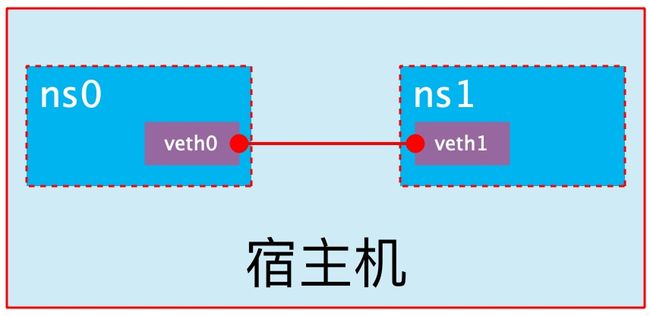

veth pair

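veth pair stands for Virtual Ethernet Pair. It is a pair of connected ports: every packet that enters one end comes out of the other end, and vice versa.

veth pairs were introduced so that different Network Namespaces can communicate directly; a veth pair can connect two Network Namespaces to each other.

Creating a veth pair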

[root@localhost ~]# ip link add type veth

[root@localhost ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:be:41:85 brd ff:ff:ff:ff:ff:ff

inet 192.168.244.144/24 brd 192.168.244.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet6 fe80::c8bb:96e4:534:b9f/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:e6:a4:c6:45 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: veth0@veth1: mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether d2:eb:f7:8c:e8:de brd ff:ff:ff:ff:ff:ff

5: veth1@veth0: mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether ae:b7:90:35:f7:6a brd ff:ff:ff:ff:ff:ff

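As shown, the system now has a new veth pair that connects the two virtual interfaces veth0 and veth1. At this point both ends of the pair are still down.

Communication between Network Namespaces

Next we use the veth pair to let two Network Namespaces communicate. We already created a Network Namespace named na; now create another one named na1: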

[root@localhost ~]# ip netns add na1

[root@localhost ~]# ip netns list

na1

na

Then move veth0 into na and veth1 into na1:

[root@localhost ~]# ip link set veth0 netns na

[root@localhost ~]# ip link set veth1 netns na1

[root@localhost ~]#

Next, assign an IP address to each end of the veth pair and bring both ends up:

[root@localhost ~]# ip netns exec na ip link set veth0 up

[root@localhost ~]# ip netns exec na ip addr add 192.168.244.161/24 dev veth0

[root@localhost ~]# ip netns exec na ip link set lo up

[root@localhost ~]# ip netns exec na1 ip link set veth1 up

[root@localhost ~]# ip netns exec na1 ip addr add 192.168.244.162/24 dev veth1

Check the state of the veth pair:

[root@localhost ~]# ip netns exec na ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

4: veth0@if5: mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether d2:eb:f7:8c:e8:de brd ff:ff:ff:ff:ff:ff link-netns na1

inet 192.168.161.0/24 scope global veth0

valid_lft forever preferred_lft forever

inet6 fe80::d0eb:f7ff:fe8c:e8de/64 scope link

valid_lft forever preferred_lft forever

[root@localhost ~]# ip netns exec na1 ip a

1: lo: mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

5: veth1@if4: mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ae:b7:90:35:f7:6a brd ff:ff:ff:ff:ff:ff link-netns na

inet 192.168.162.0/24 scope global veth1

valid_lft forever preferred_lft forever

inet6 fe80::acb7:90ff:fe35:f76a/64 scope link

valid_lft forever preferred_lft forever

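The output above shows that the addresses were mistyped (192.168.161.0/24 and 192.168.162.0/24 instead of 192.168.244.161/24 and 192.168.244.162/24), so delete them and configure the addresses again: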

[root@localhost ~]# ip netns exec na1 ip addr del 192.168.162/24 dev veth1

[root@localhost ~]# ip netns exec na ip addr del 192.168.161/24 dev veth0

[root@localhost ~]# ip netns exec na ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

4: veth0@if5: mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether d2:eb:f7:8c:e8:de brd ff:ff:ff:ff:ff:ff link-netns na1

inet6 fe80::d0eb:f7ff:fe8c:e8de/64 scope link

valid_lft forever preferred_lft forever

[root@localhost ~]# ip netns exec na1 ip a

1: lo: mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

5: veth1@if4: mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ae:b7:90:35:f7:6a brd ff:ff:ff:ff:ff:ff link-netns na

inet6 fe80::acb7:90ff:fe35:f76a/64 scope link

valid_lft forever preferred_lft forever

Add the IP addresses again:

[root@localhost ~]# ip netns exec na ip addr add 192.168.244.161/24 dev veth0

[root@localhost ~]# ip netns exec na1 ip addr add 192.168.244.162/24 dev veth1

[root@localhost ~]# ip netns exec na ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

4: veth0@if5: mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether d2:eb:f7:8c:e8:de brd ff:ff:ff:ff:ff:ff link-netns na1

inet 192.168.244.161/24 scope global veth0

valid_lft forever preferred_lft forever

inet6 fe80::d0eb:f7ff:fe8c:e8de/64 scope link

valid_lft forever preferred_lft forever

[root@localhost ~]# ip netns exec na1 ip a

1: lo: mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

5: veth1@if4: mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ae:b7:90:35:f7:6a brd ff:ff:ff:ff:ff:ff link-netns na

inet 192.168.244.162/24 scope global veth1

valid_lft forever preferred_lft forever

inet6 fe80::acb7:90ff:fe35:f76a/64 scope link

valid_lft forever preferred_lft forever

The veth pair is now up and each end has its IP address. Try to reach the address in na1 from na:

[root@localhost ~]# ip netns exec na ping 192.168.244.162

PING 192.168.244.162 (192.168.244.162) 56(84) bytes of data.

64 bytes from 192.168.244.162: icmp_seq=1 ttl=64 time=0.024 ms

64 bytes from 192.168.244.162: icmp_seq=2 ttl=64 time=0.025 ms

64 bytes from 192.168.244.162: icmp_seq=3 ttl=64 time=0.047 ms

^C

--- 192.168.244.162 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 28ms

rtt min/avg/max/mdev = 0.024/0.032/0.047/0.010 ms

[root@localhost ~]#

As shown, the veth pair lets the two Network Namespaces communicate over the network.
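The whole procedure can be condensed into a short script. This is a sketch under stated assumptions rather than a definitive implementation: the helper names run and link_netns and the DRY_RUN variable are hypothetical, both namespaces are assumed to exist already, and the pair is created with explicit names through ip link add ... type veth peer name ... instead of relying on the default veth0/veth1 naming.

```shell
#!/bin/sh
# DRY_RUN is an assumption for illustration. It defaults to 1, so the
# commands are only printed; set DRY_RUN=0 and run as root to execute.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# link_netns NS_A NS_B CIDR_A CIDR_B  (hypothetical helper)
# Connects two existing namespaces with one veth pair.
link_netns() {
    ns_a=$1 ns_b=$2 cidr_a=$3 cidr_b=$4
    # Create the pair and name both ends explicitly.
    run ip link add veth0 type veth peer name veth1
    # Move one end into each namespace.
    run ip link set veth0 netns "$ns_a"
    run ip link set veth1 netns "$ns_b"
    # Assign the addresses, then bring both ends up.
    run ip netns exec "$ns_a" ip addr add "$cidr_a" dev veth0
    run ip netns exec "$ns_b" ip addr add "$cidr_b" dev veth1
    run ip netns exec "$ns_a" ip link set veth0 up
    run ip netns exec "$ns_b" ip link set veth1 up
}
```

In dry-run mode, link_netns na na1 192.168.244.161/24 192.168.244.162/24 prints the seven commands in order, which makes it easy to review the addresses before anything is applied.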

Renaming a veth device

The device must be brought down before it can be renamed:

[root@localhost ~]# ip netns exec na ip link set veth0 down

[root@localhost ~]# ip netns exec na ip link set dev veth0 name eth0

[root@localhost ~]# ip netns exec na ifconfig -a

eth0: flags=4098 mtu 1500

inet 192.168.244.161 netmask 255.255.255.0 broadcast 0.0.0.0

ether d2:eb:f7:8c:e8:de txqueuelen 1000 (Ethernet)

RX packets 24 bytes 1832 (1.7 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 24 bytes 1832 (1.7 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10

loop txqueuelen 1000 (Local Loopback)

RX packets 18 bytes 1512 (1.4 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 18 bytes 1512 (1.4 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@localhost ~]# ip netns exec na ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

4: eth0@if5: mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether d2:eb:f7:8c:e8:de brd ff:ff:ff:ff:ff:ff link-netns na1

inet 192.168.244.161/24 scope global eth0

valid_lft forever preferred_lft forever

Configuring the four network modes

bridge mode configuration (the default mode)

[root@localhost ~]# docker run -it --name y1 --rm 5d0da3dc9764

[root@142f4392c416 /]# ifconfig

eth0: flags=4163 mtu 1500

inet 172.17.0.2 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)

RX packets 4288 bytes 12825200 (12.2 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3825 bytes 211570 (206.6 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

# Passing --network bridge when creating a container has the same effect as omitting the --network option

[root@localhost ~]# docker run -it --name y1 --network bridge --rm 5d0da3dc9764

[root@2f013241a2e0 /]#

[root@2f013241a2e0 /]# ifconfig

eth0: flags=4163 mtu 1500

inet 172.17.0.2 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)

RX packets 3669 bytes 12791670 (12.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3364 bytes 186676 (182.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

container mode configuration

Start the first container:

[root@localhost ~]# docker run -it --name t1 --rm centos

[root@4f3e9bed6a1c /]# ifconfig

eth0: flags=4163 mtu 1500

inet 172.17.0.2 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)

RX packets 2393 bytes 12722766 (12.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2230 bytes 125452 (122.5 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Start the second container:

[root@localhost ~]# docker run -it --name t2 --rm centos

[root@375b574b007a /]# ifconfig

eth0: flags=4163 mtu 1500

inet 172.17.0.3 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ac:11:00:03 txqueuelen 0 (Ethernet)

RX packets 3494 bytes 12782136 (12.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3240 bytes 180060 (175.8 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

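As shown, container t2 has the IP address 172.17.0.3, which differs from the first container's address, so the two containers do not share a network. If we start the second container differently, t2 gets the same IP as t1: the two containers share the network (and the IP) but not the file system.

Exit container t2 and create it again: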

[root@localhost ~]# docker run -it --name t2 --rm --network container:t1 centos

[root@4f3e9bed6a1c /]# ifconfig

eth0: flags=4163 mtu 1500

inet 172.17.0.2 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)

RX packets 4983 bytes 25455898 (24.2 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 4615 bytes 259286 (253.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

As shown, its IP is also 172.17.0.2.

host mode configuration

Specify host mode directly when starting the container:

[root@localhost ~]# docker run -it --name t1 --network host --rm centos

[root@localhost /]# ifconfig

docker0: flags=4099 mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

inet6 fe80::42:e6ff:fea4:c645 prefixlen 64 scopeid 0x20

ether 02:42:e6:a4:c6:45 txqueuelen 0 (Ethernet)

RX packets 13576 bytes 565803 (552.5 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 14948 bytes 53378486 (50.9 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens160: flags=4163 mtu 1500

inet 192.168.244.144 netmask 255.255.255.0 broadcast 192.168.244.255

inet6 fe80::c8bb:96e4:534:b9f prefixlen 64 scopeid 0x20

ether 00:0c:29:be:41:85 txqueuelen 1000 (Ethernet)

RX packets 399905 bytes 171226799 (163.2 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 563731 bytes 104542604 (99.6 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10

loop txqueuelen 1000 (Local Loopback)

RX packets 24 bytes 1628 (1.5 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 24 bytes 1628 (1.5 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

If we now start an HTTP site inside this container, it can be reached from a browser directly at the host's IP address.

If ifconfig is missing in the container, install the net-tools package first:

yum -y install net-tools

Common container operations

View the container's hostname

[root@localhost ~]# docker run -it --name t1 --network bridge --rm centos:latest

[root@430b810c5319 /]# hostname

430b810c5319

Inject a hostname when the container starts

[root@localhost ~]# docker run -it --name t1 --network bridge --hostname wy --rm centos:latest

[root@wy /]# hostname

wy

[root@wy /]# cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.2 wy

[root@wy /]# cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 114.114.114.114

[root@wy /]# ping www.baidu.com

PING www.a.shifen.com (36.152.44.95) 56(84) bytes of data.

64 bytes from 36.152.44.95 (36.152.44.95): icmp_seq=1 ttl=127 time=42.5 ms

^C

--- www.a.shifen.com ping statistics ---

2 packets transmitted, 1 received, 50% packet loss, time 1002ms

rtt min/avg/max/mdev = 42.511/42.511/42.511/0.000 ms

[root@wy /]#

Manually specify the DNS server for the container

[root@localhost ~]# docker run -it --name y1 --network bridge --hostname wy --dns 114.114.114.114 --rm centos:latest

[root@wy /]# cat /etc/resolv.conf

nameserver 114.114.114.114

[root@wy /]# nslookup -type=a www.baidu.com

bash: nslookup: command not found

[root@wy /]#

Manually inject a hostname-to-IP mapping into /etc/hosts

[root@localhost ~]# docker run -it --name y1 --network bridge --hostname wy --add-host www.a.com:1.1.1.1 --rm centos:latest

[root@wy /]# cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

1.1.1.1 www.a.com

172.17.0.2 wy

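Publishing container ports

docker run has a -p option that maps an application port in the container to the host, so that external hosts can reach the application in the container through a port on the host.

The -p option can be given multiple times. A published port is only reachable if the container is actually listening on it.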

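Forms of the -p option:

- -p <containerPort>

- Maps the given container port to a dynamic port on all host addresses

- -p <hostPort>:<containerPort>

- Maps the container port to the given host port

- -p <ip>::<containerPort>

- Maps the given container port to a dynamic port on the given host IP

- -p <ip>:<hostPort>:<containerPort>

- Maps the given container port to the given port on the given host IP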

A dynamic port is a randomly chosen port; use the docker port command to see the actual mapping.
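To make the forms concrete, the sketch below splits a -p value into its three fields. It is only an illustration: parse_publish is a hypothetical helper, not part of Docker, and it handles just the four IPv4 forms containerPort, hostPort:containerPort, ip::containerPort, and ip:hostPort:containerPort.

```shell
#!/bin/sh
# Hypothetical helper: print "ip hostPort containerPort" for a -p value.
# An empty field is printed as "-"; an empty host port means that
# Docker picks a dynamic port.
parse_publish() {
    spec=$1
    # Count the colons to tell the forms apart.
    colons=$(printf '%s' "$spec" | tr -cd ':' | wc -c | tr -d ' ')
    case $colons in
        0) ip=; host=; cont=$spec ;;
        1) ip=; host=${spec%%:*}; cont=${spec#*:} ;;
        2) ip=${spec%%:*}; rest=${spec#*:}
           host=${rest%%:*}; cont=${rest#*:} ;;
        *) echo "unsupported spec: $spec" >&2; return 1 ;;
    esac
    echo "${ip:--} ${host:--} $cont"
}
```

For example, parse_publish 192.168.244.144::80 prints 192.168.244.144 - 80: a fixed host IP, a dynamic host port, and container port 80.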

[root@localhost ~]# docker run -it --name web --rm -p 80 nginx

# Open another terminal to check

[root@localhost ~]# docker port web

80/tcp -> 0.0.0.0:49163

80/tcp -> :::49163

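This shows that port 80 of the container is published on port 49163 of the host. Access that port on the host to check whether the site in the container is reachable: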

[root@localhost ~]# curl http://127.0.0.1:49163

Welcome to nginx!

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.

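The iptables firewall rules are generated automatically when the container is created and removed automatically when the container is deleted.

Map the container port to a random port on a specific IP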

[root@localhost ~]# docker run -it --name web --rm -p 192.168.244.144::80 centos:latest

[root@60800c971b48 /]#

[root@localhost ~]# docker port web

80/tcp -> 192.168.244.144:49157

[root@localhost ~]#

Map the container port to a specific port on the host

[root@localhost ~]# docker run -it --name web --rm -p 80:80 centos:latest

[root@localhost ~]# docker port web

80/tcp -> 0.0.0.0:80

80/tcp -> :::80

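Customizing the network properties of the docker0 bridge

Official documentation:

https://docs.docker.com/network/bridge/

To customize the network properties of the docker0 bridge, edit the /etc/docker/daemon.json configuration file: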

[root@localhost ~]# vi /etc/docker/daemon.json

{

"bip": "192.168.1.5/24",

"fixed-cidr": "192.168.1.5/25",

"fixed-cidr-v6": "2001:db8::/64",

"mtu": 1500,

"default-gateway": "10.20.1.1",

"default-gateway-v6": "2001:db8:abcd::89",

"dns": ["10.20.1.2","10.20.1.3"]

}

The core option is bip (bridge IP), which sets the IP address of the docker0 bridge itself; the other options can be derived from this address.
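Since bip is the only option that has to be set, a minimal configuration can be generated with a few lines of shell. This is a sketch, not a recommended procedure: write_bip_config is a hypothetical helper, and the target path is a parameter so that the file can be reviewed before it replaces /etc/docker/daemon.json.

```shell
#!/bin/sh
# Hypothetical helper: write a minimal daemon.json that sets only bip.
# Usage: write_bip_config PATH BIP_CIDR
write_bip_config() {
    path=$1 bip=$2
    cat > "$path" <<EOF
{
  "bip": "$bip"
}
EOF
}
```

For example, write_bip_config ./daemon.json 192.168.1.5/24 produces a three-line file. After copying it to /etc/docker/daemon.json, the docker service has to be restarted (for instance with systemctl restart docker) before docker0 picks up the new address.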

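Docker remote connection

Docker uses a client/server architecture. By default the dockerd daemon listens only on a Unix socket (/var/run/docker.sock). To use a TCP socket, edit /etc/docker/daemon.json, add the following entry, and restart the docker service: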

"hosts": ["tcp://0.0.0.0:2375", "unix:///var/run/docker.sock"]

On the client, pass the -H|--host option to docker to specify which host's Docker containers to control:

docker -H 192.168.244.145:2375 ps

Creating a custom bridge with Docker

Create an additional custom bridge, distinct from docker0:

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

413997d70707 bridge bridge local

0a04824fc9b6 host host local

4dcb8fbdb599 none null local

[root@localhost ~]# docker network create -d bridge --subnet "192.168.2.0/24" --gateway "192.168.2.1" br0

b340ce91fb7c569935ca495f1dc30b8c37204b2a8296c56a29253a067f5dedc9

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

b340ce91fb7c br0 bridge local

413997d70707 bridge bridge local

0a04824fc9b6 host host local

4dcb8fbdb599 none null local

Create a container on the new custom bridge:

[root@localhost ~]# docker run -it --name b1 --network br0 busybox

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:C0:A8:02:02

inet addr:192.168.2.2 Bcast:192.168.2.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:11 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:926 (926.0 B) TX bytes:0 (0.0 B)

Create another container that uses the default bridge:

[root@localhost ~]# docker run --name b2 -it busybox

/ # ls

bin dev etc home proc root sys tmp usr var

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:0A:00:00:02

inet addr:10.0.0.2 Bcast:10.0.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:6 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:508 (508.0 B) TX bytes:0 (0.0 B)
