Building a Bidirectional LSTM (Bi-LSTM) Text Sentiment Classifier with PyTorch: A Worked Example

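This code came from a senior classmate. The structure is clear and I like it, so I plan to follow this layout in future projects and am writing it down here.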

First, set up the environment:

matplotlib==3.4.2

numpy==1.20.3

pandas==1.3.0

sklearn==0.0

spacy==3.1.2

torch==1.8.0

TorchSnooper==0.8

tqdm==4.61.0

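The versions do not have to match exactly; if something breaks, fix it as the errors come up. Note that dataset.py below also imports torchtext, which is missing from this list.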
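
To see which of the pinned packages are already installed, and at what version, a small check like the following may help. It is only a sketch: the `pins` dictionary copies a few entries from the list above, `installed_versions` is a made-up helper name, and it relies on `importlib.metadata` from the standard library (Python 3.8+).

```python
from importlib import metadata


def installed_versions(pins):
    """Return {package: (pinned, installed_or_None)} for each pinned package."""
    report = {}
    for name, pinned in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed in this environment
        report[name] = (pinned, installed)
    return report


if __name__ == "__main__":
    # A few of the pins from the list above
    pins = {"numpy": "1.20.3", "pandas": "1.3.0", "torch": "1.8.0", "tqdm": "4.61.0"}
    for name, (pinned, installed) in installed_versions(pins).items():
        status = "ok" if installed == pinned else "differs"
        print(f"{name}: pinned {pinned}, installed {installed} ({status})")
```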

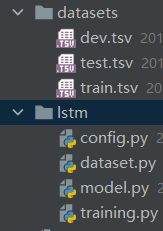

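Dataset

https://download.csdn.net/download/qq_52785473/79483740

I uploaded it to my CSDN resources and set the download cost to 0 points.

After downloading, create a datasets folder and extract the files into it, or change the path in the code instead.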
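
The loading code further down reads each file as tab-separated text with no header row, with the sentence in the first column and the label in the second. Assuming that layout, and assuming the labels are integers, a quick standard-library check of a downloaded file could look like this (`peek_tsv` is a hypothetical helper, and the path in the usage comment assumes the datasets folder described above):

```python
import csv


def peek_tsv(path, n=3):
    """Return the first n (sentence, label) rows of a headerless TSV file."""
    rows = []
    with open(path, newline="", encoding="utf-8") as f:
        reader = csv.reader(f, delimiter="\t", quoting=csv.QUOTE_NONE)
        for row in reader:
            if len(row) < 2:
                continue  # skip blank or malformed lines
            rows.append((row[0], int(row[1])))
            if len(rows) == n:
                break
    return rows


# Usage (assumes the archive was extracted into ./datasets):
# for sentence, label in peek_tsv("./datasets/train.tsv"):
#     print(label, sentence)
```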

First, create the configuration file config.py:

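    class my_config():
        max_length = 20       # every sentence is truncated (or padded) to this many tokens
        batch_size = 64       # number of examples per batch
        embedding_size = 256  # word-embedding dimension
        hidden_size = 128     # LSTM hidden-state size
        num_layers = 2        # number of stacked LSTM layers
        dropout = 0.5         # dropout probability
        output_size = 2       # number of output classes
        lr = 0.001            # learning rate
        epoch = 5             # number of training epochs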
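
These values determine the tensor sizes later on. As a rough worked example in plain Python (no PyTorch needed), the classifier input is the concatenation of one final hidden state per layer and per direction, so its width is num_layers * hidden_size * 2. The class `demo_config` below is a stand-in that copies three of the values above, and `classifier_input_width` is a name invented for this sketch. It also shows that assigning to an instance, as training.py does with output_size, leaves the class defaults untouched.

```python
class demo_config:
    # Stand-in copying values from config.py above (hypothetical name)
    hidden_size = 128
    num_layers = 2
    output_size = 2


def classifier_input_width(cfg, bidirectional=True):
    """Width of the vector formed by concatenating every final hidden state."""
    directions = 2 if bidirectional else 1
    return cfg.num_layers * cfg.hidden_size * directions


cfg = demo_config()
cfg.output_size = 3  # instance-level override; the class attribute stays 2

print(classifier_input_width(cfg))               # 512
print(cfg.output_size, demo_config.output_size)  # 3 2
```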

Next, create dataset.py:

    import os

    import pandas as pd
    from tqdm import tqdm

    # Field, Example, Dataset and BucketIterator belong to the old torchtext API.
    # In torchtext 0.9 to 0.11 (0.9 is the release paired with torch 1.8) they
    # live under torchtext.legacy; they were removed entirely in 0.12.
    try:
        from torchtext.legacy import data
    except ImportError:
        from torchtext import data


    class mydata(object):
        def __init__(self):
            self.data_dir = './datasets'
            self.n_class = 2

        def _generator(self, filename):  # yield each row's sentence and label
            path = os.path.join(self.data_dir, filename)
            df = pd.read_csv(path, sep='\t', header=None)
            for index, line in df.iterrows():
                sentence = line[0]
                label = line[1]
                yield sentence, label

        def load_train_data(self):  # load the training split
            return self._generator('train.tsv')

        def load_dev_data(self):
            return self._generator('dev.tsv')

        def load_test_data(self):
            return self._generator('test.tsv')


    class Dataset(object):
        def __init__(self, dataset: mydata, config):
            self.dataset = dataset
            self.config = config  # configuration object

        def load_data(self):
            # Split on whitespace; str.split() already drops empty tokens
            tokenizer = lambda sentence: sentence.split()

            # Define the fields. fix_length pads or truncates every sentence
            # to max_length tokens.
            TEXT = data.Field(sequential=True, tokenize=tokenizer, lower=True,
                              fix_length=self.config.max_length)
            LABEL = data.Field(sequential=False, use_vocab=False)

            # "text" and "label" become attributes on every example and batch
            # (batch.text, batch.label), much like declaring a record type
            datafield = [("text", TEXT), ("label", LABEL)]

            # Load the raw data
            train_gen = self.dataset.load_train_data()
            dev_gen = self.dataset.load_dev_data()
            test_gen = self.dataset.load_test_data()

            # Convert each (sentence, label) pair into an Example object
            train_example = [data.Example.fromlist(it, datafield) for it in tqdm(train_gen)]
            dev_example = [data.Example.fromlist(it, datafield) for it in tqdm(dev_gen)]
            test_example = [data.Example.fromlist(it, datafield) for it in tqdm(test_gen)]

            # Wrap the examples in Dataset objects (examples and fields are passed in)
            train_data = data.Dataset(train_example, datafield)
            dev_data = data.Dataset(dev_example, datafield)
            test_data = data.Dataset(test_example, datafield)

            # Build the vocabulary from the training set only; by default two
            # special tokens, <unk> and <pad>, are added
            TEXT.build_vocab(train_data)

            # Keep the vocabulary; its length is the vocabulary size
            self.vocab = TEXT.vocab

            # Wrap the datasets in iterators that produce batches, with sentence
            # length as the sort key; training code simply iterates over them
            self.train_iterator = data.BucketIterator(
                train_data,
                batch_size=self.config.batch_size,
                sort_key=lambda x: len(x.text),
                shuffle=False
            )
            self.dev_iterator, self.test_iterator = data.BucketIterator.splits(
                (dev_data, test_data),
                batch_size=self.config.batch_size,
                sort_key=lambda x: len(x.text),
                repeat=False
            )

            print(f"load {len(train_data)} training examples")
            print(f"load {len(dev_data)} dev examples")
            print(f"load {len(test_data)} test examples")
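
For readers without the old torchtext API at hand, the text pipeline above can be approximated in plain Python: split on whitespace, lowercase, build a vocabulary from the training sentences with `<unk>` and `<pad>` in front, then truncate or pad each sentence to a fixed length and map tokens to ids. This is a simplified sketch of that idea, not torchtext's implementation; `tokenize`, `build_vocab` and `encode` are names invented here, and the two training sentences are made up.

```python
from collections import Counter

UNK, PAD = "<unk>", "<pad>"


def tokenize(sentence):
    """Whitespace split followed by lowercasing."""
    return [tok.lower() for tok in sentence.split()]


def build_vocab(sentences):
    """Map tokens to ids: specials first, then tokens by descending frequency."""
    counts = Counter(tok for s in sentences for tok in tokenize(s))
    # Sort by frequency (high to low), breaking ties alphabetically
    ordered = sorted(counts, key=lambda tok: (-counts[tok], tok))
    return {tok: i for i, tok in enumerate([UNK, PAD] + ordered)}


def encode(sentence, vocab, max_length):
    """Truncate or right-pad to max_length, then look up ids (unknown -> <unk>)."""
    tokens = tokenize(sentence)[:max_length]
    tokens += [PAD] * (max_length - len(tokens))
    return [vocab.get(tok, vocab[UNK]) for tok in tokens]


train = ["A good movie", "a bad movie"]
vocab = build_vocab(train)
print(vocab)  # {'<unk>': 0, '<pad>': 1, 'a': 2, 'movie': 3, 'bad': 4, 'good': 5}
print(encode("A great movie", vocab, max_length=5))  # [2, 0, 3, 1, 1]
```

The word "great" never appears in the training sentences, so it maps to the `<unk>` id, and the two trailing ids are padding.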

Next, create model.py:

    import torch
    import torch.nn as nn

    from config import my_config


    class myLSTM(nn.Module):
        def __init__(self, vocab_size, config: my_config):
            super(myLSTM, self).__init__()
            self.vocab_size = vocab_size
            self.config = config
            # Embedding layer: maps token ids to word vectors
            self.embeddings = nn.Embedding(vocab_size, self.config.embedding_size)
            self.lstm = nn.LSTM(
                input_size=self.config.embedding_size,  # input is the word vector
                hidden_size=self.config.hidden_size,    # hidden-state size
                num_layers=self.config.num_layers,      # number of stacked LSTM layers
                dropout=self.config.dropout,            # dropout between stacked layers (not a forget-gate setting)
                bidirectional=True                      # bidirectional LSTM
            )
            self.dropout = nn.Dropout(self.config.dropout)
            self.fc = nn.Linear(
                self.config.num_layers * self.config.hidden_size * 2,  # *2 for the two directions
                self.config.output_size
            )

        def forward(self, x):
            # x: (seq_len, batch) token ids; neither the Field nor the LSTM uses batch_first
            embedded = self.embeddings(x)
            lstm_out, (h_n, c_n) = self.lstm(embedded)
            # h_n: (num_layers * 2, batch, hidden_size)
            feature = self.dropout(h_n)
            # Concatenate the final hidden states of every layer and direction
            # to produce the result, instead of using lstm_out
            feature_map = torch.cat([feature[i, :, :] for i in range(feature.shape[0])], dim=-1)
            out = self.fc(feature_map)
            # Return raw logits: nn.CrossEntropyLoss in training.py applies
            # log-softmax itself, so a softmax here would be applied twice.
            # Use torch.softmax(out, dim=-1) when probabilities are needed.
            return out
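
The shapes involved in the concatenation can be checked with a few lines of PyTorch. The sketch below uses small made-up sizes (not the values from config.py) and a random batch in (seq_len, batch) layout. It confirms that h_n has one slice per layer and direction, so that concatenating the slices gives a vector of width num_layers * 2 * hidden_size.

```python
import torch
import torch.nn as nn

# Small illustrative sizes, chosen only to keep the example fast
vocab_size, embedding_size, hidden_size, num_layers = 50, 8, 4, 2
seq_len, batch_size = 6, 3

embeddings = nn.Embedding(vocab_size, embedding_size)
lstm = nn.LSTM(input_size=embedding_size, hidden_size=hidden_size,
               num_layers=num_layers, bidirectional=True)

x = torch.randint(0, vocab_size, (seq_len, batch_size))  # (seq_len, batch) token ids
lstm_out, (h_n, c_n) = lstm(embeddings(x))

# One final hidden state per layer and per direction
print(h_n.shape)       # torch.Size([4, 3, 4])
# Per-step outputs of the last layer, both directions concatenated
print(lstm_out.shape)  # torch.Size([6, 3, 8])

# Same concatenation as in forward(): join all slices along the feature axis
feature_map = torch.cat([h_n[i] for i in range(h_n.shape[0])], dim=-1)
print(feature_map.shape)  # torch.Size([3, 16])
```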

Finally, create training.py:

    from dataset import mydata, Dataset
    from model import myLSTM
    from config import my_config
    import torch
    import torch.nn as nn
    import numpy as np
    from sklearn.metrics import accuracy_score
    import matplotlib.pyplot as plt

    # Use the GPU when one is available; the model and every batch go to this device
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')


    def run_epoch(model, train_iterator, dev_iterator, optimizer, loss_fn):  # train for one epoch
        '''
        :param model: the model
        :param train_iterator: iterator over the training data
        :param dev_iterator: iterator over the validation data
        :param optimizer: the optimizer
        :param loss_fn: the loss function
        '''
        model.train()  # enable dropout
        losses = []
        for i, batch in enumerate(train_iterator):
            inputs = batch.text.to(device)
            label = batch.label.long().to(device)
            optimizer.zero_grad()  # clear gradients left over from the previous batch
            pred = model(inputs)  # forward pass
            loss = loss_fn(pred, label)  # compute the loss
            loss.backward()  # backpropagate the error
            losses.append(loss.item())  # record the loss
            optimizer.step()  # take one optimization step
            # if i % 30 == 0:  # every 30 batches, report loss and accuracy
            #     avg_train_loss = np.mean(losses)
            #     print(f'iter:{i + 1},avg_train_loss:{avg_train_loss:.4f}')
            #     losses = []
            #     val_acc = evaluate_model(model, dev_iterator)
            #     print('val_acc:{:.4f}'.format(val_acc))
            #     model.train()


    def evaluate_model(model, dev_iterator):  # evaluate the model
        '''
        :param model: the model
        :param dev_iterator: the data to evaluate on
        :return: the score (accuracy)
        '''
        model.eval()  # disable dropout during evaluation
        all_pred = []
        all_y = []
        with torch.no_grad():  # no gradients are needed here
            for i, batch in enumerate(dev_iterator):
                inputs = batch.text.to(device)
                label = batch.label.long().to(device)
                y_pred = model(inputs)  # forward pass
                predicted = torch.max(y_pred, 1)[1]  # pick the highest-scoring class
                all_pred.extend(predicted.cpu().numpy())
                all_y.extend(label.cpu().numpy())
        score = accuracy_score(all_y, np.array(all_pred).flatten())  # accuracy
        return score


    if __name__ == '__main__':
        config = my_config()  # instantiate the configuration
        data_class = mydata()  # instantiate the raw-data class
        config.output_size = data_class.n_class
        dataset = Dataset(data_class, config)  # instantiate the preprocessing class
        dataset.load_data()  # run the preprocessing
        train_iterator = dataset.train_iterator  # the prepared data iterators
        dev_iterator = dataset.dev_iterator
        test_iterator = dataset.test_iterator
        vocab_size = len(dataset.vocab)  # vocabulary size

        # Build the model and move it to the same device as the batches
        model = myLSTM(vocab_size, config).to(device)
        optimizer = torch.optim.Adam(model.parameters(), lr=config.lr)  # optimizer
        loss_fn = nn.CrossEntropyLoss()  # cross-entropy loss

        y = []
        for i in range(config.epoch):
            print(f'epoch:{i + 1}')
            run_epoch(model, train_iterator, dev_iterator, optimizer, loss_fn)

            # Evaluate the model after every epoch
            train_acc = evaluate_model(model, train_iterator)
            dev_acc = evaluate_model(model, dev_iterator)
            test_acc = evaluate_model(model, test_iterator)
            print('#' * 20)
            print('train_acc:{:.4f}'.format(train_acc))
            print('dev_acc:{:.4f}'.format(dev_acc))
            print('test_acc:{:.4f}'.format(test_acc))
            y.append(test_acc)

        # After training, plot test accuracy per epoch
        x = [i for i in range(len(y))]
        fig = plt.figure()
        plt.plot(x, y)
        plt.show()
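
One detail of this training setup is easy to miss: nn.CrossEntropyLoss applies log-softmax to whatever it receives, so the model should hand it raw scores (logits) rather than softmax probabilities. If probabilities are passed in, the loss can never get close to zero. The following plain-Python sketch works through the arithmetic for the two-class case; `softmax` and `cross_entropy` are hand-written stand-ins for a single example, not the PyTorch functions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(scores, target):
    """Negative log of the softmax probability assigned to the target class."""
    return -math.log(softmax(scores)[target])


logits = [10.0, -10.0]  # a very confident, correct prediction for class 0

loss_from_logits = cross_entropy(logits, 0)
loss_from_probs = cross_entropy(softmax(logits), 0)  # softmax applied twice

print(f"{loss_from_logits:.4f}")  # 0.0000
print(f"{loss_from_probs:.4f}")   # 0.3133, roughly log(1 + 1/e)
```

With probabilities as input, the two "scores" can differ by at most 1, so even a perfect prediction is capped at about 0.73 probability after the second softmax.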

Finally, run training.py to train the model.

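As for saving the model and loading it again later, see the post "One way to save and load PyTorch models, and some pitfalls" (关于pytorch模型保存与调用的一种方法及一些坑). With a few small changes, the approach there works for this project as well.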
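
The referenced post is not reproduced here. As a general-purpose sketch, a common PyTorch pattern is to save the model's state_dict and load it into a freshly constructed model of the same architecture. The tiny `nn.Linear` model and the file name below are placeholders; for this project the model would instead be built as myLSTM(vocab_size, config) before loading.

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Linear(4, 2)  # placeholder standing in for the trained network

path = os.path.join(tempfile.gettempdir(), "demo_model.pt")  # placeholder file name
torch.save(model.state_dict(), path)  # save only the parameters

restored = nn.Linear(4, 2)  # must have the same architecture as the saved model
restored.load_state_dict(torch.load(path, map_location="cpu"))
restored.eval()  # switch off dropout and similar layers before inference

x = torch.randn(3, 4)
with torch.no_grad():
    same = torch.equal(model(x), restored(x))
print(same)
```

For the classifier in this post, the vocabulary built in dataset.py would also need to be saved or rebuilt identically, since the embedding rows are tied to its token ids.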