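Background

An earlier post covered monitoring Spring Boot with Prometheus. This one works through a concrete example: monitoring the runtime state of a database connection pool in a uniform way.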

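How it works

Spring Boot's built-in monitoring is built on Micrometer. Its MeterRegistry abstraction supports multiple monitoring backends, for example PrometheusMeterRegistry. Micrometer also ships ready-made instrumentation for many components, such as caches, thread pools, Tomcat, the JVM, and OkHttp; these live in the io.micrometer.core.instrument.binder package.

DataSource monitoring is provided by default in spring-boot-actuator (version 2.1.4.RELEASE here), specifically in org.springframework.boot.actuate.metrics.jdbc.DataSourcePoolMetrics. This post follows the existing connection pool monitoring implementation to add simple monitoring for Druid, the pool used in our project.

The Micrometer dependencies can be added as follows: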

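    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-core</artifactId>
        <version>1.5.1</version>
    </dependency>
    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-registry-prometheus</artifactId>
        <version>1.5.1</version>
    </dependency>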

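Steps

Analyzing the existing implementation

org.springframework.boot.actuate.metrics.jdbc.DataSourcePoolMetrics relies on org.springframework.boot.jdbc.metadata.DataSourcePoolMetadata to read the connection pool's runtime data. In spring-boot:2.1.4.RELEASE this interface has default implementations for Tomcat, DBCP2, and Hikari. The Hikari implementation below, for example, exposes the number of active connections, the maximum pool size, the validation query, and so on.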

    /**
     * {@link DataSourcePoolMetadata} for a Hikari {@link DataSource}.
     *
     * @author Stephane Nicoll
     * @since 2.0.0
     */
    public class HikariDataSourcePoolMetadata
            extends AbstractDataSourcePoolMetadata<HikariDataSource> {

        public HikariDataSourcePoolMetadata(HikariDataSource dataSource) {
            super(dataSource);
        }

        @Override
        public Integer getActive() {
            try {
                return getHikariPool().getActiveConnections();
            }
            catch (Exception ex) {
                return null;
            }
        }

        private HikariPool getHikariPool() {
            return (HikariPool) new DirectFieldAccessor(getDataSource())
                    .getPropertyValue("pool");
        }

        @Override
        public Integer getMax() {
            return getDataSource().getMaximumPoolSize();
        }

        @Override
        public Integer getMin() {
            return getDataSource().getMinimumIdle();
        }

        @Override
        public String getValidationQuery() {
            return getDataSource().getConnectionTestQuery();
        }

        @Override
        public Boolean getDefaultAutoCommit() {
            return getDataSource().isAutoCommit();
        }

    }

Here is the definition of DataSourcePoolMetadata; the Javadoc explains what each method means.

    public interface DataSourcePoolMetadata {

        /**
         * Return the usage of the pool as value between 0 and 1 (or -1 if the pool is not
         * limited).
         *
         * - 1 means that the maximum number of connections have been allocated
         * - 0 means that no connection is currently active
         * - -1 means there is not limit to the number of connections that can be allocated
         *
         * This may also return {@code null} if the data source does not provide the necessary
         * information to compute the poll usage.
         * @return the usage value or {@code null}
         */
        Float getUsage();

        /**
         * Return the current number of active connections that have been allocated from the
         * data source or {@code null} if that information is not available.
         * @return the number of active connections or {@code null}
         */
        Integer getActive();

        /**
         * Return the maximum number of active connections that can be allocated at the same
         * time or {@code -1} if there is no limit. Can also return {@code null} if that
         * information is not available.
         * @return the maximum number of active connections or {@code null}
         */
        Integer getMax();

        /**
         * Return the minimum number of idle connections in the pool or {@code null} if that
         * information is not available.
         * @return the minimum number of active connections or {@code null}
         */
        Integer getMin();

        /**
         * Return the query to use to validate that a connection is valid or {@code null} if
         * that information is not available.
         * @return the validation query or {@code null}
         */
        String getValidationQuery();

        /**
         * The default auto-commit state of connections created by this pool. If not set
         * ({@code null}), default is JDBC driver default (If set to null then the
         * java.sql.Connection.setAutoCommit(boolean) method will not be called.)
         * @return the default auto-commit state or {@code null}
         */
        Boolean getDefaultAutoCommit();

    }
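To make the getUsage() contract concrete, here is a minimal, dependency-free sketch. The names PoolUsageSketch and usage are hypothetical and not part of Spring Boot; the code only follows the rules stated in the Javadoc above, assuming usage is computed as active divided by max.

```java
// Hypothetical illustration of the getUsage() contract; not Spring Boot code.
final class PoolUsageSketch {

    /**
     * Returns null when information is missing, -1 for an unlimited pool,
     * 0 when no connection is active, otherwise active / max.
     */
    static Float usage(Integer active, Integer max) {
        if (active == null || max == null) {
            return null;   // the pool does not expose enough information
        }
        if (max < 0) {
            return -1f;    // no upper bound on the number of connections
        }
        if (active == 0) {
            return 0f;     // nothing is currently checked out
        }
        return (float) active / (float) max;
    }

    public static void main(String[] args) {
        System.out.println(usage(5, 20));    // 0.25
        System.out.println(usage(3, -1));    // -1.0
        System.out.println(usage(null, 20)); // null
    }
}
```

A value close to 1 on a dashboard would then suggest the pool is near exhaustion, which is usually the signal worth alerting on.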

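Now look at the implementation of org.springframework.boot.actuate.metrics.jdbc.DataSourcePoolMetrics itself. It takes a single DataSource and reads the runtime data through the matching DataSourcePoolMetadata. Because the class implements MeterBinder, the metrics are ultimately registered in bindTo(MeterRegistry registry), which calls bindPoolMetadata() -> bindDataSource().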

    public class DataSourcePoolMetrics implements MeterBinder {

        private final DataSource dataSource;

        private final CachingDataSourcePoolMetadataProvider metadataProvider;

        private final Iterable<Tag> tags;

        public DataSourcePoolMetrics(DataSource dataSource,
                Collection<DataSourcePoolMetadataProvider> metadataProviders,
                String dataSourceName, Iterable<Tag> tags) {
            this(dataSource, new CompositeDataSourcePoolMetadataProvider(metadataProviders),
                    dataSourceName, tags);
        }

        public DataSourcePoolMetrics(DataSource dataSource,
                DataSourcePoolMetadataProvider metadataProvider, String name,
                Iterable<Tag> tags) {
            Assert.notNull(dataSource, "DataSource must not be null");
            Assert.notNull(metadataProvider, "MetadataProvider must not be null");
            this.dataSource = dataSource;
            this.metadataProvider = new CachingDataSourcePoolMetadataProvider(
                    metadataProvider);
            this.tags = Tags.concat(tags, "name", name);
        }

        @Override
        public void bindTo(MeterRegistry registry) {
            if (this.metadataProvider.getDataSourcePoolMetadata(this.dataSource) != null) {
                bindPoolMetadata(registry, "active", DataSourcePoolMetadata::getActive);
                bindPoolMetadata(registry, "max", DataSourcePoolMetadata::getMax);
                bindPoolMetadata(registry, "min", DataSourcePoolMetadata::getMin);
            }
        }

        private <N extends Number> void bindPoolMetadata(MeterRegistry registry,
                String metricName, Function<DataSourcePoolMetadata, N> function) {
            bindDataSource(registry, metricName,
                    this.metadataProvider.getValueFunction(function));
        }

        private <N extends Number> void bindDataSource(MeterRegistry registry,
                String metricName, Function<DataSource, N> function) {
            if (function.apply(this.dataSource) != null) {
                registry.gauge("jdbc.connections." + metricName, this.tags, this.dataSource,
                        (m) -> function.apply(m).doubleValue());
            }
        }

        private static class CachingDataSourcePoolMetadataProvider
                implements DataSourcePoolMetadataProvider {

            private static final Map<DataSource, DataSourcePoolMetadata> cache = new ConcurrentReferenceHashMap<>();

            private final DataSourcePoolMetadataProvider metadataProvider;

            CachingDataSourcePoolMetadataProvider(
                    DataSourcePoolMetadataProvider metadataProvider) {
                this.metadataProvider = metadataProvider;
            }

            public <N extends Number> Function<DataSource, N> getValueFunction(
                    Function<DataSourcePoolMetadata, N> function) {
                return (dataSource) -> function.apply(getDataSourcePoolMetadata(dataSource));
            }

            @Override
            public DataSourcePoolMetadata getDataSourcePoolMetadata(DataSource dataSource) {
                DataSourcePoolMetadata metadata = cache.get(dataSource);
                if (metadata == null) {
                    metadata = this.metadataProvider.getDataSourcePoolMetadata(dataSource);
                    cache.put(dataSource, metadata);
                }
                return metadata;
            }

        }

    }
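One detail worth noting in bindDataSource is that registry.gauge(...) receives a function rather than a number, so the value is read from the pool each time the registry is sampled instead of being fixed at registration time. The sketch below models that behavior with plain JDK types only; TinyRegistry and its methods are hypothetical stand-ins, not Micrometer's API.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.DoubleSupplier;
import java.util.function.ToDoubleFunction;

// Hypothetical stand-in for a meter registry; this is not Micrometer's API.
final class TinyRegistry {

    private final Map<String, DoubleSupplier> gauges = new LinkedHashMap<>();

    // Store *how to read* the value from the source object, not the value itself.
    <T> void gauge(String name, T source, ToDoubleFunction<T> reader) {
        gauges.put(name, () -> reader.applyAsDouble(source));
    }

    // Called on every scrape: the gauge is evaluated at this moment.
    double sample(String name) {
        return gauges.get(name).getAsDouble();
    }

    public static void main(String[] args) {
        AtomicInteger activeConnections = new AtomicInteger(2); // fake pool state
        TinyRegistry registry = new TinyRegistry();
        registry.gauge("jdbc.connections.active", activeConnections, AtomicInteger::get);

        System.out.println(registry.sample("jdbc.connections.active")); // 2.0
        activeConnections.set(7); // the pool changes after registration
        System.out.println(registry.sample("jdbc.connections.active")); // 7.0
    }
}
```

This is why binding once at startup is enough: later changes in the pool show up on the next scrape without re-registering anything.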

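As for where DataSourcePoolMetrics is used, following the references to its constructor leads to org.springframework.boot.actuate.autoconfigure.metrics.jdbc.DataSourcePoolMetricsAutoConfiguration, shown below. Through Spring's dependency injection, it builds a DataSourcePoolMetrics for every DataSource and registers it with the MeterRegistry.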

    // Inject all DataSource beans as a Map of bean name to instance
    @Autowired
    public void bindDataSourcesToRegistry(Map<String, DataSource> dataSources) {
        dataSources.forEach(this::bindDataSourceToRegistry);
    }

    private void bindDataSourceToRegistry(String beanName, DataSource dataSource) {
        String dataSourceName = getDataSourceName(beanName);
        new DataSourcePoolMetrics(dataSource, this.metadataProviders, dataSourceName,
                Collections.emptyList()).bindTo(this.registry);
    }

    /**
     * Get the name of a DataSource based on its {@code beanName}.
     * @param beanName the name of the data source bean
     * @return a name for the given data source
     */
    private String getDataSourceName(String beanName) {
        if (beanName.length() > DATASOURCE_SUFFIX.length()
                && StringUtils.endsWithIgnoreCase(beanName, DATASOURCE_SUFFIX)) {
            return beanName.substring(0,
                    beanName.length() - DATASOURCE_SUFFIX.length());
        }
        return beanName;
    }
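The result of getDataSourceName ends up as the name tag on each gauge, so it helps to see what the rule produces for typical bean names. The following is a dependency-free re-creation of that rule, not the Spring code itself: DataSourceNameSketch is a hypothetical class, and DATASOURCE_SUFFIX is assumed to be "dataSource" because the excerpt above does not show its value.

```java
// Hypothetical re-creation of the naming rule using only the JDK.
final class DataSourceNameSketch {

    // Assumption: the suffix constant is "dataSource" (not shown in the excerpt).
    private static final String DATASOURCE_SUFFIX = "dataSource";

    static String dataSourceName(String beanName) {
        int suffixLength = DATASOURCE_SUFFIX.length();
        // Strip the suffix only when something would remain in front of it.
        boolean hasSuffix = beanName.length() > suffixLength
                && beanName.regionMatches(true, beanName.length() - suffixLength,
                        DATASOURCE_SUFFIX, 0, suffixLength);
        return hasSuffix
                ? beanName.substring(0, beanName.length() - suffixLength)
                : beanName;
    }

    public static void main(String[] args) {
        System.out.println(dataSourceName("ordersDataSource")); // orders
        System.out.println(dataSourceName("dataSource"));       // dataSource
        System.out.println(dataSourceName("druid"));            // druid
    }
}
```

With several pools in one application, distinct bean names therefore give distinct name tag values, which makes it possible to tell the pools apart in queries.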

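Implementing Druid connection pool monitoring

With the analysis done, we can now monitor Druid. Following the pattern above, define a custom DruidDataSourcePoolMetadata that implements DataSourcePoolMetadata or extends AbstractDataSourcePoolMetadata. The catch is that DataSourcePoolMetrics wraps its provider in CachingDataSourcePoolMetadataProvider, a private inner class, so there is no way to add DruidDataSourcePoolMetadata to it directly. Looking back at DataSourcePoolMetrics and DataSourcePoolMetricsAutoConfiguration, however, multiple DataSourcePoolMetadataProvider beans can be injected. So we implement one more DataSourcePoolMetadataProvider for DruidDataSourcePoolMetadata and register it as a bean, which gives us what we want.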

    // Both members below live in a @Configuration class.
    @Bean
    public DataSourcePoolMetadataProvider druidPoolDataSourceMetadataProvider() {
        return (dataSource) -> {
            // Unwrap proxies/wrappers; returns null if this is not a Druid pool
            DruidDataSource ds = DataSourceUnwrapper.unwrap(dataSource,
                    DruidDataSource.class);
            if (ds != null) {
                return new DruidDataSourcePoolMetadata(ds);
            }
            return null;
        };
    }

    /**
     * Modeled on org.springframework.boot.jdbc.metadata.HikariDataSourcePoolMetadata
     */
    private class DruidDataSourcePoolMetadata
            extends AbstractDataSourcePoolMetadata<DruidDataSource> {

        /**
         * Create an instance with the data source to use.
         *
         * @param dataSource the data source
         */
        DruidDataSourcePoolMetadata(DruidDataSource dataSource) {
            super(dataSource);
        }

        @Override
        public Integer getActive() {
            return getDataSource().getActiveCount();
        }

        @Override
        public Integer getMax() {
            return getDataSource().getMaxActive();
        }

        @Override
        public Integer getMin() {
            return getDataSource().getMinIdle();
        }

        @Override
        public String getValidationQuery() {
            return getDataSource().getValidationQuery();
        }

        @Override
        public Boolean getDefaultAutoCommit() {
            return getDataSource().isDefaultAutoCommit();
        }

    }
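Registering one extra provider bean is enough because, as far as I understand it, the composite provider asks each registered provider in turn and uses the first non-null answer. The sketch below models that lookup with JDK types only. ProviderChainSketch, FakeHikari, and FakeDruid are hypothetical names, and plain strings stand in for the metadata objects.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;

// Hypothetical model of a provider chain; not the Spring Boot classes.
final class ProviderChainSketch {

    static final class FakeHikari { }  // stand-in for a Hikari pool
    static final class FakeDruid { }   // stand-in for a Druid pool

    // Ask each provider in turn and return the first non-null metadata.
    static String resolve(List<Function<Object, String>> providers, Object dataSource) {
        for (Function<Object, String> provider : providers) {
            String metadata = provider.apply(dataSource);
            if (metadata != null) {
                return metadata;
            }
        }
        return null; // no provider recognizes this pool, so nothing gets bound
    }

    public static void main(String[] args) {
        // A built-in style provider: only understands its own pool type.
        Function<Object, String> hikariProvider =
                ds -> ds instanceof FakeHikari ? "hikari-metadata" : null;
        // The provider we add: returns null for anything that is not Druid.
        Function<Object, String> druidProvider =
                ds -> ds instanceof FakeDruid ? "druid-metadata" : null;

        List<Function<Object, String>> chain = Arrays.asList(hikariProvider, druidProvider);
        System.out.println(resolve(chain, new FakeDruid()));  // druid-metadata
        System.out.println(resolve(chain, new FakeHikari())); // hikari-metadata
        System.out.println(resolve(chain, "other pool"));     // null
    }
}
```

Returning null for unrecognized pools, as the Druid provider above does, is what lets providers for different pool types coexist without interfering with each other.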

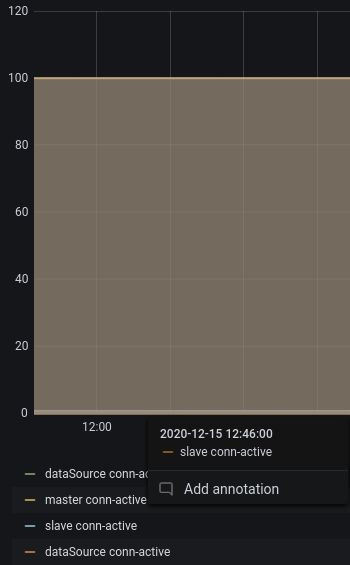

结果

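Summary

That is all for this post; thanks for reading. Spring Boot's modular design is a real strength: custom implementations can be composed cleanly at several levels, which is very convenient for developers, and many of the ideas in this example can serve as a reference for future work. Druid also ships with monitoring for many of its own runtime parameters (see com.alibaba.druid.stat). Exposing more of those metrics and combining them with DataSourcePoolMetrics would be a good next step, and does not look very complicated to implement.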
