Usage flow

A simple OkHttp request is usually written like this:

val url = "http://www.baidu.com/"

//1. Create the OkHttpClient

val okHttpClient = OkHttpClient()

//Create a Request object

val request = Request.Builder()

.url(url)

.build()

//2. Enqueue the call; Response is OkHttp's response type, delivered to the callback

okHttpClient.newCall(request).enqueue(object :Callback{

override fun onFailure(call: Call, e: IOException) {}

override fun onResponse(call: Call, response: Response) {}

})

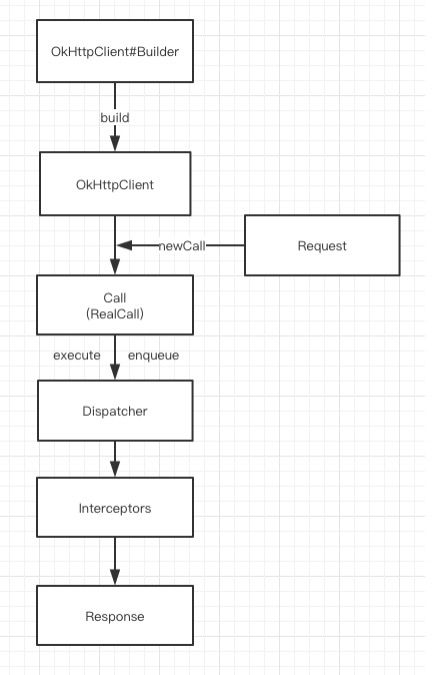

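When issuing a request with OkHttp, the caller deals with at least three roles: OkHttpClient, Request, and Call. OkHttpClient and Request can each be created through their Builder (the builder pattern). A Call is what you get back after handing a Request to the OkHttpClient: a request that is ready to be executed.

Its actual request flow, however, looks like this:

After execute or enqueue is called, the request goes to the dispatcher and then through the interceptors. Both are covered below.

First, what happens after execute or enqueue is called? Both turn out to be interface methods, so we need the implementation. Start with newCall():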

//OkHttpClient.kt

override fun newCall(request: Request): Call {

return RealCall.newRealCall(this, request, forWebSocket = false)

}

Following RealCall.newRealCall() shows that OkHttpClient.newCall() simply delegates to it and returns a RealCall, so the concrete implementation lives in RealCall.

For an asynchronous request, the call path is: okHttpClient.newCall(request).enqueue() -> RealCall.enqueue()

//RealCall.kt

override fun enqueue(responseCallback: Callback) {

//1

synchronized(this) {

check(!executed) { "Already Executed" }

executed = true

}

//2

transmitter.callStart()

//3

client.dispatcher.enqueue(AsyncCall(responseCallback))

}

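Analysis:

- 1: First check whether this Call has already been executed. If not, set executed = true and continue; if it has, check() throws an exception.

- 2: Signals that the call has started. This feeds the event listener, which lets you observe the request, including when DNS resolution and connecting start and end.

- 3: This is the key step. It calls enqueue on the OkHttpClient's dispatcher. Leaving aside what AsyncCall is for now, the path so far is: OkHttpClient.newCall().enqueue() -> RealCall.enqueue() -> Dispatcher.enqueue()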
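The guard in step 1 can be tried in isolation. The following is a minimal sketch, not OkHttp code: OneShotCall and secondEnqueueError are hypothetical names, and only the "execute once" check is modeled.

```kotlin
// Hypothetical stand-in for RealCall: only the "execute once" guard is modeled.
class OneShotCall(private val name: String) {
    private var executed = false

    fun enqueue(): String {
        synchronized(this) {
            // check() throws IllegalStateException when the condition is false.
            check(!executed) { "Already Executed" }
            executed = true
        }
        return "$name enqueued"
    }
}

// Returns the message of the exception thrown by a second enqueue(), or null if none.
fun secondEnqueueError(): String? {
    val call = OneShotCall("demo")
    call.enqueue()
    return try {
        call.enqueue()
        null
    } catch (e: IllegalStateException) {
        e.message
    }
}
```

In practice this means a Call object cannot be reused: a second request needs a new Call from newCall().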

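Dispatcher is, as the name says, a dispatcher. Why it is called that becomes clear from the walkthrough below.

The Dispatcher

Dispatcher holds two variables, three double-ended queues, and a thread pool: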

//Dispatcher.kt

//Maximum number of asynchronous requests running at the same time

var maxRequests = 64

//Maximum number of asynchronous requests running at the same time for one host

var maxRequestsPerHost = 5

/** Ready async calls in the order they'll be run. */

private val readyAsyncCalls = ArrayDeque<AsyncCall>()

/** Running asynchronous calls. Includes canceled calls that haven't finished yet. */

private val runningAsyncCalls = ArrayDeque<AsyncCall>()

/** Running synchronous calls. Includes canceled calls that haven't finished yet. */

private val runningSyncCalls = ArrayDeque<RealCall>()

//Thread pool backed by a SynchronousQueue

@get:Synchronized

@get:JvmName("executorService") val executorService: ExecutorService

get() {

if (executorServiceOrNull == null) {

executorServiceOrNull = ThreadPoolExecutor(0, Int.MAX_VALUE, 60, TimeUnit.SECONDS,

SynchronousQueue(), threadFactory("OkHttp Dispatcher", false))

}

return executorServiceOrNull!!

}

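The first queue holds asynchronous Calls waiting to run, the second holds asynchronous Calls that are running, and the third holds synchronous Calls that are running. Whether an asynchronous Call stays in the ready queue or moves to the running queue depends on maxRequests and maxRequestsPerHost.

maxRequests is the maximum number of requests running concurrently; maxRequestsPerHost is the maximum number of concurrent requests to a single host.

The executorService thread pool uses a SynchronousQueue as its work queue. If a task could be queued successfully, it would sit there waiting. A SynchronousQueue has no capacity, so unless an idle worker thread is already waiting to take the task, the hand-off fails and the pool starts a new thread to run the task immediately.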
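The effect of the SynchronousQueue can be observed with the JDK alone. This is a small sketch under the same pool parameters as above; threadsCreatedFor is a hypothetical helper, and the tasks simply block on a latch so that no worker is ever idle while the others are submitted.

```kotlin
import java.util.concurrent.CountDownLatch
import java.util.concurrent.SynchronousQueue
import java.util.concurrent.ThreadPoolExecutor
import java.util.concurrent.TimeUnit

// Submits `taskCount` tasks that all block until released, then reports how many
// worker threads the pool had to create to run them.
fun threadsCreatedFor(taskCount: Int): Int {
    val pool = ThreadPoolExecutor(
        0, Int.MAX_VALUE, 60, TimeUnit.SECONDS, SynchronousQueue<Runnable>()
    )
    val started = CountDownLatch(taskCount)
    val release = CountDownLatch(1)
    try {
        repeat(taskCount) {
            pool.execute {
                started.countDown()
                release.await()   // keep the worker busy so it cannot take another task
            }
        }
        // Wait (with a timeout) until the tasks have started running.
        started.await(5, TimeUnit.SECONDS)
        return pool.largestPoolSize
    } finally {
        release.countDown()       // let the blocked tasks finish
        pool.shutdown()
    }
}
```

Because no task can wait in the queue, each blocked task occupies its own thread. That is why the dispatcher, not the pool, has to enforce maxRequests.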

As noted above, the call eventually reaches Dispatcher.enqueue():

//Dispatcher.kt

internal fun enqueue(call: AsyncCall) {

synchronized(this) {

readyAsyncCalls.add(call)

// Mutate the AsyncCall so that it shares the AtomicInteger of an existing running call to

// the same host.

if (!call.get().forWebSocket) {

val existingCall = findExistingCallWithHost(call.host())

if (existingCall != null) call.reuseCallsPerHostFrom(existingCall)

}

}

promoteAndExecute()

}

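The Call is first added to the ready queue, and then promoteAndExecute() runs.

promoteAndExecute() processes the Calls in the ready queue. Put simply: if the ready queue is empty, nothing happens; if it is not, each Call is handled case by case: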

//Dispatcher.kt

private fun promoteAndExecute(): Boolean {

this.assertThreadDoesntHoldLock()

val executableCalls = mutableListOf<AsyncCall>()

val isRunning: Boolean

synchronized(this) {

//1

val i = readyAsyncCalls.iterator()

while (i.hasNext()) {

val asyncCall = i.next()

//2

if (runningAsyncCalls.size >= this.maxRequests) break // Max capacity.

if (asyncCall.callsPerHost().get() >= this.maxRequestsPerHost) continue // Host max capacity.

i.remove()

asyncCall.callsPerHost().incrementAndGet()

//3

executableCalls.add(asyncCall)

runningAsyncCalls.add(asyncCall)

}

isRunning = runningCallsCount() > 0

}

//4

for (i in 0 until executableCalls.size) {

val asyncCall = executableCalls[i]

asyncCall.executeOn(executorService)

}

return isRunning

}

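Analysis:

Keep in mind that enqueue has already added this Call to the ready queue.

- 1: Iterate over the ready queue and take each Call in turn.

- 2: If the running queue has already reached maxRequests, break out of the loop. Otherwise, if the number of running requests to this Call's host has reached maxRequestsPerHost, skip it and continue with the next Call. Otherwise remove the Call from the ready queue (the queues only store Calls to reflect their current state) and increment the per-host counter for this Call's host.

- 3: Add the now-executable Call to executableCalls and to the running queue.

- 4: If executableCalls is empty, the loop body never runs; otherwise iterate over the executable Calls and pass the executorService thread pool to executeOn() on each.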
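The promotion rules above can be modeled without OkHttp. The sketch below is a deliberately simplified, hypothetical model (MiniDispatcher is not an OkHttp class): a call is just a host name, nothing is actually executed, and the per-host count is computed by scanning the running queue instead of using a shared AtomicInteger.

```kotlin
import java.util.ArrayDeque

// Hypothetical, simplified model of the Dispatcher queues. A call is just a host name.
class MiniDispatcher(
    private val maxRequests: Int = 64,
    private val maxRequestsPerHost: Int = 5
) {
    val ready = ArrayDeque<String>()
    val running = ArrayDeque<String>()

    fun enqueue(host: String) {
        ready.add(host)          // always queued first, as in Dispatcher.enqueue()
        promote()
    }

    fun finished(host: String) {
        check(running.remove(host)) { "Call wasn't in-flight!" }
        promote()                // a slot was freed, so look at the ready queue again
    }

    private fun promote() {
        val i = ready.iterator()
        while (i.hasNext()) {
            val host = i.next()
            if (running.size >= maxRequests) break                            // global limit
            if (running.count { it == host } >= maxRequestsPerHost) continue  // host limit
            i.remove()
            running.add(host)
        }
    }
}

// Enqueues 7 calls to one host, then finishes one; returns
// [running, ready] before and [running, ready] after the finish.
fun demoQueueSizes(): List<Int> {
    val d = MiniDispatcher()
    repeat(7) { d.enqueue("a.example") }
    val before = listOf(d.running.size, d.ready.size)
    d.finished("a.example")
    return before + listOf(d.running.size, d.ready.size)
}
```

With the default limits, five of the seven calls run and two wait; finishing one running call promotes exactly one waiting call.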

executeOn belongs to AsyncCall. Looking at it, AsyncCall is an inner class of RealCall that implements Runnable, so it is a task:

//AsyncCall.class

fun executeOn(executorService: ExecutorService) {

client.dispatcher.assertThreadDoesntHoldLock()

var success = false

try {

executorService.execute(this)

success = true

} catch (e: RejectedExecutionException) {

val ioException = InterruptedIOException("executor rejected")

ioException.initCause(e)

transmitter.noMoreExchanges(ioException)

responseCallback.onFailure(this@RealCall, ioException)

} finally {

if (!success) {

client.dispatcher.finished(this) // This call is no longer running!

}

}

}

So the thread pool is passed in to execute the task, and the task is the AsyncCall itself, that is, its run method:

//AsyncCall.class

override fun run() {

threadName("OkHttp ${redactedUrl()}") {

var signalledCallback = false

transmitter.timeoutEnter()

try {

//1

val response = getResponseWithInterceptorChain()

signalledCallback = true

responseCallback.onResponse(this@RealCall, response)

} catch (e: IOException) {

if (signalledCallback) {

// Do not signal the callback twice!

Platform.get().log("Callback failure for ${toLoggableString()}", INFO, e)

} else {

responseCallback.onFailure(this@RealCall, e)

}

} catch (t: Throwable) {

cancel()

if (!signalledCallback) {

val canceledException = IOException("canceled due to $t")

canceledException.addSuppressed(t)

responseCallback.onFailure(this@RealCall, canceledException)

}

throw t

} finally {

//2

client.dispatcher.finished(this)

}

}

}

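Analysis:

- 1: getResponseWithInterceptorChain is covered later and is a core part of OkHttp. It runs the request through the interceptors and returns the response, which is then delivered to our code through onResponse. If an exception occurs, the failure is delivered through onFailure instead.

- 2: Whether the call succeeds or fails, finished is always called.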
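The signalledCallback flag in run() is easy to overlook: it keeps onFailure from firing after onResponse has already been invoked. A rough sketch of that control flow, with hypothetical names (runCall, fetch) and a list of strings standing in for the real callbacks:

```kotlin
import java.io.IOException

// Hypothetical model of AsyncCall.run(): `fetch` stands in for
// getResponseWithInterceptorChain(), and `events` records what was signaled.
fun runCall(fetch: () -> String, onResponse: (String) -> Unit): List<String> {
    val events = mutableListOf<String>()
    var signalledCallback = false
    try {
        val response = fetch()
        signalledCallback = true
        onResponse(response)
        events += "onResponse"
    } catch (e: IOException) {
        // If the failure came from the user's own onResponse code, the callback
        // was already signaled, so the error is only logged.
        events += if (signalledCallback) "logged" else "onFailure"
    } finally {
        events += "finished"     // runs on every path
    }
    return events
}
```

Each call therefore ends in exactly one of onResponse or onFailure, followed by finished.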

Here is how Dispatcher.finished handles it:

//Dispatcher.kt

internal fun finished(call: AsyncCall) {

call.callsPerHost().decrementAndGet()

finished(runningAsyncCalls, call)

}

It first decrements the per-host counter and then calls finished(runningAsyncCalls, call):

//Dispatcher.kt

private fun <T> finished(calls: Deque<T>, call: T) {

val idleCallback: Runnable?

synchronized(this) {

//1

if (!calls.remove(call)) throw AssertionError("Call wasn't in-flight!")

idleCallback = this.idleCallback

}

//2

val isRunning = promoteAndExecute()

if (!isRunning && idleCallback != null) {

idleCallback.run()

}

}

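- 1: Remove the Call from the running queue.

- 2: Run promoteAndExecute() again to process the ready queue. Once a running Call completes, a slot frees up for a waiting Call, so after every completed asynchronous Call the dispatcher goes back to the ready queue: if a Call can be promoted it is executed, otherwise nothing happens.

So the dispatcher's handling of asynchronous requests looks roughly like this:

To sum up:

- How is it decided whether a request goes to ready or running?

Every request is first added to the ready queue. If 64 requests are already running it stays there; if fewer than 64 are running but 5 requests to the same host are already running, it also stays there; otherwise it is moved to the running queue and executed immediately.

- What is the condition for moving from ready to running?

Each request is removed from running when it completes, and the same checks as in the first point are applied again to decide whether waiting requests can move.

- What is the dispatcher's thread pool for?

No queuing delay and high concurrency.

That covers asynchronous requests. Now for synchronous requests: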

//RealCall.kt

override fun execute(): Response {

synchronized(this) {

check(!executed) { "Already Executed" }

executed = true

}

transmitter.timeoutEnter()

transmitter.callStart()

try {

//1

client.dispatcher.executed(this)

return getResponseWithInterceptorChain()

} finally {

//2

client.dispatcher.finished(this)

}

}

//Dispatcher.kt

@Synchronized internal fun executed(call: RealCall) {

runningSyncCalls.add(call)

}

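- 1: The synchronous path is simpler. The Call is added to the running queue for synchronous calls; because the request is synchronous it runs on the current thread and needs no thread pool. getResponseWithInterceptorChain then produces the response, which is returned.

- 2: Finally Dispatcher.finished is called. This overload differs from the asynchronous one in its parameter: one takes an AsyncCall, the other a RealCall.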

//Dispatcher.kt

internal fun finished(call: RealCall) {

finished(runningSyncCalls, call)

}

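The finished that is ultimately called is the same one used by asynchronous requests: it removes the current Call from its running queue and then checks the ready queue, executing a waiting Call if one can be promoted.

To summarize the Dispatcher's role: it maintains the queues and the thread pool internally and schedules requests. Most of the logic lives in the interceptors, but requests still have to be scheduled by the dispatcher before they reach them.

Interceptors

Next come the interceptors, the heart of OkHttp.

As mentioned, getResponseWithInterceptorChain ultimately returns the response, which means the interceptors are where the network request actually happens. They follow the chain-of-responsibility pattern. What is that? Take ordering takeout as an example: A is you, B is the courier, and C is the restaurant.

A only places the order and receives the food, and does not care how the restaurant prepares it.

B receives the order, picks up the food from the restaurant, and delivers it to you.

C packs the food and hands it to the courier.

This is a U-shaped flow: the chain is traversed on the way in and again on the way back. Every link except the last has a pre phase, a middle phase, and a post phase. The pre phase prepares, the middle phase passes the work on to the next link, and the post phase processes the result before passing it back. For the last link the three collapse into a single step, handing the food to the courier, which corresponds to the middle phase. If this is still unclear, look up some examples of the chain-of-responsibility pattern.

OkHttp's interceptors use exactly this pattern to string all interceptors together and run them.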

//RealCall.kt

@Throws(IOException::class)

fun getResponseWithInterceptorChain(): Response {

// Build a full stack of interceptors.

//1

val interceptors = mutableListOf<Interceptor>()

interceptors += client.interceptors

interceptors += RetryAndFollowUpInterceptor(client)

interceptors += BridgeInterceptor(client.cookieJar)

interceptors += CacheInterceptor(client.cache)

interceptors += ConnectInterceptor

if (!forWebSocket) {

interceptors += client.networkInterceptors

}

interceptors += CallServerInterceptor(forWebSocket)

//2

val chain = RealInterceptorChain(interceptors, transmitter, null, 0, originalRequest, this,

client.connectTimeoutMillis, client.readTimeoutMillis, client.writeTimeoutMillis)

var calledNoMoreExchanges = false

try {

//3

val response = chain.proceed(originalRequest)

if (transmitter.isCanceled) {

response.closeQuietly()

throw IOException("Canceled")

}

return response

} catch (e: IOException) {

calledNoMoreExchanges = true

throw transmitter.noMoreExchanges(e) as Throwable

} finally {

if (!calledNoMoreExchanges) {

transmitter.noMoreExchanges(null)

}

}

}

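Analysis:

- 1: All the interceptors to be used are collected, in order, in a mutable list. Why mutable? Because client.interceptors and client.networkInterceptors hold custom interceptors that we can add ourselves. The difference between the two is position: client.interceptors sit at the head of the chain, while client.networkInterceptors sit just before the tail (CallServerInterceptor is the tail).

- 2: Once the list is assembled it is wrapped in a chain object, mainly to pass in parameters used later, such as the original request (originalRequest) and the timeouts.

- 3: Everything so far is preparation. Calling chain.proceed(originalRequest) is what actually starts execution and sets the chain in motion. Why does that work? Read on: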

//RealInterceptorChain.kt

@Throws(IOException::class)

fun proceed(request: Request, transmitter: Transmitter, exchange: Exchange?): Response {

if (index >= interceptors.size) throw AssertionError()

calls++

// If we already have a stream, confirm that the incoming request will use it.

check(this.exchange == null || this.exchange.connection()!!.supportsUrl(request.url)) {

"network interceptor ${interceptors[index - 1]} must retain the same host and port"

}

// If we already have a stream, confirm that this is the only call to chain.proceed().

check(this.exchange == null || calls <= 1) {

"network interceptor ${interceptors[index - 1]} must call proceed() exactly once"

}

// Call the next interceptor in the chain.

//1

val next = RealInterceptorChain(interceptors, transmitter, exchange,

index + 1, request, call, connectTimeout, readTimeout, writeTimeout)

val interceptor = interceptors[index]

//2

@Suppress("USELESS_ELVIS")

val response = interceptor.intercept(next) ?: throw NullPointerException(

"interceptor $interceptor returned null")

// Confirm that the next interceptor made its required call to chain.proceed().

check(exchange == null || index + 1 >= interceptors.size || next.calls == 1) {

"network interceptor $interceptor must call proceed() exactly once"

}

check(response.body != null) { "interceptor $interceptor returned a response with no body" }

return response

}

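Analysis:

- 1: First create a new chain, next. All values stay the same except the index, which is incremented by one so that the chain moves forward. Then fetch the current interceptor, interceptors[index], which is the first interceptor because index is 0 on the first call.

- 2: interceptor.intercept(next) runs that interceptor's intercept method, passing in the next chain.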
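The index + 1 trick and the resulting U-shaped flow can be reproduced in a few lines. This is a hypothetical miniature, not OkHttp's API: MiniInterceptor and MiniChain are made-up names, requests and responses are plain strings, and the last interceptor plays the role of CallServerInterceptor by returning a response without calling proceed().

```kotlin
// Hypothetical miniature of Interceptor / RealInterceptorChain; requests and
// responses are plain strings.
fun interface MiniInterceptor {
    fun intercept(chain: MiniChain): String
}

class MiniChain(
    private val interceptors: List<MiniInterceptor>,
    private val index: Int,
    val request: String
) {
    fun proceed(request: String): String {
        check(index < interceptors.size) { "no interceptor left" }
        // Same list, index + 1: the next chain hands control to the next interceptor.
        val next = MiniChain(interceptors, index + 1, request)
        return interceptors[index].intercept(next)
    }
}

// Runs a three-link chain and returns the order in which the phases executed.
fun traceChain(): List<String> {
    val log = mutableListOf<String>()
    fun named(name: String) = MiniInterceptor { chain ->
        log += "$name before"                         // pre phase
        val response = chain.proceed(chain.request)   // middle phase
        log += "$name after"                          // post phase
        response
    }
    // The last interceptor produces the response and never calls proceed().
    val terminal = MiniInterceptor { chain ->
        log += "server"
        "response to ${chain.request}"
    }
    MiniChain(listOf(named("retry"), named("bridge"), terminal), 0, "GET /").proceed("GET /")
    return log
}
```

The recorded order shows the U shape: each interceptor's pre phase runs on the way in, and the post phases run in reverse order on the way back.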

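The chain is now assembled and running, so let's look at what each interceptor does (only the marked highlights are discussed; the remaining code can be skipped).

Retry and redirect interceptor: RetryAndFollowUpInterceptor

When no custom interceptors are added, the first interceptor is RetryAndFollowUpInterceptor. The name suggests it deals with retries; in fact it handles both retries and redirects: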

@Throws(IOException::class)

override fun intercept(chain: Interceptor.Chain): Response {

var request = chain.request()

val realChain = chain as RealInterceptorChain

val transmitter = realChain.transmitter()

var followUpCount = 0

var priorResponse: Response? = null

//1

while (true) {

transmitter.prepareToConnect(request)

if (transmitter.isCanceled) {

throw IOException("Canceled")

}

var response: Response

var success = false

try {

//2

response = realChain.proceed(request, transmitter, null)

success = true

} catch (e: RouteException) {

//3

// The attempt to connect via a route failed. The request will not have been sent.

if (!recover(e.lastConnectException, transmitter, false, request)) {

throw e.firstConnectException

}

continue

} catch (e: IOException) {

//4

// An attempt to communicate with a server failed. The request may have been sent.

val requestSendStarted = e !is ConnectionShutdownException

if (!recover(e, transmitter, requestSendStarted, request)) throw e

continue

} finally {

// The network call threw an exception. Release any resources.

if (!success) {

transmitter.exchangeDoneDueToException()

}

}

//5

// Attach the prior response if it exists. Such responses never have a body.

if (priorResponse != null) {

response = response.newBuilder()

.priorResponse(priorResponse.newBuilder()

.body(null)

.build())

.build()

}

val exchange = response.exchange

val route = exchange?.connection()?.route()

val followUp = followUpRequest(response, route)

//6

if (followUp == null) {

if (exchange != null && exchange.isDuplex) {

transmitter.timeoutEarlyExit()

}

return response

}

val followUpBody = followUp.body

if (followUpBody != null && followUpBody.isOneShot()) {

return response

}

response.body?.closeQuietly()

if (transmitter.hasExchange()) {

exchange?.detachWithViolence()

}

if (++followUpCount > MAX_FOLLOW_UPS) {

throw ProtocolException("Too many follow-up requests: $followUpCount")

}

request = followUp

priorResponse = response

}

}

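As mentioned, a link in the chain has pre, middle, and post phases. What are they for this interceptor? We will work that out during the analysis.

Analysis:

- 1: while (true) keeps trying to obtain a response. The loop only exits by returning a response or throwing an exception; otherwise it goes around again, which is what makes retrying possible.

- 2: realChain.proceed passes the request to the next interceptor, so this is the middle phase.

- 3 and 4: Both handle a failed request, and this is where the post phase begins. If the failure is recoverable, continue restarts the loop and the request is retried; otherwise the exception is thrown.

- 5: When a redirect is needed, followUpRequest produces the redirect request and a new round begins, this time using the request built from the redirect.

- 6: When neither a retry nor a redirect is needed, this is the final response, and it is returned to the caller.

So what is the pre phase?

It is the transmitter.prepareToConnect(request) call at the top of the loop, which prepares and checks things before the request is sent. It involves ExchangeFinder: the method creates an ExchangeFinder and assigns it to exchangeFinder, which comes up again in the connect interceptor.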

//Transmitter.kt

fun prepareToConnect(request: Request) {

if (this.request != null) {

if (this.request!!.url.canReuseConnectionFor(request.url) && exchangeFinder!!.hasRouteToTry()) {

return // Already ready.

}

check(exchange == null)

if (exchangeFinder != null) {

maybeReleaseConnection(null, true)

exchangeFinder = null

}

}

this.request = request

this.exchangeFinder = ExchangeFinder(

this, connectionPool, createAddress(request.url), call, eventListener)

}

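To summarize the retry and redirect interceptor:

Pre: prepare and check the request.

Middle: once preparation is done, pass the request to the next interceptor.

Post: process what comes back from the next interceptor (retry, redirect, or return the response).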
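The shape of that loop, stripped of connection handling, might look like the sketch below. Everything here is a stand-in: MiniResponse, retryAndFollowUp, the `send` and `canRecover` parameters, and the follow-up limit of 20 are assumptions chosen for illustration, and a non-null `location` is taken to mean "redirect".

```kotlin
import java.io.IOException

// Hypothetical response model: a non-null `location` means "redirect there".
data class MiniResponse(val code: Int, val location: String? = null)

const val MAX_FOLLOW_UPS = 20   // assumed limit for this sketch

// `send` stands in for realChain.proceed(); `canRecover` stands in for recover().
fun retryAndFollowUp(
    url: String,
    canRecover: (IOException) -> Boolean,
    send: (String) -> MiniResponse
): MiniResponse {
    var request = url
    var followUpCount = 0
    while (true) {
        val response = try {
            send(request)
        } catch (e: IOException) {
            if (!canRecover(e)) throw e
            continue                          // retry the same request
        }
        val followUp = response.location ?: return response   // nothing to follow: done
        if (++followUpCount > MAX_FOLLOW_UPS) {
            throw IOException("Too many follow-up requests: $followUpCount")
        }
        request = followUp                    // next round uses the redirect target
    }
}

// One recoverable failure, then one redirect, then success.
// Returns the final response and the number of send attempts.
fun demoRetry(): Pair<MiniResponse, Int> {
    var attempts = 0
    val result = retryAndFollowUp("http://a.example/old", canRecover = { true }) { target ->
        attempts++
        when {
            attempts == 1 -> throw IOException("connection reset")
            target.endsWith("/old") -> MiniResponse(301, "http://a.example/new")
            else -> MiniResponse(200)
        }
    }
    return result to attempts
}
```

In the demo, the first attempt fails and is retried, the second is redirected, and the third returns the final response, so `send` runs three times.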

Bridge interceptor: BridgeInterceptor

Next up is BridgeInterceptor, the bridge interceptor:

//BridgeInterceptor.kt

@Throws(IOException::class)

override fun intercept(chain: Interceptor.Chain): Response {

val userRequest = chain.request()

val requestBuilder = userRequest.newBuilder()

//1

val body = userRequest.body

if (body != null) {

val contentType = body.contentType()

if (contentType != null) {

requestBuilder.header("Content-Type", contentType.toString())

}

val contentLength = body.contentLength()

if (contentLength != -1L) {

requestBuilder.header("Content-Length", contentLength.toString())

requestBuilder.removeHeader("Transfer-Encoding")

} else {

requestBuilder.header("Transfer-Encoding", "chunked")

requestBuilder.removeHeader("Content-Length")

}

}

if (userRequest.header("Host") == null) {

requestBuilder.header("Host", userRequest.url.toHostHeader())

}

if (userRequest.header("Connection") == null) {

requestBuilder.header("Connection", "Keep-Alive")

}

// If we add an "Accept-Encoding: gzip" header field we're responsible for also decompressing

// the transfer stream.

var transparentGzip = false

if (userRequest.header("Accept-Encoding") == null && userRequest.header("Range") == null) {

transparentGzip = true

requestBuilder.header("Accept-Encoding", "gzip")

}

val cookies = cookieJar.loadForRequest(userRequest.url)

if (cookies.isNotEmpty()) {

requestBuilder.header("Cookie", cookieHeader(cookies))

}

if (userRequest.header("User-Agent") == null) {

requestBuilder.header("User-Agent", userAgent)

}

//2

val networkResponse = chain.proceed(requestBuilder.build())

//3

cookieJar.receiveHeaders(userRequest.url, networkResponse.headers)

val responseBuilder = networkResponse.newBuilder()

.request(userRequest)

if (transparentGzip &&

"gzip".equals(networkResponse.header("Content-Encoding"), ignoreCase = true) &&

networkResponse.promisesBody()) {

val responseBody = networkResponse.body

if (responseBody != null) {

val gzipSource = GzipSource(responseBody.source())

val strippedHeaders = networkResponse.headers.newBuilder()

.removeAll("Content-Encoding")

.removeAll("Content-Length")

.build()

responseBuilder.headers(strippedHeaders)

val contentType = networkResponse.header("Content-Type")

responseBuilder.body(RealResponseBody(contentType, -1L, gzipSource.buffer()))

}

}

return responseBuilder.build()

}

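This interceptor wraps our request further. Our original request may carry little more than a URL; the interceptor inspects it and adds important request headers, so we do not have to add them by hand or even know about them (Content-Length and so on).

Analysis:

- 1: Starting from the original request, add request headers to build the complete request, including asking for a gzip-compressed response (compression speeds up transfer). This is the pre phase.

- 2: Pass the assembled request to the next interceptor. This is the middle phase.

- 3: Process the response from the next interceptor. If the interceptor itself asked for gzip and the response is gzip-encoded, decompress it and return it to the previous interceptor (the retry and redirect interceptor); otherwise return it as is. This is the post phase.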
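The header defaults from step 1 can be expressed as a pure function over a map. This is only a sketch: withDefaultHeaders is a hypothetical helper, header names are matched case-sensitively here (real HTTP header names are case-insensitive), and the Cookie and User-Agent handling is left out.

```kotlin
// Hypothetical helper mirroring the header defaults BridgeInterceptor fills in.
// `bodyLength` is null when there is no body and -1 when the length is unknown.
fun withDefaultHeaders(
    userHeaders: Map<String, String>,
    host: String,
    bodyLength: Long?
): Map<String, String> {
    val headers = userHeaders.toMutableMap()
    if (bodyLength != null) {
        if (bodyLength != -1L) {
            headers["Content-Length"] = bodyLength.toString()
            headers.remove("Transfer-Encoding")
        } else {
            headers["Transfer-Encoding"] = "chunked"
            headers.remove("Content-Length")
        }
    }
    // Defaults are only applied when the caller did not set the header.
    headers.getOrPut("Host") { host }
    headers.getOrPut("Connection") { "Keep-Alive" }
    // Only ask for gzip when the caller did not choose an encoding or a byte range.
    if ("Accept-Encoding" !in headers && "Range" !in headers) {
        headers["Accept-Encoding"] = "gzip"
    }
    return headers
}
```

Note the asymmetry: gzip is requested only when the interceptor added Accept-Encoding itself, which is also the only case in which it decompresses the response on the way back.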

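Cache interceptor: CacheInterceptor

Next is CacheInterceptor, the cache interceptor. Most of its logic actually lives in the cache strategy, and the interceptor itself is simple, so we start with the strategy: CacheStrategy.Factory(now, chain.request(), cacheCandidate).compute(), which returns a CacheStrategy carrying a networkRequest and a cacheResponse: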

//CacheStrategy.kt

init {

//1

if (cacheResponse != null) {

this.sentRequestMillis = cacheResponse.sentRequestAtMillis

this.receivedResponseMillis = cacheResponse.receivedResponseAtMillis

val headers = cacheResponse.headers

//2

for (i in 0 until headers.size) {

val fieldName = headers.name(i)

val value = headers.value(i)

when {

fieldName.equals("Date", ignoreCase = true) -> {

servedDate = value.toHttpDateOrNull()

servedDateString = value

}

fieldName.equals("Expires", ignoreCase = true) -> {

expires = value.toHttpDateOrNull()

}

fieldName.equals("Last-Modified", ignoreCase = true) -> {

lastModified = value.toHttpDateOrNull()

lastModifiedString = value

}

fieldName.equals("ETag", ignoreCase = true) -> {

etag = value

}

fieldName.equals("Age", ignoreCase = true) -> {

ageSeconds = value.toNonNegativeInt(-1)

}

}

}

}

}

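Analysis:

- 1: If a candidate cached response exists, read the times at which its request was sent and its response was received (these are recorded in CallServerInterceptor).

- 2: Iterate over the headers and save the values of the caching-related fields: Date, Expires, Last-Modified, ETag, and Age.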

//CacheStrategy.kt

private fun computeCandidate(): CacheStrategy {

//1

// No cached response.

if (cacheResponse == null) {

return CacheStrategy(request, null)

}

//2

// Drop the cached response if it's missing a required handshake.

if (request.isHttps && cacheResponse.handshake == null) {

return CacheStrategy(request, null)

}

//3

// If this response shouldn't have been stored, it should never be used as a response source.

// This check should be redundant as long as the persistence store is well-behaved and the

// rules are constant.

if (!isCacheable(cacheResponse, request)) {

return CacheStrategy(request, null)

}

//4

val requestCaching = request.cacheControl

if (requestCaching.noCache || hasConditions(request)) {

return CacheStrategy(request, null)

}

val responseCaching = cacheResponse.cacheControl

val ageMillis = cacheResponseAge()

var freshMillis = computeFreshnessLifetime()

if (requestCaching.maxAgeSeconds != -1) {

freshMillis = minOf(freshMillis, SECONDS.toMillis(requestCaching.maxAgeSeconds.toLong()))

}

var minFreshMillis: Long = 0

if (requestCaching.minFreshSeconds != -1) {

minFreshMillis = SECONDS.toMillis(requestCaching.minFreshSeconds.toLong())

}

var maxStaleMillis: Long = 0

if (!responseCaching.mustRevalidate && requestCaching.maxStaleSeconds != -1) {

maxStaleMillis = SECONDS.toMillis(requestCaching.maxStaleSeconds.toLong())

}

//5

if (!responseCaching.noCache && ageMillis + minFreshMillis < freshMillis + maxStaleMillis) {

val builder = cacheResponse.newBuilder()

if (ageMillis + minFreshMillis >= freshMillis) {

builder.addHeader("Warning", "110 HttpURLConnection \"Response is stale\"")

}

val oneDayMillis = 24 * 60 * 60 * 1000L

if (ageMillis > oneDayMillis && isFreshnessLifetimeHeuristic()) {

builder.addHeader("Warning", "113 HttpURLConnection \"Heuristic expiration\"")

}

return CacheStrategy(null, builder.build())

}

//6

// Find a condition to add to the request. If the condition is satisfied, the response body

// will not be transmitted.

val conditionName: String

val conditionValue: String?

when {

etag != null -> {

conditionName = "If-None-Match"

conditionValue = etag

}

lastModified != null -> {

conditionName = "If-Modified-Since"

conditionValue = lastModifiedString

}

servedDate != null -> {

conditionName = "If-Modified-Since"

conditionValue = servedDateString

}

else -> return CacheStrategy(request, null) // No condition! Make a regular request.

}

val conditionalRequestHeaders = request.headers.newBuilder()

conditionalRequestHeaders.addLenient(conditionName, conditionValue!!)

//7

val conditionalRequest = request.newBuilder()

.headers(conditionalRequestHeaders.build())

.build()

return CacheStrategy(conditionalRequest, cacheResponse)

}

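Analysis:

- 1: cacheResponse is the response found in the cache. If it is null, no matching cache entry was found, and the resulting CacheStrategy contains only a networkRequest, meaning a network request is required.

- 2: From here on cacheResponse is known to exist, but it may not be usable, so a series of validity checks follows. If this request is HTTPS but the cached response has no handshake information, the cache is invalid.

- 3: isCacheable decides, from the response status code and the cache-control fields of the response and the request, whether the response may be cached. For cached responses with status code 200, 203, 204, 300, 301, 308, 404, 405, 410, 414, or 501, the only remaining check is whether Cache-Control: no-store (the resource must not be stored) was given. If it was, the outcome is the same as in the two checks above (cache not usable). Otherwise the checks continue.

- 4: Check the request's cache-control fields and whether its headers include If-Modified-Since or If-None-Match. At this point OkHttp examines the Request the user just issued: if it specifies Cache-Control: no-cache (do not use the cache), or already carries If-Modified-Since or If-None-Match (the user is validating on their own), the cache is not used.

- 5: Check whether the cached response is still within its freshness lifetime.

- 6: The cached response has expired, so check whether ETag, Last-Modified, or Date was stored. If none is available there is nothing to validate against the server, and a regular request is made. Otherwise a conditional header is added: ETag maps to If-None-Match, and Last-Modified (or Date) maps to If-Modified-Since.

- 7: This completes the cache decision. The interceptor only needs to look at the combination of networkRequest and cacheResponse in the CacheStrategy to know whether the cache may be used.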
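Steps 5 and 6 boil down to one inequality plus a check for a validator. The sketch below is a hypothetical reduction (CacheDecision and decide are made-up names): all times are in milliseconds, and `validator` stands for whatever stored ETag, Last-Modified, or Date value is available.

```kotlin
enum class CacheDecision { USE_CACHE, CONDITIONAL_REQUEST, NETWORK }

// Hypothetical reduction of steps 5 and 6: all times are in milliseconds and
// `validator` is the stored ETag, Last-Modified, or Date value, if any.
fun decide(
    ageMillis: Long,
    freshMillis: Long,
    minFreshMillis: Long = 0,
    maxStaleMillis: Long = 0,
    responseNoCache: Boolean = false,
    validator: String? = null
): CacheDecision = when {
    // Step 5: still fresh enough (same inequality as in computeCandidate()).
    !responseNoCache && ageMillis + minFreshMillis < freshMillis + maxStaleMillis ->
        CacheDecision.USE_CACHE
    // Step 6: expired, but there is something to validate against the server.
    validator != null -> CacheDecision.CONDITIONAL_REQUEST
    else -> CacheDecision.NETWORK
}
```

For example, a response that is 90 seconds old with a 60-second freshness lifetime is served from the cache only if the request tolerates enough staleness (max-stale); otherwise it is revalidated or refetched.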

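Note: if the user configured onlyIfCached when creating the request, the request is meant to be served from the cache only, without going to the network. If the computed CacheStrategy still contains a networkRequest, a network request would be required, which is a conflict. In that case the interceptor is handed a strategy with neither a networkRequest nor a cacheResponse, and it returns a 504 to the user.

With the cache strategy covered, the cache interceptor is easy to follow. First, the onlyIfCached conflict described above is resolved in compute():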

//CacheStrategy.kt

fun compute(): CacheStrategy {

val candidate = computeCandidate()

// We're forbidden from using the network and the cache is insufficient.

if (candidate.networkRequest != null && request.cacheControl.onlyIfCached) {

return CacheStrategy(null, null)

}

return candidate

}

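To summarize the cache strategy:

1. If the Response obtained from the cache is null, a network request is needed.

2. If the request is HTTPS but the handshake information is missing, the cache cannot be used and a network request is needed.

3. If the status code is not cacheable, or a no-store directive is present, a network request is needed.

4. If the request headers contain no-cache or If-Modified-Since/If-None-Match, a network request is needed.

5. If the response headers do not contain no-cache and the cached response is within its freshness limit, the cache can be used and no network request is needed.

6. If the cached response has expired, check whether the response headers set ETag/Last-Modified/Date. If not, make a plain network request; if so, make a conditional request and be prepared for the server to return 304.

In addition, whenever a network request is needed, the request must not carry only-if-cached; otherwise the framework returns 504 directly.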

//CacheInterceptor.kt

@Throws(IOException::class)

override fun intercept(chain: Interceptor.Chain): Response {

//1

val cacheCandidate = cache?.get(chain.request())

val now = System.currentTimeMillis()

//2

val strategy = CacheStrategy.Factory(now, chain.request(), cacheCandidate).compute()

val networkRequest = strategy.networkRequest

val cacheResponse = strategy.cacheResponse

cache?.trackResponse(strategy)

if (cacheCandidate != null && cacheResponse == null) {

// The cache candidate wasn't applicable. Close it.

cacheCandidate.body?.closeQuietly()

}

//3

// If we're forbidden from using the network and the cache is insufficient, fail.

if (networkRequest == null && cacheResponse == null) {

return Response.Builder()

.request(chain.request())

.protocol(Protocol.HTTP_1_1)

.code(HTTP_GATEWAY_TIMEOUT)

.message("Unsatisfiable Request (only-if-cached)")

.body(EMPTY_RESPONSE)

.sentRequestAtMillis(-1L)

.receivedResponseAtMillis(System.currentTimeMillis())

.build()

}

//4

// If we don't need the network, we're done.

if (networkRequest == null) {

return cacheResponse!!.newBuilder()

.cacheResponse(stripBody(cacheResponse))

.build()

}

var networkResponse: Response? = null

try {

//5

networkResponse = chain.proceed(networkRequest)

} finally {

// If we're crashing on I/O or otherwise, don't leak the cache body.

if (networkResponse == null && cacheCandidate != null) {

cacheCandidate.body?.closeQuietly()

}

}

//6

// If we have a cache response too, then we're doing a conditional get.

if (cacheResponse != null) {

if (networkResponse?.code == HTTP_NOT_MODIFIED) {

val response = cacheResponse.newBuilder()

.headers(combine(cacheResponse.headers, networkResponse.headers))

.sentRequestAtMillis(networkResponse.sentRequestAtMillis)

.receivedResponseAtMillis(networkResponse.receivedResponseAtMillis)

.cacheResponse(stripBody(cacheResponse))

.networkResponse(stripBody(networkResponse))

.build()

networkResponse.body!!.close()

// Update the cache after combining headers but before stripping the

// Content-Encoding header (as performed by initContentStream()).

cache!!.trackConditionalCacheHit()

cache.update(cacheResponse, response)

return response

} else {

cacheResponse.body?.closeQuietly()

}

}

val response = networkResponse!!.newBuilder()

.cacheResponse(stripBody(cacheResponse))

.networkResponse(stripBody(networkResponse))

.build()

//7

if (cache != null) {

if (response.promisesBody() && CacheStrategy.isCacheable(response, networkRequest)) {

// Offer this request to the cache.

val cacheRequest = cache.put(response)

return cacheWritingResponse(cacheRequest, response)

}

if (HttpMethod.invalidatesCache(networkRequest.method)) {

try {

cache.remove(networkRequest)

} catch (_: IOException) {

// The cache cannot be written.

}

}

}

return response

}

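The cache interceptor is neither especially complicated nor trivial; it just takes a clear picture of the flow.

- Forced cache: while the cached response is fresh, return it directly without a network request.

- Conditional cache: once the cached response has expired, make a network request. If the data has not changed, the server returns a 304 response without a body, meaning the client's cached copy is still valid; otherwise it returns the full, current data, and the client uses that.

Analysis:

- 1: cache comes from the Cache object passed in when building the OkHttpClient. The request URL is used as the key to look up a cached response as the candidate. If no Cache was supplied, cacheCandidate is null.

- 2: Compute the cache strategy and read its networkRequest and cacheResponse (the valid cached response). Whether networkRequest is null decides whether the network is used; whether cacheResponse is null decides whether the cache is used. The rest of the method branches on these two values.

- 3: No network request and no valid cached response: return a response with status 504 (HTTP_GATEWAY_TIMEOUT = 504).

- 4: Forced cache.

- 5: Call the next interceptor.

- 6: Once the server has returned networkResponse, apply the conditional-cache logic. A 304 status (HTTP_NOT_MODIFIED = 304) means the local cached copy is still valid.

- 7: Check whether networkResponse can be cached and, if so, store it.

To summarize:

networkRequest and cacheResponse are both null: build and return a response with status 504.

networkRequest is null and cacheResponse is not: use the forced cache.

networkRequest and cacheResponse are both non-null: use the conditional cache.

networkRequest is non-null and cacheResponse is null: make the network request and, depending on the response, cache it.

The cache only stores responses to GET requests.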
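The four combinations map naturally onto a `when` expression. In this sketch cacheAction is a hypothetical function, and plain nullable strings stand in for Request and Response.

```kotlin
// Hypothetical mapping of the strategy's two nullable fields to the action the
// cache interceptor takes. Strings stand in for Request and Response.
fun cacheAction(networkRequest: String?, cacheResponse: String?): String = when {
    networkRequest == null && cacheResponse == null -> "fail with 504"
    networkRequest == null -> "return cached response"
    cacheResponse != null -> "conditional request, reuse cache on 304"
    else -> "network request, then maybe store the response"
}
```

The order of the branches matters: the 504 case has to be checked before the cache-only case, just as in intercept().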

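As noted, most of the cache interceptor's logic lives in the cache strategy and the interceptor itself is simple. If a network request turns out to be necessary, the next interceptor is ConnectInterceptor.

Connect interceptor: ConnectInterceptor

Next comes ConnectInterceptor, the connect interceptor: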

//ConnectInterceptor.kt

@Throws(IOException::class)

override fun intercept(chain: Interceptor.Chain): Response {

val realChain = chain as RealInterceptorChain

val request = realChain.request()

val transmitter = realChain.transmitter()

// We need the network to satisfy this request. Possibly for validating a conditional GET.

val doExtensiveHealthChecks = request.method != "GET"

val exchange = transmitter.newExchange(chain, doExtensiveHealthChecks)

return realChain.proceed(request, transmitter, exchange)

}

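The code is short, but a lot of logic sits behind it. There are only pre and middle phases and no post phase, because this interceptor's job is to establish the connection over which the request will be sent. The most important step is the call to newExchange, which creates an Exchange that is passed to the next interceptor: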

//Transmitter.kt

internal fun newExchange(chain: Interceptor.Chain, doExtensiveHealthChecks: Boolean): Exchange {

synchronized(connectionPool) {

check(!noMoreExchanges) { "released" }

check(exchange == null) {

"cannot make a new request because the previous response is still open: " +

"please call response.close()"

}

}

//1

val codec = exchangeFinder!!.find(client, chain, doExtensiveHealthChecks)

//2

val result = Exchange(this, call, eventListener, exchangeFinder!!, codec)

synchronized(connectionPool) {

this.exchange = result

this.exchangeRequestDone = false

this.exchangeResponseDone = false

return result

}

}

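Only steps 1 and 2 matter here. Analysis:

- 1: Call exchangeFinder.find to obtain a codec (coder and decoder) for the connection. This exchangeFinder is the one prepared during the pre phase of the retry and redirect interceptor, and this is where it is put to use.

- 2: Wrap the codec in an Exchange and return it.

Note: the codec is needed because request messages must be written, and response messages read, in a specific format. That format can be HTTP/1 or HTTP/2, and each format has its own encoding.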
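To make "format" concrete, here is roughly what the encoding side of an HTTP/1.1 codec has to produce: a request line, one line per header, and an empty line, all terminated by CRLF. http1RequestHead is a hypothetical helper written for illustration and is not how OkHttp's codec is implemented; HTTP/2, by contrast, sends the same information as binary frames with compressed headers.

```kotlin
// Hypothetical illustration of what an HTTP/1.1 codec has to put on the wire:
// a request line, one "Name: value" line per header, then an empty line.
fun http1RequestHead(method: String, path: String, headers: Map<String, String>): String {
    val sb = StringBuilder()
    sb.append(method).append(' ').append(path).append(" HTTP/1.1\r\n")
    for ((name, value) in headers) {
        sb.append(name).append(": ").append(value).append("\r\n")
    }
    sb.append("\r\n")   // the empty line ends the header section
    return sb.toString()
}
```

Calling http1RequestHead("GET", "/", mapOf("Host" to "example.com")) yields the request line, the Host header, and the terminating blank line.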

Here is how the codec is obtained:

//ExchangeFinder.kt

fun find(

client: OkHttpClient,

chain: Interceptor.Chain,

doExtensiveHealthChecks: Boolean

): ExchangeCodec {

val connectTimeout = chain.connectTimeoutMillis()

val readTimeout = chain.readTimeoutMillis()

val writeTimeout = chain.writeTimeoutMillis()

val pingIntervalMillis = client.pingIntervalMillis

val connectionRetryEnabled = client.retryOnConnectionFailure

try {

//1

val resultConnection = findHealthyConnection(

connectTimeout = connectTimeout,

readTimeout = readTimeout,

writeTimeout = writeTimeout,

pingIntervalMillis = pingIntervalMillis,

connectionRetryEnabled = connectionRetryEnabled,

doExtensiveHealthChecks = doExtensiveHealthChecks

)

//2

return resultConnection.newCodec(client, chain)

} catch (e: RouteException) {

trackFailure()

throw e

} catch (e: IOException) {

trackFailure()

throw RouteException(e)

}

}

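Obtaining the codec takes two steps:

- 1: Get a healthy connection.

- 2: Use that connection, together with client and chain, to create the matching codec.

How is a healthy connection obtained?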

//ExchangeFinder.kt

@Throws(IOException::class)

private fun findHealthyConnection(

connectTimeout: Int,

readTimeout: Int,

writeTimeout: Int,

pingIntervalMillis: Int,

connectionRetryEnabled: Boolean,

doExtensiveHealthChecks: Boolean

): RealConnection {

while (true) {

//1

val candidate = findConnection(

connectTimeout = connectTimeout,

readTimeout = readTimeout,

writeTimeout = writeTimeout,

pingIntervalMillis = pingIntervalMillis,

connectionRetryEnabled = connectionRetryEnabled

)

// If this is a brand new connection, we can skip the extensive health checks.

synchronized(connectionPool) {

if (candidate.successCount == 0) {

return candidate

}

}

// Do a (potentially slow) check to confirm that the pooled connection is still good. If it

// isn't, take it out of the pool and start again.

//2

if (!candidate.isHealthy(doExtensiveHealthChecks)) {

candidate.noNewExchanges()

continue

}

return candidate

}

}

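Analysis:

- 1: Obtain a connection through findConnection. A brand-new connection (successCount == 0) is returned right away without a health check.

- 2: Otherwise check whether it is healthy. If not, continue (note the while loop) until a healthy connection is found and returned.

Note: the goal is to obtain a codec; the healthy connection is only a means to that end.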
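The loop can be modeled with a small data class. This is a sketch under stated assumptions: MiniConnection and findHealthy are hypothetical, `findConnection` is any supplier of candidates, and health is a plain boolean rather than a socket check.

```kotlin
// Hypothetical connection model: successCount == 0 marks a brand-new connection.
data class MiniConnection(val id: Int, val successCount: Int, val healthy: Boolean)

// `findConnection` stands in for ExchangeFinder.findConnection().
fun findHealthy(findConnection: () -> MiniConnection): MiniConnection {
    while (true) {
        val candidate = findConnection()
        if (candidate.successCount == 0) return candidate   // new: skip the health check
        if (!candidate.healthy) continue                     // stale pooled connection: try again
        return candidate
    }
}

// The first candidate is a stale pooled connection, the second is healthy.
fun demoFindHealthy(): Int {
    val candidates = listOf(
        MiniConnection(1, successCount = 3, healthy = false),
        MiniConnection(2, successCount = 1, healthy = true)
    ).iterator()
    return findHealthy { candidates.next() }.id
}
```

In the demo the stale connection is skipped and the second candidate is returned.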

So how is a connection found or created?

//ExchangeFinder.kt

@Throws(IOException::class)

private fun findConnection(

connectTimeout: Int,

readTimeout: Int,

writeTimeout: Int,

pingIntervalMillis: Int,

connectionRetryEnabled: Boolean

): RealConnection {

var foundPooledConnection = false

var result: RealConnection? = null

var selectedRoute: Route? = null

var releasedConnection: RealConnection?

val toClose: Socket?

synchronized(connectionPool) {

if (transmitter.isCanceled) throw IOException("Canceled")

hasStreamFailure = false // This is a fresh attempt.

//1

releasedConnection = transmitter.connection

toClose = if (transmitter.connection != null && transmitter.connection!!.noNewExchanges) {

transmitter.releaseConnectionNoEvents()

} else {

null

}

//2

if (transmitter.connection != null) {

// We had an already-allocated connection and it's good.

result = transmitter.connection

releasedConnection = null

}

//3

if (result == null) {

// Attempt to get a connection from the pool.

//4

if (connectionPool.transmitterAcquirePooledConnection(address, transmitter, null, false)) {

foundPooledConnection = true

result = transmitter.connection

} else if (nextRouteToTry != null) {

selectedRoute = nextRouteToTry

nextRouteToTry = null

} else if (retryCurrentRoute()) {

selectedRoute = transmitter.connection!!.route()

}

}

}

toClose?.closeQuietly()

if (releasedConnection != null) {

eventListener.connectionReleased(call, releasedConnection!!)

}

if (foundPooledConnection) {

eventListener.connectionAcquired(call, result!!)

}

//5

if (result != null) {

// If we found an already-allocated or pooled connection, we're done.

return result!!

}

// If we need a route selection, make one. This is a blocking operation.

var newRouteSelection = false

if (selectedRoute == null && (routeSelection == null || !routeSelection!!.hasNext())) {

newRouteSelection = true

routeSelection = routeSelector.next()

}

var routes: List<Route>? = null

synchronized(connectionPool) {

if (transmitter.isCanceled) throw IOException("Canceled")

if (newRouteSelection) {

// Now that we have a set of IP addresses, make another attempt at getting a connection from

// the pool. This could match due to connection coalescing.

routes = routeSelection!!.routes

//6

if (connectionPool.transmitterAcquirePooledConnection(

address, transmitter, routes, false)) {

foundPooledConnection = true

result = transmitter.connection

}

}

if (!foundPooledConnection) {

if (selectedRoute == null) {

selectedRoute = routeSelection!!.next()

}

// Create a connection and assign it to this allocation immediately. This makes it possible

// for an asynchronous cancel() to interrupt the handshake we're about to do.

//7

result = RealConnection(connectionPool, selectedRoute!!)

connectingConnection = result

}

}

// If we found a pooled connection on the 2nd time around, we're done.

if (foundPooledConnection) {

eventListener.connectionAcquired(call, result!!)

return result!!

}

//8

// Do TCP + TLS handshakes. This is a blocking operation.

result!!.connect(

connectTimeout,

readTimeout,

writeTimeout,

pingIntervalMillis,

connectionRetryEnabled,

call,

eventListener

)

connectionPool.routeDatabase.connected(result!!.route())

var socket: Socket? = null

synchronized(connectionPool) {

connectingConnection = null

// Last attempt at connection coalescing, which only occurs if we attempted multiple

// concurrent connections to the same host.

//9

if (connectionPool.transmitterAcquirePooledConnection(address, transmitter, routes, true)) {

// We lost the race! Close the connection we created and return the pooled connection.

result!!.noNewExchanges = true

socket = result!!.socket()

result = transmitter.connection

// It's possible for us to obtain a coalesced connection that is immediately unhealthy. In

// that case we will retry the route we just successfully connected with.

nextRouteToTry = selectedRoute

} else {

//10

connectionPool.put(result!!)

transmitter.acquireConnectionNoEvents(result!!)

}

}

socket?.closeQuietly()

eventListener.connectionAcquired(call, result!!)

return result!!

}

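The code is long, so we only look at the key points.

Analysis:

On the first pass everything still has its default value, so the connection examined in steps 1 and 2 is null. We therefore start at step 3 and come back to 1 and 2 later.

- 3: On the first pass result is null, so we reach step 4.

- 4: Call transmitterAcquirePooledConnection on connectionPool, which tries to take a connection out of the pool.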

//RealConnectionPool.kt

fun transmitterAcquirePooledConnection(

address: Address,

transmitter: Transmitter,

routes: List<Route>?,

requireMultiplexed: Boolean

): Boolean {

this.assertThreadHoldsLock()

for (connection in connections) {

if (requireMultiplexed && !connection.isMultiplexed) continue

if (!connection.isEligible(address, routes)) continue

transmitter.acquireConnectionNoEvents(connection)

return true

}

return false

}

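This loops over the connections in the pool. Step 4 passed requireMultiplexed = false, so the first check is skipped. As soon as connection.isEligible(address, routes) returns true, the connection is usable and is taken: acquireConnectionNoEvents(connection) assigns it to the Transmitter's connection field, which is therefore no longer null the next time it is read.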
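To make the scan concrete, here is a small self-contained sketch. PooledConn, CallSlot, and acquireFromPool are hypothetical names, and the eligibility rule is passed in as a lambda rather than implemented; it is an illustration of the loop's shape, not the library's code.

```kotlin
// Hypothetical, simplified model of a pooled connection.
class PooledConn(val host: String, val isMultiplexed: Boolean)

// Stands in for the Transmitter field that receives the acquired connection.
class CallSlot {
    var connection: PooledConn? = null
}

// Take the first connection that passes both filters; report whether one was found.
fun acquireFromPool(
    pool: List<PooledConn>,
    slot: CallSlot,
    requireMultiplexed: Boolean,
    isEligible: (PooledConn) -> Boolean
): Boolean {
    for (connection in pool) {
        if (requireMultiplexed && !connection.isMultiplexed) continue
        if (!isEligible(connection)) continue
        slot.connection = connection // the call now holds this connection
        return true
    }
    return false
}
```

With requireMultiplexed = false the first eligible connection wins; with requireMultiplexed = true non-multiplexed connections are skipped even if they are otherwise eligible.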

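Note: our goal is to get a codec; that led us to a healthy connection, and from there to how a connection is taken from the pool.

Which raises the next question: what counts as a usable connection?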

//RealConnection.kt

internal fun isEligible(address: Address, routes: List<Route>?): Boolean {

//1

// If this connection is not accepting new exchanges, we're done.

if (transmitters.size >= allocationLimit || noNewExchanges) return false

//2

// If the non-host fields of the address don't overlap, we're done.

if (!this.route.address.equalsNonHost(address)) return false

//3

// If the host exactly matches, we're done: this connection can carry the address.

if (address.url.host == this.route().address.url.host) {

return true // This connection is a perfect match.

}

// At this point we don't have a hostname match. But we still be able to carry the request if

// our connection coalescing requirements are met. See also:

// https://hpbn.co/optimizing-application-delivery/#eliminate-domain-sharding

// https://daniel.haxx.se/blog/2016/08/18/http2-connection-coalescing/

// 1. This connection must be HTTP/2.

if (http2Connection == null) return false

// 2. The routes must share an IP address.

if (routes == null || !routeMatchesAny(routes)) return false

// 3. This connection's server certificate's must cover the new host.

if (address.hostnameVerifier !== OkHostnameVerifier) return false

if (!supportsUrl(address.url)) return false

// 4. Certificate pinning must match the host.

try {

address.certificatePinner!!.check(address.url.host, handshake()!!.peerCertificates)

} catch (_: SSLPeerUnverifiedException) {

return false

}

return true // The caller's address can be carried by this connection.

}

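The method falls into two parts: one that applies to HTTP/1 and HTTP/2 alike, and one that only applies to HTTP/2.

Analysis:

- 1: With HTTP/1, allocationLimit is 1, so each connection can carry only one request at a time. With HTTP/2 the limit is higher because streams are multiplexed; it follows the peer's maximum-concurrent-streams setting rather than a fixed constant. transmitters.size is the number of calls currently using the connection, and noNewExchanges says whether the connection refuses new requests. So if the connection still accepts requests and adding this one stays within allocationLimit, we continue; otherwise we return false.

- 2: Check whether this request goes to the same place as the connection. All of the following values must be equal, including the port, the TLS settings, the proxy configuration, and so on.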

//Address.kt

internal fun equalsNonHost(that: Address): Boolean {

return this.dns == that.dns &&

this.proxyAuthenticator == that.proxyAuthenticator &&

this.protocols == that.protocols &&

this.connectionSpecs == that.connectionSpecs &&

this.proxySelector == that.proxySelector &&

this.proxy == that.proxy &&

this.sslSocketFactory == that.sslSocketFactory &&

this.hostnameVerifier == that.hostnameVerifier &&

this.certificatePinner == that.certificatePinner &&

this.url.port == that.url.port

}

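- 3: If the target host name equals the connection's host name, return true.

You might think that is already enough to decide, so what is the rest of the code for? It targets HTTP/2 (which in practice means HTTPS) and a feature called connection coalescing. Different domain names can resolve to the same host IP, so the domain names do not strictly have to match. But how do we make sure that the shared IP really serves the host we want to reach? By checking the server's certificate.

If the host names differ, read on. The connection must be HTTP/2, otherwise false is returned right away. Next, routes (Route is explained below) must be non-null and one of them must match this connection's IP and proxy mode. The first lookup passes routes as null, so if it does not hit the exact-host match above it returns false here, and findConnection carries on. routes is therefore what makes coalescing possible. When routes is present, the certificate must also cover the new host, the port must match, and certificate pinning must pass before the connection counts as usable.

So in isEligible, a usable connection is one that has not reached its request limit and reaches the same host in the same way.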
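The decision order in isEligible can be condensed into a toy model. Everything below is a simplifying assumption made for illustration: SketchAddress, SketchRealConnection, and isEligibleSketch are hypothetical names, the non-host comparison is reduced to port and proxy, and the certificate check is reduced to set membership.

```kotlin
// Hypothetical, heavily simplified address: the real one has many more fields.
data class SketchAddress(val host: String, val port: Int, val proxy: String?)

class SketchRealConnection(
    val address: SketchAddress,
    val ip: String,
    val isHttp2: Boolean,
    val allocationLimit: Int,
    val certificateHosts: Set<String> // hosts the server certificate is assumed to cover
) {
    var activeCalls = 0
    var noNewExchanges = false
}

fun isEligibleSketch(
    conn: SketchRealConnection,
    target: SketchAddress,
    routeIps: List<String>?
): Boolean {
    // 1. The connection must have spare capacity and still accept new exchanges.
    if (conn.activeCalls >= conn.allocationLimit || conn.noNewExchanges) return false
    // 2. Everything except the host name must match.
    if (conn.address.port != target.port || conn.address.proxy != target.proxy) return false
    // 3. Exact host match: done.
    if (conn.address.host == target.host) return true
    // Otherwise only HTTP/2 coalescing can still make the connection usable.
    if (!conn.isHttp2) return false
    if (routeIps == null || conn.ip !in routeIps) return false
    return target.host in conn.certificateHosts
}
```

Note how a request for a different host is rejected when routeIps is null and only accepted once the resolved IPs are supplied, which mirrors the difference between the first and second pool lookups.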

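Note: our goal is to get a codec; that led us to a healthy connection, and from there to how a connection is taken from the pool.

Back in the long findConnection method: we now know how a usable connection is taken from the pool, so let's continue.

- 5: If step 4 found a usable connection, it is returned here and the rest of the method is skipped; the caller then checks whether it is healthy.

- 6: If the first lookup found nothing, try the pool once more. The only difference from the first lookup is the extra routes argument, so this is a good place to explain Address and Route.

Address: essentially two pieces of information, a host name and a port. With these, the IP can be resolved and, combined with the proxy type, the actual host to connect to can be determined. The Address is passed in when the first interceptor does its preparation and creates the exchangeFinder. Apart from the host name uriHost and the port uriPort, all other Address parameters come from the OkHttpClient.

Route: contains the Address (domain name and port), the Proxy, and the IP address. Connection coalescing depends on the Proxy and the IP address and port held in a Route, so only when routes are passed in can a matching route be found and coalescing take place.

As before, a connection found this way is returned via result = transmitter.connection.

- 7: If the second lookup also fails, foundPooledConnection stays false and a new connection object is created.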

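- 8: Establish the connection (step 7 only created the object).

- 9: Why look in the pool yet again? When the pool holds no usable connection, the first and second lookups both fail and the call has to connect by itself. If two requests to the same destination do this at the same moment, keeping two identical connections would waste resources. The request that finishes connecting first finds nothing in the pool and goes on to step 10, which puts its connection into the pool. Because of the synchronized block, the request that arrives later looks in the pool, finds that connection, and discards the one it has just created. This lookup passes requireMultiplexed = true, so only multiplexed connections are accepted: the two requests share one route, and sharing it requires multiplexing.

- 10: Put the newly created connection into the pool.

Earlier we said that connection is always null on the first pass, but once a connection has been created it no longer is. So when is findConnection entered with a non-null connection? When a request fails and is retried, or when it is redirected.

- 1: On re-entry, if the call already holds a connection that can no longer be reused, mark that connection for release and close its socket later.

- 2: If the connection is still there after step 1, it is usable, so it is used directly.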

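To summarize, finding a usable connection involves five steps:

1. Check whether the call already holds a connection; if it does and the connection is still acceptable, use it.

2. Take a connection from the pool by address only (no routes, multiplexing not required).

3. Take a connection from the pool again, this time with routes, which allows HTTP/2 connection coalescing.

4. Create a new connection and connect.

5. After connecting, look in the pool once more, accepting only multiplexed connections; if one is found, discard the connection just created.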
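The order of these five steps can be written down as a short sketch. This is an illustration only: connections are represented by plain strings, the three pool lookups are replaced by precomputed nullable results, and ConnectionSource, LookupInputs, and findConnectionSketch are hypothetical names that do not exist in OkHttp.

```kotlin
enum class ConnectionSource {
    ALREADY_ALLOCATED, POOL_BY_ADDRESS, POOL_BY_ROUTES, NEWLY_CREATED, POOL_AFTER_RACE
}

// Each field is the (assumed) outcome of one lookup; null means "nothing found".
class LookupInputs(
    val allocated: String?,        // step 1: connection the call already holds
    val poolByAddress: String?,    // step 2: pool lookup without routes
    val poolByRoutes: String?,     // step 3: pool lookup with routes
    val poolAfterConnect: String?  // step 5: multiplexed-only lookup after connecting
)

fun findConnectionSketch(
    inputs: LookupInputs,
    createAndConnect: () -> String
): Pair<String, ConnectionSource> {
    inputs.allocated?.let { return it to ConnectionSource.ALREADY_ALLOCATED }
    inputs.poolByAddress?.let { return it to ConnectionSource.POOL_BY_ADDRESS }
    inputs.poolByRoutes?.let { return it to ConnectionSource.POOL_BY_ROUTES }
    // Step 4: nothing reusable, so pay for a new connection.
    val created = createAndConnect()
    // Step 5: another call may have pooled an equivalent connection while we were connecting.
    // If so, the one we just created is dropped in favor of the pooled one.
    inputs.poolAfterConnect?.let { return it to ConnectionSource.POOL_AFTER_RACE }
    return created to ConnectionSource.NEWLY_CREATED
}
```

The expensive createAndConnect call is only reached when all three cheaper options fail, and even then its result may be thrown away in step 5.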

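Note: our goal is to get a codec; that led us to a healthy connection, and from there to how a connection is taken from the pool.

Now let's see what makes the connection we obtained healthy.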

//RealConnection.kt

/** Returns true if this connection is ready to host new streams. */

fun isHealthy(doExtensiveChecks: Boolean): Boolean {

val socket = this.socket!!

val source = this.source!!

if (socket.isClosed || socket.isInputShutdown || socket.isOutputShutdown) {

return false

}

val http2Connection = this.http2Connection

if (http2Connection != null) {

return http2Connection.isHealthy(System.nanoTime())

}

if (doExtensiveChecks) {

try {

val readTimeout = socket.soTimeout

try {

socket.soTimeout = 1

return !source.exhausted()

} finally {

socket.soTimeout = readTimeout

}

} catch (_: SocketTimeoutException) {

// Read timed out; socket is good.

} catch (_: IOException) {

return false // Couldn't read; socket is closed.

}

}

return true

}

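This mainly checks that the socket has not been closed or shut down. For HTTP/2 it then asks the HTTP/2 connection whether it is healthy, that is, whether it has missed its heartbeat (ping) deadline. For HTTP/1 with extensive checks enabled, it attempts a read with a 1 ms timeout: a timeout means the socket is idle but alive, while end-of-stream or an IOException means the socket is dead.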
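The three possible outcomes of that short read can be isolated in a few lines. This is a sketch under assumptions: probeIsHealthy is a hypothetical helper, and the actual socket read is abstracted into a lambda that returns a byte, returns -1 at end of stream, or throws.

```kotlin
import java.io.IOException
import java.net.SocketTimeoutException

// readOneByte models a read attempted with a very short timeout.
fun probeIsHealthy(readOneByte: () -> Int): Boolean =
    try {
        // -1 means end of stream: the peer has closed the connection.
        readOneByte() != -1
    } catch (e: SocketTimeoutException) {
        // Nothing arrived within the timeout: the socket is idle but still open.
        true
    } catch (e: IOException) {
        // Any other I/O failure means the socket is no longer usable.
        false
    }
```

SocketTimeoutException is caught before IOException because it is a subclass of it; reversing the two catch clauses would make every timeout look like a dead socket.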

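At this point we have a healthy, usable connection, which is used to create the matching codec.

Back in the connect interceptor's call to Transmitter.newExchange: the codec now exists, and it carries not only the encoder/decoder but also a healthy, usable connection. The codec is wrapped in an Exchange and returned to the connect interceptor, which finally hands the exchange to the next interceptor.

The request interceptor CallServerInterceptor

Next comes the last interceptor, CallServerInterceptor, the one that actually talks to the server.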

//CallServerInterceptor.kt

@Throws(IOException::class)

override fun intercept(chain: Interceptor.Chain): Response {

val realChain = chain as RealInterceptorChain

val exchange = realChain.exchange()

val request = realChain.request()

val requestBody = request.body

val sentRequestMillis = System.currentTimeMillis()

exchange.writeRequestHeaders(request)

var invokeStartEvent = true

var responseBuilder: Response.Builder? = null

if (HttpMethod.permitsRequestBody(request.method) && requestBody != null) {

// If there's a "Expect: 100-continue" header on the request, wait for a "HTTP/1.1 100

// Continue" response before transmitting the request body. If we don't get that, return

// what we did get (such as a 4xx response) without ever transmitting the request body.

if ("100-continue".equals(request.header("Expect"), ignoreCase = true)) {

exchange.flushRequest()

responseBuilder = exchange.readResponseHeaders(expectContinue = true)

exchange.responseHeadersStart()

invokeStartEvent = false

}

if (responseBuilder == null) {

if (requestBody.isDuplex()) {

// Prepare a duplex body so that the application can send a request body later.

exchange.flushRequest()

val bufferedRequestBody = exchange.createRequestBody(request, true).buffer()

requestBody.writeTo(bufferedRequestBody)

} else {

// Write the request body if the "Expect: 100-continue" expectation was met.

val bufferedRequestBody = exchange.createRequestBody(request, false).buffer()

requestBody.writeTo(bufferedRequestBody)

bufferedRequestBody.close()

}

} else {

exchange.noRequestBody()

if (!exchange.connection()!!.isMultiplexed) {

// If the "Expect: 100-continue" expectation wasn't met, prevent the HTTP/1 connection

// from being reused. Otherwise we're still obligated to transmit the request body to

// leave the connection in a consistent state.

exchange.noNewExchangesOnConnection()

}

}

} else {

exchange.noRequestBody()

}

if (requestBody == null || !requestBody.isDuplex()) {

exchange.finishRequest()

}

if (responseBuilder == null) {

responseBuilder = exchange.readResponseHeaders(expectContinue = false)!!

if (invokeStartEvent) {

exchange.responseHeadersStart()

invokeStartEvent = false

}

}

var response = responseBuilder

.request(request)

.handshake(exchange.connection()!!.handshake())

.sentRequestAtMillis(sentRequestMillis)

.receivedResponseAtMillis(System.currentTimeMillis())

.build()

var code = response.code

if (code == 100) {

// Server sent a 100-continue even though we did not request one. Try again to read the actual

// response status.

responseBuilder = exchange.readResponseHeaders(expectContinue = false)!!

if (invokeStartEvent) {

exchange.responseHeadersStart()

}

response = responseBuilder

.request(request)

.handshake(exchange.connection()!!.handshake())

.sentRequestAtMillis(sentRequestMillis)

.receivedResponseAtMillis(System.currentTimeMillis())

.build()

code = response.code

}

exchange.responseHeadersEnd(response)

response = if (forWebSocket && code == 101) {

// Connection is upgrading, but we need to ensure interceptors see a non-null response body.

response.newBuilder()

.body(EMPTY_RESPONSE)

.build()

} else {

response.newBuilder()

.body(exchange.openResponseBody(response))

.build()

}

if ("close".equals(response.request.header("Connection"), ignoreCase = true) ||

"close".equals(response.header("Connection"), ignoreCase = true)) {

exchange.noNewExchangesOnConnection()

}

if ((code == 204 || code == 205) && response.body?.contentLength() ?: -1L > 0L) {

throw ProtocolException(

"HTTP $code had non-zero Content-Length: ${response.body?.contentLength()}")

}

return response

}

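This interceptor is comparatively simple: it sends the request, reads the response, and passes the response back. As the last link in the chain of responsibility, it has no "before" and "after" around a call to a next interceptor; it just performs the actual request that the earlier interceptors prepared and returns the response. The result then travels back up the chain and finally arrives in RealCall at response = getResponseWithInterceptorChain().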
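The only branching of note in this interceptor is whether the request body gets written. The sketch below restates that branching as a pure function; BodyAction and decideBodyAction are hypothetical names, and the inputs are simplified booleans standing in for the checks in the listing above (for example, gotFinalResponseEarly corresponds to responseBuilder being non-null after the "Expect: 100-continue" round trip).

```kotlin
enum class BodyAction { SEND_BODY, SKIP_BODY, SKIP_BODY_AND_RETIRE_CONNECTION }

fun decideBodyAction(
    methodPermitsBody: Boolean,
    hasBody: Boolean,
    expectsContinue: Boolean,        // request carries "Expect: 100-continue"
    gotFinalResponseEarly: Boolean,  // server answered with something other than 100
    connectionIsMultiplexed: Boolean
): BodyAction {
    // No body to send at all.
    if (!methodPermitsBody || !hasBody) return BodyAction.SKIP_BODY
    // Without the Expect header, or once the server said "continue", the body is written.
    if (!expectsContinue || !gotFinalResponseEarly) return BodyAction.SEND_BODY
    // The expectation was not met. An HTTP/1 connection is left in an inconsistent
    // state, so it must not be reused; a multiplexed connection is unaffected.
    return if (connectionIsMultiplexed) BodyAction.SKIP_BODY
    else BodyAction.SKIP_BODY_AND_RETIRE_CONNECTION
}
```

For example, a POST with "Expect: 100-continue" that receives a 4xx immediately on an HTTP/1 connection yields SKIP_BODY_AND_RETIRE_CONNECTION.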

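Summary

All of OkHttp's functionality is implemented in these five default interceptors, so understanding how the interceptor pattern works is a prerequisite. The five are the retry interceptor, the bridge interceptor, the cache interceptor, the connect interceptor, and the call-server interceptor. Each has its own job, much like stations on a factory assembly line: after these five stations, the finished product comes out. Unlike an assembly line, though, every OkHttp interceptor does some work before handing the request to the next interceptor and some more work after it gets the result back. Requests pass through the interceptors in order, and responses pass through them in reverse order.

When the user issues a request, the task dispatcher (Dispatcher) wraps it and hands it to the retry interceptor.

1. The retry interceptor, before handing over (to the next interceptor), checks whether the user has cancelled the request. After getting the result, it decides from the response code whether a redirect is needed; if so, it runs all the following interceptors again.

2. The bridge interceptor, before handing over, adds the request headers that HTTP requires (such as Host) and some default behavior (such as GZIP compression). After getting the result, it calls the cookie-saving interface and decompresses GZIP data.

3. The cache interceptor, as the name suggests, reads the cache before handing over and decides whether to use it. After getting the result, it decides whether to cache it.

4. The connect interceptor, before handing over, finds or creates a connection and obtains the corresponding socket streams. After getting the result, it does no extra processing.

5. The call-server interceptor does the real communication with the server: it sends the data and parses the response it reads back.

After this series of steps, one HTTP request is complete!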
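The "in order on the way out, reverse order on the way back" behavior is easy to see in a toy chain. The sketch below is not OkHttp's Interceptor API: SketchInterceptor, runChain, logging, and terminal are hypothetical names, and requests and responses are plain strings.

```kotlin
// Hypothetical interceptor shape: do work, call proceed, do more work.
fun interface SketchInterceptor {
    fun intercept(request: String, proceed: (String) -> String): String
}

// Run interceptor `index`, giving it a proceed function that runs the rest of the chain.
fun runChain(interceptors: List<SketchInterceptor>, request: String, index: Int = 0): String {
    if (index == interceptors.size) error("the last interceptor must not call proceed")
    return interceptors[index].intercept(request) { next ->
        runChain(interceptors, next, index + 1)
    }
}

// An interceptor that records when it sees the request and when it sees the response.
fun logging(name: String, log: MutableList<String>) = SketchInterceptor { request, proceed ->
    log += "$name:request"
    val response = proceed(request)
    log += "$name:response"
    response
}

// The last interceptor produces the response itself and never calls proceed.
fun terminal(log: MutableList<String>) = SketchInterceptor { request, _ ->
    log += "server:call"
    "response to $request"
}
```

Running a chain of logging("retry"), logging("bridge"), and terminal records retry:request, bridge:request, server:call, bridge:response, retry:response, which is the nesting the summary describes.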

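Advantages of OkHttp:

- Supports HTTP/1 and HTTP/2

- Reuses the underlying TCP connections through a connection pool, which reduces request latency

- Transparent GZIP support, which reduces traffic and improves transfer efficiency

- Caches response data to avoid repeated network requests

- Automatically retries failed requests and follows redirects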