[StarRocks] Creating a Table: A Walkthrough of the FE Source Code

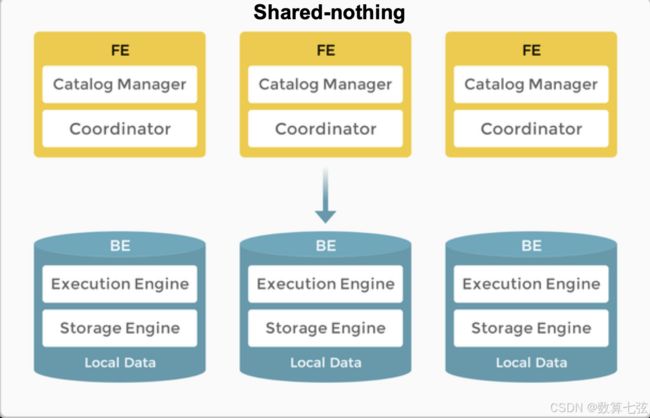

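StarRocks System Architecture in Brief

Most mainstream OLAP systems with a coupled storage-compute architecture run two kinds of processes: Frontend (FE) and Backend (BE).

The Frontend is usually written in Java. Its main responsibilities are:

- Accepting user connections (the MySQL protocol layer)

- Storing and managing metadata

- Parsing queries and generating query plans

- Managing and controlling the cluster

The Backend is usually written in C++. Its main responsibilities are:

- Storing and managing data

- Executing query plans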

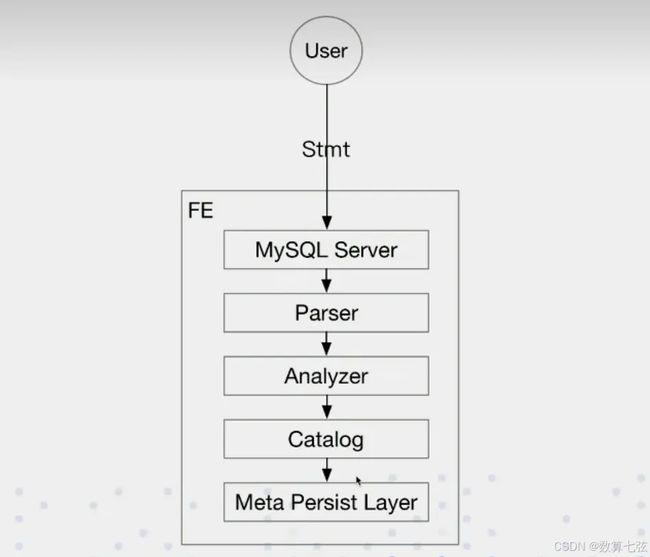

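How a CREATE TABLE Statement Is Executed

- Interaction with the MySQL protocol layer

- Lexical analysis

- Syntax analysis

- Metadata modification

- Metadata persistence

- Success is reported

Reading the Source

Main function ------ fe\fe-core\src\main\java\com\starrocks\StarRocksFE.java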

// entrance for starrocks frontend

public static void start(String starRocksDir, String pidDir, String[] args) {

// Environment checks and setup

// Verify that the STARROCKS_HOME environment variable is set; it points to the StarRocks installation directory

if (Strings.isNullOrEmpty(starRocksDir)) {

System.err.println("env STARROCKS_HOME is not set.");

return;

}

// Check that the PID_DIR environment variable is set; it is where the process ID file is stored

if (Strings.isNullOrEmpty(pidDir)) {

System.err.println("env PID_DIR is not set.");

return;

}

// Parse the command-line arguments with parseArgs(args)

CommandLineOptions cmdLineOpts = parseArgs(args);

try {

// Try to create and lock a file named fe.pid under pidDir. If the file is already locked, an FE is probably already running

if (!createAndLockPidFile(pidDir + "/fe.pid")) {

throw new IOException("pid file is already locked.");

}

// Initialize the configuration with the Config class, reading conf/fe.conf under starRocksDir

new Config().init(starRocksDir + "/conf/fe.conf");

// Validate the command-line options before logging is initialized, to avoid unnecessary stdout output

// NOTE: do it before init log4jConfig to avoid unnecessary stdout messages

checkCommandLineOptions(cmdLineOpts);

// Initialize the logging system with Log4jConfig.initLogging()

Log4jConfig.initLogging();

// Set the DNS cache time-to-live (TTL) to 60 seconds

java.security.Security.setProperty("networkaddress.cache.ttl", "60");

// Need to put if before `GlobalStateMgr.getCurrentState().waitForReady()`, because it may access aws service

// Configure the AWS HTTP client if needed

setAWSHttpClient();

// Make sure the metadata directory is valid via MetaHelper.checkMetaDir()

MetaHelper.checkMetaDir();

LOG.info("StarRocks FE starting, version: {}-{}", Version.STARROCKS_VERSION, Version.STARROCKS_COMMIT_HASH);

// Initialize FrontendOptions and set up the execution environment

FrontendOptions.init(args);

ExecuteEnv.setup();

// Initialize the global state manager, the core FE component that manages all global state and services

GlobalStateMgr.getCurrentState().initialize(args);

StateChangeExecutor.getInstance().setMetaContext(

GlobalStateMgr.getCurrentState().getMetaContext());

if (RunMode.isSharedDataMode()) {

Journal journal = GlobalStateMgr.getCurrentState().getJournal();

if (journal instanceof BDBJEJournal) {

BDBEnvironment bdbEnvironment = ((BDBJEJournal) journal).getBdbEnvironment();

StarMgrServer.getCurrentState().initialize(bdbEnvironment,

GlobalStateMgr.getCurrentState().getImageDir());

} else {

LOG.error("journal type should be BDBJE for star mgr!");

System.exit(-1);

}

StateChangeExecutor.getInstance().registerStateChangeExecution(

StarMgrServer.getCurrentState().getStateChangeExecution());

}

StateChangeExecutor.getInstance().registerStateChangeExecution(

GlobalStateMgr.getCurrentState().getStateChangeExecution());

// GlobalStateMgr's state-change execution is registered with StateChangeExecutor above; now start the executor

StateChangeExecutor.getInstance().start();

// Wait until the global state manager is ready; this marks the point where the service can start accepting requests

GlobalStateMgr.getCurrentState().waitForReady();

// Save the FE start type

FrontendOptions.saveStartType();

// Start CoordinatorMonitor for service health monitoring

CoordinatorMonitor.getInstance().start();

// Core service startup: start the query engine service (QeService),

// the Thrift service (FrontendThriftServer) and the HTTP service (HttpServer),

// which expose the MySQL-protocol, Thrift and HTTP interfaces to users

// 1. QeService for MySQL Server

// 2. FrontendThriftServer for Thrift Server

// 3. HttpServer for HTTP Server

QeService qeService = new QeService(Config.query_port, Config.mysql_service_nio_enabled,

ExecuteEnv.getInstance().getScheduler());

FrontendThriftServer frontendThriftServer = new FrontendThriftServer(Config.rpc_port);

HttpServer httpServer = new HttpServer(Config.http_port);

httpServer.setup();

frontendThriftServer.start();

httpServer.start();

qeService.start();

ThreadPoolManager.registerAllThreadPoolMetric();

addShutdownHook();

LOG.info("FE started successfully");

while (!stopped) {

Thread.sleep(2000);

}

} catch (Throwable e) {

LOG.error("StarRocksFE start failed", e);

System.exit(-1);

}

System.exit(0);

}

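Summary

- Core service startup: the query engine service (QeService), the Thrift service (FrontendThriftServer) and the HTTP service (HttpServer) are started, giving users MySQL-protocol, Thrift and HTTP interfaces.

- As I understand it, QeService handles SQL requests from clients: it listens on the MySQL server port (query_port, 9030) for SQL sent by users.

- HttpServer is the service through which the FE receives Stream Load requests. The FE listens on http_port (8030) for them, and each request is then handed over to a BE, which receives the data on its webserver_port (8040).

- FrontendThriftServer listens on the FE's rpc_port (9020). On the BE side, port 9060 receives requests coming from the FE.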

QeService.java

public class QeService {

private static final Logger LOG = LogManager.getLogger(QeService.class);

// MySQL protocol service

// QeService is essentially a wrapper around MysqlServer

private MysqlServer mysqlServer;

public QeService(int port, boolean nioEnabled, ConnectScheduler scheduler) throws Exception {

SSLContext sslContext = null;

if (!Strings.isNullOrEmpty(Config.ssl_keystore_location)

&& SSLChannelImpClassLoader.loadSSLChannelImpClazz() != null) {

sslContext = createSSLContext();

}

// Create the MySQL-protocol server on port 9030

// The whole protocol implementation lives inside this mysqlServer

if (nioEnabled) {

mysqlServer = new NMysqlServer(port, scheduler, sslContext);

} else {

mysqlServer = new MysqlServer(port, scheduler, sslContext);

}

}

public void start() throws IOException {

if (!mysqlServer.start()) {

LOG.error("mysql server start failed");

System.exit(-1);

}

LOG.info("QE service start.");

}

public MysqlServer getMysqlServer() {

return mysqlServer;

}

public void setMysqlServer(MysqlServer mysqlServer) {

this.mysqlServer = mysqlServer;

}

private SSLContext createSSLContext() throws Exception {

KeyStore keyStore = KeyStore.getInstance("JKS");

try (InputStream keyStoreIS = new FileInputStream(Config.ssl_keystore_location)) {

keyStore.load(keyStoreIS, Config.ssl_keystore_password.toCharArray());

}

KeyManagerFactory kmf = KeyManagerFactory.getInstance(KeyManagerFactory.getDefaultAlgorithm());

kmf.init(keyStore, Config.ssl_key_password.toCharArray());

SSLContext sslContext = SSLContext.getInstance("TLSv1.2");

TrustManager[] trustManagers = null;

if (!Strings.isNullOrEmpty(Config.ssl_truststore_location)) {

trustManagers = createTrustManagers(Config.ssl_truststore_location, Config.ssl_truststore_password);

}

sslContext.init(kmf.getKeyManagers(), trustManagers, new SecureRandom());

return sslContext;

}

/**

* Creates the trust managers required to initiate the {@link SSLContext}, using a JKS keystore as an input.

*

* @param filepath - the path to the JKS keystore.

* @param keystorePassword - the keystore's password.

* @return {@link TrustManager} array, that will be used to initiate the {@link SSLContext}.

* @throws Exception

*/

private TrustManager[] createTrustManagers(String filepath, String keystorePassword) throws Exception {

KeyStore trustStore = KeyStore.getInstance("JKS");

InputStream trustStoreIS = new FileInputStream(filepath);

try {

trustStore.load(trustStoreIS, keystorePassword.toCharArray());

} finally {

if (trustStoreIS != null) {

trustStoreIS.close();

}

}

TrustManagerFactory trustFactory = TrustManagerFactory.getInstance(TrustManagerFactory.getDefaultAlgorithm());

trustFactory.init(trustStore);

return trustFactory.getTrustManagers();

}

}

Summary

If you are interested in the MySQL client/server protocol involved here, see: https://dev.mysql.com/doc/internals/en/client-server-protocol.html

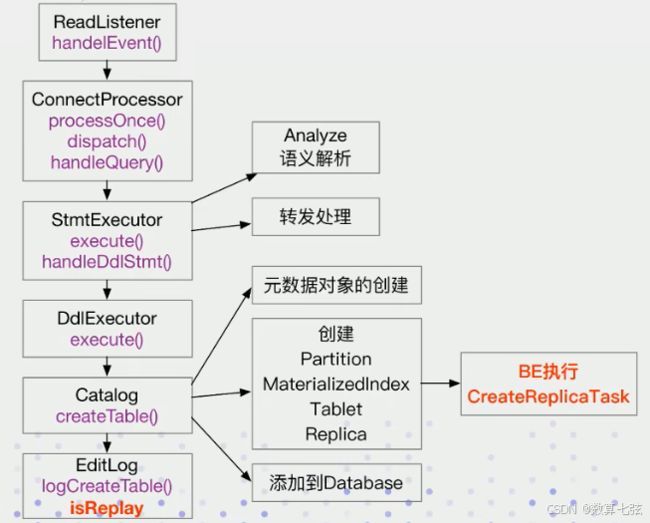

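Code Call Flow

Part 1 is the protocol layer: ReadListener, an event listener built on NIO, watches user connections and starts accepting client requests once a connection with the client is established.

Part 2 identifies the type of each incoming request (the MySQL command).

Part 3 identifies the type of SQL statement, for example CREATE TABLE, a query, INSERT, routine load, stream load, and so on.

Part 4 is the executor for each statement type.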

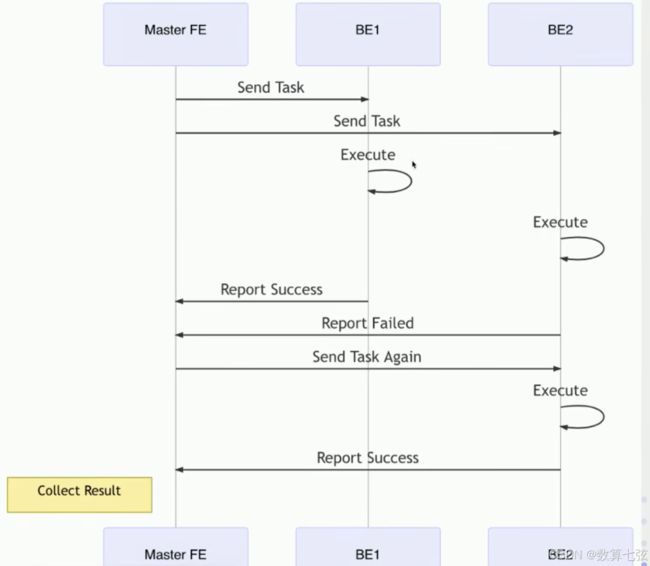

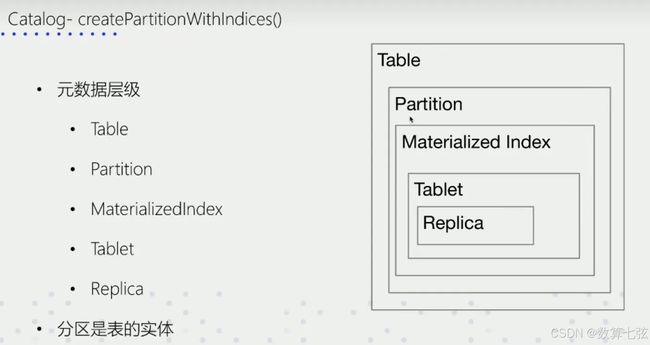

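Part 5: when the executor actually runs, it first modifies the metadata, then sends tasks to the BEs to create the tablets.

Part 6: once the tablets have been created, the metadata is persisted.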

ReadListener-handleEvent

/**

* listener for handle mysql cmd.

* MySQL protocol listener: it listens on the ConduitStreamSourceChannel and hands each ready channel to a worker thread,

* which then calls connectProcessor.processOnce() to handle one client request.

*/

public class ReadListener implements ChannelListener<ConduitStreamSourceChannel> {

private static final Logger LOG = LogManager.getLogger(ReadListener.class);

private NConnectContext ctx;

private ConnectProcessor connectProcessor;

public ReadListener(NConnectContext nConnectContext, ConnectProcessor connectProcessor) {

this.ctx = nConnectContext;

this.connectProcessor = connectProcessor;

}

@Override

public void handleEvent(ConduitStreamSourceChannel channel) {

// suspend must be call sync in current thread (the IO-Thread notify the read event),

// otherwise multi handler(task thread) would be waked up by once query.

XnioIoThread.requireCurrentThread();

ctx.suspendAcceptQuery();

// start async query handle in task thread.

try {

channel.getWorker().execute(() -> {

ctx.setThreadLocalInfo();

try {

connectProcessor.processOnce();

if (!ctx.isKilled()) {

ctx.resumeAcceptQuery();

} else {

ctx.stopAcceptQuery();

ctx.cleanup();

}

} catch (RpcException rpce) {

LOG.debug("Exception happened in one session(" + ctx + ").", rpce);

ctx.setKilled();

ctx.cleanup();

} catch (Exception e) {

LOG.warn("Exception happened in one session(" + ctx + ").", e);

ctx.setKilled();

ctx.cleanup();

} finally {

ConnectContext.remove();

}

});

} catch (Throwable e) {

if (e instanceof Error) {

LOG.error("connect processor exception because ", e);

} else {

// should be unexpected exception, so print warn log

LOG.warn("connect processor exception because ", e);

}

ctx.setKilled();

ctx.cleanup();

ConnectContext.remove();

}

}

}

ConnectProcessor-processOnce() -> dispatch()

public void processOnce() throws IOException {

// set status of query to OK.

ctx.getState().reset();

executor = null;

// reset sequence id of MySQL protocol

// a client request has arrived

final MysqlChannel channel = ctx.getMysqlChannel();

channel.setSequenceId(0);

try {

// fetch one packet from the channel

packetBuf = channel.fetchOnePacket();

if (packetBuf == null) {

throw new RpcException(ctx.getRemoteIP(), "Error happened when receiving packet.");

}

} catch (AsynchronousCloseException e) {

// when this happened, timeout checker close this channel

// killed flag in ctx has been already set, just return

return;

}

// parse the packet

dispatch();

// finalize

finalizeCommand();

ctx.setCommand(MysqlCommand.COM_SLEEP);

}

private void dispatch() throws IOException {

// Parsing, step 1: read the leading byte of the packet

int code = packetBuf.get();

// 2. map it to a MySQL command

MysqlCommand command = MysqlCommand.fromCode(code);

if (command == null) {

ErrorReport.report(ErrorCode.ERR_UNKNOWN_COM_ERROR);

ctx.getState().setError("Unknown command(" + command + ")");

LOG.debug("Unknown MySQL protocol command");

return;

}

ctx.setCommand(command);

ctx.setStartTime();

ctx.setResourceGroup(null);

ctx.setErrorCode("");

switch (command) {

// 3. call the handler that matches the request type

case COM_INIT_DB:

handleInitDb();

break;

case COM_QUIT:

handleQuit();

break;

// 4. this is the only branch we care about: all DDL and DML SQL arrives here

case COM_QUERY:

case COM_STMT_PREPARE:

handleQuery();

ctx.setStartTime();

break;

case COM_STMT_RESET:

handleStmtReset();

break;

case COM_STMT_CLOSE:

handleStmtClose();

break;

case COM_FIELD_LIST:

handleFieldList();

break;

case COM_CHANGE_USER:

handleChangeUser();

break;

case COM_RESET_CONNECTION:

handleResetConnection();

break;

case COM_PING:

handlePing();

break;

case COM_STMT_EXECUTE:

handleExecute();

break;

default:

ctx.getState().setError("Unsupported command(" + command + ")");

LOG.debug("Unsupported command: {}", command);

break;

}

}
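The dispatch step above boils down to "read one byte, map it to a command, pick a handler". Here is a minimal, self-contained sketch of that idea. The class `MysqlDispatchSketch`, its trimmed-down `Command` enum and the returned handler names are made up for illustration and are not the FE's classes; the command codes themselves come from the MySQL client/server protocol.

```java
import java.nio.ByteBuffer;

// Hypothetical illustration of byte-based command dispatch; not StarRocks code.
final class MysqlDispatchSketch {
    // A small subset of MySQL protocol command codes.
    enum Command {
        COM_QUIT(0x01), COM_INIT_DB(0x02), COM_QUERY(0x03), COM_PING(0x0e);

        final int code;

        Command(int code) {
            this.code = code;
        }

        // Returns null for codes this sketch does not know about.
        static Command fromCode(int code) {
            for (Command c : values()) {
                if (c.code == code) {
                    return c;
                }
            }
            return null;
        }
    }

    // Reads the first payload byte and names the handler that would run.
    static String dispatch(ByteBuffer packet) {
        int code = packet.get();
        Command command = Command.fromCode(code);
        if (command == null) {
            return "error: unknown command code " + code;
        }
        switch (command) {
            case COM_QUERY:
                return "handleQuery";
            case COM_INIT_DB:
                return "handleInitDb";
            case COM_QUIT:
                return "handleQuit";
            case COM_PING:
                return "handlePing";
            default:
                return "unsupported";
        }
    }

    public static void main(String[] args) {
        byte[] payload = {0x03, 'S', 'E', 'L', 'E', 'C', 'T', ' ', '1'};
        System.out.println(dispatch(ByteBuffer.wrap(payload)));
    }
}
```

A CREATE TABLE statement sent from a MySQL client arrives as a COM_QUERY packet, which is why only that branch matters for the rest of this walkthrough.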

ConnectProcessor - handleQuery()

// process COM_QUERY statement,

protected void handleQuery() {

MetricRepo.COUNTER_REQUEST_ALL.increase(1L);

// convert statement to Java string

String originStmt = null;

byte[] bytes = packetBuf.array();

int ending = packetBuf.limit() - 1;

while (ending >= 1 && bytes[ending] == '\0') {

ending--;

}

// extract the original SQL string from the packet

originStmt = new String(bytes, 1, ending, StandardCharsets.UTF_8);

// execute this query.

StatementBase parsedStmt = null;

try {

ctx.setQueryId(UUIDUtil.genUUID());

List<StatementBase> stmts;

try {

// parse the original SQL

stmts = com.starrocks.sql.parser.SqlParser.parse(originStmt, ctx.getSessionVariable());

} catch (ParsingException parsingException) {

throw new AnalysisException(parsingException.getMessage());

}

for (int i = 0; i < stmts.size(); ++i) {

ctx.getState().reset();

if (i > 0) {

ctx.resetReturnRows();

ctx.setQueryId(UUIDUtil.genUUID());

}

parsedStmt = stmts.get(i);

// from jdbc no params like that. COM_STMT_PREPARE + select 1

if (ctx.getCommand() == MysqlCommand.COM_STMT_PREPARE && !(parsedStmt instanceof PrepareStmt)) {

parsedStmt = new PrepareStmt("", parsedStmt, new ArrayList<>());

}

// only for JDBC, COM_STMT_PREPARE bundled with jdbc

if (ctx.getCommand() == MysqlCommand.COM_STMT_PREPARE && (parsedStmt instanceof PrepareStmt)) {

((PrepareStmt) parsedStmt).setName(String.valueOf(ctx.getStmtId()));

if (!(((PrepareStmt) parsedStmt).getInnerStmt() instanceof QueryStatement)) {

throw new AnalysisException("prepare statement only support QueryStatement");

}

}

parsedStmt.setOrigStmt(new OriginStatement(originStmt, i));

Tracers.init(ctx, parsedStmt.getTraceMode(), parsedStmt.getTraceModule());

// Only add the last running stmt for multi statement,

// because the audit log will only show the last stmt.

if (i == stmts.size() - 1) {

addRunningQueryDetail(parsedStmt);

}

executor = new StmtExecutor(ctx, parsedStmt);

ctx.setExecutor(executor);

ctx.setIsLastStmt(i == stmts.size() - 1);

executor.execute();

// do not execute following stmt when current stmt failed, this is consistent with mysql server

if (ctx.getState().getStateType() == QueryState.MysqlStateType.ERR) {

break;

}

if (i != stmts.size() - 1) {

// NOTE: set serverStatus after executor.execute(),

// because when execute() throws exception, the following stmt will not execute

// and the serverStatus with MysqlServerStatusFlag.SERVER_MORE_RESULTS_EXISTS will

// cause client error: Packet sequence number wrong

ctx.getState().serverStatus |= MysqlServerStatusFlag.SERVER_MORE_RESULTS_EXISTS;

finalizeCommand();

}

}

} catch (AnalysisException e) {

LOG.warn("Failed to parse SQL: " + originStmt + ", because.", e);

ctx.getState().setError(e.getMessage());

ctx.getState().setErrType(QueryState.ErrType.ANALYSIS_ERR);

} catch (Throwable e) {

// Catch all throwable.

// If reach here, maybe StarRocks bug.

LOG.warn("Process one query failed. SQL: " + originStmt + ", because unknown reason: ", e);

ctx.getState().setError("Unexpected exception: " + e.getMessage());

ctx.getState().setErrType(QueryState.ErrType.INTERNAL_ERR);

} finally {

Tracers.close();

}

// audit after exec

// replace '\n' to '\\n' to make string in one line

// TODO(cmy): when user send multi-statement, the executor is the last statement's executor.

// We may need to find some way to resolve this.

if (executor != null) {

auditAfterExec(originStmt, executor.getParsedStmt(), executor.getQueryStatisticsForAuditLog());

} else {

// executor can be null if we encounter analysis error.

auditAfterExec(originStmt, null, null);

}

addFinishedQueryDetail();

}
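The first few lines of handleQuery() are easy to skim past, so here is the same byte arithmetic in isolation. This is a sketch under the assumption that the payload is a plain byte array whose first byte is the command code; `QueryPayloadSketch` and `extractSql` are names invented for this example.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical illustration of pulling the SQL text out of a COM_QUERY payload.
final class QueryPayloadSketch {
    // payload[0] is the command byte; the rest is the SQL text,
    // possibly followed by trailing NUL bytes that must be dropped.
    static String extractSql(byte[] payload, int limit) {
        int ending = limit - 1;
        while (ending >= 1 && payload[ending] == '\0') {
            ending--;
        }
        // Bytes 1..ending (inclusive) hold the statement, so its length is exactly `ending`.
        return new String(payload, 1, ending, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] payload = {0x03, 'S', 'E', 'L', 'E', 'C', 'T', ' ', '1', 0, 0};
        System.out.println(extractSql(payload, payload.length));
    }
}
```

The offset of 1 skips the command byte, and because the text starts at index 1, the index of its last byte doubles as its length.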

SqlParser.parse -> parseWithStarRocksDialect

public static List<StatementBase> parse(String sql, SessionVariable sessionVariable) {

if (sessionVariable.getSqlDialect().equalsIgnoreCase("trino")) {

return parseWithTrinoDialect(sql, sessionVariable);

} else {

return parseWithStarRocksDialect(sql, sessionVariable);

}

}

private static List<StatementBase> parseWithStarRocksDialect(String sql, SessionVariable sessionVariable) {

List<StatementBase> statements = Lists.newArrayList();

StarRocksParser parser = parserBuilder(sql, sessionVariable);

// Call parser.sqlStatements().singleStatement() to parse the SQL string into a list of SingleStatementContext objects, each representing one independently executable statement in the SQL text.

List<StarRocksParser.SingleStatementContext> singleStatementContexts =

parser.sqlStatements().singleStatement();

// Walk through the statements (the input is split on ';') and return all of them

for (int idx = 0; idx < singleStatementContexts.size(); ++idx) {

// Create a HintCollector over the parser's token stream to collect the hints in the SQL

HintCollector collector = new HintCollector((CommonTokenStream) parser.getTokenStream());

// Collect the hints of the current statement with collector.collect()

collector.collect(singleStatementContexts.get(idx));

// Create an AstBuilder from the session's SQL mode and the collector's context-to-hint map

AstBuilder astBuilder = new AstBuilder(sessionVariable.getSqlMode(), collector.getContextWithHintMap());

// Visit the current SingleStatementContext with AstBuilder.visitSingleStatement to produce the corresponding StatementBase

StatementBase statement = (StatementBase) astBuilder.visitSingleStatement(singleStatementContexts.get(idx));

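// Check whether the AstBuilder collected any parameters and the statement is not already a PrepareStmt

// If so, create a new PrepareStmt with the original statement as its inner statement and the parameters attached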

if (astBuilder.getParameters() != null && astBuilder.getParameters().size() != 0

&& !(statement instanceof PrepareStmt)) {

// for prepare stm1 from '', here statement is inner statement

statement = new PrepareStmt("", statement, astBuilder.getParameters());

}

// Otherwise, record the original SQL source on the statement (OriginStatement)

else {

statement.setOrigStmt(new OriginStatement(sql, idx));

}

statements.add(statement);

}

if (ConnectContext.get() != null) {

// If a ConnectContext exists, set its relation-alias case-insensitivity flag to false

ConnectContext.get().setRelationAliasCaseInSensitive(false);

}

return statements;

}

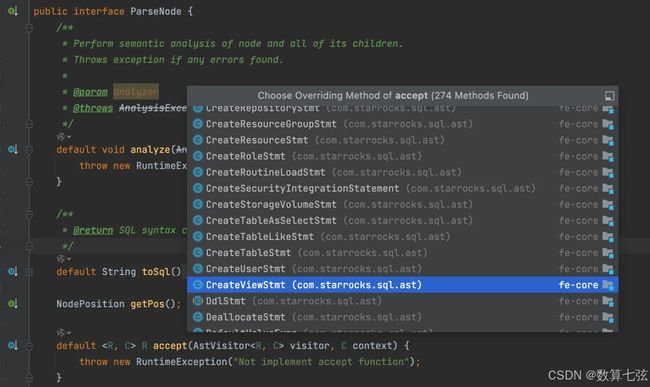

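ConnectProcessor - Lexical and Syntax Analysis

Several kinds of analysis happen here:

Lexical analysis: split the SQL into tokens and check that each word is valid.

Syntax analysis: combine the tokens into an AST (abstract syntax tree) that represents the statement to be executed, and check that the statement as a whole is grammatically correct.

Semantic analysis: check that the statement makes sense:

- Are the names legal?

- Are the column types legal?

- Are the partitions legal?

- Are the privileges sufficient?

For details on execution plans, see: https://www.cnblogs.com/guoyu1/p/17938981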
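To make "lexical analysis" concrete, here is a toy tokenizer. It is only a sketch: the FE uses a generated parser (the StarRocksParser and CommonTokenStream seen in the code above), whereas this example is a hand-rolled regular expression that recognizes just identifiers, integers and a few punctuation characters. The class name `LexerSketch` and its token classes are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical toy lexer; far simpler than a real SQL lexer.
final class LexerSketch {
    // One alternative per token class: identifier/keyword, integer, single punctuation character.
    private static final Pattern TOKEN =
            Pattern.compile("\\s*(?:([A-Za-z_][A-Za-z0-9_]*)|(\\d+)|([(),;=]))");

    static List<String> tokenize(String sql) {
        String input = sql.trim();
        List<String> tokens = new ArrayList<>();
        Matcher m = TOKEN.matcher(input);
        int pos = 0;
        while (pos < input.length()) {
            m.region(pos, input.length());
            if (!m.lookingAt()) {
                // No token class matches here: this is a lexical error.
                throw new IllegalArgumentException("unexpected character near offset " + pos);
            }
            // Exactly one of the three groups matched; keep its text as the token.
            String token = m.group(1) != null ? m.group(1)
                    : m.group(2) != null ? m.group(2) : m.group(3);
            tokens.add(token);
            pos = m.end();
        }
        return tokens;
    }

    public static void main(String[] args) {
        System.out.println(tokenize("CREATE TABLE t (k INT)"));
    }
}
```

A syntax analyzer would then consume this token list and decide whether the sequence forms a valid statement. The lexer alone only knows whether each individual word is acceptable.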

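ConnectProcessor executor.execute() -> StmtExecutor - SQL Statement Execution

Forwarding

Leader 1 Follower 2 Observer 3

- An FE can be a Leader, a Follower or an Observer.

- Only the Leader can modify metadata, and some metadata exists only on the Leader.

- A client can connect to any FE node, either directly or through a load balancer (SLB) that picks one. Every operation that modifies metadata has to be forwarded to the Leader for execution. When the Leader is done, it returns the result to the FE that received the request, and that FE then replies to the client. StarRocks relies on this internal forwarding mechanism so that every FE can handle all SQL.

- Forwarding types

  - FORWARD_NO_SYNC

  - FORWARD_WITH_SYNC

  - NO_FORWARD

FORWARD_NO_SYNC: the FE that receives the statement forwards it to the Leader for execution, but does not need to wait for the metadata change to be synchronized back. Example: SHOW BACKENDS.

FORWARD_WITH_SYNC: covers most operations that need the Leader to modify metadata, such as creating or dropping a table.

NO_FORWARD: all query statements. They can run directly on the current node and need no forwarding.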
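The forwarding rule can be written down in a few lines. The sketch below is a simplification under stated assumptions: `ForwardSketch`, `classify` and `mustForward` are hypothetical names, and classifying by the leading keyword of the SQL text is only a stand-in for whatever the FE does with the parsed statement. Treating unknown statements as NO_FORWARD is likewise an arbitrary choice made for this example.

```java
import java.util.Locale;

// Hypothetical illustration of the three forwarding types; not StarRocks code.
final class ForwardSketch {
    enum RedirectStatus { FORWARD_NO_SYNC, FORWARD_WITH_SYNC, NO_FORWARD }

    // Assumption: decide from the leading keyword, using the examples given in the text.
    static RedirectStatus classify(String sql) {
        String head = sql.trim().toUpperCase(Locale.ROOT);
        if (head.startsWith("SHOW BACKENDS")) {
            return RedirectStatus.FORWARD_NO_SYNC;
        }
        if (head.startsWith("CREATE") || head.startsWith("DROP") || head.startsWith("ALTER")) {
            return RedirectStatus.FORWARD_WITH_SYNC;
        }
        // Queries, and (by assumption) anything this sketch does not recognize, run locally.
        return RedirectStatus.NO_FORWARD;
    }

    // A non-Leader FE forwards everything except NO_FORWARD statements; the Leader never forwards.
    static boolean mustForward(boolean isLeader, RedirectStatus status) {
        return !isLeader && status != RedirectStatus.NO_FORWARD;
    }

    public static void main(String[] args) {
        RedirectStatus status = classify("CREATE TABLE t (k INT)");
        System.out.println(status + ", forward from follower: " + mustForward(false, status));
    }
}
```

For CREATE TABLE this means a Follower or Observer that receives the statement hands it to the Leader, and the rest of the walkthrough describes what the Leader then does.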

After parsing, the SQL command is executed. For a CREATE TABLE statement the path is: DDLStmtExecutor - execute()

DdlExecutor -> visitCreateTableStatement

public static ShowResultSet execute(StatementBase stmt, ConnectContext context) throws Exception {

try {

return stmt.accept(StmtExecutorVisitor.getInstance(), context);

} catch (RuntimeException re) {

if (re.getCause() instanceof DdlException) {

throw (DdlException) re.getCause();

} else if (re.getCause() instanceof IOException) {

throw (IOException) re.getCause();

} else if (re.getCause() != null) {

throw new DdlException(re.getCause().getMessage(), re);

} else {

throw re;

}

}

}

@Override

public ShowResultSet visitCreateTableStatement(CreateTableStmt stmt, ConnectContext context) {

ErrorReport.wrapWithRuntimeException(() -> {

context.getGlobalStateMgr().getMetadataMgr().createTable(stmt);

});

return null;

}
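The two methods above combine the visitor pattern with an exception round trip: the visit method cannot declare a checked exception, so the failure is wrapped in a RuntimeException and unwrapped again in execute(). Here is a self-contained sketch of that pattern. All names (`VisitorSketch`, `DdlFailure`, `CreateTable`, `DropTable`, `Executor`, `run`) are hypothetical stand-ins, not the FE's classes.

```java
// Hypothetical illustration of visitor dispatch plus exception unwrapping.
final class VisitorSketch {
    // Stand-in for a checked DDL exception.
    static class DdlFailure extends Exception {
        DdlFailure(String message) {
            super(message);
        }
    }

    interface Visitor<R> {
        R visitCreateTable(CreateTable stmt);

        R visitDropTable(DropTable stmt);
    }

    interface Statement {
        <R> R accept(Visitor<R> visitor);
    }

    static final class CreateTable implements Statement {
        final String name;

        CreateTable(String name) {
            this.name = name;
        }

        @Override
        public <R> R accept(Visitor<R> visitor) {
            return visitor.visitCreateTable(this);
        }
    }

    static final class DropTable implements Statement {
        final String name;

        DropTable(String name) {
            this.name = name;
        }

        @Override
        public <R> R accept(Visitor<R> visitor) {
            return visitor.visitDropTable(this);
        }
    }

    static final class Executor implements Visitor<String> {
        @Override
        public String visitCreateTable(CreateTable stmt) {
            if (stmt.name.isEmpty()) {
                // The visit method declares no checked exception, so the failure is wrapped.
                throw new RuntimeException(new DdlFailure("table name is empty"));
            }
            return "created " + stmt.name;
        }

        @Override
        public String visitDropTable(DropTable stmt) {
            return "dropped " + stmt.name;
        }
    }

    // Dispatches on the statement type and restores the original checked exception.
    static String execute(Statement stmt) throws DdlFailure {
        try {
            return stmt.accept(new Executor());
        } catch (RuntimeException re) {
            if (re.getCause() instanceof DdlFailure) {
                throw (DdlFailure) re.getCause();
            }
            throw re;
        }
    }

    // Convenience wrapper that turns the outcome into a string.
    static String run(Statement stmt) {
        try {
            return execute(stmt);
        } catch (DdlFailure e) {
            return "failed: " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        System.out.println(run(new CreateTable("t1")));
        System.out.println(run(new CreateTable("")));
    }
}
```

The benefit of this shape is that adding a new statement type means adding one visit method, while callers of execute() still see the original checked exception rather than a generic wrapper.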

public boolean createTable(CreateTableStmt stmt) throws DdlException {

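// Read the catalog name from the CREATE TABLE statement; the statement also carries the database name (dbName), the table name (tableName) and extra creation options such as the ifNotExists flag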

String catalogName = stmt.getCatalogName();

Optional<ConnectorMetadata> connectorMetadata = getOptionalMetadata(catalogName);

if (connectorMetadata.isPresent()) {

// Determine whether this CREATE TABLE targets the internal catalog or an external one

if (!CatalogMgr.isInternalCatalog(catalogName)) {

String dbName = stmt.getDbName();

String tableName = stmt.getTableName();

if (getDb(catalogName, dbName) == null) {

ErrorReport.reportDdlException(ErrorCode.ERR_BAD_DB_ERROR, dbName);

}

if (tableExists(catalogName, dbName, tableName)) {

if (stmt.isSetIfNotExists()) {

LOG.info("create table[{}] which already exists", tableName);

return false;

} else {

ErrorReport.reportDdlException(ErrorCode.ERR_TABLE_EXISTS_ERROR, tableName);

}

}

}

return connectorMetadata.get().createTable(stmt);

} else {

throw new DdlException("Invalid catalog " + catalogName + " , ConnectorMetadata doesn't exist");

}

}

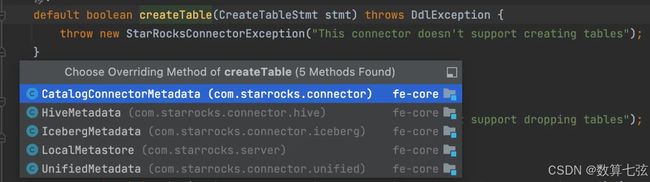

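The concrete table-creation logic then depends on whether the table is internal or on which kind of external table it is.

Creating an internal table goes through the LocalMetastore class.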

/**

* Following is the step to create an olap table:

* 1. create columns

* 2. create partition info

* 3. create distribution info

* 4. set table id and base index id

* 5. set bloom filter columns

* 6. set and build TableProperty includes:

* 6.1. dynamicProperty

* 6.2. replicationNum

* 6.3. inMemory

* 7. set index meta

* 8. check colocation properties

* 9. create tablet in BE

* 10. add this table to FE's meta

* 11. add this table to ColocateGroup if necessary

*

* @return whether the table is created

*/

@Override

public boolean createTable(CreateTableStmt stmt) throws DdlException {

// check if db exists

Database db = getDb(stmt.getDbName());

if (db == null) {

ErrorReport.reportDdlException(ErrorCode.ERR_BAD_DB_ERROR, stmt.getDbName());

}

// perform the existence check which is cheap before any further heavy operations.

// NOTE: don't even check the quota if already exists.

db.readLock();

try {

String tableName = stmt.getTableName();

if (db.getTable(tableName) != null) {

if (!stmt.isSetIfNotExists()) {

ErrorReport.reportDdlException(ErrorCode.ERR_TABLE_EXISTS_ERROR, tableName);

}

LOG.info("create table[{}] which already exists", tableName);

return false;

}

} finally {

db.readUnlock();

}

// only internal table should check quota and cluster capacity

if (!stmt.isExternal()) {

// check cluster capacity

GlobalStateMgr.getCurrentSystemInfo().checkClusterCapacity();

// check db quota

db.checkQuota();

}

AbstractTableFactory tableFactory = TableFactoryProvider.getFactory(stmt.getEngineName());

if (tableFactory == null) {

ErrorReport.reportDdlException(ErrorCode.ERR_UNKNOWN_STORAGE_ENGINE, stmt.getEngineName());

}

Table table = tableFactory.createTable(this, db, stmt);

String storageVolumeId = GlobalStateMgr.getCurrentState().getStorageVolumeMgr()

.getStorageVolumeIdOfTable(table.getId());

try {

onCreate(db, table, storageVolumeId, stmt.isSetIfNotExists());

} catch (DdlException e) {

if (table.isCloudNativeTable()) {

GlobalStateMgr.getCurrentState().getStorageVolumeMgr().unbindTableToStorageVolume(table.getId());

}

throw e;

}

return true;

}
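The opening of LocalMetastore.createTable() does a cheap existence check under a read lock before any heavy work, and honors IF NOT EXISTS by returning false instead of failing. A minimal sketch of that pattern follows. The class `CreateIfNotExistsSketch` is hypothetical, the metadata is reduced to an in-memory map, and the second check under the write lock is my own assumption about how a racing creation would be handled, not something shown in the excerpt.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical illustration of "check cheaply first, then create"; not StarRocks code.
final class CreateIfNotExistsSketch {
    private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
    private final Map<String, String> tables = new HashMap<>();

    // Returns true if the table was created, false if it already existed and ifNotExists was set.
    boolean createTable(String name, boolean ifNotExists) {
        // Cheap existence check under the read lock, before any heavy operation.
        lock.readLock().lock();
        try {
            if (tables.containsKey(name)) {
                if (!ifNotExists) {
                    throw new IllegalStateException("Table '" + name + "' already exists");
                }
                return false;
            }
        } finally {
            lock.readLock().unlock();
        }

        // Heavy work (building the schema, creating tablets) would happen here without the lock.

        // Assumption: re-check under the write lock, since another thread may have won the race.
        lock.writeLock().lock();
        try {
            if (tables.containsKey(name)) {
                if (!ifNotExists) {
                    throw new IllegalStateException("Table '" + name + "' already exists");
                }
                return false;
            }
            tables.put(name, "OLAP");
            return true;
        } finally {
            lock.writeLock().unlock();
        }
    }

    public static void main(String[] args) {
        CreateIfNotExistsSketch meta = new CreateIfNotExistsSketch();
        System.out.println(meta.createTable("t1", false));
        System.out.println(meta.createTable("t1", true));
    }
}
```

Doing the first check under a read lock keeps concurrent readers unblocked, and it lets a repeated CREATE TABLE IF NOT EXISTS return early without touching quotas or cluster capacity.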

OlapTableFactory - createTable

@Override

@NotNull

public Table createTable(LocalMetastore metastore, Database db, CreateTableStmt stmt) throws DdlException {

GlobalStateMgr stateMgr = metastore.getStateMgr();

ColocateTableIndex colocateTableIndex = metastore.getColocateTableIndex();

String tableName = stmt.getTableName();

LOG.debug("begin create olap table: {}", tableName);

// create columns

List<Column> baseSchema = stmt.getColumns();

metastore.validateColumns(baseSchema);

// create partition info

PartitionDesc partitionDesc = stmt.getPartitionDesc();

PartitionInfo partitionInfo;

Map<String, Long> partitionNameToId = Maps.newHashMap();

if (partitionDesc != null) {

// gen partition id first

if (partitionDesc instanceof RangePartitionDesc) {

RangePartitionDesc rangePartitionDesc = (RangePartitionDesc) partitionDesc;

for (SingleRangePartitionDesc desc : rangePartitionDesc.getSingleRangePartitionDescs()) {

long partitionId = metastore.getNextId();

partitionNameToId.put(desc.getPartitionName(), partitionId);

}

} else if (partitionDesc instanceof ListPartitionDesc) {

ListPartitionDesc listPartitionDesc = (ListPartitionDesc) partitionDesc;

listPartitionDesc.findAllPartitionNames()

.forEach(partitionName -> partitionNameToId.put(partitionName, metastore.getNextId()));

} else if (partitionDesc instanceof ExpressionPartitionDesc) {

ExpressionPartitionDesc expressionPartitionDesc = (ExpressionPartitionDesc) partitionDesc;

for (SingleRangePartitionDesc desc : expressionPartitionDesc.getRangePartitionDesc()

.getSingleRangePartitionDescs()) {

long partitionId = metastore.getNextId();

partitionNameToId.put(desc.getPartitionName(), partitionId);

}

DynamicPartitionUtil.checkIfExpressionPartitionAllowed(stmt.getProperties(),

expressionPartitionDesc.getExpr());

} else {

throw new DdlException("Currently only support range or list partition with engine type olap");

}

partitionInfo = partitionDesc.toPartitionInfo(baseSchema, partitionNameToId, false);

// Automatic partitioning needs to ensure that at least one tablet is opened.

if (partitionInfo.isAutomaticPartition()) {

long partitionId = metastore.getNextId();

String replicateNum = String.valueOf(RunMode.defaultReplicationNum());

if (stmt.getProperties() != null) {

replicateNum = stmt.getProperties().getOrDefault("replication_num",

String.valueOf(RunMode.defaultReplicationNum()));

}

partitionInfo.createAutomaticShadowPartition(partitionId, replicateNum);

partitionNameToId.put(ExpressionRangePartitionInfo.AUTOMATIC_SHADOW_PARTITION_NAME, partitionId);

}

} else {

if (DynamicPartitionUtil.checkDynamicPartitionPropertiesExist(stmt.getProperties())) {

throw new DdlException("Only support dynamic partition properties on range partition table");

}

long partitionId = metastore.getNextId();

// use table name as single partition name

partitionNameToId.put(tableName, partitionId);

partitionInfo = new SinglePartitionInfo();

}

// get keys type

KeysDesc keysDesc = stmt.getKeysDesc();

Preconditions.checkNotNull(keysDesc);

KeysType keysType = keysDesc.getKeysType();

// create distribution info

DistributionDesc distributionDesc = stmt.getDistributionDesc();

Preconditions.checkNotNull(distributionDesc);

DistributionInfo distributionInfo = distributionDesc.toDistributionInfo(baseSchema);

short shortKeyColumnCount = 0;

List<Integer> sortKeyIdxes = new ArrayList<>();

if (stmt.getSortKeys() != null) {

List<String> baseSchemaNames = baseSchema.stream().map(Column::getName).collect(Collectors.toList());

for (String column : stmt.getSortKeys()) {

int idx = baseSchemaNames.indexOf(column);

if (idx == -1) {

throw new DdlException("Invalid column '" + column + "': not exists in all columns.");

}

sortKeyIdxes.add(idx);

}

shortKeyColumnCount =

GlobalStateMgr.calcShortKeyColumnCount(baseSchema, stmt.getProperties(), sortKeyIdxes);

} else {

shortKeyColumnCount = GlobalStateMgr.calcShortKeyColumnCount(baseSchema, stmt.getProperties());

}

LOG.debug("create table[{}] short key column count: {}", tableName, shortKeyColumnCount);

// indexes

TableIndexes indexes = new TableIndexes(stmt.getIndexes());

// set base index info to table

// this should be done before create partition.

Map<String, String> properties = stmt.getProperties();

// create table

long tableId = GlobalStateMgr.getCurrentState().getNextId();

OlapTable table;

// only OlapTable support light schema change so far

Boolean useFastSchemaEvolution = true;

if (stmt.isExternal()) {

table = new ExternalOlapTable(db.getId(), tableId, tableName, baseSchema, keysType, partitionInfo,

distributionInfo, indexes, properties);

if (GlobalStateMgr.getCurrentState().getNodeMgr()

.checkFeExistByRPCPort(((ExternalOlapTable) table).getSourceTableHost(),

((ExternalOlapTable) table).getSourceTablePort())) {

throw new DdlException("can not create OLAP external table of self cluster");

}

useFastSchemaEvolution = false;

} else if (stmt.isOlapEngine()) {

RunMode runMode = RunMode.getCurrentRunMode();

String volume = "";

if (properties != null && properties.containsKey(PropertyAnalyzer.PROPERTIES_STORAGE_VOLUME)) {

volume = properties.remove(PropertyAnalyzer.PROPERTIES_STORAGE_VOLUME);

}

if (runMode == RunMode.SHARED_DATA) {

if (volume.equals(StorageVolumeMgr.LOCAL)) {

throw new DdlException("Cannot create table " +

"without persistent volume in current run mode \"" + runMode + "\"");

}

table = new LakeTable(tableId, tableName, baseSchema, keysType, partitionInfo, distributionInfo, indexes);

StorageVolumeMgr svm = GlobalStateMgr.getCurrentState().getStorageVolumeMgr();

if (table.isCloudNativeTable() && !svm.bindTableToStorageVolume(volume, db.getId(), tableId)) {

throw new DdlException(String.format("Storage volume %s not exists", volume));

}

String storageVolumeId = svm.getStorageVolumeIdOfTable(tableId);

metastore.setLakeStorageInfo(db, table, storageVolumeId, properties);

useFastSchemaEvolution = false;

} else {

table = new OlapTable(tableId, tableName, baseSchema, keysType, partitionInfo, distributionInfo, indexes);

}

} else {

throw new DdlException("Unrecognized engine \"" + stmt.getEngineName() + "\"");

}

try {

table.setComment(stmt.getComment());

// set base index id

long baseIndexId = metastore.getNextId();

table.setBaseIndexId(baseIndexId);

// get use light schema change

try {

useFastSchemaEvolution &= PropertyAnalyzer.analyzeUseFastSchemaEvolution(properties);

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

table.setUseFastSchemaEvolution(useFastSchemaEvolution);

for (Column column : baseSchema) {

column.setUniqueId(table.incAndGetMaxColUniqueId());

}

List<Integer> sortKeyUniqueIds = new ArrayList<>();

if (useFastSchemaEvolution) {

for (Integer idx : sortKeyIdxes) {

sortKeyUniqueIds.add(baseSchema.get(idx).getUniqueId());

}

} else {

LOG.debug("table: {} doesn't use light schema change", table.getName());

}

// analyze bloom filter columns

Set<String> bfColumns = null;

double bfFpp = 0;

try {

bfColumns = PropertyAnalyzer.analyzeBloomFilterColumns(properties, baseSchema,

table.getKeysType() == KeysType.PRIMARY_KEYS);

if (bfColumns != null && bfColumns.isEmpty()) {

bfColumns = null;

}

bfFpp = PropertyAnalyzer.analyzeBloomFilterFpp(properties);

if (bfColumns != null && bfFpp == 0) {

bfFpp = FeConstants.DEFAULT_BLOOM_FILTER_FPP;

} else if (bfColumns == null) {

bfFpp = 0;

}

table.setBloomFilterInfo(bfColumns, bfFpp);

BloomFilterIndexUtil.analyseBfWithNgramBf(new HashSet<>(stmt.getIndexes()), bfColumns);

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

// analyze replication_num

short replicationNum = RunMode.defaultReplicationNum();

String logReplicationNum = "";

try {

boolean isReplicationNumSet =

properties != null && properties.containsKey(PropertyAnalyzer.PROPERTIES_REPLICATION_NUM);

if (properties != null) {

logReplicationNum = properties.get(PropertyAnalyzer.PROPERTIES_REPLICATION_NUM);

}

replicationNum = PropertyAnalyzer.analyzeReplicationNum(properties, replicationNum);

if (isReplicationNumSet) {

table.setReplicationNum(replicationNum);

}

} catch (AnalysisException ex) {

throw new DdlException(String.format("%s table=%s, properties.replication_num=%s",

ex.getMessage(), table.getName(), logReplicationNum));

}

// analyze location property

analyzeLocationOnCreateTable(table, properties);

// set in memory

boolean isInMemory =

PropertyAnalyzer.analyzeBooleanProp(properties, PropertyAnalyzer.PROPERTIES_INMEMORY, false);

table.setIsInMemory(isInMemory);

Pair<Boolean, Boolean> analyzeRet = PropertyAnalyzer.analyzeEnablePersistentIndex(properties,

table.getKeysType() == KeysType.PRIMARY_KEYS);

boolean enablePersistentIndex = analyzeRet.first;

boolean enablePersistentIndexByUser = analyzeRet.second;

if (enablePersistentIndex && table.isCloudNativeTable()) {

TPersistentIndexType persistentIndexType;

try {

persistentIndexType = PropertyAnalyzer.analyzePersistentIndexType(properties);

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

// Judge there are whether compute nodes without storagePath or not.

// Cannot create cloud native table with persistent_index = true when ComputeNode without storagePath

Set<Long> cnUnSetStoragePath =

GlobalStateMgr.getCurrentState().getNodeMgr().getClusterInfo().getAvailableComputeNodeIds().

stream()

.filter(id -> !GlobalStateMgr.getCurrentState().getNodeMgr().getClusterInfo().getComputeNode(id).

isSetStoragePath()).collect(Collectors.toSet());

if (cnUnSetStoragePath.size() != 0 && persistentIndexType == TPersistentIndexType.LOCAL) {

// Check CN storage path when using local persistent index

if (enablePersistentIndexByUser) {

throw new DdlException("Cannot create cloud native table with local persistent index" +

"when ComputeNode without storage_path, nodeId:" + cnUnSetStoragePath);

} else {

// if user has not requested persistent index, switch it to false

enablePersistentIndex = false;

}

}

if (enablePersistentIndex) {

table.setPersistentIndexType(persistentIndexType);

}

}

table.setEnablePersistentIndex(enablePersistentIndex);

try {

table.setPrimaryIndexCacheExpireSec(PropertyAnalyzer.analyzePrimaryIndexCacheExpireSecProp(properties,

PropertyAnalyzer.PROPERTIES_PRIMARY_INDEX_CACHE_EXPIRE_SEC, 0));

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

// binlog config: only built when at least one binlog property is present

if (properties != null && (properties.containsKey(PropertyAnalyzer.PROPERTIES_BINLOG_ENABLE) ||

properties.containsKey(PropertyAnalyzer.PROPERTIES_BINLOG_MAX_SIZE) ||

properties.containsKey(PropertyAnalyzer.PROPERTIES_BINLOG_TTL))) {

try {

boolean enableBinlog = PropertyAnalyzer.analyzeBooleanProp(properties,

PropertyAnalyzer.PROPERTIES_BINLOG_ENABLE, false);

long binlogTtl = PropertyAnalyzer.analyzeLongProp(properties,

PropertyAnalyzer.PROPERTIES_BINLOG_TTL, Config.binlog_ttl_second);

long binlogMaxSize = PropertyAnalyzer.analyzeLongProp(properties,

PropertyAnalyzer.PROPERTIES_BINLOG_MAX_SIZE, Config.binlog_max_size);

BinlogConfig binlogConfig = new BinlogConfig(0, enableBinlog,

binlogTtl, binlogMaxSize);

table.setCurBinlogConfig(binlogConfig);

LOG.info("create table {} set binlog config, enable_binlog = {}, binlogTtl = {}, binlog_max_size = {}",

tableName, enableBinlog, binlogTtl, binlogMaxSize);

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

}

// automatic bucket size: negative values are rejected

try {

long bucketSize = PropertyAnalyzer.analyzeLongProp(properties,

PropertyAnalyzer.PROPERTIES_BUCKET_SIZE, Config.default_automatic_bucket_size);

if (bucketSize >= 0) {

table.setAutomaticBucketSize(bucketSize);

} else {

throw new DdlException("Illegal bucket size: " + bucketSize);

}

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

// write quorum

try {

table.setWriteQuorum(PropertyAnalyzer.analyzeWriteQuorum(properties));

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

// replicated storage

table.setEnableReplicatedStorage(

PropertyAnalyzer.analyzeBooleanProp(

properties, PropertyAnalyzer.PROPERTIES_REPLICATED_STORAGE,

Config.enable_replicated_storage_as_default_engine));

if (table.enableReplicatedStorage().equals(false)) {

for (Column col : baseSchema) {

if (col.isAutoIncrement()) {

throw new DdlException("Table with AUTO_INCREMENT column must use Replicated Storage");

}

}

}

boolean hasGin = table.getIndexes().stream().anyMatch(index -> index.getIndexType() == IndexType.GIN);

if (hasGin && table.enableReplicatedStorage()) {

throw new SemanticException("GIN does not support replicated mode");

}

// tablet type, defaults to TABLET_TYPE_DISK

TTabletType tabletType = TTabletType.TABLET_TYPE_DISK;

try {

tabletType = PropertyAnalyzer.analyzeTabletType(properties);

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

if (table.isCloudNativeTable()) {

if (properties != null) {

try {

PeriodDuration duration = PropertyAnalyzer.analyzeDataCachePartitionDuration(properties);

if (duration != null) {

table.setDataCachePartitionDuration(duration);

}

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

}

}

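// storage cooldown is rejected for primary key tables, list partitions,

// multi-column range partitions, and non-date partition columns

if (properties != null) {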

if (properties.containsKey(PropertyAnalyzer.PROPERTIES_STORAGE_COOLDOWN_TTL) ||

properties.containsKey(PropertyAnalyzer.PROPERTIES_STORAGE_COOLDOWN_TIME)) {

if (table.getKeysType() == KeysType.PRIMARY_KEYS) {

throw new DdlException("Primary key table does not support storage medium cool down currently.");

}

if (partitionInfo instanceof ListPartitionInfo) {

throw new DdlException("List partition table does not support storage medium cool down currently.");

}

if (partitionInfo instanceof RangePartitionInfo) {

RangePartitionInfo rangePartitionInfo = (RangePartitionInfo) partitionInfo;

List<Column> partitionColumns = rangePartitionInfo.getPartitionColumns();

if (partitionColumns.size() > 1) {

throw new DdlException("Multi-column range partition table " +

"does not support storage medium cool down currently.");

}

Column column = partitionColumns.get(0);

if (!column.getType().getPrimitiveType().isDateType()) {

throw new DdlException("Only support partition is date type for" +

" storage medium cool down currently.");

}

}

}

}

if (properties != null) {

try {

PeriodDuration duration = PropertyAnalyzer.analyzeStorageCoolDownTTL(properties, false);

if (duration != null) {

table.setStorageCoolDownTTL(duration);

}

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

}

if (partitionInfo.getType() == PartitionType.UNPARTITIONED) {

// if this is an unpartitioned table, we should analyze data property and replication num here.

// if this is a partitioned table, these properties are already analyzed in RangePartitionDesc analyze phase.

// use table name as this single partition name

long partitionId = partitionNameToId.get(tableName);

DataProperty dataProperty = null;

try {

boolean hasMedium = false;

if (properties != null) {

hasMedium = properties.containsKey(PropertyAnalyzer.PROPERTIES_STORAGE_MEDIUM);

}

dataProperty = PropertyAnalyzer.analyzeDataProperty(properties,

DataProperty.getInferredDefaultDataProperty(), false);

if (hasMedium) {

table.setStorageMedium(dataProperty.getStorageMedium());

}

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

Preconditions.checkNotNull(dataProperty);

partitionInfo.setDataProperty(partitionId, dataProperty);

partitionInfo.setReplicationNum(partitionId, replicationNum);

partitionInfo.setIsInMemory(partitionId, isInMemory);

partitionInfo.setTabletType(partitionId, tabletType);

StorageInfo storageInfo = table.getTableProperty().getStorageInfo();

DataCacheInfo dataCacheInfo = storageInfo == null ? null : storageInfo.getDataCacheInfo();

partitionInfo.setDataCacheInfo(partitionId, dataCacheInfo);

}

// check colocation properties

String colocateGroup = PropertyAnalyzer.analyzeColocate(properties);

if (StringUtils.isNotEmpty(colocateGroup)) {

if (!distributionInfo.supportColocate()) {

throw new DdlException("random distribution does not support 'colocate_with'");

}

colocateTableIndex.addTableToGroup(db, table, colocateGroup, false /* expectLakeTable */);

}

// get base index storage type. default is COLUMN

TStorageType baseIndexStorageType;

try {

baseIndexStorageType = PropertyAnalyzer.analyzeStorageType(properties, table);

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

Preconditions.checkNotNull(baseIndexStorageType);

// set base index meta

int schemaVersion = 0;

try {

schemaVersion = PropertyAnalyzer.analyzeSchemaVersion(properties);

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

int schemaHash = Util.schemaHash(schemaVersion, baseSchema, bfColumns, bfFpp);

if (stmt.getSortKeys() != null) {

table.setIndexMeta(baseIndexId, tableName, baseSchema, schemaVersion, schemaHash,

shortKeyColumnCount, baseIndexStorageType, keysType, null, sortKeyIdxes,

sortKeyUniqueIds);

} else {

table.setIndexMeta(baseIndexId, tableName, baseSchema, schemaVersion, schemaHash,

shortKeyColumnCount, baseIndexStorageType, keysType, null);

}

for (AlterClause alterClause : stmt.getRollupAlterClauseList()) {

AddRollupClause addRollupClause = (AddRollupClause) alterClause;

Long baseRollupIndex = table.getIndexIdByName(tableName);

// get storage type for rollup index

TStorageType rollupIndexStorageType = null;

try {

rollupIndexStorageType = PropertyAnalyzer.analyzeStorageType(addRollupClause.getProperties(), table);

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

Preconditions.checkNotNull(rollupIndexStorageType);

// set rollup index meta to olap table

List<Column> rollupColumns = stateMgr.getRollupHandler().checkAndPrepareMaterializedView(addRollupClause,

table, baseRollupIndex);

short rollupShortKeyColumnCount =

GlobalStateMgr.calcShortKeyColumnCount(rollupColumns, alterClause.getProperties());

int rollupSchemaHash = Util.schemaHash(schemaVersion, rollupColumns, bfColumns, bfFpp);

long rollupIndexId = metastore.getNextId();

table.setIndexMeta(rollupIndexId, addRollupClause.getRollupName(), rollupColumns, schemaVersion,

rollupSchemaHash, rollupShortKeyColumnCount, rollupIndexStorageType, keysType);

}

// analyze version info

Long version = null;

try {

version = PropertyAnalyzer.analyzeVersionInfo(properties);

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

Preconditions.checkNotNull(version);

// storage_format is not necessary, remove storage_format if exists.

if (properties != null) {

properties.remove("storage_format");

}

// storage type

table.setStorageType(baseIndexStorageType.name());

// get compression type

TCompressionType compressionType = TCompressionType.LZ4_FRAME;

try {

compressionType = PropertyAnalyzer.analyzeCompressionType(properties);

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

table.setCompressionType(compressionType);

// partition live number

int partitionLiveNumber;

if (properties != null && properties.containsKey(PropertyAnalyzer.PROPERTIES_PARTITION_LIVE_NUMBER)) {

try {

partitionLiveNumber = PropertyAnalyzer.analyzePartitionLiveNumber(properties, true);

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

table.setPartitionLiveNumber(partitionLiveNumber);

}

try {

processConstraint(db, table, properties);

} catch (AnalysisException e) {

throw new DdlException(

String.format("processing constraint failed when creating table:%s. exception msg:%s",

table.getName(), e.getMessage()), e);

}

// a set to record every new tablet created when create table

// if any step fails, use this set to clean up the tablets created so far

Set<Long> tabletIdSet = new HashSet<Long>();

// do not create partition for external table

if (table.isOlapOrCloudNativeTable()) {

if (partitionInfo.getType() == PartitionType.UNPARTITIONED) {

if (properties != null && !properties.isEmpty()) {

// here, all properties should be checked

throw new DdlException("Unknown properties: " + properties);

}

// this is a 1-level partitioned table, use table name as partition name

long partitionId = partitionNameToId.get(tableName);

Partition partition = metastore.createPartition(db, table, partitionId, tableName, version, tabletIdSet);

metastore.buildPartitions(db, table, partition.getSubPartitions().stream().collect(Collectors.toList()));

table.addPartition(partition);

} else if (partitionInfo.isRangePartition() || partitionInfo.getType() == PartitionType.LIST) {

try {

// only used to remove the consumed entries from stmt.getProperties(),

// so that any remaining unknown properties can be detected afterwards

boolean hasMedium = false;

if (properties != null) {

hasMedium = properties.containsKey(PropertyAnalyzer.PROPERTIES_STORAGE_MEDIUM);

}

DataProperty dataProperty = PropertyAnalyzer.analyzeDataProperty(properties,

DataProperty.getInferredDefaultDataProperty(), false);

DynamicPartitionUtil.checkAndSetDynamicPartitionProperty(table, properties);

if (table.dynamicPartitionExists() && table.getColocateGroup() != null) {

HashDistributionInfo info = (HashDistributionInfo) distributionInfo;

if (info.getBucketNum() !=

table.getTableProperty().getDynamicPartitionProperty().getBuckets()) {

throw new DdlException("dynamic_partition.buckets should equal the distribution buckets"

+ " if creating a colocate table");

}

}

if (hasMedium) {

table.setStorageMedium(dataProperty.getStorageMedium());

}

if (properties != null && !properties.isEmpty()) {

// here, all properties should be checked

throw new DdlException("Unknown properties: " + properties);

}

} catch (AnalysisException e) {

throw new DdlException(e.getMessage());

}

// this is a 2-level partitioned table

List<Partition> partitions = new ArrayList<>(partitionNameToId.size());

for (Map.Entry<String, Long> entry : partitionNameToId.entrySet()) {

Partition partition = metastore.createPartition(db, table, entry.getValue(), entry.getKey(), version,

tabletIdSet);

partitions.add(partition);

}

// It's ok if partitions is empty.

metastore.buildPartitions(db, table, partitions.stream().map(Partition::getSubPartitions)

.flatMap(p -> p.stream()).collect(Collectors.toList()));

for (Partition partition : partitions) {

table.addPartition(partition);

}

} else {

throw new DdlException("Unsupported partition method: " + partitionInfo.getType().name());

}

// if binlog_enable is true when creating table,

// then set binlogAvailableVersion without statistics through reportHandler

if (table.isBinlogEnabled()) {

Map<String, String> binlogAvailableVersion = table.buildBinlogAvailableVersion();

table.setBinlogAvailableVersion(binlogAvailableVersion);

LOG.info("set binlog available version when create table, tableName : {}, partitions : {}",

tableName, binlogAvailableVersion.toString());

}

}

// process lake table colocation properties, after partition and tablet creation

colocateTableIndex.addTableToGroup(db, table, colocateGroup, true /* expectLakeTable */);

} catch (DdlException e) {

GlobalStateMgr.getCurrentState().getStorageVolumeMgr().unbindTableToStorageVolume(tableId);

throw e;

}

return table;

}
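
The listing above repeats one pattern many times: each `PropertyAnalyzer.analyzeXxx(properties, ...)` call reads its key from the `properties` map, and once every known key has been handled, whatever is left in the map is reported as `Unknown properties`. The following is a minimal, self-contained sketch of that "consume, then check the leftovers" idea. It is not StarRocks code: the class `PropertyConsumeSketch`, the helpers `takeBoolean`, `takeLong` and `checkNoUnknown`, and the exception `SketchDdlException` are all hypothetical names made up for illustration, the property keys are illustrative strings, and the assumption that each analyzer removes the key it reads is inferred from the comments in the listing.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of the "consume, then check leftovers" pattern.
// None of these names are StarRocks APIs.
final class PropertyConsumeSketch {

    // Stand-in for DdlException; unchecked here only to keep the sketch short.
    static class SketchDdlException extends RuntimeException {
        SketchDdlException(String message) {
            super(message);
        }
    }

    // Removes `key` from the map if present and parses it as a boolean;
    // returns `defaultValue` when the map is null or the key is absent.
    static boolean takeBoolean(Map<String, String> props, String key, boolean defaultValue) {
        if (props == null || !props.containsKey(key)) {
            return defaultValue;
        }
        String raw = props.remove(key);
        if ("true".equalsIgnoreCase(raw)) {
            return true;
        }
        if ("false".equalsIgnoreCase(raw)) {
            return false;
        }
        throw new SketchDdlException("Invalid boolean for " + key + ": " + raw);
    }

    // Removes `key` from the map if present and parses it as a long.
    static long takeLong(Map<String, String> props, String key, long defaultValue) {
        if (props == null || !props.containsKey(key)) {
            return defaultValue;
        }
        String raw = props.remove(key);
        try {
            return Long.parseLong(raw.trim());
        } catch (NumberFormatException | NullPointerException e) {
            throw new SketchDdlException("Invalid number for " + key + ": " + raw);
        }
    }

    // After all known keys have been consumed, anything left is unknown.
    static void checkNoUnknown(Map<String, String> props) {
        if (props != null && !props.isEmpty()) {
            throw new SketchDdlException("Unknown properties: " + props);
        }
    }

    public static void main(String[] args) {
        Map<String, String> props = new HashMap<>();
        props.put("in_memory", "true");
        props.put("bucket_size", "1024");
        props.put("not_a_real_property", "x");
        try {
            boolean inMemory = takeBoolean(props, "in_memory", false);
            long bucketSize = takeLong(props, "bucket_size", 0L);
            System.out.println("inMemory=" + inMemory + ", bucketSize=" + bucketSize);
            checkNoUnknown(props);
        } catch (SketchDdlException e) {
            System.out.println("DDL failed: " + e.getMessage());
        }
    }
}
```

In this sketch the two known keys are consumed first, so the final check fails only because of the key that no helper recognised. This also suggests why the real method runs the `Unknown properties` check last, after the data property and dynamic partition properties have been analyzed.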