E-commerce Data Warehouse Series -- 3.4 E-commerce Data Warehouse System (ADS Layer)

目录

- 九、数仓搭建-ADS层

-

- 9.1 建表说明

- 9.2 访客主题

-

- 9.2.1 访客统计

- 9.2.2 路径分析

- 9.3 用户主题

-

- 9.3.1 用户统计

- 9.3.2 用户变动统计

- 9.3.3 用户行为漏斗分析

- 9.3.4 用户留存率

- 9.4 商品主题

-

- 9.4.1 商品统计

- 9.4.2 品牌复购率

- 9.5 订单主题

-

- 9.5.1 订单统计

- 9.5.2 各地区订单统计

- 9.6 优惠券主题

-

- 9.6.1 优惠券统计

- 9.7 活动主题

-

- 9.7.1 活动统计

- 9.8 ADS层业务数据导入脚本

- 第10章 全流程调度

-

- 10.1 Azkaban部署

- 10.2 创建MySQL数据库和表

- 10.3 Sqoop导出脚本

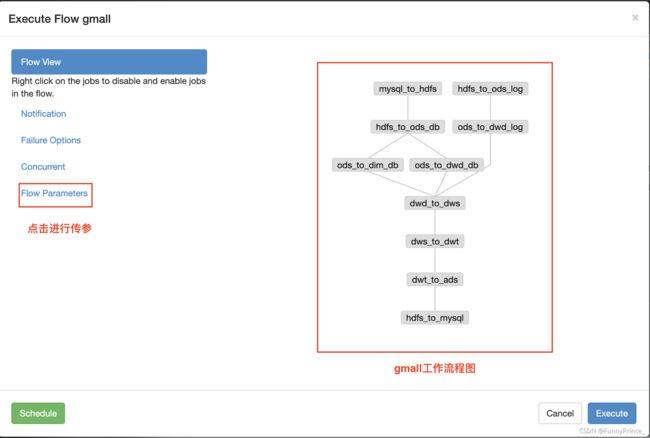

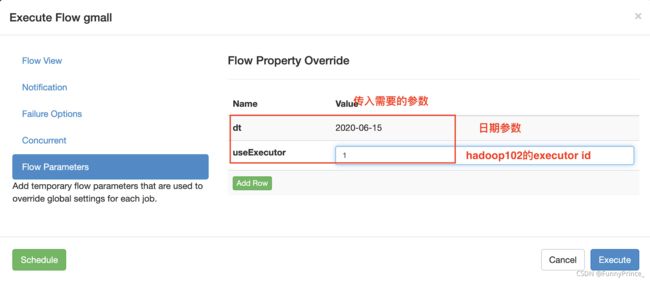

- 10.4 全调度流程

-

- 10.4.1 数据准备

- 10.4.2 编写Azkaban工作流程配置文件


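Chapter 9: Data Warehouse Construction - ADS Layer

9.1 Table Creation Notes

The ADS layer involves no dimensional modeling; each table is created to fit a specific reporting requirement.

9.2 Visitor Topic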

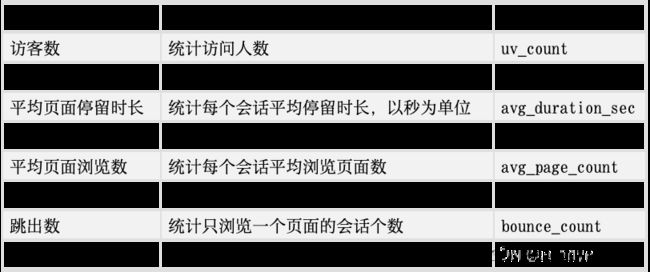

9.2.1 Visitor Statistics

- Table creation statement

DROP TABLE IF EXISTS ads_visit_stats;

CREATE EXTERNAL TABLE ads_visit_stats (

`dt` STRING COMMENT 'Statistics date',

`is_new` STRING COMMENT 'New/returning flag, 1: new, 0: returning',

`recent_days` BIGINT COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',

`channel` STRING COMMENT 'Channel',

`uv_count` BIGINT COMMENT 'Active visitors (number of visitors)',

`duration_sec` BIGINT COMMENT 'Total page dwell time, in seconds',

`avg_duration_sec` BIGINT COMMENT 'Average page dwell time per session, in seconds',

`page_count` BIGINT COMMENT 'Total page views',

`avg_page_count` BIGINT COMMENT 'Average page views per session',

`sv_count` BIGINT COMMENT 'Number of sessions',

`bounce_count` BIGINT COMMENT 'Number of bounces',

`bounce_rate` DECIMAL(16,2) COMMENT 'Bounce rate'

) COMMENT 'Visitor statistics'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/ads/ads_visit_stats/';

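- Data loading

Approach: the key to this requirement is splitting page views into sessions. The implementation can be broken into the following steps:

Step 1: Assign every page-view record to a session. A record whose last_page_id is null marks the start of a new session.

Step 2: Compute the browsing duration and the number of pages viewed for each session.

Step 3: Aggregate the metrics above.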

insert overwrite table ads_visit_stats

select * from ads_visit_stats

union

select

'2020-06-14' dt,

is_new,

recent_days,

channel,

count(distinct(mid_id)) uv_count,

cast(sum(duration)/1000 as bigint) duration_sec,

cast(avg(duration)/1000 as bigint) avg_duration_sec,

sum(page_count) page_count,

cast(avg(page_count) as bigint) avg_page_count,

count(*) sv_count,

sum(if(page_count=1,1,0)) bounce_count,

cast(sum(if(page_count=1,1,0))/count(*)*100 as decimal(16,2)) bounce_rate

from

(

select

session_id,

mid_id,

is_new,

recent_days,

channel,

count(*) page_count,

sum(during_time) duration

from

(

select

mid_id,

channel,

recent_days,

is_new,

last_page_id,

page_id,

during_time,

concat(mid_id,'-',last_value(if(last_page_id is null,ts,null),true) over (partition by recent_days,mid_id order by ts)) session_id

from

(

select

mid_id,

channel,

last_page_id,

page_id,

during_time,

ts,

recent_days,

if(visit_date_first>=date_add('2020-06-14',-recent_days+1),'1','0') is_new

from

(

select

t1.mid_id,

t1.channel,

t1.last_page_id,

t1.page_id,

t1.during_time,

t1.dt,

t1.ts,

t2.visit_date_first

from

(

select

mid_id,

channel,

last_page_id,

page_id,

during_time,

dt,

ts

from dwd_page_log

where dt>=date_add('2020-06-14',-29)

)t1

left join

(

select

mid_id,

visit_date_first

from dwt_visitor_topic

where dt='2020-06-14'

)t2

on t1.mid_id=t2.mid_id

)t3 lateral view explode(Array(1,7,30)) tmp as recent_days

where dt>=date_add('2020-06-14',-recent_days+1)

)t4

)t5

group by session_id,mid_id,is_new,recent_days,channel

)t6

group by is_new,recent_days,channel;

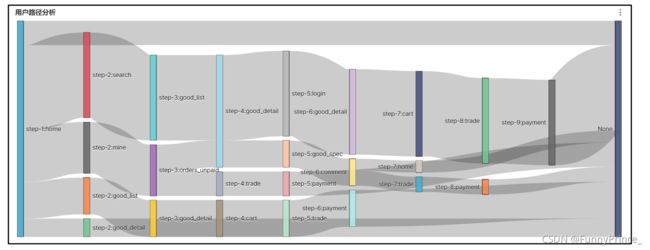
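The session-splitting step of the query above can be sketched outside Hive. The following Python is a minimal illustration, not the author's implementation: it assumes page-view records arrive as dictionaries with the same field names as dwd_page_log (mid_id, last_page_id, ts, during_time), and the function names assign_sessions and session_stats are hypothetical. It mirrors the last_value(..., true) window: each record inherits the timestamp of the most recent record whose last_page_id is null.

```python
from collections import defaultdict


def assign_sessions(page_logs):
    """Tag each record with a session_id of the form '<mid_id>-<session start ts>'."""
    by_mid = defaultdict(list)
    for row in page_logs:
        by_mid[row["mid_id"]].append(row)

    tagged = []
    for mid_id, rows in by_mid.items():
        session_start = None  # ts of the latest record that has no last_page_id
        for row in sorted(rows, key=lambda r: r["ts"]):
            if row["last_page_id"] is None:
                session_start = row["ts"]
            # Like concat() in Hive, the id stays null if no session start was seen.
            session_id = None if session_start is None else f"{mid_id}-{session_start}"
            tagged.append({**row, "session_id": session_id})
    return tagged


def session_stats(tagged):
    """Return {session_id: (page_count, duration_ms)}."""
    stats = {}
    for row in tagged:
        pages, duration = stats.get(row["session_id"], (0, 0))
        stats[row["session_id"]] = (pages + 1, duration + row["during_time"])
    return stats


def bounce_rate(stats):
    """Percentage of sessions that viewed exactly one page."""
    bounces = sum(1 for pages, _ in stats.values() if pages == 1)
    return bounces * 100 / len(stats)
```

A session with a single page view counts as a bounce, which is what sum(if(page_count=1,1,0)) computes in the query.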

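9.2.2 Path Analysis

User path analysis, as the name suggests, examines the paths users take through an app or website. It is often performed to measure the effect of site optimization or marketing campaigns and to understand users' behavioral preferences.

User access paths are usually visualized with a Sankey diagram. As shown in the figure below, the diagram faithfully reproduces users' access paths, including page jumps and the order in which pages are visited.

A Sankey diagram needs the count of each kind of page jump. Each jump is expressed as a source/target pair, where source is the page the jump starts from and target is the page it lands on.

- Table creation statement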

DROP TABLE IF EXISTS ads_page_path;

CREATE EXTERNAL TABLE ads_page_path

(

`dt` STRING COMMENT 'Statistics date',

`recent_days` BIGINT COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',

`source` STRING COMMENT 'Source page ID of the jump',

`target` STRING COMMENT 'Target page ID of the jump',

`path_count` BIGINT COMMENT 'Number of jumps'

) COMMENT 'Page browsing paths'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/ads/ads_page_path/';

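- Data loading

Approach: this requirement counts how many times each kind of jump occurs, so in principle grouping by source/target and applying count() is enough. Two points need attention:

1). In a Sankey diagram, source must not be null, but target may be null.

2). The flow shown in a Sankey diagram must not contain cycles. The query therefore prefixes every page with its step number within the session, so the same page visited at different steps becomes a distinct node.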

insert overwrite table ads_page_path

select * from ads_page_path

union

select

'2020-06-14',

recent_days,

source,

target,

count(*)

from

(

select

recent_days,

concat('step-',step,':',source) source,

concat('step-',step+1,':',target) target

from

(

select

recent_days,

page_id source,

lead(page_id,1,null) over (partition by recent_days,session_id order by ts) target,

row_number() over (partition by recent_days,session_id order by ts) step

from

(

select

recent_days,

last_page_id,

page_id,

ts,

concat(mid_id,'-',last_value(if(last_page_id is null,ts,null),true) over (partition by mid_id,recent_days order by ts)) session_id

from dwd_page_log lateral view explode(Array(1,7,30)) tmp as recent_days

where dt>=date_add('2020-06-14',-29)

and dt>=date_add('2020-06-14',-recent_days+1)

)t2

)t3

)t4

group by recent_days,source,target;
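How the step prefix removes cycles can be seen in a small sketch. The Python below is illustrative only and assumes the sessions have already been split and ordered by ts; the function name page_path_counts is hypothetical. It reproduces the lead() and row_number() logic of the query: the last page of a session gets a null target, and every node is labelled step-N:page.

```python
from collections import Counter


def page_path_counts(sessions):
    """Count (source, target) jumps; sessions is a list of page_id lists ordered by ts."""
    counts = Counter()
    for pages in sessions:
        for step, source in enumerate(pages, start=1):
            # pages[step] is the page that follows position `step` (1-based).
            target = pages[step] if step < len(pages) else None
            src = f"step-{step}:{source}"
            tgt = None if target is None else f"step-{step + 1}:{target}"
            counts[(src, tgt)] += 1
    return counts
```

A session that goes home, good_detail, home would form a cycle on raw page IDs, but with the prefix it becomes step-1:home, step-2:good_detail, step-3:home, which is a straight line.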

9.3 User Topic

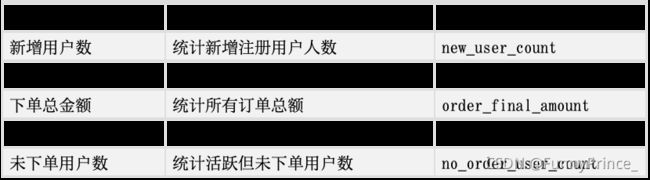

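9.3.1 User Statistics

This requirement is a comprehensive user summary containing several metrics; each metric is explained below.

- Table creation statement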

DROP TABLE IF EXISTS ads_user_total;

CREATE EXTERNAL TABLE `ads_user_total` (

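`dt` STRING COMMENT 'Statistics date',

`recent_days` BIGINT COMMENT 'Recent days, 0: cumulative, 1: last 1 day, 7: last 7 days, 30: last 30 days',

`new_user_count` BIGINT COMMENT 'Number of newly registered users',

`new_order_user_count` BIGINT COMMENT 'Number of first-time ordering users',

`order_final_amount` DECIMAL(16,2) COMMENT 'Total order amount',

`order_user_count` BIGINT COMMENT 'Number of ordering users',

`no_order_user_count` BIGINT COMMENT 'Number of non-ordering users (active users who placed no order)'

) COMMENT 'User statistics'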

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/ads/ads_user_total/';

- Data loading

insert overwrite table ads_user_total

select * from ads_user_total

union

select

'2020-06-14',

recent_days,

sum(if(login_date_first>=recent_days_ago,1,0)) new_user_count,

sum(if(order_date_first>=recent_days_ago,1,0)) new_order_user_count,

sum(order_final_amount) order_final_amount,

sum(if(order_final_amount>0,1,0)) order_user_count,

sum(if(login_date_last>=recent_days_ago and order_final_amount=0,1,0)) no_order_user_count

from

(

select

recent_days,

user_id,

login_date_first,

login_date_last,

order_date_first,

case when recent_days=0 then order_final_amount

when recent_days=1 then order_last_1d_final_amount

when recent_days=7 then order_last_7d_final_amount

when recent_days=30 then order_last_30d_final_amount

end order_final_amount,

if(recent_days=0,'1970-01-01',date_add('2020-06-14',-recent_days+1)) recent_days_ago

from dwt_user_topic lateral view explode(Array(0,1,7,30)) tmp as recent_days

where dt='2020-06-14'

)t1

group by recent_days;

9.3.2 User Change Statistics

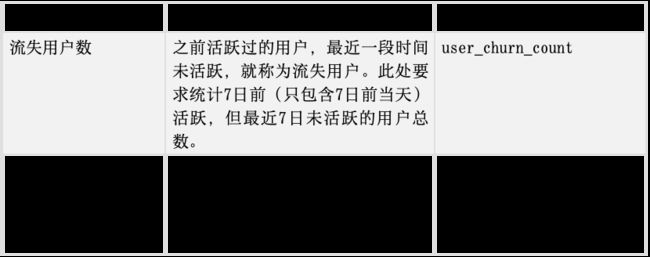

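This requirement covers two metrics, the number of churned users and the number of returning users; both are explained below.

- Table creation statement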

DROP TABLE IF EXISTS ads_user_change;

CREATE EXTERNAL TABLE `ads_user_change` (

`dt` STRING COMMENT 'Statistics date',

`user_churn_count` BIGINT COMMENT 'Number of churned users',

`user_back_count` BIGINT COMMENT 'Number of returning users'

) COMMENT 'User change statistics'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/ads/ads_user_change/';

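- Data loading

Approach:

Churned users: users whose last active date is exactly 7 days before the statistics date.

Returning users: users whose last active date is the statistics date and whose previous active date was at least 8 days earlier.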

insert overwrite table ads_user_change

select * from ads_user_change

union

select

churn.dt,

user_churn_count,

user_back_count

from

(

select

'2020-06-14' dt,

count(*) user_churn_count

from dwt_user_topic

where dt='2020-06-14'

and login_date_last=date_add('2020-06-14',-7)

)churn

join

(

select

'2020-06-14' dt,

count(*) user_back_count

from

(

select

user_id,

login_date_last

from dwt_user_topic

where dt='2020-06-14'

and login_date_last='2020-06-14'

)t1

join

(

select

user_id,

login_date_last login_date_previous

from dwt_user_topic

where dt=date_add('2020-06-14',-1)

)t2

on t1.user_id=t2.user_id

where datediff(login_date_last,login_date_previous)>=8

)back

on churn.dt=back.dt;
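The two definitions can be checked on a few sample users. The sketch below is a simplified Python illustration under stated assumptions: the two dictionaries stand in for the dwt_user_topic snapshots of the statistics date and of the day before, keyed by user_id with login_date_last as the value, and the function name user_change is hypothetical.

```python
from datetime import date, timedelta


def user_change(stat_date, last_login_today, last_login_yesterday):
    """Return (churn_count, back_count) for stat_date.

    last_login_today / last_login_yesterday map user_id -> last login date
    as recorded in the snapshot of stat_date and of the previous day.
    """
    churn_day = stat_date - timedelta(days=7)
    churn = sum(1 for d in last_login_today.values() if d == churn_day)

    back = 0
    for user_id, last in last_login_today.items():
        previous = last_login_yesterday.get(user_id)
        # Users absent from yesterday's snapshot are new, not returning
        # (the inner join in the query drops them as well).
        if last == stat_date and previous is not None and (last - previous).days >= 8:
            back += 1
    return churn, back
```

Reading the previous login date from yesterday's snapshot is necessary because today's snapshot has already overwritten login_date_last for anyone who was active today.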

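9.3.3 User Behavior Funnel Analysis

Funnel analysis is a data analysis model that shows how users convert at each stage of a business process, from its start to its end. Because every stage is displayed, it is easy to see at a glance which stage has a problem.

This requirement counts the number of people at each stage of a complete shopping flow.

- Table creation statement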

DROP TABLE IF EXISTS ads_user_action;

CREATE EXTERNAL TABLE `ads_user_action` (

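`dt` STRING COMMENT 'Statistics date',

`recent_days` BIGINT COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',

`home_count` BIGINT COMMENT 'Number of people who viewed the home page',

`good_detail_count` BIGINT COMMENT 'Number of people who viewed a product detail page',

`cart_count` BIGINT COMMENT 'Number of people who added to cart',

`order_count` BIGINT COMMENT 'Number of people who placed an order',

`payment_count` BIGINT COMMENT 'Number of people who paid'

) COMMENT 'Funnel analysis'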

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/ads/ads_user_action/';

- Data loading

insert overwrite table ads_user_action

select * from ads_user_action

union

select

'2020-06-14',

cop.recent_days,

home_count,

good_detail_count,

cart_count,

order_count,

payment_count

from

(

select

recent_days,

sum(if(array_contains(pages,'home'),1,0)) home_count,

sum(if(array_contains(pages,'good_detail'),1,0)) good_detail_count

from

(

select

recent_days,

mid_id,

collect_set(page_id) pages

from dwd_page_log lateral view explode(array(1,7,30)) tmp as recent_days

where dt>=date_add('2020-06-14',-recent_days+1)

and page_id in ('home','good_detail')

group by recent_days,mid_id

)t1

group by recent_days

)page

join

(

select

recent_days,

sum(if(cart_count>0,1,0)) cart_count,

sum(if(order_count>0,1,0)) order_count,

sum(if(payment_count>0,1,0)) payment_count

from

(

select

recent_days,

case

when recent_days=1 then cart_last_1d_count

when recent_days=7 then cart_last_7d_count

when recent_days=30 then cart_last_30d_count

end cart_count,

case

when recent_days=1 then order_last_1d_count

when recent_days=7 then order_last_7d_count

when recent_days=30 then order_last_30d_count

end order_count,

case

when recent_days=1 then payment_last_1d_count

when recent_days=7 then payment_last_7d_count

when recent_days=30 then payment_last_30d_count

end payment_count

from dwt_user_topic lateral view explode(array(1,7,30)) tmp as recent_days

where dt='2020-06-14'

)t1

group by recent_days

)cop

on page.recent_days=cop.recent_days;

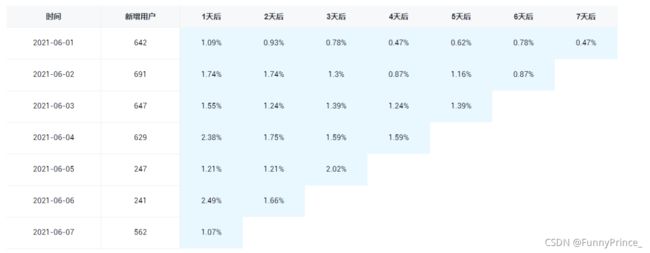
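The shape of the funnel computation can be sketched in a few lines. The Python below is an illustration under assumptions, not the query itself: visitor_pages stands in for the collect_set(page_id) result per mid_id, user_actions stands in for the per-user counts taken from dwt_user_topic for one value of recent_days, and the function name funnel_counts is hypothetical.

```python
def funnel_counts(visitor_pages, user_actions):
    """Count how many people reached each funnel stage.

    visitor_pages: dict mid_id -> set of page_ids visited in the period.
    user_actions:  dict user_id -> dict with cart_count, order_count, payment_count.
    """
    return {
        "home_count": sum("home" in pages for pages in visitor_pages.values()),
        "good_detail_count": sum("good_detail" in pages for pages in visitor_pages.values()),
        "cart_count": sum(a["cart_count"] > 0 for a in user_actions.values()),
        "order_count": sum(a["order_count"] > 0 for a in user_actions.values()),
        "payment_count": sum(a["payment_count"] > 0 for a in user_actions.values()),
    }
```

As in the query, the two page stages count devices (mid_id) while the cart, order, and payment stages count users (user_id), so the stages are not measured on exactly the same population.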

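9.3.4 User Retention Rate

Retention analysis generally covers new-user retention and active-user retention.

New-user retention analysis looks at how many of the users added on a given day are active later;

active-user retention analysis looks at how many of the users active on a given day are active later.

Retention is an important indicator of how valuable a product is to its users.

Here we compute the new-user retention rate, which is the ratio of retained users to new users. For example, if 100 users were added on 2020-06-14 and 80 of them are active one day later (2020-06-15), then the 1-day retention count for 2020-06-14 is 80 and the 1-day retention rate for 2020-06-14 is 80%.

- Table creation statement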

DROP TABLE IF EXISTS ads_user_retention;

CREATE EXTERNAL TABLE ads_user_retention (

`dt` STRING COMMENT 'Statistics date',

`create_date` STRING COMMENT 'Date the users were added',

`retention_day` BIGINT COMMENT 'Retention days as of the statistics date',

`retention_count` BIGINT COMMENT 'Number of retained users',

`new_user_count` BIGINT COMMENT 'Number of new users',

`retention_rate` DECIMAL(16,2) COMMENT 'Retention rate'

) COMMENT 'User retention rate'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/ads/ads_user_retention/';

- Data loading

insert overwrite table ads_user_retention

select * from ads_user_retention

union

select

'2020-06-14',

login_date_first create_date,

datediff('2020-06-14',login_date_first) retention_day,

sum(if(login_date_last='2020-06-14',1,0)) retention_count,

count(*) new_user_count,

cast(sum(if(login_date_last='2020-06-14',1,0))/count(*)*100 as decimal(16,2)) retention_rate

from dwt_user_topic

where dt='2020-06-14'

and login_date_first>=date_add('2020-06-14',-7)

and login_date_first<'2020-06-14'

group by login_date_first;
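The worked example from the start of this section (100 new users, 80 still active one day later) can be reproduced with a short sketch. The Python below is illustrative and rests on assumptions: each user is represented only by the pair (login_date_first, login_date_last), the function name retention_rows is hypothetical, and the 7-day lookback mirrors the filter in the query.

```python
from datetime import date


def retention_rows(stat_date, users, max_days=7):
    """Return (create_date, retention_day, retention_count, new_user_count, rate_pct) rows.

    users: iterable of (login_date_first, login_date_last) pairs.
    """
    groups = {}  # create_date -> [retained, new]
    for first, last in users:
        days = (stat_date - first).days
        if 1 <= days <= max_days:
            group = groups.setdefault(first, [0, 0])
            group[1] += 1
            if last == stat_date:
                group[0] += 1
    return [
        (first, (stat_date - first).days, kept, new, round(kept * 100 / new, 2))
        for first, (kept, new) in sorted(groups.items())
    ]
```

Each run for a statistics date yields one row per create_date in the lookback window, so a given cohort accumulates its 1-day to 7-day retention rows over seven consecutive runs.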

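9.4 Product Topic

9.4.1 Product Statistics

This metric is a comprehensive product summary, covering the total number of orders and the total order amount for each SPU.

- Table creation statement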

DROP TABLE IF EXISTS ads_order_spu_stats;

CREATE EXTERNAL TABLE `ads_order_spu_stats` (

`dt` STRING COMMENT 'Statistics date',

`recent_days` BIGINT COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',

`spu_id` STRING COMMENT 'Product (SPU) ID',

`spu_name` STRING COMMENT 'Product (SPU) name',

`tm_id` STRING COMMENT 'Brand ID',

`tm_name` STRING COMMENT 'Brand name',

`category3_id` STRING COMMENT 'Level-3 category ID',

`category3_name` STRING COMMENT 'Level-3 category name',

`category2_id` STRING COMMENT 'Level-2 category ID',

`category2_name` STRING COMMENT 'Level-2 category name',

`category1_id` STRING COMMENT 'Level-1 category ID',

`category1_name` STRING COMMENT 'Level-1 category name',

`order_count` BIGINT COMMENT 'Number of orders',

`order_amount` DECIMAL(16,2) COMMENT 'Order amount'

) COMMENT 'Product sales statistics'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/ads/ads_order_spu_stats/';

- Data loading

insert overwrite table ads_order_spu_stats

select * from ads_order_spu_stats

union

select

'2020-06-14' dt,

recent_days,

spu_id,

spu_name,

tm_id,

tm_name,

category3_id,

category3_name,

category2_id,

category2_name,

category1_id,

category1_name,

sum(order_count),

sum(order_amount)

from

(

select

recent_days,

sku_id,

case

when recent_days=1 then order_last_1d_count

when recent_days=7 then order_last_7d_count

when recent_days=30 then order_last_30d_count

end order_count,

case

when recent_days=1 then order_last_1d_final_amount

when recent_days=7 then order_last_7d_final_amount

when recent_days=30 then order_last_30d_final_amount

end order_amount

from dwt_sku_topic lateral view explode(Array(1,7,30)) tmp as recent_days

where dt='2020-06-14'

)t1

left join

(

select

id,

spu_id,

spu_name,

tm_id,

tm_name,

category3_id,

category3_name,

category2_id,

category2_name,

category1_id,

category1_name

from dim_sku_info

where dt='2020-06-14'

)t2

on t1.sku_id=t2.id

group by recent_days,spu_id,spu_name,tm_id,tm_name,category3_id,category3_name,category2_id,category2_name,category1_id,category1_name;

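9.4.2 Brand Repurchase Rate

The brand repurchase rate is the ratio of the number of people who bought a brand repeatedly within a period to the number of people who bought that brand at all. A repeat purchase means a purchase count of at least 2; having purchased means a purchase count of at least 1.

Here we compute the repurchase rate of each brand over the last 1, 7, and 30 days.

- Table creation statement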

DROP TABLE IF EXISTS ads_repeat_purchase;

CREATE EXTERNAL TABLE `ads_repeat_purchase` (

`dt` STRING COMMENT 'Statistics date',

`recent_days` BIGINT COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',

`tm_id` STRING COMMENT 'Brand ID',

`tm_name` STRING COMMENT 'Brand name',

`order_repeat_rate` DECIMAL(16,2) COMMENT 'Repurchase rate'

) COMMENT 'Brand repurchase rate'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/ads/ads_repeat_purchase/';

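- Data loading

**Approach:** this requirement can be implemented in two steps:

1). Count how many times each user bought each brand;

2). Count the number of people whose purchase count is at least 1 and the number whose purchase count is at least 2.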

insert overwrite table ads_repeat_purchase

select * from ads_repeat_purchase

union

select

'2020-06-14' dt,

recent_days,

tm_id,

tm_name,

cast(sum(if(order_count>=2,1,0))/sum(if(order_count>=1,1,0))*100 as decimal(16,2))

from

(

select

recent_days,

user_id,

tm_id,

tm_name,

sum(order_count) order_count

from

(

select

recent_days,

user_id,

sku_id,

count(*) order_count

from dwd_order_detail lateral view explode(Array(1,7,30)) tmp as recent_days

where dt>=date_add('2020-06-14',-29)

and dt>=date_add('2020-06-14',-recent_days+1)

group by recent_days, user_id,sku_id

)t1

left join

(

select

id,

tm_id,

tm_name

from dim_sku_info

where dt='2020-06-14'

)t2

on t1.sku_id=t2.id

group by recent_days,user_id,tm_id,tm_name

)t3

group by recent_days,tm_id,tm_name;
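The two steps above can be sketched as follows. This Python is a minimal illustration rather than the author's method: it assumes the order-detail rows have already been joined to their brand and reduced to (user_id, tm_id) pairs for a single value of recent_days, and the function name brand_repeat_rate is hypothetical.

```python
from collections import Counter


def brand_repeat_rate(order_details):
    """Return {tm_id: repurchase rate in percent}.

    order_details: iterable of (user_id, tm_id), one pair per order-detail row.
    """
    # Step 1: purchase count per (user, brand).
    per_user_brand = Counter(order_details)

    # Step 2: per brand, people with count >= 1 and people with count >= 2.
    buyers = Counter()
    repeat_buyers = Counter()
    for (_user_id, tm_id), purchases in per_user_brand.items():
        buyers[tm_id] += 1
        if purchases >= 2:
            repeat_buyers[tm_id] += 1

    return {tm_id: round(repeat_buyers[tm_id] * 100 / buyers[tm_id], 2) for tm_id in buyers}
```

Because the count is taken over order-detail rows, two different SKUs of the same brand in a single order count as two purchases here, just as count(*) over dwd_order_detail does in the query.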

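9.5 Order Topic

9.5.1 Order Statistics

This requirement covers the total number of orders, the total order amount, and the total number of ordering users.

- Table creation statement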

DROP TABLE IF EXISTS ads_order_total;

CREATE EXTERNAL TABLE `ads_order_total` (

`dt` STRING COMMENT 'Statistics date',

`recent_days` BIGINT COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',

`order_count` BIGINT COMMENT 'Number of orders',

`order_amount` DECIMAL(16,2) COMMENT 'Order amount',

`order_user_count` BIGINT COMMENT 'Number of ordering users'

) COMMENT 'Order statistics'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/ads/ads_order_total/';

- Data loading

insert overwrite table ads_order_total

select * from ads_order_total

union

select

'2020-06-14',

recent_days,

sum(order_count),

sum(order_final_amount) order_final_amount,

sum(if(order_final_amount>0,1,0)) order_user_count

from

(

select

recent_days,

user_id,

case when recent_days=0 then order_count

when recent_days=1 then order_last_1d_count

when recent_days=7 then order_last_7d_count

when recent_days=30 then order_last_30d_count

end order_count,

case when recent_days=0 then order_final_amount

when recent_days=1 then order_last_1d_final_amount

when recent_days=7 then order_last_7d_final_amount

when recent_days=30 then order_last_30d_final_amount

end order_final_amount

from dwt_user_topic lateral view explode(Array(1,7,30)) tmp as recent_days

where dt='2020-06-14'

)t1

group by recent_days;

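9.5.2 Order Statistics by Region

This requirement covers the total number of orders and the total order amount for each province.

- Table creation statement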

DROP TABLE IF EXISTS ads_order_by_province;

CREATE EXTERNAL TABLE `ads_order_by_province` (

`dt` STRING COMMENT 'Statistics date',

`recent_days` BIGINT COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',

`province_id` STRING COMMENT 'Province ID',

`province_name` STRING COMMENT 'Province name',

`area_code` STRING COMMENT 'Area code',

`iso_code` STRING COMMENT 'International standard region code',

`iso_code_3166_2` STRING COMMENT 'International standard region code (ISO 3166-2)',

`order_count` BIGINT COMMENT 'Number of orders',

`order_amount` DECIMAL(16,2) COMMENT 'Order amount'

) COMMENT 'Order statistics by region'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/ads/ads_order_by_province/';

- Data loading

insert overwrite table ads_order_by_province

select * from ads_order_by_province

union

select

dt,

recent_days,

province_id,

province_name,

area_code,

iso_code,

iso_3166_2,

order_count,

order_amount

from

(

select

'2020-06-14' dt,

recent_days,

province_id,

sum(order_count) order_count,

sum(order_amount) order_amount

from

(

select

recent_days,

province_id,

case

when recent_days=1 then order_last_1d_count

when recent_days=7 then order_last_7d_count

when recent_days=30 then order_last_30d_count

end order_count,

case

when recent_days=1 then order_last_1d_final_amount

when recent_days=7 then order_last_7d_final_amount

when recent_days=30 then order_last_30d_final_amount

end order_amount

from dwt_area_topic lateral view explode(Array(1,7,30)) tmp as recent_days

where dt='2020-06-14'

)t1

group by recent_days,province_id

)t2

join dim_base_province t3

on t2.province_id=t3.id;

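9.6 Coupon Topic

9.6.1 Coupon Statistics

This requirement reports the usage and the subsidy rate of all coupons issued in the last 30 days. The subsidy rate is the ratio of the discount amount to the original amount of the orders that used the coupon.

- Table creation statement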

DROP TABLE IF EXISTS ads_coupon_stats;

CREATE EXTERNAL TABLE ads_coupon_stats (

`dt` STRING COMMENT 'Statistics date',

`coupon_id` STRING COMMENT 'Coupon ID',

`coupon_name` STRING COMMENT 'Coupon name',

`start_date` STRING COMMENT 'Issue date',

`rule_name` STRING COMMENT 'Discount rule, e.g. 10 yuan off orders of 100 yuan or more',

`get_count` BIGINT COMMENT 'Number of times claimed',

`order_count` BIGINT COMMENT 'Number of times used (in orders)',

`expire_count` BIGINT COMMENT 'Number of times expired',

`order_original_amount` DECIMAL(16,2) COMMENT 'Original amount of orders using the coupon',

`order_final_amount` DECIMAL(16,2) COMMENT 'Final amount of orders using the coupon',

`reduce_amount` DECIMAL(16,2) COMMENT 'Discount amount',

`reduce_rate` DECIMAL(16,2) COMMENT 'Subsidy rate'

) COMMENT 'Coupon statistics'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/ads/ads_coupon_stats/';

- Data loading

insert overwrite table ads_coupon_stats

select * from ads_coupon_stats

union

select

'2020-06-14' dt,

t1.id,

coupon_name,

start_date,

rule_name,

get_count,

order_count,

expire_count,

order_original_amount,

order_final_amount,

reduce_amount,

reduce_rate

from

(

select

id,

coupon_name,

date_format(start_time,'yyyy-MM-dd') start_date,

case

when coupon_type='3201' then concat('满',condition_amount,'元减',benefit_amount,'元')

when coupon_type='3202' then concat('满',condition_num,'件打', (1-benefit_discount)*10,'折')

when coupon_type='3203' then concat('减',benefit_amount,'元')

end rule_name

from dim_coupon_info

where dt='2020-06-14'

and date_format(start_time,'yyyy-MM-dd')>=date_add('2020-06-14',-29)

)t1

left join

(

select

coupon_id,

get_count,

order_count,

expire_count,

order_original_amount,

order_final_amount,

order_reduce_amount reduce_amount,

cast(order_reduce_amount/order_original_amount as decimal(16,2)) reduce_rate

from dwt_coupon_topic

where dt='2020-06-14'

)t2

on t1.id=t2.coupon_id;
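The subsidy rate itself is simple arithmetic, sketched below in Python as an illustration only; the function name subsidy_rate and its as_percent parameter are hypothetical. One thing worth noticing when comparing queries: the coupon query above stores the plain ratio, while the activity query in 9.7.1 multiplies the ratio by 100.

```python
def subsidy_rate(reduce_amount, original_amount, as_percent=True):
    """Discount amount divided by the original order amount.

    Returns None when there is no original amount to divide by, which is
    also what Hive yields for a division by zero or by NULL.
    """
    if not original_amount:
        return None
    scale = 100 if as_percent else 1
    return round(reduce_amount * scale / original_amount, 2)
```

For a coupon that took 10 off orders totalling 100, this gives 10.0 as a percentage and 0.1 as a plain ratio, so the unit should be agreed on before the two tables are shown side by side.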

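9.7 Activity Topic

9.7.1 Activity Statistics

This requirement reports the participation and the subsidy rate of all activities launched in the last 30 days. The subsidy rate is the ratio of the discount amount to the original amount of the orders that took part in the activity.

- Table creation statement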

DROP TABLE IF EXISTS ads_activity_stats;

CREATE EXTERNAL TABLE `ads_activity_stats` (

`dt` STRING COMMENT 'Statistics date',

`activity_id` STRING COMMENT 'Activity ID',

`activity_name` STRING COMMENT 'Activity name',

`start_date` STRING COMMENT 'Activity start date',

`order_count` BIGINT COMMENT 'Number of orders in the activity',

`order_original_amount` DECIMAL(16,2) COMMENT 'Original amount of orders in the activity',

`order_final_amount` DECIMAL(16,2) COMMENT 'Final amount of orders in the activity',

`reduce_amount` DECIMAL(16,2) COMMENT 'Discount amount',

`reduce_rate` DECIMAL(16,2) COMMENT 'Subsidy rate'

) COMMENT 'Activity statistics'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LOCATION '/warehouse/gmall/ads/ads_activity_stats/';

- Data loading

insert overwrite table ads_activity_stats

select * from ads_activity_stats

union

select

'2020-06-14' dt,

t4.activity_id,

activity_name,

start_date,

order_count,

order_original_amount,

order_final_amount,

reduce_amount,

reduce_rate

from

(

select

activity_id,

activity_name,

date_format(start_time,'yyyy-MM-dd') start_date

from dim_activity_rule_info

where dt='2020-06-14'

and date_format(start_time,'yyyy-MM-dd')>=date_add('2020-06-14',-29)

group by activity_id,activity_name,start_time

)t4

left join

(

select

activity_id,

sum(order_count) order_count,

sum(order_original_amount) order_original_amount,

sum(order_final_amount) order_final_amount,

sum(order_reduce_amount) reduce_amount,

cast(sum(order_reduce_amount)/sum(order_original_amount)*100 as decimal(16,2)) reduce_rate

from dwt_activity_topic

where dt='2020-06-14'

group by activity_id

)t5

on t4.activity_id=t5.activity_id;

At this point, all tables have been loaded!

9.8 ADS Layer Business Data Import Script

- Create the script in the /home/xiaobai/bin directory

dwt_to_ads.sh:

[xiaobai@hadoop102 ~]$ vim dwt_to_ads.sh

#!/bin/bash

APP=gmall

# Use the date passed as the second argument if one is given; otherwise default to the day before the current date

if [ -n "$2" ] ;then

do_date=$2

else

do_date=`date -d "-1 day" +%F`

fi

ads_activity_stats="

insert overwrite table ${APP}.ads_activity_stats

select * from ${APP}.ads_activity_stats

union

select

'$do_date' dt,

t4.activity_id,

activity_name,

start_date,

order_count,

order_original_amount,

order_final_amount,

reduce_amount,

reduce_rate

from

(

select

activity_id,

activity_name,

date_format(start_time,'yyyy-MM-dd') start_date

from ${APP}.dim_activity_rule_info

where dt='$do_date'

and date_format(start_time,'yyyy-MM-dd')>=date_add('$do_date',-29)

group by activity_id,activity_name,start_time

)t4

left join

(

select

activity_id,

sum(order_count) order_count,

sum(order_original_amount) order_original_amount,

sum(order_final_amount) order_final_amount,

sum(order_reduce_amount) reduce_amount,

cast(sum(order_reduce_amount)/sum(order_original_amount)*100 as decimal(16,2)) reduce_rate

from ${APP}.dwt_activity_topic

where dt='$do_date'

group by activity_id

)t5

on t4.activity_id=t5.activity_id;

"

ads_coupon_stats="

insert overwrite table ${APP}.ads_coupon_stats

select * from ${APP}.ads_coupon_stats

union

select

'$do_date' dt,

t1.id,

coupon_name,

start_date,

rule_name,

get_count,

order_count,

expire_count,

order_original_amount,

order_final_amount,

reduce_amount,

reduce_rate

from

(

select

id,

coupon_name,

date_format(start_time,'yyyy-MM-dd') start_date,

case

when coupon_type='3201' then concat('满',condition_amount,'元减',benefit_amount,'元')

when coupon_type='3202' then concat('满',condition_num,'件打', (1-benefit_discount)*10,'折')

when coupon_type='3203' then concat('减',benefit_amount,'元')

end rule_name

from ${APP}.dim_coupon_info

where dt='$do_date'

and date_format(start_time,'yyyy-MM-dd')>=date_add('$do_date',-29)

)t1

left join

(

select

coupon_id,

get_count,

order_count,

expire_count,

order_original_amount,

order_final_amount,

order_reduce_amount reduce_amount,

cast(order_reduce_amount/order_original_amount as decimal(16,2)) reduce_rate

from ${APP}.dwt_coupon_topic

where dt='$do_date'

)t2

on t1.id=t2.coupon_id;

"

ads_order_by_province="

insert overwrite table ${APP}.ads_order_by_province

select * from ${APP}.ads_order_by_province

union

select

dt,

recent_days,

province_id,

province_name,

area_code,

iso_code,

iso_3166_2,

order_count,

order_amount

from

(

select

'$do_date' dt,

recent_days,

province_id,

sum(order_count) order_count,

sum(order_amount) order_amount

from

(

select

recent_days,

province_id,

case

when recent_days=1 then order_last_1d_count

when recent_days=7 then order_last_7d_count

when recent_days=30 then order_last_30d_count

end order_count,

case

when recent_days=1 then order_last_1d_final_amount

when recent_days=7 then order_last_7d_final_amount

when recent_days=30 then order_last_30d_final_amount

end order_amount

from ${APP}.dwt_area_topic lateral view explode(Array(1,7,30)) tmp as recent_days

where dt='$do_date'

)t1

group by recent_days,province_id

)t2

join ${APP}.dim_base_province t3

on t2.province_id=t3.id;

"

ads_order_spu_stats="

insert overwrite table ${APP}.ads_order_spu_stats

select * from ${APP}.ads_order_spu_stats

union

select

'$do_date' dt,

recent_days,

spu_id,

spu_name,

tm_id,

tm_name,

category3_id,

category3_name,

category2_id,

category2_name,

category1_id,

category1_name,

sum(order_count),

sum(order_amount)

from

(

select

recent_days,

sku_id,

case

when recent_days=1 then order_last_1d_count

when recent_days=7 then order_last_7d_count

when recent_days=30 then order_last_30d_count

end order_count,

case

when recent_days=1 then order_last_1d_final_amount

when recent_days=7 then order_last_7d_final_amount

when recent_days=30 then order_last_30d_final_amount

end order_amount

from ${APP}.dwt_sku_topic lateral view explode(Array(1,7,30)) tmp as recent_days

where dt='$do_date'

)t1

left join

(

select

id,

spu_id,

spu_name,

tm_id,

tm_name,

category3_id,

category3_name,

category2_id,

category2_name,

category1_id,

category1_name

from ${APP}.dim_sku_info

where dt='$do_date'

)t2

on t1.sku_id=t2.id

group by recent_days,spu_id,spu_name,tm_id,tm_name,category3_id,category3_name,category2_id,category2_name,category1_id,category1_name;

"

ads_order_total="

insert overwrite table ${APP}.ads_order_total

select * from ${APP}.ads_order_total

union

select

'$do_date',

recent_days,

sum(order_count),

sum(order_final_amount) order_final_amount,

sum(if(order_final_amount>0,1,0)) order_user_count

from

(

select

recent_days,

user_id,

case when recent_days=0 then order_count

when recent_days=1 then order_last_1d_count

when recent_days=7 then order_last_7d_count

when recent_days=30 then order_last_30d_count

end order_count,

case when recent_days=0 then order_final_amount

when recent_days=1 then order_last_1d_final_amount

when recent_days=7 then order_last_7d_final_amount

when recent_days=30 then order_last_30d_final_amount

end order_final_amount

from ${APP}.dwt_user_topic lateral view explode(Array(1,7,30)) tmp as recent_days

where dt='$do_date'

)t1

group by recent_days;

"

ads_page_path="

insert overwrite table ${APP}.ads_page_path

select * from ${APP}.ads_page_path

union

select

'$do_date',

recent_days,

source,

target,

count(*)

from

(

select

recent_days,

concat('step-',step,':',source) source,

concat('step-',step+1,':',target) target

from

(

select

recent_days,

page_id source,

lead(page_id,1,null) over (partition by recent_days,session_id order by ts) target,

row_number() over (partition by recent_days,session_id order by ts) step

from

(

select

recent_days,

last_page_id,

page_id,

ts,

concat(mid_id,'-',last_value(if(last_page_id is null,ts,null),true) over (partition by mid_id,recent_days order by ts)) session_id

from ${APP}.dwd_page_log lateral view explode(Array(1,7,30)) tmp as recent_days

where dt>=date_add('$do_date',-29)

and dt>=date_add('$do_date',-recent_days+1)

)t2

)t3

)t4

group by recent_days,source,target;

"

ads_repeat_purchase="

insert overwrite table ${APP}.ads_repeat_purchase

select * from ${APP}.ads_repeat_purchase

union

select

'$do_date' dt,

recent_days,

tm_id,

tm_name,

cast(sum(if(order_count>=2,1,0))/sum(if(order_count>=1,1,0))*100 as decimal(16,2))

from

(

select

recent_days,

user_id,

tm_id,

tm_name,

sum(order_count) order_count

from

(

select

recent_days,

user_id,

sku_id,

count(*) order_count

from ${APP}.dwd_order_detail lateral view explode(Array(1,7,30)) tmp as recent_days

where dt>=date_add('$do_date',-29)

and dt>=date_add('$do_date',-recent_days+1)

group by recent_days, user_id,sku_id

)t1

left join

(

select

id,

tm_id,

tm_name

from ${APP}.dim_sku_info

where dt='$do_date'

)t2

on t1.sku_id=t2.id

group by recent_days,user_id,tm_id,tm_name

)t3

group by recent_days,tm_id,tm_name;

"

ads_user_action="

with

tmp_page as

(

select

'$do_date' dt,

recent_days,

sum(if(array_contains(pages,'home'),1,0)) home_count,

sum(if(array_contains(pages,'good_detail'),1,0)) good_detail_count

from

(

select

recent_days,

mid_id,

collect_set(page_id) pages

from

(

select

dt,

mid_id,

page.page_id

from ${APP}.dws_visitor_action_daycount lateral view explode(page_stats) tmp as page

where dt>=date_add('$do_date',-29)

and page.page_id in('home','good_detail')

)t1 lateral view explode(Array(1,7,30)) tmp as recent_days

where dt>=date_add('$do_date',-recent_days+1)

group by recent_days,mid_id

)t2

group by recent_days

),

tmp_cop as

(

select

'$do_date' dt,

recent_days,

sum(if(cart_count>0,1,0)) cart_count,

sum(if(order_count>0,1,0)) order_count,

sum(if(payment_count>0,1,0)) payment_count

from

(

select

recent_days,

user_id,

case

when recent_days=1 then cart_last_1d_count

when recent_days=7 then cart_last_7d_count

when recent_days=30 then cart_last_30d_count

end cart_count,

case

when recent_days=1 then order_last_1d_count

when recent_days=7 then order_last_7d_count

when recent_days=30 then order_last_30d_count

end order_count,

case

when recent_days=1 then payment_last_1d_count

when recent_days=7 then payment_last_7d_count

when recent_days=30 then payment_last_30d_count

end payment_count

from ${APP}.dwt_user_topic lateral view explode(Array(1,7,30)) tmp as recent_days

where dt='$do_date'

)t1

group by recent_days

)

insert overwrite table ${APP}.ads_user_action

select * from ${APP}.ads_user_action

union

select

tmp_page.dt,

tmp_page.recent_days,

home_count,

good_detail_count,

cart_count,

order_count,

payment_count

from tmp_page

join tmp_cop

on tmp_page.recent_days=tmp_cop.recent_days;

"

ads_user_change="

insert overwrite table ${APP}.ads_user_change

select * from ${APP}.ads_user_change

union

select

churn.dt,

user_churn_count,

user_back_count

from

(

select

'$do_date' dt,

count(*) user_churn_count

from ${APP}.dwt_user_topic

where dt='$do_date'

and login_date_last=date_add('$do_date',-7)

)churn

join

(

select

'$do_date' dt,

count(*) user_back_count

from

(

select

user_id,

login_date_last

from ${APP}.dwt_user_topic

where dt='$do_date'

and login_date_last='$do_date'

)t1

join

(

select

user_id,

login_date_last login_date_previous

from ${APP}.dwt_user_topic

where dt=date_add('$do_date',-1)

)t2

on t1.user_id=t2.user_id

where datediff(login_date_last,login_date_previous)>=8

)back

on churn.dt=back.dt;

"

ads_user_retention="

insert overwrite table ${APP}.ads_user_retention

select * from ${APP}.ads_user_retention

union

select

'$do_date',

login_date_first create_date,

datediff('$do_date',login_date_first) retention_day,

sum(if(login_date_last='$do_date',1,0)) retention_count,

count(*) new_user_count,

cast(sum(if(login_date_last='$do_date',1,0))/count(*)*100 as decimal(16,2)) retention_rate

from ${APP}.dwt_user_topic

where dt='$do_date'

and login_date_first>=date_add('$do_date',-7)

and login_date_first<'$do_date'

group by login_date_first;

"

ads_user_total="

insert overwrite table ${APP}.ads_user_total

select * from ${APP}.ads_user_total

union

select

'$do_date',

recent_days,

sum(if(login_date_first>=recent_days_ago,1,0)) new_user_count,

sum(if(order_date_first>=recent_days_ago,1,0)) new_order_user_count,

sum(order_final_amount) order_final_amount,

sum(if(order_final_amount>0,1,0)) order_user_count,

sum(if(login_date_last>=recent_days_ago and order_final_amount=0,1,0)) no_order_user_count

from

(

select

recent_days,

user_id,

login_date_first,

login_date_last,

order_date_first,

case when recent_days=0 then order_final_amount

when recent_days=1 then order_last_1d_final_amount

when recent_days=7 then order_last_7d_final_amount

when recent_days=30 then order_last_30d_final_amount

end order_final_amount,

if(recent_days=0,'1970-01-01',date_add('$do_date',-recent_days+1)) recent_days_ago

from ${APP}.dwt_user_topic lateral view explode(Array(0,1,7,30)) tmp as recent_days

where dt='$do_date'

)t1

group by recent_days;

"

ads_visit_stats="

insert overwrite table ${APP}.ads_visit_stats

select * from ${APP}.ads_visit_stats

union

select

'$do_date' dt,

is_new,

recent_days,

channel,

count(distinct(mid_id)) uv_count,

cast(sum(duration)/1000 as bigint) duration_sec,

cast(avg(duration)/1000 as bigint) avg_duration_sec,

sum(page_count) page_count,

cast(avg(page_count) as bigint) avg_page_count,

count(*) sv_count,

sum(if(page_count=1,1,0)) bounce_count,

cast(sum(if(page_count=1,1,0))/count(*)*100 as decimal(16,2)) bounce_rate

from

(

select

session_id,

mid_id,

is_new,

recent_days,

channel,

count(*) page_count,

sum(during_time) duration

from

(

select

mid_id,

channel,

recent_days,

is_new,

last_page_id,

page_id,

during_time,

concat(mid_id,'-',last_value(if(last_page_id is null,ts,null),true) over (partition by recent_days,mid_id order by ts)) session_id

from

(

select

mid_id,

channel,

last_page_id,

page_id,

during_time,

ts,

recent_days,

if(visit_date_first>=date_add('$do_date',-recent_days+1),'1','0') is_new

from

(

select

t1.mid_id,

t1.channel,

t1.last_page_id,

t1.page_id,

t1.during_time,

t1.dt,

t1.ts,

t2.visit_date_first

from

(

select

mid_id,

channel,

last_page_id,

page_id,

during_time,

dt,

ts

from ${APP}.dwd_page_log

where dt>=date_add('$do_date',-30)

)t1

left join

(

select

mid_id,

visit_date_first

from ${APP}.dwt_visitor_topic

where dt='$do_date'

)t2

on t1.mid_id=t2.mid_id

)t3 lateral view explode(Array(1,7,30)) tmp as recent_days

where dt>=date_add('$do_date',-recent_days+1)

)t4

)t5

group by session_id,mid_id,is_new,recent_days,channel

)t6

group by is_new,recent_days,channel;

"

case $1 in

"ads_activity_stats" )

hive -e "$ads_activity_stats"

;;

"ads_coupon_stats" )

hive -e "$ads_coupon_stats"

;;

"ads_order_by_province" )

hive -e "$ads_order_by_province"

;;

"ads_order_spu_stats" )

hive -e "$ads_order_spu_stats"

;;

"ads_order_total" )

hive -e "$ads_order_total"

;;

"ads_page_path" )

hive -e "$ads_page_path"

;;

"ads_repeat_purchase" )

hive -e "$ads_repeat_purchase"

;;

"ads_user_action" )

hive -e "$ads_user_action"

;;

"ads_user_change" )

hive -e "$ads_user_change"

;;

"ads_user_retention" )

hive -e "$ads_user_retention"

;;

"ads_user_total" )

hive -e "$ads_user_total"

;;

"ads_visit_stats" )

hive -e "$ads_visit_stats"

;;

"all" )

hive -e "$ads_activity_stats$ads_coupon_stats$ads_order_by_province$ads_order_spu_stats$ads_order_total$ads_page_path$ads_repeat_purchase$ads_user_action$ads_user_change$ads_user_retention$ads_user_total$ads_visit_stats"

;;

esac
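The session-splitting step inside ads_visit_stats is the hardest part of the script to read in SQL form. In the query, a page view whose last_page_id is null opens a new session, and last_value(..., true) carries that opening timestamp forward to the later page views of the same device. The following is a minimal sketch of the same idea outside Hive. It assumes a hypothetical whitespace-separated input of mid_id, ts, last_page_id (with the literal text NULL for a missing value) and page_id, and it leaves out the recent_days partition used in the real query. The function name sessionize and the sample rows are illustrative only and are not part of the project scripts.

```shell
#!/bin/bash
# Assign a session_id to each page view, mirroring the window function in ads_visit_stats.
# Input columns (hypothetical layout): mid_id ts last_page_id page_id
sessionize() {
  # Order rows by device and then by timestamp, like "partition by mid_id order by ts".
  sort -k1,1 -k2,2n | awk '{
    # A page view without a previous page starts a new session for this device.
    if ($3 == "NULL") start[$1] = $2
    # Every row reuses the most recent session-start timestamp seen so far.
    print $1 "-" start[$1], $4
  }'
}

# Sample data: device m1 has two sessions, device m2 has one.
printf '%s\n' \
  'm1 100 NULL home' \
  'm1 200 home good_detail' \
  'm1 900 NULL home' \
  'm2 150 NULL home' | sessionize
```

Grouping the output by the first column then gives one row per session, which is what the outer query does before counting pages and summing during_time.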

- Grant execute permission:

[xiaobai@hadoop102 ~]$ chmod 777 dwt_to_ads.sh

- Run:

[xiaobai@hadoop102 ~]$ dwt_to_ads.sh all 2020-06-14
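The queries in this script select each statistics window with date_add('$do_date',-recent_days+1), so the window for recent_days = N starts N-1 days before the date passed on the command line and includes that date. A minimal sketch of the same arithmetic in shell, assuming GNU date is available; the helper name window_start is hypothetical:

```shell
#!/bin/bash
# Compute the first day of a "recent N days" window that ends on do_date (inclusive).
# Mirrors date_add('$do_date', -recent_days+1) from the Hive queries; assumes GNU date.
window_start() {
  local do_date=$1 recent_days=$2
  date -u -d "$do_date -$((recent_days - 1)) days" +%F
}

for n in 1 7 30; do
  echo "recent_days=$n starts at $(window_start 2020-06-14 "$n")"
done
```

This is handy for checking by hand which partitions of dwd_page_log a given run is expected to read.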

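Chapter 10 Full-Pipeline Scheduling

10.1 Azkaban Deployment

For the Azkaban deployment steps, see the separate post "大数据之Azkaban部署" (Big Data: Azkaban Deployment).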

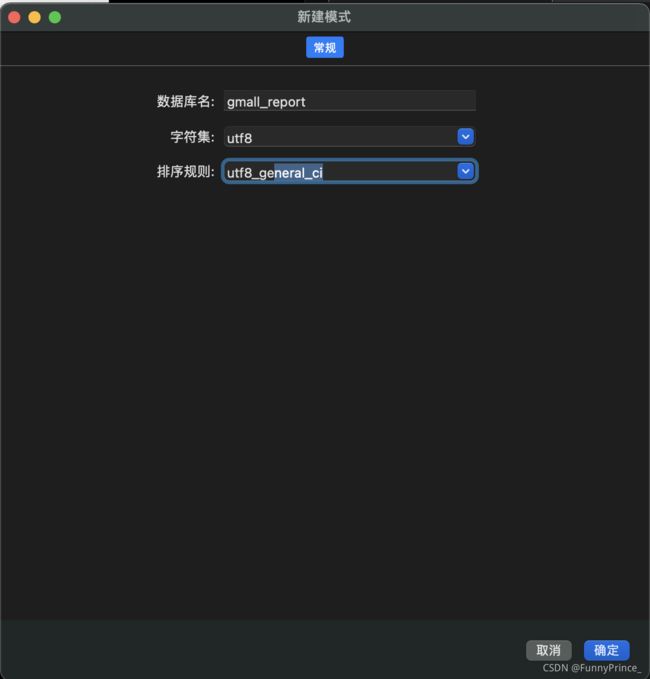

10.2 Creating the MySQL Database and Tables

CREATE DATABASE `gmall_report` CHARACTER SET 'utf8' COLLATE 'utf8_general_ci';

- Create the tables:

1). Visitor statistics

DROP TABLE IF EXISTS ads_visit_stats;
CREATE TABLE `ads_visit_stats` (
  `dt` DATE NOT NULL COMMENT 'Statistics date',
  `is_new` VARCHAR(255) NOT NULL COMMENT 'New/returning flag, 1: new, 0: returning',
  `recent_days` INT NOT NULL COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',
  `channel` VARCHAR(255) NOT NULL COMMENT 'Channel',
  `uv_count` BIGINT(20) DEFAULT NULL COMMENT 'Daily active visitors (unique visitors)',
  `duration_sec` BIGINT(20) DEFAULT NULL COMMENT 'Total page dwell time, in seconds',
  `avg_duration_sec` BIGINT(20) DEFAULT NULL COMMENT 'Average page dwell time per session, in seconds',
  `page_count` BIGINT(20) DEFAULT NULL COMMENT 'Total page views',
  `avg_page_count` BIGINT(20) DEFAULT NULL COMMENT 'Average page views per session',
  `sv_count` BIGINT(20) DEFAULT NULL COMMENT 'Session count',
  `bounce_count` BIGINT(20) DEFAULT NULL COMMENT 'Bounce count',
  `bounce_rate` DECIMAL(16,2) DEFAULT NULL COMMENT 'Bounce rate',
  PRIMARY KEY (`dt`,`recent_days`,`is_new`,`channel`)
) ENGINE=INNODB DEFAULT CHARSET=utf8;

2). Page path analysis

DROP TABLE IF EXISTS ads_page_path;
CREATE TABLE `ads_page_path` (
  `dt` DATE NOT NULL COMMENT 'Statistics date',
  `recent_days` BIGINT(20) NOT NULL COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',
  `source` VARCHAR(255) DEFAULT NULL COMMENT 'Source page of the jump',
  `target` VARCHAR(255) DEFAULT NULL COMMENT 'Target page of the jump',
  `path_count` BIGINT(20) DEFAULT NULL COMMENT 'Number of jumps',
  UNIQUE KEY (`dt`,`recent_days`,`source`,`target`) USING BTREE
) ENGINE=INNODB DEFAULT CHARSET=utf8 ROW_FORMAT=DYNAMIC;

3). User statistics

DROP TABLE IF EXISTS ads_user_total;
CREATE TABLE `ads_user_total` (
  `dt` DATE NOT NULL COMMENT 'Statistics date',
  `recent_days` BIGINT(20) NOT NULL COMMENT 'Recent days, 0: cumulative, 1: last 1 day, 7: last 7 days, 30: last 30 days',
  `new_user_count` BIGINT(20) DEFAULT NULL COMMENT 'Newly registered users',
  `new_order_user_count` BIGINT(20) DEFAULT NULL COMMENT 'Users who placed their first order',
  `order_final_amount` DECIMAL(16,2) DEFAULT NULL COMMENT 'Total order amount',
  `order_user_count` BIGINT(20) DEFAULT NULL COMMENT 'Users who placed an order',
  `no_order_user_count` BIGINT(20) DEFAULT NULL COMMENT 'Users without an order (active users who did not order)',
  PRIMARY KEY (`dt`,`recent_days`)
) ENGINE=INNODB DEFAULT CHARSET=utf8;

4). User change statistics

DROP TABLE IF EXISTS ads_user_change;
CREATE TABLE `ads_user_change` (
  `dt` DATE NOT NULL COMMENT 'Statistics date',
  `user_churn_count` BIGINT(20) DEFAULT NULL COMMENT 'Churned users',
  `user_back_count` BIGINT(20) DEFAULT NULL COMMENT 'Returning (won-back) users',
  PRIMARY KEY (`dt`)
) ENGINE=INNODB DEFAULT CHARSET=utf8;

5). User behavior funnel analysis

DROP TABLE IF EXISTS ads_user_action;
CREATE TABLE `ads_user_action` (
  `dt` DATE NOT NULL COMMENT 'Statistics date',
  `recent_days` BIGINT(20) NOT NULL COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',
  `home_count` BIGINT(20) DEFAULT NULL COMMENT 'Users who viewed the home page',
  `good_detail_count` BIGINT(20) DEFAULT NULL COMMENT 'Users who viewed a product detail page',
  `cart_count` BIGINT(20) DEFAULT NULL COMMENT 'Users who added to cart',
  `order_count` BIGINT(20) DEFAULT NULL COMMENT 'Users who placed an order',
  `payment_count` BIGINT(20) DEFAULT NULL COMMENT 'Users who paid',
  PRIMARY KEY (`dt`,`recent_days`) USING BTREE
) ENGINE=INNODB DEFAULT CHARSET=utf8 ROW_FORMAT=DYNAMIC;

6). User retention rate analysis

DROP TABLE IF EXISTS ads_user_retention;
CREATE TABLE `ads_user_retention` (
  `dt` DATE DEFAULT NULL COMMENT 'Statistics date',
  `create_date` VARCHAR(255) NOT NULL COMMENT 'Date the users were added',
  `retention_day` BIGINT(20) NOT NULL COMMENT 'Retention days as of the statistics date',
  `retention_count` BIGINT(20) DEFAULT NULL COMMENT 'Retained users',
  `new_user_count` BIGINT(20) DEFAULT NULL COMMENT 'New users',
  `retention_rate` DECIMAL(16,2) DEFAULT NULL COMMENT 'Retention rate',
  PRIMARY KEY (`create_date`,`retention_day`) USING BTREE
) ENGINE=INNODB DEFAULT CHARSET=utf8 ROW_FORMAT=DYNAMIC;

7). Order statistics

DROP TABLE IF EXISTS ads_order_total;
CREATE TABLE `ads_order_total` (
  `dt` DATE NOT NULL COMMENT 'Statistics date',
  `recent_days` BIGINT(20) NOT NULL COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',
  `order_count` BIGINT(20) DEFAULT NULL COMMENT 'Order count',
  `order_amount` DECIMAL(16,2) DEFAULT NULL COMMENT 'Order amount',
  `order_user_count` BIGINT(20) DEFAULT NULL COMMENT 'Users who placed an order',
  PRIMARY KEY (`dt`,`recent_days`)
) ENGINE=INNODB DEFAULT CHARSET=utf8 ROW_FORMAT=DYNAMIC;

8). Order statistics by province

DROP TABLE IF EXISTS ads_order_by_province;
CREATE TABLE `ads_order_by_province` (
  `dt` DATE NOT NULL COMMENT 'Statistics date',
  `recent_days` BIGINT(20) NOT NULL COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',
  `province_id` VARCHAR(255) NOT NULL COMMENT 'Province ID',
  `province_name` VARCHAR(255) DEFAULT NULL COMMENT 'Province name',
  `area_code` VARCHAR(255) DEFAULT NULL COMMENT 'Area code',
  `iso_code` VARCHAR(255) DEFAULT NULL COMMENT 'International standard region code',
  `iso_code_3166_2` VARCHAR(255) DEFAULT NULL COMMENT 'International standard region code (ISO 3166-2)',
  `order_count` BIGINT(20) DEFAULT NULL COMMENT 'Order count',
  `order_amount` DECIMAL(16,2) DEFAULT NULL COMMENT 'Order amount',
  PRIMARY KEY (`dt`,`recent_days`,`province_id`) USING BTREE
) ENGINE=INNODB DEFAULT CHARSET=utf8 ROW_FORMAT=DYNAMIC;

9). Brand repeat purchase rate

DROP TABLE IF EXISTS ads_repeat_purchase;
CREATE TABLE `ads_repeat_purchase` (
  `dt` DATE NOT NULL COMMENT 'Statistics date',
  `recent_days` BIGINT(20) NOT NULL COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',
  `tm_id` VARCHAR(255) NOT NULL COMMENT 'Brand ID',
  `tm_name` VARCHAR(255) DEFAULT NULL COMMENT 'Brand name',
  `order_repeat_rate` DECIMAL(16,2) DEFAULT NULL COMMENT 'Repeat purchase rate',
  PRIMARY KEY (`dt`,`recent_days`,`tm_id`)
) ENGINE=INNODB DEFAULT CHARSET=utf8 ROW_FORMAT=DYNAMIC;

10). Product statistics

DROP TABLE IF EXISTS ads_order_spu_stats;
CREATE TABLE `ads_order_spu_stats` (
  `dt` DATE NOT NULL COMMENT 'Statistics date',
  `recent_days` BIGINT(20) NOT NULL COMMENT 'Recent days, 1: last 1 day, 7: last 7 days, 30: last 30 days',
  `spu_id` VARCHAR(255) NOT NULL COMMENT 'Product (SPU) ID',
  `spu_name` VARCHAR(255) DEFAULT NULL COMMENT 'Product (SPU) name',
  `tm_id` VARCHAR(255) NOT NULL COMMENT 'Brand ID',
  `tm_name` VARCHAR(255) DEFAULT NULL COMMENT 'Brand name',
  `category3_id` VARCHAR(255) NOT NULL COMMENT 'Level-3 category ID',
  `category3_name` VARCHAR(255) DEFAULT NULL COMMENT 'Level-3 category name',
  `category2_id` VARCHAR(255) NOT NULL COMMENT 'Level-2 category ID',
  `category2_name` VARCHAR(255) DEFAULT NULL COMMENT 'Level-2 category name',
  `category1_id` VARCHAR(255) NOT NULL COMMENT 'Level-1 category ID',
  `category1_name` VARCHAR(255) NOT NULL COMMENT 'Level-1 category name',
  `order_count` BIGINT(20) DEFAULT NULL COMMENT 'Order count',
  `order_amount` DECIMAL(16,2) DEFAULT NULL COMMENT 'Order amount',
  PRIMARY KEY (`dt`,`recent_days`,`spu_id`)
) ENGINE=INNODB DEFAULT CHARSET=utf8;

11). Activity statistics

DROP TABLE IF EXISTS ads_activity_stats;
CREATE TABLE `ads_activity_stats` (
  `dt` DATE NOT NULL COMMENT 'Statistics date',
  `activity_id` VARCHAR(255) NOT NULL COMMENT 'Activity ID',
  `activity_name` VARCHAR(255) DEFAULT NULL COMMENT 'Activity name',
  `start_date` DATE DEFAULT NULL COMMENT 'Start date',
  `order_count` BIGINT(11) DEFAULT NULL COMMENT 'Orders that used the activity',
  `order_original_amount` DECIMAL(16,2) DEFAULT NULL COMMENT 'Original amount of orders that used the activity',
  `order_final_amount` DECIMAL(16,2) DEFAULT NULL COMMENT 'Final amount of orders that used the activity',
  `reduce_amount` DECIMAL(16,2) DEFAULT NULL COMMENT 'Discount amount',
  `reduce_rate` DECIMAL(16,2) DEFAULT NULL COMMENT 'Subsidy rate',
  PRIMARY KEY (`dt`,`activity_id`)
) ENGINE=INNODB DEFAULT CHARSET=utf8 ROW_FORMAT=DYNAMIC;

12). Coupon statistics

DROP TABLE IF EXISTS ads_coupon_stats;
CREATE TABLE `ads_coupon_stats` (
  `dt` DATE NOT NULL COMMENT 'Statistics date',
  `coupon_id` VARCHAR(255) NOT NULL COMMENT 'Coupon ID',
  `coupon_name` VARCHAR(255) DEFAULT NULL COMMENT 'Coupon name',
  `start_date` DATE DEFAULT NULL COMMENT 'Start date',
  `rule_name` VARCHAR(200) DEFAULT NULL COMMENT 'Discount rule',
  `get_count` BIGINT(20) DEFAULT NULL COMMENT 'Times claimed',
  `order_count` BIGINT(20) DEFAULT NULL COMMENT 'Times used (in orders)',
  `expire_count` BIGINT(20) DEFAULT NULL COMMENT 'Times expired',
  `order_original_amount` DECIMAL(16,2) DEFAULT NULL COMMENT 'Original amount of orders that used the coupon',
  `order_final_amount` DECIMAL(16,2) DEFAULT NULL COMMENT 'Final amount of orders that used the coupon',
  `reduce_amount` DECIMAL(16,2) DEFAULT NULL COMMENT 'Discount amount',
  `reduce_rate` DECIMAL(16,2) DEFAULT NULL COMMENT 'Subsidy rate',
  PRIMARY KEY (`dt`,`coupon_id`)
) ENGINE=INNODB DEFAULT CHARSET=utf8 ROW_FORMAT=DYNAMIC;

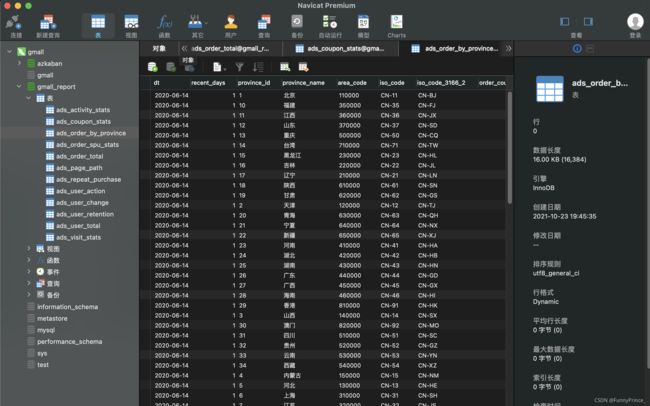

As the screenshot shows, all of the tables have been created.

10.3 Sqoop Export Script

- Write the Sqoop export script: create hdfs_to_mysql.sh in the /home/xiaobai/bin directory.

[xiaobai@hadoop102 bin]$ vim hdfs_to_mysql.sh

#!/bin/bash

hive_db_name=gmall

mysql_db_name=gmall_report

# $1: table name, $2: comma-separated update-key columns
export_data() {
  /opt/module/sqoop/bin/sqoop export \
    --connect "jdbc:mysql://hadoop102:3306/${mysql_db_name}?useUnicode=true&characterEncoding=utf-8" \
    --username root \
    --password ****** \
    --table "$1" \
    --num-mappers 1 \
    --export-dir "/warehouse/$hive_db_name/ads/$1" \
    --input-fields-terminated-by "\t" \
    --update-mode allowinsert \
    --update-key "$2" \
    --input-null-string '\\N' \
    --input-null-non-string '\\N'
}

case $1 in

"ads_activity_stats" )

export_data "ads_activity_stats" "dt,activity_id"

;;

"ads_coupon_stats" )

export_data "ads_coupon_stats" "dt,coupon_id"

;;

"ads_order_by_province" )

export_data "ads_order_by_province" "dt,recent_days,province_id"

;;

"ads_order_spu_stats" )

export_data "ads_order_spu_stats" "dt,recent_days,spu_id"

;;

"ads_order_total" )

export_data "ads_order_total" "dt,recent_days"

;;

"ads_page_path" )

export_data "ads_page_path" "dt,recent_days,source,target"

;;

"ads_repeat_purchase" )

export_data "ads_repeat_purchase" "dt,recent_days,tm_id"

;;

"ads_user_action" )

export_data "ads_user_action" "dt,recent_days"

;;

"ads_user_change" )

export_data "ads_user_change" "dt"

;;

"ads_user_retention" )

export_data "ads_user_retention" "create_date,retention_day"

;;

"ads_user_total" )

export_data "ads_user_total" "dt,recent_days"

;;

"ads_visit_stats" )

export_data "ads_visit_stats" "dt,recent_days,is_new,channel"

;;

"all" )

export_data "ads_activity_stats" "dt,activity_id"

export_data "ads_coupon_stats" "dt,coupon_id"

export_data "ads_order_by_province" "dt,recent_days,province_id"

export_data "ads_order_spu_stats" "dt,recent_days,spu_id"

export_data "ads_order_total" "dt,recent_days"

export_data "ads_page_path" "dt,recent_days,source,target"

export_data "ads_repeat_purchase" "dt,recent_days,tm_id"

export_data "ads_user_action" "dt,recent_days"

export_data "ads_user_change" "dt"

export_data "ads_user_retention" "create_date,retention_day"

export_data "ads_user_total" "dt,recent_days"

export_data "ads_visit_stats" "dt,recent_days,is_new,channel"

;;

esac

- Grant execute permission:

[xiaobai@hadoop102 bin]$ chmod 777 hdfs_to_mysql.sh

- Run:

[xiaobai@hadoop102 bin]$ ./hdfs_to_mysql.sh all
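The case statement in hdfs_to_mysql.sh lists every table and its update key twice, once in the single-table branch and once under all, so the two copies can drift apart. As one possible alternative, the mapping could be kept in a single place. The sketch below is only an illustration: it replaces the real export_data with a stub that prints its arguments, so it can be run without Sqoop, and the names table_keys and export_tables are hypothetical.

```shell
#!/bin/bash
# One line per ADS table: <table> <comma-separated update-key columns>.
table_keys() {
  cat <<'EOF'
ads_activity_stats dt,activity_id
ads_coupon_stats dt,coupon_id
ads_order_by_province dt,recent_days,province_id
ads_order_spu_stats dt,recent_days,spu_id
ads_order_total dt,recent_days
ads_page_path dt,recent_days,source,target
ads_repeat_purchase dt,recent_days,tm_id
ads_user_action dt,recent_days
ads_user_change dt
ads_user_retention create_date,retention_day
ads_user_total dt,recent_days
ads_visit_stats dt,recent_days,is_new,channel
EOF
}

# Stub standing in for the real Sqoop call so the sketch runs without a cluster.
export_data() {
  echo "export table=$1 update-key=$2"
}

# Export one table, or every table when the argument is "all".
export_tables() {
  local target=$1 table keys found=0
  while read -r table keys; do
    if [ "$target" = "all" ] || [ "$target" = "$table" ]; then
      export_data "$table" "$keys"
      found=1
    fi
  done <<EOF
$(table_keys)
EOF
  # Fail loudly when the argument matches no known table.
  if [ "$found" -eq 0 ]; then
    echo "unknown table: $target" >&2
    return 1
  fi
}

export_tables ads_user_change
```

With this layout, adding a thirteenth table would mean adding one line to table_keys rather than editing two branches of the case statement.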

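Tips:

On whether the export performs an update or an insert:

--update-mode:

updateonly: only updates existing rows; new rows cannot be inserted.

allowinsert: updates existing rows and also inserts new ones.

--update-key: when updating is enabled, specifies the columns used to decide whether two rows are the same record, so that a matching row is updated rather than inserted again; separate multiple columns with commas.

--input-null-string and --input-null-non-string:

These specify the string to be interpreted as NULL for string columns and for non-string columns, respectively.

The Sqoop user guide explains: "Sqoop will by default import NULL values as string null. Hive is however using string \N to denote NULL values and therefore predicates dealing with NULL(like IS NULL) will not work correctly. You should append parameters --null-string and --null-non-string in case of import job or --input-null-string and --input-null-non-string in case of an export job if you wish to properly preserve NULL values. Because sqoop is using those parameters in generated code, you need to properly escape value \N to \\N."

Hive stores NULL as "\N" in its underlying files, whereas MySQL stores NULL as NULL. To keep the data consistent on both sides, use --input-null-string and --input-null-non-string when exporting, and --null-string and --null-non-string when importing.

Official documentation: http://sqoop.apache.org/docs/1.4.6/SqoopUserGuide.html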
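To make the NULL handling concrete: in the tab-separated text files behind the ADS tables, Hive writes a NULL field as the two characters \N. With --input-null-string '\\N' and --input-null-non-string '\\N', Sqoop treats such fields as NULL rather than as literal text. The sketch below only imitates that interpretation with awk on a sample row, so the effect can be seen without a cluster. The function name show_nulls and the sample row are made up for illustration, and this is not how Sqoop is implemented internally.

```shell
#!/bin/bash
# Print each tab-separated field of a Hive text row, showing \N fields as NULL.
show_nulls() {
  awk -F '\t' -v OFS='|' '{
    for (i = 1; i <= NF; i++) {
      # Hive text files encode NULL as a backslash followed by N.
      if ($i == "\\N") $i = "NULL"
    }
    # Reassign a field so the record is rebuilt with the output separator.
    $1 = $1
    print
  }'
}

# A sample ads_page_path row (dt, recent_days, source, target, path_count)
# whose source column is NULL.
printf '2020-06-14\t1\t\\N\thome\t25\n' | show_nulls
```

Without the two --input-null-* options, the same field would arrive in MySQL as the two-character string \N in a VARCHAR column, or fail to parse in a numeric one.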

10.4 Full Scheduling Workflow

10.4.1 Data Preparation

User behavior data preparation

- First start the data collection pipeline:

[xiaobai@hadoop102 ~]$ f1.sh start

------启动hadoop102采集flume------

------启动hadoop103采集flume------

[xiaobai@hadoop102 ~]$ f2.sh start

--------启动 hadoop104 消费flume-------

[xiaobai@hadoop102 applog]$ lg.sh

-

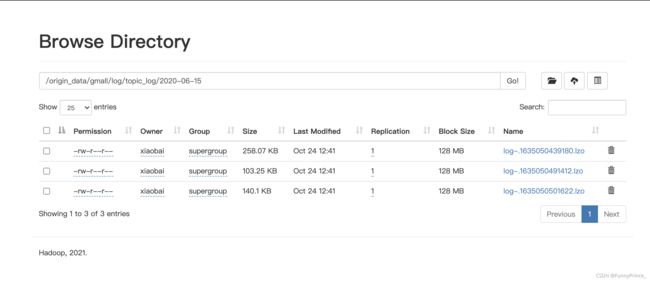

查看hdfs的/origin_data/gmall/log/topic_log/2020-06-15路径下是否有数据生成:

Business data preparation

- Edit application.properties under /opt/module/db_log/ on hadoop102:

[xiaobai@hadoop102 db_log]$ vim application.properties

- Generate the data:

[xiaobai@hadoop102 db_log]$ java -jar gmall2020-mock-db-2021-01-22.jar

10.4.2 Writing the Azkaban Workflow Configuration Files

- Write the azkaban.project file:

azkaban-flow-version: 2.0

- Write the gmall.flow file:

nodes:
  - name: mysql_to_hdfs
    type: command
    config:
      command: /home/xiaobai/bin/mysql_to_hdfs.sh all ${dt}

  - name: hdfs_to_ods_log
    type: command
    config:
      command: /home/xiaobai/bin/hdfs_to_ods_log.sh ${dt}

  - name: hdfs_to_ods_db
    type: command
    dependsOn:
      - mysql_to_hdfs
    config:
      command: /home/xiaobai/bin/hdfs_to_ods_db.sh all ${dt}

  - name: ods_to_dim_db
    type: command
    dependsOn:
      - hdfs_to_ods_db
    config:
      command: /home/xiaobai/bin/ods_to_dim_db.sh all ${dt}

  - name: ods_to_dwd_log
    type: command
    dependsOn:
      - hdfs_to_ods_log
    config:
      command: /home/xiaobai/bin/ods_to_dwd_log.sh all ${dt}

  - name: ods_to_dwd_db
    type: command
    dependsOn:
      - hdfs_to_ods_db
    config:
      command: /home/xiaobai/bin/ods_to_dwd_db.sh all ${dt}

  - name: dwd_to_dws
    type: command
    dependsOn:
      - ods_to_dim_db
      - ods_to_dwd_log
      - ods_to_dwd_db
    config:
      command: /home/xiaobai/bin/dwd_to_dws.sh all ${dt}

  - name: dws_to_dwt
    type: command
    dependsOn:
      - dwd_to_dws
    config:
      command: /home/xiaobai/bin/dws_to_dwt.sh all ${dt}

  - name: dwt_to_ads
    type: command
    dependsOn:
      - dws_to_dwt
    config:
      command: /home/xiaobai/bin/dwt_to_ads.sh all ${dt}

  - name: hdfs_to_mysql
    type: command
    dependsOn:
      - dwt_to_ads
    config:
      command: /home/xiaobai/bin/hdfs_to_mysql.sh all

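- Compress azkaban.project and gmall.flow into a single zip file, gmall.zip (the file name must be in English).

- In the Azkaban web server at http://hadoop102:8081/index, create a new project and upload gmall.zip.

- Click Execute Flow.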
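The dependsOn entries in the gmall.flow file above define a dependency graph: a job should run only after every job it depends on has finished. One quick way to sanity-check such a graph before uploading it is to feed its edges to tsort from coreutils, which prints one valid execution order and reports an error if the graph contains a cycle. The sketch below hard-codes the edges from the flow file; the function name flow_order is hypothetical, and the order printed is only one of several valid ones, since independent branches may run in parallel.

```shell
#!/bin/bash
# Print one valid execution order for the jobs in gmall.flow.
# Each input line is "<dependency> <job>", taken from the dependsOn entries.
flow_order() {
  tsort <<'EOF'
mysql_to_hdfs hdfs_to_ods_db
hdfs_to_ods_db ods_to_dim_db
hdfs_to_ods_log ods_to_dwd_log
hdfs_to_ods_db ods_to_dwd_db
ods_to_dim_db dwd_to_dws
ods_to_dwd_log dwd_to_dws
ods_to_dwd_db dwd_to_dws
dwd_to_dws dws_to_dwt
dws_to_dwt dwt_to_ads
dwt_to_ads hdfs_to_mysql
EOF
}

flow_order
```

Whatever order tsort chooses for the two ingestion branches, hdfs_to_mysql always comes last, because every other job sits upstream of it.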