Calling the Agora SDK Wrapped in an Android Library from Unity

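Table of contents

- Calling the Agora SDK wrapped in an Android library from Unity 3D
- 1. Environment and versions
- 2. Create an Android library project
- 3. Add the Unity 3D dependency to the project's libs directory
- 4. Add UnityPlayerActivity to the project
- 5. Create a custom Activity that extends UnityPlayerActivity
- 6. Manifest settings
- 7. Add the jar export script to build.gradle
- 8. Integrate the Agora SDK
- 8.1 Add the .so libraries to the project
- 8.2 Add the Agora jar to the libs directory
- 8.4 Write a utility class that wraps the Agora SDK
- 8.5 Expose methods in MainActivity for Unity to call
- 9. Generating the jar
- 10. Integrating into Unity
- 10.1 Create a Plugins directory under Assets
- 10.2 Put AndroidPlugin.jar in the bin directory
- 10.3 Put the Agora .so libraries and jar in the libs directory
- 10.4 Put the manifest file in the Android directory
- 11. Calling the Android methods from Unity scripts
- 12. References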

Calling the Agora SDK wrapped in an Android library from Unity 3D

1. Environment and versions

Unity 3D editor 2020

Android Studio 4.2

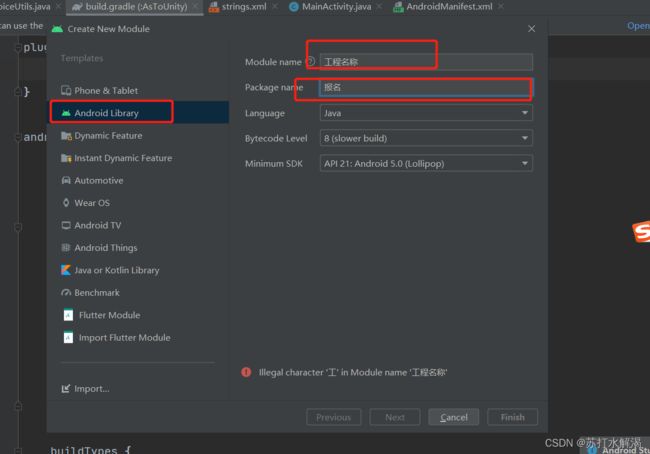

2. Create an Android library project

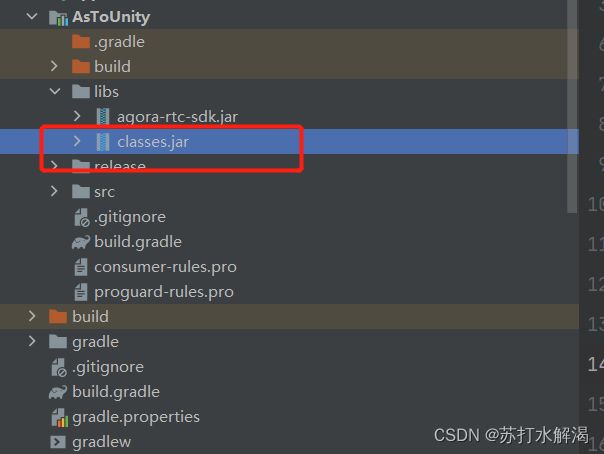

3. Add the Unity 3D dependency to the project's libs directory

The dependency is located under the Unity installation directory: E:\Unity_install\2020.3.28f1c1\Editor\Data\PlaybackEngines\AndroidPlayer\Variations\mono\Release\Classes

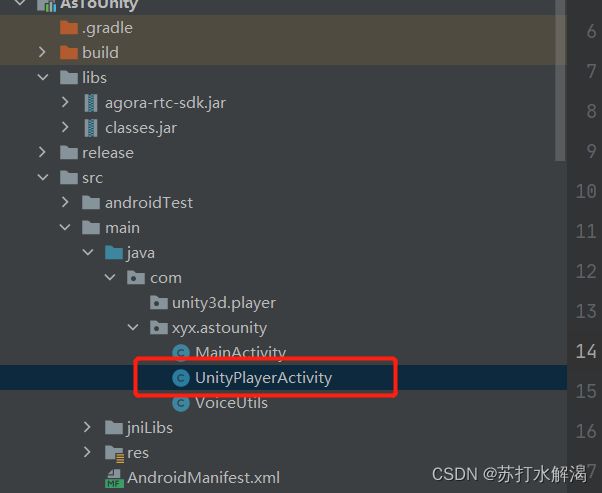

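4. Add UnityPlayerActivity to the project

Add UnityPlayerActivity to the project.

5. Create a custom Activity that extends UnityPlayerActivity

Comment out the call that sets the layout (setContentView).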

6. Manifest settings

<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.xyx.astounity">

    <uses-permission android:name="android.permission.INTERNET" />
    <uses-permission android:name="android.permission.RECORD_AUDIO" />
    <uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />
    <uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS" />
    <uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

    <application>
        <activity android:name="com.xyx.astounity.MainActivity">
            <intent-filter>
                <action android:name="android.intent.action.MAIN" />
                <category android:name="android.intent.category.LAUNCHER" />
            </intent-filter>
            <meta-data android:name="unityplayer.UnityActivity" android:value="true" />
        </activity>
    </application>
</manifest>

7. Add the jar export script to build.gradle

// ---------- Gradle tasks that export the module as a jar ----------

// Delete the jar produced by a previous export
task deleteOldJar(type: Delete) {
    delete 'release/AndroidPlugin.jar'
}

// Copy the compiled classes.jar into release/ and rename it
task exportJar(type: Copy) {
    from('build/intermediates/aar_main_jar/release/')
    into('release/')
    include('classes.jar')
    rename('classes.jar', 'AndroidPlugin.jar')
}

// Build the module and remove the old jar before exporting
exportJar.dependsOn(deleteOldJar, build)
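Once exportJar has run, it can be worth confirming that the exported jar really contains the Activity class Unity will load. The following is a minimal sketch using only the JDK; `JarCheck` and `containsClass` are hypothetical names introduced for illustration, and the jar path and class name are whatever your own build produces.

```java
import java.io.IOException;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.jar.JarFile;

/** Hypothetical helper: checks whether a jar has an entry for a given class. */
final class JarCheck {
    private JarCheck() {}

    /** Returns true if the jar at jarPath contains the .class entry for className. */
    static boolean containsClass(Path jarPath, String className) throws IOException {
        // com.xyx.astounity.MainActivity -> com/xyx/astounity/MainActivity.class
        String entryName = className.replace('.', '/') + ".class";
        try (JarFile jar = new JarFile(jarPath.toFile())) {
            return jar.getEntry(entryName) != null;
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 2) {
            System.err.println("usage: JarCheck <path-to-jar> <fully.qualified.ClassName>");
            return;
        }
        System.out.println(containsClass(Paths.get(args[0]), args[1]));
    }
}
```

For this project the arguments would presumably be release/AndroidPlugin.jar and com.xyx.astounity.MainActivity.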

8. Integrate the Agora SDK

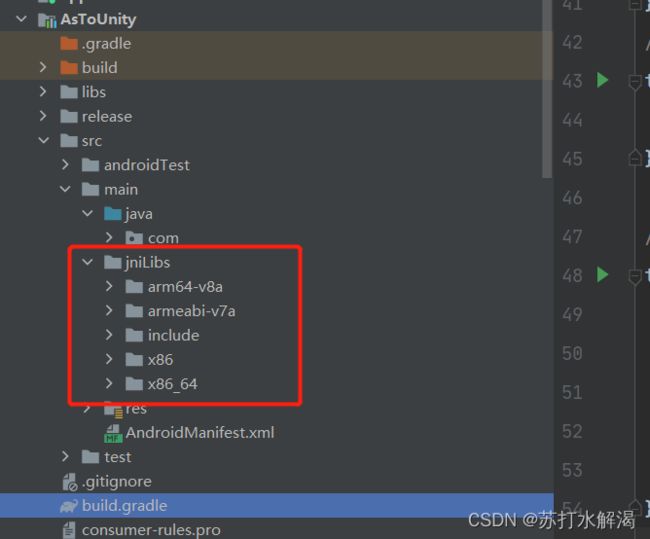

8.1 Add the .so libraries to the project

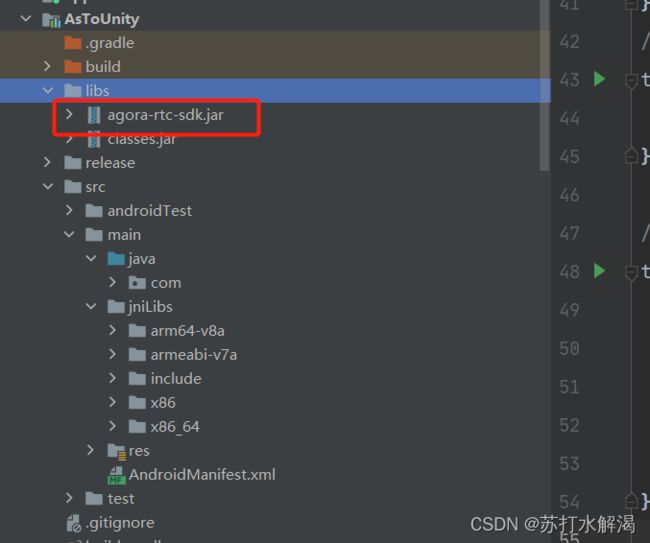

8.2 Add the Agora jar to the libs directory

8.4 Write a utility class that wraps the Agora SDK

package com.xyx.astounity;

import android.content.Context;
import android.os.Handler;
import android.os.Looper;
import android.text.TextUtils;
import android.util.Log;
import android.widget.Toast;

import io.agora.rtc.Constants;
import io.agora.rtc.IRtcEngineEventHandler;
import io.agora.rtc.RtcEngine;

public class VoiceUtils {

    // App ID, token, and channel name used by this demo; replace them with your own values.
    private String appKey = "5c203ec6100d4f1bbbc79265a81ef0f0";
    private String token = "0065c203ec6100d4f1bbbc79265a81ef0f0IABoJ4pt255quepmrqcN+P+MzHNWvu489IlD6C43eOajEKDfQtYAAAAAEAAn2yw6p4gZYgEAAQC/iBli";
    private String channel = "demo";

    private RtcEngine mRtcEngine;
    private Handler handler = new Handler(Looper.getMainLooper());
    private Context context;

    // Written on the SDK callback thread and polled from Unity, hence volatile.
    public static volatile boolean isSpeak = false;

    public boolean isIsSpeak() {
        return isSpeak;
    }

    public void initializeAgoraEngine(Context context) {
        try {
            mRtcEngine = RtcEngine.create(context, appKey, mRtcEventHandler);
            this.context = context;
            int i = mRtcEngine.enableDeepLearningDenoise(true);
            // Report volume every 100 ms with smoothing factor 3; the last argument turns on VAD reporting.
            mRtcEngine.enableAudioVolumeIndication(100, 3, true);
            Log.e("zyb", "initializeAgoraEngine: " + i);
        } catch (Exception e) {
            throw new RuntimeException("NEED TO check rtc sdk init fatal error\n" + Log.getStackTraceString(e));
        }
    }

    public void joinChannel() {
        String accessToken = token;
        if (TextUtils.equals(accessToken, "") || TextUtils.equals(accessToken, "#YOUR ACCESS TOKEN#")) {
            accessToken = null; // default, no token
        }

        // Sets the channel profile of the Agora RtcEngine.
        // CHANNEL_PROFILE_COMMUNICATION(0): (Default) The Communication profile. Use this profile in
        // one-on-one calls or group calls, where all users can talk freely.
        // CHANNEL_PROFILE_LIVE_BROADCASTING(1): The Live-Broadcast profile. Users in a live-broadcast
        // channel have a role as either broadcaster or audience. A broadcaster can both send and
        // receive streams; an audience can only receive streams.
        mRtcEngine.setChannelProfile(Constants.CHANNEL_PROFILE_COMMUNICATION);

        // Allows a user to join a channel. With uid 0 the SDK generates a uid for you.
        mRtcEngine.joinChannel(accessToken, channel, "Extra Optional Data", 0);
        mRtcEngine.enableAudioVolumeIndication(50, 3, true);
    }

    private final IRtcEngineEventHandler mRtcEventHandler = new IRtcEngineEventHandler() {

        /**
         * Occurs when a remote user (Communication) or host (Live Broadcast) leaves the channel.
         *
         * There are two reasons for users to become offline:
         *
         * Leave the channel: the user/host sends a goodbye message when leaving. When this message
         * is received, the SDK determines that the user/host has left the channel.
         * Drop offline: when no data packet of the user or host is received for a certain period of
         * time (20 seconds for the communication profile, and more for the live broadcast profile),
         * the SDK assumes that the user/host has dropped offline. A poor network connection may lead
         * to false detections, so we recommend using the Agora RTM SDK for reliable offline detection.
         *
         * @param uid    ID of the user or host who leaves the channel or goes offline.
         * @param reason Reason why the user goes offline:
         *               USER_OFFLINE_QUIT(0): The user left the current channel.
         *               USER_OFFLINE_DROPPED(1): The SDK timed out and the user dropped offline because
         *               no data packet was received within a certain period of time. If a user quits the
         *               call and the message is not passed to the SDK (due to an unreliable channel),
         *               the SDK assumes the user dropped offline.
         *               USER_OFFLINE_BECOME_AUDIENCE(2): (Live broadcast only.) The client role switched
         *               from the host to the audience.
         */
        @Override
        public void onUserOffline(final int uid, final int reason) {
            // Not handled in this demo.
        }

        @Override
        public void onAudioVolumeIndication(AudioVolumeInfo[] speakers, int totalVolume) {
            super.onAudioVolumeIndication(speakers, totalVolume);
            if (speakers.length == 1) {
                int uid = speakers[0].uid;
                int vad = speakers[0].vad;
                Log.e("zyb", "onAudioVolumeIndication: " + uid);
                if (uid != 0) {
                    // Follow the VAD bit; entries with uid 0 leave the flag unchanged.
                    isSpeak = (vad == 1);
                }
            } else {
                isSpeak = false;
            }
        }

        /**
         * Occurs when a remote user stops/resumes sending the audio stream.
         * The SDK triggers this callback when the remote user stops or resumes sending the audio
         * stream by calling the muteLocalAudioStream method.
         *
         * @param uid   ID of the remote user.
         * @param muted Whether the remote user's audio stream is muted (true) or unmuted (false).
         */
        @Override
        public void onUserMuteAudio(final int uid, final boolean muted) {
            // Toasts must be shown from the main thread, so post to the main-looper handler.
            handler.post(() -> Toast.makeText(context, "User: " + uid + " \t joined the room ", Toast.LENGTH_SHORT).show());
        }
    };
}
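The rule that onAudioVolumeIndication applies to the isSpeak flag is easy to lose among the Android and SDK details, so here it is restated as a pure function that can be checked off-device. This is only a sketch: `SpeakerInfo` is a hypothetical stand-in for the SDK's AudioVolumeInfo (just the uid and vad fields), `SpeakingRule.next` is a name introduced for illustration, and the null check is an addition that the listing does not have.

```java
/** Hypothetical stand-in for the SDK's AudioVolumeInfo; only the two fields used here. */
final class SpeakerInfo {
    final int uid;
    final int vad;

    SpeakerInfo(int uid, int vad) {
        this.uid = uid;
        this.vad = vad;
    }
}

/** Pure-Java restatement of the speaking-flag rule from the volume callback. */
final class SpeakingRule {
    private SpeakingRule() {}

    /**
     * Computes the next value of the speaking flag.
     *
     * @param speakers entries reported by one volume-indication callback
     * @param previous current value of the flag
     */
    static boolean next(SpeakerInfo[] speakers, boolean previous) {
        if (speakers == null || speakers.length != 1) {
            return false;        // zero or several entries: treated as not speaking
        }
        SpeakerInfo only = speakers[0];
        if (only.uid == 0) {
            return previous;     // uid 0 leaves the flag unchanged
        }
        return only.vad == 1;    // otherwise follow the VAD bit
    }

    public static void main(String[] args) {
        SpeakerInfo[] talking = { new SpeakerInfo(42, 1) };
        SpeakerInfo[] silent = { new SpeakerInfo(42, 0) };
        System.out.println(next(talking, false));
        System.out.println(next(silent, true));
    }
}
```

Keeping the decision in one small function like this makes it possible to unit-test the behaviour without a device or a live channel.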

8.5 Expose methods in MainActivity for Unity to call

// voiceUtils is a VoiceUtils field of MainActivity; it must be assigned before these methods are called.

public boolean isIsSpeak() {
    if (voiceUtils == null) {
        Toast.makeText(this, "VoiceUtils is not initialized", Toast.LENGTH_SHORT).show();
        return false;
    }
    return voiceUtils.isIsSpeak();
}

public void initializeAgoraEngine() {
    voiceUtils.initializeAgoraEngine(this);
}

public void joinChannel() {
    if (voiceUtils == null) {
        Toast.makeText(this, "VoiceUtils is not initialized", Toast.LENGTH_SHORT).show();
        return;
    }
    voiceUtils.joinChannel();
}
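In the listing, isIsSpeak and joinChannel check for a missing helper, while initializeAgoraEngine dereferences it directly, so the helper has to exist before Unity calls any of these methods. The guard pattern can be sketched in plain Java as follows. `Voice`, `VoiceBridge`, and `lastWarning` are hypothetical names introduced for illustration; `lastWarning` stands in for the Toast, and the real VoiceUtils needs an Android Context that this sketch leaves out.

```java
/** Hypothetical minimal view of the voice helper. */
interface Voice {
    void joinChannel();
    boolean isSpeaking();
}

/** Sketch of a bridge that returns safe defaults until the helper exists. */
final class VoiceBridge {
    private Voice voice;         // null until init() has been called
    private String lastWarning;  // stands in for the Toast message

    void init(Voice created) {
        voice = created;
    }

    boolean isSpeaking() {
        if (voice == null) {
            lastWarning = "Voice helper is not initialized";
            return false;
        }
        return voice.isSpeaking();
    }

    /** Returns true if the call was forwarded, false if the guard stopped it. */
    boolean joinChannel() {
        if (voice == null) {
            lastWarning = "Voice helper is not initialized";
            return false;
        }
        voice.joinChannel();
        return true;
    }

    String lastWarning() {
        return lastWarning;
    }

    public static void main(String[] args) {
        VoiceBridge bridge = new VoiceBridge();
        System.out.println(bridge.joinChannel()); // guard path: nothing to forward to yet
        bridge.init(new Voice() {
            @Override public void joinChannel() { }
            @Override public boolean isSpeaking() { return true; }
        });
        System.out.println(bridge.joinChannel()); // forwarded to the helper
    }
}
```

Returning a boolean from joinChannel is a design choice of this sketch, not of the article's code; it gives the caller a way to tell a guarded call from a forwarded one.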

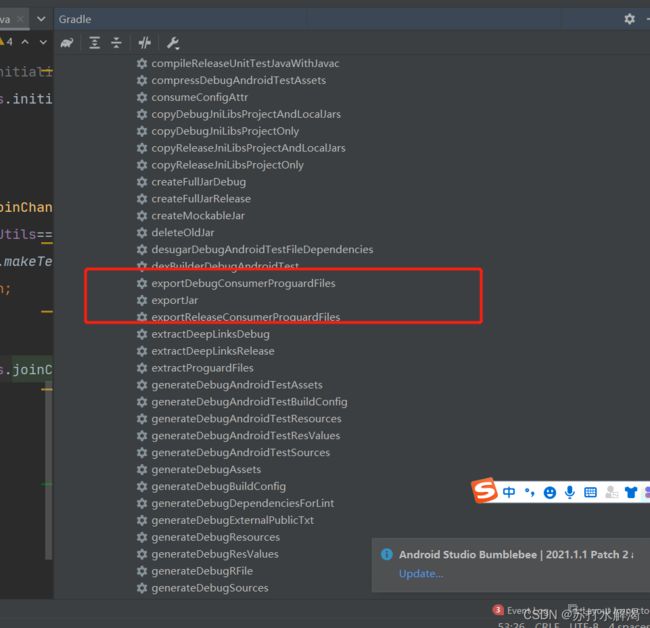

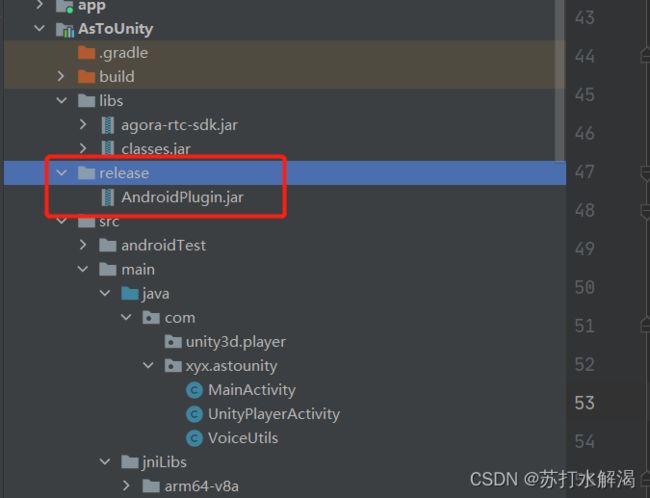

9. Generating the jar

Run the exportJar task; the jar is written to the module's release/ directory.

10. Integrating into Unity

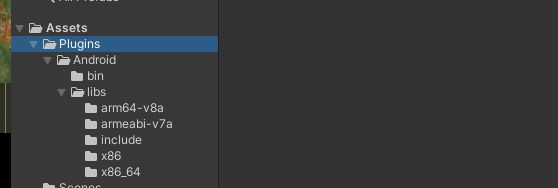

10.1 Create a Plugins directory under Assets

10.2 Put AndroidPlugin.jar in the bin directory

10.3 Put the Agora .so libraries and jar in the libs directory

10.4 Put the manifest file in the Android directory

11. Calling the Android methods from Unity scripts

Set the package name and Android version in Unity to match those used for the jar.

Script that initializes the Agora SDK

using UnityEngine;
#if UNITY_2018_3_OR_NEWER
using UnityEngine.Android;
#endif

public class CallAndroid : MonoBehaviour
{
    private AndroidJavaClass jc;
    private AndroidJavaObject jo;

    void Start()
    {
#if UNITY_2018_3_OR_NEWER
        // Ask for microphone access (android.permission.RECORD_AUDIO) if it has not been granted yet.
        if (!Permission.HasUserAuthorizedPermission(Permission.Microphone))
        {
            Permission.RequestUserPermission(Permission.Microphone);
        }
#endif
    }

    private void OnGUI()
    {
        if (GUILayout.Button("Call Android", GUILayout.Width(200), GUILayout.Height(100)))
        {
            // These two lines are the standard way to obtain the current Activity.
            jc = new AndroidJavaClass("com.unity3d.player.UnityPlayer");
            jo = jc.GetStatic<AndroidJavaObject>("currentActivity");
            int result = jo.Call<int>("add", 1, 2);
            jo.Call("UnityCallAndroid");
        }

        if (GUILayout.Button("init", GUILayout.Width(200), GUILayout.Height(100)))
        {
            jc = new AndroidJavaClass("com.unity3d.player.UnityPlayer");
            jo = jc.GetStatic<AndroidJavaObject>("currentActivity");
            jo.Call("initializeAgoraEngine");
        }

        if (GUILayout.Button("join", GUILayout.Width(200), GUILayout.Height(100)))
        {
            jc = new AndroidJavaClass("com.unity3d.player.UnityPlayer");
            jo = jc.GetStatic<AndroidJavaObject>("currentActivity");
            jo.Call("joinChannel");
        }
    }

    // Can be called back from the Android side.
    public void UnityMethod(string str)
    {
        Debug.Log("UnityMethod called, argument: " + str);
    }
}

Script that checks the Agora SDK VAD state

using UnityEngine;

public class Switch : MonoBehaviour
{
    // Poll interval in seconds. A timer is used instead of Thread.Sleep(300),
    // which would block Unity's main thread on every frame.
    private const float PollInterval = 0.3f;

    private AndroidJavaClass jc;
    private AndroidJavaObject jo;
    private GameObject a;
    private GameObject b;
    private bool flag = false;
    private float elapsed = 0f;

    void Start()
    {
        a = GameObject.Find("a_demo");
        b = GameObject.Find("b");

        // Standard way to obtain the current Activity; done once rather than on every frame.
        jc = new AndroidJavaClass("com.unity3d.player.UnityPlayer");
        jo = jc.GetStatic<AndroidJavaObject>("currentActivity");
    }

    void Update()
    {
        elapsed += Time.deltaTime;
        if (elapsed < PollInterval)
        {
            return;
        }
        elapsed = 0f;

        bool isSpeak = jo.Call<bool>("isIsSpeak");
        Debug.Log("isSpeak: " + isSpeak);

        if (isSpeak)
        {
            // While the user is speaking, alternate the two objects on every poll.
            a.SetActive(flag);
            b.SetActive(!flag);
            flag = !flag;
            Debug.Log("flag: " + flag);
        }
        else if (b.activeSelf)
        {
            // When speech stops, fall back to showing only a.
            b.SetActive(false);
            a.SetActive(true);
            flag = !flag;
        }
    }
}

12. References

https://www.cnblogs.com/Jason-c/p/6743224.html

https://blog.csdn.net/u014361280/article/details/107692986

https://www.kancloud.cn/hujunjob/unity/139595