Latest! A Roundup of CVPR 2021 Vision Transformer Papers (43 Papers)

Author: Amusi | Source: CVer

Preface

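Starting in the second half of 2020, and especially in the first half of 2021, research interest in Visual Transformers reached an unprecedented peak. In Amusi's view, two papers best represent what set off the Transformer craze in the CV community: DETR (object detection) from ECCV 2020 and ViT (image classification) from ICLR 2021.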

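Following the timeline of DETR and ViT, one could guess that a large batch of vision Transformer work would appear at CVPR 2021. Before the Lunar New Year I even posted on WeChat Moments predicting a double-digit number of papers. When I actually counted, CVPR 2021 turned out to have more than 40 vision Transformer papers.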

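CVer will be formally surveying the work at CVPR 2021 across research areas. This post covers the hottest topic at the moment, vision Transformers. For more CVPR 2021 papers and open-source code, see the link below: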

https://github.com/amusi/CVPR2021-Papers-with-Code

CVPR 2021 Vision Transformer Papers (43 Papers)

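Amusi collected 43 Vision Transformer papers in total, with applications spanning image classification, object detection, instance segmentation, semantic segmentation, action recognition, autonomous driving, keypoint matching, object tracking, NAS, low-level vision, HoI, interpretability, layout generation, retrieval, text detection, and more. PS: there are not many areas left untouched... speed is everything.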

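Note 1: This is probably the most up-to-date and comprehensive roundup of CVPR 2021 vision Transformer papers on any platform. Likes, bookmarks, and shares are welcome.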

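Note 2: At present only the paper titles can be retrieved, so this post provides whatever paper links and code could be found. The list is incomplete, and institutions, authors, and similar details are not included (a complete version may follow later).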

Note 3: The count may contain errors, because many of the papers have not yet been released. Additions and corrections are welcome.

1. End-to-End Human Pose and Mesh Reconstruction with Transformers

Paper: https://arxiv.org/abs/2012.09760

Code: https://github.com/microsoft/MeshTransformer

2. Temporal-Relational CrossTransformers for Few-Shot Action Recognition

Paper: https://arxiv.org/abs/2101.06184

Code: https://github.com/tobyperrett/trx

3. Kaleido-BERT: Vision-Language Pre-training on Fashion Domain

Paper: https://arxiv.org/abs/2103.16110

Code: https://github.com/mczhuge/Kaleido-BERT

4. HOTR: End-to-End Human-Object Interaction Detection with Transformers

Paper: https://arxiv.org/abs/2104.13682

Code: None

5. Multi-Modal Fusion Transformer for End-to-End Autonomous Driving

Paper: https://arxiv.org/abs/2104.09224

Code: https://github.com/autonomousvision/transfuser

6. Pose Recognition with Cascade Transformers

Paper: https://arxiv.org/abs/2104.06976

Code: https://github.com/mlpc-ucsd/PRTR

7. Variational Transformer Networks for Layout Generation

Paper: https://arxiv.org/abs/2104.02416

Code: None

8. LoFTR: Detector-Free Local Feature Matching with Transformers

Homepage: https://zju3dv.github.io/loftr/

Paper: https://arxiv.org/abs/2104.00680

Code: https://github.com/zju3dv/LoFTR

Commentary (in Chinese): CVPR 2021 | Can sparse textures be matched too? A quick look at LoFTR, a Transformer-based image feature matcher

9. Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers

Paper: https://arxiv.org/abs/2012.15840

Code: https://github.com/fudan-zvg/SETR

Commentary (in Chinese): CVPR 2021 | Transformer takes another stronghold! Fudan and collaborators propose SETR, a semantic segmentation network

10. Thinking Fast and Slow: Efficient Text-to-Visual Retrieval with Transformers

Paper: https://arxiv.org/abs/2103.16553

Code: None

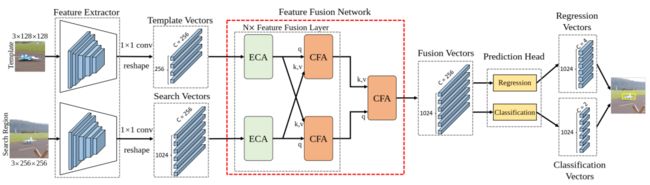

11. Transformer Tracking

Paper: https://arxiv.org/abs/2103.15436

Code: https://github.com/chenxin-dlut/TransT

12. HR-NAS: Searching Efficient High-Resolution Neural Architectures with Transformers

Paper(Oral): None

Code: https://github.com/dingmyu/HR-NAS

13. MIST: Multiple Instance Spatial Transformer

Paper: https://arxiv.org/abs/1811.10725

Code: None

14. Multimodal Motion Prediction with Stacked Transformers

Paper: https://arxiv.org/abs/2103.11624

Code: https://decisionforce.github.io/mmTransformer

15. Revamping cross-modal recipe retrieval with hierarchical Transformers and self-supervised learning

Paper: https://www.amazon.science/publications/revamping-cross-modal-recipe-retrieval-with-hierarchical-transformers-and-self-supervised-learning

Code: https://github.com/amzn/image-to-recipe-transformers

16. Transformer Meets Tracker: Exploiting Temporal Context for Robust Visual Tracking

Paper(Oral): https://arxiv.org/abs/2103.11681

Code: https://github.com/594422814/TransformerTrack

17. Pre-Trained Image Processing Transformer

Paper: https://arxiv.org/abs/2012.00364

Code: None

Commentary (in Chinese): CVPR 2021 | Transformer moves into low-level vision! Peking University, Huawei, and collaborators propose the pre-trained model IPT

18. End-to-End Video Instance Segmentation with Transformers

Paper(Oral): https://arxiv.org/abs/2011.14503

Code: https://github.com/Epiphqny/VisTR

Commentary (in Chinese): CVPR 2021 Oral | Another Transformer breakthrough! Meituan and collaborators propose VisTR, a video instance segmentation network

19. UP-DETR: Unsupervised Pre-training for Object Detection with Transformers

Paper(Oral): https://arxiv.org/abs/2011.09094

Code: https://github.com/dddzg/up-detr

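Commentary (in Chinese): CVPR 2021 Oral | Transformer strikes again! South China University of Technology and WeChat propose UP-DETR, an unsupervised pre-trained detector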

20. End-to-End Human Object Interaction Detection with HOI Transformer

Paper: https://arxiv.org/abs/2103.04503

Code: https://github.com/bbepoch/HoiTransformer

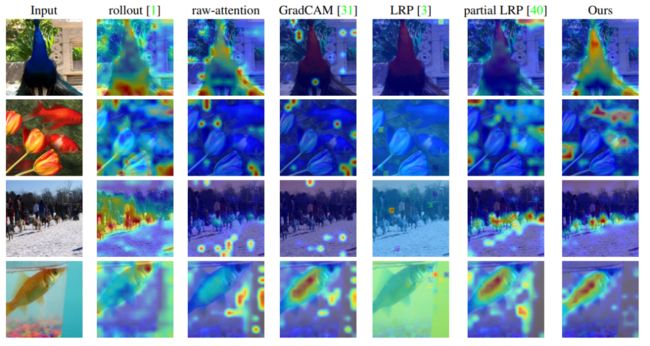

21. Transformer Interpretability Beyond Attention Visualization

Paper: https://arxiv.org/abs/2012.09838

Code: https://github.com/hila-chefer/Transformer-Explainability

22. Diverse Part Discovery: Occluded Person Re-Identification With Part-Aware Transformer

Paper: None

Code: None

23. LayoutTransformer: Scene Layout Generation With Conceptual and Spatial Diversity

Paper: None

Code: None

24. Line Segment Detection Using Transformers without Edges

Paper(Oral): https://arxiv.org/abs/2101.01909

Code: None

25. MaX-DeepLab: End-to-End Panoptic Segmentation With Mask Transformers

Paper: MaX-DeepLab: End-to-End Panoptic Segmentation with Mask Transformers

Code: None

26. SSTVOS: Sparse Spatiotemporal Transformers for Video Object Segmentation

Paper(Oral): https://arxiv.org/abs/2101.08833

Code: https://github.com/dukebw/SSTVOS

27. Facial Action Unit Detection With Transformers

Paper: None

Code: None

28. Clusformer: A Transformer Based Clustering Approach to Unsupervised Large-Scale Face and Visual Landmark Recognition

Paper: None

Code: None

29. Lesion-Aware Transformers for Diabetic Retinopathy Grading

Paper: None

Code: None

30. Topological Planning With Transformers for Vision-and-Language Navigation

Paper: https://arxiv.org/abs/2012.05292

Code: None

31. Adaptive Image Transformer for One-Shot Object Detection

Paper: None

Code: None

32. Multi-Stage Aggregated Transformer Network for Temporal Language Localization in Videos

Paper: None

Code: None

33. Taming Transformers for High-Resolution Image Synthesis

Homepage: https://compvis.github.io/taming-transformers/

Paper(Oral): https://arxiv.org/abs/2012.09841

Code: https://github.com/CompVis/taming-transformers

34. Self-Supervised Video Hashing via Bidirectional Transformers

Paper: None

Code: None

35. Point 4D Transformer Networks for Spatio-Temporal Modeling in Point Cloud Videos

Paper(Oral): https://hehefan.github.io/pdfs/p4transformer.pdf

Code: None

36. Gaussian Context Transformer

Paper: None

Code: None

37. General Multi-Label Image Classification With Transformers

Paper: https://arxiv.org/abs/2011.14027

Code: None

38. Bottleneck Transformers for Visual Recognition

Paper: https://arxiv.org/abs/2101.11605

Code: None

Commentary (in Chinese): CNN+Transformer! Google proposes BoTNet, a new backbone network that reaches 84.7% accuracy on ImageNet!

39. VLN BERT: A Recurrent Vision-and-Language BERT for Navigation

Paper(Oral): https://arxiv.org/abs/2011.13922

Code: https://github.com/YicongHong/Recurrent-VLN-BERT

40. Less Is More: ClipBERT for Video-and-Language Learning via Sparse Sampling

Paper(Oral): https://arxiv.org/abs/2102.06183

Code: https://github.com/jayleicn/ClipBERT

41. Self-attention based Text Knowledge Mining for Text Detection

Paper: None

Code: https://github.com/CVI-SZU/STKM

42. SSAN: Separable Self-Attention Network for Video Representation Learning

Paper: None

Code: None

43. Scaling Local Self-Attention For Parameter Efficient Visual Backbones

Paper(Oral): https://arxiv.org/abs/2103.12731

Code: None
