【深度学习】【YOLACT】代码解读二

【YOLACT】代码解读二

- Loss各种函数

-

- Localization Loss (Smooth L1)

- Confidence loss

Loss各种函数

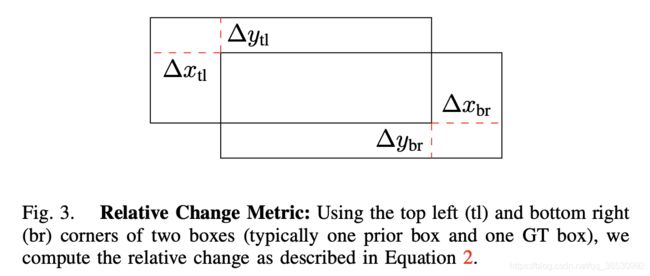

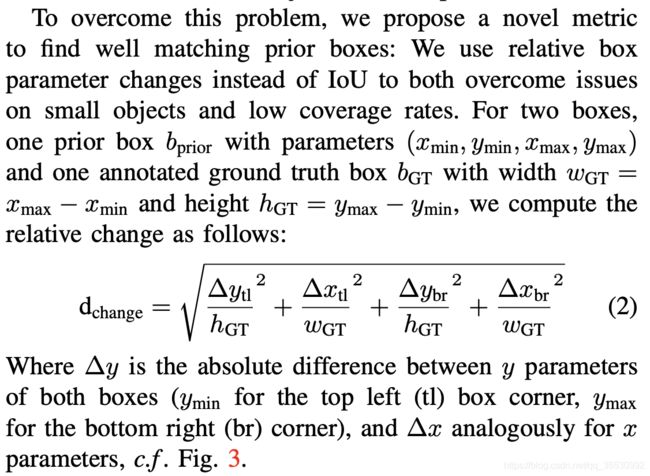

训练过程主要对loss函数(layers/modules/multibox_loss.py)进行学习,该函数先通过match函数对anchor进行正负样本分配,下面来具体看match函数(layers/box_utils.py)。那么匹配的过程最重要的部分就是计算gt box和预测框(或者priors先验框,取决于cfg.use_prediction_matching的设置)的交并比,但是代码中还出现以change(truths, decoded_priors)去代替交并比的计算,这个想法来源于box2pix(因为在SSD中交并比的阈值设置为0.5,而在cityscapes数据集中只有40%的gt box和先验框的交并比超过这个阈值),计算公式如下:

def change(gt, priors):

num_priors = priors.size(0)

num_gt = gt.size(0)

gt_w = (gt[:, 2] - gt[:, 0])[:, None].expand(num_gt, num_priors)

gt_h = (gt[:, 3] - gt[:, 1])[:, None].expand(num_gt, num_priors)

gt_mat = gt[:, None, :].expand(num_gt, num_priors, 4)

pr_mat = priors[None, :, :].expand(num_gt, num_priors, 4)

diff = gt_mat - pr_mat

diff[:, :, 0] /= gt_w

diff[:, :, 2] /= gt_w

diff[:, :, 1] /= gt_h

diff[:, :, 3] /= gt_h

return -torch.sqrt( (diff ** 2).sum(dim=2) )

# 返回的是负数是因为match阶段取得是最大值

然后对于batch的每一张图片,基于上述计算的结果overlaps,每次循环按行取最大值得到gt和与其overlaps最大的prior所对应的值和索引,再找到其中最大的值,之后将该值对应的gt所在行置为-1,与之overlaps最大的prior所在列也置为-1,这样在之后的循环中就不会用到该gt和该prior,这样得到的结果是确保每一个gt都和与其overlaps最大的prior相匹配,剩下的prior就按列取最大值所对应的gt。最后小于pos_thresh为neutral样本,小于neg_thresh为背景。返回的loc_t为每一个图片的每一个prior所分配的gt的坐标(offsets),conf_t为每一个图片的每一个prior的标签(类别,背景,neutral),idx_t为每一个图片的每一个prior所对应的gt索引。

接下来就是计算各个损失(按照默认参数设置)。

Localization Loss (Smooth L1)

定位的损失包括了框的损失,以及mask的损失,因为cfg.mask_type = mask_type.lincomb,所以来看看lincomb_mask_loss,该部分先对每一张图片的gt_masks(大小为[num_objs,im_height,im_width])resize到([138,138,num_objs])并且二值化,然后根据正的prior所在的index即(cur_pos)将正的prior所对应的组合系数(proto_coef)取出来,然后根据正的prior所对应的gt的序号(pos_idx_t)将gt对应的mask(mask_t)和label(label_t)取出来。将proto_masks和proto_coef相乘便得到[138, 138, positive_objs]的pred_masks,也就是每个正的prior所预测的mask,并用gt boxes或者predict boxes对其进行“crop”(将没在框内的数据都化为0),然后计算交叉熵损失。这里有个设置cfg.use_maskiou来源于Mask Scoring R-CNN(CVPR2019)里面的思想,作者认为使用分类置信度来度量mask的质量是不合适的,因为它只用于区分proposal的语义类别,而无法知道实例mask的实际质量和完整性,所以添加了一个给mask打分的结构,详细请参考Mask Scoring R-CNN[详解],这里也是YOLACT++相对于原版的改进。代码中,如果设置为true,则返回的是mask的损失以及[maskiou_net_input, maskiou_t, label_t],其中maskiou_net_input为该batch图片的所有正的并且满足一定面积条件(>cfg.discard_mask_area)的prior所预测mask,大小为[select_pos_objs, 1, 138, 138];maskiou_t为这些mask和gt mask的iou值,大小为[select_pos_objs];label_t为这些mask的类别,大小也为[select_pos_objs],因为即使要打分,这个分数也只能属于一个类别。

Confidence loss

如果用focal loss(即cfg.use_focal_loss为true,实际上默认为false),有三种形式的focal loss。

focal_conf_sigmoid_loss

在这部分实现中,首先对标签进行了one-hot形式的转化,定义 x x x为预测值,那么:

当target=1时,损失为 α × t a r g e t × ( 1 − s i g m o i d ( x ) ) γ × l o g ( s i g m o i d ( x ) ) \alpha \times target\times (1-sigmoid(x))^\gamma \times log(sigmoid(x)) α×target×(1−sigmoid(x))γ×log(sigmoid(x))

当target=0时,损失为 ( 1 − α ) × ( 1 − t a r g e t ) × s i g m o i d ( x ) γ × l o g ( 1 − s i g m o i d ( x ) ) (1-\alpha) \times (1-target)\times sigmoid(x)^\gamma \times log(1-sigmoid(x)) (1−α)×(1−target)×sigmoid(x)γ×log(1−sigmoid(x))

利用 1 − s i g m o i d ( x ) = s i g m o i d ( − x ) 1-sigmoid(x)=sigmoid(-x) 1−sigmoid(x)=sigmoid(−x)进行向量化后:

当target=1时,损失为 α × t a r g e t × ( 1 − s i g m o i d ( x ) ) γ × l o g ( s i g m o i d ( x ) ) \alpha \times target\times (1-sigmoid(x))^\gamma \times log(sigmoid(x)) α×target×(1−sigmoid(x))γ×log(sigmoid(x))

当target=0时,损失为 ( 1 − α ) × ( 1 − t a r g e t ) × ( 1 − s i g m o i d ( − x ) ) γ × l o g ( s i g m o i d ( − x ) ) (1-\alpha) \times (1-target)\times (1-sigmoid(-x))^\gamma \times log(sigmoid(-x)) (1−α)×(1−target)×(1−sigmoid(−x))γ×log(sigmoid(−x))

def focal_conf_sigmoid_loss(self, conf_data, conf_t):

"""

Focal loss but using sigmoid like the original paper.

Note: To make things mesh easier, the network still predicts 81 class confidences in this mode.

Because retinanet originally only predicts 80, we simply just don't use conf_data[..., 0]

conf_data : torch.Size([batchsize, 19248, 81])

conf_t : torch.Size([batchsize, 19248])

"""

num_classes = conf_data.size(-1)

conf_t = conf_t.view(-1) # [batch_size*num_priors]

conf_data = conf_data.view(-1, num_classes) # [batch_size*num_priors, num_classes]

# Ignore neutral samples (class < 0)

keep = (conf_t >= 0).float()

conf_t[conf_t < 0] = 0 # can't mask with -1, so filter that out, neutral sample is denoted as -1

# Compute a one-hot embedding of conf_t

# From https://github.com/kuangliu/pytorch-retinanet/blob/master/utils.py

conf_one_t = torch.eye(num_classes, device=conf_t.get_device())[conf_t] # torch.Size([pos_sample_nums, 81])

conf_pm_t = conf_one_t * 2 - 1 # -1 if background, +1 if forground for specific class

logpt = F.logsigmoid(conf_data * conf_pm_t) # note: 1 - sigmoid(x) = sigmoid(-x)

pt = logpt.exp()

at = cfg.focal_loss_alpha * conf_one_t + (1 - cfg.focal_loss_alpha) * (1 - conf_one_t) # torch.Size([pos_sample_nums, 81])

at[..., 0] = 0 # Set alpha for the background class to 0 because sigmoid focal loss doesn't use it

loss = -at * (1 - pt) ** cfg.focal_loss_gamma * logpt

loss = keep * loss.sum(dim=-1)

return cfg.conf_alpha * loss.sum()

focal_conf_objectness_loss

def focal_conf_objectness_loss(self, conf_data, conf_t):

"""

Instead of using softmax, use class[0] to be the objectness score and do sigmoid focal loss on that.

Then for the rest of the classes, softmax them and apply CE for only the positive examples.

If class[0] = 1 implies forground and class[0] = 0 implies background then you achieve something

similar during test-time to softmax by setting class[1:] = softmax(class[1:]) * class[0] and invert class[0].

"""

conf_t = conf_t.view(-1) # [batch_size*num_priors]

conf_data = conf_data.view(-1, conf_data.size(-1)) # [batch_size*num_priors, num_classes]

# Ignore neutral samples (class < 0)

keep = (conf_t >= 0).float()

conf_t[conf_t < 0] = 0 # so that gather doesn't drum up a fuss

background = (conf_t == 0).float()

at = (1 - cfg.focal_loss_alpha) * background + cfg.focal_loss_alpha * (1 - background)

# conf_data[:, 0] means foreground score

# 前项代表前景prior属于前景的分数,后项代表背景prior属于背景的分数

# 前景prior属于前景的分数越大,损失应该越小

# 背景prior属于前景的分数越大,取负号后应该越小,损失就越大

logpt = F.logsigmoid(conf_data[:, 0]) * (1 - background) + F.logsigmoid(-conf_data[:, 0]) * background

pt = logpt.exp()

obj_loss = -at * (1 - pt) ** cfg.focal_loss_gamma * logpt

# All that was the objectiveness loss--now time for the class confidence loss

pos_mask = conf_t > 0

conf_data_pos = (conf_data[:, 1:])[pos_mask] # Now this has just 80 classes

conf_t_pos = conf_t[pos_mask] - 1 # So subtract 1 here

class_loss = F.cross_entropy(conf_data_pos, conf_t_pos, reduction='sum')

return cfg.conf_alpha * (class_loss + (obj_loss * keep).sum())

focal_conf_loss

def focal_conf_loss(self, conf_data, conf_t):

"""

Focal loss as described in https://arxiv.org/pdf/1708.02002.pdf

Adapted from https://github.com/clcarwin/focal_loss_pytorch/blob/master/focalloss.py

Note that this uses softmax and not the original sigmoid from the paper.

"""

conf_t = conf_t.view(-1) # [batch_size*num_priors]

conf_data = conf_data.view(-1, conf_data.size(-1)) # [batch_size*num_priors, num_classes]

# Ignore neutral samples (class < 0)

keep = (conf_t >= 0).float()

conf_t[conf_t < 0] = 0 # so that gather doesn't drum up a fuss # [batch_size*num_priors, 1]

logpt = F.log_softmax(conf_data, dim=-1)

logpt = logpt.gather(1, conf_t.unsqueeze(-1)) #torch.Size([pos_sample_nums, 1])

# logpt[i][j] = logpt[i][conf_t[i][j]]

logpt = logpt.view(-1) # the value conrresponding to gt_label

pt = logpt.exp()

background = (conf_t == 0).float()

at = (1 - cfg.focal_loss_alpha) * background + cfg.focal_loss_alpha * (1 - background)

loss = -at * (1 - pt) ** cfg.focal_loss_gamma * logpt

# See comment above for keep

return cfg.conf_alpha * (loss * keep).sum()

l o s s = − l o g ( 属 于 各 l a b e l 的 分 数 ) × ( 1 − 属 于 各 l a b e l 的 分 数 ) γ × α ′ loss = -log(属于各label的分数)\times(1-属于各label的分数)^\gamma\times \alpha ^ {'} loss=−log(属于各label的分数)×(1−属于各label的分数)γ×α′

当属于某label的分数越大,说明越可能属于这一类,那么取log取负号后loss会越小,相比于0.7的置信度,0.6的loss会大一些,因为更难区分。

如果不用focal loss,有两种。分别是conf_objectness_loss和ohem_conf_loss。

conf_objectness_loss

def conf_objectness_loss(self, conf_data, conf_t, batch_size, loc_p, loc_t, priors):

"""

Instead of using softmax, use class[0] to be p(obj) * p(IoU) as in YOLO.

Then for the rest of the classes, softmax them and apply CE for only the positive examples.

conf_data : torch.Size([batchsize, 19248, 81])

conf_t : torch.Size([batchsize, 19248])

loc_p : torch.Size([positive_prior_nums, 4])

loc_t : torch.Size([positive_prior_nums, 4]) encoded

priors : torch.Size([19248, 4])

"""

conf_t = conf_t.view(-1) # [batch_size*num_priors]

conf_data = conf_data.view(-1, conf_data.size(-1)) # [batch_size*num_priors, num_classes]

pos_mask = (conf_t > 0)

neg_mask = (conf_t == 0) # without neutral samples

obj_data = conf_data[:, 0]

obj_data_pos = obj_data[pos_mask]

obj_data_neg = obj_data[neg_mask]

# Don't be confused, this is just binary cross entropy similified

# 代表属于背景的priors,该值越大,说明p(obj) * p(IoU)越大,不可能属于背景,loss就越大。

obj_neg_loss = - F.logsigmoid(-obj_data_neg).sum()

with torch.no_grad():

pos_priors = priors.unsqueeze(0).expand(batch_size, -1, -1).reshape(-1, 4)[pos_mask, :]# [positive_prior_nums, 4]

boxes_pred = decode(loc_p, pos_priors, cfg.use_yolo_regressors)

boxes_targ = decode(loc_t, pos_priors, cfg.use_yolo_regressors)

iou_targets = elemwise_box_iou(boxes_pred, boxes_targ)

# 交叉熵的变种,希望预测值sigmoid(obj_data_pos)接近iou_targets

obj_pos_loss = - iou_targets * F.logsigmoid(obj_data_pos) - (1 - iou_targets) * F.logsigmoid(-obj_data_pos)

obj_pos_loss = obj_pos_loss.sum()

# All that was the objectiveness loss--now time for the class confidence loss

conf_data_pos = (conf_data[:, 1:])[pos_mask] # Now this has just 80 classes

conf_t_pos = conf_t[pos_mask] - 1 # So subtract 1 here

class_loss = F.cross_entropy(conf_data_pos, conf_t_pos, reduction='sum')

return cfg.conf_alpha * (class_loss + obj_pos_loss + obj_neg_loss)

ohem_conf_loss

这部分主要是论文中常见的OHEM,即Online Hard Negative Mining。也就是对比较难分类的负样本挖掘,所谓难分类就是在训练过程中,loss比较大,容易将负样本看成正样本的样本,也就是说我们得先计算一下现在的负样本哪些产生的loss比较大。有两种方法,一种是通过“max(softmax) along classes > 0 ”,表示最不属于背景的负样本,如果属于前景任何一类的分数越大,那么这个负样本应该损失更大,越难分类。另一种通过“-log(softmax(class 0 confidence))”,其实是softmax loss函数( L = − ∑ j = 1 T y j l o g s j L=-\sum^T_{j=1}y_jlogs_j L=−∑j=1Tyjlogsj)的简化形式( L = − l o g s j L=-logs_j L=−logsj)(因为标签中只有一项是1,其余都是0)j=0时就是该损失,代码中log_sum_exp函数由下式所得:

l o g ( e x j ∑ i = 1 n e x i ) = l o g ( e x j ) − l o g ( ∑ i = 1 n e x i ) = x j − l o g ( ∑ i = 1 n e x i ) log(\frac{e^{x_j}}{\sum^n_{i=1}e^{x_i} })=log(e^{x_j})-log(\sum^n_{i=1}e^{x_i})=x_j-log(\sum^n_{i=1}e^{x_i}) log(∑i=1nexiexj)=log(exj)−log(i=1∑nexi)=xj−log(i=1∑nexi)

然后就根据一定的比例选出一定的负样本计算loss。

def ohem_conf_loss(self, conf_data, conf_t, pos, num):

'''

conf_data : torch.Size([batchsize, 19248, 81])

conf_t : torch.Size([batchsize, 19248])

pos : pos = conf_t > 0 torch.Size([batchsize, 19248])

num : batchsize

'''

# Compute max conf across batch for hard negative mining

batch_conf = conf_data.view(-1, self.num_classes)

if cfg.ohem_use_most_confident:

# i.e. max(softmax) along classes > 0

batch_conf = F.softmax(batch_conf, dim=1)

loss_c, _ = batch_conf[:, 1:].max(dim=1)

else:

# i.e. -softmax(class 0 confidence) # log_sum_exp(batch_conf) = log(e^c0+e^c1+e^c2)

loss_c = log_sum_exp(batch_conf) - batch_conf[:, 0]

# Hard Negative Mining

loss_c = loss_c.view(num, -1)

loss_c[pos] = 0 # filter out pos boxes

loss_c[conf_t < 0] = 0 # filter out neutrals (conf_t = -1)

_, loss_idx = loss_c.sort(1, descending=True)

_, idx_rank = loss_idx.sort(1) # idx_rank torch.Size([batchsize, 19248]) 找出矩阵每个元素在升序或降序排列中的位置

num_pos = pos.long().sum(1, keepdim=True)

num_neg = torch.clamp(self.negpos_ratio*num_pos, max=pos.size(1)-1)

neg = idx_rank < num_neg.expand_as(idx_rank)

# Just in case there aren't enough negatives, don't start using positives as negatives

neg[pos] = 0

neg[conf_t < 0] = 0 # Filter out neutrals

# Confidence Loss Including Positive and Negative Examples

pos_idx = pos.unsqueeze(2).expand_as(conf_data)

neg_idx = neg.unsqueeze(2).expand_as(conf_data)

conf_p = conf_data[(pos_idx+neg_idx).gt(0)].view(-1, self.num_classes)

targets_weighted = conf_t[(pos+neg).gt(0)]

loss_c = F.cross_entropy(conf_p, targets_weighted, reduction='none')

if cfg.use_class_balanced_conf: #false

# Lazy initialization

if self.class_instances is None:

self.class_instances = torch.zeros(self.num_classes, device=targets_weighted.device)

classes, counts = targets_weighted.unique(return_counts=True)

for _cls, _cnt in zip(classes.cpu().numpy(), counts.cpu().numpy()):

self.class_instances[_cls] += _cnt

self.total_instances += targets_weighted.size(0)

weighting = 1 - (self.class_instances[targets_weighted] / self.total_instances)

weighting = torch.clamp(weighting, min=1/self.num_classes)

# If you do the math, the average weight of self.class_instances is this

avg_weight = (self.num_classes - 1) / self.num_classes

loss_c = (loss_c * weighting).sum() / avg_weight

else:

loss_c = loss_c.sum()

return cfg.conf_alpha * loss_c