【PyTorch】Knowledge Distillation with PyTorch


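- I. Principles of Knowledge Distillation

- 1. Distillation with `softmax`

- 2. Knowledge transfer: teacher knowledge → student knowledge

- II. Implementing Knowledge Distillation

- 1. Import the packages

- 2. Set the random seed

- 3. Load the MNIST dataset

- 4. Define the teacher model

- 5. Set up the model

- 6. Train the teacher model

- 7. Define and train the student model

- 8. Setup before distillation training

- 9. Start training

- Appendix

- 1. About `import torch.nn as nn`

- 2. About `nn.functional`

- 3. About `from torch.utils.data import DataLoader`

- 4. About `model.train()`

- 5. About `optimizer.zero_grad()`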

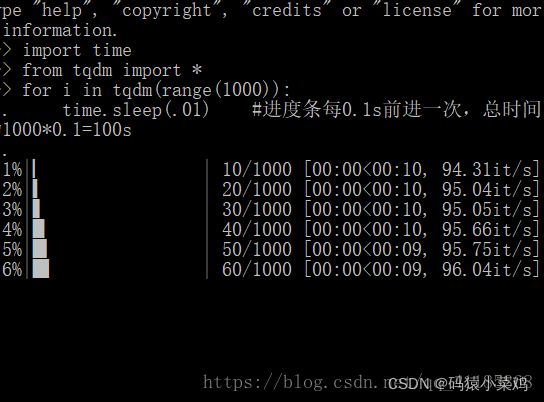

- 6. About the tqdm library

- 7. About Python `numpy.indices()`

- 8. About `loss.backward()`

- Source code

- Next post: [【PyTorch】Knowledge Distillation with PyTorch, Part 2](https://blog.csdn.net/weixin_47160526/article/details/123581995)

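I. Principles of Knowledge Distillation

1. Distillation with softmax:

Softmax with temperature:

$$q_i=\frac{e^{z_i/T}}{\sum_{j=1}^{n}e^{z_j/T}}$$

where z_i is the logit for class i and n is the number of classes.

T: the distillation temperature.

When T = 1 this is the ordinary softmax; a larger T produces a softer (flatter) probability distribution over the classes.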
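To see what the temperature does, the sketch below applies the formula above to one made-up logit vector (the values `[3.0, 1.0, 0.2]` are illustrative only) at several temperatures. Dividing by a larger T flattens the distribution, so the smaller logits receive more probability mass; with T = 1 the result matches the ordinary `torch.softmax`.

```python
import torch

def softmax_with_temperature(logits: torch.Tensor, T: float) -> torch.Tensor:
    """q_i = exp(z_i / T) / sum_j exp(z_j / T), computed along the last dimension."""
    return torch.softmax(logits / T, dim=-1)

# Made-up logits for a 3-class problem (illustrative values only)
z = torch.tensor([3.0, 1.0, 0.2])

for T in (1.0, 3.0, 7.0):
    q = softmax_with_temperature(z, T)
    # Higher T -> flatter distribution; the probabilities still sum to 1
    print(f"T={T}: {[round(p, 3) for p in q.tolist()]}")
```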

2. Knowledge transfer: teacher knowledge → student knowledge
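In this post the transfer happens through the training objective used in steps 8 and 9: the student minimizes a weighted sum of a hard loss (cross-entropy against the true labels $y$) and a soft loss (KL divergence from the teacher's softened distribution to the student's). Writing $z^t$ and $z^s$ for the teacher and student logits and reusing the temperature softmax above:

$$q^t_i=\frac{e^{z^t_i/T}}{\sum_{j=1}^{n}e^{z^t_j/T}},\qquad q^s_i=\frac{e^{z^s_i/T}}{\sum_{j=1}^{n}e^{z^s_j/T}}$$

$$\mathcal{L}=\alpha\,\mathrm{CE}\big(y,\operatorname{softmax}(z^s)\big)+(1-\alpha)\sum_{i=1}^{n}q^t_i\log\frac{q^t_i}{q^s_i}$$

Here $\alpha$ corresponds to `alpha = 0.3` and $T$ to `temp = 7` in the code. The original formulation by Hinton et al. also multiplies the soft term by $T^2$ to keep its gradients on a comparable scale as $T$ changes; the code in this post omits that factor.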

II. Implementing Knowledge Distillation

1. Import the packages

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import torch
import torch.nn.functional as F
import torchvision
from torch import nn
from torchvision import transforms
from torch.utils.data import DataLoader
# from torchinfo import summary
from tqdm import tqdm

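2. Set the random seed

# Set the random seed so that results are reproducible
torch.manual_seed(0)
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")  # use a (cloud) GPU if one is available
# Let cuDNN benchmark and pick the fastest convolution algorithms
# (this only affects convolutions on the GPU; the fully connected models below run on the CPU)
torch.backends.cudnn.benchmark = True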
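A quick way to see what `torch.manual_seed` buys you: reseeding with the same value makes the random number generator produce the same sequence again, which is what makes weight initialization and the `shuffle=True` batch order repeatable between runs. Note that `cudnn.benchmark=True` may select different GPU kernels from run to run, so the seed alone does not guarantee bit-exact results on a GPU.

```python
import torch

torch.manual_seed(0)
a = torch.randn(3)          # first draw after seeding
torch.manual_seed(0)        # reset the generator to the same state
b = torch.randn(3)          # identical to a
c = torch.randn(3)          # the generator has advanced, so this draw differs

print(torch.equal(a, b))    # True
print(torch.equal(b, c))
```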

3. Load the MNIST dataset

When this runs, the MNIST dataset is downloaded into the "dataset/" folder (if it is not already there).

# Load the training set
train_dataset = torchvision.datasets.MNIST(
    root="dataset/",                  # directory where MNIST is stored
    train=True,                       # train=True loads the training set
    transform=transforms.ToTensor(),  # convert each PIL image to a float tensor of shape [1, 28, 28] with values in [0, 1]
    download=True
)

# Load the test set
test_dataset = torchvision.datasets.MNIST(
    root="dataset/",
    train=False,                      # train=False loads the test set
    transform=transforms.ToTensor(),
    download=True
)

train_loder = DataLoader(dataset=train_dataset, batch_size=32, shuffle=True)
test_loder = DataLoader(dataset=test_dataset, batch_size=32, shuffle=False)  # each iteration yields batch_size samples

4. Define the teacher model

class TeacherModel(nn.Module):
    # Fully connected teacher: fc1 and fc2 are hidden layers with 1200 units each, fc3 is the output layer
    def __init__(self, in_channels=1, num_classes=10):
        super(TeacherModel, self).__init__()
        self.relu = nn.ReLU()
        self.fc1 = nn.Linear(784, 1200)
        self.fc2 = nn.Linear(1200, 1200)
        self.fc3 = nn.Linear(1200, num_classes)
        self.dropout = nn.Dropout(p=0.5)  # dropout to reduce overfitting

    def forward(self, x):
        x = x.view(-1, 784)  # flatten [batch, 1, 28, 28] into [batch, 784]
        x = self.fc1(x)
        x = self.relu(x)  # ReLU (rectified linear unit) activation
        x = self.fc2(x)
        x = self.dropout(x)
        x = self.relu(x)
        x = self.fc3(x)  # raw logits, no softmax
        return x

5. Set up the model

model = TeacherModel()
criterion = nn.CrossEntropyLoss()  # cross-entropy loss
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)  # Adam optimizer with learning rate 1e-4

6. Train the teacher model

epochs = 6  # train for 6 epochs
for epoch in range(epochs):
    model.train()
    for data, targets in tqdm(train_loder):
        # Forward pass
        preds = model(data)
        loss = criterion(preds, targets)

        # Backward pass and weight update
        optimizer.zero_grad()  # reset the gradients to zero
        loss.backward()
        optimizer.step()

    # Evaluate on the test set
    model.eval()
    num_correct = 0
    num_samples = 0
    with torch.no_grad():
        for x, y in test_loder:
            preds = model(x)
            predictions = preds.max(1).indices
            num_correct += (predictions == y).sum()
            num_samples += predictions.size(0)
        acc = (num_correct / num_samples).item()
    model.train()
    print("Epoch:{}\t Accuracy:{:.4f}".format(epoch + 1, acc))

teacher_model = model
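The accuracy loop above is repeated for every model in this post. One way to avoid copy-paste mistakes (such as forgetting to reset the counters or to call `model.eval()`) is to factor it into a function. `evaluate` below is a hypothetical helper, not part of the original code; it assumes the model returns logits of shape `[batch_size, num_classes]`, and the toy data in the usage lines is random.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

@torch.no_grad()
def evaluate(model: nn.Module, loader: DataLoader) -> float:
    """Top-1 accuracy of `model` over every batch in `loader` (hypothetical helper)."""
    was_training = model.training
    model.eval()                       # disable dropout / use BN running statistics
    num_correct, num_samples = 0, 0    # fresh counters on every call
    for x, y in loader:
        predictions = model(x).argmax(dim=1)
        num_correct += (predictions == y).sum().item()
        num_samples += y.size(0)
    model.train(was_training)          # restore the previous mode
    return num_correct / num_samples

# Usage on random toy data; a real run would pass e.g. test_loder instead
toy_loader = DataLoader(TensorDataset(torch.randn(10, 784), torch.randint(0, 10, (10,))), batch_size=4)
toy_model = nn.Linear(784, 10)
print(f"Accuracy: {evaluate(toy_model, toy_loader):.4f}")
```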

7. Define and train the student model

# Student model: same structure as the teacher, but only 20 units per hidden layer
class StudentModel(nn.Module):
    def __init__(self, inchannels=1, num_class=10):
        super(StudentModel, self).__init__()
        self.relu = nn.ReLU()
        self.fc1 = nn.Linear(784, 20)
        self.fc2 = nn.Linear(20, 20)
        self.fc3 = nn.Linear(20, num_class)
        self.dropout = nn.Dropout(p=0.5)

    def forward(self, x):
        x = x.view(-1, 784)
        x = self.fc1(x)
        x = self.dropout(x)
        x = self.relu(x)
        x = self.fc2(x)
        x = self.dropout(x)
        x = self.relu(x)
        x = self.fc3(x)
        return x
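The point of distillation here is that the student is far smaller than the teacher. Counting trainable parameters makes the gap concrete. So that the snippet runs on its own, it uses `nn.Sequential` stand-ins with the same `nn.Linear` sizes as `TeacherModel` and `StudentModel` above; ReLU and Dropout have no parameters, so the counts are the same as for those classes.

```python
from torch import nn

def count_params(model: nn.Module) -> int:
    """Number of trainable parameters (weights + biases)."""
    return sum(p.numel() for p in model.parameters() if p.requires_grad)

# Stand-ins with the same Linear layer sizes as TeacherModel / StudentModel
teacher_like = nn.Sequential(nn.Linear(784, 1200), nn.ReLU(), nn.Linear(1200, 1200),
                             nn.Dropout(0.5), nn.ReLU(), nn.Linear(1200, 10))
student_like = nn.Sequential(nn.Linear(784, 20), nn.ReLU(), nn.Linear(20, 20),
                             nn.ReLU(), nn.Linear(20, 10))

print(count_params(teacher_like))   # 2395210
print(count_params(student_like))   # 16330
```

The teacher has roughly 147 times as many parameters as the student.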

model = StudentModel()  # first train a student from scratch as a baseline

# Loss function and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

epochs = 3
# Train the weights on the training set
for epoch in range(epochs):
    model.train()
    for data, targets in tqdm(train_loder):
        # Forward pass
        preds = model(data)
        loss = criterion(preds, targets)

        # Backward pass and weight update
        optimizer.zero_grad()  # reset the gradients to zero
        loss.backward()
        optimizer.step()

    # Evaluate on the test set: switch to eval mode and reset the counters every epoch
    model.eval()
    num_correct = 0
    num_samples = 0
    with torch.no_grad():
        for x, y in test_loder:
            preds = model(x)
            predictions = preds.max(1).indices
            num_correct += (predictions == y).sum()
            num_samples += predictions.size(0)
        acc = (num_correct / num_samples).item()
    model.train()
    print("Epoch:{}\t Accuracy:{:.4f}".format(epoch + 1, acc))

student_model_scratch = model

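Training the student model with knowledge distillation

8. Setup before distillation training

# The pre-trained teacher model, in evaluation mode
teacher_model.eval()

# A fresh student model
model = StudentModel()
model.train()

# Distillation temperature
temp = 7

# hard_loss: cross-entropy against the true labels
hard_loss = nn.CrossEntropyLoss()

# Weight of hard_loss (soft_loss gets 1 - alpha)
alpha = 0.3

# soft_loss: KL divergence between the softened teacher and student distributions
soft_loss = nn.KLDivLoss(reduction="batchmean")

optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)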
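`nn.KLDivLoss` is easy to misuse: per the PyTorch documentation, its first argument (the input) must be log-probabilities, while the target is, by default, plain probabilities. With `reduction="batchmean"` it sums over classes and averages over the batch, which matches the mathematical KL divergence. The check below uses random logits as stand-ins for model outputs and compares the result with the formula computed by hand.

```python
import torch
import torch.nn.functional as F
from torch import nn

torch.manual_seed(0)
T = 7.0
student_logits = torch.randn(4, 10)   # random stand-ins for model outputs
teacher_logits = torch.randn(4, 10)

kl = nn.KLDivLoss(reduction="batchmean")
log_q_s = F.log_softmax(student_logits / T, dim=1)   # input: LOG-probabilities
q_t = F.softmax(teacher_logits / T, dim=1)           # target: probabilities

loss = kl(log_q_s, q_t)

# Same quantity by hand: batch mean of sum_i q_t * (log q_t - log q_s)
manual = (q_t * (q_t.log() - log_q_s)).sum(dim=1).mean()
print(loss.item(), manual.item())

# Identical distributions give (numerically) zero divergence
print(kl(F.log_softmax(teacher_logits / T, dim=1), q_t).item())
```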

9. Start training

epochs = 3
for epoch in range(epochs):
    for data, targets in tqdm(train_loder):
        # Teacher prediction (no gradients needed)
        with torch.no_grad():
            teacher_preds = teacher_model(data)

        # Prediction of the student being distilled (model)
        student_preds = model(data)
        student_loss = hard_loss(student_preds, targets)

        # Soft loss on the temperature-softened outputs:
        # KLDivLoss expects log-probabilities as input and probabilities as target
        distillation_loss = soft_loss(
            F.log_softmax(student_preds / temp, dim=1),
            F.softmax(teacher_preds / temp, dim=1)
        )

        # Weighted sum of hard_loss and soft_loss
        loss = alpha * student_loss + (1 - alpha) * distillation_loss

        # Backward pass and weight update
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    # Evaluate the distilled student on the test set
    model.eval()
    num_correct = 0
    num_samples = 0
    with torch.no_grad():
        for x, y in test_loder:
            preds = model(x)
            predictions = preds.max(1).indices
            num_correct += (predictions == y).sum()
            num_samples += predictions.size(0)
        acc = (num_correct / num_samples).item()
    model.train()
    print("Epoch:{}\t Accuracy:{:.4f}".format(epoch + 1, acc))
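The loss computation in the loop can also be written as a standalone function, which makes it easy to test in isolation. `kd_loss` is a hypothetical helper (not from the original code) that combines the same two terms with the same meaning of `alpha` and `temp`; the optional `scale_by_t2` flag adds the $T^2$ factor from Hinton et al. and is off by default to match this post.

```python
import torch
import torch.nn.functional as F

def kd_loss(student_logits, teacher_logits, targets, temp=7.0, alpha=0.3, scale_by_t2=False):
    """alpha * hard CE + (1 - alpha) * KL(teacher_T || student_T)  (hypothetical helper)."""
    hard = F.cross_entropy(student_logits, targets)
    soft = F.kl_div(
        F.log_softmax(student_logits / temp, dim=1),   # student: log-probabilities
        F.softmax(teacher_logits / temp, dim=1),       # teacher: probabilities
        reduction="batchmean",
    )
    if scale_by_t2:
        soft = soft * temp ** 2    # keeps soft-target gradients comparable across temperatures
    return alpha * hard + (1 - alpha) * soft

# Toy check on random logits: gradients reach the student; teacher outputs are constants
torch.manual_seed(0)
student_logits = torch.randn(8, 10, requires_grad=True)
with torch.no_grad():
    teacher_logits = torch.randn(8, 10)
targets = torch.randint(0, 10, (8,))

loss = kd_loss(student_logits, teacher_logits, targets)
loss.backward()
print(loss.item(), student_logits.grad.shape)
```

If the student's logits equal the teacher's, the soft term vanishes and the loss reduces to `alpha` times the ordinary cross-entropy.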

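Appendix

1. About import torch.nn as nn

torch.nn is PyTorch's neural network module; the fully connected layers in this post come from its nn.Linear class. nn.Linear transforms the last dimension of its input, so in image tasks the input of a fully connected layer is usually flattened into a two-dimensional tensor of shape [batch_size, size], whereas convolutional layers (nn.Conv2d) take batched four-dimensional tensors of shape [batch_size, channels, height, width].

in_features is the size of each input sample, i.e. size in the input shape [batch_size, size].

out_features is the size of each output sample: the output has shape [batch_size, out_features], and out_features is also the number of neurons in the layer. In terms of tensor shapes, the layer maps an input of shape [batch_size, in_features] to an output of shape [batch_size, out_features].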

import torch as t
from torch import nn

# in_features is determined by the input shape; out_features determines the output shape
connected_layer = nn.Linear(in_features=64*64*3, out_features=1)

# Assume an input image of shape [64, 64, 3]
input = t.randn(1, 64, 64, 3)

# Flatten the 4-D tensor to 2-D before feeding it to the fully connected layer
input = input.view(1, 64*64*3)
print(input.shape)

output = connected_layer(input)  # apply the fully connected layer
print(output.shape)

# Output:
# torch.Size([1, 12288])
# torch.Size([1, 1])
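What the fully connected layer computes is $y = xW^\top + b$, where `weight` has shape `[out_features, in_features]` and `bias` has shape `[out_features]`. The check below uses small, arbitrary sizes (4 inputs, 3 outputs) to confirm this by hand, and also shows that `nn.Linear` accepts extra leading dimensions and only transforms the last one.

```python
import torch
from torch import nn

layer = nn.Linear(in_features=4, out_features=3)
print(layer.weight.shape, layer.bias.shape)   # torch.Size([3, 4]) torch.Size([3])

x = torch.randn(2, 4)                          # [batch_size, in_features]
y = layer(x)                                   # [batch_size, out_features]
y_manual = x @ layer.weight.T + layer.bias     # the same affine map written out
print(y.shape, torch.allclose(y, y_manual, atol=1e-6))   # torch.Size([2, 3]) True

# Only the last dimension is transformed; leading dimensions are kept
print(layer(torch.randn(5, 2, 4)).shape)       # torch.Size([5, 2, 3])
```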

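2. About nn.functional

import torch.nn.functional as F

torch.nn.functional provides the stateless, function versions of the operations behind the torch.nn layers, including many loss functions and activation functions.

import torch
import torch.nn.functional as F
model = torch.nn.Linear(784, 10)  # hypothetical toy model for illustration
x = torch.randn(4, 784)  # 4 random inputs
y = torch.randint(0, 10, (4,))  # 4 random class labels
loss_func = F.cross_entropy
loss = loss_func(model(x), y)
loss.backward()

Here loss.backward() computes the gradients of the loss with respect to the model's parameters (weights and biases) and stores them in each parameter's .grad attribute; it does not update the parameters themselves, which is the job of optimizer.step().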
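The functional form and the module form compute the same thing; the module (`nn.CrossEntropyLoss`) just stores its configuration. The sketch below, on random logits, also shows that `F.cross_entropy` equals `F.log_softmax` followed by `F.nll_loss`, which is why the models in this post output raw logits without a final softmax.

```python
import torch
import torch.nn.functional as F
from torch import nn

torch.manual_seed(0)
logits = torch.randn(5, 10)            # raw model outputs (no softmax applied)
targets = torch.randint(0, 10, (5,))   # class indices

functional = F.cross_entropy(logits, targets)
module = nn.CrossEntropyLoss()(logits, targets)
decomposed = F.nll_loss(F.log_softmax(logits, dim=1), targets)

print(functional.item(), module.item(), decomposed.item())   # all three agree
```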

3. About from torch.utils.data import DataLoader

DataLoader: a data loader that combines a dataset with a sampler and can load data in parallel using multiple worker processes (num_workers).

It is used during training to split the data into mini-batches: each iteration yields one batch, until all of the data has been yielded once.

torch.utils.data.DataLoader(dataset, batch_size=1, shuffle=False, sampler=None, batch_sampler=None, num_workers=0, collate_fn=<function default_collate>, pin_memory=False, drop_last=False, timeout=0, worker_init_fn=None)
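A minimal illustration of how `batch_size` and `drop_last` control the batches, using a toy dataset of 10 samples built with `TensorDataset` instead of MNIST:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# 10 toy samples with 3 features each, labelled 0..9
dataset = TensorDataset(torch.arange(30, dtype=torch.float32).view(10, 3), torch.arange(10))

loader = DataLoader(dataset, batch_size=4, shuffle=False)
print([y.tolist() for _, y in loader])        # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]

# drop_last=True discards the final incomplete batch
loader_drop = DataLoader(dataset, batch_size=4, drop_last=True)
print([len(y) for _, y in loader_drop])       # [4, 4]

# Each batch stacks samples along a new first dimension
x, y = next(iter(loader))
print(x.shape, y.shape)                       # torch.Size([4, 3]) torch.Size([4])
```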

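4. About model.train()

model.train() puts the model (and all of its submodules) into training mode, which changes the behavior of Batch Normalization and Dropout layers.

If the model contains BN (Batch Normalization) or Dropout layers, call model.train() before training. In training mode, BN layers normalize with the mean and variance of the current batch (and update their running statistics), and Dropout randomly zeroes a fraction of the activations at every forward pass. model.eval() switches back: BN uses its stored running statistics and Dropout does nothing, which is what you want when evaluating.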
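The effect of `train()` versus `eval()` is easy to observe on a bare `nn.Dropout` layer. In training mode, PyTorch zeroes each element with probability `p` and scales the survivors by `1/(1-p)`; in evaluation mode the layer passes its input through unchanged. The last lines show that calling `eval()` on a container also switches its submodules.

```python
import torch
from torch import nn

torch.manual_seed(0)
drop = nn.Dropout(p=0.5)
x = torch.ones(8)

drop.train()                    # same effect as model.train() on a containing model
y_train = drop(x)
print(y_train)                  # each entry is 0.0 (dropped) or 2.0 (= 1 / (1 - 0.5))

drop.eval()                     # same effect as model.eval()
y_eval = drop(x)
print(torch.equal(y_eval, x))   # True: dropout is a no-op in eval mode

# train()/eval() propagate to every submodule
net = nn.Sequential(nn.Linear(2, 2), nn.Dropout(0.5))
net.eval()
print(net[1].training)          # False
```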

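5. About optimizer.zero_grad()

optimizer.zero_grad() clears the gradients, i.e. it resets the derivative of the loss with respect to each weight to zero. (Since PyTorch 2.0 it sets .grad to None by default instead of filling it with zeros; either way the next backward pass starts fresh.)

Why does PyTorch need optimizer.zero_grad() for every batch?

When backward() runs, gradients are accumulated into .grad rather than replacing the old values. Gradients from two different batches should not be mixed, so zero_grad() has to be called once per batch.

In PyTorch code you will see this sequence for almost every batch:

import torch
from torch import nn
# Hypothetical toy setup so the snippet runs on its own
net = nn.Linear(4, 2)
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(net.parameters(), lr=0.1)
inputs, labels = torch.randn(8, 4), torch.randint(0, 2, (8,))
# zero the parameter gradients
optimizer.zero_grad()  # reset the gradients
# forward + backward + optimize
outputs = net(inputs)  # forward pass: compute the predictions
loss = criterion(outputs, labels)  # compute the loss
loss.backward()  # backward pass: compute the gradients
optimizer.step()  # update all parameters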
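The accumulation behavior described above can be observed on a single parameter. In this toy sketch the loss `3 * w` is arbitrary, chosen so that its gradient is 3: a second `backward()` without `zero_grad()` doubles `.grad`, and `zero_grad()` lets the next `backward()` start fresh.

```python
import torch

w = torch.tensor([1.0], requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.1)

def compute_loss():
    return (3 * w).sum()      # d(loss)/dw = 3

compute_loss().backward()
print(w.grad)                 # tensor([3.])

compute_loss().backward()     # no zero_grad(): the gradients accumulate
print(w.grad)                 # tensor([6.])

optimizer.zero_grad()         # clear before the next backward pass
compute_loss().backward()
print(w.grad)                 # tensor([3.])
```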

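6. About the tqdm library

tqdm is a library for displaying progress bars for loops. Its name comes from the Arabic taqaddum (تقدّم), meaning "progress". You add a progress bar to a long loop simply by wrapping any iterable as tqdm(iterable); it is fast and easy to extend.

import time
from tqdm import tqdm

for i in tqdm(range(1000)):
    time.sleep(.01)  # each iteration takes 0.01 s, so the bar finishes after about 1000 * 0.01 = 10 s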
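In a training loop it is common to show the running loss on the bar itself. A small sketch using `desc` and `set_postfix`, both part of tqdm's public API; the loss values are made-up numbers standing in for real per-batch losses.

```python
from tqdm import tqdm

fake_losses = [2.3, 1.7, 1.2, 0.9, 0.7]   # made-up per-batch losses
total = 0.0

progress = tqdm(fake_losses, desc="Epoch 1")
for step, loss in enumerate(progress, start=1):
    total += loss
    # Show the running average loss at the end of the progress bar
    progress.set_postfix(avg_loss=f"{total / step:.3f}")
progress.close()
```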

7. About Python numpy.indices()

numpy.indices() returns an array representing the indices of a grid: for a grid of the given shape it returns one sub-array per axis, in which the index values 0, 1, … vary only along that axis.

# Example of numpy.indices()
import numpy as np

gfg = np.indices((2, 3))
print(gfg)

# Output:
# [[[0 0 0]
#   [1 1 1]]
#
#  [[0 1 2]
#   [0 1 2]]]
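In the training code, the predicted class is taken with `preds.max(1).indices`. That `indices` is not `numpy.indices()`: `torch.max(input, dim)` returns a named tuple `(values, indices)`, and `.indices` holds the position of the maximum along that dimension, i.e. the same result as `argmax`. A small check with hand-written logits:

```python
import torch

preds = torch.tensor([[0.1, 2.0, -1.0],
                      [3.0, 0.5,  0.2]])   # 2 samples, 3 classes (made-up logits)

result = preds.max(1)          # reduce over the class dimension
print(result.values)           # tensor([2., 3.])
print(result.indices)          # tensor([1, 0])
print(torch.equal(result.indices, preds.argmax(dim=1)))   # True
```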

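8. About loss.backward()

loss.backward() computes the gradients of the current tensor (here, the loss) with respect to the leaf tensors of the computation graph, i.e. tensors created with requires_grad=True such as the model parameters, and accumulates them into their .grad attributes.

It is typically used directly, like this:

optimizer.zero_grad()  # clear the old gradients
loss.backward()        # backpropagate to compute the current gradients
optimizer.step()       # update the network parameters from the gradients

Or, when several loss terms are summed before a single backward pass (num, Loss, input and target stand for your own values):

loss = 0
for i in range(num):
    loss += Loss(input, target)
optimizer.zero_grad()  # clear the old gradients
loss.backward()        # backpropagate to compute the current gradients
optimizer.step()       # update the network parameters from the gradients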
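A minimal check of what `backward()` computes: for a leaf tensor created with `requires_grad=True`, the gradient lands in its `.grad` attribute, while intermediate (non-leaf) tensors do not keep a `.grad` by default. The function $y = \sum_i x_i^2$ is an arbitrary example whose gradient is $2x$.

```python
import torch

x = torch.tensor([1.0, -2.0, 3.0], requires_grad=True)   # leaf tensor
h = x ** 2                   # intermediate (non-leaf) tensor
y = h.sum()                  # scalar, so backward() needs no arguments

y.backward()
print(x.grad)                # equals 2 * x
print(x.is_leaf, h.is_leaf)  # True False
```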

Source code

import torch
import numpy as np
import pandas as pd
from torch import nn
import torch.nn.functional as F
import torchvision
from torchvision import transforms
from torch.utils.data import DataLoader
# from torchinfo import summary
from tqdm import tqdm
import matplotlib.pyplot as plt

# Set the random seed
torch.manual_seed(0)
# device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
# Let cuDNN benchmark and pick the fastest convolution algorithms
torch.backends.cudnn.benchmark = True

# Load the MNIST dataset
# Training set
train_dataset = torchvision.datasets.MNIST(
    root="dataset/",
    train=True,
    transform=transforms.ToTensor(),
    download=True
)
# Test set
test_dataset = torchvision.datasets.MNIST(
    root="dataset/",
    train=False,
    transform=transforms.ToTensor(),
    download=True
)

train_loder = DataLoader(dataset=train_dataset, batch_size=32, shuffle=True)
test_loder = DataLoader(dataset=test_dataset, batch_size=32, shuffle=False)

# Teacher model
class TeacherModel(nn.Module):
    def __init__(self, in_channels=1, num_classes=10):
        super(TeacherModel, self).__init__()
        self.relu = nn.ReLU()
        self.fc1 = nn.Linear(784, 1200)
        self.fc2 = nn.Linear(1200, 1200)
        self.fc3 = nn.Linear(1200, num_classes)
        self.dropout = nn.Dropout(p=0.5)

    def forward(self, x):
        x = x.view(-1, 784)
        x = self.fc1(x)
        x = self.dropout(x)
        x = self.relu(x)
        x = self.fc2(x)
        x = self.dropout(x)
        x = self.relu(x)
        x = self.fc3(x)
        return x

model = TeacherModel()

criterion = nn.CrossEntropyLoss()  # cross-entropy loss
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)  # Adam optimizer with learning rate 1e-4

epochs = 1  # train for 1 epoch (step 6 above uses 6)
for epoch in range(epochs):
    model.train()
    for data, targets in tqdm(train_loder):
        # Forward pass
        preds = model(data)
        loss = criterion(preds, targets)

        # Backward pass and weight update
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    # Evaluate on the test set
    model.eval()
    num_correct = 0
    num_samples = 0
    with torch.no_grad():
        for x, y in test_loder:
            preds = model(x)
            predictions = preds.max(1).indices
            num_correct += (predictions == y).sum()
            num_samples += predictions.size(0)
        acc = (num_correct / num_samples).item()
    model.train()
    teacher_model = model
    print("Epoch:{}\t Accuracy:{:.4f}".format(epoch + 1, acc))

# Student model
class StudentModel(nn.Module):
    def __init__(self, inchannels=1, num_class=10):
        super(StudentModel, self).__init__()
        self.relu = nn.ReLU()
        self.fc1 = nn.Linear(784, 20)
        self.fc2 = nn.Linear(20, 20)
        self.fc3 = nn.Linear(20, num_class)
        # self.dropout = nn.Dropout(p=0.5)

    def forward(self, x):
        x = x.view(-1, 784)
        x = self.fc1(x)
        # x = self.dropout(x)
        x = self.relu(x)
        x = self.fc2(x)
        # x = self.dropout(x)
        x = self.relu(x)
        x = self.fc3(x)
        return x

model = StudentModel()  # first train a student from scratch as a baseline

# Loss function and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

epochs = 3
# Train the weights on the training set
for epoch in range(epochs):
    model.train()
    for data, targets in tqdm(train_loder):
        # Forward pass
        preds = model(data)
        loss = criterion(preds, targets)

        # Backward pass and weight update
        optimizer.zero_grad()  # reset the gradients to zero
        loss.backward()
        optimizer.step()

    # Evaluate on the test set: switch to eval mode and reset the counters every epoch
    model.eval()
    num_correct = 0
    num_samples = 0
    with torch.no_grad():
        for x, y in test_loder:
            preds = model(x)
            predictions = preds.max(1).indices
            num_correct += (predictions == y).sum()
            num_samples += predictions.size(0)
        acc = (num_correct / num_samples).item()
    model.train()
    print("Epoch:{}\t Accuracy:{:.4f}".format(epoch + 1, acc))

student_model_scratch = model

# The pre-trained teacher model, in evaluation mode
teacher_model.eval()

# A fresh student model
model = StudentModel()
model.train()

# Distillation temperature
temp = 7

# hard_loss
hard_loss = nn.CrossEntropyLoss()

# Weight of hard_loss
alpha = 0.3

# soft_loss
soft_loss = nn.KLDivLoss(reduction="batchmean")

optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

epochs = 3
for epoch in range(epochs):
    for data, targets in tqdm(train_loder):
        # Teacher prediction
        with torch.no_grad():
            teacher_preds = teacher_model(data)

        # Prediction of the student being distilled
        student_preds = model(data)
        student_loss = hard_loss(student_preds, targets)

        # Soft loss: log-probabilities from the student, probabilities from the teacher
        distillation_loss = soft_loss(
            F.log_softmax(student_preds / temp, dim=1),
            F.softmax(teacher_preds / temp, dim=1)
        )

        # Weighted sum of hard_loss and soft_loss
        loss = alpha * student_loss + (1 - alpha) * distillation_loss

        # Backward pass and weight update
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    # Evaluate the distilled student on the test set
    model.eval()
    num_correct = 0
    num_samples = 0
    with torch.no_grad():
        for x, y in test_loder:
            preds = model(x)
            predictions = preds.max(1).indices
            num_correct += (predictions == y).sum()
            num_samples += predictions.size(0)
        acc = (num_correct / num_samples).item()
    model.train()
    print("Epoch:{}\t Accuracy:{:.4f}".format(epoch + 1, acc))