【使用Pytorch搭建resnet网络框架结合单/多GPU并行训练分类模型】

【使用Pytorch搭建resnet网络框架结合单/多GPU并行训练分类模型】

- 一、文前白话

- 二、使用GPU并行训练相关知识

-

- 1、多GPU的一般使用方法

- 2、并行训练过程需要知晓的点

-

- ① 数据如何在不同的设备之间分配

- ② 误差梯度如何在不同的设备之间通信

- ③ BatchNormalization 如何在不同的设备之间同步数据

- 3、PyTorch官方给出的不同的GPU加速模型方式

-

-

- 3.1 两种模式

- ① DataParallel (稍早出现)

- ② DistributedDataParallel (迭代更新后的版本)

- 3.2 不同的GPU训练启动方式

-

- ①DistributedSampler方法

- ② BatchSampler方法

-

- 三、实操训练过程涉及代码与解析

-

- 3.1 环境依赖

- 3.2脚本解析

-

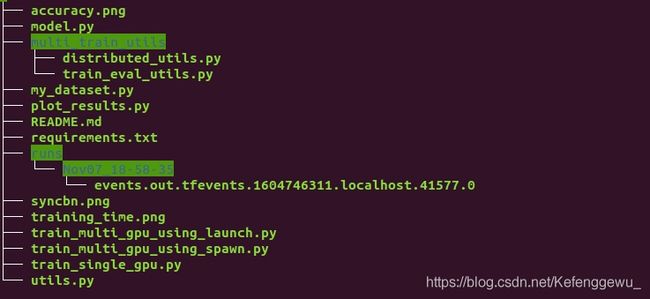

- 3.2.1 运行文件夹目录树结构

-

- ①train_single_gpu.py 脚本解析

-

- 可视化训练结果

- ②train_multi_gpu_with_launch.py 脚本解析

- ③ train_multi_gpu_with_multiprocessing.py 脚本解析

- 附: 其他相关 脚本

-

-

- ①model.py

- ②distributed_utils.py 文件

- ③train_eval_utils.py 文件

- ④my_dataset.py 文件

- ⑤ plot_results.py 文件

- 6. utils.py 文件

-

- Reference

一、文前白话

本文学习并介绍如何使用pytorch框架配合单/多GPU展开模型的训练过程与代码脚本操作,以搭建的resnet网络框架训练花分类数据集分类模型为例,帮助实现计算资源的合理利用。

二、使用GPU并行训练相关知识

1、多GPU的一般使用方法

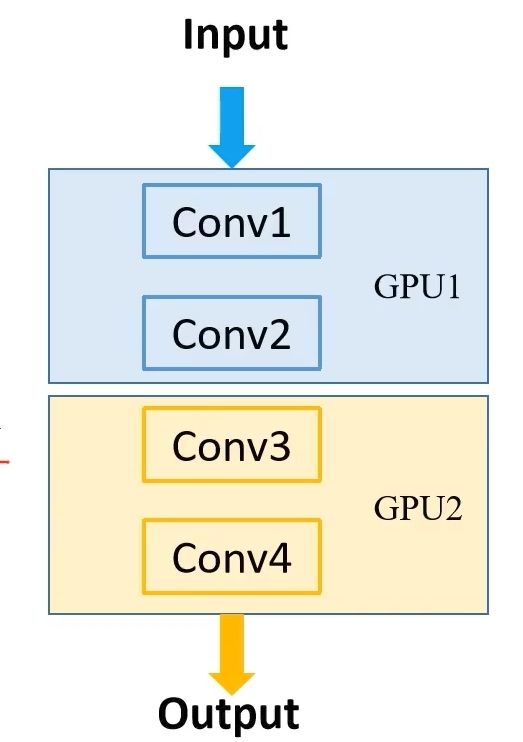

- model parallel 模型并行

可以把一个很大的数据模型分布在不同的GPU上,实际上对于训练速度没什么帮助。

图示如下:

- data parallel 数据并行

将整块模型放到一个GPU中(每个GPU中都复制有相同的模型),同时输入更多的数据集进行训练,相当于加大了batchsize , 加快训练了速度。

2、并行训练过程需要知晓的点

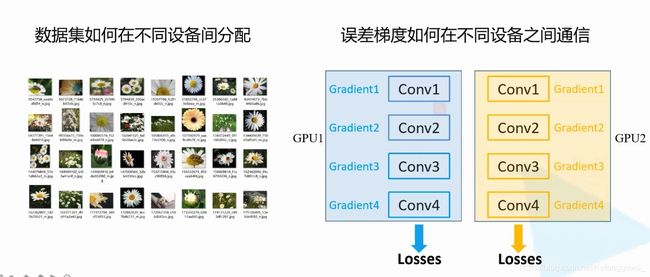

① 数据如何在不同的设备之间分配

② 误差梯度如何在不同的设备之间通信

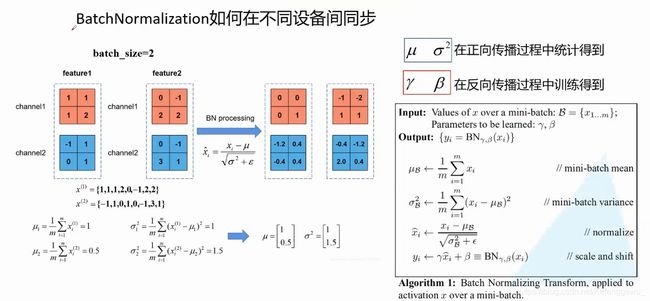

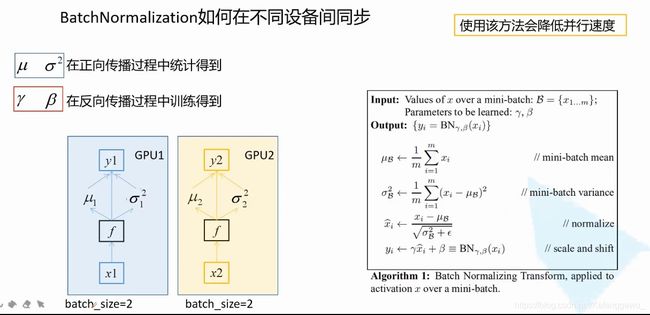

③ BatchNormalization 如何在不同的设备之间同步数据

3、PyTorch官方给出的不同的GPU加速模型方式

更多的可以去官网了解(单机多卡、多机多卡等)使用教程

阅读官方文档

链接:PyTorch官网

3.1 两种模式

① DataParallel (稍早出现)

单进程,多线程,适用于单机设备上

可适用:单机多卡

② DistributedDataParallel (迭代更新后的版本)

多进程,单机 多机均可,单机下运算更快

可适用:单机多卡,多机多卡

3.2 不同的GPU训练启动方式

- torch.distributed.launch

代码量少,启动速度快,一般多卡情况下使用

启动方式:

python -m torch.distributed.launch --(加上参数以及 脚本名称)

python -m torch.distributed.launch --help # 查看使用说明

- torch.multiprocessing

相比,有更好的控制性和灵活性

启动方式:

python -m torch.multiprocessing --(加上参数以及 脚本名称)

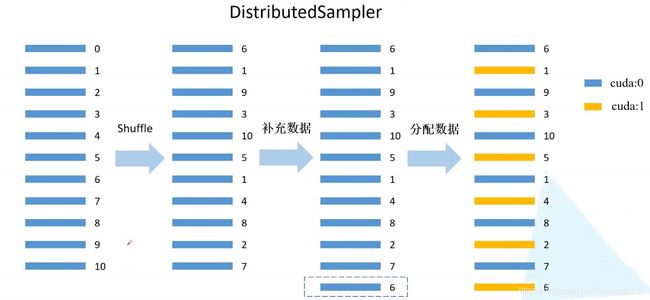

①DistributedSampler方法

作用图示:

假设此时有11个样本数据,如下进行GPU设备之间的数据分配:

假设有2块GPU设备,样本数量/GPU数量=向上取整数 :2

重分配时候,不足的取第一个补足。 将数据均匀分配到GPU设备中。

② BatchSampler方法

作用图示:

三、实操训练过程涉及代码与解析

3.1 环境依赖

- python == 3.7

- matplotlib == 3.2.1

- tqdm == 4.42.1

- torchvision == 0.7.0

- torch == 1.6.0

3.2脚本解析

3.2.1 运行文件夹目录树结构

①train_single_gpu.py 脚本解析

使用单机单卡训练脚本

#@ Time: 2021-07-23

# recoder:Wupke

import os

import math

import argparse

import torch

import torch.optim as optim

from torch.utils.tensorboard import SummaryWriter

from torchvision import transforms

import torch.optim.lr_scheduler as lr_scheduler

from model import resnet34, resnet101

from my_dataset import MyDataSet

from utils import read_split_data

from multi_train_utils.train_eval_utils import train_one_epoch, evaluate

def main(args):

device = torch.device(args.device if torch.cuda.is_available() else "cpu")

print(args)

print('Start Tensorboard with "tensorboard --logdir=logs", view at http://localhost:6006/')

tb_writer = SummaryWriter(log_dir="logs")

if os.path.exists("./weights") is False:

os.makedirs("./weights")

train_info, val_info, num_classes = read_split_data(args.data_path)

train_images_path, train_images_label = train_info

val_images_path, val_images_label = val_info

# check num_classes

assert args.num_classes == num_classes, "dataset num_classes: {}, input {}".format(args.num_classes,

num_classes)

data_transform = {

"train": transforms.Compose([transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),

"val": transforms.Compose([transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

# 实例化训练数据集

train_data_set = MyDataSet(images_path=train_images_path,

images_class=train_images_label,

transform=data_transform["train"])

# 实例化验证数据集

val_data_set = MyDataSet(images_path=val_images_path,

images_class=val_images_label,

transform=data_transform["val"])

batch_size = args.batch_size

nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8]) # number of workers

print('Using {} dataloader workers every process'.format(nw))

train_loader = torch.utils.data.DataLoader(train_data_set,

batch_size=batch_size,

shuffle=True,

pin_memory=True,

num_workers=nw,

collate_fn=train_data_set.collate_fn)

val_loader = torch.utils.data.DataLoader(val_data_set,

batch_size=batch_size,

shuffle=False,

pin_memory=True,

num_workers=nw,

collate_fn=val_data_set.collate_fn)

# 如果存在预训练权重则载入

model = resnet34(num_classes=args.num_classes).to(device)

if os.path.exists(args.weights):

weights_dict = torch.load(args.weights, map_location=device)

load_weights_dict = {k: v for k, v in weights_dict.items()

if model.state_dict()[k].numel() == v.numel()}

model.load_state_dict(load_weights_dict, strict=False)

# 是否冻结权重

if args.freeze_layers:

for name, para in model.named_parameters():

# 除最后的全连接层外,其他权重全部冻结

if "fc" not in name:

para.requires_grad_(False)

pg = [p for p in model.parameters() if p.requires_grad]

optimizer = optim.SGD(pg, lr=args.lr, momentum=0.9, weight_decay=0.005)

# Scheduler https://arxiv.org/pdf/1812.01187.pdf

lf = lambda x: ((1 + math.cos(x * math.pi / args.epochs)) / 2) * (1 - args.lrf) + args.lrf # cosine

scheduler = lr_scheduler.LambdaLR(optimizer, lr_lambda=lf)

for epoch in range(args.epochs):

# train

mean_loss = train_one_epoch(model=model,

optimizer=optimizer,

data_loader=train_loader,

device=device,

epoch=epoch)

scheduler.step()

# validate

sum_num = evaluate(model=model,

data_loader=val_loader,

device=device)

acc = sum_num / len(val_data_set)

print("[epoch {}] accuracy: {}".format(epoch, round(acc, 3)))

tags = ["loss", "accuracy", "learning_rate"]

tb_writer.add_scalar(tags[0], mean_loss, epoch)

tb_writer.add_scalar(tags[1], acc, epoch)

tb_writer.add_scalar(tags[2], optimizer.param_groups[0]["lr"], epoch)

torch.save(model.state_dict(), "./weights/model-{}.pth".format(epoch))

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument('--num_classes', type=int, default=5)

parser.add_argument('--epochs', type=int, default=20)

parser.add_argument('--batch-size', type=int, default=8)

parser.add_argument('--lr', type=float, default=0.001)

parser.add_argument('--lrf', type=float, default=0.1)

# 数据集所在根目录

# http://download.tensorflow.org/example_images/flower_photos.tgz # 官方花分类数据集下载链接

parser.add_argument('--data-path', type=str,

default="/home/Git/pytorch/tensorboard/flower_photos")

# resnet34 官方权重下载地址

# https://download.pytorch.org/models/resnet34-333f7ec4.pth

parser.add_argument('--weights', type=str, default='resNet34.pth',

help='initial weights path')

parser.add_argument('--freeze-layers', type=bool, default=False) # 这里未使用预训练权重

parser.add_argument('--device', default='cuda', help='device id (i.e. 0 or 0,1 or cpu)')

opt = parser.parse_args()

main(opt)

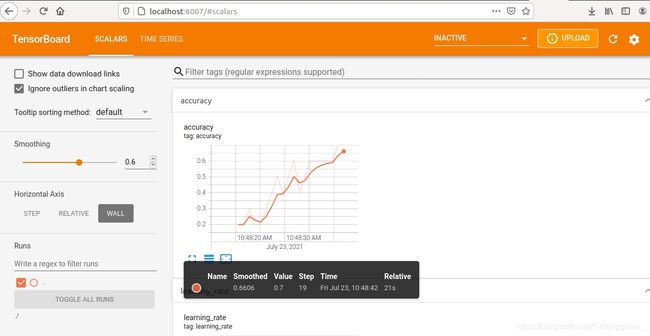

可视化训练结果

选择了部分花数据集(各选50张)进行训练,可视化结果图下:

具体的可视化训练过程,及数据格式、训练参数设置等训练小细节,可参考之前的博文链接: 如何在Pytorch中使用Tensorboard可视化训练过程.

②train_multi_gpu_with_launch.py 脚本解析

基于torch.distributed.launch启动方式的训练

脚本代码:

#@ Time: 2021-07-23

# recoder:Wupke

import os

import math

import tempfile

import argparse

import torch

import torch.optim as optim

import torch.optim.lr_scheduler as lr_scheduler

from torch.utils.tensorboard import SummaryWriter

from torchvision import transforms

from model import resnet34

from my_dataset import MyDataSet

from utils import read_split_data, plot_data_loader_image

from multi_train_utils.distributed_utils import init_distributed_mode, dist, cleanup

from multi_train_utils.train_eval_utils import train_one_epoch, evaluate

def main(args):

if torch.cuda.is_available() is False:

raise EnvironmentError("not find GPU device for training.")

# 初始化各进程环境

init_distributed_mode(args=args)

rank = args.rank

device = torch.device(args.device)

batch_size = args.batch_size

num_classes = args.num_classes

weights_path = args.weights

args.lr *= args.world_size # 学习率要根据并行GPU的数量进行倍增

if rank == 0: # 在第一个进程中打印信息,并实例化tensorboard

print(args)

print('Start Tensorboard with "tensorboard --logdir=runs", view at http://localhost:6006/')

tb_writer = SummaryWriter()

if os.path.exists("./weights") is False:

os.makedirs("./weights")

train_info, val_info, num_classes = read_split_data(args.data_path)

train_images_path, train_images_label = train_info

val_images_path, val_images_label = val_info

# check num_classes

assert args.num_classes == num_classes, "dataset num_classes: {}, input {}".format(args.num_classes,

num_classes)

data_transform = {

"train": transforms.Compose([transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),

"val": transforms.Compose([transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

# 实例化训练数据集

train_data_set = MyDataSet(images_path=train_images_path,

images_class=train_images_label,

transform=data_transform["train"])

# 实例化验证数据集

val_data_set = MyDataSet(images_path=val_images_path,

images_class=val_images_label,

transform=data_transform["val"])

# 给每个rank对应的进程分配训练的样本索引

train_sampler = torch.utils.data.distributed.DistributedSampler(train_data_set)

# DistributedSampler 函数可到官网文档查看定义参数与使用样例

val_sampler = torch.utils.data.distributed.DistributedSampler(val_data_set)

# 将样本索引每batch_size个元素组成一个list

train_batch_sampler = torch.utils.data.BatchSampler(

train_sampler, batch_size, drop_last=True)

nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8]) # number of workers

if rank == 0:

print('Using {} dataloader workers every process'.format(nw))

train_loader = torch.utils.data.DataLoader(train_data_set,

batch_sampler=train_batch_sampler,

pin_memory=True,

num_workers=nw,

collate_fn=train_data_set.collate_fn)

val_loader = torch.utils.data.DataLoader(val_data_set,

batch_size=batch_size,

sampler=val_sampler,

pin_memory=True,

num_workers=nw,

collate_fn=val_data_set.collate_fn)

# 实例化模型

model = resnet34(num_classes=num_classes).to(device)

# 如果存在预训练权重则载入

if os.path.exists(weights_path):

weights_dict = torch.load(weights_path, map_location=device)

load_weights_dict = {k: v for k, v in weights_dict.items()

if model.state_dict()[k].numel() == v.numel()}

model.load_state_dict(load_weights_dict, strict=False)

else:

checkpoint_path = os.path.join(tempfile.gettempdir(), "initial_weights.pt")

# 如果不存在预训练权重,需要将第一个进程中的权重保存,然后其他进程载入,保持初始化权重一致

if rank == 0:

torch.save(model.state_dict(), checkpoint_path)

dist.barrier()

# 这里注意,一定要指定map_location参数,否则会导致第一块GPU占用更多资源

model.load_state_dict(torch.load(checkpoint_path, map_location=device))

# 是否冻结权重

if args.freeze_layers:

for name, para in model.named_parameters():

# 除最后的全连接层外,其他权重全部冻结

if "fc" not in name:

para.requires_grad_(False)

else:

# 只有训练带有BN结构的网络时使用SyncBatchNorm采用意义

if args.syncBN:

# 使用SyncBatchNorm后训练会更耗时

model = torch.nn.SyncBatchNorm.convert_sync_batchnorm(model).to(device)

# 转为DDP模型

model = torch.nn.parallel.DistributedDataParallel(model, device_ids=[args.gpu])

# optimizer

pg = [p for p in model.parameters() if p.requires_grad]

optimizer = optim.SGD(pg, lr=args.lr, momentum=0.9, weight_decay=0.005)

# Scheduler https://arxiv.org/pdf/1812.01187.pdf

lf = lambda x: ((1 + math.cos(x * math.pi / args.epochs)) / 2) * (1 - args.lrf) + args.lrf # cosine

scheduler = lr_scheduler.LambdaLR(optimizer, lr_lambda=lf)

for epoch in range(args.epochs):

train_sampler.set_epoch(epoch)

mean_loss = train_one_epoch(model=model,

optimizer=optimizer,

data_loader=train_loader,

device=device,

epoch=epoch)

scheduler.step()

sum_num = evaluate(model=model,

data_loader=val_loader,

device=device)

acc = sum_num / val_sampler.total_size

if rank == 0:

print("[epoch {}] accuracy: {}".format(epoch, round(acc, 3)))

tags = ["loss", "accuracy", "learning_rate"]

tb_writer.add_scalar(tags[0], mean_loss, epoch)

tb_writer.add_scalar(tags[1], acc, epoch)

tb_writer.add_scalar(tags[2], optimizer.param_groups[0]["lr"], epoch)

torch.save(model.module.state_dict(), "./weights/model-{}.pth".format(epoch))

# 删除临时缓存文件

if rank == 0:

if os.path.exists(checkpoint_path) is True:

os.remove(checkpoint_path)

cleanup()

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument('--num_classes', type=int, default=5)

parser.add_argument('--epochs', type=int, default=30)

parser.add_argument('--batch-size', type=int, default=16)

parser.add_argument('--lr', type=float, default=0.001)

parser.add_argument('--lrf', type=float, default=0.1)

# 是否启用SyncBatchNorm

parser.add_argument('--syncBN', type=bool, default=True)

# 数据集所在根目录

# http://download.tensorflow.org/example_images/flower_photos.tgz

parser.add_argument('--data-path', type=str, default="/home/flower_data/flower_photos")

# resnet34 官方权重下载地址

# https://download.pytorch.org/models/resnet34-333f7ec4.pth

parser.add_argument('--weights', type=str, default='resNet34.pth',

help='initial weights path')

parser.add_argument('--freeze-layers', type=bool, default=False)

# 不要改该参数,系统会自动分配

parser.add_argument('--device', default='cuda', help='device id (i.e. 0 or 0,1 or cpu)')

# 开启的进程数(注意不是线程),不用设置该参数,会根据nproc_per_node自动设置

parser.add_argument('--world-size', default=4, type=int,

help='number of distributed processes')

parser.add_argument('--dist-url', default='env://', help='url used to set up distributed training')

opt = parser.parse_args()

main(opt)

③ train_multi_gpu_with_multiprocessing.py 脚本解析

基于torch.multiprocessing启动方式的训练

脚本代码:

#@ Time: 2021-07-23

# recoder:Wupke

import os

import math

import tempfile

import argparse

import torch

import torch.multiprocessing as mp

from torch.multiprocessing import Process

import torch.optim as optim

import torch.optim.lr_scheduler as lr_scheduler

from torch.utils.tensorboard import SummaryWriter

from torchvision import transforms

from model import resnet34

from my_dataset import MyDataSet

from utils import read_split_data, plot_data_loader_image

from multi_train_utils.distributed_utils import dist, cleanup

from multi_train_utils.train_eval_utils import train_one_epoch, evaluate

def main_fun(rank, world_size, args):

if torch.cuda.is_available() is False:

raise EnvironmentError("not find GPU device for training.")

# 初始化各进程环境 start

os.environ["MASTER_ADDR"] = "localhost"

os.environ["MASTER_PORT"] = "12355"

args.rank = rank

args.world_size = world_size

args.gpu = rank

args.distributed = True

torch.cuda.set_device(args.gpu)

args.dist_backend = 'nccl'

print('| distributed init (rank {}): {}'.format(

args.rank, args.dist_url), flush=True)

dist.init_process_group(backend=args.dist_backend, init_method=args.dist_url,

world_size=args.world_size, rank=args.rank)

dist.barrier()

# 初始化各进程环境 end

rank = args.rank

device = torch.device(args.device)

batch_size = args.batch_size

num_classes = args.num_classes

weights_path = args.weights

args.lr *= args.world_size # 学习率要根据并行GPU的数量进行倍增

if rank == 0: # 在第一个进程中打印信息,并实例化tensorboard

print(args)

print('Start Tensorboard with "tensorboard --logdir=runs", view at http://localhost:6006/')

tb_writer = SummaryWriter()

if os.path.exists("./weights") is False:

os.makedirs("./weights")

train_info, val_info, num_classes = read_split_data(args.data_path)

train_images_path, train_images_label = train_info

val_images_path, val_images_label = val_info

# check num_classes

assert args.num_classes == num_classes, "dataset num_classes: {}, input {}".format(args.num_classes,

num_classes)

data_transform = {

"train": transforms.Compose([transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),

"val": transforms.Compose([transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

# 实例化训练数据集

train_data_set = MyDataSet(images_path=train_images_path,

images_class=train_images_label,

transform=data_transform["train"])

# 实例化验证数据集

val_data_set = MyDataSet(images_path=val_images_path,

images_class=val_images_label,

transform=data_transform["val"])

# 给每个rank对应的进程分配训练的样本索引

train_sampler = torch.utils.data.distributed.DistributedSampler(train_data_set)

val_sampler = torch.utils.data.distributed.DistributedSampler(val_data_set)

# 将样本索引每batch_size个元素组成一个list

train_batch_sampler = torch.utils.data.BatchSampler(

train_sampler, batch_size, drop_last=True)

nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8]) # number of workers

if rank == 0:

print('Using {} dataloader workers every process'.format(nw))

train_loader = torch.utils.data.DataLoader(train_data_set,

batch_sampler=train_batch_sampler,

pin_memory=True,

num_workers=nw,

collate_fn=train_data_set.collate_fn)

val_loader = torch.utils.data.DataLoader(val_data_set,

batch_size=batch_size,

sampler=val_sampler,

pin_memory=True,

num_workers=nw,

collate_fn=val_data_set.collate_fn)

# 实例化模型

model = resnet34(num_classes=num_classes).to(device)

# 如果存在预训练权重则载入

if os.path.exists(weights_path):

weights_dict = torch.load(weights_path, map_location=device)

load_weights_dict = {k: v for k, v in weights_dict.items()

if model.state_dict()[k].numel() == v.numel()}

model.load_state_dict(load_weights_dict, strict=False)

else:

checkpoint_path = os.path.join(tempfile.gettempdir(), "initial_weights.pt")

# 如果不存在预训练权重,需要将第一个进程中的权重保存,然后其他进程载入,保持初始化权重一致

if rank == 0:

torch.save(model.state_dict(), checkpoint_path)

dist.barrier()

# 这里注意,一定要指定map_location参数,否则会导致第一块GPU占用更多资源

model.load_state_dict(torch.load(checkpoint_path, map_location=device))

# 是否冻结权重

if args.freeze_layers:

for name, para in model.named_parameters():

# 除最后的全连接层外,其他权重全部冻结

if "fc" not in name:

para.requires_grad_(False)

else:

# 只有训练带有BN结构的网络时使用SyncBatchNorm采用意义

if args.syncBN:

# 使用SyncBatchNorm后训练会更耗时

model = torch.nn.SyncBatchNorm.convert_sync_batchnorm(model).to(device)

# 转为DDP模型

model = torch.nn.parallel.DistributedDataParallel(model, device_ids=[args.gpu])

# optimizer

pg = [p for p in model.parameters() if p.requires_grad]

optimizer = optim.SGD(pg, lr=args.lr, momentum=0.9, weight_decay=0.005)

# Scheduler https://arxiv.org/pdf/1812.01187.pdf

lf = lambda x: ((1 + math.cos(x * math.pi / args.epochs)) / 2) * (1 - args.lrf) + args.lrf # cosine

scheduler = lr_scheduler.LambdaLR(optimizer, lr_lambda=lf)

for epoch in range(args.epochs):

train_sampler.set_epoch(epoch)

mean_loss = train_one_epoch(model=model,

optimizer=optimizer,

data_loader=train_loader,

device=device,

epoch=epoch)

scheduler.step()

sum_num = evaluate(model=model,

data_loader=val_loader,

device=device)

acc = sum_num / val_sampler.total_size

if rank == 0:

print("[epoch {}] accuracy: {}".format(epoch, round(acc, 3)))

tags = ["loss", "accuracy", "learning_rate"]

tb_writer.add_scalar(tags[0], mean_loss, epoch)

tb_writer.add_scalar(tags[1], acc, epoch)

tb_writer.add_scalar(tags[2], optimizer.param_groups[0]["lr"], epoch)

torch.save(model.module.state_dict(), "./weights/model-{}.pth".format(epoch))

# 删除临时缓存文件

if rank == 0:

if os.path.exists(checkpoint_path) is True:

os.remove(checkpoint_path)

cleanup()

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument('--num_classes', type=int, default=5)

parser.add_argument('--epochs', type=int, default=30)

parser.add_argument('--batch-size', type=int, default=16)

parser.add_argument('--lr', type=float, default=0.001)

parser.add_argument('--lrf', type=float, default=0.1)

# 是否启用SyncBatchNorm

parser.add_argument('--syncBN', type=bool, default=True)

# 数据集所在根目录

# http://download.tensorflow.org/example_images/flower_photos.tgz

parser.add_argument('--data-path', type=str, default="/home/flower_data/flower_photos")

# resnet34 官方权重下载地址

# https://download.pytorch.org/models/resnet34-333f7ec4.pth

parser.add_argument('--weights', type=str, default='resNet34.pth',

help='initial weights path')

parser.add_argument('--freeze-layers', type=bool, default=False)

# 不要改该参数,系统会自动分配

parser.add_argument('--device', default='cuda', help='device id (i.e. 0 or 0,1 or cpu)')

# 开启的进程数(注意不是线程),在单机中指使用GPU的数量

parser.add_argument('--world-size', default=4, type=int,

help='number of distributed processes')

parser.add_argument('--dist-url', default='env://', help='url used to set up distributed training')

opt = parser.parse_args()

# when using mp.spawn, if I set number of works greater 1,

# before each epoch training and validation will wait about 10 seconds

# mp.spawn(main_fun,

# args=(opt.world_size, opt),

# nprocs=opt.world_size,

# join=True)

world_size = opt.world_size

processes = []

for rank in range(world_size):

p = Process(target=main_fun, args=(rank, world_size, opt))

p.start()

processes.append(p)

for p in processes:

p.join()

附: 其他相关 脚本

实际训练过程,可以依据上述的文件目录树结构存放,并构建好数据集,配置环境依赖,调整路径与参数,进行训练调试。

①model.py

# 搭建resnet网络结构

import torch.nn as nn

import torch

class BasicBlock(nn.Module):

expansion = 1

def __init__(self, in_channel, out_channel, stride=1, downsample=None):

super(BasicBlock, self).__init__()

self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,

kernel_size=3, stride=stride, padding=1, bias=False)

self.bn1 = nn.BatchNorm2d(out_channel)

self.relu = nn.ReLU()

self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,

kernel_size=3, stride=1, padding=1, bias=False)

self.bn2 = nn.BatchNorm2d(out_channel)

self.downsample = downsample

def forward(self, x):

identity = x

if self.downsample is not None:

identity = self.downsample(x)

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out += identity

out = self.relu(out)

return out

class Bottleneck(nn.Module):

expansion = 4

def __init__(self, in_channel, out_channel, stride=1, downsample=None):

super(Bottleneck, self).__init__()

self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,

kernel_size=1, stride=1, bias=False) # squeeze channels

self.bn1 = nn.BatchNorm2d(out_channel)

# -----------------------------------------

self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,

kernel_size=3, stride=stride, bias=False, padding=1)

self.bn2 = nn.BatchNorm2d(out_channel)

# -----------------------------------------

self.conv3 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel*self.expansion,

kernel_size=1, stride=1, bias=False) # unsqueeze channels

self.bn3 = nn.BatchNorm2d(out_channel*self.expansion)

self.relu = nn.ReLU(inplace=True)

self.downsample = downsample

def forward(self, x):

identity = x

if self.downsample is not None:

identity = self.downsample(x)

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out = self.relu(out)

out = self.conv3(out)

out = self.bn3(out)

out += identity

out = self.relu(out)

return out

class ResNet(nn.Module):

def __init__(self, block, blocks_num, num_classes=1000, include_top=True):

super(ResNet, self).__init__()

self.include_top = include_top

self.in_channel = 64

self.conv1 = nn.Conv2d(3, self.in_channel, kernel_size=7, stride=2,

padding=3, bias=False)

self.bn1 = nn.BatchNorm2d(self.in_channel)

self.relu = nn.ReLU(inplace=True)

self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

self.layer1 = self._make_layer(block, 64, blocks_num[0])

self.layer2 = self._make_layer(block, 128, blocks_num[1], stride=2)

self.layer3 = self._make_layer(block, 256, blocks_num[2], stride=2)

self.layer4 = self._make_layer(block, 512, blocks_num[3], stride=2)

if self.include_top:

self.avgpool = nn.AdaptiveAvgPool2d((1, 1)) # output size = (1, 1)

self.fc = nn.Linear(512 * block.expansion, num_classes)

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

def _make_layer(self, block, channel, block_num, stride=1):

downsample = None

if stride != 1 or self.in_channel != channel * block.expansion:

downsample = nn.Sequential(

nn.Conv2d(self.in_channel, channel * block.expansion, kernel_size=1, stride=stride, bias=False),

nn.BatchNorm2d(channel * block.expansion))

layers = []

layers.append(block(self.in_channel, channel, downsample=downsample, stride=stride))

self.in_channel = channel * block.expansion

for _ in range(1, block_num):

layers.append(block(self.in_channel, channel))

return nn.Sequential(*layers)

def forward(self, x):

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

if self.include_top:

x = self.avgpool(x)

x = torch.flatten(x, 1)

x = self.fc(x)

return x

def resnet34(num_classes=1000, include_top=True):

return ResNet(BasicBlock, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)

def resnet101(num_classes=1000, include_top=True):

return ResNet(Bottleneck, [3, 4, 23, 3], num_classes=num_classes, include_top=include_top)

②distributed_utils.py 文件

import os

import torch

import torch.distributed as dist

def init_distributed_mode(args):

# 多机多卡情况下,RANK 第几台设备 WORLD_SIZE 使用了几台设备, LOCAL_RANK 某一台机器上第几块GPU设备

# 单机多卡下,RANK 第几块GPU WORLD_SIZE 使用了几块GPU, LOCAL_RANK 也是第几块GPU

if 'RANK' in os.environ and 'WORLD_SIZE' in os.environ:

args.rank = int(os.environ["RANK"])

args.world_size = int(os.environ['WORLD_SIZE'])

args.gpu = int(os.environ['LOCAL_RANK'])

elif 'SLURM_PROCID' in os.environ:

args.rank = int(os.environ['SLURM_PROCID'])

args.gpu = args.rank % torch.cuda.device_count()

else:

print('Not using distributed mode')

args.distributed = False

return

args.distributed = True

torch.cuda.set_device(args.gpu)

args.dist_backend = 'nccl' # 通信后端,nvidia GPU推荐使用NCCL

print('| distributed init (rank {}): {}'.format(

args.rank, args.dist_url), flush=True)

dist.init_process_group(backend=args.dist_backend, init_method=args.dist_url,

world_size=args.world_size, rank=args.rank)

dist.barrier()

def cleanup():

dist.destroy_process_group()

def is_dist_avail_and_initialized():

"""检查是否支持分布式环境"""

if not dist.is_available():

return False

if not dist.is_initialized():

return False

return True

def get_world_size():

if not is_dist_avail_and_initialized():

return 1

return dist.get_world_size()

def get_rank():

if not is_dist_avail_and_initialized():

return 0

return dist.get_rank()

def is_main_process():

return get_rank() == 0

def reduce_value(value, average=True):

world_size = get_world_size()

if world_size < 2: # 单GPU的情况

return value

with torch.no_grad():

dist.all_reduce(value)

if average:

value /= world_size

return value

③train_eval_utils.py 文件

import sys

from tqdm import tqdm

import torch

from multi_train_utils.distributed_utils import reduce_value, is_main_process

def train_one_epoch(model, optimizer, data_loader, device, epoch):

model.train()

loss_function = torch.nn.CrossEntropyLoss()

mean_loss = torch.zeros(1).to(device)

optimizer.zero_grad()

# 在进程0中打印训练进度

if is_main_process():

data_loader = tqdm(data_loader)

for step, data in enumerate(data_loader):

images, labels = data

pred = model(images.to(device))

loss = loss_function(pred, labels.to(device))

loss.backward() # loss反向传播

loss = reduce_value(loss, average=True)

mean_loss = (mean_loss * step + loss.detach()) / (step + 1) # update mean losses

# 在进程0中打印平均loss

if is_main_process():

data_loader.desc = "[epoch {}] mean loss {}".format(epoch, round(mean_loss.item(), 3))

if not torch.isfinite(loss):

print('WARNING: non-finite loss, ending training ', loss)

sys.exit(1)

optimizer.step()

optimizer.zero_grad()

# 等待所有进程计算完毕

if device != torch.device("cpu"):

torch.cuda.synchronize(device)

return mean_loss.item()

@torch.no_grad()

def evaluate(model, data_loader, device):

model.eval()

# 用于存储预测正确的样本个数

sum_num = torch.zeros(1).to(device)

# 在进程0中打印验证进度

if is_main_process():

data_loader = tqdm(data_loader)

for step, data in enumerate(data_loader):

images, labels = data

pred = model(images.to(device))

pred = torch.max(pred, dim=1)[1]

sum_num += torch.eq(pred, labels.to(device)).sum()

# 等待所有进程计算完毕

if device != torch.device("cpu"):

torch.cuda.synchronize(device)

sum_num = reduce_value(sum_num, average=False)

return sum_num.item()

④my_dataset.py 文件

自定义数据集与划分

from PIL import Image

import torch

from torch.utils.data import Dataset

class MyDataSet(Dataset):

"""自定义数据集"""

def __init__(self, images_path: list, images_class: list, transform=None):

self.images_path = images_path

self.images_class = images_class

self.transform = transform

def __len__(self):

return len(self.images_path)

def __getitem__(self, item):

img = Image.open(self.images_path[item])

# RGB为彩色图片,L为灰度图片

if img.mode != 'RGB':

raise ValueError("image: {} isn't RGB mode.".format(self.images_path[item]))

label = self.images_class[item]

if self.transform is not None:

img = self.transform(img)

return img, label

@staticmethod

def collate_fn(batch):

# 官方实现的default_collate可以参考

# https://github.com/pytorch/pytorch/blob/67b7e751e6b5931a9f45274653f4f653a4e6cdf6/torch/utils/data/_utils/collate.py

images, labels = tuple(zip(*batch))

images = torch.stack(images, dim=0)

labels = torch.as_tensor(labels)

return images, labels

⑤ plot_results.py 文件

matplotlib模块实现绘图

import math

import matplotlib.pyplot as plt

x = [0, 1, 2, 3]

y = [9, 5.5, 3, 2]

plt.bar(x, y, align='center')

plt.xticks(range(len(x)), ['One-GPU', '2 GPUs', '4 GPUs', '8 GPUs'])

plt.ylim((0, 10))

for i, v in enumerate(y):

plt.text(x=i, y=v + 0.1, s=str(v) + ' s', ha='center')

plt.xlabel('Using number of GPU device')

plt.ylabel('Training time per epoch (second)')

plt.show()

plt.close()

x = list(range(30))

no_SyncBatchNorm = [0.348, 0.495, 0.587, 0.554, 0.637,

0.622, 0.689, 0.673, 0.702, 0.717,

0.717, 0.69, 0.716, 0.696, 0.738,

0.75, 0.75, 0.66, 0.713, 0.758,

0.777, 0.777, 0.769, 0.792, 0.802,

0.807, 0.807, 0.804, 0.812, 0.811]

SyncBatchNorm = [0.283, 0.514, 0.531, 0.654, 0.671,

0.591, 0.621, 0.685, 0.701, 0.732,

0.701, 0.74, 0.667, 0.723, 0.745,

0.679, 0.738, 0.772, 0.764, 0.765,

0.764, 0.791, 0.818, 0.791, 0.807,

0.806, 0.811, 0.821, 0.833, 0.81]

plt.plot(x, no_SyncBatchNorm, label="No SyncBatchNorm")

plt.plot(x, SyncBatchNorm, label="SyncBatchNorm")

plt.xlabel('Training epochs')

plt.ylabel('Accuracy')

plt.legend()

plt.show()

plt.close()

x = list(range(30))

single_gpu = [0.569, 0.576, 0.654, 0.648, 0.609,

0.637, 0.699, 0.709, 0.715, 0.715,

0.717, 0.724, 0.722, 0.731, 0.721,

0.774, 0.751, 0.787, 0.78, 0.77,

0.763, 0.803, 0.754, 0.796, 0.799,

0.815, 0.793, 0.808, 0.811, 0.806]

plt.plot(x, single_gpu, color="black", label="Single GPU")

plt.plot(x, no_SyncBatchNorm, label="No SyncBatchNorm")

plt.plot(x, SyncBatchNorm, label="SyncBatchNorm")

plt.xlabel('Training epochs')

plt.ylabel('Accuracy')

plt.legend()

plt.show()

plt.close()

# epochs = 30

# lrf = 0.1

# lf0 = lambda x: math.cos(x * math.pi / epochs)

# lf1 = lambda x: 1 + math.cos(x * math.pi / epochs)

# lf2 = lambda x: (1 + math.cos(x * math.pi / epochs)) / 2

# lf3 = lambda x: ((1 + math.cos(x * math.pi / epochs)) / 2) * (1 - lrf) + lrf

# x = range(epochs)

# y0 = [lf0(epoch) for epoch in x]

# y1 = [lf1(epoch) for epoch in x]

# y2 = [lf2(epoch) for epoch in x]

# y3 = [lf3(epoch) for epoch in x]

# plt.subplot(2, 2, 1)

# plt.plot(x, y0)

# plt.hlines(1, 0, epochs-1, colors="r", linestyles="dashed")

# plt.hlines(-1, 0, epochs-1, colors="r", linestyles="dashed")

# plt.xlim((0, epochs-1))

#

# plt.subplot(2, 2, 2)

# plt.plot(x, y1)

# plt.hlines(2, 0, epochs-1, colors="r", linestyles="dashed")

# plt.hlines(0, 0, epochs-1, colors="r", linestyles="dashed")

# plt.xlim((0, epochs-1))

#

# plt.subplot(2, 2, 3)

# plt.plot(x, y2)

# plt.hlines(1, 0, epochs-1, colors="r", linestyles="dashed")

# plt.hlines(0, 0, epochs-1, colors="r", linestyles="dashed")

# plt.xlim((0, epochs-1))

#

# plt.subplot(2, 2, 4)

# plt.plot(x, y3)

# plt.hlines(1, 0, epochs-1, colors="r", linestyles="dashed")

# plt.hlines(lrf, 0, epochs-1, colors="r", linestyles="dashed")

# plt.text(epochs-1, y3[-1], "{}".format(round(y3[-1], 1)))

# plt.xlim((0, epochs-1))

#

# plt.show()

# plt.close()

6. utils.py 文件

import os

import json

import pickle

import random

import matplotlib.pyplot as plt

def read_split_data(root: str, val_rate: float = 0.2):

random.seed(0) # 保证随机结果可复现

assert os.path.exists(root), "dataset root: {} does not exist.".format(root)

# 遍历文件夹,一个文件夹对应一个类别

class_names = [cla for cla in os.listdir(root) if os.path.isdir(os.path.join(root, cla))]

# 排序,保证顺序一致

class_names.sort()

# 生成类别名称以及对应的数字索引

class_indices = dict((k, v) for v, k in enumerate(class_names))

json_str = json.dumps(dict((val, key) for key, val in class_indices.items()), indent=4)

with open('class_indices.json', 'w') as json_file:

json_file.write(json_str)

train_images_path = [] # 存储训练集的所有图片路径

train_images_label = [] # 存储训练集图片对应索引信息

val_images_path = [] # 存储验证集的所有图片路径

val_images_label = [] # 存储验证集图片对应索引信息

every_class_num = [] # 存储每个类别的样本总数

supported = [".jpg", ".JPG", ".png", ".PNG"] # 支持的文件后缀类型

# 遍历每个文件夹下的文件

for cla in class_names:

cla_path = os.path.join(root, cla)

# 遍历获取supported支持的所有文件路径

images = [os.path.join(root, cla, i) for i in os.listdir(cla_path)

if os.path.splitext(i)[-1] in supported]

# 获取该类别对应的索引

image_class = class_indices[cla]

# 记录该类别的样本数量

every_class_num.append(len(images))

# 按比例随机采样验证样本

val_path = random.sample(images, k=int(len(images) * val_rate))

for img_path in images:

if img_path in val_path: # 如果该路径在采样的验证集样本中则存入验证集

val_images_path.append(img_path)

val_images_label.append(image_class)

else: # 否则存入训练集

train_images_path.append(img_path)

train_images_label.append(image_class)

print("{} images were found in the dataset.".format(sum(every_class_num)))

print("{} images for training.".format(len(train_images_path)))

print("{} images for validation.".format(len(val_images_path)))

plot_image = False

if plot_image:

# 绘制每种类别个数柱状图

plt.bar(range(len(class_names)), every_class_num, align='center')

# 将横坐标0,1,2,3,4替换为相应的类别名称

plt.xticks(range(len(class_names)), class_names)

# 在柱状图上添加数值标签

for i, v in enumerate(every_class_num):

plt.text(x=i, y=v + 5, s=str(v), ha='center')

# 设置x坐标

plt.xlabel('image class')

# 设置y坐标

plt.ylabel('number of images')

# 设置柱状图的标题

plt.title('flower class distribution')

plt.show()

return [train_images_path, train_images_label], [val_images_path, val_images_label], len(class_names)

def plot_data_loader_image(data_loader):

batch_size = data_loader.batch_size

plot_num = min(batch_size, 4)

json_path = './class_indices.json'

assert os.path.exists(json_path), json_path + " does not exist."

json_file = open(json_path, 'r')

class_indices = json.load(json_file)

for data in data_loader:

images, labels = data

for i in range(plot_num):

# [C, H, W] -> [H, W, C]

img = images[i].numpy().transpose(1, 2, 0)

# 反Normalize操作

img = (img * [0.229, 0.224, 0.225] + [0.485, 0.456, 0.406]) * 255

label = labels[i].item()

plt.subplot(1, plot_num, i+1)

plt.xlabel(class_indices[str(label)])

plt.xticks([]) # 去掉x轴的刻度

plt.yticks([]) # 去掉y轴的刻度

plt.imshow(img.astype('uint8'))

plt.show()

def write_pickle(list_info: list, file_name: str):

with open(file_name, 'wb') as f:

pickle.dump(list_info, f)

def read_pickle(file_name: str) -> list:

with open(file_name, 'rb') as f:

info_list = pickle.load(f)

return info_list

Reference

①②③ ④⑤

https://www.bilibili.com/video/BV1yt4y1e7sZ