Machine Learning Notes - Semantic Segmentation with Keras + TensorFlow 2.0 + U-Net

1. Introduction to U-Net

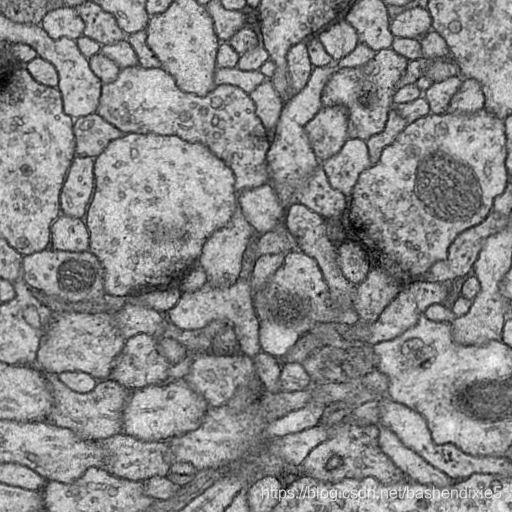

U-Net: Convolutional Networks for Biomedical Image Segmentation (MICCAI, 2015)

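In biomedical image processing, a class label must be assigned to every pixel of an image (for example, to delineate each individual cell). The biggest challenge in biomedical tasks is that thousands of annotated training images are rarely available.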

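The paper (https://arxiv.org/abs/1505.04597) builds on the fully convolutional network and modifies it so that it can be trained on very few images while still producing more precise segmentations.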

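Because so little training data is available, the model relies on data augmentation, applying elastic deformations to the available images. As shown in Figure 1 above, the network architecture consists of a contracting path on the left and an expansive path on the right.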
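The elastic deformation mentioned above can be sketched in plain NumPy. This is a minimal illustration that assumes a 2-D grayscale image. It follows the paper's description, where random displacement vectors sit on a coarse 3×3 grid and are drawn from a Gaussian with a 10-pixel standard deviation. One simplification applies: the sketch interpolates those vectors bilinearly, whereas the paper uses bicubic interpolation. The helpers `bilinear_sample` and `elastic_deform` are hypothetical and are not part of the repository used below.

```python
import numpy as np


def bilinear_sample(image, rows, cols):
    """Sample a 2-D array at fractional (rows, cols) positions, clamping at the borders."""
    h, w = image.shape
    rows = np.clip(rows, 0, h - 1)
    cols = np.clip(cols, 0, w - 1)
    r0 = np.floor(rows).astype(int)
    c0 = np.floor(cols).astype(int)
    r1 = np.minimum(r0 + 1, h - 1)
    c1 = np.minimum(c0 + 1, w - 1)
    fr, fc = rows - r0, cols - c0  # fractional offsets inside each cell
    top = image[r0, c0] * (1 - fc) + image[r0, c1] * fc
    bottom = image[r1, c0] * (1 - fc) + image[r1, c1] * fc
    return top * (1 - fr) + bottom * fr


def elastic_deform(image, grid=3, sigma=10.0, seed=None):
    """Warp a 2-D image with a smooth random displacement field (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    h, w = image.shape
    # One (dy, dx) displacement per coarse grid point, drawn from N(0, sigma^2) pixels
    coarse = rng.normal(0.0, sigma, size=(2, grid, grid))
    # Map every pixel to a fractional position on the coarse grid and interpolate
    gr, gc = np.meshgrid(np.linspace(0, grid - 1, h),
                         np.linspace(0, grid - 1, w), indexing="ij")
    dy = bilinear_sample(coarse[0], gr, gc)
    dx = bilinear_sample(coarse[1], gr, gc)
    # Pull each output pixel from its displaced source location
    rows, cols = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    return bilinear_sample(image.astype(float), rows + dy, cols + dx)
```

The displacement field depends only on the seed, so an image and its binary mask stay aligned when you warp both with the same `seed`. Re-threshold the mask afterwards, for example `elastic_deform(mask, seed=42) > 0.5`. Note that the training code in this post uses `ImageDataGenerator`'s affine augmentation rather than elastic deformation.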

2. Environment and Dataset Preparation

The code is based on TensorFlow 2, Keras and OpenCV. A GPU is recommended for training and testing.
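Before running the scripts, you can confirm the environment with a quick check. The following is a sketch; the module list and the helper names `check_modules` and `list_gpus` are assumptions for illustration. The check uses `importlib.util.find_spec` to test whether each package is importable without actually importing it. It then uses TensorFlow's `tf.config.list_physical_devices("GPU")` to see whether a GPU is visible.

```python
import importlib.util

# Top-level module names for the packages used in this post
# (cv2 = OpenCV, skimage = scikit-image)
REQUIRED_MODULES = ["tensorflow", "keras", "numpy", "skimage", "cv2"]


def check_modules(names=REQUIRED_MODULES):
    """Return {module name: True/False} depending on whether it can be imported."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def list_gpus():
    """Return the GPUs TensorFlow can see, or an empty list if TensorFlow is missing."""
    if importlib.util.find_spec("tensorflow") is None:
        return []
    import tensorflow as tf  # imported lazily so check_modules works without TensorFlow
    return tf.config.list_physical_devices("GPU")


if __name__ == "__main__":
    for name, ok in check_modules().items():
        print(f"{name:12s} {'OK' if ok else 'MISSING'}")
```

Calling `list_gpus()` afterwards returns an empty list when TensorFlow only sees the CPU. In that case training will still run, just much more slowly.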

The code and dataset are both based on the following repository (thanks to the author): https://github.com/zhixuhao/unet

Baidu Cloud link (includes the images and code):

https://pan.baidu.com/s/1AjYMTsPpcE6NNyCtqWeKhQ

Extraction code: 098k

3. Training Code

data.py - data processing and augmentation
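The core of data.py is `adjustData`, which prepares each (image, mask) pair for the loss function. The two helpers below, `binarize_pair` and `one_hot`, are hypothetical NumPy-only restatements of its two branches. They make it easy to check the logic on a toy array before running the full generator.

```python
import numpy as np


def binarize_pair(img, mask):
    """Binary branch of adjustData: scale 8-bit data to [0, 1] and threshold the mask at 0.5."""
    img = np.asarray(img, dtype=float)
    mask = np.asarray(mask, dtype=float)
    if img.max() > 1:  # only rescale data that is still in the 0-255 range
        img = img / 255
        mask = mask / 255
        mask = np.where(mask > 0.5, 1.0, 0.0)
    return img, mask


def one_hot(label_map, num_class):
    """Multi-class branch of adjustData: (H, W) class ids -> (H, W, num_class) one-hot array."""
    out = np.zeros(label_map.shape + (num_class,))
    for i in range(num_class):
        out[label_map == i, i] = 1  # the boolean index picks the pixels of class i
    return out
```

For example, a mask pixel with value 200 becomes 1 because 200/255 ≈ 0.78 > 0.5, while a pixel with value 100 becomes 0.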

```python
import glob
import os

import numpy as np
import skimage.io as io
import skimage.transform as trans
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# RGB colors used by labelVisualize in multi-class mode
Sky = [128, 128, 128]
Building = [128, 0, 0]
Pole = [192, 192, 128]
Road = [128, 64, 128]
Pavement = [60, 40, 222]
Tree = [128, 128, 0]
SignSymbol = [192, 128, 128]
Fence = [64, 64, 128]
Car = [64, 0, 128]
Pedestrian = [64, 64, 0]
Bicyclist = [0, 128, 192]
Unlabelled = [0, 0, 0]

COLOR_DICT = np.array([Sky, Building, Pole, Road, Pavement,
                       Tree, SignSymbol, Fence, Car, Pedestrian, Bicyclist, Unlabelled])


def adjustData(img, mask, flag_multi_class, num_class):
    if flag_multi_class:
        img = img / 255
        # Drop the channel axis of the mask: (N, H, W, 1) -> (N, H, W) or (H, W, 1) -> (H, W)
        mask = mask[:, :, :, 0] if len(mask.shape) == 4 else mask[:, :, 0]
        new_mask = np.zeros(mask.shape + (num_class,))
        for i in range(num_class):
            # For every pixel, find its class in the mask and convert it into a one-hot vector
            new_mask[mask == i, i] = 1
        # Flatten the spatial axes: (N, H*W, num_class) for a batch, (H*W, num_class) for one image
        if len(new_mask.shape) == 4:
            new_mask = np.reshape(new_mask, (new_mask.shape[0], new_mask.shape[1] * new_mask.shape[2], new_mask.shape[3]))
        else:
            new_mask = np.reshape(new_mask, (new_mask.shape[0] * new_mask.shape[1], new_mask.shape[2]))
        mask = new_mask
    elif np.max(img) > 1:
        # Binary case: scale to [0, 1] and binarize the mask at 0.5
        img = img / 255
        mask = mask / 255
        mask[mask > 0.5] = 1
        mask[mask <= 0.5] = 0
    return (img, mask)


def trainGenerator(batch_size, train_path, image_folder, mask_folder, aug_dict, image_color_mode="grayscale",
                   mask_color_mode="grayscale", image_save_prefix="image", mask_save_prefix="mask",
                   flag_multi_class=False, num_class=2, save_to_dir=None, target_size=(256, 256), seed=1):
    '''
    Generate images and masks at the same time.
    Use the same seed for image_datagen and mask_datagen so that image and mask get the same transformation.
    To visualize the generator's output, set save_to_dir = "your path".
    '''
    image_datagen = ImageDataGenerator(**aug_dict)
    mask_datagen = ImageDataGenerator(**aug_dict)
    image_generator = image_datagen.flow_from_directory(
        train_path,
        classes=[image_folder],
        class_mode=None,
        color_mode=image_color_mode,
        target_size=target_size,
        batch_size=batch_size,
        save_to_dir=save_to_dir,
        save_prefix=image_save_prefix,
        seed=seed)
    mask_generator = mask_datagen.flow_from_directory(
        train_path,
        classes=[mask_folder],
        class_mode=None,
        color_mode=mask_color_mode,
        target_size=target_size,
        batch_size=batch_size,
        save_to_dir=save_to_dir,
        save_prefix=mask_save_prefix,
        seed=seed)
    train_generator = zip(image_generator, mask_generator)
    for (img, mask) in train_generator:
        img, mask = adjustData(img, mask, flag_multi_class, num_class)
        yield (img, mask)


def testGenerator(test_path, num_image=30, target_size=(256, 256), flag_multi_class=False, as_gray=True):
    for i in range(num_image):
        img = io.imread(os.path.join(test_path, "%d.png" % i), as_gray=as_gray)
        img = img / 255
        img = trans.resize(img, target_size)
        # Add the channel axis (H, W) -> (H, W, 1), then the batch axis -> (1, H, W, 1)
        img = np.reshape(img, img.shape + (1,)) if (not flag_multi_class) else img
        img = np.reshape(img, (1,) + img.shape)
        yield img


def geneTrainNpy(image_path, mask_path, flag_multi_class=False, num_class=2, image_prefix="image",
                 mask_prefix="mask", image_as_gray=True, mask_as_gray=True):
    # Load every image/mask pair into memory as NumPy arrays
    image_name_arr = glob.glob(os.path.join(image_path, "%s*.png" % image_prefix))
    image_arr = []
    mask_arr = []
    for item in image_name_arr:
        img = io.imread(item, as_gray=image_as_gray)
        img = np.reshape(img, img.shape + (1,)) if image_as_gray else img
        # The mask file is found by swapping the folder and the file-name prefix
        mask = io.imread(item.replace(image_path, mask_path).replace(image_prefix, mask_prefix), as_gray=mask_as_gray)
        mask = np.reshape(mask, mask.shape + (1,)) if mask_as_gray else mask
        img, mask = adjustData(img, mask, flag_multi_class, num_class)
        image_arr.append(img)
        mask_arr.append(mask)
    image_arr = np.array(image_arr)
    mask_arr = np.array(mask_arr)
    return image_arr, mask_arr


def labelVisualize(num_class, color_dict, img):
    img = img[:, :, 0] if len(img.shape) == 3 else img
    img_out = np.zeros(img.shape + (3,))
    for i in range(num_class):
        img_out[img == i, :] = color_dict[i]
    return img_out / 255


def saveResult(save_path, npyfile, flag_multi_class=False, num_class=2):
    for i, item in enumerate(npyfile):
        img = labelVisualize(num_class, COLOR_DICT, item) if flag_multi_class else item[:, :, 0]
        # Convert the [0, 1] float image to 8-bit explicitly before writing the PNG
        img = (np.clip(img, 0, 1) * 255).astype(np.uint8)
        io.imsave(os.path.join(save_path, "%d_predict.png" % i), img)
```

model.py - the model

```python
# ModelCheckpoint / LearningRateScheduler are also picked up by "from model import *"
from tensorflow.keras.callbacks import ModelCheckpoint, LearningRateScheduler
from tensorflow.keras.layers import Conv2D, Dropout, Input, MaxPooling2D, UpSampling2D, concatenate
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam


def unet(pretrained_weights=None, input_size=(256, 256, 1)):
    inputs = Input(input_size)

    # Contracting path: two 3x3 convolutions per level, then 2x2 max pooling
    conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(inputs)
    conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv1)
    pool1 = MaxPooling2D(pool_size=(2, 2))(conv1)
    conv2 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool1)
    conv2 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv2)
    pool2 = MaxPooling2D(pool_size=(2, 2))(conv2)
    conv3 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool2)
    conv3 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv3)
    pool3 = MaxPooling2D(pool_size=(2, 2))(conv3)
    conv4 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool3)
    conv4 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv4)
    drop4 = Dropout(0.5)(conv4)
    pool4 = MaxPooling2D(pool_size=(2, 2))(drop4)

    # Bottleneck
    conv5 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool4)
    conv5 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv5)
    drop5 = Dropout(0.5)(conv5)

    # Expansive path: upsample, 2x2 convolution, then concatenate with the matching encoder features (skip connection)
    up6 = Conv2D(512, 2, activation='relu', padding='same', kernel_initializer='he_normal')(UpSampling2D(size=(2, 2))(drop5))
    merge6 = concatenate([drop4, up6], axis=3)
    conv6 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge6)
    conv6 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv6)
    up7 = Conv2D(256, 2, activation='relu', padding='same', kernel_initializer='he_normal')(UpSampling2D(size=(2, 2))(conv6))
    merge7 = concatenate([conv3, up7], axis=3)
    conv7 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge7)
    conv7 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv7)
    up8 = Conv2D(128, 2, activation='relu', padding='same', kernel_initializer='he_normal')(UpSampling2D(size=(2, 2))(conv7))
    merge8 = concatenate([conv2, up8], axis=3)
    conv8 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge8)
    conv8 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv8)
    up9 = Conv2D(64, 2, activation='relu', padding='same', kernel_initializer='he_normal')(UpSampling2D(size=(2, 2))(conv8))
    merge9 = concatenate([conv1, up9], axis=3)
    conv9 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge9)
    conv9 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv9)
    conv9 = Conv2D(2, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv9)
    # 1x1 convolution with sigmoid: one foreground probability per pixel
    conv10 = Conv2D(1, 1, activation='sigmoid')(conv9)

    model = Model(inputs=inputs, outputs=conv10)
    model.compile(optimizer=Adam(learning_rate=1e-4), loss='binary_crossentropy', metrics=['accuracy'])
    # model.summary()

    if pretrained_weights:
        model.load_weights(pretrained_weights)

    return model
```
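The model above halves the feature-map size four times on the contracting path (256 to 16) while doubling the number of filters (64 to 1024). It then reverses this on the expansive path, concatenating each upsampled map with the encoder map of the same size. The paper uses unpadded convolutions, so its output (388×388) is smaller than its input (572×572). This implementation instead uses `padding='same'`, which keeps the size constant within each level. The hypothetical helper `unet_shapes` below only does the shape bookkeeping in plain Python, assuming that `'same'`-padded layout. It makes one practical constraint visible: each input side must be divisible by 2^4 = 16. Otherwise pooling floors an odd size and a skip concatenation no longer lines up.

```python
def unet_shapes(height, width, base_filters=64, depth=4):
    """List (layer name, H, W, channels) for each level of the U-Net in model.py."""
    if height % 2 ** depth or width % 2 ** depth:
        # Pooling would floor an odd size, and the skip concatenations would not line up
        raise ValueError(f"input size must be divisible by {2 ** depth}")
    shapes = []
    h, w, f = height, width, base_filters
    for level in range(1, depth + 1):  # contracting path: conv1..conv4
        shapes.append((f"conv{level}", h, w, f))
        h, w, f = h // 2, w // 2, f * 2
    shapes.append((f"conv{depth + 1}", h, w, f))  # bottleneck: conv5
    for level in range(depth + 2, 2 * depth + 2):  # expansive path: conv6..conv9
        h, w, f = h * 2, w * 2, f // 2
        shapes.append((f"conv{level}", h, w, f))
    return shapes


if __name__ == "__main__":
    for name, h, w, c in unet_shapes(256, 256):
        print(f"{name}: {h}x{w}x{c}")
```

For the default 256×256 input, this lists conv5 at 16×16×1024 and conv9 back at 256×256×64, matching the layers in model.py.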

main.py - training script

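```python
import os

# Let TensorFlow allocate GPU memory on demand instead of reserving it all up front
os.environ['TF_FORCE_GPU_ALLOW_GROWTH'] = "true"
# os.environ["CUDA_VISIBLE_DEVICES"] = "0"

from tensorflow.keras.callbacks import ModelCheckpoint

from data import trainGenerator
from model import unet

# Augmentation settings for ImageDataGenerator (note: rotation_range is in degrees)
data_gen_args = dict(rotation_range=0.2,
                     width_shift_range=0.05,
                     height_shift_range=0.05,
                     shear_range=0.05,
                     zoom_range=0.05,
                     horizontal_flip=True,
                     fill_mode='nearest')

# Batch size 2; images in data/membrane/train/image, masks in data/membrane/train/label
myGene = trainGenerator(2, 'data/membrane/train', 'image', 'label', data_gen_args, save_to_dir=None)

model = unet()
# Save the model whenever the training loss improves
model_checkpoint = ModelCheckpoint('unet_membrane.hdf5', monitor='loss', verbose=1, save_best_only=True)
# In TensorFlow 2.x, fit() accepts generators directly (fit_generator is deprecated)
model.fit(myGene, steps_per_epoch=2000, epochs=5, callbacks=[model_checkpoint])
```

test.py - testing and converting the .h5 model to .pb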

```python
import tensorflow as tf
from tensorflow.keras.models import load_model
from tensorflow.python.framework.convert_to_constants import convert_variables_to_constants_v2

from data import saveResult, testGenerator


def test():
    # Load the trained .hdf5 model (use the file name your ModelCheckpoint wrote)
    model = load_model("unet_meiyan.hdf5")
    testGene = testGenerator("data/membrane/test_m")
    results = model.predict(testGene, steps=18, verbose=1)
    saveResult("data/membrane/result_m", results)


def h5_to_pb():
    # compile=False: only the graph and the weights are needed for export
    model = tf.keras.models.load_model('unet_meiyan.hdf5', compile=False)
    model.summary()

    # Wrap the model in a tf.function and trace it for a fixed input signature
    full_model = tf.function(lambda x: model(x))
    full_model = full_model.get_concrete_function(tf.TensorSpec(model.inputs[0].shape, tf.float32))

    # Get frozen ConcreteFunction (variables folded into constants)
    frozen_func = convert_variables_to_constants_v2(full_model)

    layers = [op.name for op in frozen_func.graph.get_operations()]
    print("-" * 50)
    print("Frozen model layers: ")
    for layer in layers:
        print(layer)
    print("-" * 50)
    print("Frozen model inputs: ")
    print(frozen_func.inputs)
    print("Frozen model outputs: ")
    print(frozen_func.outputs)

    # Save frozen graph from frozen ConcreteFunction to hard drive
    tf.io.write_graph(graph_or_graph_def=frozen_func.graph,
                      logdir="D:\\", name="unet_meiyan.pb", as_text=False)


if __name__ == "__main__":
    # h5_to_pb()
    test()
```

4. Prediction with the Model Loaded in OpenCV
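The C# (OpenCvSharp) code in this section is the original approach. For readers who stay in Python, the same steps can be sketched with OpenCV's Python bindings (`cv2.dnn.readNetFromTensorflow`, `cv2.dnn.blobFromImage`). The sketch assumes the output comes back as (1, 1, H, W), which is the layout the C# `Reshape` call assumes. It also makes one deliberate change: unlike the C# code, which displays the scaled probabilities, it thresholds them at 0.5, the same cutoff `adjustData` uses for training masks. The helper names `probabilities_to_mask` and `predict` are hypothetical.

```python
import numpy as np


def probabilities_to_mask(prob, threshold=0.5):
    """Turn a network output of shape (1, 1, H, W) into an 8-bit mask with values 0 or 255."""
    prob = np.asarray(prob)
    prob = prob.reshape(prob.shape[-2:])  # drop the batch and channel axes
    return np.where(prob > threshold, 255, 0).astype(np.uint8)


def predict(pb_path, image_path, size=(256, 256)):
    """Run the frozen U-Net graph on one grayscale image with OpenCV's DNN module."""
    import cv2  # local import: probabilities_to_mask stays usable without OpenCV installed

    net = cv2.dnn.readNetFromTensorflow(pb_path)
    frame = cv2.imread(image_path, cv2.IMREAD_GRAYSCALE)  # the model was trained on 1-channel input
    if frame is None:
        raise FileNotFoundError(image_path)
    # Scale pixels to [0, 1] and resize to the network input; the blob layout is NCHW
    blob = cv2.dnn.blobFromImage(frame, scalefactor=1.0 / 255, size=size,
                                 mean=(0, 0, 0), swapRB=False, crop=False)
    net.setInput(blob)
    return probabilities_to_mask(net.forward())
```

Usage, with placeholder file names: `mask = predict("unet.pb", "1.png")`, then `cv2.imwrite("1_mask.png", mask)`.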

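```csharp
using System;
using System.Diagnostics;
using OpenCvSharp;

// Load the frozen graph exported by h5_to_pb()
OpenCvSharp.Dnn.Net net = OpenCvSharp.Dnn.CvDnn.ReadNetFromTensorflow("C://unet.pb");

Mat frame = new Mat("C://1.png");
// The network was trained on single-channel images
if (frame.Channels() > 1)
    frame = frame.CvtColor(ColorConversionCodes.BGR2GRAY);
Cv2.Resize(frame, frame, new OpenCvSharp.Size(512, 512));

// Scale to [0, 1] and resize to the 256x256 network input; the blob layout is NCHW
Mat blob = OpenCvSharp.Dnn.CvDnn.BlobFromImage(frame, 1.0 / 255, new OpenCvSharp.Size(256, 256), new Scalar(), false, false);
net.SetInput(blob);

Stopwatch sw = new Stopwatch();
sw.Start();
Mat prob = net.Forward(/*outNames[0]*/);
sw.Stop();
Console.WriteLine($"Runtime:{sw.ElapsedMilliseconds} ms");

// The output is 1x1x256x256; reshape it to a single-channel 256x256 matrix
Mat p = prob.Reshape(1, prob.Size(2));
Mat res = new Mat(p.Size(), MatType.CV_8UC1, Scalar.All(255));
for (int h = 0; h < p.Rows; h++)
{
    for (int w = 0; w < p.Cols; w++)
    {
        // Map the [0, 1] sigmoid probability to a gray level (0-100)
        res.Set<byte>(h, w, (byte)(p.At<float>(h, w) * 100));
    }
}

Cv2.ImShow("res", res);
Cv2.WaitKey();
```

5. Other References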

Machine Learning Notes - Image Segmentation with Keras + U-Net (bashendixie5's CSDN blog): https://blog.csdn.net/bashendixie5/article/details/123222692. It describes U-Net as a semantic segmentation technique originally proposed for medical imaging and one of the earlier deep-learning segmentation models, whose architecture is also used in GAN variants such as the Pix2Pix generator. The model is an encoder (downsampling) and a decoder (upsampling) joined by skip connections; its U shape gives it its name.