Implementing Common Convolutional Neural Network Models in Keras (LeNet-5, AlexNet, VGG16)

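Table of Contents

- 1. Preparing the Test Data
- 2. Building the LeNet-5 Model
  - 2.1 Overview of the LeNet-5 Architecture
  - 2.2 Building the LeNet-5 Model
  - 2.3 Test Results
- 3. Building the AlexNet Model
  - 3.1 Overview of the AlexNet Architecture
  - 3.2 Building the AlexNet Model
  - 3.3 Test Results
- 4. Building the VGG16 Model
  - 4.1 Overview of the VGG16 Architecture
  - 4.2 Building the VGG16 Model
  - 4.3 Test Results
- 5. Notes
- Summary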

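When I put together a convolutional neural network of my own, the results were disappointing even after tuning. That made it worth learning how the commonly used architectures are built. The structures may not look difficult, but building them by hand gives a much deeper understanding.

Keras is more highly encapsulated than other deep learning frameworks, which in my view makes it easier for beginners, so this article builds LeNet-5, AlexNet, and VGG16 with Keras.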

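1. Preparing the Test Data

Keras's built-in MNIST dataset serves as the test data. It is used only to check that each network runs; the results say nothing about how good one architecture is relative to another. The code is as follows: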

from keras.datasets import mnist  # Keras's built-in MNIST dataset
from keras.utils import to_categorical

def load_mnist_data():  # returns the training and validation sets
    (train_data, train_label), (test_data, test_label) = mnist.load_data()
    # add a single grayscale channel axis and scale pixel values to [0, 1]
    train_data = train_data.reshape((60000, 28, 28, 1))
    train_data = train_data.astype('float32') / 255
    test_data = test_data.reshape((10000, 28, 28, 1))
    test_data = test_data.astype('float32') / 255
    # one-hot encode the digit labels 0-9 into vectors of length 10
    train_label = to_categorical(train_label)
    test_label = to_categorical(test_label)
    return (train_data, train_label), (test_data, test_label)
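`to_categorical` turns each integer label into a one-hot vector, and the `/ 255` step maps pixel intensities into [0, 1]. The pure-Python sketch below mimics both steps on plain lists so the transformation is visible without loading MNIST. The helper names `one_hot` and `scale_pixels` are hypothetical and not Keras APIs. When `num_classes` is omitted it is inferred as the largest label plus one, which is also how `to_categorical` behaves.

```python
def one_hot(labels, num_classes=None):
    """Pure-Python stand-in for keras.utils.to_categorical on a list of ints."""
    if num_classes is None:
        # infer the class count as max(label) + 1, like to_categorical
        num_classes = max(labels) + 1
    rows = []
    for label in labels:
        row = [0.0] * num_classes
        row[label] = 1.0  # a single 1 at the label's index
        rows.append(row)
    return rows

def scale_pixels(pixels):
    """Map uint8 intensities 0..255 to floats in [0, 1], as the / 255 step does."""
    return [p / 255 for p in pixels]

print(one_hot([3, 0, 9])[0])  # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```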

2. Building the LeNet-5 Model

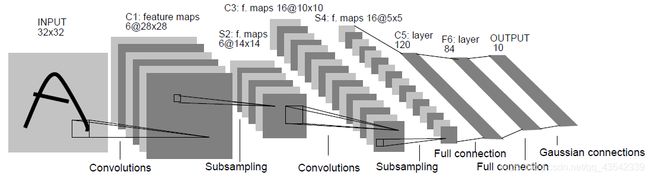

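2.1 Overview of the LeNet-5 Architecture

- Convolutional layer Conv_1: 6 kernels of size 5×5, 'relu' activation
- Pooling layer Pooling_1: 2×2 max pooling
- Convolutional layer Conv_2: 16 kernels of size 5×5, 'relu' activation
- Pooling layer Pooling_2: 2×2 max pooling
- Convolutional layer Conv_3: 120 kernels of size 3×3, 'relu' activation
- Flatten layer: flattens the input into one dimension
- Fully connected layer Dense_1: 84 nodes, 'relu' activation
- Fully connected layer Dense_2 (output layer): 10 nodes (one per class), 'softmax' activation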
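The layer sizes above can be checked by tracing the spatial size of the feature maps. With Keras's default `padding='valid'`, a k×k convolution with stride s maps an n×n input to floor((n − k)/s) + 1, and a 2×2 max pooling halves the size (rounding down). The sketch below uses hypothetical helper names and shows why Conv_3 uses 3×3 kernels here. The original LeNet-5 took 32×32 inputs, where a 5×5 Conv_3 yields 1×1 maps. On 28×28 MNIST images only a 4×4 map is left by that point, so a 5×5 kernel no longer fits.

```python
def conv_valid(n, k, s=1):
    # padding='valid' (the Keras default): floor((n - k) / s) + 1
    return (n - k) // s + 1

def pool(n, p, s=None):
    # MaxPooling2D with padding='valid'; strides default to the pool size
    s = p if s is None else s
    return (n - p) // s + 1

def trace_lenet(n=28, conv3_kernel=3):
    """Return the feature-map side length after each layer up to Conv_3."""
    sizes = [n]
    n = conv_valid(n, 5); sizes.append(n)             # Conv_1: 6 x 5x5
    n = pool(n, 2); sizes.append(n)                   # Pooling_1
    n = conv_valid(n, 5); sizes.append(n)             # Conv_2: 16 x 5x5
    n = pool(n, 2); sizes.append(n)                   # Pooling_2
    n = conv_valid(n, conv3_kernel); sizes.append(n)  # Conv_3: 120 filters
    return sizes

print(trace_lenet())                # [28, 24, 12, 8, 4, 2]
print(trace_lenet(conv3_kernel=5))  # [28, 24, 12, 8, 4, 0]: the layer cannot be built
print(trace_lenet(32, 5))           # [32, 28, 14, 10, 5, 1]: the original LeNet-5 setting
```

With the 3×3 Conv_3, Flatten therefore receives 2 × 2 × 120 = 480 values.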

2.2 Building the LeNet-5 Model

The code is as follows:

from keras import models
from keras import layers

def create_Lenet():
    model = models.Sequential()
    # Conv_1: 6 filters of 5x5, 28x28x1 -> 24x24x6
    model.add(layers.Conv2D(filters=6, kernel_size=(5, 5), use_bias=True, activation='relu', input_shape=(28, 28, 1)))
    model.add(layers.MaxPooling2D(pool_size=(2, 2)))  # Pooling_1: 24x24 -> 12x12
    # Conv_2: 16 filters of 5x5, 12x12 -> 8x8
    model.add(layers.Conv2D(filters=16, kernel_size=(5, 5), use_bias=True, activation='relu'))
    model.add(layers.MaxPooling2D(pool_size=(2, 2)))  # Pooling_2: 8x8 -> 4x4
    # Conv_3: 120 filters of 3x3, 4x4 -> 2x2
    model.add(layers.Conv2D(filters=120, kernel_size=(3, 3), use_bias=True, activation='relu'))
    model.add(layers.Flatten())                        # 2*2*120 = 480 features
    model.add(layers.Dense(84, activation='relu'))     # Dense_1
    model.add(layers.Dense(10, activation='softmax'))  # Dense_2: one probability per class
    model.compile(optimizer='rmsprop',
                  loss='categorical_crossentropy',
                  metrics=['acc'])
    return model

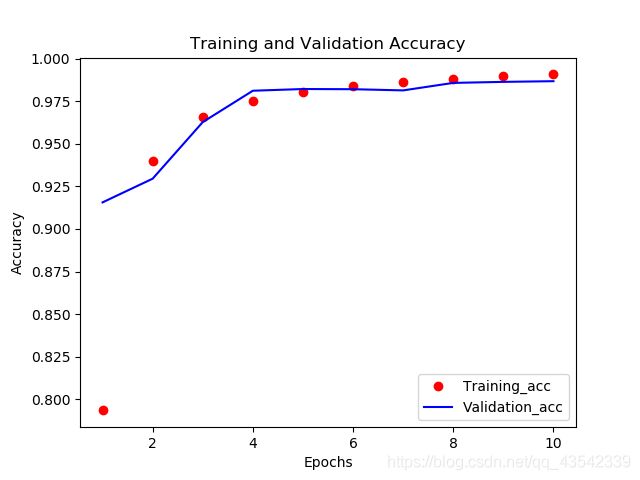
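As a cross-check on the architecture, each layer's parameter count can be worked out by hand. A Conv2D layer has (k·k·c_in + 1)·filters parameters (one bias per filter), a Dense layer has n_in·n_out + n_out, and pooling and Flatten layers have none. The helper names below are illustrative. The total should agree with what `model.summary()` reports for `create_Lenet()`.

```python
def conv_params(k, c_in, filters):
    # one k x k x c_in kernel plus one bias per filter
    return (k * k * c_in + 1) * filters

def dense_params(n_in, n_out):
    # weight matrix plus bias vector
    return n_in * n_out + n_out

lenet_layers = {
    "conv_1": conv_params(5, 1, 6),
    "conv_2": conv_params(5, 6, 16),
    "conv_3": conv_params(3, 16, 120),
    "dense_1": dense_params(2 * 2 * 120, 84),  # Flatten output: 480 values
    "dense_2": dense_params(84, 10),
}
total = sum(lenet_layers.values())
print(lenet_layers)
print(total)  # 61226
```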

2.3 Test Results

After 10 epochs, the validation loss fell to 0.0398 and the validation accuracy reached 98.67%.

3. Building the AlexNet Model

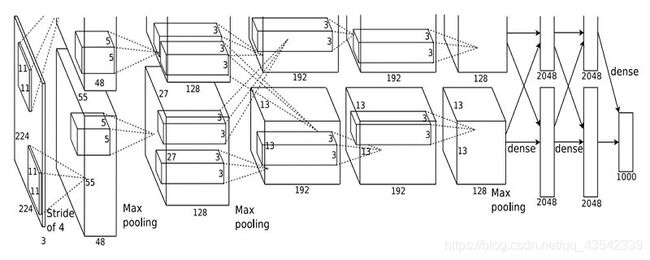

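3.1 Overview of the AlexNet Architecture

The original AlexNet is split into two branches trained on two GPUs. Because of limited hardware, only one branch is used here.

The rough structure of AlexNet is as follows: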

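- Convolutional layer Conv_1: 48 kernels of size 11×11, stride 4, 'relu' activation
- Batch normalization BN_1
- Convolutional layer Conv_2: 128 kernels of size 5×5, 'relu' activation
- Batch normalization BN_2
- Convolutional layer Conv_3: 192 kernels of size 3×3, 'relu' activation
- Convolutional layer Conv_4: 192 kernels of size 3×3, 'relu' activation
- Convolutional layer Conv_5: 128 kernels of size 3×3, 'relu' activation
- Pooling layer Pooling_1: 3×3 max pooling with stride 2
- Flatten layer: flattens the input into one dimension
- Fully connected layer Dense_1: 64 nodes, 'relu' activation
- Dropout layer (rate 0.5): reduces overfitting
- Fully connected layer Dense_2: 64 nodes, 'relu' activation
- Dropout layer (rate 0.5): reduces overfitting
- Fully connected layer Dense_3 (output layer): 10 nodes (one per class), 'softmax' activation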
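BN_1 and BN_2 normalize activations using mini-batch statistics. The original AlexNet used local response normalization at these positions, and this article substitutes batch normalization. The pure-Python sketch below shows the training-mode computation for a single feature: y = γ·(x − mean)/√(var + ε) + β. It is an illustration under simplifying assumptions, not the Keras implementation. For a convolutional layer, Keras computes these statistics per channel across the batch and both spatial axes, and at inference it uses moving averages instead. ε = 1e-3 follows the Keras `BatchNormalization` default.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-3):
    """Training-mode batch normalization of one feature over a mini-batch.

    gamma (scale) and beta (shift) are learned in a real layer; here they are
    fixed arguments. eps=1e-3 follows the Keras BatchNormalization default.
    """
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)  # population variance
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]

out = batch_norm([1.0, 2.0, 3.0, 4.0])
print(out)  # zero mean, variance slightly below 1 because of eps
```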

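(Note: in the original AlexNet, Conv_1 and Conv_2 are each followed by a pooling layer. Because the input images here are so small, keeping those layers would shrink the feature maps below the size of the pooling window: 28×28 becomes 7×7 after the stride-4 Conv_1, then 3×3 and 1×1 after the two extra poolings, so the final pooling layer could no longer be applied. The first two pooling layers were therefore removed.)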
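The sketch below traces the spatial size using the padding rules of the AlexNet code. `padding='same'` gives ceil(n/stride) regardless of kernel size, and the pooling layer (3×3 window, stride 2, default `'valid'` padding) gives floor((n − 3)/2) + 1. A size of 0 means the layer cannot be applied. The function names are hypothetical.

```python
import math

def conv_same(n, s=1):
    # padding='same': output side is ceil(n / stride), whatever the kernel size
    return math.ceil(n / s)

def pool_valid(n, p=3, s=2):
    # MaxPooling2D(pool_size=(3, 3), strides=2) with padding='valid'
    return (n - p) // s + 1

def trace_alexnet(n=28, keep_early_pools=False):
    """Feature-map side length after each layer of the one-branch AlexNet."""
    sizes = []
    n = conv_same(n, 4); sizes.append(n)       # Conv_1, stride 4
    if keep_early_pools:
        n = pool_valid(n); sizes.append(n)     # pooling after Conv_1 (removed in the article)
    n = conv_same(n); sizes.append(n)          # Conv_2
    if keep_early_pools:
        n = pool_valid(n); sizes.append(n)     # pooling after Conv_2 (removed in the article)
    for _ in range(3):
        n = conv_same(n); sizes.append(n)      # Conv_3 .. Conv_5
    n = pool_valid(n); sizes.append(n)         # final Pooling_1
    return sizes

print(trace_alexnet())                       # [7, 7, 7, 7, 7, 3]
print(trace_alexnet(keep_early_pools=True))  # [7, 3, 3, 1, 1, 1, 1, 0]
```

In the version actually used, Flatten receives 3 × 3 × 128 = 1152 values.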

3.2 Building the AlexNet Model

The code is as follows:

def create_Alexnet():
    model = models.Sequential()
    # Conv_1: 48 filters of 11x11, stride 4, 28x28x1 -> 7x7x48
    model.add(layers.Conv2D(filters=48, padding='same', kernel_size=(11, 11), strides=4, activation='relu', input_shape=(28, 28, 1)))
    # model.add(layers.MaxPooling2D(pool_size=(3, 3), strides=2))  # removed: input too small
    model.add(layers.BatchNormalization())  # BN_1
    # Conv_2: 128 filters of 5x5
    model.add(layers.Conv2D(filters=128, padding='same', kernel_size=(5, 5), activation='relu'))
    # model.add(layers.MaxPooling2D(pool_size=(3, 3), strides=2))  # removed: input too small
    model.add(layers.BatchNormalization())  # BN_2
    # Conv_3 to Conv_5: 3x3 filters; 'same' padding keeps the 7x7 size
    model.add(layers.Conv2D(filters=192, padding='same', kernel_size=(3, 3), activation='relu'))
    model.add(layers.Conv2D(filters=192, padding='same', kernel_size=(3, 3), activation='relu'))
    model.add(layers.Conv2D(filters=128, padding='same', kernel_size=(3, 3), activation='relu'))
    model.add(layers.MaxPooling2D(pool_size=(3, 3), strides=2))  # Pooling_1: 7x7 -> 3x3
    model.add(layers.Flatten())                        # 3*3*128 = 1152 features
    model.add(layers.Dense(64, activation='relu'))     # Dense_1
    model.add(layers.Dropout(0.5))
    model.add(layers.Dense(64, activation='relu'))     # Dense_2
    model.add(layers.Dropout(0.5))
    model.add(layers.Dense(10, activation='softmax'))  # Dense_3 (output)
    model.compile(optimizer='rmsprop',
                  loss='categorical_crossentropy',
                  metrics=['acc'])
    return model

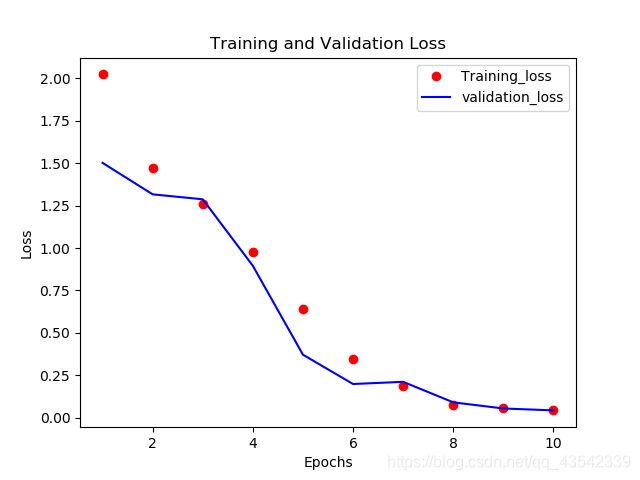
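Both Dropout(0.5) layers above act only during training. According to the Keras documentation, Dropout sets each input to 0 with probability `rate` and scales the remaining inputs by 1/(1 − rate), which keeps the expected activation unchanged. At inference it passes the input through untouched. This scheme is usually called inverted dropout. The pure-Python sketch below is an illustration of that rule, not Keras code, and the function name is hypothetical.

```python
import random

def dropout(values, rate=0.5, training=True, rng=None):
    """Inverted dropout on a list of activations.

    Training: zero each value with probability `rate`, scale survivors by
    1 / (1 - rate). Inference: return the values unchanged.
    """
    if not training or rate == 0.0:
        return list(values)
    rng = rng or random.Random()
    scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else v * scale for v in values]

out = dropout([1.0] * 8, rate=0.5, rng=random.Random(0))
print(out)  # every entry is either 0.0 or 2.0
```

The scaling is why nothing needs to change when the trained model is used for prediction.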

3.3 Test Results

After 10 epochs, the validation loss fell to 0.0832 and the validation accuracy reached 98.70%.

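4. Building the VGG16 Model

4.1 Overview of the VGG16 Architecture

The rough structure of VGG16 is as follows. It has 2 + 2 + 3 + 3 + 3 + 3 = 16 weight layers: 13 convolutional and 3 fully connected.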

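- Group1:
  - Convolutional layer Conv_1_1: 16 kernels of size 3×3, 'relu' activation
  - Convolutional layer Conv_1_2: 16 kernels of size 3×3, 'relu' activation
  - Pooling layer Pooling_1: 2×2 max pooling
- Group2:
  - Convolutional layer Conv_2_1: 32 kernels of size 3×3, 'relu' activation
  - Convolutional layer Conv_2_2: 32 kernels of size 3×3, 'relu' activation
  - Pooling layer Pooling_2: 2×2 max pooling
- Group3:
  - Convolutional layer Conv_3_1: 64 kernels of size 3×3, 'relu' activation
  - Convolutional layer Conv_3_2: 64 kernels of size 3×3, 'relu' activation
  - Convolutional layer Conv_3_3: 64 kernels of size 3×3, 'relu' activation
  - Pooling layer Pooling_3: 2×2 max pooling
- Group4:
  - Convolutional layer Conv_4_1: 128 kernels of size 3×3, 'relu' activation
  - Convolutional layer Conv_4_2: 128 kernels of size 3×3, 'relu' activation
  - Convolutional layer Conv_4_3: 128 kernels of size 3×3, 'relu' activation
  - Pooling layer Pooling_4: 2×2 max pooling
- Group5:
  - Convolutional layer Conv_5_1: 256 kernels of size 3×3, 'relu' activation
  - Convolutional layer Conv_5_2: 256 kernels of size 3×3, 'relu' activation
  - Convolutional layer Conv_5_3: 256 kernels of size 3×3, 'relu' activation
  - Flatten layer: flattens the input into one dimension
- Group6:
  - Fully connected layer Dense_6_1: 1024 nodes, 'relu' activation
  - Fully connected layer Dense_6_2: 1024 nodes, 'relu' activation
  - Fully connected layer Dense_6_3 (output layer): 10 nodes, 'softmax' activation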

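(Note: for this run, the number of kernels in every layer is smaller than in the original VGG16, and the fully connected layers shrink from 4096 to 1024 nodes. The MNIST dataset hardly needs that many. The original's fifth pooling layer is also omitted, because after four 2×2 poolings the 28×28 input is already down to 1×1.)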
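In this VGG variant, every convolution uses `padding='same'` and stride 1, so only the 2×2 poolings change the spatial size. Each one maps n to floor(n/2) under the default `'valid'` padding. The sketch below, with hypothetical names, traces the side length and shows why a fifth pooling layer has nothing left to pool on 28×28 inputs. By comparison, the original VGG16 received 224×224 images and still had 7×7 maps after five poolings.

```python
def pool2(n):
    # MaxPooling2D(2, 2) with padding='valid': floor((n - 2) / 2) + 1 == floor(n / 2)
    return (n - 2) // 2 + 1

def trace_vgg(n=28, num_pools=4):
    """Side length of the feature maps after each pooling layer."""
    sizes = [n]
    for _ in range(num_pools):
        # the 3x3 'same' convolutions in each group leave n unchanged
        n = pool2(n)
        sizes.append(n)
    return sizes

print(trace_vgg())             # [28, 14, 7, 3, 1]
print(trace_vgg(num_pools=5))  # [28, 14, 7, 3, 1, 0]: a fifth pooling cannot be applied
print(trace_vgg(224, 5))       # [224, 112, 56, 28, 14, 7]: the original VGG16 input
```

For this model, Flatten therefore passes only 1 × 1 × 256 = 256 values to Dense_6_1.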

4.2 Building the VGG16 Model

The code is as follows:

def create_VGGnet():
    model = models.Sequential()
    # Group1: two 3x3 convs with 16 filters; 'same' padding keeps 28x28
    model.add(layers.Conv2D(16, (3, 3), activation='relu', padding='same', input_shape=(28, 28, 1)))
    model.add(layers.Conv2D(16, (3, 3), activation='relu', padding='same'))
    model.add(layers.MaxPooling2D(2, 2))  # 28x28 -> 14x14
    # Group2: two convs with 32 filters
    model.add(layers.Conv2D(32, (3, 3), activation='relu', padding='same'))
    model.add(layers.Conv2D(32, (3, 3), activation='relu', padding='same'))
    model.add(layers.MaxPooling2D(2, 2))  # 14x14 -> 7x7
    # Group3: three convs with 64 filters
    model.add(layers.Conv2D(64, (3, 3), activation='relu', padding='same'))
    model.add(layers.Conv2D(64, (3, 3), activation='relu', padding='same'))
    model.add(layers.Conv2D(64, (3, 3), activation='relu', padding='same'))
    model.add(layers.MaxPooling2D(2, 2))  # 7x7 -> 3x3
    # Group4: three convs with 128 filters
    model.add(layers.Conv2D(128, (3, 3), activation='relu', padding='same'))
    model.add(layers.Conv2D(128, (3, 3), activation='relu', padding='same'))
    model.add(layers.Conv2D(128, (3, 3), activation='relu', padding='same'))
    model.add(layers.MaxPooling2D(2, 2))  # 3x3 -> 1x1
    # Group5: three convs with 256 filters, no pooling afterwards
    model.add(layers.Conv2D(256, (3, 3), activation='relu', padding='same'))
    model.add(layers.Conv2D(256, (3, 3), activation='relu', padding='same'))
    model.add(layers.Conv2D(256, (3, 3), activation='relu', padding='same'))
    model.add(layers.Flatten())  # 1*1*256 = 256 features
    # Group6: fully connected layers
    model.add(layers.Dense(1024, activation='relu'))
    model.add(layers.Dense(1024, activation='relu'))
    model.add(layers.Dense(10, activation='softmax'))
    model.compile(optimizer='rmsprop',
                  loss='categorical_crossentropy',
                  metrics=['acc'])
    return model
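The code above builds every convolution from 3×3 kernels. The usual argument for this, made in the VGG paper, is that a stack of n stride-1 3×3 convolutions sees the same (2n + 1)×(2n + 1) input region as a single large kernel, adds more ReLU nonlinearities, and needs fewer weights. The sketch below checks the weight arithmetic under stated assumptions: C input and C output channels in every layer, biases ignored, and C = 64 chosen arbitrarily. The function names are hypothetical.

```python
def stacked_receptive_field(num_layers, k=3):
    # each extra stride-1 k x k convolution widens the field by k - 1
    return 1 + num_layers * (k - 1)

def conv_weights(k, channels, num_layers=1):
    # weights only (no biases), assuming C input and C output channels per layer
    return num_layers * k * k * channels * channels

C = 64
for n in (2, 3):
    rf = stacked_receptive_field(n)
    stacked = conv_weights(3, C, n)
    single = conv_weights(rf, C)
    print(f"{n} x 3x3 -> {rf}x{rf} field: {stacked} vs {single} weights")
# 2 x 3x3 -> 5x5 field: 73728 vs 102400 weights
# 3 x 3x3 -> 7x7 field: 110592 vs 200704 weights
```

In general, n stacked 3×3 layers cost 9n·C² weights, while a single (2n + 1)×(2n + 1) layer costs (2n + 1)²·C².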

4.3 Test Results

After 10 epochs, the validation loss fell to 0.0424 and the validation accuracy reached 99.05%.

5. Notes

The plotting code and the main function are given below.

import matplotlib.pyplot as plt

def plot_results(history):
    history_dict = history.history
    # training and validation loss
    loss_values = history_dict['loss']
    val_loss_values = history_dict['val_loss']
    epochs = range(1, len(loss_values) + 1)
    plt.plot(epochs, loss_values, 'ro', label='Training_loss')        # red dots
    plt.plot(epochs, val_loss_values, 'b', label='validation_loss')   # blue line
    plt.title('Training and Validation Loss')
    plt.xlabel('Epochs')
    plt.ylabel('Loss')
    plt.legend()
    plt.show()
    # training and validation accuracy
    plt.clf()
    acc = history_dict['acc']  # key named after metrics=['acc'] in compile()
    val_acc = history_dict['val_acc']
    plt.plot(epochs, acc, 'ro', label='Training_acc')
    plt.plot(epochs, val_acc, 'b', label='Validation_acc')
    plt.title('Training and Validation Accuracy')
    plt.xlabel('Epochs')
    plt.ylabel('Accuracy')
    plt.legend()
    plt.show()

if __name__ == '__main__':
    (train_data, train_label), (test_data, test_label) = load_mnist_data()
    # uncomment the model you want to train; one model is trained per run
    # LeNet = create_Lenet()
    # AlexNet = create_Alexnet()
    VGGNet = create_VGGnet()
    # the test set doubles as validation data: this only checks that the network runs
    history = VGGNet.fit(train_data, train_label, epochs=10, batch_size=512,
                         validation_data=(test_data, test_label))
    plot_results(history)
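The figures reported in the test-result sections come from the last of the 10 epochs. A small helper like the hypothetical `best_epoch` below is one way to read off the epoch with the best value of a metric from the `history.history` dict that `plot_results` also uses. It is not part of the original code or of the Keras API. Epochs are 1-based to match the plots' x-axis.

```python
def best_epoch(history_dict, key="val_loss", mode="min"):
    """Return (epoch, value) for the best entry of one metric.

    history_dict is History.history from model.fit(); epochs are 1-based.
    Use mode="min" for losses and mode="max" for accuracies.
    """
    if mode not in ("min", "max"):
        raise ValueError("mode must be 'min' or 'max'")
    values = list(history_dict[key])
    best = min(values) if mode == "min" else max(values)
    return values.index(best) + 1, best
```

Usage: `best_epoch(history.history)` for the lowest validation loss, or `best_epoch(history.history, key="val_acc", mode="max")` for the highest validation accuracy.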

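Summary

- All three models are essentially simple. Convolutional layers extract features, with pooling and BN layers interspersed, and Dropout layers (rate usually 0.5) can be added to reduce overfitting. Building the models yourself and tuning their parameters by hand really does give a deeper understanding.

- Going from LeNet to AlexNet to VGG shows, intuitively, that one way to improve a network for harder classification problems is to increase its depth (that is, add convolutional layers). As the number of layers and kernels grows, smaller kernels are preferred for feature extraction.

- Corrections are welcome. Many thanks also to the author of the blog referenced below, whose summary is thorough and detailed.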

Reference blog: 卷积神经网络超详细介绍 (A Very Detailed Introduction to Convolutional Neural Networks)