Setting Up an ES Cluster on k8s

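- I. Environment Preparation
  - 1. Configuration overview
  - 2. Disable the firewall
  - 3. Disable SELinux
  - 4. Disable swap
  - 5. Configure hosts
  - 6. Configure passwordless SSH login
  - 7. Set up the k8s cluster and install Docker
- II. Cluster Setup
  - 1. Pull the Elasticsearch and Kibana images and push them to the internal private registry
  - 2. k8s & ES cluster service orchestration
    - 0. Create the namespace
    - 1. Create the data volumes
    - 2. ES cluster service orchestration
    - 3. Connection test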

I. Environment Preparation

1. Configuration overview

| Software | Version |
|---|---|
| Docker | Docker version 20.10.3, build 48d30b5 |
| Elasticsearch | 7.2.0 |
| Kibana | 7.2.0 |
| Kubernetes | v1.20.4 |
| CentOS 7 (kernel) | 3.10.0-1160.15.2.el7.x86_64 |

2. Disable the firewall

```shell
systemctl stop firewalld && systemctl disable firewalld
```

3. Disable SELinux

```shell
# Put SELinux into permissive mode
setenforce 0
```
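`setenforce 0` only changes the running mode. After a reboot, SELinux goes back to whatever `/etc/selinux/config` specifies. Below is a minimal sketch for making the change persistent. It assumes the stock CentOS 7 config layout. `selinux_set_permissive` is a hypothetical helper that takes the config path as an argument, so you can try it on a copy first:

```shell
# Hypothetical helper: persistently switch SELinux to permissive mode by
# rewriting the SELINUX= line of the given file (default: /etc/selinux/config).
selinux_set_permissive() {
  local conf="${1:-/etc/selinux/config}"
  # The pattern needs "=" right after "SELINUX", so the SELINUXTYPE= line is untouched.
  sed -i 's/^SELINUX=.*/SELINUX=permissive/' "$conf"
}
```

On each node, run `selinux_set_permissive` with no argument to edit `/etc/selinux/config` in place. `getenforce` shows the mode that is currently active.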

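4. Disable swap

```shell
# Turn swap off immediately
swapoff -a
# Comment out the swap entries in /etc/fstab so swap stays off after a reboot
sed -ri 's/.*swap.*/#&/' /etc/fstab
```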
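With its default settings, kubelet refuses to start while swap is on. After `swapoff -a` has run, or after a reboot with the fstab entries commented out, `/proc/swaps` should contain only its header line. Below is a minimal check that assumes this standard `/proc/swaps` format. `swap_is_off` is a hypothetical helper name:

```shell
# Hypothetical helper: succeed (exit 0) when no swap area is active.
# /proc/swaps has one header line; every further line is an active swap area.
swap_is_off() {
  local swaps="${1:-/proc/swaps}"
  awk 'NR > 1 { found = 1 } END { exit found }' "$swaps"
}
```

Run `swap_is_off && echo 'swap disabled'` on each node.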

5. Configure hosts

Add the following entries to `/etc/hosts` on every node:

```
192.168.81.193 master
192.168.81.194 slave1
192.168.4.139 slave2
192.168.4.140 slave3
192.168.4.141 slave4
```
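Adding these entries by hand on five machines makes duplicates easy to create when the step is repeated. Below is a sketch that reads `IP hostname` pairs on stdin and appends each pair only if its hostname is not already present, so it is safe to rerun. It writes to `/etc/hosts` by default. The helper `add_hosts` and the `HOSTS_FILE` override are hypothetical:

```shell
# Hypothetical helper: append "IP hostname" pairs from stdin to a hosts file,
# skipping any hostname that already has an entry (safe to rerun).
add_hosts() {
  local file="${HOSTS_FILE:-/etc/hosts}" ip name
  while read -r ip name; do
    [ -z "$ip" ] && continue
    # -w matches whole words only, so "slave1" does not match "slave10"
    grep -qw -- "$name" "$file" || echo "$ip $name" >> "$file"
  done
}
```

For example: `printf '%s\n' '192.168.81.193 master' '192.168.81.194 slave1' | add_hosts`, with the remaining nodes added to the list. To try it out first, set `HOSTS_FILE` to a scratch file.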

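6. Configure passwordless SSH login

```shell
# Type the command and press Enter
ssh-keygen
# When prompted, type yes first, then the password of the corresponding server user
# Set up two-way SSH between every pair of machines (including each machine to itself, since it is also a data node)
ssh-copy-id root@master
```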
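As the note above says, every machine runs `ssh-copy-id` against every hostname, itself included. Below is a sketch that loops over the five hostnames from the hosts list, assuming the `root` user. `copy_keys` and its `DRY_RUN` switch are hypothetical. With `DRY_RUN=1`, the commands are only printed:

```shell
# Hypothetical helper: push this machine's public key to every node,
# including itself. Hostnames given as arguments replace the default list.
copy_keys() {
  local host
  if [ "$#" -eq 0 ]; then
    set -- master slave1 slave2 slave3 slave4
  fi
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "ssh-copy-id root@$host"   # dry run: print only
    else
      ssh-copy-id "root@$host"
    fi
  done
}
```

Run `DRY_RUN=1 copy_keys` to review the commands. Then, after `ssh-keygen`, run `copy_keys` on each machine.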

7. Set up the k8s cluster and install Docker

I covered this setup in an earlier tutorial, so I won't repeat it here. For details, see: https://editor.csdn.net/md/?articleId=113813929

II. Cluster Setup

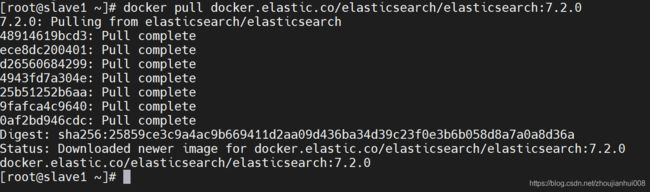

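1. Pull the Elasticsearch and Kibana images and push them to the internal private registry

- The network between our servers is complex, and some machines fail to pull images. To work around this, we pull the official images on a machine with working network access, tag them, and push them to the company's internal image registry. Deployments then pull directly from that internal registry.

Steps (example):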

```shell
docker pull docker.elastic.co/elasticsearch/elasticsearch:7.2.0
# Tag the image
docker tag docker.elastic.co/elasticsearch/elasticsearch:7.2.0 192.168.5.x/public/elasticsearch:7.2.0
# Push it to the internal private registry
docker push 192.168.5.x/public/elasticsearch:7.2.0

docker pull docker.elastic.co/kibana/kibana:7.2.0
# Tag the image
docker tag docker.elastic.co/kibana/kibana:7.2.0 192.168.5.x/public/kibana:7.2.0
# Push it to the internal private registry
docker push 192.168.5.x/public/kibana:7.2.0
```
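The pull, tag, and push steps are the same for both images, so they can be run in a loop. Below is a sketch assuming version 7.2.0 and the registry path `192.168.5.x/public` used above. The `x` is kept as in the original and must be replaced with your registry's address. `mirror_images`, `REGISTRY`, `ES_VERSION`, and `DRY_RUN` are all hypothetical names:

```shell
# Hypothetical helper: mirror the official Elastic images into the internal
# registry. REGISTRY defaults to the placeholder path used in this article.
mirror_images() {
  local registry="${REGISTRY:-192.168.5.x/public}" version="${ES_VERSION:-7.2.0}"
  local src name run=""
  # With DRY_RUN=1 each docker command is printed instead of executed
  [ "${DRY_RUN:-0}" = 1 ] && run=echo
  for src in elasticsearch/elasticsearch kibana/kibana; do
    name="${src#*/}"   # "elasticsearch/elasticsearch" -> "elasticsearch"
    $run docker pull "docker.elastic.co/$src:$version"
    $run docker tag "docker.elastic.co/$src:$version" "$registry/$name:$version"
    $run docker push "$registry/$name:$version"
  done
}
```

`DRY_RUN=1 mirror_images` prints the six `docker` commands without running them. `REGISTRY=<your-registry>/public mirror_images` runs them.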

2. k8s & ES cluster service orchestration

0. Create the namespace

```shell
kubectl create ns bigdata
```

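1. Create the data volumes

For how to create PVs and PVCs, see: https://blog.csdn.net/zhoujianhui008/article/details/113816895

```shell
# Create the file in a directory of your choice
vim k8s-pv-es.yaml
```

Create the data volumes (example):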

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: k8s-pv-es1
  namespace: bigdata
  labels:
    type: local
spec:
  storageClassName: es
  capacity:
    storage: 15Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    path: /data/k8s/mnt/es/es1
  persistentVolumeReclaimPolicy: Recycle
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: k8s-pv-es2
  namespace: bigdata
  labels:
    type: local
spec:
  storageClassName: es
  capacity:
    storage: 15Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    path: /data/k8s/mnt/es/es2
  persistentVolumeReclaimPolicy: Recycle
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: k8s-pv-es3
  namespace: bigdata
  labels:
    type: local
spec:
  storageClassName: es
  capacity:
    storage: 15Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    path: /data/k8s/mnt/es/es3
  persistentVolumeReclaimPolicy: Recycle
```

Apply the manifest to create the volumes:

```shell
kubectl apply -f k8s-pv-es.yaml -n bigdata
```
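The three PersistentVolumes differ only in their index, which appears in `name` and in the `hostPath` directory. That means the file can be generated instead of copied by hand. Below is a sketch that prints the same fields as the manifest above. `gen_es_pvs` and its count argument are hypothetical:

```shell
# Hypothetical helper: print N PersistentVolume documents with the same
# fields as the hand-written k8s-pv-es.yaml (N defaults to 3).
gen_es_pvs() {
  local n="${1:-3}" i
  for i in $(seq 1 "$n"); do
    # The heredoc body is emitted verbatim, with $i expanded per volume
    cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: k8s-pv-es$i
  namespace: bigdata
  labels:
    type: local
spec:
  storageClassName: es
  capacity:
    storage: 15Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    path: /data/k8s/mnt/es/es$i
  persistentVolumeReclaimPolicy: Recycle
EOF
  done
}
```

`gen_es_pvs 3 > k8s-pv-es.yaml` produces a file equivalent to the hand-written one. Each StatefulSet replica gets its own claim from `volumeClaimTemplates`, so a larger `replicas` value needs the same number of volumes.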

2. ES cluster service orchestration

ES cluster manifest (example):

```shell
# Create the file in a directory of your choice
vim k8s-es-cluster.yaml
```

```yaml
---
kind: Service
apiVersion: v1
metadata:
  name: es
  namespace: bigdata
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  type: NodePort
  ports:
    - port: 9200
      nodePort: 30080
      name: rest
    - port: 9300
      nodePort: 30070
      name: inter-node
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es-cluster
  namespace: bigdata
spec:
  serviceName: es
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: "app"
                    operator: In
                    values:
                      - elasticsearch
                  # Note: matchExpressions are matched against Pod labels, not node labels,
                  # so this expression does not keep ES pods off the master node; that is
                  # normally done with nodeAffinity or a NoSchedule taint on the master.
                  - key: "kubernetes.io/hostname"
                    operator: NotIn
                    values:
                      - master
              topologyKey: "kubernetes.io/hostname"
      containers:
        - name: elasticsearch
          image: 192.168.5.x/public/elasticsearch:7.2.0
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          ports:
            - containerPort: 9200
              name: rest
              protocol: TCP
            - containerPort: 9300
              name: inter-node
              protocol: TCP
          volumeMounts:
            - name: data
              mountPath: /usr/share/elasticsearch/data
          env:
            - name: cluster.name
              value: k8s-logs
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: discovery.seed_hosts
              value: "es-cluster-0.es,es-cluster-1.es,es-cluster-2.es"
            - name: cluster.initial_master_nodes
              value: "es-cluster-0,es-cluster-1,es-cluster-2"
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
      initContainers:
        - name: fix-permissions
          image: busybox
          imagePullPolicy: IfNotPresent
          command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
          securityContext:
            privileged: true
          volumeMounts:
            - name: data
              mountPath: /usr/share/elasticsearch/data
        - name: increase-vm-max-map
          image: busybox
          imagePullPolicy: IfNotPresent
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
          securityContext:
            privileged: true
        # Note: ulimit only affects this init container's own shell process; it does
        # not raise the open-file limit of the elasticsearch container.
        - name: increase-fd-ulimit
          image: busybox
          imagePullPolicy: IfNotPresent
          command: ["sh", "-c", "ulimit -n 65536"]
          securityContext:
            privileged: true
  volumeClaimTemplates:
    - metadata:
        name: data
        labels:
          app: elasticsearch
      spec:
        accessModes: [ "ReadWriteMany" ]
        storageClassName: es
        resources:
          requests:
            storage: 10Gi
---
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: bigdata
  labels:
    app: kibana
spec:
  type: NodePort
  ports:
    - port: 5601
      nodePort: 30090
      targetPort: 5601
  selector:
    app: kibana
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: bigdata
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
        - name: kibana
          image: 192.168.5.x/public/kibana:7.2.0
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          env:
            - name: ELASTICSEARCH_HOSTS
              value: http://es:9200
          ports:
            - containerPort: 5601
```

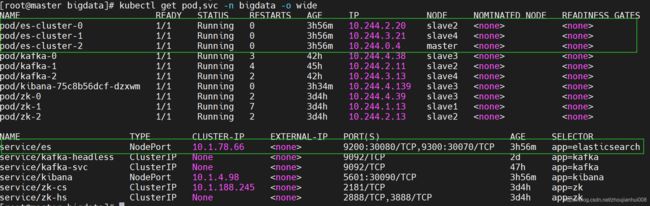
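The two discovery settings follow from StatefulSet naming. Pods are named `<statefulset name>-<ordinal>`, starting at 0, and the manifest addresses each one as `<pod>.<serviceName>` (for example `es-cluster-0.es`). Both lists therefore have to change whenever `replicas` changes. Below is a sketch that derives them, assuming the StatefulSet name `es-cluster` and service name `es` from the manifest. `es_discovery_values` is hypothetical:

```shell
# Hypothetical helper: print the discovery.seed_hosts and
# cluster.initial_master_nodes values for a given replica count.
es_discovery_values() {
  local replicas="${1:-3}" sts="${2:-es-cluster}" svc="${3:-es}"
  local i seeds="" masters=""
  for ((i = 0; i < replicas; i++)); do
    # ${var:+,} adds a comma separator only after the first entry
    seeds+="${seeds:+,}$sts-$i.$svc"
    masters+="${masters:+,}$sts-$i"
  done
  echo "discovery.seed_hosts=$seeds"
  echo "cluster.initial_master_nodes=$masters"
}
```

With the default of 3, `es_discovery_values` prints the same two values as the manifest above. Pass a different count to get the lists for a larger or smaller cluster.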

Deploy the StatefulSet-based ES cluster:

```shell
# Deploy the cluster
kubectl apply -f k8s-es-cluster.yaml

# Check the deployment
kubectl get pod,svc -n bigdata -o wide
```

3. Connection test

Open Kibana directly:

http://192.168.81.193:30090/app/monitoring#/elasticsearch/indices?_g=(cluster_uuid:gJnSv2HiRU2ncGvpLm8SLQ)
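You can also check the cluster without Kibana, through the REST port that the `es` Service exposes as NodePort 30080. The `_cluster/health` API returns JSON that includes `number_of_nodes` and `status`:

- `green`: all primary and replica shards are allocated.
- `yellow`: some replicas are not allocated.
- `red`: some primaries are not allocated.

Below is a sketch that extracts those two fields with `sed`. It assumes the compact single-line JSON returned without `?pretty`, with `status` appearing before `number_of_nodes` as in Elasticsearch's output. `es_health_summary` is hypothetical:

```shell
# Hypothetical helper: read a _cluster/health JSON response on stdin and
# print "<status> <number_of_nodes>". Assumes compact (non-pretty) JSON.
es_health_summary() {
  sed -n 's/.*"status":"\([a-z]*\)".*"number_of_nodes":\([0-9]*\).*/\1 \2/p'
}
```

Usage: `curl -s http://192.168.81.193:30080/_cluster/health | es_health_summary`. Once all three pods have joined, the second field should be `3`.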