[Deep Learning - PyTorch - Extra] How to Visualize the PyTorch Training Process with TensorBoard

- 1. Introduction
- 2. Commonly used TensorBoard visualizations
- 3. Environment and dataset preparation
  - 3.1 Environment
  - 3.2 Dataset preparation
- 4. Scripts with annotated code
  - ① train.py
  - ② train_eval_utils.py
  - ③ model.py
  - ④ my_dataset.py
  - ⑤ data_utils.py
- 5. Viewing the visualizations after training
  - On Windows
  - On Linux
- 6. Troubleshooting
  - Cannot access localhost:6006 in the browser
- 7. Summary
- Reference

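1. Introduction

This article uses a ResNet built in PyTorch as the example and, following the official PyTorch tutorial on training with TensorBoard, shows how to visualize the training process in TensorBoard: the model structure, training loss, validation accuracy, learning rate, and so on. Links to a very detailed walkthrough are given at the end of the article.

Official PyTorch tutorial: VISUALIZING MODELS, DATA, AND TRAINING WITH TENSORBOARD.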

2、使用Tensorboard 常用的可视化的功能

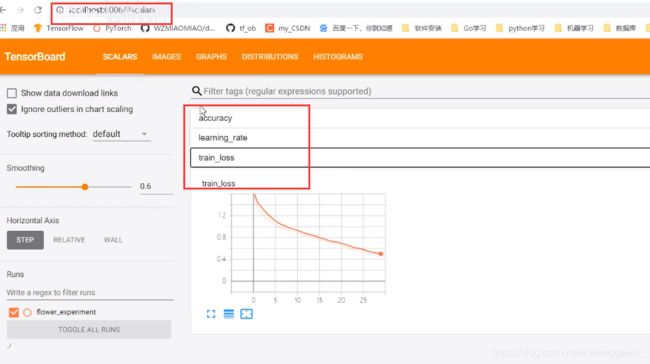

①保存网络的结构图: 可以看到模型搭建的每个模块

② 保存训练过程中的训练集损失函数loss

③验证集的accuracy

④学习率变化learning_rate

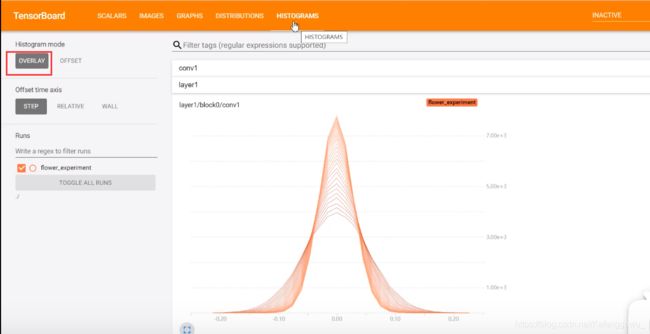

⑤保存权重数值的分布

⑥预测的结果精度 等等

3. Environment and dataset preparation

3.1 Environment

python 3.7

tqdm >= 4.42.1

matplotlib >= 3.2.1

torch >= 1.6.0

Pillow >= 8.1.1

tensorboard >= 2.2.2

3.2 Dataset preparation

可以使用自己的数据进行分类训练,也可以下载tensorflow官方给出的花分类数据集进行复现学习。

花分类数据集下载地址.

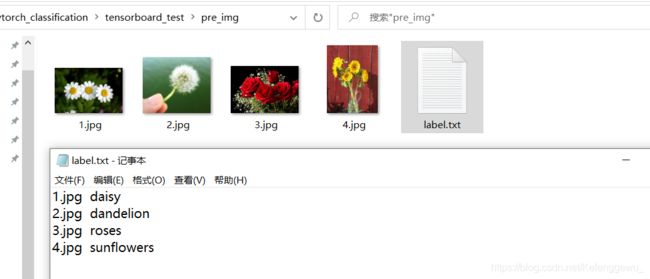

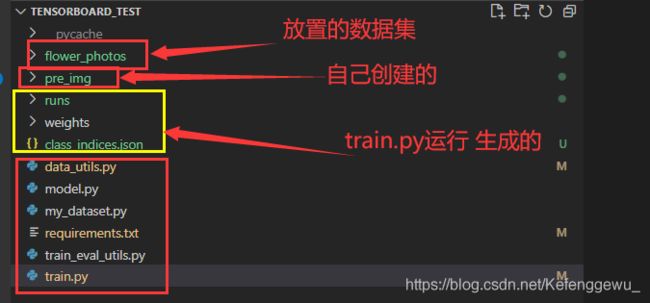

数据集的格式如下图所示。

且在实际训练中,根据代码中设定的训练集与验证集比率,会自动随机分配数据集。

文件夹中plot_img是对图片进行预测的,自己在运行脚本所在目录下创建,测试网络的预测结果(别处下载,不要用训练数据集里面的),txt文件对应为图片的标签.
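As a reference for preparing the label file: `plot_class_preds` in data_utils.py expects one `file_name class_name` pair per line, skips blank lines, and ignores class names that were not seen during training. The sketch below applies similar rules to a string (it splits on any whitespace rather than only on spaces); the image file names are hypothetical, and the class names assume the five folders of the flower dataset.

```python
def parse_label_lines(text, known_classes):
    """Parse 'file_name class_name' lines, skipping blanks and unknown classes."""
    pairs = []
    for line in text.splitlines():
        parts = line.split()  # split on whitespace, dropping empty pieces
        if not parts:
            continue  # blank line
        if len(parts) != 2:
            raise ValueError("expected 'file_name class_name', got: {!r}".format(line))
        file_name, class_name = parts
        if class_name not in known_classes:
            continue  # category that is not part of the training set
        pairs.append((file_name, class_name))
    return pairs


# Hypothetical label.txt content.
sample = """
img_01.jpg daisy
img_02.jpg roses

img_03.jpg cactus
"""
classes = {"daisy", "dandelion", "roses", "sunflowers", "tulips"}
print(parse_label_lines(sample, classes))
```

The last sample line is dropped because `cactus` is not one of the known classes.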

4. Scripts with annotated code

① train.py

Full code:

#!/usr/bin/env python
# -*- coding: utf-8 -*-
# @Time : 2021/07/01
# @ Wupke
# Purpose: train a ResNet on a classification dataset and visualize the run with TensorBoard

import os
import math
import argparse

import torch
import torch.optim as optim
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms
import torch.optim.lr_scheduler as lr_scheduler

from model import resnet34
from my_dataset import MyDataSet
from data_utils import read_split_data, plot_class_preds
from train_eval_utils import train_one_epoch, evaluate


def main(args):
    device = torch.device(args.device if torch.cuda.is_available() else "cpu")

    print(args)
    print('Start Tensorboard with "tensorboard --logdir=runs", view at http://localhost:6006/')

    # Instantiate the SummaryWriter object
    # (imported from the torch.utils.tensorboard module)

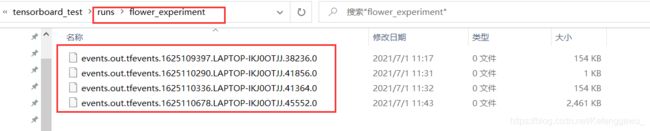

    tb_writer = SummaryWriter(log_dir="runs/flower_experiment")
    # After this call, the folder runs/flower_experiment is created under the
    # working directory to hold the TensorBoard files

    if os.path.exists("./weights") is False:
        os.makedirs("./weights")

    # Split the data into training and validation sets (see the function definition for details)
    train_images_path, train_images_label, val_images_path, val_images_label = read_split_data(args.data_path)

    # Preprocessing for the training and validation sets
    data_transform = {
        "train": transforms.Compose([transforms.RandomResizedCrop(224),
                                     transforms.RandomHorizontalFlip(),
                                     transforms.ToTensor(),
                                     transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),
        "val": transforms.Compose([transforms.Resize(256),  # scale so the shorter side becomes 256
                                   transforms.CenterCrop(224),  # center-crop to 224x224
                                   transforms.ToTensor(),  # to tensor; pixel values go from 0-255 to 0-1
                                   transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}
    # Normalization: [0.485, 0.456, 0.406] is the mean, [0.229, 0.224, 0.225] the standard deviation
    # Both were computed on ImageNet

    # Instantiate the training dataset
    train_data_set = MyDataSet(images_path=train_images_path,
                               images_class=train_images_label,
                               transform=data_transform["train"])

    # Instantiate the validation dataset
    val_data_set = MyDataSet(images_path=val_images_path,
                             images_class=val_images_label,
                             transform=data_transform["val"])

    batch_size = args.batch_size
    # Work out how many dataloader workers to use
    nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])  # number of workers
    print('Using {} dataloader workers every process'.format(nw))
    train_loader = torch.utils.data.DataLoader(train_data_set,
                                               batch_size=batch_size,
                                               shuffle=True,
                                               pin_memory=True,
                                               num_workers=nw,
                                               collate_fn=train_data_set.collate_fn)

    val_loader = torch.utils.data.DataLoader(val_data_set,
                                             batch_size=batch_size,
                                             shuffle=False,
                                             pin_memory=True,
                                             num_workers=nw,
                                             collate_fn=val_data_set.collate_fn)

    # Instantiate the model
    model = resnet34(num_classes=args.num_classes).to(device)

    # Write the model graph to TensorBoard
    init_img = torch.zeros((1, 3, 224, 224), device=device)
    # 1 is the batch size (a single image), device is the compute device chosen above;
    # zeros creates an all-zero tensor for a 3-channel 224x224 image
    tb_writer.add_graph(model, init_img)  # pass the model and a dummy input with the same shape as the real images

    # Load pretrained weights if they exist
    if os.path.exists(args.weights):
        weights_dict = torch.load(args.weights, map_location=device)
        load_weights_dict = {k: v for k, v in weights_dict.items()
                             if model.state_dict()[k].numel() == v.numel()}
        model.load_state_dict(load_weights_dict, strict=False)
    else:
        print("not using pretrain-weights.")

    # Optionally freeze weights
    if args.freeze_layers:
        print("freeze layers except fc layer.")
        for name, para in model.named_parameters():
            # freeze every weight except the final fully connected layer
            if "fc" not in name:
                para.requires_grad_(False)

    pg = [p for p in model.parameters() if p.requires_grad]
    # Optimizer
    optimizer = optim.SGD(pg, lr=args.lr, momentum=0.9, weight_decay=0.005)
    # Scheduler, paper: https://arxiv.org/pdf/1812.01187.pdf
    lf = lambda x: ((1 + math.cos(x * math.pi / args.epochs)) / 2) * (1 - args.lrf) + args.lrf  # cosine
    scheduler = lr_scheduler.LambdaLR(optimizer, lr_lambda=lf)

    for epoch in range(args.epochs):
        # train
        mean_loss = train_one_epoch(model=model,
                                    optimizer=optimizer,
                                    data_loader=train_loader,
                                    device=device,
                                    epoch=epoch)
        # update learning rate
        scheduler.step()

        # validate
        acc = evaluate(model=model,
                       data_loader=val_loader,
                       device=device)

        # add loss, acc and lr into tensorboard
        # After each epoch, add_scalar records the training loss, the validation accuracy and the learning rate
        print("[epoch {}] accuracy: {}".format(epoch, round(acc, 3)))
        tags = ["train_loss", "accuracy", "learning_rate"]
        tb_writer.add_scalar(tags[0], mean_loss, epoch)  # arguments: tag name, scalar value, global step (the epoch here)
        tb_writer.add_scalar(tags[1], acc, epoch)
        tb_writer.add_scalar(tags[2], optimizer.param_groups[0]["lr"], epoch)

        # add figure into tensorboard: plot the predictions for the images in the pre_img folder
        # as a single figure and save it to TensorBoard
        fig = plot_class_preds(net=model,  # the instantiated model
                               images_dir="./pre_img",  # root directory of the images to predict
                               transform=data_transform["val"],  # the validation preprocessing
                               num_plot=5,  # number of images to show
                               device=device)  # compute device
        if fig is not None:
            # save all predictions on one figure
            tb_writer.add_figure("predictions vs. actuals",  # first argument: the figure tag
                                 figure=fig,  # second argument: the fig object itself
                                 global_step=epoch)

        # add conv1 weights into tensorboard (as histograms)
        # To inspect intermediate layers, see how to view feature maps and kernel parameters in PyTorch
        tb_writer.add_histogram(tag="conv1",
                                values=model.conv1.weight,
                                global_step=epoch)
        tb_writer.add_histogram(tag="layer1/block0/conv1",
                                values=model.layer1[0].conv1.weight,
                                global_step=epoch)

        # save weights
        torch.save(model.state_dict(), "./weights/model-{}.pth".format(epoch))

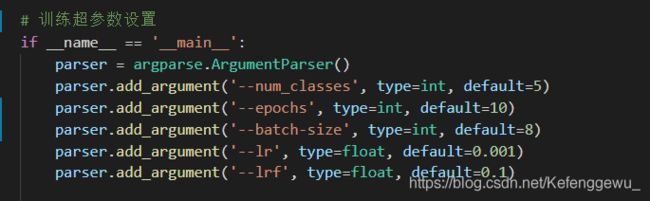

# Training hyperparameters
if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--num_classes', type=int, default=5)
    parser.add_argument('--epochs', type=int, default=10)
    parser.add_argument('--batch-size', type=int, default=8)
    parser.add_argument('--lr', type=float, default=0.001)
    parser.add_argument('--lrf', type=float, default=0.1)

    # Root directory of the dataset (change this to where your dataset is stored)
    # http://download.tensorflow.org/example_images/flower_photos.tgz (flower dataset download)
    img_root = r"E:\GIT\deep-learning-for-image-processing\pytorch_classification\tensorboard_test\flower_photos"
    parser.add_argument('--data-path', type=str, default=img_root)

    # Official resnet34 weights:
    # https://download.pytorch.org/models/resnet34-333f7ec4.pth
    # parser.add_argument('--weights', type=str, default='resNet34.pth',
    #                     help='initial weights path')
    parser.add_argument('--weights', type=str, default='',  # default='' means no pretrained weights
                        help='initial weights path')

    # --freeze-layers: freeze the whole network except the fully connected layer, so that only
    # the fc layer is trained. Pass the flag when using pretrained weights to speed up training.
    # A store_true switch is used because type=bool turns any non-empty string, even "False", into True.
    parser.add_argument('--freeze-layers', action='store_true')
    parser.add_argument('--device', default='cuda', help='device id (i.e. 0 or 0,1 or cpu)')

    opt = parser.parse_args()

    main(opt)

Hyperparameter settings

Set the parameters according to your own situation, for example a real application or a quick demo.
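One detail worth checking when adjusting these settings is how boolean options such as `--freeze-layers` are parsed. The sketch below, using only the standard library, shows why `type=bool` can be surprising and how a `store_true` switch behaves instead; the two parser names are just for illustration.

```python
import argparse

# type=bool calls bool() on the raw string, and any non-empty string is truthy.
naive = argparse.ArgumentParser()
naive.add_argument('--freeze-layers', type=bool, default=False)
naive_value = naive.parse_args(['--freeze-layers', 'False']).freeze_layers
print(naive_value)  # True, although the user typed "False"

# A switch-style flag: absent means False, present means True.
switch = argparse.ArgumentParser()
switch.add_argument('--freeze-layers', action='store_true')
print(switch.parse_args([]).freeze_layers)
print(switch.parse_args(['--freeze-layers']).freeze_layers)
```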

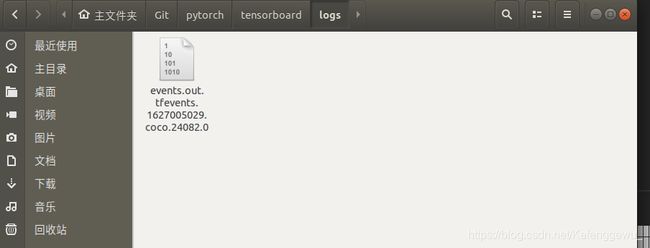

② The folder runs/flower_experiment is created to store the TensorBoard files

③ The folder weights is created to store the trained weights at the end of each epoch
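The `learning_rate` curve in TensorBoard follows the cosine schedule defined by `lf` in train.py. As a rough check of what to expect, the sketch below evaluates the same formula with the default arguments (`lr=0.001`, `epochs=10`, `lrf=0.1`); the helper name `cosine_lr_factor` is made up for this example.

```python
import math


def cosine_lr_factor(epoch, epochs, lrf):
    """Multiplier applied to the base learning rate (same formula as lf in train.py)."""
    return ((1 + math.cos(epoch * math.pi / epochs)) / 2) * (1 - lrf) + lrf


base_lr, epochs, lrf = 0.001, 10, 0.1  # defaults from the argument parser
for epoch in range(epochs + 1):
    print(epoch, round(base_lr * cosine_lr_factor(epoch, epochs, lrf), 6))
```

The factor starts at 1 and decays smoothly to `lrf`, so the learning rate falls from `lr` toward `lr * lrf` over the run.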

② train_eval_utils.py

Helper functions for the training and evaluation steps

Full code:

import sys

from tqdm import tqdm
import torch


def train_one_epoch(model, optimizer, data_loader, device, epoch):
    model.train()
    loss_function = torch.nn.CrossEntropyLoss()
    mean_loss = torch.zeros(1).to(device)
    optimizer.zero_grad()

    data_loader = tqdm(data_loader)
    for step, data in enumerate(data_loader):
        images, labels = data

        pred = model(images.to(device))

        loss = loss_function(pred, labels.to(device))
        loss.backward()
        mean_loss = (mean_loss * step + loss.detach()) / (step + 1)  # update mean losses

        # show the running mean loss in the progress bar
        data_loader.desc = "[epoch {}] mean loss {}".format(epoch, round(mean_loss.item(), 3))

        if not torch.isfinite(loss):
            print('WARNING: non-finite loss, ending training ', loss)
            sys.exit(1)

        optimizer.step()
        optimizer.zero_grad()

    return mean_loss.item()


@torch.no_grad()
def evaluate(model, data_loader, device):
    model.eval()

    # number of correctly predicted samples
    sum_num = torch.zeros(1).to(device)
    # total number of validation samples
    num_samples = len(data_loader.dataset)

    # show validation progress
    data_loader = tqdm(data_loader, desc="validation...")

    for step, data in enumerate(data_loader):
        images, labels = data
        pred = model(images.to(device))
        pred = torch.max(pred, dim=1)[1]
        sum_num += torch.eq(pred, labels.to(device)).sum()

    # fraction of correct predictions
    acc = sum_num.item() / num_samples

    return acc
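The `mean_loss` update in `train_one_epoch` is an incremental average: after each batch it equals the arithmetic mean of all batch losses seen so far, without storing them. A plain-Python sketch of the same update, with made-up loss values:

```python
def running_mean(values):
    """Average values incrementally, the way train_one_epoch averages batch losses."""
    mean = 0.0
    for step, value in enumerate(values):
        mean = (mean * step + value) / (step + 1)
    return mean


losses = [1.2, 0.9, 0.6, 0.3]  # made-up per-batch losses
print(running_mean(losses), sum(losses) / len(losses))
```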

③ model.py

The ResNet architecture built in PyTorch

Full code:

import torch.nn as nn
import torch


class BasicBlock(nn.Module):
    expansion = 1

    def __init__(self, in_channel, out_channel, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        self.relu = nn.ReLU()
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channel)
        self.downsample = downsample

    def forward(self, x):
        identity = x
        if self.downsample is not None:
            identity = self.downsample(x)

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        out += identity
        out = self.relu(out)

        return out

class Bottleneck(nn.Module):
    expansion = 4

    def __init__(self, in_channel, out_channel, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=1, stride=1, bias=False)  # squeeze channels
        self.bn1 = nn.BatchNorm2d(out_channel)
        # -----------------------------------------
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, bias=False, padding=1)
        self.bn2 = nn.BatchNorm2d(out_channel)
        # -----------------------------------------
        self.conv3 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel*self.expansion,
                               kernel_size=1, stride=1, bias=False)  # unsqueeze channels
        self.bn3 = nn.BatchNorm2d(out_channel*self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample

    def forward(self, x):
        identity = x
        if self.downsample is not None:
            identity = self.downsample(x)

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        out += identity
        out = self.relu(out)

        return out

class ResNet(nn.Module):

    def __init__(self, block, blocks_num, num_classes=1000, include_top=True):
        super(ResNet, self).__init__()
        self.include_top = include_top
        self.in_channel = 64

        self.conv1 = nn.Conv2d(3, self.in_channel, kernel_size=7, stride=2,
                               padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(self.in_channel)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, blocks_num[0])
        self.layer2 = self._make_layer(block, 128, blocks_num[1], stride=2)
        self.layer3 = self._make_layer(block, 256, blocks_num[2], stride=2)
        self.layer4 = self._make_layer(block, 512, blocks_num[3], stride=2)
        if self.include_top:
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))  # output size = (1, 1)
            self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

    def _make_layer(self, block, channel, block_num, stride=1):
        downsample = None
        if stride != 1 or self.in_channel != channel * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.in_channel, channel * block.expansion, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(channel * block.expansion))

        layers = []
        layers.append(block(self.in_channel, channel, downsample=downsample, stride=stride))
        self.in_channel = channel * block.expansion

        for _ in range(1, block_num):
            layers.append(block(self.in_channel, channel))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        if self.include_top:
            x = self.avgpool(x)
            x = torch.flatten(x, 1)
            x = self.fc(x)

        return x


def resnet34(num_classes=1000, include_top=True):
    return ResNet(BasicBlock, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)


def resnet101(num_classes=1000, include_top=True):
    return ResNet(Bottleneck, [3, 4, 23, 3], num_classes=num_classes, include_top=include_top)
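To see why `AdaptiveAvgPool2d` receives a 7x7 feature map for a 224x224 input, the spatial size can be traced through the network with the standard convolution output-size formula. The sketch below is plain arithmetic and does not need PyTorch; `conv_out` is a helper name invented for this example, and the kernel, stride and padding values are the ones used in model.py.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a convolution or pooling window along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


size = 224
size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1: 224 -> 112
size = conv_out(size, kernel=3, stride=2, padding=1)  # maxpool: 112 -> 56
sizes = [size]                                        # layer1 uses stride 1 and keeps 56
for _ in range(3):                                    # layer2, layer3, layer4 start with a stride-2 3x3 conv
    size = conv_out(size, kernel=3, stride=2, padding=1)
    sizes.append(size)
print(sizes)  # [56, 28, 14, 7]
```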

④ my_dataset.py

The custom dataset

Full code:

from tqdm import tqdm
from PIL import Image
import torch
from torch.utils.data import Dataset


class MyDataSet(Dataset):
    """Custom dataset"""

    def __init__(self, images_path: list, images_class: list, transform=None):
        self.images_path = images_path
        self.images_class = images_class
        self.transform = transform

        # collect the indices of images with an extreme aspect ratio
        delete_img = []
        for index, img_path in tqdm(enumerate(images_path)):
            img = Image.open(img_path)
            w, h = img.size
            ratio = w / h
            if ratio > 10 or ratio < 0.1:
                delete_img.append(index)
                # print(img_path, ratio)

        # remove them from the back so the remaining indices stay valid
        for index in delete_img[::-1]:
            self.images_path.pop(index)
            self.images_class.pop(index)

    def __len__(self):
        return len(self.images_path)

    def __getitem__(self, item):
        img = Image.open(self.images_path[item])
        # 'RGB' is a color image, 'L' is a grayscale image
        if img.mode != 'RGB':
            raise ValueError("image: {} isn't RGB mode.".format(self.images_path[item]))
        label = self.images_class[item]

        if self.transform is not None:
            img = self.transform(img)

        return img, label

    @staticmethod
    def collate_fn(batch):
        # The official default_collate implementation is a useful reference:
        # https://github.com/pytorch/pytorch/blob/67b7e751e6b5931a9f45274653f4f653a4e6cdf6/torch/utils/data/_utils/collate.py
        images, labels = tuple(zip(*batch))

        images = torch.stack(images, dim=0)
        labels = torch.as_tensor(labels)
        return images, labels
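The first step of `collate_fn`, `zip(*batch)`, transposes a list of `(image, label)` pairs into one tuple of images and one tuple of labels. The sketch below shows just that step in plain Python; the strings are placeholders for image tensors, and the `torch.stack` / `torch.as_tensor` calls that follow in the real function are left out.

```python
# Each dataset item is an (image, label) pair; strings stand in for image tensors here.
batch = [("img_a", 0), ("img_b", 3), ("img_c", 1)]

images, labels = tuple(zip(*batch))  # transpose: list of pairs -> pair of tuples
print(images)  # ('img_a', 'img_b', 'img_c')
print(labels)  # (0, 3, 1)
```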

⑤ data_utils.py

Splits the dataset and plots the prediction results

Full code:

import os
import json
import pickle
import random

from PIL import Image
import torch
import numpy as np
import matplotlib.pyplot as plt


def read_split_data(root: str, val_rate: float = 0.2):
    random.seed(0)  # make the random split reproducible
    assert os.path.exists(root), "dataset root: {} does not exist.".format(root)

    # walk the folders: one folder per class
    flower_class = [cla for cla in os.listdir(root) if os.path.isdir(os.path.join(root, cla))]
    # sort so the order is consistent
    flower_class.sort()
    # map each class name to a numeric index
    class_indices = dict((k, v) for v, k in enumerate(flower_class))
    json_str = json.dumps(dict((val, key) for key, val in class_indices.items()), indent=4)
    with open('class_indices.json', 'w') as json_file:
        json_file.write(json_str)

    train_images_path = []  # paths of all training images
    train_images_label = []  # class index of each training image
    val_images_path = []  # paths of all validation images
    val_images_label = []  # class index of each validation image
    every_class_num = []  # number of samples in each class
    supported = [".jpg", ".JPG", ".png", ".PNG"]  # supported file extensions
    # walk the files in each folder
    for cla in flower_class:
        cla_path = os.path.join(root, cla)
        # collect the paths of all files with a supported extension
        images = [os.path.join(root, cla, i) for i in os.listdir(cla_path)
                  if os.path.splitext(i)[-1] in supported]
        # index of this class
        image_class = class_indices[cla]
        # record the number of samples in this class
        every_class_num.append(len(images))
        # randomly sample the validation images according to val_rate
        val_path = random.sample(images, k=int(len(images) * val_rate))

        for img_path in images:
            if img_path in val_path:  # sampled for validation: store in the validation set
                val_images_path.append(img_path)
                val_images_label.append(image_class)
            else:  # otherwise store in the training set
                train_images_path.append(img_path)
                train_images_label.append(image_class)

    print("{} images were found in the dataset.".format(sum(every_class_num)))
    print("{} images for training.".format(len(train_images_path)))
    print("{} images for validation.".format(len(val_images_path)))

    plot_image = False
    if plot_image:
        # bar chart of the number of images per class
        plt.bar(range(len(flower_class)), every_class_num, align='center')
        # replace the x ticks 0,1,2,3,4 with the class names
        plt.xticks(range(len(flower_class)), flower_class)
        # add value labels above the bars
        for i, v in enumerate(every_class_num):
            plt.text(x=i, y=v + 5, s=str(v), ha='center')
        # x-axis label
        plt.xlabel('image class')
        # y-axis label
        plt.ylabel('number of images')
        # chart title
        plt.title('flower class distribution')
        plt.show()

    return train_images_path, train_images_label, val_images_path, val_images_label

def plot_data_loader_image(data_loader):
    batch_size = data_loader.batch_size
    plot_num = min(batch_size, 4)

    json_path = './class_indices.json'
    assert os.path.exists(json_path), json_path + " does not exist."
    with open(json_path, 'r') as json_file:  # close the file once it has been read
        class_indices = json.load(json_file)

    for data in data_loader:
        images, labels = data
        for i in range(plot_num):
            # [C, H, W] -> [H, W, C]
            img = images[i].numpy().transpose(1, 2, 0)
            # undo the Normalize step
            img = (img * [0.229, 0.224, 0.225] + [0.485, 0.456, 0.406]) * 255
            label = labels[i].item()
            plt.subplot(1, plot_num, i+1)
            plt.xlabel(class_indices[str(label)])
            plt.xticks([])  # hide the x-axis ticks
            plt.yticks([])  # hide the y-axis ticks
            plt.imshow(img.astype('uint8'))
        plt.show()


def write_pickle(list_info: list, file_name: str):
    with open(file_name, 'wb') as f:
        pickle.dump(list_info, f)


def read_pickle(file_name: str) -> list:
    with open(file_name, 'rb') as f:
        info_list = pickle.load(f)
        return info_list

def plot_class_preds(net,  # the instantiated model
                     images_dir: str,  # root directory of the images to predict
                     transform,  # the validation preprocessing
                     num_plot: int = 5,  # number of images to show
                     device="cpu"):  # compute device
    if not os.path.exists(images_dir):
        print("not found {} path, ignore add figure.".format(images_dir))
        return None

    label_path = os.path.join(images_dir, "label.txt")
    if not os.path.exists(label_path):
        print("not found {} file, ignore add figure".format(label_path))
        return None

    # read class_indict
    # the generated json file maps indices to class names
    json_label_path = './class_indices.json'
    assert os.path.exists(json_label_path), "not found {}".format(json_label_path)
    with open(json_label_path, 'r') as json_file:  # close the file once it has been read
        # {"0": "daisy"}
        flower_class = json.load(json_file)
    # {"daisy": "0"}
    class_indices = dict((v, k) for k, v in flower_class.items())

    # reading label.txt file
    label_info = []
    with open(label_path, "r") as rd:
        for line in rd.readlines():
            line = line.strip()  # strip leading and trailing whitespace, including the newline
            if len(line) > 0:  # non-empty, so not a blank line
                split_info = [i for i in line.split(" ") if len(i) > 0]  # split on spaces
                assert len(split_info) == 2, "label format error, expect file_name and class_name"
                image_name, class_name = split_info  # a well-formed line has exactly two fields
                image_path = os.path.join(images_dir, image_name)
                # skip the entry if the file does not exist
                if not os.path.exists(image_path):
                    print("not found {}, skip.".format(image_path))
                    continue
                # skip the entry if its class is not one of the known classes
                if class_name not in class_indices.keys():
                    print("unrecognized category {}, skip".format(class_name))
                    continue
                label_info.append([image_path, class_name])

    if len(label_info) == 0:
        return None

    # get first num_plot info
    if len(label_info) > num_plot:
        label_info = label_info[:num_plot]

    # Batch prediction: pack several images into a single batch
    num_imgs = len(label_info)
    images = []
    labels = []
    for img_path, class_name in label_info:
        # read img
        img = Image.open(img_path).convert("RGB")
        label_index = int(class_indices[class_name])

        # preprocessing
        img = transform(img)
        images.append(img)
        labels.append(label_index)

    # batching images
    # torch.stack joins the images into one batch by adding a new leading dimension:
    # each preprocessed tensor is (3, 224, 224), the result is (num_imgs, 3, 224, 224)
    images = torch.stack(images, dim=0).to(device)

    # inference
    with torch.no_grad():
        output = net(images)
        # predicted probability and class index
        probs, preds = torch.max(torch.softmax(output, dim=1), dim=1)
        probs = probs.cpu().numpy()  # convert the tensors to numpy arrays
        preds = preds.cpu().numpy()

    # width, height
    fig = plt.figure(figsize=(num_imgs * 2.5, 3), dpi=100)
    # the figure size in pixels is figsize * dpi
    for i in range(num_imgs):
        # 1 row, num_imgs columns, currently drawing subplot i+1
        # the x and y axis ticks are removed here
        ax = fig.add_subplot(1, num_imgs, i+1, xticks=[], yticks=[])

        # CHW (channel, height, width) -> HWC (height, width, channel)
        npimg = images[i].cpu().numpy().transpose(1, 2, 0)

        # restore the image to its state before normalization
        # mean:[0.485, 0.456, 0.406], std:[0.229, 0.224, 0.225]
        npimg = (npimg * [0.229, 0.224, 0.225] + [0.485, 0.456, 0.406]) * 255
        plt.imshow(npimg.astype('uint8'))  # convert to the usual 8-bit image format

        # build the title label
        title = "{}, {:.2f}%\n(label: {})".format(
            flower_class[str(preds[i])],  # predict class
            probs[i] * 100,  # predict probability
            flower_class[str(labels[i])]  # true class
        )
        ax.set_title(title, color=("green" if preds[i] == labels[i] else "red"))

    return fig
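The per-class split in `read_split_data` can be tried without any image files. The sketch below is a simplified variant under a few assumptions, not the article's function: it takes an in-memory dict of hypothetical file names instead of scanning a directory, uses a local `random.Random` instead of seeding the global generator, and looks up validation paths in a set rather than a list.

```python
import random


def split_per_class(images_by_class, val_rate=0.2, seed=0):
    """Randomly hold out val_rate of each class for validation."""
    rng = random.Random(seed)  # local generator, so the global random state is untouched
    train, val = [], []
    for label, paths in sorted(images_by_class.items()):
        val_set = set(rng.sample(paths, k=int(len(paths) * val_rate)))
        for path in paths:
            (val if path in val_set else train).append((path, label))
    return train, val


# Hypothetical file names: 10 images in each of two classes.
data = {c: ["{}/{}.jpg".format(c, i) for i in range(10)] for c in ("daisy", "roses")}
train, val = split_per_class(data)
print(len(train), len(val))  # 16 4
```

Sampling inside each class keeps the class proportions of the validation set close to those of the full dataset.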

5、 训练完成后,进行查看可视化效果

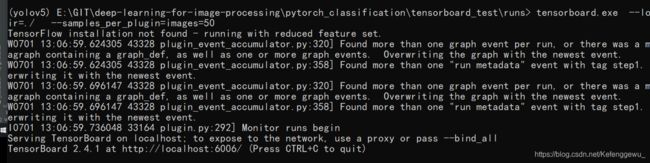

Windows 系统下

① 训练结束后,打开生成的保存tensorboard 文件的根目录,并在终端中打开,

注意:

如果报错:

tensorboard.exe : 无法将“tensorboard.exe”项识别为 cmdlet、函数、脚本文件或可运行程序的名称。请检查名称的拼写,如果包括路径,请确保路径正确,然后再试一次。

是因为当下环境没有安装 tensorboard,可以转到训练的conda 环境下运行

输入: tensorboard.exe --logdir=./ --samples_per_plugin=images=50

其中 , --logdir=./ 为当前路径

–samples_per_plugin=images=50 是自己添加的参数,指定显示图片的个数

(默认为10张)

回车后,会显示查看可视化效果的端口号:

② 进入浏览器,输入对应的端口号,就可以查看对应的训练数据.

预测的结果

Linux 系统下

② 进入相应的训练环境,并在终端输入指令:

tensorboard - - logs="./"

6、补充填坑:

浏览器中无法访问localhost:6006

输入: tensorboard.exe --logdir=./ --samples_per_plugin=images=50

在浏览器中无法访问页面可能的原因:

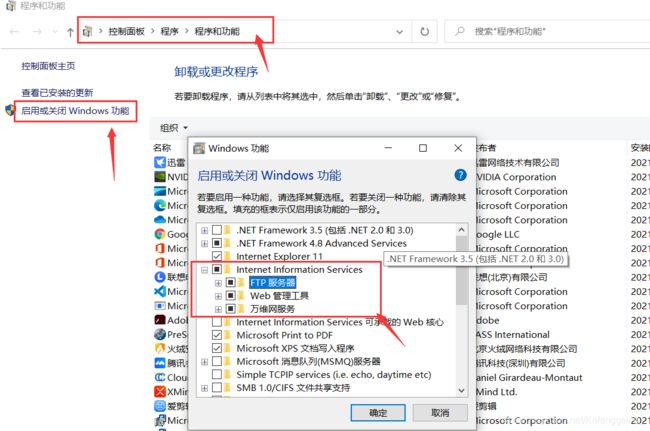

① Internet Information Service 设置

②输入的ip地址问题,hosts文件修改了

解决方案:

①打开“我的电脑”,选择左上角的“计算机”中的“卸载或更改程序”。

点击“启用或关闭Windows功能”。

点击选中“Internet Information Service”及其下的“FTP服务器”。

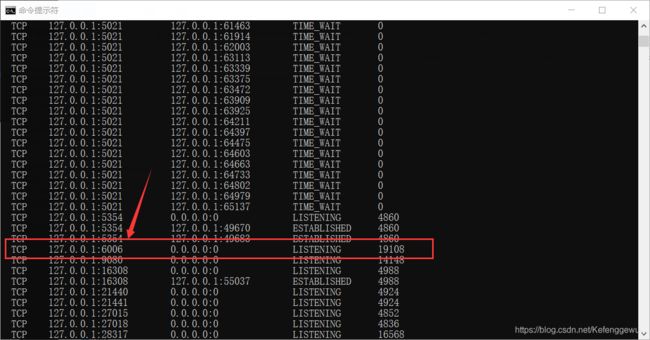

② 查看自己localhost地址:

②可尝试,重新在端口输入:

tensorboard.exe --logdir=./ --host=127.0.0.1 --samples_per_plugin=images=50

然后在浏览器中打开:

http://127.0.0.1:6006/

可成功显示!!
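Before changing system settings, it can help to check from Python whether anything is accepting connections on the TensorBoard port at all. The sketch below uses only the standard `socket` module; `port_is_open` is a helper name invented here, and 6006 is the port used in the commands above.

```python
import socket


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or the host name did not resolve
        return False


for host in ("127.0.0.1", "localhost"):
    print(host, port_is_open(host, 6006))
```

If `127.0.0.1` reports True while `localhost` reports False, name resolution (for example the hosts file) is the likely culprit; if both report False, TensorBoard is probably not running or is bound to a different port.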

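7. Summary

In practice, put the five files above in the same folder, set up the dataset folder, the data path and the training parameters, configure the environment, and you can start training and view the visualizations of the run.

If you are comfortable doing so, you can also swap in other networks built with PyTorch for the classification training.

Reference

https://www.bilibili.com/video/BV1Qf4y1C7kz

https://blog.csdn.net/zhangpengzp/article/details/85165725

https://www.cnblogs.com/dahongbao/p/11187991.html

Related posts in this series: [Deep Learning: A Quick-Start Series on Image Detection and Classification].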