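pytorch-tensorboard feature map visualization

Visualizing feature maps at different network depths

Using a ResNet18 pretrained on ImageNet as an example, we look at the feature maps output by conv1, maxpool, layer1, layer2, layer3 and layer4. These are downsampled 2, 4, 4, 8, 16 and 32 times respectively; layer1 does not downsample.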
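The downsampling factors follow from the usual output-size formula for convolution and pooling, out = floor((n + 2p - k) / s) + 1. The sketch below reproduces the spatial sizes for a 224×224 input. It assumes torchvision's ResNet18 layout: a 7×7 stride-2 conv1 with padding 3, a 3×3 stride-2 maxpool with padding 1, and a stride-2 3×3 conv at the start of layer2 to layer4. `out_size` and `resnet18_sizes` are hypothetical helper names used only for illustration.

```python
# Spatial output size of a conv/pool layer: floor((n + 2p - k) / s) + 1
def out_size(n: int, k: int, s: int, p: int) -> int:
    return (n + 2 * p - k) // s + 1


def resnet18_sizes(n: int = 224) -> dict:
    """Side length of the feature map after each ResNet18 stage.

    Assumes the torchvision ResNet18 layout (hypothetical helper).
    """
    sizes = {}
    n = out_size(n, k=7, s=2, p=3)       # conv1: 7x7, stride 2, padding 3
    sizes["conv1"] = n
    n = out_size(n, k=3, s=2, p=1)       # maxpool: 3x3, stride 2, padding 1
    sizes["maxpool"] = n
    sizes["layer1"] = n                  # layer1: all strides are 1, no downsampling
    for name in ("layer2", "layer3", "layer4"):
        n = out_size(n, k=3, s=2, p=1)   # first 3x3 conv of the stage has stride 2
        sizes[name] = n
    return sizes


if __name__ == "__main__":
    for name, side in resnet18_sizes(224).items():
        print(f"{name:8s} {side:3d}x{side:<3d} downsampled {224 // side}x")
```

The same arithmetic also works for other input sizes. For example, a 448×448 input simply doubles every side length.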

- 原图:

缩放成 224*224 的大小作为输入; - conv1

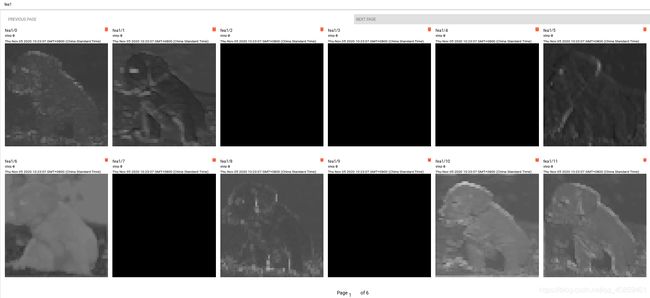

(1, 64, 112, 112),有多少个通道就有多少张图片,部分特征图是全黑的,可能说明这些滤波器不提取狗的特征(猜测) - maxpool

(1, 64, 56, 56),再次下采样,至此4倍下采样,特征图更加模糊了 - layer1

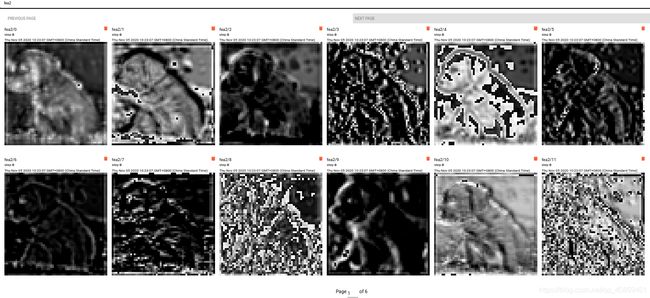

(1, 64, 56, 56),layer1没有进行下采样,所以通道数不变,进一步提取特征,特征清晰了一点; - layer2

(1, 128, 28, 28),下采样8倍,特征图尺度减半,通道数翻倍; - layer3

(1, 256, 14, 14),下采样16倍,特征图更加抽象; - layer4

(1, 512, 7, 7),再次下采样,至此下采样32倍,特征图通过averagepooling转化为向量,输入到全连接层,用于分类;
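A black tile can mean two things. The channel may really not respond, or its values may simply have been clipped for display. A quick diagnostic tells these apart. It runs on one image's feature map converted to NumPy, e.g. `fea0[0].detach().cpu().numpy()`. `channel_report` is a hypothetical helper, and it assumes the (C, H, W) layout.

```python
import numpy as np


def channel_report(fea: np.ndarray, eps: float = 1e-6) -> dict:
    """Summarize a single-image feature map of shape (C, H, W) (hypothetical helper).

    clipped:  per-channel fraction of values outside [0, 1], i.e. values that
              add_image clips when it scales a float image by 255.
    constant: indices of channels whose max - min < eps; these carry no
              spatial structure, so no rescaling can make them show a pattern.
    """
    flat = fea.reshape(fea.shape[0], -1)
    clipped = ((flat < 0.0) | (flat > 1.0)).mean(axis=1)
    spread = flat.max(axis=1) - flat.min(axis=1)
    constant = np.flatnonzero(spread < eps).tolist()
    return {"clipped": clipped, "constant": constant}
```

Channels with a high `clipped` fraction but a non-zero spread are the ones that only look black (or white) because of the display range.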

```python
# -*- coding:utf-8 -*-
import cv2
import torch
import torch.nn as nn
import torch.nn.functional as F
import torchvision.models as models
from torch.utils.tensorboard import SummaryWriter


def l2_norm(x, dim=1):
    # l2_norm was called but never defined in the original;
    # assumed here to be row-wise L2 normalization
    return F.normalize(x, p=2, dim=dim)


class ResNet18(nn.Module):
    def __init__(self, model, num_classes=1000):
        super(ResNet18, self).__init__()
        self.backbone = model  # pretrained torchvision resnet18

        # 3 3*3 convs replace 1 7*7 (not used in forward)
        self.conv1 = nn.Conv2d(3, 16, 3, stride=1, padding=1)
        self.bn1 = nn.BatchNorm2d(16)
        self.relu = nn.ReLU()
        self.conv2 = nn.Conv2d(16, 32, 3, stride=1, padding=1)
        self.bn2 = nn.BatchNorm2d(32)
        self.conv3 = nn.Conv2d(32, 64, 3, stride=2, padding=1)

        # conv replace FC (not used in forward)
        self.conv_final = nn.Conv2d(512, num_classes, 1, stride=1)
        self.ada_avg_pool = nn.AdaptiveAvgPool2d((1, 1))

        # FC head: newly initialized, NOT pretrained, so x is not a real ImageNet prediction
        self.fc1 = nn.Linear(512, 256)
        self.dropout = nn.Dropout(0.5)
        self.fc2 = nn.Linear(256, num_classes)

    def forward(self, x):
        # Alternative stem (disabled):
        # x = self.relu(self.bn1(self.conv1(x)))
        # x = self.relu(self.bn2(self.conv2(x)))
        # x = self.conv3(x)

        x = self.backbone.conv1(x)
        fea0 = x                      # conv1, before BN/ReLU: 2x downsampled
        x = self.backbone.bn1(x)
        x = self.backbone.relu(x)
        x = self.backbone.maxpool(x)
        fea1 = x                      # maxpool: 4x
        x = self.backbone.layer1(x)
        fea2 = x                      # layer1: 4x
        x = self.backbone.layer2(x)
        fea3 = x                      # layer2: 8x
        x = self.backbone.layer3(x)
        fea4 = x                      # layer3: 16x
        x = self.backbone.layer4(x)
        fea5 = x                      # layer4: 32x

        # FC
        x = self.backbone.avgpool(x)  # (N, 512, 7, 7) -> (N, 512, 1, 1)
        x = torch.flatten(x, 1)
        x = l2_norm(self.fc1(x))
        x = self.dropout(x)
        x = l2_norm(self.fc2(x))

        # Fully convolutional head (disabled):
        # x = self.ada_avg_pool(self.conv_final(fea5))
        # x = torch.flatten(x, 1)

        return x, fea0, fea1, fea2, fea3, fea4, fea5


if __name__ == '__main__':
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    writer = SummaryWriter()

    # torchvision >= 0.13; on older versions use models.resnet18(pretrained=True)
    backbone = models.resnet18(weights=models.ResNet18_Weights.IMAGENET1K_V1)
    # named `model`, not `models`, so the torchvision.models module is not shadowed
    model = ResNet18(backbone, 1000).to(device)
    model.eval()

    img = cv2.imread('dog.png')
    if img is None:  # cv2.imread returns None instead of raising
        raise FileNotFoundError('dog.png')
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)     # OpenCV loads images as BGR
    img = cv2.resize(img, (224, 224))
    img = img.transpose((2, 0, 1)) / 255.0         # HWC -> CHW, values in [0, 1]
    img = torch.as_tensor(img, dtype=torch.float32).unsqueeze(0).to(device)
    writer.add_image('image', img[0], 0)

    # The pretrained weights expect ImageNet mean/std normalization
    mean = torch.tensor([0.485, 0.456, 0.406], device=device).view(1, 3, 1, 1)
    std = torch.tensor([0.229, 0.224, 0.225], device=device).view(1, 3, 1, 1)
    data = (img - mean) / std

    with torch.no_grad():
        x, *feas = model(data)                     # feas = [fea0, ..., fea5]

    for k, fea in enumerate(feas):
        for i in range(fea.shape[1]):              # one image per channel
            f = fea[0, i].cpu()
            # add_image clips floats outside [0, 1], so rescale each channel first
            f = (f - f.min()) / (f.max() - f.min()).clamp(min=1e-8)
            writer.add_image(f'fea{k}/{i}', f.unsqueeze(0), 0)

    writer.close()
    print(x.size())                                # torch.Size([1, 1000])
```
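Logging one image per channel produces hundreds of TensorBoard entries. An alternative is to tile all channels of one layer into a single grid image. The sketch below uses NumPy and the hypothetical helper name `tile_channels`. It rescales each channel on its own and lays tiles out row by row with zero padding. This is similar in spirit to torchvision's `make_grid` with `normalize=True, scale_each=True`, though it is not that function.

```python
import numpy as np


def tile_channels(fea: np.ndarray, nrow: int = 8, padding: int = 2) -> np.ndarray:
    """Tile a (C, H, W) feature map into one 2-D image with values in [0, 1].

    Hypothetical helper: each channel is min-max rescaled independently,
    tiles are placed row-major, nrow per row, and padding pixels are 0.
    """
    c, h, w = fea.shape
    ncols = min(nrow, c)
    nrows = -(-c // ncols)  # ceiling division
    grid = np.zeros((nrows * (h + padding) + padding,
                     ncols * (w + padding) + padding), dtype=np.float32)
    for i in range(c):
        ch = fea[i].astype(np.float32)
        ch = (ch - ch.min()) / max(float(ch.max() - ch.min()), 1e-8)
        r, col = divmod(i, ncols)
        top = padding + r * (h + padding)
        left = padding + col * (w + padding)
        grid[top:top + h, left:left + w] = ch
    return grid
```

With the script above, a grid for fea0 could be logged as `writer.add_image('fea0_grid', tile_channels(fea0[0].detach().cpu().numpy()), 0, dataformats='HW')`. If torchvision is already imported, `make_grid(fea0[0].unsqueeze(1), nrow=8, normalize=True, scale_each=True)` gives a comparable 3-channel grid tensor without a custom helper.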

TODO:

- How can the feature responses be mapped back onto regions of the original image?