Tensorflow (8) 图解 A Neural Machine Translation Model (Mechanics of Seq2seq Models With Attention

Neural Machine Translation (seq2seq) Tutorial.(示例)

Visualizing A Neural Machine Translation Model (Mechanics of Seq2seq Models With Attention) – Jay Alammar – Visualizing machine learning one concept at a time.

基于Attention的机器翻译模型

Sequence-to-sequence models Sutskever et al., 2014, Cho et al., 2014

A sequence-to-sequence model is a model that takes a sequence of items (words, letters, features of an images…etc) and outputs another sequence of items. A trained model would work like this:

seq2seq_1

In neural machine translation, a sequence is a series of words, processed one after another. The output is, likewise, a series of words:

seq2seq_2

1、图解内部机制

模型由一个encoder和 一个 decoder组成。

encoder 处理输入序列中的每个item,就是将每个item包含的信息转换成一个向量(称 context). 输入序列中所有item处理完成后,encoder将翻译的context 传递给decoder,decoder将item逐个处理,生成输出序列.

seq2seq_3

机器翻译

seq2seq_4

在机器翻译中 context 是一个向量 (一般为一个值为数字的数组). encoder 和 decoder 一般为循环神经网络 (RNN介绍参见A friendly introduction to Recurrent Neural Networks ).

context 为浮点数值的向量. 后面的图解中将用更亮颜色显示数值大的单元格.

context向量的维度大小可以在模型设置中进行配置,一般是encoder RNN隐藏单元的个数。 上图中显示向量维度大小为4,在实际应用中context向量会为 256, 512, or 1024.

在每个time step(每个处理单词)RNN 接收两个输入:输入句子中的的一个单词 (对应encoder), 和一个hidden state。然而单词需要用向量表示,要将单词转换为向量,就需要 “word embedding” 算法,算法将单词转换到向量空间,此向量空间同时包含了单词的meaning/semanticting 信息 (e.g. king - man + woman = queen).

单词在被encoder处理之前需要先转换为向量,转换算法为word embedding ,可以用pre-trained embeddings 或者在数据集上训练自己的的embedding。Embedding向量维度大小一般为 200 or 300, 上图为了简明表示向量大小为4.

图解RNN机制

RNN_1

RNN接下来的time step接收第二个输入向量和hidden state #1 并生成此time step的输出,下面我们图解说明向量在机器翻译模型中的翻译机制

在下面的视频中说明, 在encoder or decoder每次节拍中,RNN会处理输入并生成此time step的输出。由于encoder 和 decoder都是 RNNs, 每个time step其中 一个RNN都有处理,处理就是根据当前以及前面的输入更新 hidden state.

如下视频中encoder的 hidden states 可以看到最后一个hidden state 实际上就是传递给decoder 的context向量.

seq2seq_5

decoder也会维护一个从当前time step传递到下一个time step的 hidden state ,我们只是没有在视频中标出。

我们用另一中方式图解sequence-to-sequence模型,这个动画模型可以帮助我们更好的理解。

seq2seq_6

2、Attention

在上面的模型中,context vector会是一个瓶颈。特别是处理较长的句子。在Bahdanau et al., 2014 和 Luong et al., 2015提出了一种方法称为 “Attention”,可以很大提高机器翻译系统的性能。 Attention可以让模型更聚焦于属于句子中与之相关的部分.

在time step 7中attention mechanism使得decoder在英语翻译之前聚焦于单词 "étudiant" ("student" in french) . 这种能力可以放大输入句子中与之相关部分的信号,于是可以产生比没有注意力机制模型更好的结果

我们继续在更高的抽象层面来attention models。attention model在两个方面不同于传统的sequence-to-sequence model:

首先,encoder 给 decoder传递了更多的数据信息. encoder传递所有的hidden states给decoder,而不是只传递最后一个hidden state:

seq2seq_7

其次, attention decoder 在生成输出之前有一个额外的步骤step。为了更好的聚焦与解码time step 相关的输入部分,decoder进行了如下处理:

- 查看收到的encoder hidden states – (每个encoder hidden state是对应输入句子中一个单词)

- 给每个hidden state进行打分 (let’s ignore how the scoring is done for now)

- 将每个hidden state与它的softmaxed score相乘,这样会用更高的得分放大hidden states ,并丢弃得分低的hidden states

attention_process

打分训练在decoder端的每个time step完成.

Attention 的整体工作机制如下:

- The attention decoder RNN takes in the embedding of the

token, and an initial decoder hidden state(hinit). - The RNN processes its inputs, producing an output and a new hidden state vector (h4). The output is discarded.

- Attention Step: We use the encoder hidden states and the h4 vector to calculate a context vector (C4) for this time step.

- We concatenate h4 and C4 into one vector.

- We pass this vector through a feedforward neural network (one trained jointly with the model).

- The output of the feedforward neural networks indicates the output word of this time step.

- Repeat for the next time steps

attention_tensor_dance

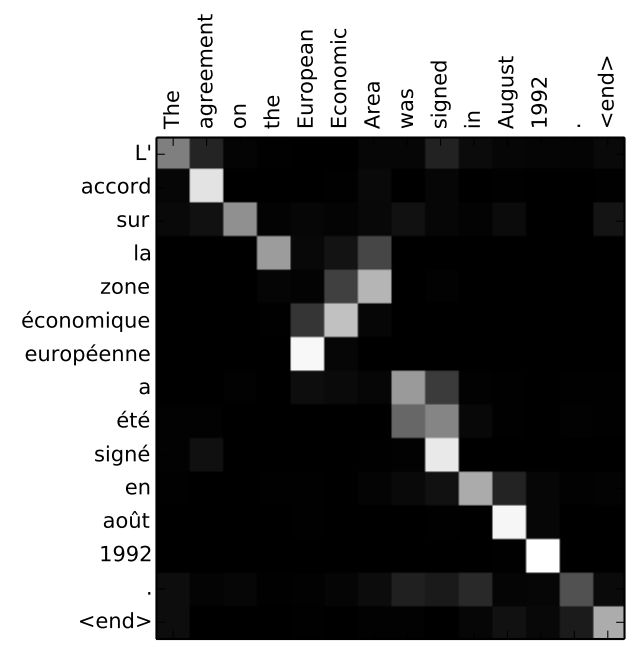

This is another way to look at which part of the input sentence we’re paying attention to at each decoding step:

seq2seq_9

Note that the model isn’t just mindless aligning the first word at the output with the first word from the input. It actually learned from the training phase how to align words in that language pair (French and English in our example). An example for how precise this mechanism can be comes from the attention papers listed above:

You can see how the model paid attention correctly when outputing "European Economic Area". In French, the order of these words is reversed ("européenne économique zone") as compared to English. Every other word in the sentence is in similar order.

示例教程Neural Machine Translation (seq2seq) Tutorial.