[Machine Learning] Cross-Validation Explained, with Code for 10 Common Validation Methods and Visualizations


I. Background

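- When the hyperparameters of an estimator are tuned until it performs best, there is a risk of overfitting to the test set: information about the test set leaks into the model, and the evaluation metrics no longer reflect generalization performance. To avoid this, a further part of the data can be held out as a validation set. The model is trained on the training set and evaluated on the validation set, and only when the experiment looks successful is a final evaluation run on the test set.

- However, splitting the original data into three sets drastically reduces the number of samples available for learning the model, and the results depend on the particular random choice of the (training, validation) pair.

- Cross-validation solves this problem. A test set is still held out for the final evaluation, but a separate validation set is no longer needed.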
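The workflow described above can be sketched as follows: hold out a test set once, cross-validate on the remaining data, and use the test set only for the final evaluation. The 40% test fraction, the SVC settings, and the variable names are illustrative assumptions, not something prescribed by the text.

```python
from sklearn import datasets, svm
from sklearn.model_selection import cross_val_score, train_test_split

X, y = datasets.load_iris(return_X_y=True)

# Hold out a test set that is only used for the final evaluation.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, random_state=0
)

clf = svm.SVC(kernel="linear", C=1)

# Model selection: 5-fold cross-validation on the training portion only.
cv_scores = cross_val_score(clf, X_train, y_train, cv=5)
print("CV accuracy: %0.2f" % cv_scores.mean())

# Final evaluation: refit on the whole training portion, score once on the test set.
test_score = clf.fit(X_train, y_train).score(X_test, y_test)
print("Test accuracy: %0.2f" % test_score)
```

No validation set appears here: each of the 5 folds takes a turn as the validation data.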

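II. Computing cross-validated metrics

1. The simplest way to use cross-validation is to call the cross_val_score helper function on the estimator and the dataset.

- The following example estimates the accuracy of a linear kernel support vector machine on the iris dataset by splitting the data, fitting a model, and computing the score 5 consecutive times (with a different split each time):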

    import numpy as np
    from sklearn import datasets
    from sklearn import svm
    from sklearn.model_selection import cross_val_score

    iris = datasets.load_iris()
    print(iris.data.shape, iris.target.shape)
    # (150, 4) (150,)

    clf = svm.SVC(kernel='linear', C=1)
    scores = cross_val_score(clf, iris.data, iris.target, cv=5)
    print(scores)
    # [0.96666667 1. 0.96666667 0.96666667 1. ]

- The mean score and an approximate 95% confidence interval of the score estimate (taken here as two standard deviations around the mean) are then given by:

    print("Accuracy: %0.2f (+/- %0.2f)" % (scores.mean(), scores.std() * 2))
    # Accuracy: 0.98 (+/- 0.03)

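2. The cross_validate function and multiple metric evaluation

- cross_validate allows several metrics to be evaluated at once. In addition to the test scores, it returns a dict containing the fit times and score times, and optionally the training scores and the fitted estimators.

- Here is an example of cross_validate using a single metric: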

    from sklearn.model_selection import cross_validate

    # clf and iris are the objects defined in the cross_val_score example above
    scores = cross_validate(clf, iris.data, iris.target,
                            scoring='precision_macro', cv=5,
                            return_estimator=True)
    print(sorted(scores.keys()))
    # ['estimator', 'fit_time', 'score_time', 'test_score']
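The multiple-metric case mentioned above can be sketched in the same way. The choice of the two scorers and the `return_train_score=True` flag are illustrative assumptions; with a list of scorer names, the result keys are prefixed with `test_` and `train_`.

```python
from sklearn import datasets, svm
from sklearn.model_selection import cross_validate

X, y = datasets.load_iris(return_X_y=True)
clf = svm.SVC(kernel="linear", C=1)

# Evaluate two metrics at once and also keep the training scores.
scoring = ["precision_macro", "recall_macro"]
multi_scores = cross_validate(
    clf, X, y, scoring=scoring, cv=5, return_train_score=True
)

print(sorted(multi_scores.keys()))
# ['fit_time', 'score_time', 'test_precision_macro', 'test_recall_macro',
#  'train_precision_macro', 'train_recall_macro']
```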

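3. Obtaining predictions by cross-validation (the cross_val_predict function)

The result of cross_val_predict may differ from that of cross_val_score, because the elements are grouped differently in the two functions. cross_val_score averages the score over the cross-validation folds, whereas cross_val_predict simply returns the labels (or probabilities) predicted by several distinct models.

- Plotting ROC curves with cross-validation: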

    import numpy as np
    import matplotlib.pyplot as plt

    from sklearn import svm, datasets
    from sklearn.metrics import auc
    from sklearn.metrics import RocCurveDisplay
    from sklearn.model_selection import StratifiedKFold

    # Data IO and generation
    # Import some data to play with
    iris = datasets.load_iris()
    X = iris.data
    y = iris.target
    X, y = X[y != 2], y[y != 2]
    n_samples, n_features = X.shape

    # Add noisy features
    random_state = np.random.RandomState(0)
    X = np.c_[X, random_state.randn(n_samples, 200 * n_features)]

    # Classification and ROC analysis
    # Run classifier with cross-validation and plot ROC curves
    cv = StratifiedKFold(n_splits=6)
    classifier = svm.SVC(kernel="linear", probability=True, random_state=random_state)

    tprs = []
    aucs = []
    mean_fpr = np.linspace(0, 1, 100)

    fig, ax = plt.subplots()
    for i, (train, test) in enumerate(cv.split(X, y)):
        classifier.fit(X[train], y[train])
        viz = RocCurveDisplay.from_estimator(
            classifier,
            X[test],
            y[test],
            name="ROC fold {}".format(i),
            alpha=0.3,
            lw=1,
            ax=ax,
        )
        interp_tpr = np.interp(mean_fpr, viz.fpr, viz.tpr)
        interp_tpr[0] = 0.0
        tprs.append(interp_tpr)
        aucs.append(viz.roc_auc)

    ax.plot([0, 1], [0, 1], linestyle="--", lw=2, color="r", label="Chance", alpha=0.8)

    mean_tpr = np.mean(tprs, axis=0)
    mean_tpr[-1] = 1.0
    mean_auc = auc(mean_fpr, mean_tpr)
    std_auc = np.std(aucs)
    ax.plot(
        mean_fpr,
        mean_tpr,
        color="b",
        label=r"Mean ROC (AUC = %0.2f $\pm$ %0.2f)" % (mean_auc, std_auc),
        lw=2,
        alpha=0.8,
    )

    std_tpr = np.std(tprs, axis=0)
    tprs_upper = np.minimum(mean_tpr + std_tpr, 1)
    tprs_lower = np.maximum(mean_tpr - std_tpr, 0)
    ax.fill_between(
        mean_fpr,
        tprs_lower,
        tprs_upper,
        color="grey",
        alpha=0.2,
        label=r"$\pm$ 1 std. dev.",
    )

    ax.set(
        xlim=[-0.05, 1.05],
        ylim=[-0.05, 1.05],
        title="Receiver operating characteristic example",
    )
    ax.legend(loc="lower right")
    plt.show()
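The ROC example builds its folds by hand. As a minimal sketch of cross_val_predict itself, the snippet below collects one out-of-fold prediction per sample and compares the pooled accuracy with the fold-averaged score from cross_val_score. The estimator settings and variable names are assumptions made for illustration.

```python
from sklearn import datasets, svm
from sklearn.metrics import accuracy_score
from sklearn.model_selection import cross_val_predict, cross_val_score

X, y = datasets.load_iris(return_X_y=True)
clf = svm.SVC(kernel="linear", C=1)

# One prediction per sample, each made by the model that did not see that sample.
y_pred = cross_val_predict(clf, X, y, cv=5)

# Accuracy over the pooled predictions vs. the mean of the per-fold accuracies.
pooled_accuracy = accuracy_score(y, y_pred)
mean_fold_accuracy = cross_val_score(clf, X, y, cv=5).mean()
print(pooled_accuracy, mean_fold_accuracy)
```

The two numbers can diverge when folds have different sizes or when the metric is not a simple per-sample average, which is why the pooled predictions are not a drop-in replacement for cross_val_score.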

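III. Cross-validation iterators: generating indices that split the data according to different cross-validation strategies

1. K-fold

KFold divides all the samples into k groups, called folds, of equal size where possible (if k = n, this is equivalent to the Leave One Out strategy). The prediction function is learned on k - 1 folds, and the fold left out is used for testing.

- Example of 2-fold cross-validation on a dataset with 4 samples: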

    import numpy as np
    from sklearn.model_selection import KFold

    X = ["a", "b", "c", "d"]
    kf = KFold(n_splits=2)
    for train, test in kf.split(X):
        print("%s %s" % (train, test))
    # [2 3] [0 1]
    # [0 1] [2 3]
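The phrase "equal size where possible" can be checked with a small sketch. With 10 samples and 3 folds (numbers chosen only for illustration), the folds cannot all be the same size, so the first fold receives the extra sample, and together the test folds cover every sample exactly once.

```python
import numpy as np
from sklearn.model_selection import KFold

X = np.arange(10)
kf = KFold(n_splits=3)

test_folds = [test for _, test in kf.split(X)]
test_sizes = [len(test) for test in test_folds]
print(test_sizes)  # [4, 3, 3]

# Every sample index appears in exactly one test fold.
covered = np.sort(np.concatenate(test_folds))
print(covered.tolist() == list(range(10)))  # True
```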

2. Repeated K-fold

RepeatedKFold repeats K-Fold n times. It can be used when KFold needs to be run n times, producing different splits in each repetition.

- Example of 2-fold K-Fold repeated 2 times:

    import numpy as np
    from sklearn.model_selection import RepeatedKFold

    X = np.array([[1, 2], [3, 4], [1, 2], [3, 4]])
    random_state = 12883823
    rkf = RepeatedKFold(n_splits=2, n_repeats=2, random_state=random_state)
    for train, test in rkf.split(X):
        print("%s %s" % (train, test))
    # [2 3] [0 1]
    # [0 1] [2 3]
    # [0 2] [1 3]
    # [1 3] [0 2]

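3. Leave One Out (LOO)

LeaveOneOut (or LOO) is a simple cross-validation. Each learning set is created by taking all the samples except one, and the test set is the sample left out. Thus, for n samples, there are n different training sets and n different test sets. This procedure does not waste much data, because only one sample is removed from the training set:

    from sklearn.model_selection import LeaveOneOut

    X = [1, 2, 3, 4]
    loo = LeaveOneOut()
    for train, test in loo.split(X):
        print("%s %s" % (train, test))
    # [1 2 3] [0]
    # [0 2 3] [1]
    # [0 1 3] [2]
    # [0 1 2] [3]

- Compared with k-fold cross-validation, LOO builds n models from n samples instead of k models, where n > k. Moreover, each model is trained on n - 1 samples rather than (k - 1) n / k. On both counts, assuming k is not too large and k < n, LOO is more computationally expensive than k-fold cross-validation.

- In terms of accuracy, LOO often results in high variance as an estimator of the test error. Intuitively, since n - 1 of the n samples are used to build each model, the models built from the folds are virtually identical to each other and to the model built from the entire training set. However, if the learning curve is steep for the training size in question, 5- or 10-fold cross-validation can overestimate the generalization error. As a general rule, most authors and the empirical evidence suggest that 5- or 10-fold cross-validation should be preferred to LOO.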
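The cost comparison can be made concrete with a short sketch. The values n = 20 and k = 5 are chosen only for illustration: LOO fits 20 models on 19 samples each, while 5-fold fits 5 models on (k - 1) n / k = 16 samples each.

```python
import numpy as np
from sklearn.model_selection import KFold, LeaveOneOut

n, k = 20, 5
X = np.zeros((n, 1))

# Size of every training set produced by each strategy.
loo_train_sizes = [len(train) for train, _ in LeaveOneOut().split(X)]
kfold_train_sizes = [len(train) for train, _ in KFold(n_splits=k).split(X)]

print(len(loo_train_sizes), set(loo_train_sizes))      # 20 {19}
print(len(kfold_train_sizes), set(kfold_train_sizes))  # 5 {16}
```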

4. Leave P Out (LPO)

LeavePOut is very similar to LeaveOneOut: it creates all the possible training/test sets by removing p samples from the complete set. For n samples, this produces C(n, p) ("n choose p") train-test pairs. Unlike LeaveOneOut and KFold, the test sets overlap when p > 1.

- Example of Leave-2-Out on a dataset with 4 samples:

    import numpy as np
    from sklearn.model_selection import LeavePOut

    X = np.ones(4)
    lpo = LeavePOut(p=2)
    for train, test in lpo.split(X):
        print("%s %s" % (train, test))
    # [2 3] [0 1]
    # [1 3] [0 2]
    # [1 2] [0 3]
    # [0 3] [1 2]
    # [0 2] [1 3]
    # [0 1] [2 3]
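As a quick check of the "n choose p" count, the sketch below enumerates the splits for n = 5 and p = 2 (values picked for illustration) and compares the total with the binomial coefficient.

```python
from math import comb

import numpy as np
from sklearn.model_selection import LeavePOut

n, p = 5, 2
X = np.ones(n)
lpo = LeavePOut(p=p)

# Count the train/test pairs by enumerating them.
n_pairs = sum(1 for _ in lpo.split(X))
print(n_pairs, comb(n, p))  # 10 10
```

Because the count grows combinatorially with n, LeavePOut quickly becomes impractical for anything but small datasets.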

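5. Random permutation cross-validation (ShuffleSplit)

The ShuffleSplit iterator generates a user-defined number of independent train/test splits. The samples are first shuffled and then split into a pair of training and test sets.

- The randomness can be made reproducible by explicitly seeding the pseudo-random number generator through random_state.

- Here is a small usage example:

    import numpy as np
    from sklearn.model_selection import ShuffleSplit

    X = np.arange(5)
    ss = ShuffleSplit(n_splits=3, test_size=0.25, random_state=0)
    for train_index, test_index in ss.split(X):
        print("%s %s" % (train_index, test_index))
    # [1 3 4] [2 0]
    # [1 4 3] [0 2]
    # [4 0 2] [1 3]

ShuffleSplit is a good alternative to KFold cross-validation because it gives finer control over the number of iterations and over the proportion of samples on each side of the train/test split.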

6. Stratified k-fold

StratifiedKFold is a variation of k-fold that returns stratified folds: each fold contains approximately the same proportion of samples of each class as the complete dataset.

- Example of stratified 3-fold cross-validation on a dataset with 10 samples from two slightly unbalanced classes:

    import numpy as np
    from sklearn.model_selection import StratifiedKFold

    X = np.ones(10)
    y = [0, 0, 0, 0, 1, 1, 1, 1, 1, 1]
    skf = StratifiedKFold(n_splits=3)
    for train, test in skf.split(X, y):
        print("%s %s" % (train, test))
    # [2 3 6 7 8 9] [0 1 4 5]
    # [0 1 3 4 5 8 9] [2 6 7]
    # [0 1 2 4 5 6 7] [3 8 9]
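The effect of stratification is easier to see on a more unbalanced dataset. The sketch below uses a made-up label vector with 45 samples of class 0 followed by 5 of class 1 and counts the classes in each test fold; the helper name `class_counts_per_test_fold` is hypothetical.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold

# Made-up labels: 45 samples of class 0 followed by 5 samples of class 1.
y = np.array([0] * 45 + [1] * 5)
X = np.zeros((len(y), 1))


def class_counts_per_test_fold(cv):
    """Return [count of class 0, count of class 1] for each test fold."""
    return [np.bincount(y[test], minlength=2).tolist() for _, test in cv.split(X, y)]


plain_counts = class_counts_per_test_fold(KFold(n_splits=5))
stratified_counts = class_counts_per_test_fold(StratifiedKFold(n_splits=5))

print(plain_counts)       # [[10, 0], [10, 0], [10, 0], [10, 0], [5, 5]]
print(stratified_counts)  # [[9, 1], [9, 1], [9, 1], [9, 1], [9, 1]]
```

With plain KFold, four of the five test folds contain no sample of the minority class, whereas every stratified fold keeps the 9:1 ratio of the full dataset.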

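7. Group k-fold

GroupKFold is a variation of k-fold which ensures that the same group is not represented in both the test and the training set. For example, if the data was collected from different subjects with several samples per subject, and the model is flexible enough to learn from highly person-specific features, it may fail to generalize to new subjects. GroupKFold makes it possible to detect this kind of overfitting.

- Suppose there are three subjects, each associated with a number from 1 to 3:

    from sklearn.model_selection import GroupKFold

    X = [0.1, 0.2, 2.2, 2.4, 2.3, 4.55, 5.8, 8.8, 9, 10]
    y = ["a", "b", "b", "b", "c", "c", "c", "d", "d", "d"]
    groups = [1, 1, 1, 2, 2, 2, 3, 3, 3, 3]

    gkf = GroupKFold(n_splits=3)
    for train, test in gkf.split(X, y, groups=groups):
        print("%s %s" % (train, test))
    # [0 1 2 3 4 5] [6 7 8 9]
    # [0 1 2 6 7 8 9] [3 4 5]
    # [3 4 5 6 7 8 9] [0 1 2]

- Each subject ends up in a different test fold, and no subject appears in both the training and the test set. Because the data is unbalanced, the folds do not all have exactly the same size.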
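The guarantee that no group is shared between training and test data can be verified directly. This is a small sketch with the same group layout as the example; the variable name `overlaps` is an assumption for illustration.

```python
import numpy as np
from sklearn.model_selection import GroupKFold

X = np.arange(10)
groups = np.array([1, 1, 1, 2, 2, 2, 3, 3, 3, 3])

overlaps = []
for train, test in GroupKFold(n_splits=3).split(X, groups=groups):
    # Groups that occur on both sides of the split (should be none).
    shared = set(groups[train].tolist()) & set(groups[test].tolist())
    overlaps.append(shared)
    print(sorted(set(groups[test].tolist())), "shared groups:", shared)
```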

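8. Leave One Group Out

LeaveOneGroupOut is a cross-validation scheme that holds out samples according to an array of integer groups supplied by a third party. This group information can be used to encode arbitrary domain-specific predefined cross-validation folds.

- Each training set consists of all the samples except those belonging to one specific group.

- For example, when there are multiple experiments, LeaveOneGroupOut can be used to build a cross-validation based on the different experiments: each training set is created from the samples of all experiments except one:

    from sklearn.model_selection import LeaveOneGroupOut

    X = [1, 5, 10, 50, 60, 70, 80]
    y = [0, 1, 1, 2, 2, 2, 2]
    groups = [1, 1, 2, 2, 3, 3, 3]
    logo = LeaveOneGroupOut()
    for train, test in logo.split(X, y, groups=groups):
        print("%s %s" % (train, test))
    # [2 3 4 5 6] [0 1]
    # [0 1 4 5 6] [2 3]
    # [0 1 2 3] [4 5 6]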

9. Leave P Groups Out

LeavePGroupsOut is similar to LeaveOneGroupOut, but it removes the samples related to P groups for each training/test set.

- Example of Leave-2-Group Out:

    import numpy as np
    from sklearn.model_selection import LeavePGroupsOut

    X = np.arange(6)
    y = [1, 1, 1, 2, 2, 2]
    groups = [1, 1, 2, 2, 3, 3]
    lpgo = LeavePGroupsOut(n_groups=2)
    for train, test in lpgo.split(X, y, groups=groups):
        print("%s %s" % (train, test))
    # [4 5] [0 1 2 3]
    # [2 3] [0 1 4 5]
    # [0 1] [2 3 4 5]

10. Group Shuffle Split

The GroupShuffleSplit iterator behaves as a combination of ShuffleSplit and LeavePGroupsOut. It generates a sequence of randomized partitions in which a subset of the groups is held out for each split.

- Here is a usage example:

    from sklearn.model_selection import GroupShuffleSplit

    X = [0.1, 0.2, 2.2, 2.4, 2.3, 4.55, 5.8, 0.001]
    y = ["a", "b", "b", "b", "c", "c", "c", "a"]
    groups = [1, 1, 2, 2, 3, 3, 4, 4]
    gss = GroupShuffleSplit(n_splits=4, test_size=0.5, random_state=0)
    for train, test in gss.split(X, y, groups=groups):
        print("%s %s" % (train, test))
    # [0 1 2 3] [4 5 6 7]
    # [2 3 6 7] [0 1 4 5]
    # [2 3 4 5] [0 1 6 7]
    # [4 5 6 7] [0 1 2 3]

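IV. Predefined fold splits / validation sets

- For some datasets, a predefined split of the data into a training and a validation set, or into several cross-validation folds, already exists. PredefinedSplit makes it possible to use these folds, for example when searching for hyperparameters.

- For example, when using a validation set, set test_fold to 0 for all samples that belong to the validation set and to -1 for all other samples.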
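The test_fold convention described above can be sketched as follows. The six-sample layout, with the last two samples acting as the validation set, is a made-up example.

```python
import numpy as np
from sklearn.model_selection import PredefinedSplit

# -1: the sample is never placed in a test fold (training only); 0: validation fold 0.
test_fold = np.array([-1, -1, -1, -1, 0, 0])
ps = PredefinedSplit(test_fold)

splits = [(train.tolist(), test.tolist()) for train, test in ps.split()]
print(ps.get_n_splits(), splits)  # 1 [([0, 1, 2, 3], [4, 5])]
```

The resulting object can be passed as the `cv` argument of a hyperparameter search such as GridSearchCV, so that the search is scored on the fixed validation set.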

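V. Cross-validation of time series data

TimeSeriesSplit is a variation of k-fold which returns the first k folds as the training set and the (k+1)-th fold as the test set. Unlike standard cross-validation methods, each successive training set is a superset of the ones before it. In addition, it adds all surplus data to the first training partition, which is always used to train the model.

- This class can be used to cross-validate time series samples observed at fixed time intervals.

- Example of 3-split time series cross-validation on a dataset with 6 samples:

    import numpy as np
    from sklearn.model_selection import TimeSeriesSplit

    X = np.array([[1, 2], [3, 4], [1, 2], [3, 4], [1, 2], [3, 4]])
    y = np.array([1, 2, 3, 4, 5, 6])
    tscv = TimeSeriesSplit(n_splits=3)
    print(tscv)
    # e.g. TimeSeriesSplit(max_train_size=None, n_splits=3)
    # (the exact repr depends on the scikit-learn version)
    for train, test in tscv.split(X):
        print("%s %s" % (train, test))
    # [0 1 2] [3]
    # [0 1 2 3] [4]
    # [0 1 2 3 4] [5]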
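The superset property and the handling of surplus samples are easier to see on a slightly larger dataset. In this sketch (10 samples, chosen for illustration) the test folds have 2 samples each, so the first training set absorbs the 4 samples that remain.

```python
import numpy as np
from sklearn.model_selection import TimeSeriesSplit

X = np.arange(10).reshape(-1, 1)
tscv = TimeSeriesSplit(n_splits=3)

splits = [(train.tolist(), test.tolist()) for train, test in tscv.split(X)]
for train, test in splits:
    print(train, test)
# [0, 1, 2, 3] [4, 5]
# [0, 1, 2, 3, 4, 5] [6, 7]
# [0, 1, 2, 3, 4, 5, 6, 7] [8, 9]

# Each training set contains the previous one, and test data always lies in the future.
is_superset = all(
    set(splits[i][0]) <= set(splits[i + 1][0]) for i in range(len(splits) - 1)
)
print(is_superset)  # True
```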

VI. Visualization

    from sklearn.model_selection import (
        TimeSeriesSplit,
        KFold,
        ShuffleSplit,
        StratifiedKFold,
        GroupShuffleSplit,
        GroupKFold,
        StratifiedShuffleSplit,
        StratifiedGroupKFold,
    )
    import numpy as np
    import matplotlib.pyplot as plt
    from matplotlib.patches import Patch

    np.random.seed(1338)
    cmap_data = plt.cm.Paired
    cmap_cv = plt.cm.coolwarm
    n_splits = 4

    # Generate the class/group data
    n_points = 100
    X = np.random.randn(100, 10)

    percentiles_classes = [0.1, 0.3, 0.6]
    y = np.hstack([[ii] * int(100 * perc) for ii, perc in enumerate(percentiles_classes)])

    # Evenly spaced groups repeated once
    groups = np.hstack([[ii] * 10 for ii in range(10)])


    def visualize_groups(classes, groups, name):
        # Visualize dataset groups
        fig, ax = plt.subplots()
        ax.scatter(
            range(len(groups)),
            [0.5] * len(groups),
            c=groups,
            marker="_",
            lw=50,
            cmap=cmap_data,
        )
        ax.scatter(
            range(len(groups)),
            [3.5] * len(groups),
            c=classes,
            marker="_",
            lw=50,
            cmap=cmap_data,
        )
        ax.set(
            ylim=[-1, 5],
            yticks=[0.5, 3.5],
            yticklabels=["Data\ngroup", "Data\nclass"],
            xlabel="Sample index",
        )


    visualize_groups(y, groups, "no groups")

This produces a figure showing the group and the class of every sample.

    def plot_cv_indices(cv, X, y, group, ax, n_splits, lw=10):
        """Create a sample plot for indices of a cross-validation object."""

        # Generate the training/testing visualizations for each CV split
        for ii, (tr, tt) in enumerate(cv.split(X=X, y=y, groups=group)):
            # Fill in indices with the training/test groups
            indices = np.array([np.nan] * len(X))
            indices[tt] = 1
            indices[tr] = 0

            # Visualize the results
            ax.scatter(
                range(len(indices)),
                [ii + 0.5] * len(indices),
                c=indices,
                marker="_",
                lw=lw,
                cmap=cmap_cv,
                vmin=-0.2,
                vmax=1.2,
            )

        # Plot the data classes and groups at the end
        ax.scatter(
            range(len(X)), [ii + 1.5] * len(X), c=y, marker="_", lw=lw, cmap=cmap_data
        )
        ax.scatter(
            range(len(X)), [ii + 2.5] * len(X), c=group, marker="_", lw=lw, cmap=cmap_data
        )

        # Formatting
        yticklabels = list(range(n_splits)) + ["class", "group"]
        ax.set(
            yticks=np.arange(n_splits + 2) + 0.5,
            yticklabels=yticklabels,
            xlabel="Sample index",
            ylabel="CV iteration",
            ylim=[n_splits + 2.2, -0.2],
            xlim=[0, 100],
        )
        ax.set_title("{}".format(type(cv).__name__), fontsize=15)
        return ax


    fig, ax = plt.subplots()
    cv = KFold(n_splits)
    plot_cv_indices(cv, X, y, groups, ax, n_splits)

生成k折的可视化图:

uneven_groups = np.sort(np.random.randint(0, 10, n_points))

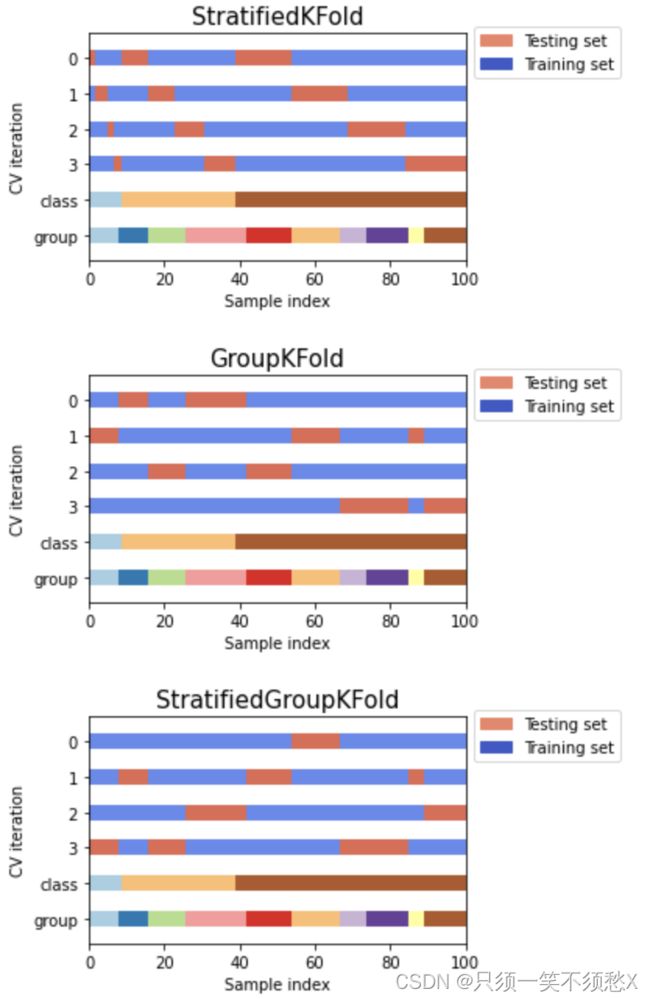

cvs = [StratifiedKFold, GroupKFold, StratifiedGroupKFold]

for cv in cvs:

fig, ax = plt.subplots(figsize=(6, 3))

plot_cv_indices(cv(n_splits), X, y, uneven_groups, ax, n_splits)

ax.legend(

[Patch(color=cmap_cv(0.8)), Patch(color=cmap_cv(0.02))],

["Testing set", "Training set"],

loc=(1.02, 0.8),

)

# Make the legend fit

plt.tight_layout()

fig.subplots_adjust(right=0.7)

This generates the visualizations for StratifiedKFold, GroupKFold, and StratifiedGroupKFold.

    cvs = [
        KFold,
        GroupKFold,
        ShuffleSplit,
        StratifiedKFold,
        StratifiedGroupKFold,
        GroupShuffleSplit,
        StratifiedShuffleSplit,
        TimeSeriesSplit,
    ]

    for cv in cvs:
        this_cv = cv(n_splits=n_splits)
        fig, ax = plt.subplots(figsize=(6, 3))
        plot_cv_indices(this_cv, X, y, groups, ax, n_splits)

        ax.legend(
            [Patch(color=cmap_cv(0.8)), Patch(color=cmap_cv(0.02))],
            ["Testing set", "Training set"],
            loc=(1.02, 0.8),
        )
        # Make the legend fit
        plt.tight_layout()
        fig.subplots_adjust(right=0.7)
    plt.show()

This generates the visualizations for KFold, GroupKFold, ShuffleSplit, StratifiedKFold, StratifiedGroupKFold, GroupShuffleSplit, StratifiedShuffleSplit, and TimeSeriesSplit.

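VII. Notes

- If the ordering of the data is not arbitrary (for example, samples with the same label are contiguous), shuffling it first may be essential to obtain a meaningful cross-validation result. However, the opposite may be true when the samples are not independently and identically distributed. For example, if the samples are related articles ordered by publication time, shuffling the data will likely lead to an overfit model and an inflated validation score, because the validation samples are artificially similar (close in time) to the training samples.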
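The first point can be illustrated with the iris dataset, whose labels are stored sorted by class. In this sketch (the helper name `classes_per_test_fold` and the seed are assumptions), an unshuffled 3-fold split puts a single class in each test fold, so every model is tested on a class it never saw during training; passing `shuffle=True` to KFold mixes the classes.

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import KFold

# iris labels are sorted: 50 samples of class 0, then 50 of class 1, then 50 of class 2.
X, y = datasets.load_iris(return_X_y=True)


def classes_per_test_fold(cv):
    """Return the distinct class labels present in each test fold."""
    return [np.unique(y[test]).tolist() for _, test in cv.split(X)]


unshuffled = classes_per_test_fold(KFold(n_splits=3))
shuffled = classes_per_test_fold(KFold(n_splits=3, shuffle=True, random_state=0))

print(unshuffled)  # [[0], [1], [2]]
print(shuffled)    # each test fold now mixes several classes
```

For time-ordered data the same `shuffle=True` option would be the wrong choice, and an order-preserving splitter such as TimeSeriesSplit is the safer default.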