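[Summary] Keras + VGG16 feature map visualization, to help you understand VGG16 in depth

Keras + VGG16 feature map visualization

- I. Understanding the VGG16 architecture

  - 1. Visualized architecture diagram

  - 2. Architecture of each VGGNet configuration

  - 3. VGG16 network architecture diagram

- II. Implementing VGG16 in Keras

  - Code implementation

- III. Visualizing VGG16 feature maps

  - Code implementation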

一、VGG16结构理解

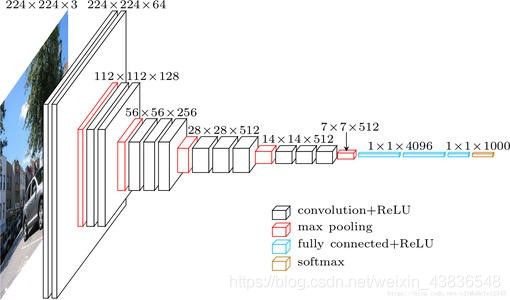

1. 可视化结构图

2. VGGNet各级别网络结构图

3. VGG16网络结构图

总结:三种不同的形式,方便大家对VGG16架构有更为直观的认识。
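To complement the diagrams, here is a minimal sketch that traces how the feature map shape changes block by block. It assumes the standard VGG16 layout (five blocks of 3x3 convolutions with 'same' padding, each followed by a 2x2 max-pool with stride 2); the names `VGG16_BLOCKS`, `trace_vgg16_shapes` and `count_weight_layers` are made up for this illustration.

```python
# Standard VGG16 layout: (number of 3x3 conv layers, output channels) per block.
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def trace_vgg16_shapes(height, width):
    """Return the (height, width, channels) shape after each conv block.

    Assumes 'same' padding in the convolutions (spatial size unchanged)
    and one 2x2, stride-2 max-pool at the end of each block (size halved).
    """
    shapes = []
    for _num_convs, channels in VGG16_BLOCKS:
        height, width = height // 2, width // 2  # effect of the pooling layer
        shapes.append((height, width, channels))
    return shapes


def count_weight_layers(num_dense=3):
    """Conv layers plus fully connected layers: the '16' in VGG16."""
    return sum(num_convs for num_convs, _ in VGG16_BLOCKS) + num_dense


# A 32x32 CIFAR-10 image shrinks to 1x1x512 before the dense layers.
for block, shape in enumerate(trace_vgg16_shapes(32, 32), start=1):
    print("block", block, "->", shape)
print("weight layers:", count_weight_layers())
```

For a 32×32 input the last block ends at 1×1×512, which matches the `#layer14 1*1*512` comment in the Keras code of the next section.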

II. Implementing VGG16 in Keras

Code implementation

# -*- coding: utf-8 -*-

"""

Created on Tue Apr 7 09:15:36 2020

@author: wuzhendong

"""

import keras

# CIFAR-10 dataset: 60,000 color images of size 32*32 in 10 classes, 6,000 images per class

from keras.datasets import cifar10

from keras.models import Sequential

from keras.layers import Dense, Dropout, Activation, Flatten

from keras.layers import Conv2D, MaxPooling2D, BatchNormalization

from keras.optimizers import SGD

from keras import regularizers

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

from sklearn.metrics import classification_report

# The dataset is downloaded automatically (about 163 MB); if the download fails, fetch it manually

# Download link: https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

x_train = x_train.astype('float32')

x_test = x_test.astype('float32')

y_train = keras.utils.to_categorical(y_train, 10)

y_test = keras.utils.to_categorical(y_test, 10)

# Split the training data 70/30 into a training set and a validation set

x_train, x_val, y_train, y_val = train_test_split(x_train, y_train, test_size = 0.3)

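# Coefficient that controls how strongly the weights are penalized during regularization

weight_decay = 0.0005 # weight decay (L2 regularization), used to reduce overfitting

nb_epoch=50 # train for 50 epochs

batch_size=32 # 32 images per batch (one gradient update per batch)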

#layer1 32*32*3

model = Sequential()

# First conv layer: 64 kernels of size 3*3; the Keras conv stride defaults to 1*1

# With stride=1*1 and padding='same' the output keeps the spatial size of the input, so it is still 32*32 after this layer

model.add(Conv2D(64, (3, 3), padding='same',strides=(1, 1),

input_shape=(32,32,3),kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

# Apply batch normalization

model.add(BatchNormalization())

model.add(Dropout(0.3))

#layer2 32*32*64

model.add(Conv2D(64, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

# Keras pooling defaults: strides equal pool_size (2*2 here) and padding is 'valid'; padding='same' is set explicitly below. Output shape is 16*16*64

model.add(MaxPooling2D(pool_size=(2, 2),strides=(2,2),padding='same'))

#layer3 16*16*64

model.add(Conv2D(128, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

model.add(Dropout(0.4))

#layer4 16*16*128

model.add(Conv2D(128, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

model.add(MaxPooling2D(pool_size=(2, 2)))

#layer5 8*8*128

model.add(Conv2D(256, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

model.add(Dropout(0.4))

#layer6 8*8*256

model.add(Conv2D(256, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

model.add(Dropout(0.4))

#layer7 8*8*256

model.add(Conv2D(256, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

model.add(MaxPooling2D(pool_size=(2, 2)))

#layer8 4*4*256

model.add(Conv2D(512, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

model.add(Dropout(0.4))

#layer9 4*4*512

model.add(Conv2D(512, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

model.add(Dropout(0.4))

#layer10 4*4*512

model.add(Conv2D(512, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

model.add(MaxPooling2D(pool_size=(2, 2)))

#layer11 2*2*512

model.add(Conv2D(512, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

model.add(Dropout(0.4))

#layer12 2*2*512

model.add(Conv2D(512, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

model.add(Dropout(0.4))

#layer13 2*2*512

model.add(Conv2D(512, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.5))

#layer14 1*1*512

model.add(Flatten())

model.add(Dense(512,kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

#layer15 512

model.add(Dense(512,kernel_regularizer=regularizers.l2(weight_decay)))

model.add(Activation('relu'))

model.add(BatchNormalization())

#layer16 512

model.add(Dropout(0.5))

model.add(Dense(10))

model.add(Activation('softmax'))

# 10

model.summary()

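# learning_rate replaces the older lr argument name; the decay argument is only accepted by older Keras optimizers

sgd = SGD(learning_rate=0.01, decay=1e-6, momentum=0.9, nesterov=True)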

model.compile(loss='categorical_crossentropy', optimizer=sgd,metrics=['accuracy'])

# Train

model.fit(x_train, y_train, epochs=nb_epoch, batch_size=batch_size,

validation_split=0.1, verbose=1)

# Evaluate loss and accuracy on the validation set

loss, acc = model.evaluate(x_val, y_val, batch_size=batch_size, verbose=1, sample_weight=None)

print("accuracy:",acc)

print("loss",loss)

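# Inference

# argmax over the softmax output gives the predicted class index; it stands in for predict_classes(), which newer Keras releases no longer provide

y_pred = model.predict(x_test, batch_size=32, verbose=0).argmax(axis=-1)

y_pred = keras.utils.to_categorical(y_pred, 10)

# Accuracy on the test set

print("accuracy score:", accuracy_score(y_test, y_pred))

# Classification report

print(classification_report(y_test, y_pred))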

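# Save the model

# The official docs advise against using pickle or cPickle to save Keras models

model.save('keras_vgg16_cifar10.h5')

# The .h5 file is an HDF5 file that contains the model architecture, the weights, the training configuration (loss, optimizer, etc.) and the optimizer state

# Use keras.models.load_model(filepath) to re-instantiate the model

# Save only the model architecture

# save as JSON

json_string = model.to_json()

# save as YAML (to_yaml() is unavailable in newer Keras releases; use to_json() there)

yaml_string = model.to_yaml()

# Save only the model weights

model.save_weights('my_model_weights.h5')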

!!! Workaround when loading the CIFAR-10 dataset fails

On an unstable network, the automatic download triggered by (x_train, y_train), (x_test, y_test) = cifar10.load_data() may fail. The workaround is:

- Download the archive with Xunlei (or any download manager): http://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz

- Rename the file to: cifar-10-batches-py.tar.gz

- Move the archive to ~/.keras/datasets/

- Extract it

The next call to (x_train, y_train), (x_test, y_test) = cifar10.load_data() will then load the local copy automatically.
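The rename, move and extract steps above can also be scripted. The sketch below is only an illustration using the Python standard library: the function name `install_cifar10_archive` and its parameters are hypothetical, and it copies the file rather than moving it so that the original download is kept.

```python
import os
import shutil
import tarfile


def install_cifar10_archive(downloaded_path, datasets_dir=None):
    """Copy a manually downloaded CIFAR-10 archive into the Keras cache and extract it.

    downloaded_path: where cifar-10-python.tar.gz was saved.
    datasets_dir: Keras dataset cache; defaults to ~/.keras/datasets.
    Returns the path of the renamed archive inside the cache.
    """
    if datasets_dir is None:
        datasets_dir = os.path.join(os.path.expanduser("~"), ".keras", "datasets")
    os.makedirs(datasets_dir, exist_ok=True)

    # Rename + move: the archive is stored under the name given in the steps above.
    target = os.path.join(datasets_dir, "cifar-10-batches-py.tar.gz")
    shutil.copyfile(downloaded_path, target)

    # Extract next to the archive.
    with tarfile.open(target, "r:gz") as archive:
        archive.extractall(datasets_dir)
    return target
```

A call such as `install_cifar10_archive("cifar-10-python.tar.gz")` (the path is whatever location you downloaded to) would then populate `~/.keras/datasets/`.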

III. Visualizing VGG16 feature maps

Code implementation

# -*- coding: utf-8 -*-

"""

Created on Tue Apr 7 11:57:10 2020

@author: wuzhendong

"""

from keras.models import Sequential

from keras.layers import Dense, Dropout, Activation, Flatten

from keras.layers import Conv2D, MaxPooling2D, BatchNormalization

import cv2

import os

import shutil

import numpy as np

import matplotlib.pyplot as plt

from keras import regularizers

# Network model

def create_model():

    # Coefficient that controls how strongly the weights are penalized during regularization

    weight_decay = 0.0005 # weight decay (L2 regularization), used to reduce overfitting

    #layer1 32*32*3

    model = Sequential()

    # First conv layer: 64 kernels of size 3*3; the Keras conv stride defaults to 1*1

    # With stride=1*1 and padding='same' the output keeps the spatial size of the input, so it is still 32*32 after this layer

    model.add(Conv2D(64, (3, 3), padding='same',strides=(1, 1),

                     input_shape=(32,32,3),kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    # Apply batch normalization

    model.add(BatchNormalization())

    model.add(Dropout(0.3))

    #layer2 32*32*64

    model.add(Conv2D(64, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    # Keras pooling defaults: strides equal pool_size (2*2 here) and padding is 'valid'; padding='same' is set explicitly below. Output shape is 16*16*64

    model.add(MaxPooling2D(pool_size=(2, 2),strides=(2,2),padding='same'))

    #layer3 16*16*64

    model.add(Conv2D(128, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    model.add(Dropout(0.4))

    #layer4 16*16*128

    model.add(Conv2D(128, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    model.add(MaxPooling2D(pool_size=(2, 2)))

    #layer5 8*8*128

    model.add(Conv2D(256, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    model.add(Dropout(0.4))

    #layer6 8*8*256

    model.add(Conv2D(256, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    model.add(Dropout(0.4))

    #layer7 8*8*256

    model.add(Conv2D(256, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    model.add(MaxPooling2D(pool_size=(2, 2)))

    #layer8 4*4*256

    model.add(Conv2D(512, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    model.add(Dropout(0.4))

    #layer9 4*4*512

    model.add(Conv2D(512, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    model.add(Dropout(0.4))

    #layer10 4*4*512

    model.add(Conv2D(512, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    model.add(MaxPooling2D(pool_size=(2, 2)))

    #layer11 2*2*512

    model.add(Conv2D(512, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    model.add(Dropout(0.4))

    #layer12 2*2*512

    model.add(Conv2D(512, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    model.add(Dropout(0.4))

    #layer13 2*2*512

    model.add(Conv2D(512, (3, 3), padding='same',kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    model.add(MaxPooling2D(pool_size=(2, 2)))

    model.add(Dropout(0.5))

    #layer14 1*1*512

    model.add(Flatten())

    model.add(Dense(512,kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    #layer15 512

    model.add(Dense(512,kernel_regularizer=regularizers.l2(weight_decay)))

    model.add(Activation('relu'))

    model.add(BatchNormalization())

    #layer16 512

    model.add(Dropout(0.5))

    model.add(Dense(10))

    model.add(Activation('softmax'))

    return model

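# Save the feature maps

def save_conv_img(conv_img):

    # conv_img has shape (1, H, W, C); drop the batch dimension

    feature_maps = np.squeeze(conv_img,axis=0)

    img_num = feature_maps.shape[2] # one feature map per convolution kernel

    all_feature_maps = []

    for i in range(0,img_num):

        single_feature_map = feature_maps[:,:,i]

        all_feature_maps.append(single_feature_map)

        plt.imshow(single_feature_map)

        plt.savefig('./feature_map/'+'feature_{}'.format(i))

    # Element-wise sum of all feature maps

    sum_feature_map = sum(feature_map for feature_map in all_feature_maps)

    plt.imshow(sum_feature_map)

    plt.savefig("./feature_map/feature_map_sum.png")

# Create an empty ./feature_map directory, removing any previous one

def create_dir():

    if not os.path.exists('./feature_map'):

        os.mkdir('./feature_map')

    else:

        shutil.rmtree('./feature_map')

        os.mkdir('./feature_map')

if __name__ =='__main__':

    img = cv2.imread('cat_1.jpg')

    print(img.shape)

    create_dir()

    # create_model() builds a freshly initialized network; no trained weights are loaded here

    # With the full model the output is a (1, 10) vector of class scores, so truncate create_model() at the layer of interest first

    model = create_model()

    img_batch = np.expand_dims(img,axis=0)

    conv_img = model.predict(img_batch) # predict() expects a 4-D batch

    save_conv_img(conv_img)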

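To display the feature maps of a particular layer, comment out every layer after it in create_model(). For example, to inspect layer 7, comment out all the code that follows Layer 7.

The create_model() function is identical to the VGG16 implementation in the previous section.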
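As an alternative to commenting layers out, Keras can build a sub-model that stops at a chosen layer. This is not the approach used in the code above, only a sketch of another option. It uses a tiny stand-in network instead of the VGG16 above so that it runs quickly; the layer names `conv_a` and `conv_b` and the random input image are assumptions made for this illustration.

```python
import numpy as np
from keras.models import Model, Sequential
from keras.layers import Conv2D, MaxPooling2D

# Tiny stand-in network (NOT the VGG16 defined above), named layers for lookup.
model = Sequential()
model.add(Conv2D(8, (3, 3), padding='same', activation='relu',
                 input_shape=(32, 32, 3), name='conv_a'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Conv2D(16, (3, 3), padding='same', activation='relu', name='conv_b'))
model.add(MaxPooling2D(pool_size=(2, 2)))

# Sub-model that shares the same layers and weights but ends at 'conv_b'.
feature_model = Model(inputs=model.inputs,
                      outputs=model.get_layer('conv_b').output)

img_batch = np.random.rand(1, 32, 32, 3).astype('float32')  # placeholder image
conv_img = feature_model.predict(img_batch, verbose=0)
print(conv_img.shape)  # 4-D: (batch, height, width, channels)
```

The resulting `conv_img` is a 4-D array, so it could be passed to save_conv_img() in the same way as the output of a truncated create_model().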

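Note: input_shape=(32, 32, 3) in the first layer means the input image must be exactly 32×32, so this value has to match the size of your input image. For example, for a 640 (W: width) × 480 (H: height) image, change it to input_shape=(480, 640, 3).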
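The height-first ordering is easy to get wrong, so here is a small sketch in plain Python. The helper names `keras_input_shape` and `feature_map_shape` are hypothetical, and the size calculation assumes every pooling layer before the layer of interest halves the spatial size exactly, which holds when the sizes stay even.

```python
def keras_input_shape(width, height, channels=3):
    """Return input_shape in (height, width, channels) order.

    This is also the order of img.shape for an image read with cv2.imread.
    """
    return (height, width, channels)


def feature_map_shape(width, height, num_pools_before, num_kernels):
    """Output shape of a 'same'-padded conv layer after num_pools_before 2x2 poolings."""
    scale = 2 ** num_pools_before
    return (height // scale, width // scale, num_kernels)


print(keras_input_shape(640, 480))          # (480, 640, 3)
# Layer 7 in create_model() sits after two pooling layers and has 256 kernels.
print(feature_map_shape(640, 480, 2, 256))  # (120, 160, 256)
```

So for a 640×480 input, layer 7 would yield 256 feature maps of 120×160 pixels each, one per kernel.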

Example: input image of 640 (W: width) × 480 (H: height)

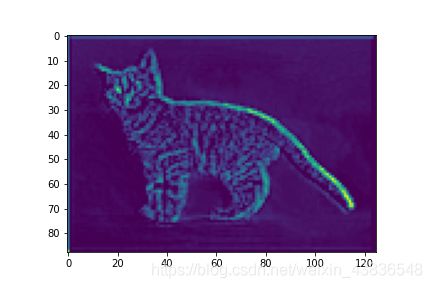

原图

原图

第七层第3个卷积核产生的特征图

第七层第3个卷积核产生的特征图

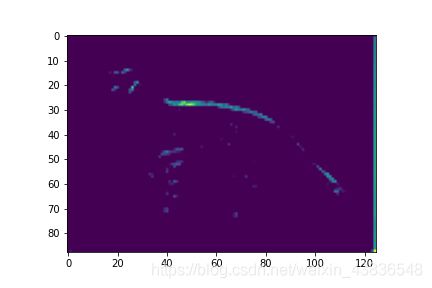

第七层第49个卷积核产生的特征图

第七层第49个卷积核产生的特征图

第七层第256个卷积核产生的特征图

第七层第256个卷积核产生的特征图 原图

原图 第七层第3个卷积核产生的特征图

第七层第3个卷积核产生的特征图

第七层第256个卷积核产生的特征图

第七层第256个卷积核产生的特征图

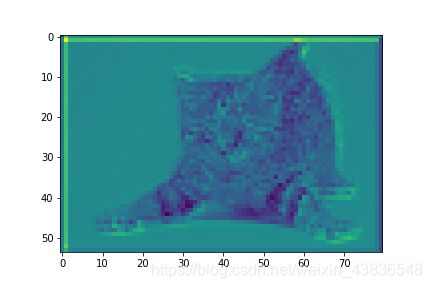

第十三层第3个卷积核产生的特征图

第十三层第3个卷积核产生的特征图

第十三层第256个卷积核产生的特征图

第十三层第256个卷积核产生的特征图