CentOS7搭建hadoop集群详细步骤

一、准备阶段:

- 准备一台服务器作为原始机:配置例如内存4G、硬盘50G;

- 安装一些必要软件(服务器需联网):

yum install -y epel-release

yum install -y psmisc nc net-tools rsync vim lrzsz ntp libzstd openssl-static tree iotop git

- 关闭防火墙并且设置开机自启

systemctl stop firewalld

systemctl disable firewalld

- 创建用户、设置密码并设置其拥有root权限,例:

adduser wyq

passwd wyq

123456

#(不用在意提示)

123456

vim /etc/sudoers

#找到root ALL=(ALL) ALL这一行,输入i进入编辑模式,另起一行输入

wyq ALL=(ALL) ALL

#然后按Esc键退出编辑模式,进入一般模式,再输入 :wq 保存退出

- 在/opt下创建两个文件目录:software和module

mkdir /opt/software

mkdir /opt/module

- 设置其所有者和所属组为wyq

chown wyq:wyq /opt/software

chown wyq:wyq /opt/module

- 卸载虚拟机自带的open JDK(如果是新创建的就不用执行此步骤)

rmp -qa | grep -i java | xargs -nl rpm -e --nodeps

- 关机

shutdown -h now

二、集群搭建(本地模式)

- 从原始机克隆出需要数量的服务器,例如3台Hadoop101,Hadoop102,Hadoop103

- 修改这三台服务器的主机名,例如第一台:

vim /etc/hostname

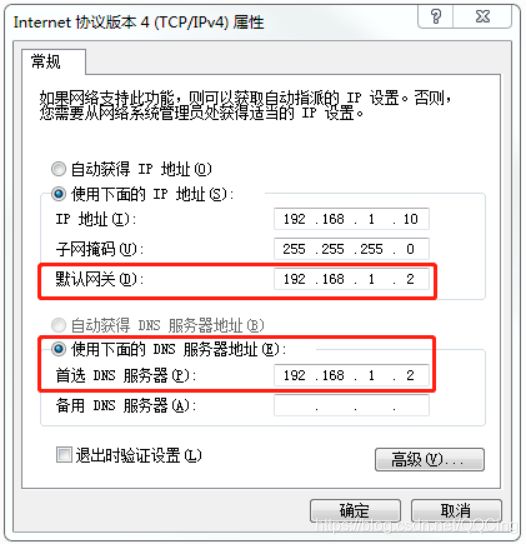

- 修改其ip地址,并将其设定为静态ip

vim /etc/sysconfig/network-scripts/ifcfg-ens33

#将BOOTPROTO设置为

BOOTPROTO=static

ONBOOT=yes

IPADDR=192.168.你的网段.101

GATEWAY=192.168.你的网段.2

DNS1=192.168.你的网段.2

#网段和下方虚拟机的虚拟网卡的第三位是一样的

- 配置linux克隆机主机名称映射hosts文件

vim /etc/hosts

#向其中添加

192.168.网段.101 Hadoop101

192.168.网段.102 Hadoop102

192.168.网段.103 Hadoop103

....

- 重启服务器

reboot

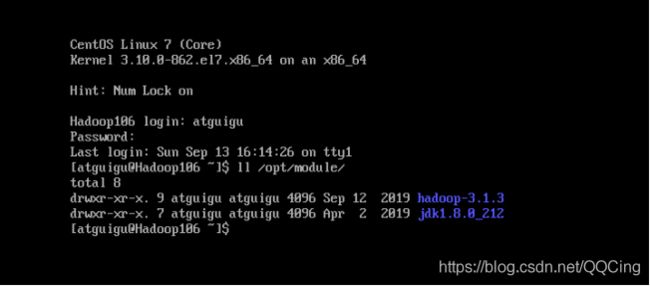

- 登录自己创建的用户,并在Hadoop101中安装JDK和Hadoop

6.1、使用远程连接工具例如Finalshell将JDK和Hadoop上传到Linux的/opt/software下

这里上传的是JDK1.8和hadoop3.1.3

6.2、解压JDK、hadoop到module中

tar -zxvf /opt/software/jdk-8u212-linux-x64.tar.gz -C /opt/module/

tar -zxvf /opt/software/hadoop-3.1.3.tar.gz -C /opt/module/

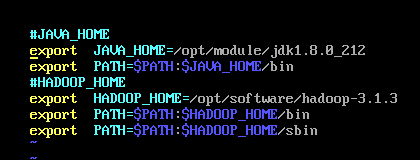

6.3、配置环境变量

sudo vim /etc/profile.d/my_env.sh

#内容为:

#JAVA_HOME

export JAVA_HOME=/opt/module/jdk1.8.0_212

export PATH=$PATH:$JAVA_HOME/bin

#HADOOP_HOME

export HADOOP_HOME=/opt/module/hadoop-3.1.3

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

6.4、让环境变量生效

source /etc/profile

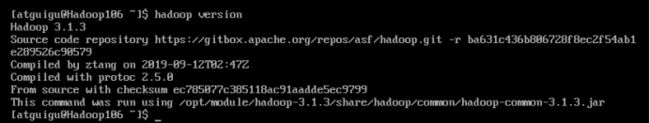

6.5、测试是否安装完成

java -version

hadoop version

三、完全分布式集群

- 编写分发脚本

在本地模式的基础上继续搭集群,在hadoop101的/home/wyq上编写一个集体分发脚本xsync

vim /home/wyq/xsync

#!/bin/bash

#1.判断参数是否为空

if [ $# -le 0 ]

then

echo Not Enough Arguement!

exit;

fi

#遍历所有服务器

for host in Hadoop101 Hadoop101 Hadoop102

do

echo ==================== $host ====================

for file in $@

do

if [ -e $file ]

then

# 获取父目录

pdir=$(cd -P $(dirname $file); pwd)

#获取当前文件的名称

fname=$(basename $file)

ssh $host "mkdir -p $pdir"

rsync -av $pdir/$fname $host:$pdir

else

echo $file does not exists!

fi

done

done

- 修改脚本权限

chmod +x xsync

- 将脚本复制到/bin中,以便于全局使用

sudo cp xsync /bin/

- 分发脚本xsync、环境变量、jdk和hadoop

sudo xsync /bin/xsync

sudo xsync /etv/profile.d/my_env.sh

xsync /opt/module

#在所有机器收到后,让环境变量生效,所有机器执行

source /etc/profile

- 配置免密登录(分发文件时不用输密码)

ssh-keygen -t rsa #三次回车

ssh-copy-id Hadoop101 #给自己发密钥

xsync /home/wyq/.ssh

#(可以配置root免密

#登录root

#ssh-keygen -t rsa #三次回车

#ssh-copy-id Hadoop101 #给自己发密钥

#xsync /.ssh

#)

- 修改集群配置文件

cd $HADOOP_HOME/etc/hadoop

vim core-site.xml

<configuration>

<property>

<name>fs.defaultFSname>

<value>hdfs://hadoop101:9820value>

property>

<property>

<name>hadoop.tmp.dirname>

<value>/opt/module/hadoop-3.1.3/datavalue>

property>

<property>

<name>hadoop.http.staticuser.username>

<value>wyqvalue>

property>

<property>

<name>hadoop.proxyuser.wyq.hostsname>

<value>*value>

property>

<property>

<name>hadoop.proxyuser.wyq.groupsname>

<value>*value>

property>

<property>

<name>hadoop.proxyuser.wyq.groupsname>

<value>*value>

property>

configuration>

vim hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.http-addressname>

<value>Hadoop101:9870value>

property>

<property>

<name>dfs.namenode.secondary.http-addressname>

<value>Hadoop103:9868value>

property>

configuration>

vim yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-servicesname>

<value>mapreduce_shufflevalue>

property>

<property>

<name>yarn.resourcemanager.hostnamename>

<value>Hadoop102value>

property>

<property>

<name>yarn.nodemanager.env-whitelistname>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOMEvalue>

property>

<property>

<name>yarn.scheduler.minimum-allocation-mbname>

<value>512value>

property>

<property>

<name>yarn.scheduler.maximum-allocation-mbname>

<value>4096value>

property>

<property>

<name>yarn.nodemanager.resource.memory-mbname>

<value>4096value>

property>

<property>

<name>yarn.nodemanager.pmem-check-enabledname>

<value>falsevalue>

property>

<property>

<name>yarn.nodemanager.vmem-check-enabledname>

<value>falsevalue>

property>

configuration>

vim mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.namename>

<value>yarnvalue>

property>

configuration>

#为了群起集群

vim workers

Hadoop101

Hadoop102

Hadoop103

在这里插入代码片

- 配置历史服务器

vim mapred-site.xml

<property>

<name>mapreduce.jobhistory.addressname>

<value>hadoop102:10020value>

property>

<property>

<name>mapreduce.jobhistory.webapp.addressname>

<value>hadoop102:19888value>

property>

- 日志聚集

vim yarn-site.xml

<property>

<name>yarn.log-aggregation-enablename>

<value>truevalue>

property>

<property>

<name>yarn.log.server.urlname>

<value>http://hadoop102:19888/jobhistory/logsvalue>

property>

<property>

<name>yarn.log-aggregation.retain-secondsname>

<value>604800value>

property>

- 同步配置文件

xsync $HADOOP_HOME/etc

四、启动集群

- 第一次启动集群需要先在Hadoop101上格式化NameNode

(注意:如果集群在运行过程中报错,需要重新格式化NameNode的话,一定要先停止namenode和datanode进程,并且要删除所有机器的data和logs目录,然后再进行格式化。)

hdfs namenode -format

出现图中红色部分即为初始化成功

- 启动集群

#在Hadoop101上执行

start-dfs.sh

#在Hadoop102上执行

start-yarn.sh

#都启动完后

#执行

jps

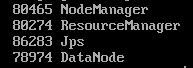

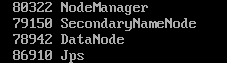

Hadoop101

Hadoop102

Hadoop103

打开浏览器

在地址栏输入

Hadoop101:9870

红色部分显示为三个子节点即为成功

- 关闭集群

#Hadoop101

stop-dfs.sh

#Hadoop102

stop-yarn.sh

至此,一个简单的集群搭建完了