ROS进阶 结合机器视觉操作机器人

《机器人操作系统入门》

中国大学MOOC 《机器人操作系统入门》

https://www.icourse163.org/course/ISCAS-1002580008#/info

https://github.com/DroidAITech/ROS-Academy-for-Beginners

(用gitee克隆 https://gitee.com/nickdlk/ROS-Academy-for-Beginners)

克隆代码后执行这个(Ubuntu 18,ROS版本melodic)再编译

rosdep install --from-paths src --ignore-src --rosdistro=melodic -y

《ROS机器人开发实践》

https://github.com/huchunxu/ros_exploring

(https://gitee.com/nickdlk/ros_exploring)

选择复制文件夹到src 目录

ros_exploring/ robot_perception / ork_tutorials需要 ecto参照安装 https://blog.csdn.net/ckkboy/article/details/99584987

git clone https://gitee.com/nickdlk/ecto

cd ~/catkin_ws && catkin_make

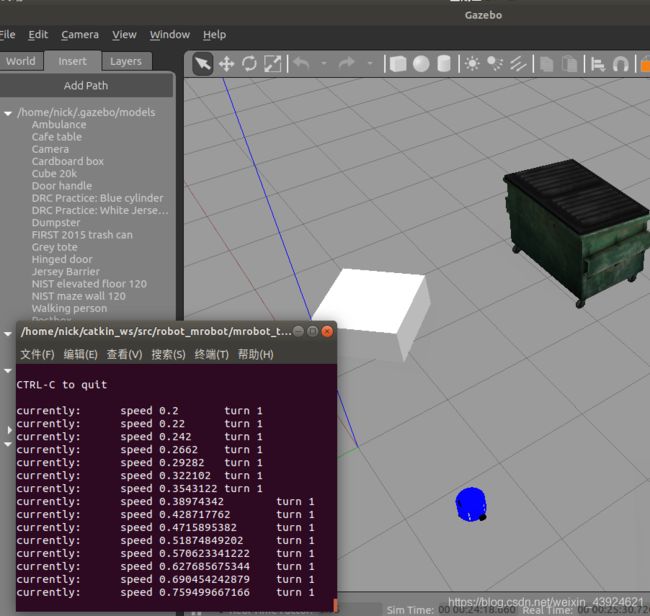

运行机器人仿真环境:

roslaunch mrobot_gazebo view_mrobot_with_camera_gazebo.launch

查看订阅消息

rostopic list

rostopic info /cmd_vel

控制移动

roslaunch mrobot_teleop mrobot_teleop.launch

ERROR: cannot launch node of type [mrobot_teleop/mrobot_teleop.py]: Cannot locate node of type [mrobot_teleop.py] in package [mrobot_teleop]. Make sure file exists in package path and permission is set to executable (chmod +x)

sudo chmod +x /home/nick/catkin_ws/src/robot_mrobot/mrobot_teleop/scripts/mrobot_teleop.py

运行带摄像头的机器人仿真环境:

roslaunch mrobot_gazebo view_mrobot_with_camera_gazebo.launch

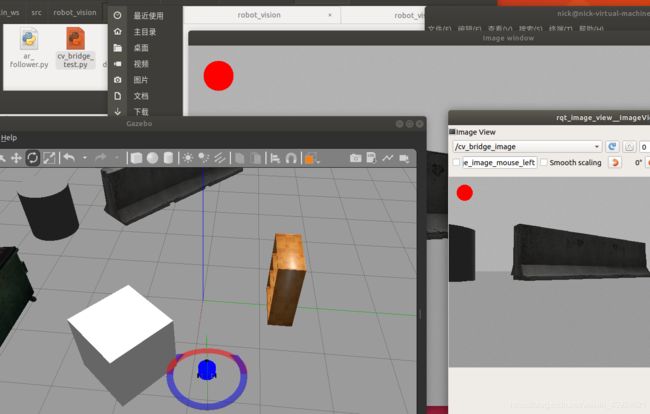

查看摄像头使用rqt工具:

rqt_image_view

usb_cam 连接真实摄像头

安装:sudo apt install ros-melodic-usb-cam

安装成功后测试:roslaunch usb_cam usb_cam-test.launch

修改usb_cam-test.launch文件

使用Qt工具:rqt_image_view

虚拟机内使用摄像头:

参考:https://blog.csdn.net/xiaozi0221/article/details/79135930/

要先确认VMware接入了摄像头

再在Ubuntu里面操作检查是否有摄像头:

lsusb

ls /dev/video*

安装:

sudo apt-get install cheese

cheese

OpenCv

安装:sudo apt-get install ros-melodic-vision-opencv libopencv-dev python-opencv

编译/robot_perception/robot_vision

运行:

roslaunch usb_cam usb_cam-test.launch

rosrun robot_vision cv_bridge_test.py

(运行不了要chmod +x 改权限)

图像上有个画出的红圈

修改成使用gazebo内的摄像头

修改/catkin_ws/src/robot_vision/scripts/cv_bridge_test.py文件:

self.image_sub = rospy.Subscriber("/usb_cam/image_raw", Image, self.callback)

#改成机器人摄像头的节点:

self.image_sub = rospy.Subscriber("/camera/image_raw", Image, self.callback)

识别色块

OPENCV颜色识别物体并用矩形框框起来 https://www.freesion.com/article/97021399645/

HSV 取色工具 https://brushes8.com/77318.html

catkin_ws/src/gaitech_edu/src/ball_tracking/ball_follower.py

RIA -E100 球跟随 代码

#!/usr/bin/env python

import cv2

import numpy as np

import rospy

from geometry_msgs.msg import Twist

from sensor_msgs.msg import Image

from cv_bridge import CvBridge, CvBridgeError

bridge = CvBridge()

#cap = cv2.VideoCapture(0)

#yellowLower =(31, 90, 7)

yellowLower =(20, 25, 25)

yellowUpper = (64, 255, 255)

integrated_angular_speed=0

integrated_angular_factor = 0.007;

linear_speed_factor = 200

angular_speed_factor = -0.005

def image_callback(message):

#time.sleep(0.03)

global integrated_angular_speed,integrated_angular_factor

frame = bridge.imgmsg_to_cv2(message, "bgr8")

cv2.namedWindow("Frame", cv2.WINDOW_NORMAL)

cv2.imshow("Frame", frame)

if cv2.waitKey(1) & 0xFF == ord('q'):

cv2.destroyAllWindows()

hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)

mask = cv2.inRange(hsv, yellowLower, yellowUpper)

mask = cv2.erode(mask, None, iterations=2)

mask = cv2.dilate(mask, None, iterations=2)

cv2.imshow("Mask", mask)

_, contours, hierarchy = cv2.findContours(mask, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

objects = np.zeros([frame.shape[0], frame.shape[1], 3], 'uint8')

# move, draw contours

max_c= None

max_c_area= 0

x=0;

y=0;

for c in contours:

area = cv2.contourArea(c) #取圆圈面积

if area > 30:

if area>max_c_area: #取到最大的圆圈

max_c = c

max_c_area = area

perimeter = cv2.arcLength(c, True) #取圆圈周长

# now we want to draw the centroid, use image moment

# get centers on x and y

((x, y), radius) = cv2.minEnclosingCircle(c)

x = int(x)

y = int (y)

radius = int(radius)

cv2.circle(objects, (x,y), radius, (0,0,255), 10) #画出圆圈

# print("x= ", x)

# print('max_c_area= ',max_c_area)

# print(frame.shape[1])

if (max_c_area>40): #如果面积大于这个值就移动靠近

velocity_message.linear.x= linear_speed_factor/max_c_area

Az = (x-frame.shape[1]/2)* angular_speed_factor ;

integrated_angular_speed +=Az

if abs(Az)>0.1:

velocity_message.angular.z= Az + integrated_angular_factor * integrated_angular_speed

else:

velocity_message.angular.z =0

pub.publish(velocity_message)

else:

velocity_message.linear.x=0

velocity_message.angular.z=0

pub.publish(velocity_message)

cv2.imshow("Countors", objects)

def camera_listener():

rospy.Subscriber("/camera/image_raw", Image, image_callback)

#rospy.Subscriber("/camera/rgb/image_raw", Image, image_callback)

rospy.spin()

if __name__ == '__main__':

velocity_message = Twist()

#pub = rospy.Publisher('/teleop/cmd_vel', Twist, queue_size=10)

pub = rospy.Publisher('/cmd_vel', Twist, queue_size=10)

rospy.init_node('ball_tracker',anonymous=True)

camera_listener()

控制移动

/catkin_ws/src/robot_mrobot/mrobot_teleop/scripts/mrobot_teleop.py

#!/usr/bin/env python

# -*- coding: utf-8 -*-

import rospy

from geometry_msgs.msg import Twist

import sys, select, termios, tty

msg = """

Control mrobot!

---------------------------

Moving around:

u i o

j k l

m , .

q/z : increase/decrease max speeds by 10%

w/x : increase/decrease only linear speed by 10%

e/c : increase/decrease only angular speed by 10%

space key, k : force stop

anything else : stop smoothly

CTRL-C to quit

"""

moveBindings = {

'i':(1,0),

'o':(1,-1),

'j':(0,1),

'l':(0,-1),

'u':(1,1),

',':(-1,0),

'.':(-1,1),

'm':(-1,-1),

}

speedBindings={

'q':(1.1,1.1),

'z':(.9,.9),

'w':(1.1,1),

'x':(.9,1),

'e':(1,1.1),

'c':(1,.9),

}

def getKey():

tty.setraw(sys.stdin.fileno())

rlist, _, _ = select.select([sys.stdin], [], [], 0.1)

if rlist:

key = sys.stdin.read(1)

else:

key = ''

termios.tcsetattr(sys.stdin, termios.TCSADRAIN, settings)

return key

speed = .2

turn = 1

def vels(speed,turn):

return "currently:\tspeed %s\tturn %s " % (speed,turn)

if __name__=="__main__":

settings = termios.tcgetattr(sys.stdin)

rospy.init_node('mrobot_teleop')

pub = rospy.Publisher('/cmd_vel', Twist, queue_size=5)

x = 0

th = 0

status = 0

count = 0

acc = 0.1

target_speed = 0

target_turn = 0

control_speed = 0

control_turn = 0

try:

print msg

print vels(speed,turn)

while(1):

key = getKey()

# 运动控制方向键(1:正方向,-1负方向)

if key in moveBindings.keys():

x = moveBindings[key][0]

th = moveBindings[key][1]

count = 0

# 速度修改键

elif key in speedBindings.keys():

speed = speed * speedBindings[key][0] # 线速度增加0.1倍

turn = turn * speedBindings[key][1] # 角速度增加0.1倍

count = 0

print vels(speed,turn)

if (status == 14):

print msg

status = (status + 1) % 15

# 停止键

elif key == ' ' or key == 'k' :

x = 0

th = 0

control_speed = 0

control_turn = 0

else:

count = count + 1

if count > 4:

x = 0

th = 0

if (key == '\x03'):

break

# 目标速度=速度值*方向值

target_speed = speed * x

target_turn = turn * th

# 速度限位,防止速度增减过快

if target_speed > control_speed:

control_speed = min( target_speed, control_speed + 0.02 )

elif target_speed < control_speed:

control_speed = max( target_speed, control_speed - 0.02 )

else:

control_speed = target_speed

if target_turn > control_turn:

control_turn = min( target_turn, control_turn + 0.1 )

elif target_turn < control_turn:

control_turn = max( target_turn, control_turn - 0.1 )

else:

control_turn = target_turn

# 创建并发布twist消息

twist = Twist()

twist.linear.x = control_speed;

twist.linear.y = 0;

twist.linear.z = 0

twist.angular.x = 0;

twist.angular.y = 0;

twist.angular.z = control_turn

pub.publish(twist)

except:

print e

finally:

twist = Twist()

twist.linear.x = 0; twist.linear.y = 0; twist.linear.z = 0

twist.angular.x = 0; twist.angular.y = 0; twist.angular.z = 0

pub.publish(twist)

termios.tcsetattr(sys.stdin, termios.TCSADRAIN, settings)

RIA-E100的腿跟随代码

/home/nick/catkin_ws/src/gaitech_roslink/src/roslink_tensorflow_bridge_follower_app.py

#!/usr/bin/env python

from SimpleWebSocketServer import SimpleWebSocketServer, WebSocket # pip install git+https://github.com/dpallot/simple-websocket-server.git

import rospy

from std_msgs.msg import String

from sensor_msgs.msg import CompressedImage, LaserScan #, Image, CameraInfo,

import cv2

import numpy as np

import base64

from geometry_msgs.msg import Twist

import threading

import time

# Load an color image in grayscale

clients = []

lidar= []

d=False

def compressedImageCallback(data):

# print("compressedImageCallback")

global clients,d

if d:

d=False

return;

else:

d=True

try:

#cv_image = ROSLinkBridgeARDrone.bridge.imgmsg_to_cv2(data, "bgr8")

np_arr = np.fromstring(data.data, np.uint8)

cv_image = cv2.imdecode(np_arr, cv2.CV_16U)

except CvBridgeError as e:

print(e)

data = u''+base64.encodestring(np_arr)

for client in clients:

# print("message sent")

client.sendMessage(data)

def LidarCallback(msg):

global lidar

lidar = msg.ranges[330:360]+msg.ranges[0:40]

lidar = lidar[::-1]

rospy.init_node('roslink_tensorflow_bridge_node', anonymous=True)

rospy.Subscriber("/camera/rgb/image_raw/compressed", CompressedImage, compressedImageCallback)

rospy.Subscriber("/scan", LaserScan, LidarCallback)

pub = rospy.Publisher('/tensorflow_bridge',String, queue_size=10)

tpub = rospy.Publisher('/teleop/cmd_vel',Twist, queue_size=10)

twist= Twist()

print("roslink tensorflow bridge node started!")

class TwistCommandThread():

"""docstring for command"""

def __init__(self):

t = threading.Thread(target=self.run)

t.setName("TwistCommandThread")

t.start()

def run ( self ):

global tpub,twist,rospy

while not rospy.is_shutdown():

time.sleep(0.0551)

# print ROSLinkBridgeRIA_E100.TwistCommand

tpub.publish (twist)

TwistCommandThread = TwistCommandThread()

websocket_prot = 9091

clients = []

#PID controller

linear_p=0.25

linear_i_sum=0

linear_i_factor=0.02

angular_p= - 0.0145

angular_i_sum = 0

angular_i_factor= - 0.0005

class SimpleWebSocketHub(WebSocket):

def handleMessage(self):

global pub, lidar,twist, angular_p,angular_i_factor,angular_i_sum,linear_p,linear_i_sum,linear_i_factor

print("self.data")

legs = self.data.split('-') #收到的腿的方框的坐标 多个 分割

chosed_point=0

chosed_point_distacne= 2.50

for leg in legs: #找最近的脚

if(len(leg)>10):

x = leg.split(",")

xmin= int(float(x[1]) * 70)

xmax= int(float(x[3]) * 70)

print(xmin,xmax,"xmin,xmax", min(lidar[xmin:xmax]))

atemp=chosed_point

dtemp=chosed_point_distacne

i=xmin

while i<xmax:

if lidar[i]<chosed_point_distacne:

chosed_point_distacne = lidar[i]

chosed_point = i -30

# chosed_point = int((xmin+xmax)/2)

i+=1

#计算权值然后移动

print(chosed_point, chosed_point_distacne)

#距离小于2.5 控制靠近

#twist.linear.x是前进线速度

#twist.angular.z是左右角度 角速度

if (chosed_point_distacne<2.5):

if (chosed_point_distacne>0.65):

linear_i_sum+=chosed_point_distacne

twist.linear.x= chosed_point_distacne * linear_p + linear_i_factor * linear_i_sum

angular_i_sum+=chosed_point

twist.angular.z= chosed_point * angular_p * 0.4 + angular_i_factor * angular_i_sum

else:

print("just angular")

twist.linear.x=0

if chosed_point_distacne>0.35:

angular_i_sum+=chosed_point

twist.angular.z= chosed_point * angular_p + angular_i_factor * angular_i_sum

else:

twist.angular.z=0

else:

#大于2.5的超出范围不移动

print("out of range")

twist.angular.z=0

twist.linear.x=0

linear_i_sum=0

angular_i_sum=0

pub.publish(self.data)

def handleConnected(self):

global clients

print(self.address, 'connected')

clients.append(self)

print("connected clients", len(clients))

def handleError(self, error):

print (error)

def handleClose(self):

clients.remove(self)

print(self.address, 'closed')

print("connected clients", len(clients))

server = SimpleWebSocketServer('', websocket_prot, SimpleWebSocketHub)

server.serveforever()

物体识别

使用ROS集成的物体识别框架——Object Recognition Kitchen(ORK)

安装:

mkdir ork_ws && cd ork_ws

wstool init src https://raw.github.com/wg-perception/object_recognition_core/master/doc/source/ork.rosinstall.kinetic.plus

cd src && wstool update -j8

cd .. && rosdep install --from-paths src -i -y

catkin_make

下载不了文件的复制这段到ork_ws下:

- git: {local-name: ecto, uri: "https://github.com/plasmodic/ecto"}

- git: {local-name: ecto_image_pipeline, uri: "https://github.com/plasmodic/ecto_image_pipeline"}

- git: {local-name: ecto_openni, uri: "https://github.com/plasmodic/ecto_openni"}

- git: {local-name: ecto_opencv, uri: "https://github.com/plasmodic/ecto_opencv"}

- git: {local-name: ecto_pcl, uri: "https://github.com/plasmodic/ecto_pcl"}

- git: {local-name: ecto_ros, uri: "https://github.com/plasmodic/ecto_ros"}

- git: {local-name: opencv_candidate, uri: "https://github.com/wg-perception/opencv_candidate"}

- git: {local-name: ork_core, uri: "https://github.com/wg-perception/object_recognition_core"}

- git: {local-name: ork_capture, uri: "https://github.com/wg-perception/capture"}

- git: {local-name: ork_reconstruction, uri: "https://github.com/wg-perception/reconstruction"}

- git: {local-name: ork_linemod, uri: "https://github.com/wg-perception/linemod"}

- git: {local-name: ork_renderer, uri: "https://github.com/wg-perception/ork_renderer"}

- git: {local-name: ork_tabletop, uri: "https://github.com/wg-perception/tabletop"}

- git: {local-name: ork_tod, uri: "https://github.com/wg-perception/tod"}

- git: {local-name: ork_transparent_objects, uri: "https://github.com/wg-perception/transparent_objects"}

- git: {local-name: ork_ros, uri: "https://github.com/wg-perception/object_recognition_ros"}

- git: {local-name: ork_ros_visualization, uri: "https://github.com/wg-perception/object_recognition_ros_visualization"}

运行:wstool init src ork_ws/ork.rosinstall.kinetic.plus

放弃这种方式了,识别物体有限,需要做模型

机器人识别色块并移动 识别程序与机器人分离

以下代码用RIA-E100人(腿)跟随代码修改:

机器人端只发送摄像头数据和接受识别程序返回的参数,然后控制机器人,识别程序使用OpenCV处理传来的图像并返回移动参数给机器人。

机器人端 roslink_tensorflow_bridge_follower_app.py

python /home/nick/catkin_ws/src/gaitech_roslink/src/roslink_tensorflow_bridge_follower_app.py

#!/usr/bin/env python

from SimpleWebSocketServer import SimpleWebSocketServer, WebSocket # pip install git+https://github.com/dpallot/simple-websocket-server.git

import rospy

from std_msgs.msg import String

from sensor_msgs.msg import CompressedImage, LaserScan #, Image, CameraInfo,

import cv2

import numpy as np

import base64

from geometry_msgs.msg import Twist

import threading

import time

# Load an color image in grayscale

clients = []

lidar= []

d=False

def compressedImageCallback(data):

# print("compressedImageCallback")

global clients,d

if d:

d=False

return;

else:

d=True

try:

#cv_image = ROSLinkBridgeARDrone.bridge.imgmsg_to_cv2(data, "bgr8")

np_arr = np.fromstring(data.data, np.uint8)

cv_image = cv2.imdecode(np_arr, cv2.CV_16U)

except CvBridgeError as e:

print(e)

data = u''+base64.encodestring(np_arr)

for client in clients:

# print("message sent")

client.sendMessage(data)

def LidarCallback(msg):

global lidar

lidar = msg.ranges[330:360]+msg.ranges[0:40]

lidar = lidar[::-1]

rospy.init_node('roslink_tensorflow_bridge_node', anonymous=True)

rospy.Subscriber("/camera/image_raw/compressed", CompressedImage, compressedImageCallback)

rospy.Subscriber("/scan", LaserScan, LidarCallback)

pub = rospy.Publisher('/tensorflow_bridge',String, queue_size=10)

tpub = rospy.Publisher('/cmd_vel',Twist, queue_size=10)

twist= Twist()

print("roslink tensorflow bridge node started!")

class TwistCommandThread():

"""docstring for command"""

def __init__(self):

t = threading.Thread(target=self.run)

t.setName("TwistCommandThread")

t.start()

def run ( self ):

global tpub,twist,rospy

while not rospy.is_shutdown():

time.sleep(0.0551)

# print ROSLinkBridgeRIA_E100.TwistCommand

tpub.publish (twist)

TwistCommandThread = TwistCommandThread()

websocket_prot = 9091

clients = []

#PID controller

linear_p=0.25

linear_i_sum=0

linear_i_factor=0.02

angular_p= - 0.0145

angular_i_sum = 0

angular_i_factor= - 0.0005

class SimpleWebSocketHub(WebSocket):

def handleMessage(self):

global pub, lidar,twist, angular_p,angular_i_factor,angular_i_sum,linear_p,linear_i_sum,linear_i_factor

print("self.data")

data = self.data.split('-')

linear_x = float(data[0])

angular_z = float(data[1])

twist.angular.z = linear_x

twist.linear.x= angular_z

pub.publish(self.data)

def handleConnected(self):

global clients

print(self.address, 'connected')

clients.append(self)

print("connected clients", len(clients))

def handleError(self, error):

print (error)

def handleClose(self):

clients.remove(self)

print(self.address, 'closed')

print("connected clients", len(clients))

server = SimpleWebSocketServer('', websocket_prot, SimpleWebSocketHub)

server.serveforever()

识别程序 follower_websocket_stream.py

# Import packages

import numpy as np

import sys

#sys.path.remove('/opt/ros/kinetic/lib/python2.7/dist-packages')

import cv2

import websocket

from websocket import create_connection , WebSocket # pip install websocket-client

import threading

import time

import base64

# This is needed since the notebook is stored in the object_detection folder.

sys.path.append("..")

# Initialize webcam feed

video = cv2.VideoCapture(0)

ret = video.set(3,1280)

ret = video.set(4,720)

ws_timer = 0

WSconnected=False

ws = None

ws_frame= ""

farme_proccesed = True

def handel_websocket_closing():

print("***************reconnecting to WS server*****************")

t = threading.Thread(target=websocketThread)

t.setName("websocket thread")

t.start()

def on_close(ws):

global WSconnected,ws_timer

WSconnected =False

print("websocket connection closed")

ws_timer+=1

print("try to re connect after",ws_timer)

time.sleep(ws_timer)

handel_websocket_closing()

def on_error(ws, error):

print("websocket error ", error)

def on_open(ws):

global WSconnected,ws_timer

ws_timer=0

print("websocket connection opened")

time.sleep(1) # to make sure no thread is sending now

WSconnected =True

def on_message(ws, message):

global ws_frame, farme_proccesed

ws_frame= message

farme_proccesed = False

def websocketThread():

global WSconnected,ws

if(not WSconnected):

# this for public network

# WS_url = "ws://"+ gcs_server_ip+":9090/websockets/roslink/user/"+str(owner_id) +"/robot/"+key+"/robot"

#WS_url = "ws://192.168.31.70:9091/";

WS_url = "ws://192.168.43.2:9091/";

ws = websocket.WebSocketApp(WS_url,

on_message = on_message,

on_error = on_error,

on_close = on_close)

ws.on_open = on_open

# WSconnected=True;

ws.run_forever()

t = threading.Thread(target=websocketThread)

t.setName("websocket thread")

t.start()

yellowLower = (20, 25, 25)

yellowUpper = (64, 255, 255)

while(True):

if(farme_proccesed):

continue

try:

temp = base64.decodebytes(ws_frame.encode('utf-8'))

nparr = np.frombuffer(temp, np.uint8)

frame = cv2.imdecode(nparr, cv2.IMREAD_COLOR)

# print('here1')

except Exception as e:

raise e

t1= time.time()

frame_expanded = np.expand_dims(frame, axis=0)

farme_proccesed= True

# All the results have been drawn on the frame, so it's time to display it.

cv2.imshow('Object detector', frame)

cv2.namedWindow("Frame", cv2.WINDOW_NORMAL)

cv2.imshow("Frame", frame)

hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)

mask = cv2.inRange(hsv, yellowLower, yellowUpper)

mask = cv2.erode(mask, None, iterations=2)

mask = cv2.dilate(mask, None, iterations=2)

cv2.namedWindow("mask", 0)

cv2.imshow("Mask", mask)

# 边缘检测

contours, hierarchy = cv2.findContours(mask, cv2.RETR_CCOMP, cv2.CHAIN_APPROX_SIMPLE)

objects = np.zeros([frame.shape[0], frame.shape[1], 3], 'uint8')

#找出最大的边缘

max_box_contour = None

max_contour_area = 0

max_box_centen = 0

x = 0

y = 0

for contour in contours:

area = cv2.contourArea(contour)

rect = cv2.minAreaRect(contour)

box_ = cv2.boxPoints(rect)

h = abs(box_[3, 1] - box_[1, 1])

w = abs(box_[3, 0] - box_[1, 0])

box = cv2.boxPoints(rect) # 计算最小面积矩形的坐标

box = np.int0(box) # 将坐标规范化为整数

print(box)

#取x横向坐标 用于计算中心点位置

centen_w = (box_[3, 0] - box_[1, 0])/2

centen = box_[1, 0] + centen_w

# 画出中心点 顺便计算y的坐标

center_x = int(centen)

centen_h = (box_[3, 1] - box_[1, 1]) / 2

center_y = int(box_[1, 1] + centen_h)

cv2.circle(frame, (center_x, center_y), 7, 128, -1) # 绘制中心点

if area > 30: #记录面积超过30的 记录最大值

if area > max_contour_area:

max_box_contour = contour

max_contour_area = area

max_box_centen = centen

perimeter = cv2.arcLength(contour, True)

angle = rect[2]

cv2.drawContours(frame, [box], 0, (255, 0, 0), 3) #绘制矩形 画出边框

# -1是画出所有边缘

draw_img3 = cv2.drawContours(frame.copy(), contours, -1, (0, 0, 255), 3)

cv2.namedWindow("draw_img3", 0)

cv2.namedWindow("img_3", 0)

cv2.imshow("img_3", frame)

cv2.imshow("draw_img3", draw_img3)

print("----------------")

linear_x = 0 #前进线速度

angular_z = 0 #旋转角速度

LINEAR_SPEED_FACTOR = 200 #基准线速度

ANGULAR_SPEED_FACTOR = -0.005 #基准角速度

INTEGRATED_ANGULAR_FACTOR = 0.007

integrated_angular_speed = 0

#返回移动的参数

if(max_contour_area > 40):#最大的面积大于40才移动

linear_x = LINEAR_SPEED_FACTOR / max_contour_area #面积越大 移动速度越慢 =》越远移动越快

Az = (x - frame.shape[1] / 2) * ANGULAR_SPEED_FACTOR #计算角速度 #

integrated_angular_speed += Az

if abs(Az) > 0.1:

angular_z = Az + INTEGRATED_ANGULAR_FACTOR * integrated_angular_speed

else:

angular_z = 0

else:

linear_x= 0

print(linear_x,angular_z)

return_str = str(linear_x)+"-"+str(angular_z)

if WSconnected:

ws.send(return_str)

# Press 'q' to quit

t2 = time.time()

print("fps")

#print(1/(t2-t1))

if cv2.waitKey(1) == ord('q'):

break

# Clean up

video.release()

cv2.destroyAllWindows()

程序实现机器人识别色块后跟随

物体的SDF文件

<sdf version='1.6'>

<model name='unit_box'>

<link name='link'>

<inertial>

<mass>1mass>

<inertia>

<ixx>0.166667ixx>

<ixy>0ixy>

<ixz>0ixz>

<iyy>0.166667iyy>

<iyz>0iyz>

<izz>0.166667izz>

inertia>

inertial>

<self_collide>0self_collide>

<enable_wind>0enable_wind>

<kinematic>0kinematic>

<pose frame=''>0 0 0 0 -0 0pose>

<visual name='visual'>

<geometry>

<box>

<size>0.265661 0.3166 0.280931size>

box>

geometry>

<material>

<script>

<name>Gazebo/Green</name>

<uri>file://media/materials/scripts/gazebo.material</uri>

script>

material>

<pose frame=''>0 0 0 0 -0 0pose>

<transparency>0transparency>

<cast_shadows>1cast_shadows>

visual>

<collision name='collision'>

<laser_retro>0laser_retro>

<max_contacts>10max_contacts>

<pose frame=''>0 0 0 0 -0 0pose>

<geometry>

<box>

<size>0.265661 0.3166 0.280931size>

box>

geometry>

<surface>

<friction>

<ode>

<mu>1mu>

<mu2>1mu2>

<fdir1>0 0 0fdir1>

<slip1>0slip1>

<slip2>0slip2>

ode>

<torsional>

<coefficient>1coefficient>

<patch_radius>0patch_radius>

<surface_radius>0surface_radius>

<use_patch_radius>1use_patch_radius>

<ode>

<slip>0slip>

ode>

torsional>

friction>

<bounce>

<restitution_coefficient>0restitution_coefficient>

<threshold>1e+06threshold>

bounce>

<contact>

<collide_without_contact>0collide_without_contact>

<collide_without_contact_bitmask>1collide_without_contact_bitmask>

<collide_bitmask>1collide_bitmask>

<ode>

<soft_cfm>0soft_cfm>

<soft_erp>0.2soft_erp>

<kp>1e+13kp>

<kd>1kd>

<max_vel>0.01max_vel>

<min_depth>0min_depth>

ode>

<bullet>

<split_impulse>1split_impulse>

<split_impulse_penetration_threshold>-0.01split_impulse_penetration_threshold>

<soft_cfm>0soft_cfm>

<soft_erp>0.2soft_erp>

<kp>1e+13kp>

<kd>1kd>

bullet>

contact>

surface>

collision>

link>

<static>0static>

<allow_auto_disable>1allow_auto_disable>

model>

sdf>

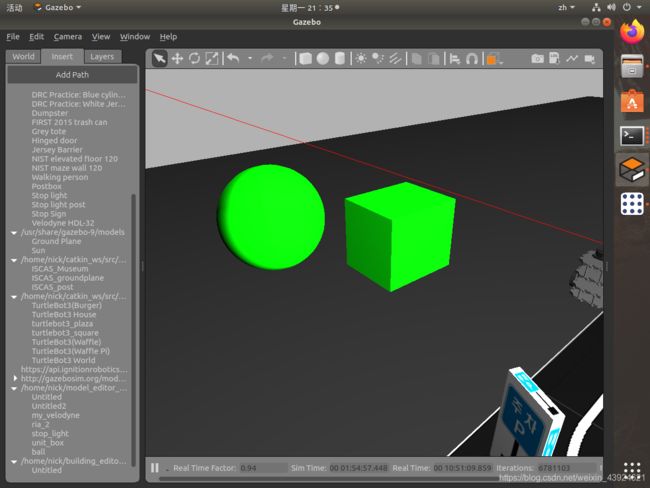

用默认模型修改的颜色 Gazebo/Green:

<script>

<name>Gazebo/Green</name>

<uri>file://media/materials/scripts/gazebo.material</uri>

script>

结果

识别的颜色用HSV描述,用工具取色即可,要取摄像头拍到的颜色才行,不能在Gazebo里面直接取,会有色差。

gazebo插件

官方教程:

http://gazebosim.org/tutorials?cat=write_plugin

翻译的博客:

https://gaoyichao.com/Xiaotu/?book=ros&title=Gazebo%E7%9A%84%E4%BB%BF%E7%9C%9F%E6%8F%92%E4%BB%B6

demo hello_world

首先参照这个:

http://gazebosim.org/tutorials?tut=plugins_hello_world&cat=write_plugin

安装包:sudo apt-get install libgazebo6-dev

hello_world.cc

mkdir ~/gazebo_plugin_tutorial

cd ~/gazebo_plugin_tutorial

gedit hello_world.cc

复制以下代码进去:(代码都在github)

#include

namespace gazebo

{

class WorldPluginTutorial : public WorldPlugin

{

public: WorldPluginTutorial() : WorldPlugin()

{

printf("Hello World!\n");

}

public: void Load(physics::WorldPtr _world, sdf::ElementPtr _sdf)

{

}

};

GZ_REGISTER_WORLD_PLUGIN(WorldPluginTutorial)

}

(原教程有解析)

CMakeLists.txt

编写~/gazebo_plugin_tutorial/CMakeLists.txt :

gedit ~/gazebo_plugin_tutorial/CMakeLists.txt

cmake_minimum_required(VERSION 2.8 FATAL_ERROR)

find_package(gazebo REQUIRED)

include_directories(${GAZEBO_INCLUDE_DIRS})

link_directories(${GAZEBO_LIBRARY_DIRS})

list(APPEND CMAKE_CXX_FLAGS "${GAZEBO_CXX_FLAGS}")

add_library(hello_world SHARED hello_world.cc)

target_link_libraries(hello_world ${GAZEBO_LIBRARIES})

编译

mkdir ~/gazebo_plugin_tutorial/build

cd ~/gazebo_plugin_tutorial/build

cmake ../

make

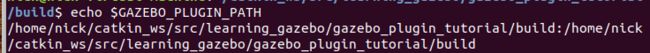

export GAZEBO_PLUGIN_PATH=${GAZEBO_PLUGIN_PATH}:~/gazebo_plugin_tutorial/build

或者:

cd build

export GAZEBO_PLUGIN_PATH=${GAZEBO_PLUGIN_PATH}:`pwd`

其中`pwd`的作用是获取当前路径

可以用echo $GAZEBO_PLUGIN_PATH 查看环境是否正确

hello.world

创建地图文件 gedit ~/gazebo_plugin_tutorial/hello.world

<sdf version="1.4">

<world name="default">

<plugin name="hello_world" filename="libhello_world.so"/>

world>

sdf>

启动:

gzserver ~/gazebo_plugin_tutorial/hello.world --verbose

成功显示:

直接在控制台有输出文本

运行 gzclient 可以打开gazebo窗口

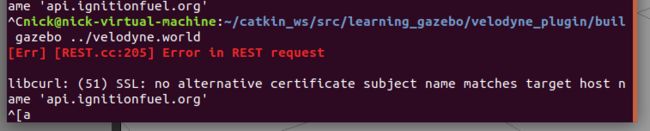

可能的错误:

gazebo 报错 [Err] [REST.cc:205] Error in REST request 解决

https://blog.csdn.net/u012254599/article/details/104327670/

找不到路径的错误:

确定环境变量里有这个路径,或者修改world文件里插件的路径。

plugins 插件的类型

Model Plugins 模型插件

Plugins allow complete access to the physical properties of models and their underlying elements (links, joints, collision objects).

插件允许完全访问模型的物理属性及其底层元素(链接、关节、碰撞对象)。

World Plugins 世界插件

It can be useful to control what models exist in a running simulation, and when they should be inserted.

世界插件可以控制控制正在运行的模拟中存在什么样的模型以及什么时候应该插入模型。

Programmatic World Control 程序化世界控制

没有介绍 盲猜是控制世界属性的 例程写了控制世界的重力 继承的仍是 public WorldPlugin 与World Plugins相同

System Plugin 系统插件

This tutorial will create a source file that is a system plugin for gzclient designed to save images into the directory /tmp/gazebo_frames.

本教程将创建一个源文件,它是 gzclient 的系统插件,用于将图像保存到/tmp/gazebo _ frames 目录中。

具体的API:

http://gazebosim.org/api.html

例如:

// Create a publisher on the ~/factory topic

transport::PublisherPtr factoryPub =

node->Advertise<msgs::Factory>("~/factory");

在:

http://osrf-distributions.s3.amazonaws.com/gazebo/api/11.0.0/classgazebo_1_1transport_1_1Publisher.html

红绿灯自动换色

模型参照这个:

增加这条属性就可以做到亮灯的效果

https://stackoverflow.com/questions/60729471/is-it-possible-to-auto-control-the-traffic-light-model-on-gazebo-simulation

就是用gazebo 的stop_light 写的

但是插件用C++写的,暂时放弃编写,在TurtleBot3里面找到了使用Python编写插件的例子。

TurtleBot3

https://www.ncnynl.com/archives/201702/1391.html

cd ~/catkin_ws/src/

git clone https://github.com/ROBOTIS-GIT/turtlebot3_msgs.git

git clone https://github.com/ROBOTIS-GIT/turtlebot3.git

cd ~/catkin_ws && catkin_make

https://gitee.com/nickdlk/turtlebot3.git

仿真环境

https://www.ncnynl.com/archives/201702/1398.html

安装TurtleBot3 Simulation

cd ~/catkin_ws/src/

git clone https://github.com/ROBOTIS-GIT/turtlebot3_simulations.git

cd ~/catkin_ws && catkin_make

替换仓库 https://gitee.com/nickdlk/turtlebot3_simulations.git

2

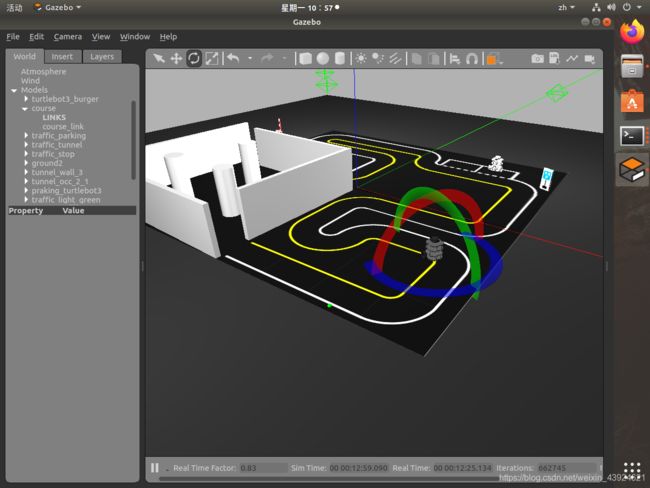

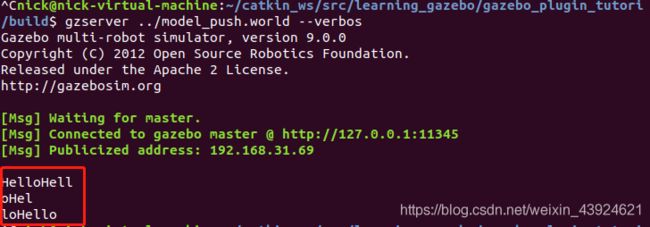

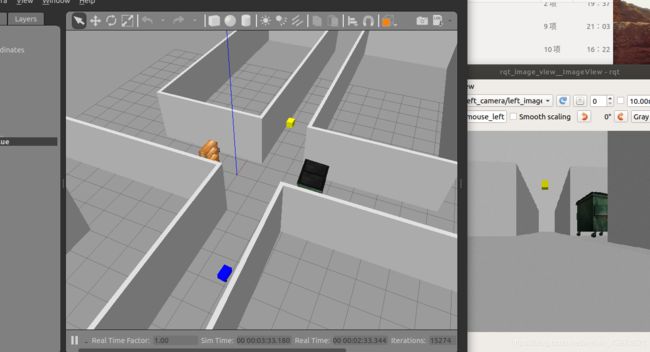

Turtlebot3与仿真-自动驾驶 AutoRace

教程:

https://www.ncnynl.com/archives/201707/1793.html

github:

https://github.com/ROBOTIS-GIT/turtlebot3_autorace

安装:

cd ~/catkin_ws/src/

git clone https://github.com/ROBOTIS-GIT/turtlebot3_autorace.git

cd ~/catkin_ws && catkin_make

运行:

roslaunch turtlebot3_gazebo turtlebot3_autorace.launch

这个会启动gazebo,世界文件为:$(find turtlebot3_gazebo)/worlds/turtlebot3_autorace.world 是固定的在/home/nick/catkin_ws/src/turtlebot3_simulations/turtlebot3_gazebo/worlds/turtlebot3_autorace.world

运行:

roslaunch turtlebot3_gazebo turtlebot3_autorace_mission.launch

这个launch的位置在/home/nick/catkin_ws/src/turtlebot3_simulations/turtlebot3_gazebo/launch,不在autorace包下。

在Gazebo中启动Traffic Light,Parking和Toll Gate任务节点,当tb3经过任务区域时,它们就会自动运行。

这个是控制红绿灯的文件

<node pkg="turtlebot3_autorace_core" type="core_node_mission" name="core_node_mission" output="screen" />

新终端,进行相机标定

export GAZEBO_MODE=true

export AUTO_IN_CALIB=action

roslaunch turtlebot3_autorace_camera turtlebot3_autorace_intrinsic_camera_calibration.launch

(?这个用来干嘛?)

会启动一个cameracalibrator.py(没有在src找到)

新终端,启动turtlebot3_autorace_core

export AUTO_EX_CALIB=action

export AUTO_DT_CALIB=action

export TURTLEBOT3_MODEL=burger

roslaunch turtlebot3_autorace_core turtlebot3_autorace_core.launch

这个会启动core_node_controller、core_mode_decider

两个都在/home/nick/catkin_ws/src/turtlebot3_autorace/turtlebot3_autorace_core/nodes下

其中controller会启动其他launch文件。

https://github.com/ROBOTIS-GIT/turtlebot3_autorace/blob/864131bbd59c037577a7535cc9b3a7924ff26d29/turtlebot3_autorace_core/nodes/core_node_controller#L430

self.launch_camera = roslaunch.parent.ROSLaunchParent(self.uuid, [self.ros_package_path + "turtlebot3_autorace_camera/launch/turtlebot3_autorace_extrinsic_camera_calibration.launch"])

其中turtlebot3_autorace_detect_sign.launch是用来识别红绿灯的。

新终端,启动所有自动驾驶节点

rostopic pub -1 /core/decided_mode std_msgs/UInt8 "data: 2"

https://v.youku.com/v_show/id_XNTAzMjg1NTQyOA==.html?spm=a2hbt.13141534.0.13141534

自动驾驶 2020库

https://emanual.robotis.com/docs/en/platform/turtlebot3/autonomous_driving/#autonomous-driving

克隆库:

cd ~/catkin_ws/src/

git clone https://github.com/ROBOTIS-GIT/turtlebot3_autorace_2020.git

cd ~/catkin_ws && catkin_make

安装依赖包:

sudo apt-get install ros-melodic-image-transport ros-melodic-cv-bridge ros-melodic-vision-opencv python-opencv libopencv-dev ros-melodic-image-proc

地图里面的一个红绿灯状态改变的例子

首先源自:

gazebo traffic lights cycle

https://github.com/ROBOTIS-GIT/turtlebot3_autorace/issues/23

提问者想循环交通灯的亮灯顺序

找到涉及的文件:

turtlebot3_autorace/turtlebot3_autorace_core/nodes/core_node_mission

https://github.com/ROBOTIS-GIT/turtlebot3_autorace/blob/864131bbd59c037577a7535cc9b3a7924ff26d29/turtlebot3_autorace_core/nodes/core_node_mission#L106

代码解析

等待服务传来消息

rospy.wait_for_service('gazebo/spawn_sdf_model')

找到这个消息的发布者 这个是gazebo的API,是用来在gazebo里创建物体的。

API:

http://wiki.ros.org/simulator_gazebo/Tutorials/Gazebo_ROS_APIhttps://blog.csdn.net/wangbinyantai/article/details/104246888

在 loadMissionModel 里读入了模型,在订阅到spawn_model_prox = rospy.ServiceProxy('gazebo/spawn_sdf_model', SpawnModel)后

设置模型:

spawn_model_prox('traffic_light_red', self.red_light_model, "robotos_name_space", self.initial_pose, "world")

后面的代码是将前一个灯的模型删掉

del_model_prox = rospy.ServiceProxy('gazebo/delete_model', DeleteModel)

del_model_prox('traffic_light_yellow')

控制红绿的是通过traffic_state变量控制的

traffic_state 是通过订阅odom节点,计算位置来改变的。odom是由Odmetry包发布的。

通过rqt工具可以看到odom下的pose.position.x和y

Odometry - 里程计 是用来记录机器人位姿的

http://wiki.ros.org/cn/navigation/Tutorials/RobotSetup/Odom

订阅使用:

https://blog.csdn.net/visual_studio1/article/details/107862465

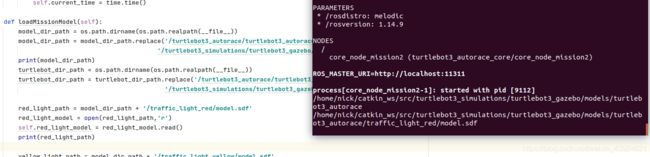

魔改红绿灯 改成红黄绿循环

复制一份core_node_mission为core_node_mission2

只需要修改:

def controlMission(self):

#loop 循环显示交通灯

while not rospy.is_shutdown():

if self.traffic_state == 1: # turn on yellow light

if abs(self.current_time - time.time()) > 5: # turn on yellow light after 5s.

rospy.wait_for_service('gazebo/spawn_sdf_model')

spawn_model_prox = rospy.ServiceProxy('gazebo/spawn_sdf_model', SpawnModel)

spawn_model_prox('traffic_light_yellow', self.yellow_light_model, "robotos_name_space", self.initial_pose, "world")

del_model_prox = rospy.ServiceProxy('gazebo/delete_model', DeleteModel)

del_model_prox('traffic_light_green')

self.traffic_state = 2

self.current_time = time.time()

elif self.traffic_state == 2:

if abs(self.current_time - time.time()) > 2: # turn on red light after 3s.

rospy.wait_for_service('gazebo/spawn_sdf_model')

spawn_model_prox = rospy.ServiceProxy('gazebo/spawn_sdf_model', SpawnModel)

spawn_model_prox('traffic_light_red', self.red_light_model, "robotos_name_space",

self.initial_pose, "world")

del_model_prox = rospy.ServiceProxy('gazebo/delete_model', DeleteModel)

del_model_prox('traffic_light_yellow')

self.traffic_state = 3

self.current_time = time.time()

elif self.traffic_state == 3:

if abs(self.current_time - time.time()) > 5: # turn on green light after 5s.

rospy.wait_for_service('gazebo/spawn_sdf_model')

spawn_model_prox = rospy.ServiceProxy('gazebo/spawn_sdf_model', SpawnModel)

spawn_model_prox('traffic_light_green', self.green_light_model, "robotos_name_space",

self.initial_pose, "world")

del_model_prox = rospy.ServiceProxy('gazebo/delete_model', DeleteModel)

del_model_prox('traffic_light_red')

self.traffic_state = 1

self.current_time = time.time()

self.rate.sleep()

和

def __init__(self):

self.current_time = time.time()

复制一份/home/nick/catkin_ws/src/turtlebot3_simulations/turtlebot3_gazebo/launch/turtlebot3_autorace_mission.launch为/home/nick/catkin_ws/src/turtlebot3_simulations/turtlebot3_gazebo/launch/turtlebot3_autorace_mission2.launch

修改内容为:

<launch>

<node pkg="turtlebot3_autorace_core" type="core_node_mission" name="core_node_mission" output="screen" />

launch>

编译:

cd ~/catkin_ws && catkin_make

运行:

roslaunch turtlebot3_gazebo turtlebot3_autorace.launch

roslaunch turtlebot3_gazebo turtlebot3_autorace_mission2.launch

就可以在地图上看到红灯亮5s,绿灯亮5s,黄灯亮2s再回到红灯。

替换成自己的模型

先找到模型的出处:

model_dir_path是替换掉了运行路径,保留了前面的用户路径。

模型创建的位置是由sdf文件的pose属性决定的

创建模型:

model.sdf

<sdf version='1.6'>

<model name='box_green'>

<link name='link'>

<inertial>

<mass>1mass>

<inertia>

<ixx>0.166667ixx>

<ixy>0ixy>

<ixz>0ixz>

<iyy>0.166667iyy>

<iyz>0iyz>

<izz>0.166667izz>

inertia>

inertial>

<self_collide>0self_collide>

<enable_wind>0enable_wind>

<kinematic>0kinematic>

<pose frame=''>0 0 0 0 -0 -1.5708pose>

<visual name='visual'>

<geometry>

<box>

<size>0.265661 0.3166 0.280931size>

box>

geometry>

<material>

<script>

<name>Gazebo/Green</name>

<uri>file://media/materials/scripts/gazebo.material</uri>

script>

material>

<pose frame=''>1.7 2 0.140465 0 0 0pose>

<transparency>0transparency>

<cast_shadows>1cast_shadows>

visual>

link>

<static>1static>

<allow_auto_disable>1allow_auto_disable>

model>

sdf>

static设置1为静态,不用collision属性

model.config

<model>

<name>box_greenname>

<version>1.0version>

<sdf version="1.6">model.sdfsdf>

<author>

<name>name>

<email>email>

author>

<description>description>

model>

复制两个,将Green改为Red和Yellow

修改python代码

#red_light_path = model_dir_path + '/traffic_light_red/model.sdf'

red_light_path = model_dir_path + '/box_red/model.sdf'

red_light_model = open(red_light_path,'r')

self.red_light_model = red_light_model.read()

print(red_light_path)

#yellow_light_path = model_dir_path + '/traffic_light_yellow/model.sdf'

yellow_light_path = model_dir_path + '/box_yellow/model.sdf'

yellow_light_model = open(yellow_light_path, 'r')

self.yellow_light_model = yellow_light_model.read()

#green_light_path = model_dir_path + '/traffic_light_green/model.sdf'

green_light_path = model_dir_path + '/box_green/model.sdf'

green_light_model = open(green_light_path, 'r')

self.green_light_model = green_light_model.read()

更换地图和模型

替换/home/nick/catkin_ws/src/mrobot_description/worlds下的playground.world

roslaunch mrobot_description view_mrobot_gazebo.launch

键盘控制:

roslaunch mrobot_teleop mrobot_teleop.launch

修改机器人识别红绿灯

识别端修改成:

import numpy as np

import sys

#sys.path.remove('/opt/ros/kinetic/lib/python2.7/dist-packages')

import cv2

import websocket

from websocket import create_connection , WebSocket # pip install websocket-client

import threading

import time

import base64

# This is needed since the notebook is stored in the object_detection folder.

sys.path.append("..")

# Initialize webcam feed

video = cv2.VideoCapture(0)

ret = video.set(3,1280)

ret = video.set(4,720)

ws_timer = 0

WSconnected=False

ws = None

ws_frame= ""

farme_proccesed = True

def handel_websocket_closing():

print("***************reconnecting to WS server*****************")

t = threading.Thread(target=websocketThread)

t.setName("websocket thread")

t.start()

def on_close(ws):

global WSconnected,ws_timer

WSconnected =False

print("websocket connection closed")

ws_timer+=1

print("try to re connect after",ws_timer)

time.sleep(ws_timer)

handel_websocket_closing()

def on_error(ws, error):

print("websocket error ", error)

def on_open(ws):

global WSconnected,ws_timer

ws_timer=0

print("websocket connection opened")

time.sleep(1) # to make sure no thread is sending now

WSconnected =True

def on_message(ws, message):

global ws_frame, farme_proccesed

ws_frame= message

farme_proccesed = False

def websocketThread():

global WSconnected,ws

if(not WSconnected):

# this for public network

# WS_url = "ws://"+ gcs_server_ip+":9090/websockets/roslink/user/"+str(owner_id) +"/robot/"+key+"/robot"

#WS_url = "ws://192.168.31.70:9091/";

WS_url = "ws://192.168.31.69:9091/";

ws = websocket.WebSocketApp(WS_url,

on_message = on_message,

on_error = on_error,

on_close = on_close)

ws.on_open = on_open

# WSconnected=True;

ws.run_forever()

t = threading.Thread(target=websocketThread)

t.setName("websocket thread")

t.start()

yellowLower = (20, 25, 25)

yellowUpper = (64, 255, 255)

greenLower =(59 , 242 , 99)

greenUpper = (64, 255, 255)

redLower =(59 , 242 , 99)

redUpper = (64, 255, 255)

while(True):

# Acquire frame and expand frame dimensions to have shape: [1, None, None, 3]

# i.e. a single-column array, where each item in the column has the pixel RGB value

# ret, frame = video.read()

if(farme_proccesed):

continue

try:

temp = base64.decodebytes(ws_frame.encode('utf-8'))

nparr = np.frombuffer(temp, np.uint8)

frame = cv2.imdecode(nparr, cv2.IMREAD_COLOR)

# print('here1')

except Exception as e:

raise e

t1= time.time()

frame_expanded = np.expand_dims(frame, axis=0)

farme_proccesed= True

# All the results have been drawn on the frame, so it's time to display it.

cv2.imshow('Object detector', frame)

# cv2.namedWindow("Frame", cv2.WINDOW_NORMAL)

# cv2.imshow("Frame", frame)

hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)

mask = cv2.inRange(hsv, yellowLower, yellowUpper)

mask = cv2.erode(mask, None, iterations=2)

mask = cv2.dilate(mask, None, iterations=2)

cv2.namedWindow("mask", 0)

cv2.imshow("Mask", mask)

# 边缘检测

contours, hierarchy = cv2.findContours(mask, cv2.RETR_CCOMP, cv2.CHAIN_APPROX_SIMPLE)

objects = np.zeros([frame.shape[0], frame.shape[1], 3], 'uint8')

#找出最大的边缘

max_box_contour = None

max_contour_area = 0

max_box_centen = 0

x = 0

y = 0

for contour in contours:

area = cv2.contourArea(contour)

rect = cv2.minAreaRect(contour)

box_ = cv2.boxPoints(rect)

h = abs(box_[3, 1] - box_[1, 1])

w = abs(box_[3, 0] - box_[1, 0])

box = cv2.boxPoints(rect) # 计算最小面积矩形的坐标

box = np.int0(box) # 将坐标规范化为整数

print(box)

#取x横向坐标 用于计算中心点位置

centen_w = (box_[3, 0] - box_[1, 0])/2

centen = box_[1, 0] + centen_w

# 画出中心点 顺便计算y的坐标

center_x = int(centen)

centen_h = (box_[3, 1] - box_[1, 1]) / 2

center_y = int(box_[1, 1] + centen_h)

cv2.circle(frame, (center_x, center_y), 7, 128, -1) # 绘制中心点

if area > 30: #记录面积超过30的 记录最大值

if area > max_contour_area:

max_box_contour = contour

max_contour_area = area

max_box_centen = centen

perimeter = cv2.arcLength(contour, True)

angle = rect[2]

# if angle > 20:

# continue

cv2.drawContours(frame, [box], 0, (255, 0, 0), 3) #绘制矩形 画出边框

# -1是画出所有边缘

draw_img3 = cv2.drawContours(frame.copy(), contours, -1, (0, 0, 255), 3)

cv2.namedWindow("draw_img3", 0)

cv2.namedWindow("img_3", 0)

cv2.imshow("img_3", frame)

cv2.imshow("draw_img3", draw_img3)

print("----------------")

linear_x = 0 #前进线速度

angular_z = 0 #旋转角速度

LINEAR_SPEED_FACTOR = 2000 #基准线速度

ANGULAR_SPEED_FACTOR = -0.005 #基准角速度

INTEGRATED_ANGULAR_FACTOR = 0.007

integrated_angular_speed = 0

#返回移动的参数

if(max_contour_area > 40):#最大的面积大于40才移动

linear_x = LINEAR_SPEED_FACTOR / max_contour_area #面积越大 移动速度越慢 =》越远移动越快

Az = (x - frame.shape[1] / 2) * ANGULAR_SPEED_FACTOR #计算角速度 #

integrated_angular_speed += Az

if abs(Az) > 0.1:

angular_z = Az + INTEGRATED_ANGULAR_FACTOR * integrated_angular_speed

else:

angular_z = 0

else:

linear_x= 0

print(linear_x,angular_z)

#返回linear_x-angular_z linear_x是前进的

return_str = str(linear_x)+"|"+str(0)

if WSconnected:

ws.send(return_str)

# Press 'q' to quit

t2 = time.time()

print("fps")

#print(1/(t2-t1))

if cv2.waitKey(1) == ord('q'):

break

# Clean up

video.release()

cv2.destroyAllWindows()

机器人端修改增加了提示IP,修改成:

#!/usr/bin/env python

# encoding: utf-8

from SimpleWebSocketServer import SimpleWebSocketServer, WebSocket # pip install git+https://github.com/dpallot/simple-websocket-server.git

import rospy

from std_msgs.msg import String

from sensor_msgs.msg import CompressedImage, LaserScan #, Image, CameraInfo,

import cv2

import numpy as np

import base64

from geometry_msgs.msg import Twist

import threading

import time

import socket, fcntl, struct

def get_ip2(ifname):

s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

return socket.inet_ntoa(fcntl.ioctl(s.fileno(), 0x8915, struct.pack('256s', ifname[:15]))[20:24])

print "本机IP:"+get_ip2('ens33')

# Load an color image in grayscale

clients = []

lidar= []

d=False

def compressedImageCallback(data):

# print("compressedImageCallback")

global clients,d

if d:

d=False

return;

else:

d=True

try:

#cv_image = ROSLinkBridgeARDrone.bridge.imgmsg_to_cv2(data, "bgr8")

np_arr = np.fromstring(data.data, np.uint8)

cv_image = cv2.imdecode(np_arr, cv2.CV_16U)

except CvBridgeError as e:

print(e)

data = u''+base64.encodestring(np_arr)

for client in clients:

# print("message sent")

client.sendMessage(data)

def LidarCallback(msg):

global lidar

lidar = msg.ranges[330:360]+msg.ranges[0:40]

lidar = lidar[::-1]

rospy.init_node('roslink_tensorflow_bridge_node', anonymous=True)

rospy.Subscriber("/camera/left_camera/left_image_raw/compressed", CompressedImage, compressedImageCallback)

rospy.Subscriber("/scan", LaserScan, LidarCallback)

pub = rospy.Publisher('/tensorflow_bridge',String, queue_size=10)

tpub = rospy.Publisher('/cmd_vel',Twist, queue_size=10)

twist= Twist()

print("roslink tensorflow bridge node started!")

twist.linear.y = 0

twist.linear.z = 0

twist.angular.x = 0

twist.angular.y = 0

class TwistCommandThread():

"""docstring for command"""

def __init__(self):

t = threading.Thread(target=self.run)

t.setName("TwistCommandThread")

t.start()

def run ( self ):

global tpub,twist,rospy

while not rospy.is_shutdown():

time.sleep(0.0551)

# print ROSLinkBridgeRIA_E100.TwistCommand

tpub.publish (twist)

TwistCommandThread = TwistCommandThread()

websocket_prot = 9091

clients = []

#PID controller

linear_p=0.25

linear_i_sum=0

linear_i_factor=0.02

angular_p= - 0.0145

angular_i_sum = 0

angular_i_factor= - 0.0005

class SimpleWebSocketHub(WebSocket):

def handleMessage(self):

global pub, lidar,twist, angular_p,angular_i_factor,angular_i_sum,linear_p,linear_i_sum,linear_i_factor

print("received data")

data = self.data.split('|')

linear_x = float(data[0])

angular_z = float(data[1])

print(self.data)

twist.angular.z = angular_z

twist.linear.x= linear_x

pub.publish(self.data)

def handleConnected(self):

global clients

print(self.address, 'connected')

clients.append(self)

print("connected clients", len(clients))

def handleError(self, error):

print (error)

def handleClose(self):

clients.remove(self)

print(self.address, 'closed')

print("connected clients", len(clients))

server = SimpleWebSocketServer('', websocket_prot, SimpleWebSocketHub)

server.serveforever()

结果 小车奔着红绿灯去了。。。。