[Deep Learning] YOLOv3 Explained: Notes and PyTorch Code

- Prediction
  - Network structure
  - Backbone: Darknet-53
  - Output
  - Decoding the predictions
- Training
  - Parameters needed to compute the loss
  - What pred is
  - What target is
  - How the loss is computed

Prediction

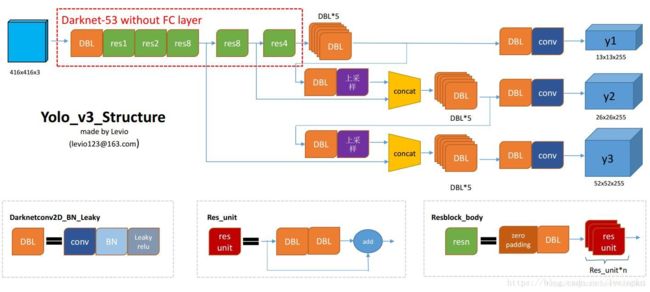

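Network structure

DBL: shown in the lower-left corner of the figure and called Darknetconv2d_BN_leaky in code, this is the basic building block of YOLOv3: convolution + BN + Leaky ReLU. In v3, BN and Leaky ReLU always follow the convolution (the final prediction convolution of each branch is the one exception), so the three together form the smallest unit.

resn: n is a number giving how many res_units the res_block contains. This is the large component of YOLOv3. YOLOv3 borrows the residual structure of ResNet, which is easier to optimize and lets the network go deeper to improve accuracy; the skip connections alleviate the vanishing-gradient problem that comes with added depth. Each res_unit is itself built from DBL blocks.

concat: tensor concatenation. An intermediate Darknet layer is concatenated with the upsampled output of a later layer. Concatenation differs from the add operation of a residual layer: concat grows the channel dimension of the tensor, whereas add sums element-wise and leaves the tensor shape unchanged.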

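Backbone: Darknet-53

YOLOv3 uses the first 52 layers of Darknet-53 (everything except the fully connected layer), so the YOLOv3 structure contains neither pooling layers nor fully connected layers. During the forward pass the tensor size is changed through the convolution stride: a convolution with stride=(2,2) halves each side of the feature map (the area shrinks to 1/4).

YOLOv3 downsamples five times, so the final feature map is 1/32 of the input size: a 416x416 input gives a 13x13 output. This is why the input side length is normally required to be a multiple of 32.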
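The size arithmetic above can be checked with a short sketch. It applies the standard convolution output-size formula, floor((n + 2p - k) / s) + 1, to five downsampling convolutions with kernel size 3, stride 2 and padding 1 (the settings of the `ds_conv` layers in the backbone code). The helper names `conv_out_size` and `feature_map_sizes` are made up for this illustration.

```python
def conv_out_size(n, kernel_size=3, stride=2, padding=1):
    """Output side length of a convolution (standard formula)."""
    return (n + 2 * padding - kernel_size) // stride + 1


def feature_map_sizes(input_size, num_downsamples=5):
    """Side length after each stride-2 downsampling convolution."""
    sizes = []
    n = input_size
    for _ in range(num_downsamples):
        n = conv_out_size(n)
        sizes.append(n)
    return sizes


print(feature_map_sizes(416))  # [208, 104, 52, 26, 13]
```

The last three entries (52, 26, 13) are the side lengths of the three feature maps that the detection branches work on.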

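To improve accuracy on small objects, YOLOv3 adopts an upsample-and-merge scheme similar to FPN (feature pyramid networks): it fuses three scales (13x13, 26x26, 52x52) and runs detection on the feature map of each scale.

The implementation: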

import torch
import torch.nn as nn
import math
from collections import OrderedDict


# Basic Darknet block: the Res_unit in the figure
class BasicBlock(nn.Module):
    def __init__(self, inplanes, planes):
        super(BasicBlock, self).__init__()
        # 1x1 convolution that reduces the channel count
        self.conv1 = nn.Conv2d(inplanes, planes[0], kernel_size=1,
                               stride=1, padding=0, bias=False)
        self.bn1 = nn.BatchNorm2d(planes[0])
        self.relu1 = nn.LeakyReLU(0.1)

        # 3x3 convolution that restores the channel count
        self.conv2 = nn.Conv2d(planes[0], planes[1], kernel_size=3,
                               stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(planes[1])
        self.relu2 = nn.LeakyReLU(0.1)

    def forward(self, x):
        residual = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu1(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu2(out)

        # Skip connection: element-wise add, shape unchanged
        out += residual
        return out

class DarkNet(nn.Module):
    def __init__(self, layers):
        super(DarkNet, self).__init__()
        self.inplanes = 32
        self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(self.inplanes)
        self.relu1 = nn.LeakyReLU(0.1)

        self.layer1 = self._make_layer([32, 64], layers[0])
        self.layer2 = self._make_layer([64, 128], layers[1])
        self.layer3 = self._make_layer([128, 256], layers[2])
        self.layer4 = self._make_layer([256, 512], layers[3])
        self.layer5 = self._make_layer([512, 1024], layers[4])

        self.layers_out_filters = [64, 128, 256, 512, 1024]

        # Weight initialization
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.data.normal_(0, math.sqrt(2. / n))
            elif isinstance(m, nn.BatchNorm2d):
                m.weight.data.fill_(1)
                m.bias.data.zero_()

    # The resn block in the figure
    def _make_layer(self, planes, blocks):
        layers = []
        # Downsampling: stride 2, kernel size 3
        layers.append(("ds_conv", nn.Conv2d(self.inplanes, planes[1], kernel_size=3,
                                            stride=2, padding=1, bias=False)))
        layers.append(("ds_bn", nn.BatchNorm2d(planes[1])))
        layers.append(("ds_relu", nn.LeakyReLU(0.1)))
        # Append the Darknet blocks (res_units)
        self.inplanes = planes[1]
        for i in range(0, blocks):
            layers.append(("residual_{}".format(i), BasicBlock(self.inplanes, planes)))
        return nn.Sequential(OrderedDict(layers))

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu1(x)

        x = self.layer1(x)
        x = self.layer2(x)
        # The last three stages feed the three detection branches
        out3 = self.layer3(x)
        out4 = self.layer4(out3)
        out5 = self.layer5(out4)

        return out3, out4, out5

# Overall Darknet-53 structure, built from DarkNet
def darknet53(pretrained, **kwargs):
    model = DarkNet([1, 2, 8, 8, 4])
    if pretrained:
        if isinstance(pretrained, str):
            model.load_state_dict(torch.load(pretrained))
        else:
            raise Exception("darknet request a pretrained path. got [{}]".format(pretrained))
    return model

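Output

The three prediction branches are fully convolutional as well. The last convolution of each branch has 255 kernels, which corresponds to the 80 classes of the COCO dataset: 3x(80+4+1)=255. The 3 is the number of boxes predicted per grid cell; each box needs the five basic parameters (x, y, w, h, confidence) plus the probabilities of the 80 classes.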
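As a quick check of this channel count, here is a minimal sketch; the function name `head_out_channels` is hypothetical and not part of the original code.

```python
def head_out_channels(num_classes, anchors_per_cell=3):
    # 4 box parameters + 1 confidence + one score per class, for every anchor
    return anchors_per_cell * (4 + 1 + num_classes)


print(head_out_channels(80))  # COCO: 255
print(head_out_channels(20))  # VOC: 75
```

The same expression appears in `YoloBody` as `len(anchors) * (5 + classes)`, so changing the dataset only changes the width of the final convolution.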

How y1, y2 and y3 are produced

The network performs detection three times, at 32x, 16x and 8x downsampling, that is, it predicts on feature maps of several scales.

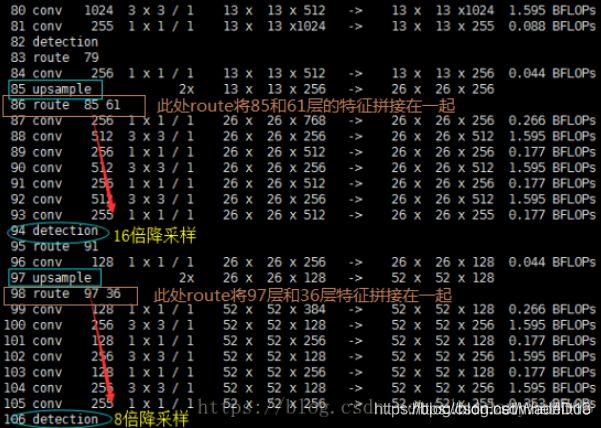

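Why the network uses upsampling: the deeper the layer, the better its feature representation. Take the 16x-downsampled detection as an example. Using the features of the fourth downsampling directly would mean relying on shallower features, which usually gives poor results. The 32x-downsampled features are deeper, but their spatial size is too small, so YOLOv3 applies a 2x upsample that doubles the size of the 32x feature map, bringing it to the size of the 16x one. In the same way, the 8x branch upsamples the 16x features by a factor of 2, so that deep features are available for detection at every scale.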
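To make the 2x upsampling concrete, the following is a minimal pure-Python sketch of nearest-neighbour upsampling on a small 2-D grid. It only illustrates the idea; the model code in this post uses `nn.Upsample(scale_factor=2, mode='nearest')`, and the helper name `upsample_nearest_2x` is made up here.

```python
def upsample_nearest_2x(feature_map):
    """Nearest-neighbour 2x upsampling of a 2-D grid given as a list of rows."""
    out = []
    for row in feature_map:
        wide = [v for v in row for _ in range(2)]  # repeat each value horizontally
        out.append(wide)
        out.append(list(wide))                     # repeat the whole row vertically
    return out


small = [[1, 2],
         [3, 4]]
for row in upsample_nearest_2x(small):
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```

Applied to a 13x13 map this yields a 26x26 map, which is what lets the deep features be merged with the 26x26 backbone features.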

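After upsampling, the deep features have the same spatial size as the feature layer they are fused with (only the channel counts differ). As shown in the figure, layer 85 upsamples the 13x13x256 feature to 26x26x256, which is then concatenated with the layer-61 feature to give 26x26x768. To reach 255 channels, a series of 3x3 and 1x1 convolutions follows; this adds non-linearity, which helps generalization and accuracy, while also reducing the parameter count and improving real-time performance.

13x13x255 is the feature produced at layer 81, with no concat involved.

For 52x52x255, layer 97 upsamples the 26x26x128 feature to 52x52x128, which is fused with the 52x52x256 feature of layer 36 to give 52x52x384; further 1x1 and 3x3 convolutions then produce the final 255 channels.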
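The channel counts quoted above follow directly from how concat and add treat shapes. The sketch below works on shape tuples only (no tensors); `concat_shape` and `add_shape` are hypothetical helpers written for this illustration.

```python
def concat_shape(a, b, dim=2):
    """Shape after concatenating along `dim`; all other dims must match."""
    for i, (x, y) in enumerate(zip(a, b)):
        if i != dim and x != y:
            raise ValueError("shapes differ outside the concat dimension")
    out = list(a)
    out[dim] = a[dim] + b[dim]
    return tuple(out)


def add_shape(a, b):
    """Shape after element-wise add: both shapes must be identical."""
    if a != b:
        raise ValueError("add requires identical shapes")
    return a


# Shapes written as (height, width, channels), as in the text.
print(concat_shape((26, 26, 256), (26, 26, 512)))  # (26, 26, 768)
print(concat_shape((52, 52, 128), (52, 52, 256)))  # (52, 52, 384)
print(add_shape((26, 26, 512), (26, 26, 512)))     # (26, 26, 512)
```

In the PyTorch code the same concat is written `torch.cat([x1_in, x1], 1)`, because there the channel dimension is dimension 1.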

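PyTorch places the channel dimension first, right after the batch dimension (N, C, H, W). Feeding in N images of size 416x416 therefore produces three outputs with shapes (N,255,13,13), (N,255,26,26) and (N,255,52,52), which describe the 3 prior boxes at every cell of the 13x13, 26x26 and 52x52 grids that each image is divided into.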

import torch
import torch.nn as nn
from collections import OrderedDict
from nets.darknet import darknet53


# The DBL block: convolution + BN + Leaky ReLU
def conv2d(filter_in, filter_out, kernel_size):
    pad = (kernel_size - 1) // 2 if kernel_size else 0
    return nn.Sequential(OrderedDict([
        ("conv", nn.Conv2d(filter_in, filter_out, kernel_size=kernel_size, stride=1, padding=pad, bias=False)),
        ("bn", nn.BatchNorm2d(filter_out)),
        ("relu", nn.LeakyReLU(0.1)),
    ]))


# Corresponds to DBL*5 + DBL + conv in the figure
def make_last_layers(filters_list, in_filters, out_filter):
    m = nn.ModuleList([
        conv2d(in_filters, filters_list[0], 1),
        conv2d(filters_list[0], filters_list[1], 3),
        conv2d(filters_list[1], filters_list[0], 1),
        conv2d(filters_list[0], filters_list[1], 3),
        conv2d(filters_list[1], filters_list[0], 1),
        conv2d(filters_list[0], filters_list[1], 3),
        # Final prediction layer: plain convolution with bias, no BN or activation
        nn.Conv2d(filters_list[1], out_filter, kernel_size=1,
                  stride=1, padding=0, bias=True)
    ])
    return m

class YoloBody(nn.Module):
    def __init__(self, config):
        super(YoloBody, self).__init__()
        self.config = config
        # backbone
        self.backbone = darknet53(None)

        # out_filters = [64, 128, 256, 512, 1024]
        out_filters = self.backbone.layers_out_filters

        # last_layer0
        # final_out_filter0 = 3*(5+num_classes) = 3*(5+80) = 255 (COCO)
        final_out_filter0 = len(config["yolo"]["anchors"][0]) * (5 + config["yolo"]["classes"])
        # self.last_layer0 corresponds to layer 81 in the figure
        self.last_layer0 = make_last_layers([512, 1024], out_filters[-1], final_out_filter0)

        # embedding1
        final_out_filter1 = len(config["yolo"]["anchors"][1]) * (5 + config["yolo"]["classes"])
        # self.last_layer1_conv corresponds to layer 84 in the figure
        self.last_layer1_conv = conv2d(512, 256, 1)
        # self.last_layer1_upsample corresponds to the upsampling at layer 85
        self.last_layer1_upsample = nn.Upsample(scale_factor=2, mode='nearest')
        # After the concat comes DBL*5 + DBL + conv: the 7 convolutions before y2 in the figure
        self.last_layer1 = make_last_layers([256, 512], out_filters[-2] + 256, final_out_filter1)

        # embedding2
        final_out_filter2 = len(config["yolo"]["anchors"][2]) * (5 + config["yolo"]["classes"])
        # Corresponds to layer 96 in the figure
        self.last_layer2_conv = conv2d(256, 128, 1)
        # Corresponds to the upsampling at layer 97
        self.last_layer2_upsample = nn.Upsample(scale_factor=2, mode='nearest')
        # The 7 convolutions before y3 in the figure
        self.last_layer2 = make_last_layers([128, 256], out_filters[-3] + 128, final_out_filter2)

    def forward(self, x):
        # _branch splits the 7 convolutions of make_last_layers into the first 5
        # and the last 2, matching the rear part of the structure diagram.
        # last_layer is an nn.ModuleList; layer_in is a darknet53 output.
        def _branch(last_layer, layer_in):
            for i, e in enumerate(last_layer):
                layer_in = e(layer_in)
                # After the fifth convolution, keep the result as out_branch
                if i == 4:
                    out_branch = layer_in
            return layer_in, out_branch

        # backbone: x0 is the darknet53 output at layer 74 in the figure (13,13,1024),
        # x1 is layer 61 (26,26,512), x2 is layer 36 (52,52,256)
        x2, x1, x0 = self.backbone(x)

        # yolo branch 0
        # out0 is the final y1 result; out0_branch is the part branched off before y1
        out0, out0_branch = _branch(self.last_layer0, x0)

        # yolo branch 1
        # Upsample out0_branch, then concat with x1
        x1_in = self.last_layer1_conv(out0_branch)
        x1_in = self.last_layer1_upsample(x1_in)
        x1_in = torch.cat([x1_in, x1], 1)
        # Same as above
        out1, out1_branch = _branch(self.last_layer1, x1_in)

        # yolo branch 2
        x2_in = self.last_layer2_conv(out1_branch)
        x2_in = self.last_layer2_upsample(x2_in)
        x2_in = torch.cat([x2_in, x2], 1)
        out2, _ = _branch(self.last_layer2, x2_in)
        return out0, out1, out2

Decoding the predictions

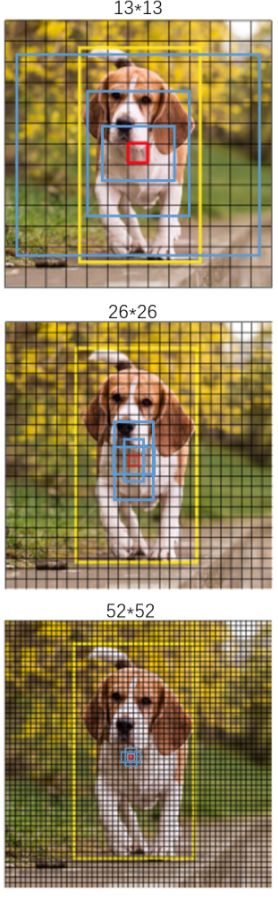

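The previous step yields the predictions of three feature layers, with shapes (N,255,13,13), (N,255,26,26) and (N,255,52,52), corresponding to 3 predicted boxes at every cell of the 13x13, 26x26 and 52x52 grids of each image.

These raw predictions do not yet give the position of the final boxes in the image; they have to be decoded first.

How YOLOv3 predicts: the three feature layers divide the whole image into 13x13, 26x26 and 52x52 grids, and each grid cell is responsible for detecting objects in its own region.

The prediction of each feature layer covers 3 boxes per cell, so we reshape it to (N,3,85,13,13), (N,3,85,26,26) and (N,3,85,52,52). The 85 entries are 4+1+80: x_offset, y_offset, w and h, the confidence, and the class scores.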
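The reshape splits the 255 channels into 3 groups of 85, so channel c belongs to anchor c // 85 and attribute c % 85. A small sketch of that mapping follows; the names `ATTR_NAMES` and `describe_channel` are hypothetical, and the attribute order is the one read from the decoding code in this post (x, y, w, h, confidence, then classes).

```python
ATTR_NAMES = ["x_offset", "y_offset", "w", "h", "confidence"]


def describe_channel(c, num_classes=80, num_anchors=3):
    """Map a raw output channel index to (anchor index, attribute name)."""
    attrs = 5 + num_classes
    if not 0 <= c < num_anchors * attrs:
        raise IndexError("channel out of range")
    anchor, attr = divmod(c, attrs)
    name = ATTR_NAMES[attr] if attr < 5 else "class_{}".format(attr - 5)
    return anchor, name


print(describe_channel(0))    # (0, 'x_offset')
print(describe_channel(4))    # (0, 'confidence')
print(describe_channel(85))   # (1, 'x_offset')
print(describe_channel(254))  # (2, 'class_79')
```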

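Decoding in YOLOv3 works as follows. The x_offset and y_offset (after a sigmoid) are added to the coordinates of their grid cell, which gives the center of the predicted box. The prior box (anchor) is then combined with w and h, by multiplying the anchor size with exp(w) and exp(h), to obtain the width and height of the predicted box. Together these define the whole predicted box.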
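For a single box, the decoding step can be sketched in plain Python as below. This is an illustration under stated assumptions, not the post's implementation: `decode_box` is a made-up helper, the anchor is given directly in pixels (the PyTorch code first rescales anchors to grid units and multiplies by the stride at the end, which is equivalent), and the anchor (116, 90) is just an example value.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_box(tx, ty, tw, th, col, row, anchor_w, anchor_h, stride):
    """Decode one raw prediction into (cx, cy, w, h) in input-image pixels.

    col/row: grid-cell indices; anchor_w/anchor_h: anchor size in pixels;
    stride: pixels per grid cell (32 for the 13x13 map of a 416x416 input).
    """
    cx = (sigmoid(tx) + col) * stride  # cell corner plus an offset in (0, 1)
    cy = (sigmoid(ty) + row) * stride
    w = anchor_w * math.exp(tw)        # anchor size scaled by exp(raw value)
    h = anchor_h * math.exp(th)
    return cx, cy, w, h


# All-zero raw outputs: the box sits in the middle of cell (6, 6)
# and has exactly the anchor's size.
print(decode_box(0.0, 0.0, 0.0, 0.0, col=6, row=6,
                 anchor_w=116, anchor_h=90, stride=32))
# (208.0, 208.0, 116.0, 90.0)
```

Because the sigmoid keeps the offset between 0 and 1, the decoded center can never leave the grid cell that predicted it.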

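After decoding, the final predictions still have to be sorted by score and filtered with non-maximum suppression (NMS):

1. For each class, take the boxes whose score is greater than self.obj_threshold, together with their scores.

2. Run non-maximum suppression using the box positions and scores.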
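The two steps above can be sketched for a single class in plain Python. This is a generic greedy NMS, not the post's own implementation (which is not shown); the function names and the default thresholds are assumptions chosen for the example.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, score_threshold=0.5, iou_threshold=0.3):
    """Greedy NMS for one class; returns the indices of the kept boxes."""
    # Step 1: drop low scores, then sort the rest from high to low.
    order = sorted((i for i, s in enumerate(scores) if s > score_threshold),
                   key=lambda i: scores[i], reverse=True)
    keep = []
    # Step 2: keep the best box and discard the boxes that overlap it too much.
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


boxes = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 200, 300, 300), (0, 0, 50, 50)]
scores = [0.9, 0.8, 0.7, 0.2]
print(nms(boxes, scores))  # [0, 2]
```

Box 3 is removed by the score threshold, and box 1 is suppressed because it almost coincides with the higher-scoring box 0.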

Implementation:

class DecodeBox(nn.Module):
    def __init__(self, anchors, num_classes, img_size):
        super(DecodeBox, self).__init__()
        self.anchors = anchors
        self.num_anchors = len(anchors)
        self.num_classes = num_classes
        self.bbox_attrs = 5 + num_classes
        self.img_size = img_size

    def forward(self, input):
        # input is (bs, 3*(1+4+num_classes), 13, 13), taking the 13x13 layer as an example
        # Number of images in the batch
        batch_size = input.size(0)
        # 13, 13
        input_height = input.size(2)
        input_width = input.size(3)

        # Stride: how many pixels of the input image one feature-map cell covers.
        # For the 13x13 layer, one cell covers 416/13 = 32 pixels.
        stride_h = self.img_size[1] / input_height
        stride_w = self.img_size[0] / input_width

        # Rescale the prior boxes to feature-map units:
        # width and height of each prior box on the feature layer
        scaled_anchors = [(anchor_width / stride_w, anchor_height / stride_h)
                          for anchor_width, anchor_height in self.anchors]

        # Reshape the prediction:
        # (bs, 3*(5+num_classes), 13, 13) -> (bs, 3, 13, 13, 5+num_classes)
        prediction = input.view(batch_size, self.num_anchors,
                                self.bbox_attrs, input_height, input_width).permute(0, 1, 3, 4, 2).contiguous()

        # Adjustment parameters for the prior-box center
        x = torch.sigmoid(prediction[..., 0])
        y = torch.sigmoid(prediction[..., 1])
        # Adjustment parameters for the prior-box width and height
        w = prediction[..., 2]  # Width
        h = prediction[..., 3]  # Height

        # Objectness confidence: is there an object?
        conf = torch.sigmoid(prediction[..., 4])
        # Per-class confidence
        pred_cls = torch.sigmoid(prediction[..., 5:])  # Cls pred.

        FloatTensor = torch.cuda.FloatTensor if x.is_cuda else torch.FloatTensor
        LongTensor = torch.cuda.LongTensor if x.is_cuda else torch.LongTensor

        # Build the grid: prior-box centers start from the top-left corner of each cell.
        # The row count is input_height and the column count is input_width, so this
        # also works for non-square feature maps.
        grid_x = torch.linspace(0, input_width - 1, input_width).repeat(input_height, 1).repeat(
            batch_size * self.num_anchors, 1, 1).view(x.shape).type(FloatTensor)
        grid_y = torch.linspace(0, input_height - 1, input_height).repeat(input_width, 1).t().repeat(
            batch_size * self.num_anchors, 1, 1).view(y.shape).type(FloatTensor)

        # Width and height of the prior boxes, broadcast to every cell
        anchor_w = FloatTensor(scaled_anchors).index_select(1, LongTensor([0]))
        anchor_h = FloatTensor(scaled_anchors).index_select(1, LongTensor([1]))
        anchor_w = anchor_w.repeat(batch_size, 1).repeat(1, 1, input_height * input_width).view(w.shape)
        anchor_h = anchor_h.repeat(batch_size, 1).repeat(1, 1, input_height * input_width).view(h.shape)

        # Adjusted center, width and height of each box (in feature-map units)
        pred_boxes = FloatTensor(prediction[..., :4].shape)
        pred_boxes[..., 0] = x.data + grid_x
        pred_boxes[..., 1] = y.data + grid_y
        pred_boxes[..., 2] = torch.exp(w.data) * anchor_w
        pred_boxes[..., 3] = torch.exp(h.data) * anchor_h

        # Scale the boxes back to the 416x416 input size
        _scale = torch.Tensor([stride_w, stride_h] * 2).type(FloatTensor)
        output = torch.cat((pred_boxes.view(batch_size, -1, 4) * _scale,
                            conf.view(batch_size, -1, 1), pred_cls.view(batch_size, -1, self.num_classes)), -1)
        return output.data

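This gives the position of every predicted box on the input image. Once the boxes have passed the score threshold and non-maximum suppression described above, they can be drawn directly on the image to show the result.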

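Training

Parameters needed to compute the loss

Computing the loss is really a comparison between pred and target:

pred is the prediction of the network.

target is the ground-truth boxes of the image.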

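What pred is

For the YOLOv3 model, the final output of the network is the predicted boxes and classes for every grid cell of the three feature layers. In other words, each feature layer corresponds to the image divided into a grid of a different size, and for every grid cell it holds the position, confidence and class of three prior boxes.

Per image, the output layers have shapes (13,13,255), (26,26,255) and (52,52,255), written here as (height, width, channels); in PyTorch's layout these are the (N,255,13,13), (N,255,26,26) and (N,255,52,52) tensors described earlier. (These numbers are for the COCO dataset; with the 20-class VOC dataset the last dimension would be 3*(5+20)=75.)

The prediction y_pre only describes boxes on the real image after it has been decoded.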

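What target is

target is the set of ground-truth boxes of a real image.

The first dimension is batch_size, the second is the number of ground-truth boxes in each image, and the third holds the information of each box: its position and class. In the loss code each box is read as (x, y, w, h, class), with the coordinates normalized to the image size.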

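How the loss is computed

Once pred and target are available, they cannot simply be subtracted from each other. The following steps are needed:

- Find where the ground-truth box lies in the image and decide which grid cell is responsible for detecting it

- Find which prior box overlaps the ground-truth box the most

- Compute what that grid cell would have to predict in order to reproduce the ground-truth box

- Repeat this for all ground-truth boxes

- This gives the prediction the network should have produced, which is then compared with the actual prediction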
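The first three steps can be sketched for one ground-truth box and one feature layer as follows. This is a simplified illustration: it matches only against the anchors of that layer, whereas the `get_target` method in this post compares against all 9 anchors and skips the box if the best one belongs to another layer. The helper names `wh_iou` and `encode_target`, and the anchor values in the example, are assumptions.

```python
import math


def wh_iou(w1, h1, w2, h2):
    """IoU of two boxes that share the same center (only the sizes matter)."""
    inter = min(w1, w2) * min(h1, h2)
    return inter / (w1 * h1 + w2 * h2 - inter)


def encode_target(cx, cy, w, h, grid_size, anchors):
    """Encode one ground-truth box (normalized to 0..1) for one feature layer.

    anchors: list of (w, h) in grid-cell units.
    Returns (col, row, best_anchor, tx, ty, tw, th).
    """
    gx, gy = cx * grid_size, cy * grid_size    # center in grid units
    gw, gh = w * grid_size, h * grid_size      # size in grid units
    col, row = int(gx), int(gy)                # the responsible grid cell
    ious = [wh_iou(gw, gh, aw, ah) for aw, ah in anchors]
    best = ious.index(max(ious))               # anchor with the highest overlap
    tx, ty = gx - col, gy - row                # offset inside the cell
    tw = math.log(gw / anchors[best][0])       # log-ratio to the anchor size
    th = math.log(gh / anchors[best][1])
    return col, row, best, tx, ty, tw, th


# Example anchors for a 13x13 layer: pixel sizes divided by the stride of 32.
anchors_13 = [(116 / 32, 90 / 32), (156 / 32, 198 / 32), (373 / 32, 326 / 32)]
col, row, best, tx, ty, tw, th = encode_target(0.5, 0.5, 0.375, 0.475, 13, anchors_13)
print(col, row, best)  # 6 6 1
```

The returned tx, ty, tw, th are exactly the quantities that the sigmoid and exp in the decoding step would turn back into the ground-truth box.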

from random import shuffle
import numpy as np
import torch
import torch.nn as nn
import math
import torch.nn.functional as F
from matplotlib.colors import rgb_to_hsv, hsv_to_rgb
from PIL import Image
from utils.utils import bbox_iou


# Clamp every element of t to the range [t_min, t_max]
def clip_by_tensor(t, t_min, t_max):
    t = t.float()
    result = (t >= t_min).float() * t + (t < t_min).float() * t_min
    result = (result <= t_max).float() * result + (result > t_max).float() * t_max
    return result


# Element-wise squared error (no reduction)
def MSELoss(pred, target):
    return (pred - target) ** 2


# Element-wise binary cross-entropy; pred is clipped to avoid log(0)
def BCELoss(pred, target):
    epsilon = 1e-7
    pred = clip_by_tensor(pred, epsilon, 1.0 - epsilon)
    output = -target * torch.log(pred) - (1.0 - target) * torch.log(1.0 - pred)
    return output

class YOLOLoss(nn.Module):
    def __init__(self, anchors, num_classes, img_size):
        super(YOLOLoss, self).__init__()
        # anchors holds all 9 prior boxes; each feature layer uses 3 of them
        self.anchors = anchors
        self.num_anchors = len(anchors)
        self.num_classes = num_classes
        self.bbox_attrs = 5 + num_classes
        self.img_size = img_size

        self.ignore_threshold = 0.5
        self.lambda_xy = 1.0
        self.lambda_wh = 1.0
        self.lambda_conf = 1.0
        self.lambda_cls = 1.0

    def forward(self, input, targets=None):
        # Number of images in the batch
        bs = input.size(0)
        # Height of the feature layer
        in_h = input.size(2)
        # Width of the feature layer
        in_w = input.size(3)

        # Stride: input pixels covered by one feature-map cell
        stride_h = self.img_size[1] / in_h
        stride_w = self.img_size[0] / in_w

        # Rescale the prior boxes to feature-map units
        scaled_anchors = [(a_w / stride_w, a_h / stride_h) for a_w, a_h in self.anchors]

        # reshape: (bs, 3*(5+num_classes), h, w) -> (bs, 3, h, w, 5+num_classes)
        prediction = input.view(bs, int(self.num_anchors/3),
                                self.bbox_attrs, in_h, in_w).permute(0, 1, 3, 4, 2).contiguous()

        # Split the prediction into its components
        x = torch.sigmoid(prediction[..., 0])  # Center x
        y = torch.sigmoid(prediction[..., 1])  # Center y
        w = prediction[..., 2]  # Width
        h = prediction[..., 3]  # Height
        conf = torch.sigmoid(prediction[..., 4])  # Conf
        pred_cls = torch.sigmoid(prediction[..., 5:])  # Cls pred.

        # Find which prior boxes contain an object
        mask, noobj_mask, tx, ty, tw, th, tconf, tcls, box_loss_scale_x, box_loss_scale_y =\
            self.get_target(targets, scaled_anchors,
                            in_w, in_h,
                            self.ignore_threshold)

        # Ignore predictions that already overlap a ground-truth box well
        noobj_mask = self.get_ignore(prediction, targets, scaled_anchors, in_w, in_h, noobj_mask)

        # Move the targets to the device of the prediction
        # (the original code called .cuda() here, which fails on CPU-only machines)
        device = input.device
        box_loss_scale_x = (2 - box_loss_scale_x).to(device)
        box_loss_scale_y = (2 - box_loss_scale_y).to(device)
        box_loss_scale = box_loss_scale_x * box_loss_scale_y
        mask, noobj_mask = mask.to(device), noobj_mask.to(device)
        tx, ty, tw, th = tx.to(device), ty.to(device), tw.to(device), th.to(device)
        tconf, tcls = tconf.to(device), tcls.to(device)

        # losses.
        loss_x = torch.sum(BCELoss(x, tx) / bs * box_loss_scale * mask)
        loss_y = torch.sum(BCELoss(y, ty) / bs * box_loss_scale * mask)
        loss_w = torch.sum(MSELoss(w, tw) / bs * 0.5 * box_loss_scale * mask)
        loss_h = torch.sum(MSELoss(h, th) / bs * 0.5 * box_loss_scale * mask)

        loss_conf = torch.sum(BCELoss(conf, mask) * mask / bs) + \
                    torch.sum(BCELoss(conf, mask) * noobj_mask / bs)

        loss_cls = torch.sum(BCELoss(pred_cls[mask == 1], tcls[mask == 1]) / bs)

        loss = loss_x * self.lambda_xy + loss_y * self.lambda_xy + \
               loss_w * self.lambda_wh + loss_h * self.lambda_wh + \
               loss_conf * self.lambda_conf + loss_cls * self.lambda_cls
        # print(loss, loss_x.item() + loss_y.item(), loss_w.item() + loss_h.item(),
        #       loss_conf.item(), loss_cls.item(), \
        #       torch.sum(mask),torch.sum(noobj_mask))
        return loss, loss_x.item(), loss_y.item(), loss_w.item(), \
               loss_h.item(), loss_conf.item(), loss_cls.item()

    def get_target(self, target, anchors, in_w, in_h, ignore_threshold):
        # Number of images in the batch
        bs = len(target)
        # Prior boxes that belong to this feature layer
        # (the lookup assumes 13/26/52 grids, i.e. a 416x416 input)
        anchor_index = [[0,1,2],[3,4,5],[6,7,8]][[13,26,52].index(in_w)]
        subtract_index = [0,3,6][[13,26,52].index(in_w)]
        # Create tensors filled with zeros or ones
        mask = torch.zeros(bs, int(self.num_anchors/3), in_h, in_w, requires_grad=False)
        noobj_mask = torch.ones(bs, int(self.num_anchors/3), in_h, in_w, requires_grad=False)

        tx = torch.zeros(bs, int(self.num_anchors/3), in_h, in_w, requires_grad=False)
        ty = torch.zeros(bs, int(self.num_anchors/3), in_h, in_w, requires_grad=False)
        tw = torch.zeros(bs, int(self.num_anchors/3), in_h, in_w, requires_grad=False)
        th = torch.zeros(bs, int(self.num_anchors/3), in_h, in_w, requires_grad=False)
        tconf = torch.zeros(bs, int(self.num_anchors/3), in_h, in_w, requires_grad=False)
        tcls = torch.zeros(bs, int(self.num_anchors/3), in_h, in_w, self.num_classes, requires_grad=False)

        box_loss_scale_x = torch.zeros(bs, int(self.num_anchors/3), in_h, in_w, requires_grad=False)
        box_loss_scale_y = torch.zeros(bs, int(self.num_anchors/3), in_h, in_w, requires_grad=False)
        for b in range(bs):
            for t in range(target[b].shape[0]):
                # Position and size of the ground-truth box on the feature layer
                gx = target[b][t, 0] * in_w
                gy = target[b][t, 1] * in_h
                gw = target[b][t, 2] * in_w
                gh = target[b][t, 3] * in_h

                # Grid cell the box center falls into
                gi = int(gx)
                gj = int(gy)

                # Ground-truth box, moved to the origin so only its size matters
                gt_box = torch.FloatTensor(np.array([0, 0, gw, gh])).unsqueeze(0)

                # All prior boxes, also placed at the origin
                anchor_shapes = torch.FloatTensor(np.concatenate((np.zeros((self.num_anchors, 2)),
                                                                  np.array(anchors)), 1))
                # Overlap between the ground-truth box and every prior box
                anch_ious = bbox_iou(gt_box, anchor_shapes)

                # Find the best matching anchor box
                best_n = np.argmax(anch_ious)
                # Skip the box if its best anchor belongs to another feature layer
                if best_n not in anchor_index:
                    continue
                # Masks
                if (gj < in_h) and (gi < in_w):
                    best_n = best_n - subtract_index
                    # Mark the prior box that really contains an object
                    noobj_mask[b, best_n, gj, gi] = 0
                    mask[b, best_n, gj, gi] = 1
                    # Center adjustment targets
                    tx[b, best_n, gj, gi] = gx - gi
                    ty[b, best_n, gj, gi] = gy - gj
                    # Width and height adjustment targets
                    tw[b, best_n, gj, gi] = math.log(gw / anchors[best_n+subtract_index][0])
                    th[b, best_n, gj, gi] = math.log(gh / anchors[best_n+subtract_index][1])
                    # Normalized width and height, used to weight the box loss
                    box_loss_scale_x[b, best_n, gj, gi] = target[b][t, 2]
                    box_loss_scale_y[b, best_n, gj, gi] = target[b][t, 3]
                    # Objectness confidence
                    tconf[b, best_n, gj, gi] = 1
                    # Class
                    tcls[b, best_n, gj, gi, int(target[b][t, 4])] = 1
                else:
                    print('Step {0} out of bound'.format(b))
                    print('gj: {0}, height: {1} | gi: {2}, width: {3}'.format(gj, in_h, gi, in_w))
                    continue

        return mask, noobj_mask, tx, ty, tw, th, tconf, tcls, box_loss_scale_x, box_loss_scale_y

    def get_ignore(self, prediction, target, scaled_anchors, in_w, in_h, noobj_mask):
        bs = len(target)
        # Prior boxes that belong to this feature layer
        anchor_index = [[0,1,2],[3,4,5],[6,7,8]][[13,26,52].index(in_w)]
        scaled_anchors = np.array(scaled_anchors)[anchor_index]
        # print(scaled_anchors)
        # Adjustment parameters for the prior-box center
        x_all = torch.sigmoid(prediction[..., 0])
        y_all = torch.sigmoid(prediction[..., 1])
        # Adjustment parameters for the prior-box width and height
        w_all = prediction[..., 2]  # Width
        h_all = prediction[..., 3]  # Height
        for i in range(bs):
            x = x_all[i]
            y = y_all[i]
            w = w_all[i]
            h = h_all[i]

            FloatTensor = torch.cuda.FloatTensor if x.is_cuda else torch.FloatTensor
            LongTensor = torch.cuda.LongTensor if x.is_cuda else torch.LongTensor

            # Build the grid: prior-box centers start from the top-left corner of each cell
            grid_x = torch.linspace(0, in_w - 1, in_w).repeat(in_h, 1).repeat(
                int(self.num_anchors/3), 1, 1).view(x.shape).type(FloatTensor)
            grid_y = torch.linspace(0, in_h - 1, in_h).repeat(in_w, 1).t().repeat(
                int(self.num_anchors/3), 1, 1).view(y.shape).type(FloatTensor)

            # Width and height of the prior boxes, broadcast to every cell
            anchor_w = FloatTensor(scaled_anchors).index_select(1, LongTensor([0]))
            anchor_h = FloatTensor(scaled_anchors).index_select(1, LongTensor([1]))

            anchor_w = anchor_w.repeat(1, 1, in_h * in_w).view(w.shape)
            anchor_h = anchor_h.repeat(1, 1, in_h * in_w).view(h.shape)

            # Adjusted center, width and height of every predicted box
            pred_boxes = torch.FloatTensor(prediction[0][..., :4].shape)
            pred_boxes[..., 0] = x.data + grid_x
            pred_boxes[..., 1] = y.data + grid_y
            pred_boxes[..., 2] = torch.exp(w.data) * anchor_w
            pred_boxes[..., 3] = torch.exp(h.data) * anchor_h

            pred_boxes = pred_boxes.view(-1, 4)

            for t in range(target[i].shape[0]):
                gx = target[i][t, 0] * in_w
                gy = target[i][t, 1] * in_h
                gw = target[i][t, 2] * in_w
                gh = target[i][t, 3] * in_h
                gt_box = torch.FloatTensor(np.array([gx, gy, gw, gh])).unsqueeze(0)

                # IoU between this ground-truth box and every predicted box
                anch_ious = bbox_iou(gt_box, pred_boxes, x1y1x2y2=False)
                anch_ious = anch_ious.view(x.size())
                # Predictions that overlap a ground-truth box above the threshold
                # are not penalized as background
                noobj_mask[i][anch_ious > self.ignore_threshold] = 0
                # print(torch.max(anch_ious))
        return noobj_mask
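Two details of the loss are easy to miss in the tensor code: the weight `box_loss_scale`, which is (2 - w) * (2 - h) for a ground-truth box with normalized width w and height h, and the clipping inside `BCELoss`. The scalar sketch below mirrors both in plain Python; the function names are made up for this illustration.

```python
import math


def box_loss_scale(w, h):
    """Weight of the box terms; w and h are normalized to 0..1."""
    return (2.0 - w) * (2.0 - h)


def bce(pred, target, eps=1e-7):
    """Binary cross-entropy for one value, clipped to avoid log(0)."""
    pred = min(max(pred, eps), 1.0 - eps)
    return -target * math.log(pred) - (1.0 - target) * math.log(1.0 - pred)


print(box_loss_scale(1.0, 1.0))  # 1.0  (box covering the whole image)
print(box_loss_scale(0.5, 0.5))  # 2.25
print(bce(0.0, 1.0) < 20.0)      # True: clipping keeps the loss finite
```

The weight grows toward 4 as the box gets smaller, so position errors on small objects count more than the same errors on large ones.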
