7 Types of AI Risk and How to Mitigate Their Impact

Working on AI/ML initiatives is the dream of many individuals and companies. Stories of amazing AI initiatives are all over the web, and those who claim ownership of them are sought after as speakers, offered handsome positions, and respected by their peers.

In reality, AI work is highly uncertain, and many types of risk are associated with it.

“If you understand where risks may be lurking, ill-understood, or simply unidentified, you have a better chance of catching them before they catch up with you.” — McKinsey [1].

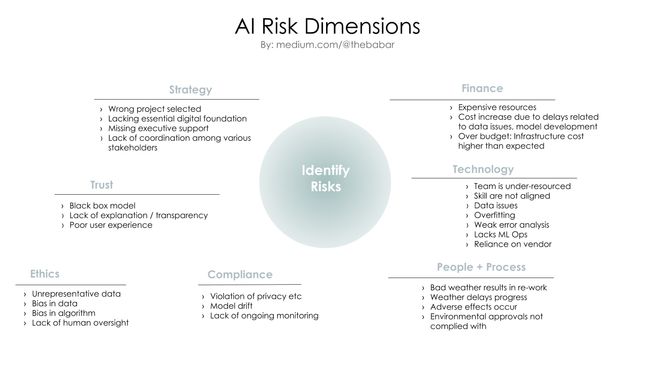

In this post I’ll summarize the 7 types of risks and how to mitigate their negative impact. For those who would like a compact view, here’s a slide that I put together.

Strategy Risk: As I wrote in an earlier post, crafting an AI strategy is not simple. A mistake at this early stage sets up other problems downstream. Unfortunately, strategy is often in the hands of people who don't have a thorough understanding of AI capabilities. This category includes the risks of selecting the wrong (infeasible) initiative relative to the organization's ground reality, lack of executive support, uncoordinated policies, friction among business groups, overestimation of AI/ML potential (contrary to the hype, ML is not the best answer for every analytics or prediction problem), and unclear goals or success metrics. The most obvious mitigation is to align the AI leadership and the executive team on the strategy and the risks associated with it. Understand exactly how AI will affect people and processes, and what to do when things don't go well.

Financial Risk: One common but rarely discussed assumption is that the cost of model development is the main financial factor for AI/ML: if only we had the best data and AI scientists, we would be all set. As discussed in this paper, the full AI lifecycle is far more complex and includes data governance and management, human monitoring, and infrastructure costs (cloud, containers, GPUs, etc.). AI work always carries an uncertainty factor: compared with software development, the process is more experimental and non-linear, and even after all the expensive development work the end result is not always positive. Make sure your finance leadership understands this and does not treat AI/ML as just another tech project.

Technical Risk: This is the most visible challenge. The technology is not mature or ready, but the technologists or the vendors want to push it. Or perhaps the business leadership wants to impress the media or competitors with a forward-looking vision. Technical risks come in many forms. Data: quality, suitability, representativeness, rigid data infrastructure, and more. Model: capabilities, learning approach, and poor performance or reliability in the real world. Concept drift: change in the market or environment over time or due to an unexpected event. Competence: lack of the right skills or experience, implementation delays, errors, insufficient testing, lack of stable or mature environments, lack of DataOps and MLOps, an IT team that is not up to speed with ML/AI deployment or scaling needs, security breaches, and IP theft. The best way to mitigate these is to build your team's skills, invest in modern data infrastructure, and follow best practices for ML.
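
Concept drift can be monitored with a simple distribution check. The sketch below uses the Population Stability Index (PSI), one of several possible drift measures and not something this post prescribes. The function name, the bin count, and the sample data are all assumptions made for illustration. A commonly quoted rule of thumb treats a PSI below roughly 0.1 as stable and above roughly 0.25 as a significant shift, but a suitable threshold depends on the use case.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of one numeric feature.

    Returns 0.0 when both samples fall into the bins in identical
    proportions; larger values indicate a larger shift.
    """
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    # Fall back to a width of 1.0 when every reference value is equal.
    width = (hi - lo) / n_bins or 1.0

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # eps keeps the logarithm defined when a bin is empty.
        return [max(c / len(values), eps) for c in counts]

    ref_shares = bin_shares(reference)
    cur_shares = bin_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares))


# Hypothetical data: feature values at training time vs. after a shift.
reference_sample = [i / 100 for i in range(100)]
shifted_sample = [x + 0.5 for x in reference_sample]
print(population_stability_index(reference_sample, shifted_sample))
```

Running a check like this on a schedule for each important input feature gives the team an early warning before model accuracy visibly degrades.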

People and Process Risk: It should go without saying, but the right organizational structure, people, and culture are critical to the success of your work. With competent people and a supportive culture, you can catch problems and face challenges. A sign of a bad culture is that people hide problems and avoid ownership. When there are politics and tensions between teams, problems get amplified. People risks include skills gaps, rigid mindsets, miscommunication, and an old-school IT organization that lacks the operational knowledge to scale AI. Process risks include gaps in processes, coordination issues between IT and AI teams, fragmented or inconsistent practices and tools, and vendor hype. Other risks are a lack of data and knowledge sharing (org structure), missing input or review from domain experts, a lack of oversight, policy controls, and fallback plans, dependency on third-party models, insufficient human oversight, a missing learning feedback loop, and weak tech foundations.

Trust and Explainability Risk: You did all the work, but the end users of your AI-powered application are hesitant to use or adopt the model. It is a common challenge. Reasons include poor model performance under certain conditions, opaqueness of the model (no explanation of results), lack of help when questions arise, poor user experience, lack of incentive alignment, and major disruption to people's workflow or daily routine. As ML/AI practitioners know, the best-performing models, such as deep neural networks, are often the least explainable. This leads to difficult questions, such as: what is more important, model performance or adoption by the intended users?
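
One model-agnostic way to partly open up an opaque model is permutation importance: shuffle one input column at a time and measure how much accuracy drops. The sketch below is an illustration under stated assumptions, not a method the post itself recommends. `toy_model`, the data, and every function name are hypothetical, and a real project would more likely use an established library implementation.

```python
import random
from typing import Callable, List, Sequence

Row = Sequence[float]


def accuracy(predict: Callable[[Row], int], rows: Sequence[Row], labels: Sequence[int]) -> float:
    """Fraction of rows for which the prediction matches the label."""
    hits = sum(1 for row, y in zip(rows, labels) if predict(row) == y)
    return hits / len(rows)


def permutation_importance(
    predict: Callable[[Row], int],
    rows: Sequence[Row],
    labels: Sequence[int],
    n_repeats: int = 20,
    seed: int = 0,
) -> List[float]:
    """Average accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            # Shuffle only this column, leaving all other features intact.
            shuffled = [row[col] for row in rows]
            rng.shuffle(shuffled)
            permuted = [
                list(row[:col]) + [value] + list(row[col + 1:])
                for row, value in zip(rows, shuffled)
            ]
            drops.append(baseline - accuracy(predict, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row: Row) -> int:
    """Hypothetical stand-in for a trained model; it only looks at feature 0."""
    return int(row[0] > 0.5)


# Hypothetical data: feature 0 drives the label, feature 1 is noise.
rows = [[i / 10, (i * 7) % 10 / 10] for i in range(10)]
labels = [toy_model(row) for row in rows]
scores = permutation_importance(toy_model, rows, labels)
print(scores)
```

A feature the model ignores scores zero, while a feature it relies on scores well above zero. Showing end users which inputs drive a prediction is one small step toward the explanations they are asking for.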

Compliance and Regulatory Risk: AI/ML can cause major headaches for use cases or verticals that need to comply with rules and regulations. There's a fine line here: if you don't take some action, competitors may get too far ahead. When you do take action, you must protect against unforeseen consequences and against investigations or fines by regulators. The financial and healthcare industries are good examples of such tensions. The explainability factors discussed above are key here. Mitigation: ensure that risk management teams are well aware of the AI/ML work and its implications. Allocate resources for human monitoring and corrective actions.

Ethical Risk: Your AI/ML project has great data and a superstar technical team, the benefits are clear, and there are no legal issues. But is it ethical? Take the example of facial recognition for police work. Vendors pushed this as a revolutionary way to improve policing, but the initial models lacked the robustness needed to make fair and accurate predictions, and they showed a clear bias against certain minority groups. Credit scoring and insurance models have suffered from bias for a long time, and with the growth of ML-powered applications it has become a much bigger problem.
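
Bias of this kind can at least be measured before a model ships. The sketch below compares favorable-outcome rates across groups and reports the ratio of the lowest rate to the highest, sometimes called the disparate impact ratio. The group names, decisions, and function names are hypothetical, and this is only one of many fairness metrics. A ratio below 0.8 is often treated as a warning sign (the "four-fifths" heuristic from US employment guidance), though passing it does not make a model fair.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Share of favorable decisions (1) per group, from (group, decision) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    favorable: Dict[str, int] = defaultdict(int)
    for group, decision in outcomes:
        totals[group] += 1
        favorable[group] += decision
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives the favorable outcome, so rates are equal
    return min(rates.values()) / highest


# Hypothetical loan decisions: 1 = approved, 0 = declined.
decisions = (
    [("group_a", 1)] * 8 + [("group_a", 0)] * 2
    + [("group_b", 1)] * 4 + [("group_b", 0)] * 6
)
rates = selection_rates(decisions)
print(rates, disparate_impact_ratio(rates))
```

Tracking a metric like this alongside accuracy makes bias visible during development instead of after deployment.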

Each of the risk areas mentioned above is a huge domain by itself and requires extensive reading and hands-on experience to become familiar with. I encourage you to check out the references below to get additional perspectives on handling AI risk.

Notes:

[1] Confronting the risks of artificial intelligence, McKinsey. https://www.mckinsey.com/business-functions/mckinsey-analytics/our-insights/confronting-the-risks-of-artificial-intelligence

[2] AI and risk management, Deloitte. https://www2.deloitte.com/global/en/pages/financial-services/articles/gx-ai-and-risk-management.html

[3] Ulrika Jägare, Data Science Strategy for Dummies, 2019, Wiley.

[4] Derisking machine learning and artificial intelligence, McKinsey. https://www.mckinsey.com/business-functions/risk/our-insights/derisking-machine-learning-and-artificial-intelligence

[5] Understanding Model Risk Management for AI and Machine Learning, EY. https://www.ey.com/en_us/banking-capital-markets/understand-model-risk-management-for-ai-and-machine-learning

Translated from: https://towardsdatascience.com/7-types-of-ai-risk-and-how-to-mitigate-their-impact-36c086bfd732
