从零开始的pytorch小白使用指北

文章目录

- Pytorch是什么

- Pytorch搭建神经网络

-

- 数据准备

-

- 构建data_iterator

- 模型准备

- 模型训练

- 模型评价

- 使用GPU训练神经网络

- Pytorch和TensorFlow的区别

Pytorch是什么

PyTorch是一个开源的Python机器学习库,提供两个高级功能:

- 具有强大的GPU加速的张量计算(如NumPy)

Pytorch向numpy做了广泛兼容,很多numpy的函数接口在pytorch下同样适用,

因此在安装pytorch和numpy时要注意版本兼容。

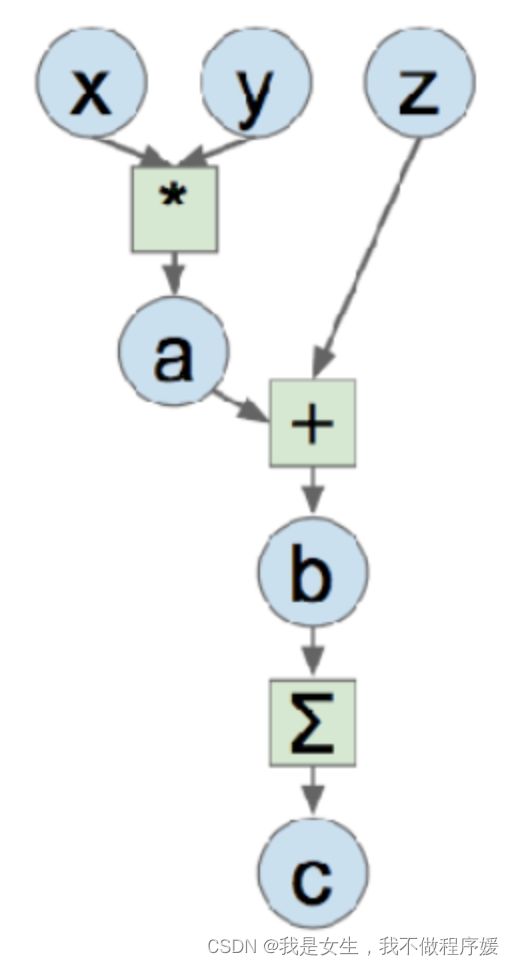

- 包含自动求导系统的深度神经网络

Pytorch搭建神经网络

>>> import torch

>>> import numpy

数据准备

tensor:张量,用于做矩阵的运算,十分适合在GPU上跑。

构建张量

>>> a = torch.tensor([[1,2,3],[4,5,6]],dtype=float) # 创建一个指定元素的张量

>>> a.size()

torch.Size([2, 3])

>>> a

tensor([[1., 2., 3.],

[4., 5., 6.]], dtype=torch.float64)

>>> b = torch.full((2,3),3.14) # 创建一个全为某一数值的张量,可以作为初始化矩阵

>>> b

tensor([[3.1400, 3.1400, 3.1400],

[3.1400, 3.1400, 3.1400]])

>>> c = torch.empty(2,3) # 返回一个未初始化的指定形状的张量,每次的运行结果都不同

>>> c

tensor([[3.6893e+19, 2.1425e+00, 5.9824e-38],

[1.7449e-39, 6.1124e-38, 1.4013e-45]])

张量运算

>>> #加法运算

>>> a+b

tensor([[4.1400, 5.1400, 6.1400],

[7.1400, 8.1400, 9.1400]])

>>> torch.add(a,b,out=c) # 将指定张量作为输出,若不指定直接返回该值

>>> c

tensor([[4.1400, 5.1400, 6.1400],

[7.1400, 8.1400, 9.1400]])

>>> a.add(b) #返回一个新的张量,原张量不变

>>> a

tensor([[1., 2., 3.],

[4., 5., 6.]], dtype=torch.float64)

>>> a.add_(b) # 张量操作加'_'后,原张量值改变

>>> a

tensor([[4.1400, 5.1400, 6.1400],

[7.1400, 8.1400, 9.1400]], dtype=torch.float64)

# 乘法运算

>>> x = torch.rand(3,5)

>>> y = torch.rand(3,5)

>>> z = torch.rand(5,3)

>>> torch.mul(x,y) # 矩阵点乘

tensor([[0.5100, 0.2523, 0.3176, 0.7665, 0.7989],

[0.3799, 0.0779, 0.1352, 0.5991, 0.0338],

[0.0375, 0.1444, 0.6965, 0.0810, 0.1836]])

>>> torch.matmul(x,z) #矩阵叉乘

tensor([[0.9843, 2.0296, 1.3304],

[0.7903, 1.4374, 1.1705],

[1.0530, 1.9607, 0.9520]])

# 常用运算

>>> a = torch.tensor([[1,2,3],[4,5,6]])

>>> torch.max(a) # 所有张量元素最大值

tensor(6)

>>> torch.max(a,0) # 沿着第0维求最大值

torch.return_types.max(values=tensor([4, 5, 6]),indices=tensor([1, 1, 1]))

>>> torch.max(a,1) # 沿着第1维求最大值

torch.return_types.max(values=tensor([3, 6]),indices=tensor([2, 2]))

>>> # min,mean,sum,prod同理

numpy桥

numpy.array和torch.tensor可以互相转化

>>> a = np.ones(3)

>>> a

array([1., 1., 1.])

>>> aa = torch.from_numpy(a) # array转为tensor

>>> aa

tensor([1., 1., 1.], dtype=torch.float64)

>>> b = torch.ones(3)

>>> b

tensor([1., 1., 1.])

>>> bb = b.numpy() # tensor转为array

>>> bb

array([1., 1., 1.], dtype=float32)

构建data_iterator

在这里插入代码片

模型准备

Module:一个神经网络的层次,可以直接调用全连接层,卷积层,等等神经网络。被自定义的神经网络类所继承。

自定义一个神经网络class通常包括两部分:__init__定义网络层,forward函数定义网络计算函数。

# Example 1

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(3, 6, 5)

self.pool = nn.MaxPool2d(2,2)

self.conv2 = nn.Conv2d(6, 16, 5)

self.fc1 = nn.Linear(16*5*5, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10)

def forward(self, x):

x = self.pool(F.relu(self.conv1(x)))

x = self.pool(F.relu(self.conv2(x)))

x = x.view(-1, 16*5*5)

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

NetModel = Net()

# Example 2

class DynamicNet(torch.nn.Module):

def __init__(self, D_in, H, D_out):

super(DynamicNet, self).__init__()

self.input_linear = torch.nn.Linear(D_in, H)

self.middle_linear = torch.nn.Linear(H, H)

self.output_linear = torch.nn.Linear(H, D_out)

def forward(self, x):

h_relu = self.input_linear(x).clamp(min=0)

for _ in range(random.randint(0, 3)):

h_relu = self.middle_linear(h_relu).clamp(min=0)

y_pred = self.output_linear(h_relu)

return y_pred

DynamicModel = DynamicNet(D_in, H, D_out)

模型训练

模型训练的主题代码由2层循环、2个定义和5句函数组成:

# 两个定义:

optimizer =

criterion =

# 两层循环:

for epoch in num_epochs:

for input in batch_iters:

# 5句函数

outputs = model(input)

model.zero_grad()

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

# Example 1

# 定义代价函数和优化器

criterion = nn.CrossEntropyLoss() # use a Classification Cross-Entropy loss

optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

# 训练 epoch × batches_per_epoch 轮

for epoch in range(2):

running_loss = 0.0

# 遍历trainloader时每次返回一个batch

for i, data in enumerate(trainloader, 0):

inputs, labels = data

# wrap them in Variable

inputs, labels = Variable(inputs), Variable(labels)

# zero the parameter gradients

optimizer.zero_grad()

# forward + backward + optimize

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

running_loss += loss.data[0]

# print statistics

if i % 2000 == 1999:

print('[%d, %5d] loss: %.3f' % (epoch+1, i+1, running_loss / 2000))

running_loss = 0.0

# Example 2 简化版

criterion = torch.nn.MSELoss(reduction='sum')

optimizer = torch.optim.SGD(model.parameters(), lr=1e-4, momentum=0.9)

for t in range(500):

y_pred = model(x)

loss = criterion(y_pred, y)

print(t, loss.item())

# 清零梯度,反向传播,更新权重

optimizer.zero_grad()

loss.backward()

optimizer.step()

# Example3

optimizer = torch.optim.Adam(model.parameters(), lr=config.learning_rate)

model.train()

for epoch in range(config.num_epochs):

print('Epoch [{}/{}]'.format(epoch + 1, config.num_epochs))

for i, (trains, labels) in enumerate(train_iter):

outputs = model(trains)

model.zero_grad()

loss = F.cross_entropy(outputs, labels.squeeze(1))

loss.backward()

optimizer.step()

模型评价

一个循环+2句计算+n个评价函数:

# 一个循环:test_iters

for texts, labels in test_iter:

# 2句计算:outputs = model(inputs)

outputs = net(Variable(images))

_, predicts = torch.max(outputs.data, 1)

# n个评价函数:用labels和predicts计算metrcs库的各种评价指标

# Example1

correct = 0

total = 0

# 一个循环:test_iters

for data in testloader:

images, labels = data

# 2句计算:outputs = model(inputs)

outputs = net(Variable(images))

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).sum()

print('Accuracy of the network on the 10000 test images: %d %%' % (100 * correct / total))

# Example 2

model.load_state_dict(torch.load(config.save_path))

model.eval()

loss_total = 0

predict_all = np.array([], dtype=int)

labels_all = np.array([], dtype=int)

with torch.no_grad():# 不自动求导,节约内存

for texts, labels in data_iter:

outputs = model(texts)

predic = torch.max(outputs.data, 1)[1].cpu().numpy()

loss = F.cross_entropy(outputs, labels.squeeze(1))

loss_total += loss

labels = labels.data.cpu().numpy()

labels_all = np.append(labels_all, labels)

predict_all = np.append(predict_all, predic)

acc = metrics.accuracy_score(labels_all, predict_all)

report = metrics.classification_report(labels_all, predict_all,

confusion = metrics.confusion_matrix(labels_all, predict_all)

print (acc, loss_total / len(data_iter), report, confusion)

使用GPU训练神经网络

# 使用GPU训练时,需要将model和tensor转至指定device

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model = BertClassifier(config.bert_path)

model = model.to(device)

tensor = torch.tensor(x_train).to(torch.float32)

tensor = tensor.to(device)

Pytorch和TensorFlow的区别

- TensorFlow在GPU上的计算性能更高一些,在CPU上二者类似。