The Docker Trio: Docker Swarm (Deploying Swarm Monitoring, Compose vs. Stack, and the Portainer Container Management UI)

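- Introduction to Docker Swarm
- Creating a Swarm cluster
- Deploying Swarm monitoring
- Promoting and demoting nodes
- Removing a node and turning it into a local private registry
- Rolling service updates
- Deploying services with docker stack instead of docker-compose
- Portainer, a visual container management tool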

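Docker Swarm cluster management.

Introduction to Docker Swarm

- Swarm is Docker's native cluster management tool.
- Docker Swarm is an orchestration tool that gives IT operations teams clustering and scheduling capabilities.
- Swarm turns a group of Docker hosts into a single virtual Docker host, so that containers can share subnets that span hosts.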

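Creating a Swarm cluster

Initialize the cluster:

docker swarm init   # prints a join token

Run the join command on each of the other Docker nodes; each one is added to the swarm as a worker:

docker swarm join --token SWMTKN-1-4owwqn5j0u0k1bqxgozn3p1glcvmo7yl33w700xswc2293eiw0-dn40jytlvqqpw5zo5udbdgbzg 172.25.10.1:2377

List the nodes in the swarm: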

[root@server1 ~]# docker node ls
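If the token printed by `docker swarm init` is no longer on screen, a manager can print it again with `docker swarm join-token -q worker`. The sketch below only assembles the join command as text. `build_join_cmd` is a hypothetical helper (not a Docker command), the token shown is a placeholder, and it assumes Swarm's default management port 2377 when the address carries no port.

```shell
#!/bin/sh
# build_join_cmd TOKEN MANAGER_ADDR
# Hypothetical helper: prints the `docker swarm join` command for a worker.
# Assumption: port 2377 (Swarm's default management port) if none is given.
build_join_cmd() {
  token="$1"
  addr="$2"
  case "$addr" in
    *:*) ;;                      # address already carries a port
    *)   addr="$addr:2377" ;;
  esac
  printf 'docker swarm join --token %s %s\n' "$token" "$addr"
}

# On a manager, the real token could be read with:
#   docker swarm join-token -q worker
build_join_cmd "SWMTKN-1-placeholder" "172.25.10.1"
```

The printed line can then be pasted on each node that should join as a worker.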

Deploying Swarm monitoring

[root@server2 ~]# scp nginx.tar server3:

[root@server3 ~]# docker load -i nginx.tar

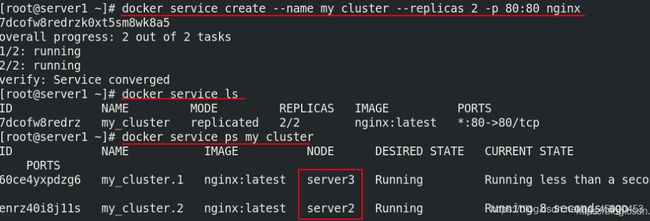

[root@server1 ~]# docker service create --name my_cluster --replicas 2 -p 80:80 nginx

Port 80 must not already be in use on server2 and server3. The -p mapping is written as host port:container port.

[root@server1 harbor]# netstat -antlp | grep :80

tcp6 0 0 :::80 :::* LISTEN 3321/dockerd

[root@server2 ~]# netstat -antlp | grep :80

tcp6 0 0 :::80 :::* LISTEN 24924/dockerd

[root@server3 ~]# netstat -antlp | grep :80

tcp6 0 0 :::80 :::* LISTEN 14773/dockerd
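Because the published port has to be free on the nodes, it can help to check for a listener before creating the service. A minimal sketch, assuming the `netstat -antlp` column layout shown above; `port_listening` is a hypothetical helper that reads netstat output on stdin.

```shell
#!/bin/sh
# port_listening PORT
# Reads `netstat -antlp`-style lines on stdin and exits 0 if some socket is
# in LISTEN state on PORT. Hypothetical helper; assumes the local address is
# column 4 and the state is column 6, as in the output above.
port_listening() {
  awk -v suffix=":$1" '
    $6 == "LISTEN" {
      start = length($4) - length(suffix) + 1
      # Compare only the trailing ":PORT" so that :8080 does not match :80
      if (start >= 1 && substr($4, start) == suffix) found = 1
    }
    END { exit (found ? 0 : 1) }
  '
}

# Example with a line copied from the output above
if echo "tcp6 0 0 :::80 :::* LISTEN 3321/dockerd" | port_listening 80; then
  echo "port 80 is in use"
fi
```

On a node, the same check would be `netstat -antlp | port_listening 80`.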

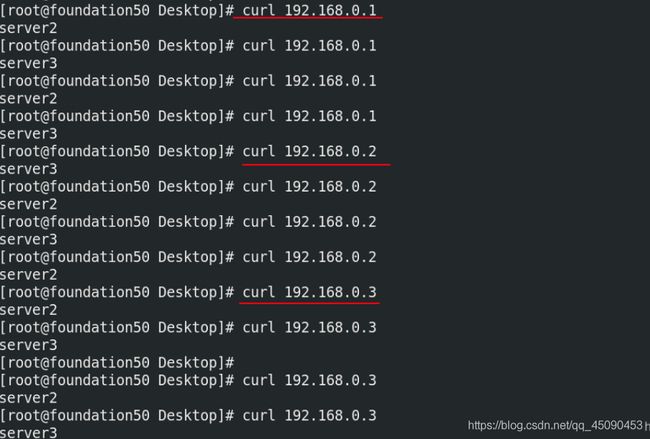

[root@server2 ~]# echo server2 > index.html

[root@server2 ~]# docker ps

[root@server2 ~]# docker cp index.html 6386513fc87f:/usr/share/nginx/html

[root@server3 ~]# echo server3 > index.html

[root@server3 ~]# docker cp index.html ecb82d7971bc:/usr/share/nginx/html

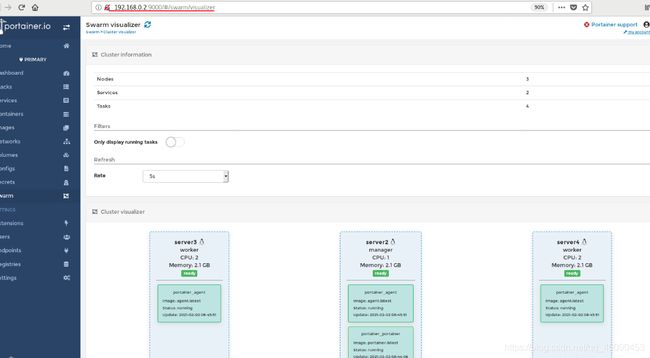

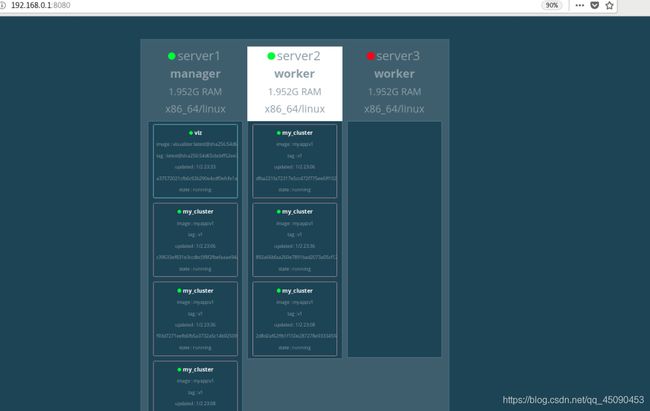

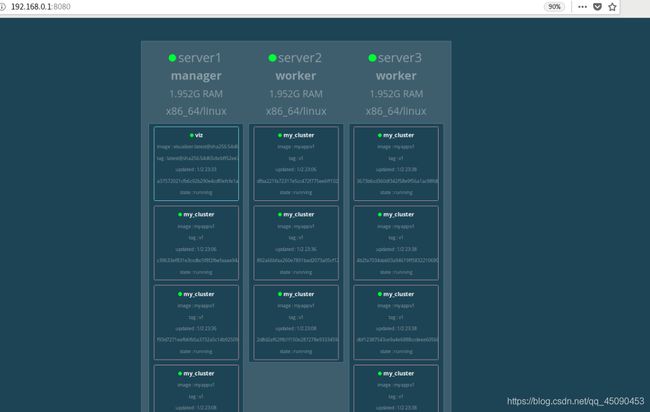

docker-swarm-visualizer.

Scaling replicas.

[root@server1 ~]# docker service scale my_cluster=4

[root@server1 ~]# docker service ps my_cluster

[root@server1 ~]# docker service rm my_cluster

my_cluster

[root@server1 ~]# docker service ls

On server1, server2, and server3:

[root@server1 ~]# docker pull ikubernetes/myapp:v1

[root@server1 ~]# docker tag ikubernetes/myapp:v1 myapp:v1

[root@server1 ~]# docker rmi ikubernetes/myapp:v1

[root@server2 ~]# docker pull ikubernetes/myapp:v1

[root@server3 ~]# docker pull ikubernetes/myapp:v1

[root@server1 ~]# docker service create --name my_cluster --replicas 2 -p 80:80 myapp:v1

[root@server1 ~]# docker service scale my_cluster=6

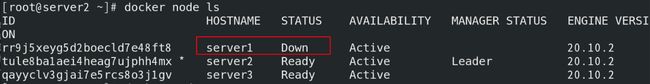

docker pull dockersamples/visualizer

docker tag dockersamples/visualizer:latest visualizer:latest

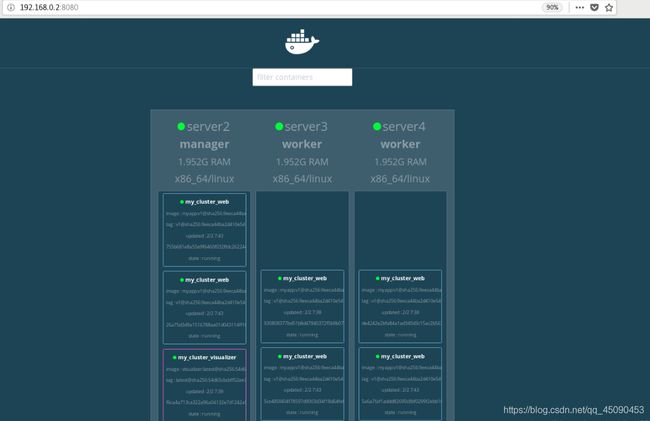

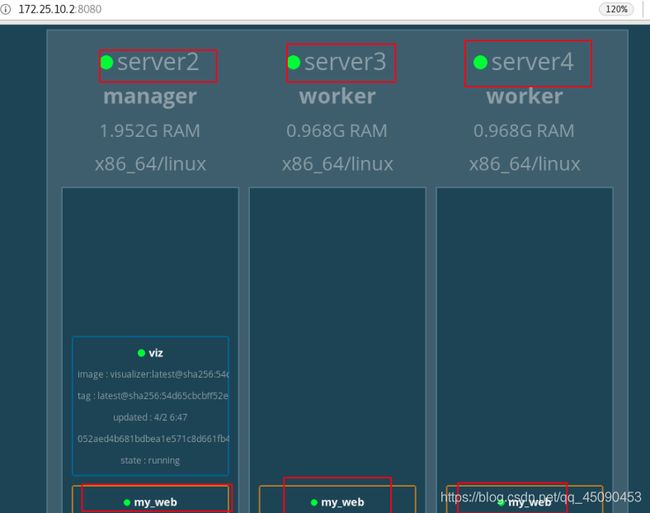

[root@server1 ~]# docker service create \
  --name=viz \
  --publish=8080:8080/tcp \
  --constraint=node.role==manager \
  --mount=type=bind,src=/var/run/docker.sock,dst=/var/run/docker.sock \
  dockersamples/visualizer

[root@server3 ~]# systemctl stop docker

[root@server3 ~]# systemctl start docker

[root@server1 ~]# docker service scale my_cluster=10

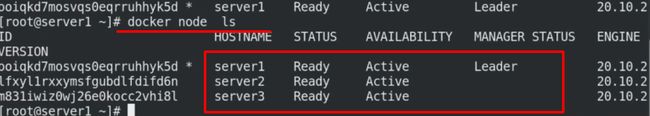

Promoting and demoting nodes

[root@server1 ~]# docker node promote server2

[root@server1 ~]# docker node demote server1

[root@server2 ~]# docker node ls

Removing a node and turning it into a local private registry

[root@server1 ~]# docker swarm leave

Node left the swarm.

[root@server2 ~]# docker node rm server1

[root@server2 ~]# docker swarm init

[root@server4 ~]# docker swarm join --token SWMTKN-1-1ym2b7zqmvj1trzvd6rwbx3mdc77wa6fmqrq0gdwtf7hhsyahz-5shc2cr07u7ewi7iph5jwvk4i 192.168.0.2:2377

[root@server2 ~]# docker node ls

[root@server2 ~]# docker ps

Use the Harbor registry set up on server1:

[root@server1 harbor]# ./install.sh --with-chartmuseum

[root@server2 ~]# cd /etc/docker/

Registry mirror configuration (on server2, server3, and server4):

[root@server2 docker]# vim daemon.json

{
  "registry-mirrors": ["https://reg.westos.org"]
}

[root@server2 docker]# systemctl reload docker

[root@server2 docker]# scp daemon.json server3:/etc/docker/

[root@server2 docker]# scp daemon.json server4:/etc/docker/

[root@server3 docker-ce]# systemctl reload docker

[root@server4 docker-ce]# systemctl reload docker
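The mirror configuration can also be written without opening an editor, which is convenient when the same file goes to several nodes. A minimal sketch: `write_mirror_config` is a hypothetical helper, and it writes to a scratch path here rather than to /etc/docker/daemon.json.

```shell
#!/bin/sh
# write_mirror_config FILE MIRROR_URL
# Hypothetical helper: writes a minimal daemon.json with one registry mirror.
# It overwrites FILE, so merge by hand if the file already has other settings.
write_mirror_config() {
  cat > "$1" <<EOF
{
  "registry-mirrors": ["$2"]
}
EOF
}

# Scratch path for illustration; the real file is /etc/docker/daemon.json
example="${TMPDIR:-/tmp}/daemon.json.example"
write_mirror_config "$example" "https://reg.westos.org"
cat "$example"
```

After the real file is in place, the daemon still has to be reloaded as shown above.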

Copy the certificates:

[root@server2 docker]# scp -r certs.d/ server3:/etc/docker/

[root@server2 docker]# scp -r certs.d/ server4:/etc/docker/

[root@server3 docker-ce]# vim /etc/hosts

Every node needs a hosts entry that resolves the registry name:

[root@server4 docker-ce]# vim /etc/hosts

192.168.0.1 server1 reg.westos.org
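Editing /etc/hosts by hand on every node is easy to get wrong or to repeat. The sketch below appends the entry only when it is missing; `add_host_entry` is a hypothetical helper, and the example runs against a scratch file instead of the real /etc/hosts.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME...
# Hypothetical helper: appends "IP NAME..." to FILE unless an identical line
# is already present, so running it twice does not duplicate the entry.
add_host_entry() {
  file="$1"; shift
  entry="$*"
  grep -qxF "$entry" "$file" 2>/dev/null || printf '%s\n' "$entry" >> "$file"
}

# Scratch file for illustration; on a real node FILE would be /etc/hosts
hosts_file="${TMPDIR:-/tmp}/hosts.example"
: > "$hosts_file"
add_host_entry "$hosts_file" 192.168.0.1 server1 reg.westos.org
add_host_entry "$hosts_file" 192.168.0.1 server1 reg.westos.org   # second call is a no-op
cat "$hosts_file"
```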

[root@server1 ~]# docker tag myapp:v1 reg.westos.org/library/myapp:v1

[root@server1 ~]# docker push reg.westos.org/library/myapp:v1
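The tag used for the push follows the pattern registry/project/name:tag. As a small illustration of that naming rule, the hypothetical helper `registry_ref` below builds the target reference from an upstream image name; dropping the upstream namespace and defaulting to the latest tag are assumptions made for this sketch.

```shell
#!/bin/sh
# registry_ref REGISTRY PROJECT IMAGE[:TAG]
# Hypothetical helper: prints the reference an image needs before it can be
# pushed to a private registry. The upstream namespace (e.g. "ikubernetes/")
# is dropped, and a missing tag defaults to "latest".
registry_ref() {
  name="${3##*/}"                # strip everything up to the last "/"
  case "$name" in
    *:*) ;;                      # tag already present
    *)   name="$name:latest" ;;
  esac
  printf '%s/%s/%s\n' "$1" "$2" "$name"
}

registry_ref reg.westos.org library ikubernetes/myapp:v1
# prints reg.westos.org/library/myapp:v1
```

The result could be passed straight to docker tag and docker push, for example `docker tag myapp:v1 "$(registry_ref reg.westos.org library myapp:v1)"`.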

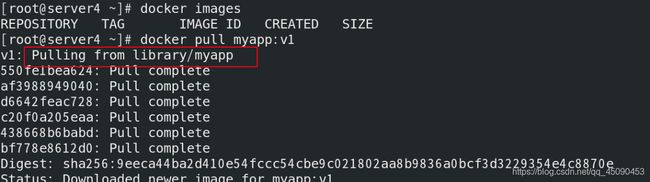

[root@server4 ~]# docker pull myapp:v1

With a private registry in place, deployments are faster and the nodes pull images automatically.

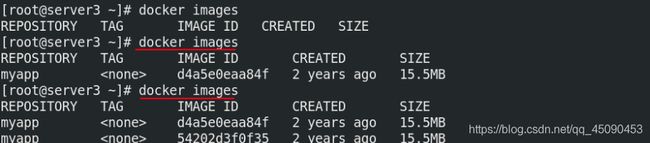

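When Swarm pulls an image automatically, its local tag shows as <none>. The service resolves the tag against the registry and the nodes pull that exact version, so they always run what the registry currently holds.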

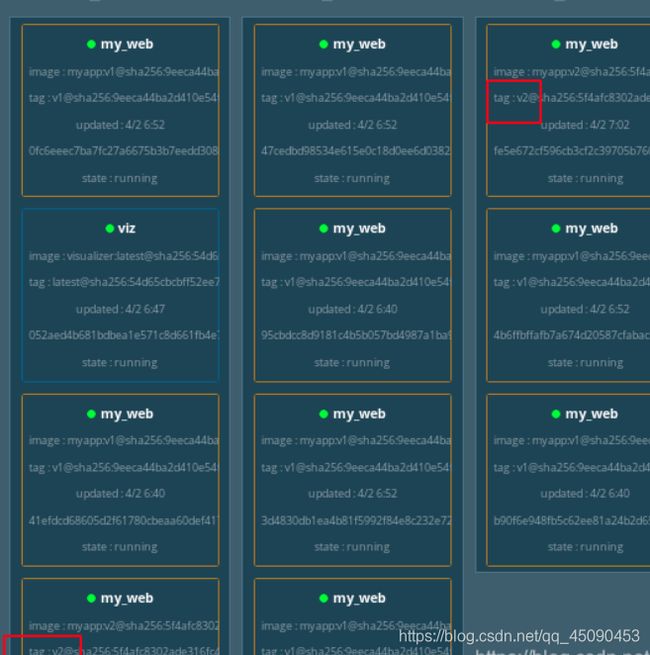

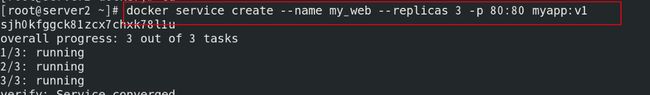

Rolling service updates

[root@server2 docker]# docker service create --name my_web --replicas 3 -p 80:80 myapp:v1

[root@server3 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

myapp <none> d4a5e0eaa84f 2 years ago 15.5MB

[root@server4 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

myapp <none> d4a5e0eaa84f 2 years ago 15.5MB

[root@server2 docker]# docker service scale my_web=10

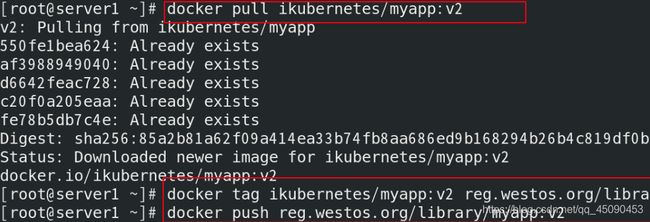

[root@server1 ~]# docker pull ikubernetes/myapp:v2

[root@server1 ~]# docker tag ikubernetes/myapp:v2 reg.westos.org/library/myapp:v2

[root@server1 ~]# docker push reg.westos.org/library/myapp:v2

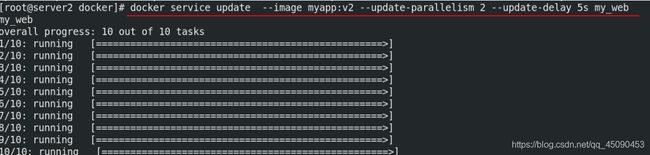

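Update a fixed number of tasks at a time, pausing between batches, until every task has been updated:

docker service update --image myapp:v2 --update-parallelism 2 --update-delay 5s my_web

--image: the image to update to

--update-parallelism: the maximum number of tasks updated at the same time

--update-delay: the delay between update batches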
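To get a feel for how the parallelism and delay settings interact, the arithmetic can be sketched in a few lines. `update_plan` is a hypothetical helper; it assumes a parallelism of at least 1 and ignores the time each task needs to start, so the wait it reports is only a lower bound.

```shell
#!/bin/sh
# update_plan REPLICAS PARALLELISM DELAY_SECONDS
# Prints how many batches a rolling update needs and the total time spent
# waiting between batches. Assumes PARALLELISM >= 1; task start-up time is
# ignored, so min_wait is a lower bound rather than a prediction.
update_plan() {
  replicas="$1"; parallelism="$2"; delay="$3"
  batches=$(( (replicas + parallelism - 1) / parallelism ))   # ceiling division
  wait_total=$(( (batches - 1) * delay ))                      # one delay between consecutive batches
  echo "batches=$batches min_wait=${wait_total}s"
}

# my_web scaled to 10 replicas, --update-parallelism 2, --update-delay 5s
update_plan 10 2 5
# prints batches=5 min_wait=20s
```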

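Deploying services with docker stack instead of docker-compose

Compose file reference documentation.

Some differences between docker stack and docker-compose:

- docker stack ignores the build instruction. You cannot build a new image with the stack command; the image must already exist. That makes docker-compose the better fit for development.
- Docker Compose (the standalone docker-compose tool, v1) is a Python project. It became popular, especially among Docker enthusiasts, and was gradually absorbed into the Docker product line, but it is still written in Python and runs on top of the Docker Engine.
- Internally it drives containers through the Docker API, so docker-compose still has to be installed separately alongside Docker on your machine.
- Docker stack is built into the Docker Engine. No extra package is needed; stacks are simply part of swarm mode. It accepts the same kind of compose file, but the processing happens in Go code inside the Docker Engine. Before using the stack commands you must create at least a single-node swarm, which is not much of a burden.
- If your docker-compose.yml is written for version 2 (version: "2"), docker stack will not accept it. The file must declare version 3 or later. Docker Compose, by contrast, handles both version 2 and version 3 files.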
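Two of the differences above (the version requirement and the ignored build instruction) can be checked before deploying. The sketch below is a rough pre-flight check that follows the rules as stated in this list; `check_stack_file` is a hypothetical helper that matches text and is not a YAML parser.

```shell
#!/bin/sh
# check_stack_file FILE
# Hypothetical pre-flight check before `docker stack deploy`:
#   - fails if the top-level "version" is missing or below 3
#   - warns if a "build:" key is present, since stack ignores it
# Plain text matching only; unusual YAML layouts may not be recognised.
check_stack_file() {
  major=$(sed -n "s/^version:[[:space:]]*[\"']\{0,1\}\([0-9][0-9]*\).*/\1/p" "$1" | head -n 1)
  if [ -z "$major" ] || [ "$major" -lt 3 ]; then
    echo "unsupported: version must be at least 3"
    return 1
  fi
  if grep -q '^[[:space:]]*build:' "$1"; then
    echo "warning: build is ignored by docker stack"
  fi
  echo "ok: version $major"
}

# Example against a scratch file
sample="${TMPDIR:-/tmp}/stack-check-example.yml"
printf 'version: "2"\nservices:\n  web:\n    build: .\n' > "$sample"
check_stack_file "$sample" || echo "fix the file before deploying"
```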

[root@server1 ~]# docker images

[root@server1 ~]# docker tag dockersamples/visualizer:latest reg.westos.org/library/visualizer:latest

[root@server1 ~]# docker push reg.westos.org/library/visualizer:latest

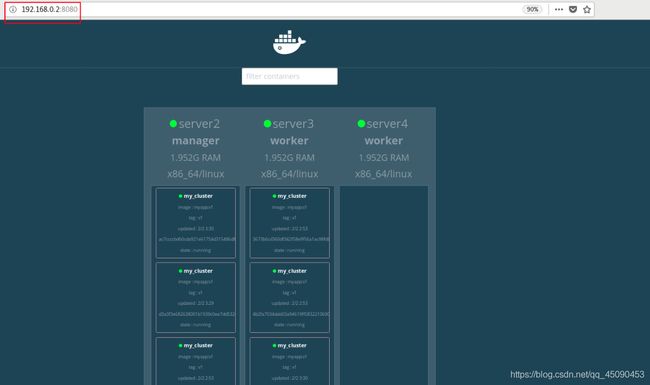

[root@server2 ~]# mkdir compose

[root@server2 ~]# cd compose/

[root@server2 compose]# ls

[root@server2 compose]# vim docker-compose.yml

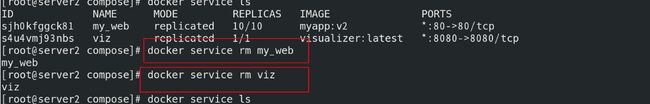

[root@server2 compose]# docker service ls

[root@server2 compose]# docker service rm my_web

[root@server2 compose]# docker service rm viz

[root@server2 compose]# docker stack deploy -c docker-compose.yml my_cluster

[root@server2 compose]# docker stack ls

[root@server2 compose]# docker stack ps my_cluster

[root@server2 compose]# docker stack services my_cluster

[root@server2 compose]# pwd

/root/compose   ### a dedicated directory for the compose file

[root@server2 compose]# ls

docker-compose.yml

[root@server2 compose]# cat docker-compose.yml

version: '3.9'
services:
  web:
    image: myapp:v1 ## change the image tag and redeploy to get a rolling update
    networks:
      - mynet
    deploy:
      replicas: 2 ## number of replicas
      update_config:
        parallelism: 2 # tasks updated per batch
        delay: 10s # delay between batches
      restart_policy:
        condition: on-failure
  visualizer:
    image: visualizer:latest
    ports:
      - "8080:8080"
    stop_grace_period: 1m30s
    volumes:
      - "/var/run/docker.sock:/var/run/docker.sock"
    deploy:
      placement:
        constraints:
          - "node.role == manager"
networks:
  mynet:

[root@server2 compose]# vim docker-compose.yml

replicas: 6

[root@server2 compose]# docker stack deploy -c docker-compose.yml my_cluster

[root@server2 compose]# docker stack rm my_cluster
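The replica change made in the editor above can also be scripted, which helps when the same stack is redeployed repeatedly. A minimal sketch: `set_replicas` is a hypothetical helper that rewrites every replicas line in the file, which is fine for the file shown here because only the web service declares one.

```shell
#!/bin/sh
# set_replicas FILE COUNT
# Hypothetical helper: sets every "replicas:" line in FILE to COUNT, keeping
# the indentation and any trailing comment. Writes through a temp file rather
# than `sed -i`, whose syntax differs between GNU and BSD sed.
set_replicas() {
  tmp="$1.tmp.$$"
  sed "s/^\([[:space:]]*replicas:\)[[:space:]]*[0-9][0-9]*/\1 $2/" "$1" > "$tmp" && mv "$tmp" "$1"
}

# Example against a scratch copy
sample="${TMPDIR:-/tmp}/replicas-example.yml"
printf '    deploy:\n      replicas: 2 ## number of replicas\n' > "$sample"
set_replicas "$sample" 6
cat "$sample"
```

Redeploying with the same docker stack deploy command then applies the new replica count.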

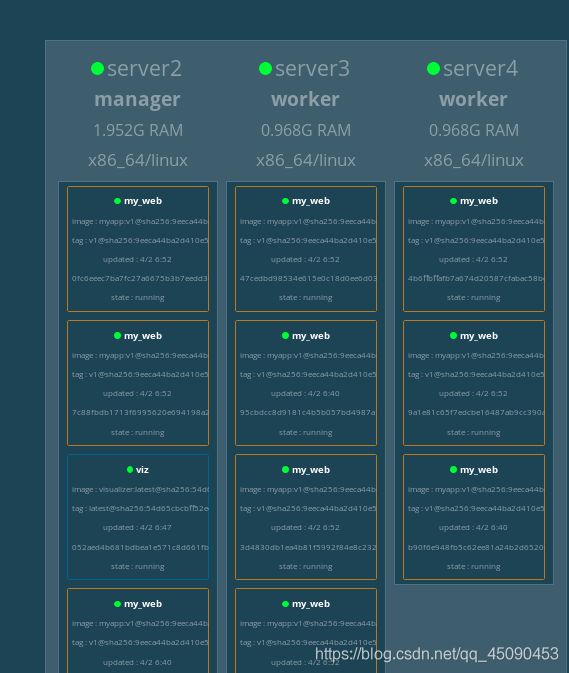

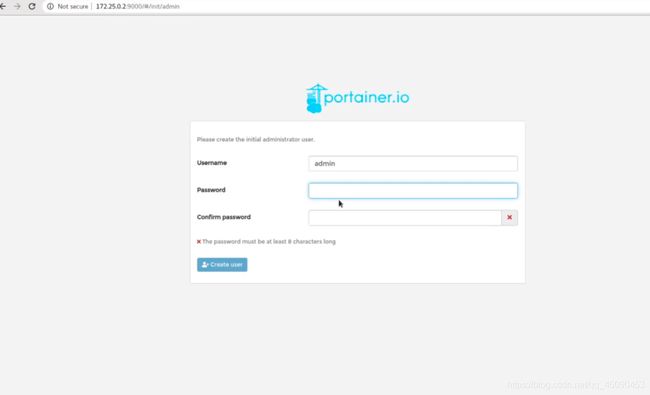

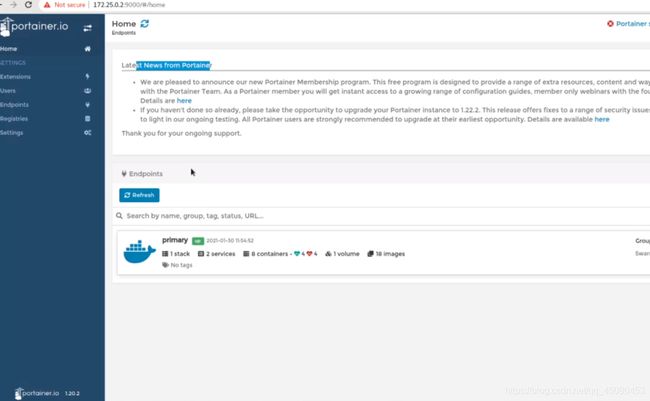

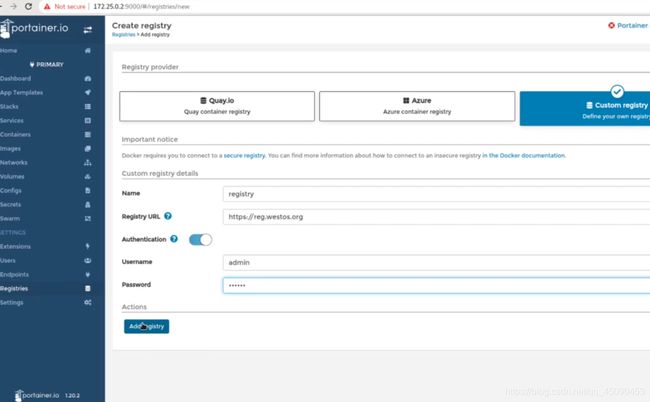

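Portainer, a visual container management tool

Portainer reference documentation.

Push the required images to the Harbor registry.

The stack file can be downloaded directly from: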

https://downloads.portainer.io/portainer-agent-stack.yml

docker pull portainer/portainer

[root@server1 ~]# mkdir portainer

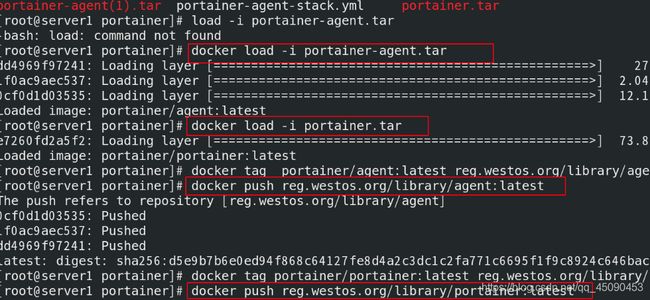

[root@server1 ~]# mv portainer-* portainer

[root@server1 portainer]# ls

portainer-agent-stack.yml portainer-agent.tar portainer.tar

[root@server1 portainer]# vim portainer-agent-stack.yml

[root@server1 portainer]# docker load -i portainer.tar

[root@server1 portainer]# docker load -i portainer-agent.tar

[root@server1 portainer]# docker tag portainer/portainer:latest reg.westos.org/library/portainer:latest

[root@server1 portainer]# docker push reg.westos.org/library/portainer:latest

[root@server1 portainer]# docker tag portainer/agent:latest reg.westos.org/library/agent:latest

[root@server1 portainer]# docker push reg.westos.org/library/agent:latest

[root@server2 ~]# mv portainer-agent-stack.yml compose/

[root@server2 ~]# cd compose/

[root@server2 compose]# vim portainer-agent-stack.yml

[root@server2 compose]# docker stack rm my_cluster

[root@server2 compose]# docker stack deploy -c portainer-agent-stack.yml portainer

[root@server2 compose]# docker stack ps portainer

Download portainer-agent-stack.yml and edit it:

[root@server3 compose]# cat portainer-agent-stack.yml

version: '3.2'
services:
  agent:
    image: agent #### the image name must be changed
    environment:
      # REQUIRED: Should be equal to the service name prefixed by "tasks." when
      # deployed inside an overlay network
      AGENT_CLUSTER_ADDR: tasks.agent
      # AGENT_PORT: 9001
      # LOG_LEVEL: debug
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - /var/lib/docker/volumes:/var/lib/docker/volumes
    networks:
      - agent_network
    deploy:
      mode: global
      placement:
        constraints: [node.platform.os == linux]
  portainer:
    image: portainer
    command: -H tcp://tasks.agent:9001 --tlsskipverify
    ports:
      - "9000:9000"
    volumes:
      - portainer_data:/data
    networks:
      - agent_network
    deploy:
      mode: replicated
      replicas: 1
      placement:
        constraints: [node.role == manager]
networks:
  agent_network:
    driver: overlay
    attachable: true
volumes:
  portainer_data:
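The image names in the stack file were shortened so that they resolve through the private registry. That edit can be sketched with sed. This assumes the downloaded file refers to the images as portainer/agent and portainer/portainer, matching the tags loaded earlier; `strip_image_namespace` is a hypothetical helper that reads the file on stdin.

```shell
#!/bin/sh
# strip_image_namespace
# Hypothetical filter: for each "image:" line, removes the first path
# component (e.g. "portainer/") so the short name is pulled via the mirror.
# Lines whose image has no "/" are left unchanged.
strip_image_namespace() {
  sed 's#^\([[:space:]]*image:[[:space:]]*\)[^[:space:]/]*/#\1#'
}

# Example on two lines shaped like the upstream file (assumed names)
printf '    image: portainer/agent\n    image: portainer/portainer\n' | strip_image_namespace
```

Applied to a real file, the output would be redirected to a new file and checked before running docker stack deploy.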