Integrating Elasticsearch with Spring Boot: Crawling Data and Building a Simple Search

I. Create the project

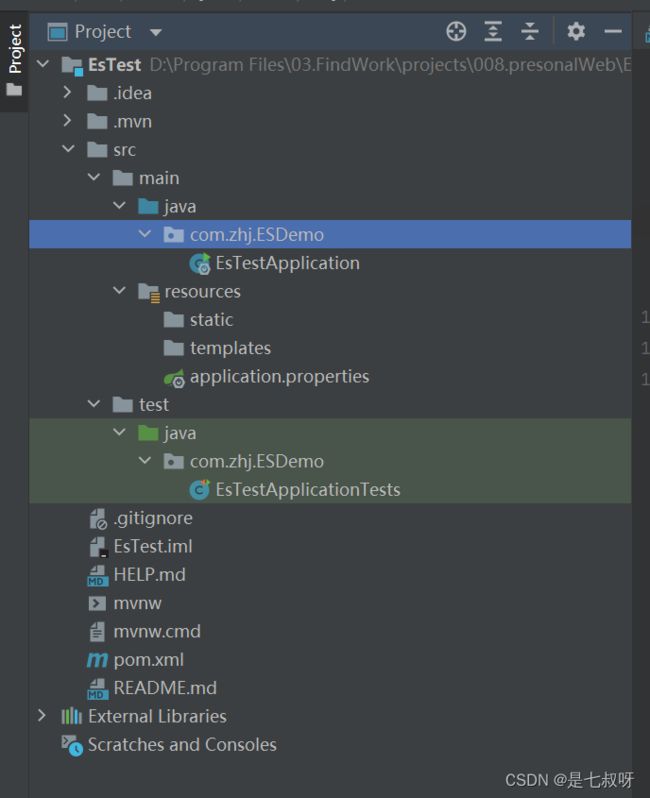

After creating the project in IntelliJ IDEA, the project structure looks like this:

II. Fill in the project files

2.0 Under the com.zhj.ESDemo package, create the config, controller, pojo, service, and utils packages.

After step II is complete, the project structure is as follows:

2.1 Modify the configuration file

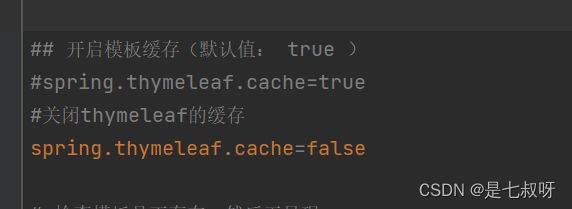

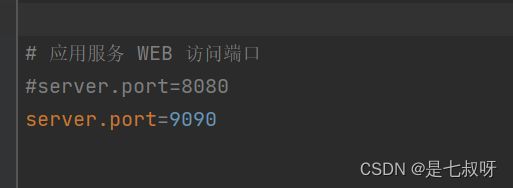

- application.properties

This mainly disables Thymeleaf's template cache and changes the port the web server listens on.

After the changes, my application.properties is:

```properties
# Application name
spring.application.name=EsTest

# THYMELEAF (ThymeleafAutoConfiguration)
# Template caching (default: true); disabled so template edits show up without a restart
spring.thymeleaf.cache=false
# Check that the template exists before rendering it
spring.thymeleaf.check-template=true
# Check that the template location exists (default: true)
spring.thymeleaf.check-template-location=true
# Content-Type value (default: text/html); in Spring Boot 2.x this key lives under "servlet"
spring.thymeleaf.servlet.content-type=text/html
# Enable Thymeleaf view resolution for MVC (default: true)
spring.thymeleaf.enabled=true
# Template encoding
spring.thymeleaf.encoding=UTF-8
# Comma-separated list of view names to exclude from resolution
spring.thymeleaf.excluded-view-names=
# Template mode (default: HTML); the old value HTML5 is deprecated in Thymeleaf 3
spring.thymeleaf.mode=HTML
# Prefix prepended to view names when building a URL (default: classpath:/templates/)
spring.thymeleaf.prefix=classpath:/templates/
# Suffix appended to view names when building a URL (default: .html)
spring.thymeleaf.suffix=.html

# Web server port (default: 8080)
server.port=9090
```

2.2 In the config package, create the esConfig class:

- esConfig

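```java
package com.zhj.ESDemo.config;

import org.apache.http.HttpHost;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

/**
 * Elasticsearch client configuration.
 *
 * @author zhouh
 * @version 1.0
 * Created 2022/7/24 20:35
 */
@Configuration // plays the role of a Spring XML configuration file
public class esConfig {

    // Registers the RestHighLevelClient bean that ContentService injects.
    // Assumes a single local ES node at localhost:9200 over plain HTTP.
    @Bean
    public RestHighLevelClient restHighLevelClient() {
        return new RestHighLevelClient(
                RestClient.builder(new HttpHost("localhost", 9200, "http")));
    }
}
```

2.3 Configure the pom.xml file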

- pom.xml

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.2.5.RELEASE</version>
        <relativePath/>
    </parent>
    <groupId>com.cjw</groupId>
    <artifactId>stu-es-jd</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>stu-es-jd</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>1.8</java.version>
        <elasticsearch.version>7.6.1</elasticsearch.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-data-elasticsearch</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-thymeleaf</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-devtools</artifactId>
            <scope>runtime</scope>
            <optional>true</optional>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-configuration-processor</artifactId>
            <optional>true</optional>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>1.2.62</version>
        </dependency>
        <dependency>
            <groupId>org.jsoup</groupId>
            <artifactId>jsoup</artifactId>
            <version>1.10.2</version>
        </dependency>
        <dependency>
            <groupId>com.thetransactioncompany</groupId>
            <artifactId>java-property-utils</artifactId>
            <version>1.9</version>
        </dependency>
        <dependency>
            <groupId>com.thetransactioncompany</groupId>
            <artifactId>cors-filter</artifactId>
            <version>1.7</version>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <configuration>
                    <excludes>
                        <exclude>
                            <groupId>org.projectlombok</groupId>
                            <artifactId>lombok</artifactId>
                        </exclude>
                    </excludes>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>
```

2.4 In the pojo package, create the Content class

- Content

```java
package com.zhj.ESDemo.pojo;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

/**
 * One crawled JD product, stored as a document in the jd_goods index.
 *
 * @author zhouh
 * @version 1.0
 * Created 2022/7/24 20:44
 */
@Data               // getters, setters, equals/hashCode and toString
@NoArgsConstructor  // lets JSON libraries such as fastjson instantiate it
@AllArgsConstructor // Content(title, img, price)
public class Content {

    private String title;
    private String img;
    private String price;
}
```

2.5 In the utils package, create the HtmlParseUtil class

- HtmlParseUtil

```java
package com.zhj.ESDemo.utils;

import com.zhj.ESDemo.pojo.Content;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

import java.io.IOException;
import java.net.URL;
import java.net.URLEncoder;
import java.util.ArrayList;
import java.util.List;

/**
 * Crawls JD search result pages with jsoup.
 *
 * @author zhouh
 * @version 1.0
 * Created 2022/7/24 20:47
 */
public class HtmlParseUtil {

    public static void main(String[] args) throws IOException {
        // Method reference; equivalent to: for (Content c : list) { System.out.println(c); }
        new HtmlParseUtil().parseJD("中文").forEach(System.out::println);
    }

    public List<Content> parseJD(String keyword) throws IOException {
        // Requires network access; content the page loads later via Ajax is not in this HTML.
        // Percent-encode the keyword so non-ASCII input such as Chinese is sent correctly.
        String url = "https://search.jd.com/Search?keyword=" + URLEncoder.encode(keyword, "UTF-8");
        // Fetch and parse the page (30 s timeout); jsoup returns a Document like the browser's document object
        Document document = Jsoup.parse(new URL(url), 30000);
        // The DOM methods you would use in JavaScript are available here too
        Element goodsList = document.getElementById("J_goodsList");
        List<Content> contents = new ArrayList<>();
        if (goodsList == null) {
            // No result list, e.g. JD served a login or verification page instead
            return contents;
        }
        // Each product is an <li> inside J_goodsList
        Elements items = goodsList.getElementsByTag("li");
        for (Element element : items) {
            // Images are lazy-loaded, so the real URL is in data-lazy-img rather than src
            String img = element.getElementsByTag("img").eq(0).attr("data-lazy-img");
            String price = element.getElementsByClass("p-price").eq(0).text();
            String title = element.getElementsByClass("p-name").eq(0).text();
            contents.add(new Content(title, img, price));
        }
        return contents;
    }
}
```
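The keyword is concatenated straight into the query string, so a non-ASCII keyword such as 中文 should be percent-encoded first. Below is a minimal pure-JDK sketch of just that step; the class and method names (JdSearchUrl, build) are hypothetical, and the Java 8 overload URLEncoder.encode(String, String) is used to match the project's java.version of 1.8.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

// Hypothetical helper: builds the JD search URL used by parseJD with a percent-encoded keyword.
final class JdSearchUrl {

    private static final String BASE = "https://search.jd.com/Search?keyword=";

    private JdSearchUrl() {
    }

    static String build(String keyword) {
        try {
            // URLEncoder emits application/x-www-form-urlencoded text, suitable for a query string
            return BASE + URLEncoder.encode(keyword, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // Every JVM supports UTF-8, so this branch is not expected to run
            throw new IllegalStateException(e);
        }
    }
}
```

For example, JdSearchUrl.build("中文") yields https://search.jd.com/Search?keyword=%E4%B8%AD%E6%96%87, ASCII keywords pass through unchanged, and a space becomes "+".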

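2.6 In the service package, create the business-logic class ContentService.

The search uses a full-text match query, QueryBuilders.matchQuery("title", keyword), and returns each hit's source JSON directly without highlighting (highlighting kept failing for me, and I could not fix it after a long search online). A likely cause in that highlighting attempt: it queried with matchAllQuery(), which gives the highlighter no terms to mark, so hit.getHighlightFields().get("title") returned null and reading its fragments threw a NullPointerException; in addition, the fragment was put into map just before map was reassigned to hit.getSourceAsMap(), which discarded it.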
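The core of a working highlight merge is small: start from a copy of each hit's source map and overwrite title only when the hit actually carries highlight fragments for it. The sketch below models that with plain JDK collections so it runs without Elasticsearch; HighlightMerge and mergeTitle are hypothetical names, and the fragment list stands in for the strings you would read from hit.getHighlightFields().get("title").fragments() in the 7.x high-level client.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper: merges "title" highlight fragments into a copy of a hit's source map.
final class HighlightMerge {

    private HighlightMerge() {
    }

    /**
     * @param source    the hit's _source as a map (like SearchHit.getSourceAsMap())
     * @param fragments highlight fragments for "title", or null when the hit has none
     */
    static Map<String, Object> mergeTitle(Map<String, Object> source, List<String> fragments) {
        // Copy first, then modify; reassigning the map afterwards would throw the highlight away
        Map<String, Object> result = new HashMap<>(source);
        if (fragments == null || fragments.isEmpty()) {
            // No highlight (e.g. a match_all query): keep the stored title instead of failing
            return result;
        }
        StringBuilder sb = new StringBuilder();
        for (String fragment : fragments) {
            sb.append(fragment);
        }
        result.put("title", sb.toString());
        return result;
    }
}
```

With Elasticsearch's default <em> and </em> tags, a fragment such as "<em>Java</em> book" replaces the stored title, while a null fragment list leaves the copy unchanged and the other fields (img, price) are always kept.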

- ContentService

```java
package com.zhj.ESDemo.service;

import com.alibaba.fastjson.JSON;
import com.zhj.ESDemo.pojo.Content;
import com.zhj.ESDemo.utils.HtmlParseUtil;
import org.elasticsearch.action.bulk.BulkRequest;
import org.elasticsearch.action.bulk.BulkResponse;
import org.elasticsearch.action.index.IndexRequest;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.common.text.Text;
import org.elasticsearch.common.unit.TimeValue;
import org.elasticsearch.common.xcontent.XContentType;
import org.elasticsearch.index.query.QueryBuilder;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.index.query.TermQueryBuilder;
import org.elasticsearch.search.SearchHit;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.elasticsearch.search.fetch.subphase.highlight.HighlightBuilder;
import org.elasticsearch.search.fetch.subphase.highlight.HighlightField;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.TimeUnit;

/**
 * Business logic: crawl data into ES and search it.
 *
 * @author zhouh
 * @version 1.0
 * Created 2022/7/24 20:46
 */
@Service
public class ContentService {

    @Autowired
    private RestHighLevelClient restHighLevelClient;

    /**
     * 1. Crawl data and bulk-index it into the jd_goods index
     *    (ES creates the index on the first write if it does not exist).
     */
    public Boolean parseContext(String keywords) throws IOException {
        List<Content> contents = new HtmlParseUtil().parseJD(keywords);
        if (contents.isEmpty()) {
            // A bulk request with no operations fails validation, so there is nothing to send
            return false;
        }
        BulkRequest bulkRequest = new BulkRequest("jd_goods");
        bulkRequest.timeout("2m");
        for (Content content : contents) {
            // No .id(...) is set, so ES generates a random document id
            bulkRequest.add(new IndexRequest("jd_goods")
                    .source(JSON.toJSONString(content), XContentType.JSON));
        }
        BulkResponse bulk = restHighLevelClient.bulk(bulkRequest, RequestOptions.DEFAULT);
        return !bulk.hasFailures();
    }

    // 2a. First attempt: exact term query (not called by the controller).
    // title is an analyzed text field and the standard analyzer indexes Chinese
    // one character per token, so a multi-character term usually matches nothing.
    public List<Map<String, Object>> serchPage(String keyword, int pageNo, int pageSize) throws IOException {
        if (pageNo < 1) {
            pageNo = 1;
        }
        SearchRequest searchRequest = new SearchRequest("jd_goods");
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        TermQueryBuilder termQuery = QueryBuilders.termQuery("title", keyword);
        sourceBuilder.query(termQuery);
        sourceBuilder.timeout(new TimeValue(60, TimeUnit.SECONDS));
        // from() is a document offset, not a page number
        sourceBuilder.from((pageNo - 1) * pageSize);
        sourceBuilder.size(pageSize);
        searchRequest.source(sourceBuilder);
        SearchResponse response = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        List<Map<String, Object>> list = new ArrayList<>();
        for (SearchHit hit : response.getHits()) {
            list.add(hit.getSourceAsMap());
        }
        return list;
    }

    // 2b. Match query with highlighting on title (experiment, not called by the controller)
    public List<Map<String, Object>> serchPageBuilder1(String keyword, int pageNo, int pageSize) throws IOException {
        if (pageNo < 1) {
            pageNo = 1;
        }
        // 1. Build the search request
        SearchRequest searchRequest = new SearchRequest("jd_goods");
        // 2. Set the search conditions; highlighting needs query terms, which matchAllQuery() lacks
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        sourceBuilder.query(QueryBuilders.matchQuery("title", keyword));
        sourceBuilder.timeout(new TimeValue(60, TimeUnit.SECONDS));
        sourceBuilder.from((pageNo - 1) * pageSize);
        sourceBuilder.size(pageSize);
        HighlightBuilder highlightBuilder = new HighlightBuilder();
        highlightBuilder.field("title");
        highlightBuilder.requireFieldMatch(true);
        // Markup wrapped around each matched term; empty strings add no visible markup
        highlightBuilder.preTags("");
        highlightBuilder.postTags("");
        sourceBuilder.highlighter(highlightBuilder);
        // 3. Attach the conditions to the request
        searchRequest.source(sourceBuilder);
        // 4. Execute the search
        SearchResponse response = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        System.out.println("Hits on this page: " + response.getHits().getHits().length);
        // 5. Collect results, replacing title with its highlighted version when present
        List<Map<String, Object>> list = new ArrayList<>();
        for (SearchHit hit : response.getHits()) {
            // Take the source map first so the highlighted title is not overwritten afterwards
            Map<String, Object> map = hit.getSourceAsMap();
            HighlightField titleHighlight = hit.getHighlightFields().get("title");
            if (titleHighlight != null) { // null when this hit has no highlight for title
                StringBuilder sb = new StringBuilder();
                for (Text fragment : titleHighlight.fragments()) {
                    sb.append(fragment.string());
                }
                map.put("title", sb.toString());
            }
            list.add(map);
        }
        return list;
    }

    // 2c. Search used by ContentController: match query, no highlighting
    public List<Map<String, Object>> serchPageBuilder(String keyword, int pageNo, int pageSize) throws IOException {
        if (pageNo < 1) {
            pageNo = 1;
        }
        // 1. Build the search request
        SearchRequest searchRequest = new SearchRequest("jd_goods");
        // 2. Full-text match query on title (works for single- and multi-character keywords)
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        QueryBuilder queryBuilder = QueryBuilders.matchQuery("title", keyword);
        sourceBuilder.query(queryBuilder);
        sourceBuilder.timeout(new TimeValue(60, TimeUnit.SECONDS));
        sourceBuilder.from((pageNo - 1) * pageSize);
        sourceBuilder.size(pageSize);
        // 3. Attach the conditions to the request
        searchRequest.source(sourceBuilder);
        // 4. Execute the search
        SearchResponse response = restHighLevelClient.search(searchRequest, RequestOptions.DEFAULT);
        System.out.println("Hits on this page: " + response.getHits().getHits().length);
        // 5. Return each hit's _source as a map
        List<Map<String, Object>> list = new ArrayList<>();
        for (SearchHit hit : response.getHits()) {
            list.add(hit.getSourceAsMap());
        }
        return list;
    }
}
```
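SearchSourceBuilder.from() takes a document offset, not a page number, so a 1-based pageNo has to be converted before it is passed in; passing pageNo directly skips the first hit on page 1 and makes later pages overlap. A minimal sketch of the conversion, where PageOffset is a hypothetical name:

```java
// Hypothetical helper: converts a 1-based page number into the offset expected by from().
final class PageOffset {

    private PageOffset() {
    }

    static int offset(int pageNo, int pageSize) {
        if (pageSize < 1) {
            throw new IllegalArgumentException("pageSize must be positive");
        }
        // Treat anything below 1 as the first page, like the service methods do
        int page = Math.max(pageNo, 1);
        return (page - 1) * pageSize;
    }
}
```

PageOffset.offset(1, 10) is 0 and PageOffset.offset(3, 10) is 20. Note that by default Elasticsearch rejects requests where from + size exceeds 10,000 (the index.max_result_window setting).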

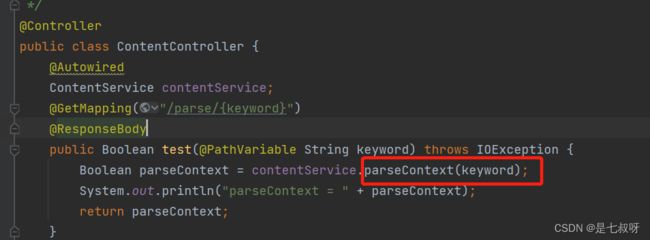

2.7 In the controller package, create the ContentController class to handle front-end requests

- ContentController

```java
package com.zhj.ESDemo.controller;

import com.zhj.ESDemo.service.ContentService;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Controller;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PathVariable;
import org.springframework.web.bind.annotation.ResponseBody;

import java.io.IOException;
import java.util.List;
import java.util.Map;

/**
 * Handles front-end requests.
 *
 * @author zhouh
 * @version 1.0
 * Created 2022/7/24 20:42
 */
@Controller
public class ContentController {

    @Autowired
    ContentService contentService;

    // Crawl JD results for the keyword and index them into ES; returns true on success
    @GetMapping("/parse/{keyword}")
    @ResponseBody
    public Boolean test(@PathVariable("keyword") String keyword) throws IOException {
        Boolean parseContext = contentService.parseContext(keyword);
        System.out.println("parseContext = " + parseContext);
        return parseContext;
    }

    // Search the jd_goods index; page numbers start at 1.
    // Naming every path variable avoids relying on the -parameters compiler flag.
    @GetMapping("/search/{keyword}/{pageNO}/{pageSize}")
    @ResponseBody
    public List<Map<String, Object>> search(@PathVariable("keyword") String keyword,
                                            @PathVariable("pageNO") int pageNo,
                                            @PathVariable("pageSize") int pageSize) throws IOException {
        return contentService.serchPageBuilder(keyword, pageNo, pageSize);
    }
}
```
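Both endpoints take the keyword as a path segment, so a client calling them should percent-encode non-ASCII keywords. A small sketch using the JDK's multi-argument java.net.URI constructor, which quotes characters that are illegal in a path, and toASCIIString(), which encodes non-ASCII characters as UTF-8; host and port follow server.port=9090, and ApiUrls is a hypothetical name.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical client-side helper that builds URLs for the two ContentController endpoints.
final class ApiUrls {

    private static final String HOST = "localhost";
    private static final int PORT = 9090; // matches server.port in application.properties

    private ApiUrls() {
    }

    static String parse(String keyword) {
        return build("/parse/" + keyword);
    }

    static String search(String keyword, int pageNo, int pageSize) {
        return build("/search/" + keyword + "/" + pageNo + "/" + pageSize);
    }

    private static String build(String path) {
        try {
            // The constructor quotes illegal path characters (e.g. space -> %20);
            // toASCIIString() then percent-encodes remaining non-ASCII characters as UTF-8
            return new URI("http", null, HOST, PORT, path, null, null).toASCIIString();
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("Invalid path: " + path, e);
        }
    }
}
```

ApiUrls.search("中文", 1, 10) gives http://localhost:9090/search/%E4%B8%AD%E6%96%87/1/10. A keyword containing "/" would still be read as extra path segments, since the constructor does not escape slashes.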

2.8 In the controller package, also create the indexController class

- indexController

```java
package com.zhj.ESDemo.controller;

import org.springframework.stereotype.Controller;
import org.springframework.web.bind.annotation.GetMapping;

/**
 * Serves the front-end page.
 *
 * @author zhouh
 * @version 1.0
 * Created 2022/7/24 21:12
 */
@Controller
public class indexController {

    // Renders templates/index.html (prefix and suffix come from application.properties)
    @GetMapping({"/", "/index"})
    public String index() {
        return "index";
    }
}
```

The startup class is shown below. It is generated automatically when the project is created, so yours will likely differ.

```java
package com.zhj.ESDemo;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication
public class EsTestApplication {

    public static void main(String[] args) {
        SpringApplication.run(EsTestApplication.class, args);
    }
}
```

III. Fix the ES version mismatch

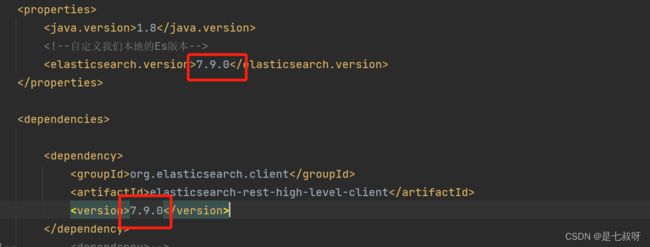

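3.1 Spring Boot's dependency management pins its own Elasticsearch client version, which may not match your local ES. Override it in pom.xml by setting the elasticsearch.version property to your local ES version (7.6.1 in this project).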
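Elastic's documentation for the Java High Level REST Client states that the client can communicate with any node running the same major version and an equal or greater minor version. A pure-JDK sketch of that rule; EsVersionCheck is hypothetical, the client version would be the elasticsearch.version from the pom, and the server version is the version.number field in the JSON returned by http://localhost:9200.

```java
// Hypothetical check of the High Level REST Client compatibility rule:
// same major version, and server minor version >= client minor version.
final class EsVersionCheck {

    private EsVersionCheck() {
    }

    static boolean compatible(String clientVersion, String serverVersion) {
        int[] client = parse(clientVersion);
        int[] server = parse(serverVersion);
        return client[0] == server[0] && server[1] >= client[1];
    }

    // Parses "major.minor[.patch]" into {major, minor}
    private static int[] parse(String version) {
        String[] parts = version.trim().split("\\.");
        if (parts.length < 2) {
            throw new IllegalArgumentException("Expected major.minor[.patch]: " + version);
        }
        return new int[] {Integer.parseInt(parts[0]), Integer.parseInt(parts[1])};
    }
}
```

With a 7.6.1 client, a 7.6.1 or 7.10.2 server passes the check, while 7.5.0 or 6.8.0 does not.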

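IV. Crawl data into the local ES (the jd_goods index is created automatically)

Where does the data come from? A database or a message queue can serve as the data source; here we use a crawler.

Crawling means fetching the page a request returns and filtering out the data we want.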

4.1 The crawler is the HtmlParseUtil class in the utils package, the same code as in step 2.5.


Back in ContentController: after starting Spring Boot, open localhost:9090/parse/中文 in the browser. This calls parseContext() in the service layer, which crawls results for the keyword 中文 and stores them in the local ES.
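On the wire, the bulk call in parseContext corresponds to Elasticsearch's _bulk REST API, whose body is newline-delimited JSON: for every crawled item, an action line such as {"index":{"_index":"jd_goods"}} followed by the document itself, with a trailing newline. Because no _id is given, Elasticsearch generates one, and the first write creates the jd_goods index with dynamically inferred mappings. The sketch below only assembles such a body with the JDK, as an illustration; BulkBody and its minimal JSON escaping are hypothetical and are not how the client library serializes requests internally.

```java
import java.util.List;

// Hypothetical illustration of a _bulk NDJSON body for the jd_goods index.
final class BulkBody {

    private BulkBody() {
    }

    /** Each item is {title, img, price}, mirroring the fields of Content. */
    static String build(String index, List<String[]> items) {
        StringBuilder body = new StringBuilder();
        for (String[] item : items) {
            // Action line: index into the given index; no _id, so Elasticsearch generates one
            body.append("{\"index\":{\"_index\":\"").append(escape(index)).append("\"}}\n");
            // Source line: the document itself
            body.append("{\"title\":\"").append(escape(item[0]))
                .append("\",\"img\":\"").append(escape(item[1]))
                .append("\",\"price\":\"").append(escape(item[2]))
                .append("\"}\n");
        }
        // The bulk API requires a final newline, which the loop above always writes
        return body.toString();
    }

    // Minimal JSON string escaping for quotes, backslashes and control characters
    private static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }
}
```

An empty crawl produces an empty body, which Elasticsearch rejects, so it is worth skipping the bulk call when no items were crawled.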

V. Search

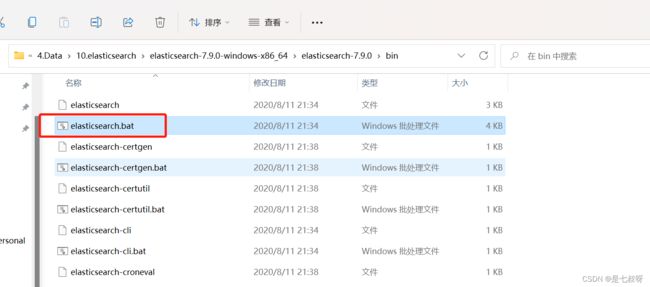

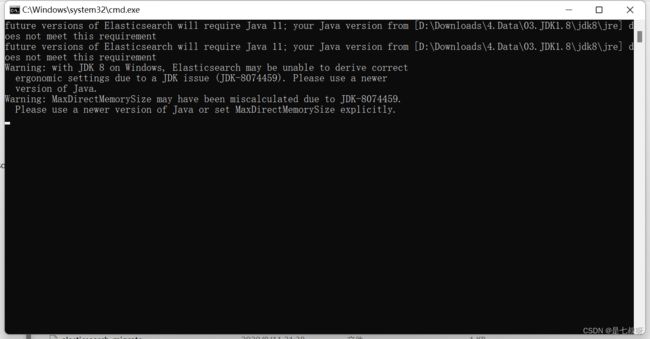

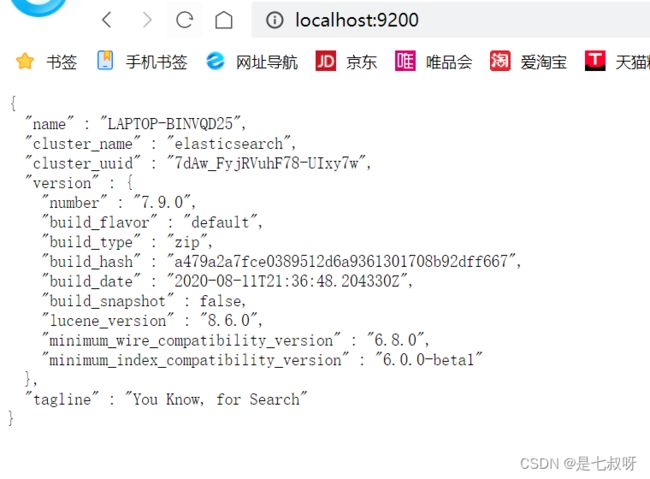

5.1 Start ES locally:

Double-click elasticsearch.bat (in the bin directory of the ES installation).

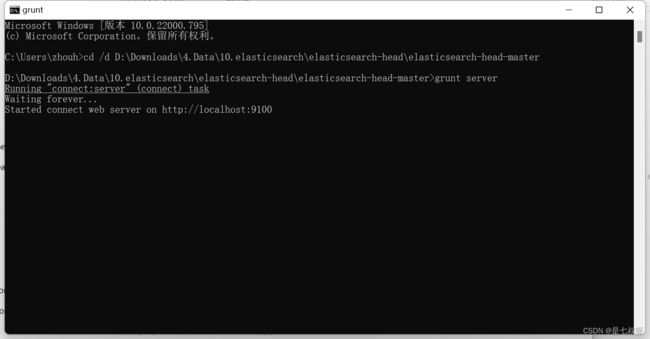

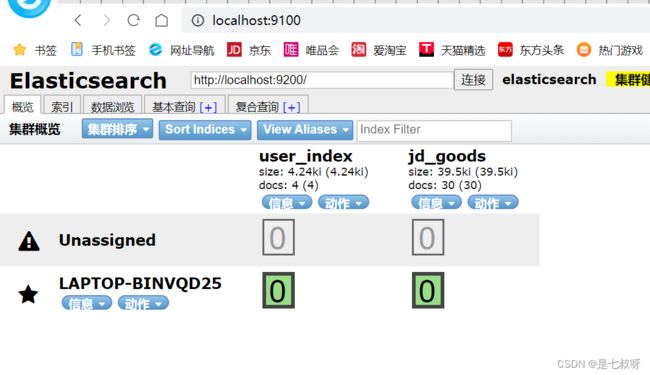

5.2 Start ES-head from the command line

cd into the bin directory, then run grunt server.

Then check localhost:9200 (ES) and localhost:9100 (ES-head) in the browser.
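Before starting the Spring Boot app, you can also check programmatically that the node answers on port 9200 (ES-head on 9100 is only a browser UI). A pure-JDK sketch using HttpURLConnection; EsPing is hypothetical, and it assumes security is not enabled on the local node (the 7.x default), so an unauthenticated GET returns HTTP 200.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URL;

// Hypothetical reachability check for the local Elasticsearch node (expects an http:// URL).
final class EsPing {

    private EsPing() {
    }

    /** Returns true if a GET on the URL answers with HTTP 200 within the timeout. */
    static boolean isUp(String url, int timeoutMillis) {
        HttpURLConnection conn = null;
        try {
            conn = (HttpURLConnection) new URL(url).openConnection();
            conn.setRequestMethod("GET");
            conn.setConnectTimeout(timeoutMillis);
            conn.setReadTimeout(timeoutMillis);
            return conn.getResponseCode() == HttpURLConnection.HTTP_OK;
        } catch (IOException e) {
            // Connection refused, timeout, malformed URL, etc.: treat as "not up"
            return false;
        } finally {
            if (conn != null) {
                conn.disconnect();
            }
        }
    }
}
```

EsPing.isUp("http://localhost:9200", 2000) should return true once elasticsearch.bat has finished starting.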

5.3 Start Spring Boot and search for 中文

Search for 中文 and the front end shows the results. They can be found because step IV already stored the crawled data in ES.

Reference: Elasticsearch (Part 5): Integrating with Spring Boot