一次Kaggle竞赛全过程记录

一次Kaggle竞赛学习全过程记录

竞赛地址:https://www.kaggle.com/competitions/AI4Code/overview

个人认为在工程方面,学习一个东西的方法就是去使用它。

第一步(寻找方法)

咱们去学习那些已经投票最多、可以正常运行的代码。

第二步(解读源码)

阅读别人源码,逐句阅读,加上自己的注释。(以这个notebook为例)

Setup

import json

from pathlib import Path # 导入文件路径库

import numpy as np

import pandas as pd

from scipy import sparse

from tqdm import tqdm

# 设置pd展示的长宽

pd.options.display.width = 180

pd.options.display.max_colwidth = 120

# 获取项目文件路径

data_dir = Path('../input/AI4Code')

Load Data

NUM_TRAIN = 10000

def read_notebook(path):

"""

输入json文件路径,解析单个文件

"""

return (

# 读取json文件

pd.read_json(

path,

dtype={'cell_type': 'category', 'source': 'str'}) # 将json文件的类型进行映射

.assign(id=path.stem) # path.stem返回去除后缀的文件名

.rename_axis('cell_id') # 重命名id为cell_id

)

paths_train = list((data_dir / 'train').glob('*.json'))[:NUM_TRAIN] # 获取data_dir/train目录下所有的.json后缀的文件的前NUM_TRAIN个

# 处理全部文件

notebooks_train = [

read_notebook(path) for path in tqdm(paths_train, desc='Train NBs')

]

# 将每个json文件对应的dataframe拼接起来

df = (

pd.concat(notebooks_train)

.set_index('id', append=True)

.swaplevel() # 交换索引级别 让文件id文本一级索引 cell_id成为二级索引

.sort_index(level='id', sort_remaining=False) # 对一级索引id进行排序,不对cell_id进行排序

)

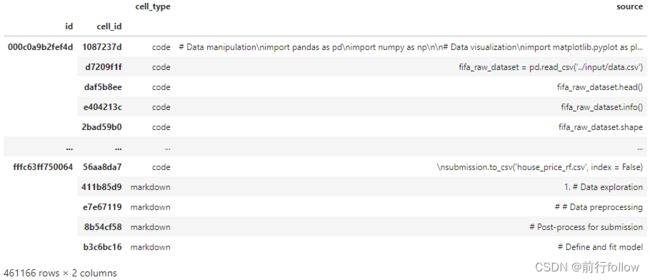

df # 是一个以id为一级索引(每个id代表一个notebook) cell_id为二级索引(每个cell_id代表一个代码块或一个markdown块) 包括cell_type、source两个属性的DataFrame

运行效果:

展示实例

# Get an example notebook

nb_id = df.index.unique('id')[6]

print('Notebook:', nb_id)

print("The disordered notebook:")

nb = df.loc[nb_id, :]

display(nb)

print()

Ordering the Cells

得到notebook对应的正确cell_id顺序

df_orders = pd.read_csv(

data_dir / 'train_orders.csv',

index_col='id',

squeeze=True, # 如果解析的数据仅包含一个列,则返回Series

).str.split() # Split the string representation of cell_ids into a list

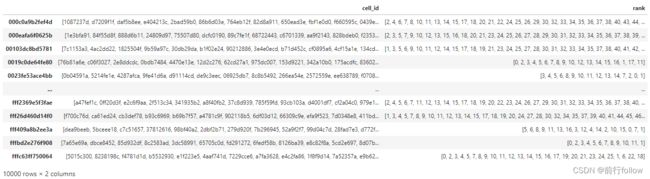

df_orders # 表示每个notebook对应的正确的cell_id顺序 类型是:pandas.core.series.Series

根据正确的cell_id顺序,获取source

# Get the correct order

cell_order = df_orders.loc[nb_id]

print("The ordered notebook:")

nb.loc[cell_order, :]

根据正确的cell_id顺序,获取打乱后cell_id的正确顺序rank

def get_ranks(base, derived):

return [base.index(d) for d in derived]

# 以当前notebook为例 插入正确的cell_id顺序

cell_ranks = get_ranks(cell_order, list(nb.index))

nb.insert(0, 'rank', cell_ranks)

nb

from pandas.testing import assert_frame_equal

# 用来检查两个DataFrame是否相等 如果忽略类型检查:check_dtype=False

assert_frame_equal(nb.loc[cell_order, :], nb.sort_values('rank'))

本文算法将使用cell ranks作为算法的target,因此接下来为每个notebook增加rank列

# 先将df_orders转换为DataFrame

# 进行拼接操作

# df.reset_index('cell_id') 此方法可以删除cell_id的索引级别,变成一个属性。

# 然后聚合每个id 相当于聚合出每个notebook 然后取出cell_id属性

df_orders_ = df_orders.to_frame().join(

df.reset_index('cell_id').groupby('id')['cell_id'].apply(list),

how='right',

)

df_orders_

df.reset_index('cell_id').groupby('id')['cell_id'].apply(list)

ranks = {}

# 一次迭代上述的df_orders_

# 创造字典 id_表示notebook的索引 cell_id表示每个notebook打乱后的cell_id顺序 rank表示cell_id对应的正确顺序

for id_, cell_order, cell_id in df_orders_.itertuples():

ranks[id_] = {'cell_id': cell_id, 'rank': get_ranks(cell_order, cell_id)}

pd.DataFrame(ranks).T

df_ranks = (

pd.DataFrame

.from_dict(ranks, orient='index') # orient=index表示将字典keys作为行 如果orient=columns将字典keys作为列

.rename_axis('id') # 将id设为index

.apply(pd.Series.explode) # 将列表的每个元素转换为行

.set_index('cell_id', append=True) # 将cell_id设置为索引 并且拼接到一级索引id上 否则将会抹除掉id索引列

)

df_ranks

split

df_ancestors = pd.read_csv(data_dir / 'train_ancestors.csv', index_col='id')

df_ancestors

为了避免信息泄露,在测试集中的notebook不知道祖先信息

from sklearn.model_selection import GroupShuffleSplit

# 使用分组分割

NVALID = 0.1 # size of validation set

splitter = GroupShuffleSplit(n_splits=1, test_size=NVALID, random_state=0)

# Split, keeping notebooks with a common origin (ancestor_id) together

ids = df.index.unique('id')

ancestors = df_ancestors.loc[ids, 'ancestor_id']

# 将祖先作为组别对notebook的id进行划分

ids_train, ids_valid = next(splitter.split(ids, groups=ancestors))

ids_train, ids_valid = ids[ids_train], ids[ids_valid]

df_train = df.loc[ids_train, :]

df_valid = df.loc[ids_valid, :]

df_train.shape,df_valid.shape

# ((416586, 2), (44580, 2))

df_train

Feature Engineering

本文使用TF-IDF特征用于排序出正确的顺序。

from sklearn.feature_extraction.text import TfidfVectorizer

# Training set

tfidf = TfidfVectorizer(min_df=0.01)

X_train = tfidf.fit_transform(df_train['source'].astype(str))

# Rank of each cell within the notebook

y_train = df_ranks.loc[ids_train].to_numpy()

# Number of cells in each notebook 每个notebook中的cell数

groups = df_ranks.loc[ids_train].groupby('id').size().to_numpy()

groups,groups.shape

#(array([70, 65, 89, ..., 17, 12, 26]), (8997,))

# Add code cell ordering

# 将code按照递增顺序记录 将markdown标记为0

X_train = sparse.hstack((

X_train,

np.where(

df_train['cell_type'] == 'code',

df_train.groupby(['id', 'cell_type']).cumcount().to_numpy() + 1,

0,

).reshape(-1, 1)

))

print(X_train.shape)

# (416586, 284)

print(y_train.shape)

# (416586, 1)

至此,通过TF-IDF将两列信息cell_type和source变成了284维特征,将训练集制作成功。

Train

训练时,采用XGBRanker来进行训练

from xgboost import XGBRanker

model = XGBRanker(

min_child_weight=10,

subsample=0.5,

tree_method='hist',

)

model.fit(X_train, y_train, group=groups)

Evaluate

Validation set

同理,制作验证集

# Validation set

X_valid = tfidf.transform(df_valid['source'].astype(str))

# The metric uses cell ids

y_valid = df_orders.loc[ids_valid]

X_valid = sparse.hstack((

X_valid,

np.where(

df_valid['cell_type'] == 'code',

df_valid.groupby(['id', 'cell_type']).cumcount().to_numpy() + 1,

0,

).reshape(-1, 1)

))

对验证机进行预测

y_pred = pd.DataFrame({'rank': model.predict(X_valid)}, index=df_valid.index)

y_pred

y_pred = (

y_pred

.sort_values(['id', 'rank']) # Sort the cells in each notebook by their rank.

# The cell_ids are now in the order the model predicted.

.reset_index('cell_id') # Convert the cell_id index into a column.

.groupby('id')['cell_id'].apply(list) # Group the cell_ids for each notebook into a list.

)

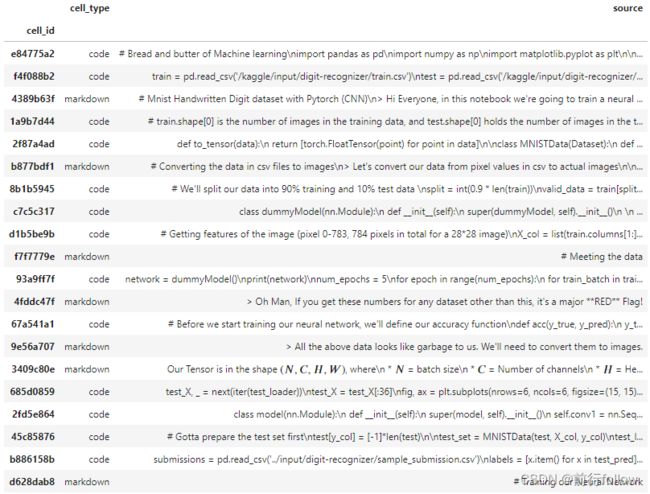

y_pred.head(10)

代码分解:

y_pred.sort_values(['id','rank'])

按照id为一级索引,对rank进行从小到大排序

样例展示:

nb_id = df_valid.index.get_level_values('id').unique()[8]

display(df.loc[nb_id])

display(df.loc[nb_id].loc[y_pred.loc[nb_id]])

原始的:

排序后的:

可以发现经过训练后,模型学到了code和markdown块之间的联系,能够去预测一个较为合理的顺序。

Metric

衡量指标如下:

K = 1 − 4 ∑ i S i ∑ i n i ( n i − 1 ) K=1-4 \frac{\sum_{i} S_{i}}{\sum_{i} n_{i}\left(n_{i}-1\right)} K=1−4∑ini(ni−1)∑iSi

如何理解这个指标呢?

该竞赛采用Kendall tau correlation,通过计算真实单元格顺序和预测单元格顺序之间的差异来衡量,即:相邻单元格需要多少次交换才可以让预测单元格顺序变成真实单元格顺序。考虑最糟糕情况,真实单元格顺序是:[1,2,…,n],预测单元格顺序是:[n,n-1,…,1],如果需要将预测单元格变成真实单元格,需要 n ( n − 1 ) 2 \frac{n(n-1)}{2} 2n(n−1)次。其中 S i S_i Si表示需要交换的次数,那么 S i n i ( n i − 1 ) \frac{S_i}{n_i(n_i-1)} ni(ni−1)Si越小表示预测效果越好,即K越大,预测效果越好。

from bisect import bisect

# 用来计算$S_i$

def count_inversions(a):

"""

传入的是预测单元格在实际单元格中的索引

"""

inversions = 0

sorted_so_far = []

for i, u in enumerate(a):

j = bisect(sorted_so_far, u)

inversions += i - j

sorted_so_far.insert(j, u)

return inversions

def kendall_tau(ground_truth, predictions):

total_inversions = 0

total_2max = 0 # twice the maximum possible inversions across all instances

for gt, pred in zip(ground_truth, predictions):

ranks = [gt.index(x) for x in pred] # rank predicted order in terms of ground truth

total_inversions += count_inversions(ranks)

n = len(gt)

total_2max += n * (n - 1)

return 1 - 4 * total_inversions / total_2max

计算y_valid和y_dummy(经过shuffle的)的kendall_tau以及经过预测后的y_pred和y_valid之间的kendall_tau,如果该系数变大也说明模型预测的结果是有效的。

y_dummy = df_valid.reset_index('cell_id').groupby('id')['cell_id'].apply(list)

kendall_tau(y_valid, y_dummy)

# 0.42511216883092573

kendall_tau(y_valid, y_pred)

# 0.6158894721044015

Submission

将测试集做数据预处理

paths_test = list((data_dir / 'test').glob('*.json'))

notebooks_test = [

read_notebook(path) for path in tqdm(paths_test, desc='Test NBs')

]

df_test = (

pd.concat(notebooks_test)

.set_index('id', append=True)

.swaplevel()

.sort_index(level='id', sort_remaining=False)

)

给测试集做特征工程

X_test = tfidf.transform(df_test['source'].astype(str))

X_test = sparse.hstack((

X_test,

np.where(

df_test['cell_type'] == 'code',

df_test.groupby(['id', 'cell_type']).cumcount().to_numpy() + 1,

0,

).reshape(-1, 1)

))

对X_test进行预测

y_infer = pd.DataFrame({'rank': model.predict(X_test)}, index=df_test.index)

y_infer = y_infer.sort_values(['id', 'rank']).reset_index('cell_id').groupby('id')['cell_id'].apply(list)

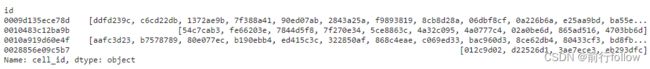

y_infer

读取sample_submission.csv文件,确定提交文件格式

y_sample = pd.read_csv(data_dir / 'sample_submission.csv', index_col='id', squeeze=True)

y_sample

y_submit = (

y_infer

.apply(' '.join) # list of ids -> string of ids

.rename_axis('id')

.rename('cell_order')

)

y_submit

保存文件到submission.csv

y_submit.to_csv('submission.csv')

reference:

- https://pandas.pydata.org/docs/reference/

- https://numpy.org/doc/stable/reference/