How to Determine a Page's Character Encoding in a Web Crawler

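There are three ways to obtain a page's encoding:

- Read it from the Content-Type header of the Response

- Read it from the meta tag in the page markup

- Detect it automatically from the page content; in testing this proved fairly accurate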
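One possible way to combine the three sources listed above is to try them in a fixed order and take the first name that the JVM recognises as a charset. The sketch below is only an illustration: the class name `CharsetPicker`, the order (header, then meta, then content detection), and the UTF-8 fallback are assumptions, not something the original utility class does.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

class CharsetPicker {

    /** Returns the Charset for a candidate name, or null if it is empty, illegal, or unsupported. */
    static Charset parse(String name) {
        if (name == null || name.trim().isEmpty()) {
            return null;
        }
        try {
            return Charset.forName(name.trim());
        } catch (IllegalArgumentException e) {
            // IllegalCharsetNameException and UnsupportedCharsetException both extend this
            return null;
        }
    }

    /** Tries header, meta, and detected names in that order; falls back to UTF-8 (assumed default). */
    static Charset pick(String fromHeader, String fromMeta, String fromDetection) {
        for (String candidate : new String[] {fromHeader, fromMeta, fromDetection}) {
            Charset cs = parse(candidate);
            if (cs != null) {
                return cs;
            }
        }
        return StandardCharsets.UTF_8;
    }

    public static void main(String[] args) {
        // No header value, so the meta value is used
        System.out.println(pick(null, "utf-8", "ISO-8859-1"));
        // An illegal header value is skipped instead of throwing
        System.out.println(pick("not a charset!", null, "ISO-8859-1"));
    }
}
```

Validating each candidate matters because servers and pages sometimes declare names that are misspelled or unknown to the JVM, and `Charset.forName` throws on those.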

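The three approaches can be combined. The catch is that an InputStream can only be consumed once, and because InputStream has no clone method it cannot be copied either.

The stream therefore has to be converted first (for example, read into a byte array and wrapped again), and comparing several approaches means repeating that conversion for each one.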
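Because an InputStream can only be read once, one common workaround is to read the response body into a byte array a single time and hand out a fresh `ByteArrayInputStream` for every pass. The following is a minimal sketch of that idea; the class name `ReusableBody` and its methods are hypothetical and are not part of the utility class in this post.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

class ReusableBody {
    private final byte[] bytes;

    private ReusableBody(byte[] bytes) {
        this.bytes = bytes;
    }

    /** Drains the stream once and keeps its bytes in memory. */
    static ReusableBody readFully(InputStream in) {
        try {
            ByteArrayOutputStream buffer = new ByteArrayOutputStream();
            byte[] chunk = new byte[8192];
            int n;
            while ((n = in.read(chunk)) != -1) {
                buffer.write(chunk, 0, n);
            }
            return new ReusableBody(buffer.toByteArray());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Each call returns an independent stream positioned at the first byte. */
    InputStream newStream() {
        return new ByteArrayInputStream(bytes);
    }

    int length() {
        return bytes.length;
    }

    public static void main(String[] args) throws IOException {
        // Stand-in for a network stream
        InputStream network = new ByteArrayInputStream(
                "<meta charset=\"utf-8\">".getBytes(StandardCharsets.US_ASCII));
        ReusableBody body = ReusableBody.readFully(network);
        // Both passes start from the first byte, '<'
        System.out.println((char) body.newStream().read());
        System.out.println((char) body.newStream().read());
    }
}
```

With this in place, the meta parser and the content detector can each receive their own stream from `newStream()` without a second network request. The trade-off is that the whole body is held in memory.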

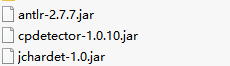

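Dependencies: Apache Commons IO (org.apache.commons.io) and cpdetector (info.monitorenter.cpdetector), as used by the imports in the utility class.

Utility class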

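/**
 * Obtains the encoding of a page.
 *
 * 1. From the Content-Type header of the Response.
 * 2. From the meta tag in the page markup.
 * 3. By detecting the encoding automatically from the page content; in testing this proved fairly accurate.
 *
 * The three approaches can be combined; GFCrawer currently uses only the meta approach.
 * An InputStream cannot be reused, and because InputStream has no clone method it cannot be cloned either,
 * so the stream has to be converted, and comparing several approaches means repeating that conversion.
 */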

package charset;

import java.io.BufferedReader;

import java.io.ByteArrayInputStream;

import java.io.IOException;

import java.io.InputStream;

import java.io.InputStreamReader;

import java.net.HttpURLConnection;

import java.net.MalformedURLException;

import java.net.URL;

import java.net.URLConnection;

import java.nio.charset.Charset;

import java.util.List;

import java.util.Map;

import org.apache.commons.io.IOUtils;

import info.monitorenter.cpdetector.io.ASCIIDetector;

import info.monitorenter.cpdetector.io.ByteOrderMarkDetector;

import info.monitorenter.cpdetector.io.CodepageDetectorProxy;

import info.monitorenter.cpdetector.io.JChardetFacade;

import info.monitorenter.cpdetector.io.ParsingDetector;

import info.monitorenter.cpdetector.io.UnicodeDetector;

/**
 * @author weijie, 2019-05-08
 */

public class GFCrawerCharset {

    public static void main(String[] args) {
        // Check for garbled text
        System.out.println("------ garbled-text check -------");
        // This page is gb2312
        String url = "http://www.people.com.cn/";
        // This page is utf-8
        String url2 = "https://blog.csdn.net/dreamzuora";
        // GFCrawer is the author's crawler class (not shown here).
        // Decoding the gb2312 page as utf-8 is expected to produce garbled text.
        String errorHtml = GFCrawer.GetContent(url, "utf-8");
        String succHtml = GFCrawer.GetContent(url2);
        System.out.println("error: " + errorHtml.substring(100, 300));
        System.out.println("succ: " + succHtml.substring(1000, 1100));
        boolean b = isErrorCodes(errorHtml);
        boolean b2 = isErrorCodes(succHtml);
        System.out.println(b);
        System.out.println(b2);

        // Encoding declared in the meta tag
        System.out.println("------- encoding from meta ------");
        System.out.println(url + "\t" + getCharsetByMeta(url));
        System.out.println(url2 + "\t" + getCharsetByMeta(url2));

        // Content-Type from the response header; many sites do not declare a charset there
        System.out.println("------- encoding from response header ------");
        System.out.println(url + "\t" + getAutoCharsetByHeader(url));
        System.out.println(url2 + "\t" + getAutoCharsetByHeader(url2));

        // Encoding detected from the content.
        // The static initializer has already registered the detectors, so cdpInit() is not called again.
        System.out.println("------- encoding detected from content ------");
        System.out.println(url + "\t" + getAutoCharsetByURL(url));
        System.out.println(url2 + "\t" + getAutoCharsetByURL(url2));
    }

// Detector proxy that identifies the encoding by analysing the page content

public static CodepageDetectorProxy cdp = null;

static {

cdpInit();

}

public static void cdpInit() {

cdp = CodepageDetectorProxy.getInstance();

cdp.add(JChardetFacade.getInstance());

cdp.add(ASCIIDetector.getInstance());

cdp.add(UnicodeDetector.getInstance());

cdp.add(new ParsingDetector(false));

cdp.add(new ByteOrderMarkDetector());

}

    /**
     * Checks whether the text is garbled. Adding an HTML-specific check on top of this gives wrong results.
     *
     * @param str the decoded text to inspect
     * @return true if the text contains the Unicode replacement character
     */
    public static boolean isErrorCodes(String str) {
        for (int i = 0, len = str.length(); i < len; i++) {
            char c = str.charAt(i);
            // Converting from Unicode to a charset that has no mapping for a character yields 0x3f ('?').
            // Converting from another charset to Unicode yields 0xfffd when the bytes
            // do not represent any character in that charset.
            if (c == 0xfffd) {
                // Garbled text found
                return true;
            }
        }
        return false;
    }

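    /**
     * Detects the encoding from a stream.
     *
     * @param in stream holding the page content; it is read to the end
     * @return the detected charset name, or null if detection failed
     */
    public static String getAutoCharsetByInputStream(InputStream in) {
        String code = null;
        try {
            // Buffer the whole stream: the detector needs mark/reset support and a known length
            byte[] bytes = IOUtils.toByteArray(in);
            Charset charset = cdp.detectCodepage(new ByteArrayInputStream(bytes), bytes.length);
            if (charset != null) {
                code = charset.name();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
        return code;
    }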

    /**
     * Detects the encoding of the page at a URL.
     *
     * @param href page URL
     * @return the detected charset name, or null if detection failed
     */
    public static String getAutoCharsetByURL(String href) {
        String code = null;
        try {
            URL url = new URL(href);
            Charset charset = cdp.detectCodepage(url);
            if (charset != null) {
                code = charset.name();
            }
        } catch (MalformedURLException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        }
        return code;
    }

    /**
     * Reads the encoding from the meta tag of the page at a URL.
     *
     * @param href page URL
     * @return the declared charset, or null if none was found
     */
    public static String getCharsetByMeta(String href) {
        HttpURLConnection httpConnection = null;
        try {
            httpConnection = (HttpURLConnection) new URL(href).openConnection();
            httpConnection.setRequestProperty("User-Agent",
                    "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.2; Trident/4.0; .NET CLR 1.1.4322; .NET CLR 2.0.50727)");
            // Do not read from the stream before parsing: a stray read() would drop the first byte
            try (InputStream input = httpConnection.getInputStream()) {
                return getCharsetByMeta(input);
            }
        } catch (IOException e) {
            e.printStackTrace();
            return null;
        } finally {
            if (httpConnection != null) {
                httpConnection.disconnect();
            }
        }
    }

    /**
     * Reads the encoding from the meta tag in a stream of page content.
     *
     * @param inputStream stream holding the page content; it is closed when this method returns
     * @return the declared charset, or null if none was found
     */
    public static String getCharsetByMeta(InputStream inputStream) {
        String charset = null;
        try {
            // ISO-8859-1 maps every byte to one char, so the ASCII meta tag is readable
            // before the real encoding is known
            BufferedReader reader = new BufferedReader(
                    new InputStreamReader(inputStream, Charset.forName("ISO-8859-1")));
            String line;
            // Loop on readLine() rather than ready(), which can report false before the network delivers more data
            while ((line = reader.readLine()) != null) {
                String lower = line.toLowerCase(java.util.Locale.ROOT);
                int idx = lower.indexOf("charset=");
                if (!lower.contains("<meta") || idx < 0) {
                    continue;
                }
                int start = idx + "charset=".length();
                // Skip an optional opening quote, as in <meta charset="utf-8">
                if (start < line.length() && (line.charAt(start) == '"' || line.charAt(start) == '\'')) {
                    start++;
                }
                int end = start;
                while (end < line.length() && "\"'/> ;".indexOf(line.charAt(end)) < 0) {
                    end++;
                }
                if (end > start) {
                    charset = line.substring(start, end);
                    break;
                }
            }
            reader.close();
        } catch (IOException e) {
            e.printStackTrace();
        }
        return charset;
    }

    /**
     * Reads the page encoding from the response header of a URL.
     *
     * @param href page URL
     * @return the charset declared in Content-Type, or null if none was declared
     */
    public static String getAutoCharsetByHeader(String href) {
        HttpURLConnection httpConnection = null;
        try {
            httpConnection = (HttpURLConnection) new URL(href).openConnection();
            httpConnection.setRequestProperty("User-Agent",
                    "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.2; Trident/4.0; .NET CLR 1.1.4322; .NET CLR 2.0.50727)");
            return getCharsetByHeader(httpConnection);
        } catch (IOException e) {
            e.printStackTrace();
            return null;
        } finally {
            if (httpConnection != null) {
                httpConnection.disconnect();
            }
        }
    }

    /**
     * Reads the page encoding from the response header of an open connection.
     *
     * @param urlConn connection to the page
     * @return the charset declared in Content-Type, or null if none was declared
     */
    public static String getCharsetByHeader(URLConnection urlConn) {
        // getContentType() returns the Content-Type header, e.g. "text/html; charset=utf-8",
        // or null when the header is absent
        String contentType = urlConn.getContentType();
        if (contentType == null) {
            return null;
        }
        for (String att : contentType.split(";")) {
            String[] kv = att.trim().split("=", 2);
            if (kv.length == 2 && kv[0].trim().equalsIgnoreCase("charset")) {
                // Strip optional quotes around the value
                return kv[1].trim().replace("\"", "");
            }
        }
        return null;
    }

}
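
The comments in `isErrorCodes` describe two conversion rules: bytes that are invalid in the chosen charset decode to U+FFFD, and characters that a target charset cannot represent encode to 0x3f. The standalone sketch below reproduces both with the JDK's built-in charsets. The class name `ReplacementCharDemo` is hypothetical, and ISO-8859-1 stands in for gb2312 only so that the example runs on any JVM.

```java
import java.nio.charset.StandardCharsets;

class ReplacementCharDemo {

    /** Same test as isErrorCodes: does the decoded text contain U+FFFD? */
    static boolean hasReplacementChar(String text) {
        return text.indexOf('\uFFFD') >= 0;
    }

    public static void main(String[] args) {
        // "café" encoded as ISO-8859-1: the final byte 0xE9 is not valid UTF-8 on its own
        byte[] latin1Bytes = "caf\u00e9".getBytes(StandardCharsets.ISO_8859_1);

        String wrong = new String(latin1Bytes, StandardCharsets.UTF_8);
        String right = new String(latin1Bytes, StandardCharsets.ISO_8859_1);
        System.out.println(hasReplacementChar(wrong)); // true
        System.out.println(hasReplacementChar(right)); // false

        // The other direction: a Chinese character has no ISO-8859-1 mapping,
        // so encoding it produces the single byte 0x3f ('?')
        byte[] lossy = "\u4e2d".getBytes(StandardCharsets.ISO_8859_1);
        System.out.println(lossy.length == 1 && lossy[0] == 0x3f); // true
    }
}
```

This also shows the limit of the check: text that was already reduced to `?` during encoding contains no U+FFFD, so it would not be flagged as garbled.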