Containers for Enterprise Operations: Swarm, One of the Docker Three Musketeers

- 1. Introduction to Docker Swarm

- 2. Docker Swarm in practice

- 3. Deploying with docker stack

- 4. Visualization with Portainer

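1. Introduction to Docker Swarm

- Before Docker 1.12, Swarm was a standalone project. Starting with Docker 1.12 it was merged into Docker itself and became a Docker subcommand.

- Swarm is the only tool provided by the Docker community that natively supports Docker cluster management.

- Swarm turns a system made up of multiple Docker hosts into a single virtual Docker host, so that containers can form a subnet that spans hosts.

- Docker Swarm is an orchestration tool that gives IT operations teams clustering and scheduling capabilities.

- Advantages of Docker Swarm

Good performance at any scale, flexible container scheduling, continuous availability of services, and compatibility with the Docker API and its integrations.

Docker Swarm provides native support for the core functions of Dockerized applications, such as multi-host networking and volume management.

- Docker Swarm concepts

Nodes are divided into manager nodes and worker nodes.

A task is the smallest scheduling unit in Swarm; at present a task is a single container.

A service is a set of tasks; the service defines the properties of its tasks.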

2. Docker Swarm in practice

- Creating a Swarm cluster

Initialize the cluster with docker swarm init:

[root@server1 ~]# docker swarm init

Swarm initialized: current node (lwl1574190s7dk2m0vwq8fo4u) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-6042qvxj27capknpxotehy0zk380r6dg0pgu5qv7g0z04slv6x-bh8eio618l0tu2pfnkm65emwd 172.25.15.1:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

The following command can only be run on a manager node (here, the leader):

[root@server1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

lwl1574190s7dk2m0vwq8fo4u * server1 Ready Active Leader 19.03.15

Following the prompt, run the join command on the other Docker nodes so that they join the swarm:

[root@server2 compose]# docker swarm join --token SWMTKN-1-6042qvxj27capknpxotehy0zk380r6dg0pgu5qv7g0z04slv6x-bh8eio618l0tu2pfnkm65emwd 172.25.15.1:2377

This node joined a swarm as a worker.

[root@server3 docker]# docker swarm join --token SWMTKN-1-6042qvxj27capknpxotehy0zk380r6dg0pgu5qv7g0z04slv6x-bh8eio618l0tu2pfnkm65emwd 172.25.15.1:2377

This node joined a swarm as a worker.
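The init output above points to docker swarm join-token for adding nodes. If the original join command is no longer on screen, a manager can print it again. The following is a minimal sketch; the function name show_join_command is hypothetical and is not part of Docker.

```shell
# Hypothetical helper: print the join command for a given role.
# Must be run on a manager node; the role is either "worker" or "manager".
show_join_command() {
    role="${1:-worker}"
    case "$role" in
        worker|manager)
            # Real Docker subcommand, as named in the "docker swarm init" output
            docker swarm join-token "$role"
            ;;
        *)
            echo "usage: show_join_command worker|manager" >&2
            return 1
            ;;
    esac
}

# Example (on server1):
#   show_join_command worker
```

Called without an argument, the helper defaults to the worker role, which matches how server2 and server3 joined above.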

Now check the node information again:

[root@server1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

lwl1574190s7dk2m0vwq8fo4u * server1 Ready Active Leader 19.03.15

zhhp5501wu76cluglinzdv70w server2 Ready Active 19.03.15

k39m95d6c0oc40biyzb5cdyix server3 Ready Active 19.03.15

Swarm automatically creates an overlay network named ingress (along with the docker_gwbridge bridge):

[root@server1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

e3c1f4d37c07 bridge bridge local

8535c5281d20 docker_gwbridge bridge local

8a1d46231c4e harbor_harbor bridge local

9060ca2c0d1e harbor_harbor-chartmuseum bridge local

7b739bd64a8f harbor_harbor-clair bridge local

1d41c7aa2f92 harbor_harbor-notary bridge local

37f739e41113 harbor_notary-sig bridge local

9894c728c0a7 host host local

zqor0s9qs2ih ingress overlay swarm

fcd1bdec9b88 none null local

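Create a service with docker service create --name mysrc --replicas 2 -p 80:80 nginx. This creates a service named mysrc with 2 replicas and publishes port 80. For the full list of options, run docker service create --help.

Explanation of the command:

docker service create creates a service

--name sets the service name, here mysrc

--network specifies the network the service attaches to (not used in this command)

--replicas sets the number of instances to start, here 2

-p publishes a port, here port 80 of the swarm to port 80 of the containers

Creating the service this way is very slow, because nodes that do not have the image pull it from the Internet.

To speed this up, host 1 becomes the host of the local registry, host 2 becomes the manager node, and hosts 3 and 4 act as worker nodes for observing the results.

That requires one more virtual machine: the experiment is observed on hosts 2, 3, and 4, while host 1 only serves the local registry.

The manager on host 1 is then taken offline and host 2 takes over.

First, start the new host 4 and use docker-machine to install Docker on it: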

[root@server1 ~]# ssh-copy-id server4

[root@server1 ~]# docker-machine create -d generic --generic-ip-address 172.25.25.4 --engine-install-url "http://172.25.25.250/get-docker.sh" server4

[root@server1 ~]# docker-machine ls

NAME ACTIVE DRIVER STATE URL SWARM DOCKER ERRORS

server2 - generic Running tcp://172.25.25.2:2376 v19.03.25

server3 - generic Running tcp://172.25.25.3:2376 v19.03.25

server4 - generic Running tcp://172.25.25.4:2376 v19.03.25

Add the name resolution entry for the registry to /etc/hosts and point Docker at the registry:

[root@server4 ~]# vim /etc/hosts

[root@server4 ~]# cd /etc/docker/

[root@server4 docker]# ls

ca.pem certs.d daemon.json key.json server-key.pem server.pem

[root@server4 docker]# systemctl reload docker.service

[root@server3 docker]# vim /etc/hosts

[root@server3 docker]# ls

ca.pem certs.d daemon.json key.json server-key.pem server.pem

[root@server3 docker]# systemctl reload docker.service

[root@server3 docker]# docker info

Have host 4 join the swarm cluster as well:

[root@server4 docker]# docker swarm join --token SWMTKN-1-6042qvxj27capknpxotehy0zk380r6dg0pgu5qv7g0z04slv6x-bh8eio618l0tu2pfnkm65emwd 172.25.15.1:2377

This node joined a swarm as a worker.

[root@server1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

lwl1574190s7dk2m0vwq8fo4u * server1 Ready Active Leader 19.03.15

zhhp5501wu76cluglinzdv70w server2 Ready Active 19.03.15

jxlltu5j93daz2k8mhj154gkc server4 Ready Active 19.03.15

k39m95d6c0oc40biyzb5cdyix server3 Ready Active 19.03.15

Next, take the original manager node offline and let node 2 take over:

[root@server1 ~]# docker node promote server2

## First promote host 2 to manager

Node server2 promoted to a manager in the swarm.

[root@server1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

lwl1574190s7dk2m0vwq8fo4u * server1 Ready Active Leader 19.03.15

zhhp5501wu76cluglinzdv70w server2 Ready Active Reachable 19.03.15

jxlltu5j93daz2k8mhj154gkc server4 Ready Active 19.03.15

k39m95d6c0oc40biyzb5cdyix server3 Ready Active 19.03.15

[root@server1 ~]# docker node demote server1

Manager server1 demoted in the swarm.

## Demote host 1. After the demotion, host 2 is the only manager left and takes over as leader, and host 1 can no longer view node information.

[root@server1 ~]# docker node ls

Error response from daemon: This node is not a swarm manager. Worker nodes can't be used to view or modify cluster state. Please run this command on a manager node or promote the current node to a manager.

Once host 1 has been demoted, the prepared host 2 takes over automatically. Node information can now only be viewed from host 2:

[root@server2 docker]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

lwl1574190s7dk2m0vwq8fo4u server1 Ready Active 19.03.15

zhhp5501wu76cluglinzdv70w * server2 Ready Active Leader 19.03.15

jxlltu5j93daz2k8mhj154gkc server4 Ready Active 19.03.15

k39m95d6c0oc40biyzb5cdyix server3 Ready Active 19.03.15
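The handover above is two commands run in a fixed order: promote the new manager first, then demote the old one, so the swarm is never left without a manager. A minimal sketch of that sequence follows; the function name handover_manager is hypothetical, while docker node promote and docker node demote are the commands used above.

```shell
# Hypothetical helper: hand the manager role from one node to another.
# Run on a current manager. The promotion happens first, and the old
# manager is demoted only if the promotion succeeded.
handover_manager() {
    new="$1"
    old="$2"
    if [ -z "$new" ] || [ -z "$old" ]; then
        echo "usage: handover_manager NEW_MANAGER OLD_MANAGER" >&2
        return 1
    fi
    docker node promote "$new" && docker node demote "$old"
}

# Example (on server1), equivalent to the two commands above:
#   handover_manager server2 server1
```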

Have host 1 leave the swarm cluster:

[root@server1 ~]# docker swarm leave

Node left the swarm.

After host 1 leaves the cluster, the manager shows its status as Down. A node that is Down can be removed from the manager:

[root@server2 docker]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

lwl1574190s7dk2m0vwq8fo4u server1 Down Active 19.03.15

zhhp5501wu76cluglinzdv70w * server2 Ready Active Leader 19.03.15

jxlltu5j93daz2k8mhj154gkc server4 Ready Active 19.03.15

k39m95d6c0oc40biyzb5cdyix server3 Ready Active 19.03.15

[root@server2 docker]# docker node rm server1

server1

[root@server2 docker]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

zhhp5501wu76cluglinzdv70w * server2 Ready Active Leader 19.03.15

jxlltu5j93daz2k8mhj154gkc server4 Ready Active 19.03.15

k39m95d6c0oc40biyzb5cdyix server3 Ready Active 19.03.15

Start the registry, then open it in a browser to check that its contents are intact:

[root@server1 harbor]# docker-compose start

Point the other nodes at the local registry:

[root@server3 ~]# cd /etc/docker/

[root@server3 docker]# ls

ca.pem key.json server-key.pem server.pem

[root@server3 docker]# ls

ca.pem certs.d daemon.json key.json server-key.pem server.pem

[root@server3 docker]# cat daemon.json

{

"registry-mirrors": ["https://reg.westos.org"]

}

[root@server3 docker]# docker info

Now create the service again; this time it is created quickly:

[root@server2 docker]# docker service create --name mysrc --replicas 3 -p 80:80 nginx

g43i606dexdivzkizfezr2hws

overall progress: 3 out of 3 tasks

1/3: running

2/3: running

3/3: running

verify: Service converged

[root@server2 docker]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

g43i606dexdi mysrc replicated 3/3 nginx:latest *:80->80/tcp

[root@server2 docker]# docker service ps mysrc

## Show which nodes the service's three containers are running on

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

ym8aya7uzm1f mysrc.1 nginx:latest server2 Running Running about a minute ago

kj8aa1rvvjgr mysrc.2 nginx:latest server4 Running Running about a minute ago

6kaj088cdmfm mysrc.3 nginx:latest server4 Running Running about a minute ago

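The service is reachable now, but every replica returns the same nginx test page, so the effect is hard to see. Upload another image to the registry for testing: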

[root@server1 ~]# docker load -i myapp.tar

d39d92664027: Loading layer 4.232MB/4.232MB

8460a579ab63: Loading layer 11.61MB/11.61MB

c1dc81a64903: Loading layer 3.584kB/3.584kB

68695a6cfd7d: Loading layer 4.608kB/4.608kB

05a9e65e2d53: Loading layer 16.38kB/16.38kB

a0d2c4392b06: Loading layer 7.68kB/7.68kB

Loaded image: ikubernetes/myapp:v1

Loaded image: ikubernetes/myapp:v2

[root@server1 ~]# docker images | grep myapp

ikubernetes/myapp v1 d4a5e0eaa84f 3 years ago 15.5MB

ikubernetes/myapp v2 54202d3f0f35 3 years ago 15.5MB

[root@server1 ~]# docker tag ikubernetes/myapp:v1 reg.westos.org/library/myapp:v1

[root@server1 ~]# docker tag ikubernetes/myapp:v2 reg.westos.org/library/myapp:v2

[root@server1 ~]# docker push reg.westos.org/library/myapp:v1

The push refers to repository [reg.westos.org/library/myapp]

[root@server1 ~]# docker push reg.westos.org/library/myapp:v2

The push refers to repository [reg.westos.org/library/myapp]

Create the service again with the new image to test:

[root@server2 docker]# docker service rm mysrc

mysrc

[root@server2 docker]# docker service create --name mysrc --replicas 3 -p 80:80 myapp:v1

yibrpco1vuc1wziyl8vlskjjh

overall progress: 3 out of 3 tasks

1/3: running

2/3: running

3/3: running

verify: Service converged

Test load balancing. Requests sent to any node are balanced across the replicas:

[root@westos ~]# curl 172.25.25.2

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@westos ~]# curl 172.25.25.2/hostname.html

4fa916724b12

[root@westos ~]# curl 172.25.25.2/hostname.html

451e21d2a9cf

[root@westos ~]# curl 172.25.25.2/hostname.html

0d7e76ef89d2

[root@westos ~]# curl 172.25.25.2/hostname.html

4fa916724b12

[root@westos ~]# curl 172.25.25.2/hostname.html

451e21d2a9cf

[root@westos ~]# curl 172.25.25.2/hostname.html

0d7e76ef89d2

[root@westos ~]# curl 172.25.25.2/hostname.html

4fa916724b12
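Reading the hostnames by eye works for a few requests. For a larger sample, the responses can be tallied per container. The sketch below assumes one hostname per line on standard input; the function name tally_backends is hypothetical.

```shell
# Hypothetical helper: count how many responses each backend container served.
# Reads one container hostname per line on stdin and prints "hostname count".
tally_backends() {
    sort | uniq -c | awk '{ print $2, $1 }'
}

# Example, assuming the service is reachable at 172.25.25.2 as above:
#   for i in $(seq 1 30); do
#       curl -s 172.25.25.2/hostname.html
#   done | tally_backends
```

With three replicas and even balancing, each container ID should appear with roughly the same count.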

Elastic scaling:

[root@server2 docker]# docker service scale mysrc=6

mysrc scaled to 6

overall progress: 6 out of 6 tasks

1/6: running

2/6: running

3/6: running

4/6: running

5/6: running

6/6: running

verify: Service converged

[root@server2 docker]# docker service ps mysrc

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

jwqv0y21uzj2 mysrc.1 myapp:v1 server2 Running Running about a minute ago

1qcjndb06k8c mysrc.2 myapp:v1 server4 Running Running about a minute ago

v86koacmvnjn mysrc.3 myapp:v1 server4 Running Running about a minute ago

oum30ikxxv9g mysrc.4 myapp:v1 server4 Running Running 17 seconds ago

w2r1bksii1xq mysrc.5 myapp:v1 server2 Running Running 17 seconds ago

wvz17t7ao5lg mysrc.6 myapp:v1 server4 Running Running 17 seconds ago

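Test load balancing again; the new replicas are added to the load-balancing pool automatically.

To observe the results more visually, load a monitoring image that provides a graphical view of the swarm: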

[root@server1 ~]# docker load -i visualizer.tar

[root@server1 ~]# docker images | grep visualizer

dockersamples/visualizer latest 17e55a9b2354 3 years ago 148MB

[root@server1 ~]# docker tag dockersamples/visualizer reg.westos.org/library/visualizer:latest

[root@server1 ~]# docker push reg.westos.org/library/visualizer

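Deploy the swarm monitor by creating another service:

[root@server2 ~]# docker service create \
--name=viz --publish=8080:8080/tcp \
--constraint=node.role==manager \
--mount=type=bind,src=/var/run/docker.sock,dst=/var/run/docker.sock \
visualizer:latest

This creates a service named viz that publishes port 8080, is constrained to run on manager nodes, and bind-mounts the host's Docker socket into the container; the last argument is the image name.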

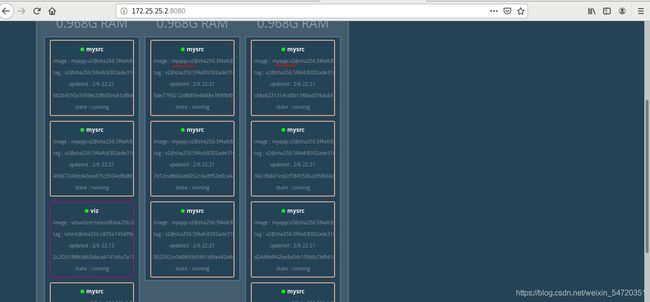

Once it has been created, it can be viewed in a browser:

[root@server2 docker]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

reye7bjec995 mysrc replicated 6/6 myapp:v1 *:80->80/tcp

fvr1j0ck125p viz replicated 1/1 visualizer:latest *:8080->8080/tcp

[root@server2 docker]# docker service scale mysrc=10

[root@server2 docker]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

reye7bjec995 mysrc replicated 10/10 myapp:v1 *:80->80/tcp

fvr1j0ck125p viz replicated 1/1 visualizer:latest *:8080->8080/tcp

[root@server2 docker]# docker service ps mysrc

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

a8iwy0qdhzbh mysrc.1 myapp:v1 server3 Running Running 17 minutes ago

uk3x8pcjoj9u mysrc.2 myapp:v1 server2 Running Running 17 minutes ago

tm2ojnskkk1v mysrc.3 myapp:v1 server4 Running Running 17 minutes ago

nsijhp2jlepu mysrc.4 myapp:v1 server4 Running Running 13 minutes ago

37cjrw61yrg1 mysrc.5 myapp:v1 server3 Running Running 13 minutes ago

nhhvz5ngj8q1 mysrc.6 myapp:v1 server2 Running Running 13 minutes ago

gqx6cp663fnd mysrc.7 myapp:v1 server3 Running Running about a minute ago

jjscxjnz8d66 mysrc.8 myapp:v1 server4 Running Running about a minute ago

spsrme15yedm mysrc.9 myapp:v1 server2 Running Running about a minute ago

klyzs5qblfud mysrc.10 myapp:v1 server3 Running Running about a minute ago

[root@server2 docker]# docker service ps viz

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

iljzp0c1xbkn viz.1 visualizer:latest server2 Running Running 3 minutes ago

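- Rolling updates

docker service update --image httpd --update-parallelism 2 --update-delay 5s my_cluster is an example of the general form; run docker service update --help to see all options.

--image specifies the image to update to

--update-parallelism specifies the maximum number of tasks updated at the same time

--update-delay specifies the delay between updates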

[root@server2 docker]# docker service update --image myapp:v2 --update-parallelism 2 --update-delay 2s mysrc

Requests are now served by v2, and the change is clearly visible on the visualizer page:

[root@westos ~]# curl 172.25.25.2

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
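To get a feel for how --update-parallelism and --update-delay interact, the number of update batches and the total waiting time between them can be estimated with simple arithmetic. This is a rough model only: it assumes the delay applies between batches and ignores image pull and container start time. The function name rollout_plan is hypothetical.

```shell
# Hypothetical helper: rough model of a rolling update.
# Arguments: replicas, parallelism, delay in seconds (all positive integers).
rollout_plan() {
    replicas="$1"
    parallelism="$2"
    delay="$3"
    # ceil(replicas / parallelism) using integer arithmetic
    batches=$(( (replicas + parallelism - 1) / parallelism ))
    # the delay is applied between batches, so there are (batches - 1) waits
    if [ "$batches" -gt 0 ]; then
        wait_total=$(( (batches - 1) * delay ))
    else
        wait_total=0
    fi
    echo "batches=$batches delay_total=${wait_total}s"
}

# The mysrc update above: 10 replicas, 2 at a time, 2s apart
rollout_plan 10 2 2   # prints: batches=5 delay_total=8s
```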

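3. Deploying with docker stack

- docker stack deploys applications on top of Docker Swarm and is aimed at deploying and managing multiple services at large scale.

- Differences between docker stack and docker-compose:

docker stack does not support the build instruction and requires images that have already been built, so docker-compose is better suited to development.

Docker Compose (the docker-compose tool used in this article) is a Python project that operates containers through the Docker API.

docker stack is built into the Docker engine as part of swarm mode.

docker stack does not support docker-compose.yml files written for version 2; the file version must be at least 3. Docker Compose can still handle both version 2 and version 3 files.

docker stack covers the work that docker compose does, so docker stack is expected to become the dominant tool.

- docker stack commands:

Deploy with a compose file: create the file and write the YAML into it.

First remove the services created earlier: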

[root@server2 docker]# docker service rm mysrc

mysrc

[root@server2 docker]# docker service rm viz

viz

Then write the YAML file:

[root@server2 ~]# mkdir compose

[root@server2 ~]# cd compose/

[root@server2 compose]# vim docker-compose.yaml

[root@server2 compose]# cat docker-compose.yaml

version: "3.8"
services:
  mysvc:
    image: nginx:latest
    ports:
      - "80:80"
    deploy:
      resources:              ## resource limits
        limits:               ## upper limits
          cpus: '0.50'        ## CPU limit
          memory: 200M        ## memory limit
        reservations:         ## minimum reserved resources
          cpus: '0.25'
          memory: 50M
      replicas: 3             ## number of replicas
      update_config:
        parallelism: 2        ## update two tasks at a time
        delay: 5s             ## wait 5s between updates
      restart_policy:         ## restart the container automatically when it fails
        condition: on-failure

  visualizer:
    image: visualizer:latest
    ports:                    ## port mapping
      - "8080:8080"
    stop_grace_period: 1m30s
    volumes:                  ## mount
      - "/var/run/docker.sock:/var/run/docker.sock"
    deploy:
      placement:
        constraints:
          - "node.role==manager"   ## restrict to manager nodes

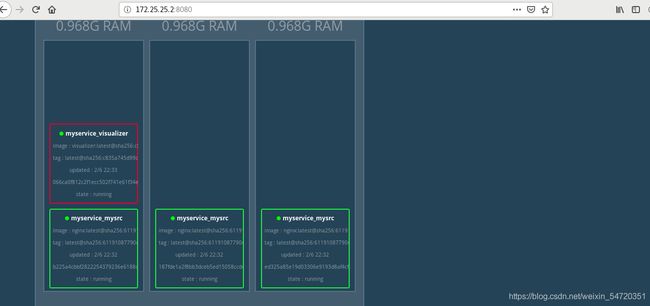

Deploy using the YAML file:

[root@server2 compose]# docker stack deploy -c docker-compose.yaml myservice

Creating network myservice_default

Creating service myservice_mysvc

Creating service myservice_visualizer

[root@server2 compose]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

ylf14u27qpa8 myservice_mysrc replicated 3/3 nginx:latest *:80->80/tcp

zqww2cf6r7ij myservice_visualizer replicated 1/1 visualizer:latest *:8080->8080/tcp

[root@server2 compose]# docker service ps myservice_mysrc

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

ir8qdahngvii myservice_mysrc.1 nginx:latest server2 Running Running 2 minutes ago

rcjtmdkpr312 myservice_mysrc.2 nginx:latest server4 Running Running 2 minutes ago

li4wn2zn0ym4 myservice_mysrc.3 nginx:latest server4 Running Running 2 minutes ago

[root@server2 compose]# docker service ps myservice_visualizer

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

pa2ksznaeahi myservice_visualizer.1 visualizer:latest server2 Running Running about a minute ago
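Besides docker service ls and docker service ps, the stack can also be inspected as a unit through the docker stack subcommands. The sketch below wraps two of them; the function name stack_overview is hypothetical, and the stack name myservice is the one deployed above.

```shell
# Hypothetical helper: show the services and tasks that belong to one stack.
# Run on a manager node.
stack_overview() {
    stack="$1"
    if [ -z "$stack" ]; then
        echo "usage: stack_overview STACK" >&2
        return 1
    fi
    docker stack services "$stack"   # one line per service, with replica counts
    docker stack ps "$stack"         # one line per task, with the node it runs on
}

# Example:
#   stack_overview myservice
#
# Other stack subcommands are used the same way:
#   docker stack ls              # list all stacks
#   docker stack rm myservice    # remove the stack and its services
```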

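4. Visualization with Portainer

A cluster is best managed through a web interface. Load the Portainer graphical tool and push it to the local image registry.

To keep it separate from the other images, create a new project in Harbor for it: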

[root@server1 ~]# docker load -i portainer-agent.tar

[root@server1 ~]# docker load -i portainer.tar

[root@server1 ~]# docker images | grep portainer

portainer/portainer latest 19d07168491a 2 years ago 74.1MB

portainer/agent latest 9335796fedf9 2 years ago 12.4MB

[root@server1 ~]# docker tag portainer/portainer:latest reg.westos.org/portainer/portainer:latest

[root@server1 ~]# docker tag portainer/agent:latest reg.westos.org/portainer/agent:latest

[root@server1 ~]# docker push reg.westos.org/portainer/portainer

[root@server1 ~]# docker push reg.westos.org/portainer/agent:latest

On the manager node, write the YAML file. Here the server and all agents share one network:

[root@server2 ~]# ls

compose haproxy.tar portainer-agent-stack.yml

[root@server2 ~]# vim portainer-agent-stack.yml

version: '3.2'

services:
  agent:
    image: portainer/agent
    environment:
      # REQUIRED: Should be equal to the service name prefixed by "tasks." when
      # deployed inside an overlay network
      AGENT_CLUSTER_ADDR: tasks.agent
      # AGENT_PORT: 9001
      # LOG_LEVEL: debug
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - /var/lib/docker/volumes:/var/lib/docker/volumes
    networks:
      - agent_network
    deploy:
      mode: global
      placement:
        constraints: [node.platform.os == linux]

  portainer:
    image: portainer/portainer
    command: -H tcp://tasks.agent:9001 --tlsskipverify
    ports:
      - "9000:9000"
    volumes:
      - portainer_data:/data
    networks:
      - agent_network
    deploy:
      mode: replicated
      replicas: 1
      placement:
        constraints: [node.role == manager]

networks:
  agent_network:
    driver: overlay
    attachable: true

volumes:
  portainer_data:

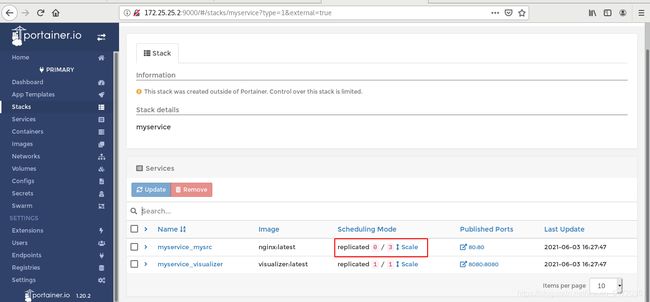

Start the deployment:

[root@server2 ~]# docker stack deploy -c portainer-agent-stack.yml portainer

Creating network portainer_agent_network

Creating service portainer_portainer

Creating service portainer_agent

[root@server2 ~]# docker stack ls

NAME SERVICES ORCHESTRATOR

myservice 2 Swarm

portainer 2 Swarm

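The interface is now reachable in a browser at http://172.25.25.2:9000. The first login forces you to set a password.

Services can then be scaled from the graphical interface.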