Multi-Stage Progressive Image Restoration

代码

Multi-stage progressive image restoration

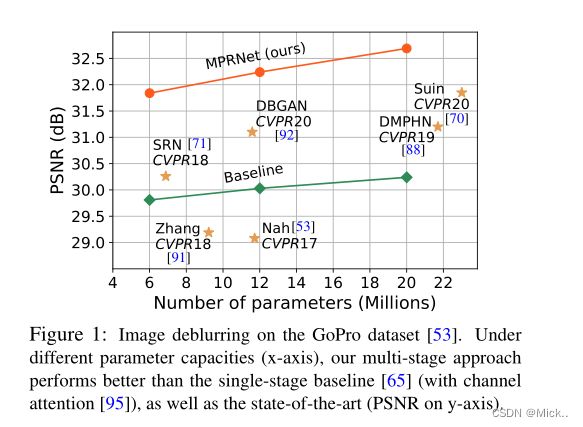

摘要:作者提出了多阶段架构,逐步学习目标函数。具体来说,作者首先使用编码器解码器架构学习上下文特征,然后在保持局部信息的高分辨率分支融合它们。在每个阶段,作者引入了像素自适应设计,该设计利用监督注意力重新加权局部特征。这种多阶段架构的一个关键要素是不同阶段之间的信息交换。为此,我们提出了一种双向方法,其中信息不仅从早期到晚期依次交换,而且特征处理块之间还存在横向连接,以避免任何信息丢失。由此产生的紧密链接的多阶段体系结构称为MPRNet。

引言

图像恢复是个不适定问题,为了限制解空间,通常会根据经验设计先验,但是设计这种先验知识是一种挑战。为了缓解这个问题,现在大多数方法都使用卷积神经网络从大规模数据中学习普遍的先验知识。

CNN方法超越其他方法主要是源于模型设计。常见的模型设计方法有递归残差学习、扩展卷积、注意力机制、密集连接、编码器-解码器、生成模型。所有低水平视觉问题都是基于单阶段设计,相比于高层次视觉问题(比如说 姿势估计、场景解析、动作分割),几乎都是多阶段的网络模型。

最近很少有人在图像恢复领域应用多级设计。作者分析了为什么会出现这种情况。首先多阶段技术常用于编码-解码架构,这在编码传递上下文信息是有效的但是在保持空间细节是不可靠的。或者在单尺度pipeline中提供了准确的空间信息,但是存在语义信息上的不可靠。但是这两种的组合在图像恢复领域是可靠的。然后,单一的将一个阶段的信息传递给下一阶段会产生次优的结果。第三,在每个阶段提供真实图像的监督对于渐进恢复是重要的。最后,在多阶段处理中为了保持编码器-解码器分支的上下文特征,需要传播早期阶段到最后阶段的中间特征。

作者提出了一种多阶段渐进式图像恢复架构,称为MPRNet,具有几个关键组件。

1 早期阶段利用编码器-解码器架构学习多尺度上下文信息,最后阶段对原始图像进行处理为了保持精细的空间细节信息。

2 在每两个阶段插入监督注意力模块(SAM),以实现渐进式学习。在真实图像的指导下,该模块利用前一阶段的预测去计算注意力图。相反,这一阶段的注意力图在传递到下一阶段之前微调前一阶段的特征。

3 跨阶段特征融合机制(CSFF),帮助传播从前期阶段到后期阶段的多尺度上下文特征。此外,这种方法简化了各个阶段的信息流,这对于稳定多阶段网络优化是有效的。

这篇文章的主要贡献。

1 一种新的多阶段方法,能够产生具有丰富的上下文和精确的空间信息的输出。基于多阶段,本文提出的框架可以将复杂的图像恢复任务分解为多个子任务,以逐步恢复退化图像。

2 一个有效的监督注意力模块,在每个阶段充分利用已经恢复得图片。

3 跨阶段聚合多尺度特征得策略。

4 在十个合成的或者真实的数据集上验证了模型的有效性,和较低的复杂度。同时,作者还提供了消融实验,定性结果和泛化测试。

相关工作

由于硬件或者环境的影响,智能手机很难拍出比较清晰的高质量图片。早期的图像恢复方法都是基于全变分、稀疏编码、自相似性、梯度先验等等。近几年来,基于CNN的恢复方法获得了比较好的结果。就结构设计,那些方法大致可以分为单阶段和多阶段。

单阶段方法

目前,大多数图像恢复方法都是基于单阶段设计的,并且大多数结构都是基于高级视觉任务开发的。比如,残差学习被用于图像去噪、图像去模糊、图像去雨。同样的,为了提取多尺度信息,引入了编码器-解码器和扩展卷积。其他单阶段方法也包含了密集连接。

多阶段方法

在每个阶段利用轻量级子网络,以渐进的方式恢复图像。这种方式是有效的,因为这将一个复杂的图像恢复问题分解为多个更容易的小任务。

注意力机制

注意力机制在高级视觉(图像分类、分割、检测)任务领域效果非常好,目前在低级视觉任务中应用也十分广泛。注意力主要是捕捉空间维度或通道维度的long-range inter-dependencies。

多阶段渐进式恢复

框架包含三个部分。前两个阶段基于编码器-解码器子网络,学习上下文信息。图像恢复是一项对于位置十分敏感的任务(需要输入到输出像素级的对应关系),最后一级利用子网络对原来输入进行处理,没有任何下采样,因此输出图像保留了所有的精细纹理。

不是简单的级联多个阶段,而是在两个阶段之间加入一个监督注意模块。在真实图片的监督下,模块将前一阶段的特征图传递到下一阶段之前,模块将缩放特征图。此外,我们引入了一种跨阶段特征融合机制,其中早期子网络的中间多尺度上下文特征有助于巩固后一个子网络的中间特征。

虽然MPRNet堆叠了多个阶段,但每个阶段都可以访问输入图像。

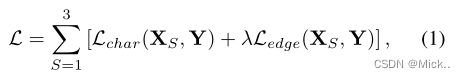

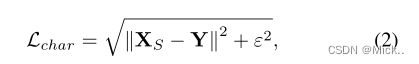

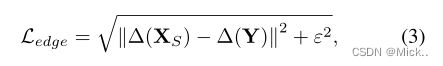

损失函数

∆ 代表拉普拉斯算子。

![]()

![]() 代表恢复的图像

代表恢复的图像

![]() 代表残差图像

代表残差图像

##########################################################################

class MPRNet(nn.Module):

def __init__(self, in_c=3, out_c=3, n_feat=80, scale_unetfeats=48, scale_orsnetfeats=32, num_cab=8, kernel_size=3, reduction=4, bias=False):

super(MPRNet, self).__init__()

act=nn.PReLU()

self.shallow_feat1 = nn.Sequential(conv(in_c, n_feat, kernel_size, bias=bias), CAB(n_feat,kernel_size, reduction, bias=bias, act=act))

self.shallow_feat2 = nn.Sequential(conv(in_c, n_feat, kernel_size, bias=bias), CAB(n_feat,kernel_size, reduction, bias=bias, act=act))

self.shallow_feat3 = nn.Sequential(conv(in_c, n_feat, kernel_size, bias=bias), CAB(n_feat,kernel_size, reduction, bias=bias, act=act))

# Cross Stage Feature Fusion (CSFF)

self.stage1_encoder = Encoder(n_feat, kernel_size, reduction, act, bias, scale_unetfeats, csff=False)

self.stage1_decoder = Decoder(n_feat, kernel_size, reduction, act, bias, scale_unetfeats)

self.stage2_encoder = Encoder(n_feat, kernel_size, reduction, act, bias, scale_unetfeats, csff=True)

self.stage2_decoder = Decoder(n_feat, kernel_size, reduction, act, bias, scale_unetfeats)

self.stage3_orsnet = ORSNet(n_feat, scale_orsnetfeats, kernel_size, reduction, act, bias, scale_unetfeats, num_cab)

self.sam12 = SAM(n_feat, kernel_size=1, bias=bias)

self.sam23 = SAM(n_feat, kernel_size=1, bias=bias)

self.concat12 = conv(n_feat*2, n_feat, kernel_size, bias=bias)

self.concat23 = conv(n_feat*2, n_feat+scale_orsnetfeats, kernel_size, bias=bias)

self.tail = conv(n_feat+scale_orsnetfeats, out_c, kernel_size, bias=bias)

def forward(self, x3_img):

# Original-resolution Image for Stage 3

H = x3_img.size(2)

W = x3_img.size(3)

# Multi-Patch Hierarchy: Split Image into four non-overlapping patches

# Two Patches for Stage 2

x2top_img = x3_img[:,:,0:int(H/2),:]

x2bot_img = x3_img[:,:,int(H/2):H,:]

# Four Patches for Stage 1

x1ltop_img = x2top_img[:,:,:,0:int(W/2)]

x1rtop_img = x2top_img[:,:,:,int(W/2):W]

x1lbot_img = x2bot_img[:,:,:,0:int(W/2)]

x1rbot_img = x2bot_img[:,:,:,int(W/2):W]

##-------------------------------------------

##-------------- Stage 1---------------------

##-------------------------------------------

## Compute Shallow Features

x1ltop = self.shallow_feat1(x1ltop_img)

x1rtop = self.shallow_feat1(x1rtop_img)

x1lbot = self.shallow_feat1(x1lbot_img)

x1rbot = self.shallow_feat1(x1rbot_img)

## Process features of all 4 patches with Encoder of Stage 1

feat1_ltop = self.stage1_encoder(x1ltop)

feat1_rtop = self.stage1_encoder(x1rtop)

feat1_lbot = self.stage1_encoder(x1lbot)

feat1_rbot = self.stage1_encoder(x1rbot)

## Concat deep features

feat1_top = [torch.cat((k,v), 3) for k,v in zip(feat1_ltop,feat1_rtop)]

feat1_bot = [torch.cat((k,v), 3) for k,v in zip(feat1_lbot,feat1_rbot)]

## Pass features through Decoder of Stage 1

res1_top = self.stage1_decoder(feat1_top)

res1_bot = self.stage1_decoder(feat1_bot)

## Apply Supervised Attention Module (SAM)

x2top_samfeats, stage1_img_top = self.sam12(res1_top[0], x2top_img)

x2bot_samfeats, stage1_img_bot = self.sam12(res1_bot[0], x2bot_img)

## Output image at Stage 1

stage1_img = torch.cat([stage1_img_top, stage1_img_bot],2)

##-------------------------------------------

##-------------- Stage 2---------------------

##-------------------------------------------

## Compute Shallow Features

x2top = self.shallow_feat2(x2top_img)

x2bot = self.shallow_feat2(x2bot_img)

## Concatenate SAM features of Stage 1 with shallow features of Stage 2

x2top_cat = self.concat12(torch.cat([x2top, x2top_samfeats], 1))

x2bot_cat = self.concat12(torch.cat([x2bot, x2bot_samfeats], 1))

## Process features of both patches with Encoder of Stage 2

feat2_top = self.stage2_encoder(x2top_cat, feat1_top, res1_top)

feat2_bot = self.stage2_encoder(x2bot_cat, feat1_bot, res1_bot)

## Concat deep features

feat2 = [torch.cat((k,v), 2) for k,v in zip(feat2_top,feat2_bot)]

## Pass features through Decoder of Stage 2

res2 = self.stage2_decoder(feat2)

## Apply SAM

x3_samfeats, stage2_img = self.sam23(res2[0], x3_img)

##-------------------------------------------

##-------------- Stage 3---------------------

##-------------------------------------------

## Compute Shallow Features

x3 = self.shallow_feat3(x3_img)

## Concatenate SAM features of Stage 2 with shallow features of Stage 3

x3_cat = self.concat23(torch.cat([x3, x3_samfeats], 1))

x3_cat = self.stage3_orsnet(x3_cat, feat2, res2)

stage3_img = self.tail(x3_cat)

return [stage3_img+x3_img, stage2_img, stage1_img]

特征处理

encoder-decoder

用于图像恢复的现有单阶段CNN通常使用以下架构设计之一:1:编码器-解码器 2. 一个单尺度特征pipeline。编码器-解码器首先将输入映射到低分辨率空间,然后逐步恢复到原来的分辨率。虽然这些模型可以有效地编码多尺度信息,但是重复了应用下采样操作,容易损失空间细节。但是,由于感受野有限,输出在语义上是不可靠的。所以这说明了编码器-解码器架构固有的局限性,并不能产生空间精确并且语义可靠的输出。为了充分利用这两种设计的优点,我们提出了一种多阶段框架,其中早期阶段包含编码器-解码器网络,最终阶段采用基于原始输入的网络。

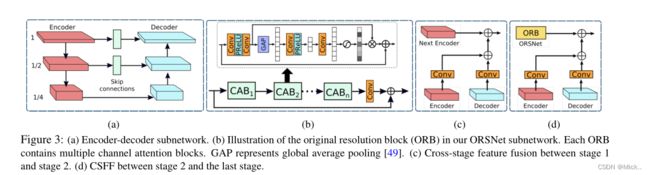

编码器-解码器子网络

编码器-解码器子网络是基于标准U-Net。首先添加了通道注意力模块在每个阶段提取特征。通道注意力模块(CABs)见图3b。然后,在U-Net跳跃连接处的特征图被传入CAB处理。最后,在解码器中不是使用反卷积增加特征图的空间分辨率,而是使用双线性插值上采样和卷积层。这有助于减少输出图像中因为反卷积而出现的棋盘效应。

##########################################################################

## U-Net

class Encoder(nn.Module):

def __init__(self, n_feat, kernel_size, reduction, act, bias, scale_unetfeats, csff):

super(Encoder, self).__init__()

self.encoder_level1 = [CAB(n_feat, kernel_size, reduction, bias=bias, act=act) for _ in range(2)]

self.encoder_level2 = [CAB(n_feat+scale_unetfeats, kernel_size, reduction, bias=bias, act=act) for _ in range(2)]

self.encoder_level3 = [CAB(n_feat+(scale_unetfeats*2), kernel_size, reduction, bias=bias, act=act) for _ in range(2)]

self.encoder_level1 = nn.Sequential(*self.encoder_level1)

self.encoder_level2 = nn.Sequential(*self.encoder_level2)

self.encoder_level3 = nn.Sequential(*self.encoder_level3)

self.down12 = DownSample(n_feat, scale_unetfeats)

self.down23 = DownSample(n_feat+scale_unetfeats, scale_unetfeats)

# Cross Stage Feature Fusion (CSFF)

if csff:

self.csff_enc1 = nn.Conv2d(n_feat, n_feat, kernel_size=1, bias=bias)

self.csff_enc2 = nn.Conv2d(n_feat+scale_unetfeats, n_feat+scale_unetfeats, kernel_size=1, bias=bias)

self.csff_enc3 = nn.Conv2d(n_feat+(scale_unetfeats*2), n_feat+(scale_unetfeats*2), kernel_size=1, bias=bias)

self.csff_dec1 = nn.Conv2d(n_feat, n_feat, kernel_size=1, bias=bias)

self.csff_dec2 = nn.Conv2d(n_feat+scale_unetfeats, n_feat+scale_unetfeats, kernel_size=1, bias=bias)

self.csff_dec3 = nn.Conv2d(n_feat+(scale_unetfeats*2), n_feat+(scale_unetfeats*2), kernel_size=1, bias=bias)

def forward(self, x, encoder_outs=None, decoder_outs=None):

enc1 = self.encoder_level1(x)

if (encoder_outs is not None) and (decoder_outs is not None):

enc1 = enc1 + self.csff_enc1(encoder_outs[0]) + self.csff_dec1(decoder_outs[0])

x = self.down12(enc1)

enc2 = self.encoder_level2(x)

if (encoder_outs is not None) and (decoder_outs is not None):

enc2 = enc2 + self.csff_enc2(encoder_outs[1]) + self.csff_dec2(decoder_outs[1])

x = self.down23(enc2)

enc3 = self.encoder_level3(x)

if (encoder_outs is not None) and (decoder_outs is not None):

enc3 = enc3 + self.csff_enc3(encoder_outs[2]) + self.csff_dec3(decoder_outs[2])

return [enc1, enc2, enc3]

class Decoder(nn.Module):

def __init__(self, n_feat, kernel_size, reduction, act, bias, scale_unetfeats):

super(Decoder, self).__init__()

self.decoder_level1 = [CAB(n_feat, kernel_size, reduction, bias=bias, act=act) for _ in range(2)]

self.decoder_level2 = [CAB(n_feat+scale_unetfeats, kernel_size, reduction, bias=bias, act=act) for _ in range(2)]

self.decoder_level3 = [CAB(n_feat+(scale_unetfeats*2), kernel_size, reduction, bias=bias, act=act) for _ in range(2)]

self.decoder_level1 = nn.Sequential(*self.decoder_level1)

self.decoder_level2 = nn.Sequential(*self.decoder_level2)

self.decoder_level3 = nn.Sequential(*self.decoder_level3)

self.skip_attn1 = CAB(n_feat, kernel_size, reduction, bias=bias, act=act)

self.skip_attn2 = CAB(n_feat+scale_unetfeats, kernel_size, reduction, bias=bias, act=act)

self.up21 = SkipUpSample(n_feat, scale_unetfeats)

self.up32 = SkipUpSample(n_feat+scale_unetfeats, scale_unetfeats)

def forward(self, outs):

enc1, enc2, enc3 = outs

dec3 = self.decoder_level3(enc3)

x = self.up32(dec3, self.skip_attn2(enc2))

dec2 = self.decoder_level2(x)

x = self.up21(dec2, self.skip_attn1(enc1))

dec1 = self.decoder_level1(x)

return [dec1,dec2,dec3]

##########################################################################

##---------- Resizing Modules ----------

class DownSample(nn.Module):

def __init__(self, in_channels,s_factor):

super(DownSample, self).__init__()

self.down = nn.Sequential(nn.Upsample(scale_factor=0.5, mode='bilinear', align_corners=False),

nn.Conv2d(in_channels, in_channels+s_factor, 1, stride=1, padding=0, bias=False))

def forward(self, x):

x = self.down(x)

return x

class UpSample(nn.Module):

def __init__(self, in_channels,s_factor):

super(UpSample, self).__init__()

self.up = nn.Sequential(nn.Upsample(scale_factor=2, mode='bilinear', align_corners=False),

nn.Conv2d(in_channels+s_factor, in_channels, 1, stride=1, padding=0, bias=False))

def forward(self, x):

x = self.up(x)

return x

class SkipUpSample(nn.Module):

def __init__(self, in_channels,s_factor):

super(SkipUpSample, self).__init__()

self.up = nn.Sequential(nn.Upsample(scale_factor=2, mode='bilinear', align_corners=False),

nn.Conv2d(in_channels+s_factor, in_channels, 1, stride=1, padding=0, bias=False))

def forward(self, x, y):

x = self.up(x)

x = x + y

return x

Original Resolution Subnetwork (ORSNet)

为了从输入图像到输出图像的精细细节,作者在最后阶段引入了原始分辨率子网络(OSRNet)。OSRNet没有利用任何下采样操作并且生成空间丰富的高分辨率特征。.它包含多个原始分辨率块(ORB),每个ORB包含CABs。ORB见图3b。

##########################################################################

## Original Resolution Block (ORB)

class ORB(nn.Module):

def __init__(self, n_feat, kernel_size, reduction, act, bias, num_cab):

super(ORB, self).__init__()

modules_body = []

modules_body = [CAB(n_feat, kernel_size, reduction, bias=bias, act=act) for _ in range(num_cab)]

modules_body.append(conv(n_feat, n_feat, kernel_size))

self.body = nn.Sequential(*modules_body)

def forward(self, x):

res = self.body(x)

res += x

return res

##########################################################################

class ORSNet(nn.Module):

def __init__(self, n_feat, scale_orsnetfeats, kernel_size, reduction, act, bias, scale_unetfeats, num_cab):

super(ORSNet, self).__init__()

self.orb1 = ORB(n_feat+scale_orsnetfeats, kernel_size, reduction, act, bias, num_cab)

self.orb2 = ORB(n_feat+scale_orsnetfeats, kernel_size, reduction, act, bias, num_cab)

self.orb3 = ORB(n_feat+scale_orsnetfeats, kernel_size, reduction, act, bias, num_cab)

self.up_enc1 = UpSample(n_feat, scale_unetfeats)

self.up_dec1 = UpSample(n_feat, scale_unetfeats)

self.up_enc2 = nn.Sequential(UpSample(n_feat+scale_unetfeats, scale_unetfeats), UpSample(n_feat, scale_unetfeats))

self.up_dec2 = nn.Sequential(UpSample(n_feat+scale_unetfeats, scale_unetfeats), UpSample(n_feat, scale_unetfeats))

self.conv_enc1 = nn.Conv2d(n_feat, n_feat+scale_orsnetfeats, kernel_size=1, bias=bias)

self.conv_enc2 = nn.Conv2d(n_feat, n_feat+scale_orsnetfeats, kernel_size=1, bias=bias)

self.conv_enc3 = nn.Conv2d(n_feat, n_feat+scale_orsnetfeats, kernel_size=1, bias=bias)

self.conv_dec1 = nn.Conv2d(n_feat, n_feat+scale_orsnetfeats, kernel_size=1, bias=bias)

self.conv_dec2 = nn.Conv2d(n_feat, n_feat+scale_orsnetfeats, kernel_size=1, bias=bias)

self.conv_dec3 = nn.Conv2d(n_feat, n_feat+scale_orsnetfeats, kernel_size=1, bias=bias)

def forward(self, x, encoder_outs, decoder_outs):

x = self.orb1(x)

x = x + self.conv_enc1(encoder_outs[0]) + self.conv_dec1(decoder_outs[0])

x = self.orb2(x)

x = x + self.conv_enc2(self.up_enc1(encoder_outs[1])) + self.conv_dec2(self.up_dec1(decoder_outs[1]))

x = self.orb3(x)

x = x + self.conv_enc3(self.up_enc2(encoder_outs[2])) + self.conv_dec3(self.up_dec2(decoder_outs[2]))

return x

跨阶段特征融合(CSFF)

在框架中,我们在两个编码器-解码器之间(见图3c)以及编码器-解码器和ORSNet之间(见图3d)引入了CSFF模块。请注意,一个阶段的特征首先通过1×1卷积进行细化,然后再传播到下一阶段进行聚合。CSFF模块具有以下几个优点。首先,由于在编码器-解码器中重复使用上下采样操作,它使网络不易受到信息丢失的影响。第二,一个阶段的多尺度特征有助于丰富下一阶段的特征。第三,网络优化过程变得更加稳定,因为它简化了信息流,从而允许我们在整体架构中添加几个阶段。

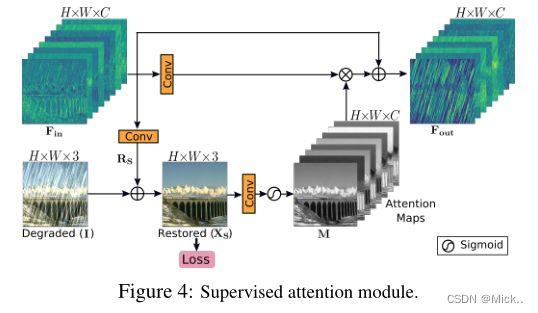

Supervised Attention Module(监督注意力模块)

最近的图像恢复多级网络直接预测每个阶段的图像,然后将其传递到下一个连续阶段。相反,我们在每两个阶段之间引入了监督注意力模块,这有助于实现显著的性能提升。首先,它提供了对每个阶段的渐进图像恢复有用的真实监控信号。其次,在局部监督预测的帮助下,我们生成注意力图,以抑制当前阶段信息量较小的特征,只允许有用的特征传播到下一阶段。

SAM模块接收早期阶段的输入特征并且生成残差图像。残差图像和退化图像相加得到恢复图像。为了预测图像![]() ,提供真实图像作为监督。然后,由

,提供真实图像作为监督。然后,由![]() 产生逐像素注意力掩码M。M用来校准1*1卷积后的输入特征,产生注意力特征,然后与原始特征图相加。最后,SAM生成的注意力增强特征表示Fout被传递到下一阶段进行进一步处理。

产生逐像素注意力掩码M。M用来校准1*1卷积后的输入特征,产生注意力特征,然后与原始特征图相加。最后,SAM生成的注意力增强特征表示Fout被传递到下一阶段进行进一步处理。

## Supervised Attention Module

class SAM(nn.Module):

def __init__(self, n_feat, kernel_size, bias):

super(SAM, self).__init__()

self.conv1 = conv(n_feat, n_feat, kernel_size, bias=bias)

self.conv2 = conv(n_feat, 3, kernel_size, bias=bias)

self.conv3 = conv(3, n_feat, kernel_size, bias=bias)

def forward(self, x, x_img):##x_img表示退化图像

x1 = self.conv1(x)

img = self.conv2(x) + x_img

x2 = torch.sigmoid(self.conv3(img)) ###表示M矩阵

x1 = x1*x2

x1 = x1+x

return x1, img

全部模型代码

import torch

import torch.nn as nn

import torch.nn.functional as F

##########################################################################

def conv(in_channels, out_channels, kernel_size, bias=False, stride = 1): ##卷积层

return nn.Conv2d(

in_channels, out_channels, kernel_size,

padding=(kernel_size//2), bias=bias, stride = stride)

##########################################################################

## Channel Attention Layer ##通道注意力层

class CALayer(nn.Module):

def __init__(self, channel, reduction=16, bias=False):

super(CALayer, self).__init__()

# global average pooling: feature --> point

self.avg_pool = nn.AdaptiveAvgPool2d(1)

# feature channel downscale and upscale --> channel weight

self.conv_du = nn.Sequential(

nn.Conv2d(channel, channel // reduction, 1, padding=0, bias=bias),

nn.ReLU(inplace=True),

nn.Conv2d(channel // reduction, channel, 1, padding=0, bias=bias),

nn.Sigmoid()

)

def forward(self, x):

y = self.avg_pool(x)

y = self.conv_du(y)

return x * y

##########################################################################

## Channel Attention Block (CAB)

class CAB(nn.Module):

def __init__(self, n_feat, kernel_size, reduction, bias, act):

super(CAB, self).__init__()

modules_body = []

modules_body.append(conv(n_feat, n_feat, kernel_size, bias=bias))

modules_body.append(act)

modules_body.append(conv(n_feat, n_feat, kernel_size, bias=bias))

self.CA = CALayer(n_feat, reduction, bias=bias)

self.body = nn.Sequential(*modules_body)

def forward(self, x):

res = self.body(x)

res = self.CA(res)

res += x

return res

##########################################################################

## Supervised Attention Module

class SAM(nn.Module):

def __init__(self, n_feat, kernel_size, bias):

super(SAM, self).__init__()

self.conv1 = conv(n_feat, n_feat, kernel_size, bias=bias)

self.conv2 = conv(n_feat, 3, kernel_size, bias=bias)

self.conv3 = conv(3, n_feat, kernel_size, bias=bias)

def forward(self, x, x_img):

x1 = self.conv1(x)

img = self.conv2(x) + x_img

x2 = torch.sigmoid(self.conv3(img))

x1 = x1*x2

x1 = x1+x

return x1, img

##########################################################################

## U-Net

class Encoder(nn.Module):

def __init__(self, n_feat, kernel_size, reduction, act, bias, scale_unetfeats, csff):

super(Encoder, self).__init__()

self.encoder_level1 = [CAB(n_feat, kernel_size, reduction, bias=bias, act=act) for _ in range(2)]

self.encoder_level2 = [CAB(n_feat+scale_unetfeats, kernel_size, reduction, bias=bias, act=act) for _ in range(2)]

self.encoder_level3 = [CAB(n_feat+(scale_unetfeats*2), kernel_size, reduction, bias=bias, act=act) for _ in range(2)]

self.encoder_level1 = nn.Sequential(*self.encoder_level1)

self.encoder_level2 = nn.Sequential(*self.encoder_level2)

self.encoder_level3 = nn.Sequential(*self.encoder_level3)

self.down12 = DownSample(n_feat, scale_unetfeats)

self.down23 = DownSample(n_feat+scale_unetfeats, scale_unetfeats)

# Cross Stage Feature Fusion (CSFF)

if csff:

self.csff_enc1 = nn.Conv2d(n_feat, n_feat, kernel_size=1, bias=bias)

self.csff_enc2 = nn.Conv2d(n_feat+scale_unetfeats, n_feat+scale_unetfeats, kernel_size=1, bias=bias)

self.csff_enc3 = nn.Conv2d(n_feat+(scale_unetfeats*2), n_feat+(scale_unetfeats*2), kernel_size=1, bias=bias)

self.csff_dec1 = nn.Conv2d(n_feat, n_feat, kernel_size=1, bias=bias)

self.csff_dec2 = nn.Conv2d(n_feat+scale_unetfeats, n_feat+scale_unetfeats, kernel_size=1, bias=bias)

self.csff_dec3 = nn.Conv2d(n_feat+(scale_unetfeats*2), n_feat+(scale_unetfeats*2), kernel_size=1, bias=bias)

def forward(self, x, encoder_outs=None, decoder_outs=None):

enc1 = self.encoder_level1(x)

if (encoder_outs is not None) and (decoder_outs is not None):

enc1 = enc1 + self.csff_enc1(encoder_outs[0]) + self.csff_dec1(decoder_outs[0])

x = self.down12(enc1)

enc2 = self.encoder_level2(x)

if (encoder_outs is not None) and (decoder_outs is not None):

enc2 = enc2 + self.csff_enc2(encoder_outs[1]) + self.csff_dec2(decoder_outs[1])

x = self.down23(enc2)

enc3 = self.encoder_level3(x)

if (encoder_outs is not None) and (decoder_outs is not None):

enc3 = enc3 + self.csff_enc3(encoder_outs[2]) + self.csff_dec3(decoder_outs[2])

return [enc1, enc2, enc3]

class Decoder(nn.Module):

def __init__(self, n_feat, kernel_size, reduction, act, bias, scale_unetfeats):

super(Decoder, self).__init__()

self.decoder_level1 = [CAB(n_feat, kernel_size, reduction, bias=bias, act=act) for _ in range(2)]

self.decoder_level2 = [CAB(n_feat+scale_unetfeats, kernel_size, reduction, bias=bias, act=act) for _ in range(2)]

self.decoder_level3 = [CAB(n_feat+(scale_unetfeats*2), kernel_size, reduction, bias=bias, act=act) for _ in range(2)]

self.decoder_level1 = nn.Sequential(*self.decoder_level1)

self.decoder_level2 = nn.Sequential(*self.decoder_level2)

self.decoder_level3 = nn.Sequential(*self.decoder_level3)

self.skip_attn1 = CAB(n_feat, kernel_size, reduction, bias=bias, act=act)

self.skip_attn2 = CAB(n_feat+scale_unetfeats, kernel_size, reduction, bias=bias, act=act)

self.up21 = SkipUpSample(n_feat, scale_unetfeats)

self.up32 = SkipUpSample(n_feat+scale_unetfeats, scale_unetfeats)

def forward(self, outs):

enc1, enc2, enc3 = outs

dec3 = self.decoder_level3(enc3)

x = self.up32(dec3, self.skip_attn2(enc2))

dec2 = self.decoder_level2(x)

x = self.up21(dec2, self.skip_attn1(enc1))

dec1 = self.decoder_level1(x)

return [dec1,dec2,dec3]

##########################################################################

##---------- Resizing Modules ----------

class DownSample(nn.Module):

def __init__(self, in_channels,s_factor):

super(DownSample, self).__init__()

self.down = nn.Sequential(nn.Upsample(scale_factor=0.5, mode='bilinear', align_corners=False),

nn.Conv2d(in_channels, in_channels+s_factor, 1, stride=1, padding=0, bias=False))

def forward(self, x):

x = self.down(x)

return x

class UpSample(nn.Module):

def __init__(self, in_channels,s_factor):

super(UpSample, self).__init__()

self.up = nn.Sequential(nn.Upsample(scale_factor=2, mode='bilinear', align_corners=False),

nn.Conv2d(in_channels+s_factor, in_channels, 1, stride=1, padding=0, bias=False))

def forward(self, x):

x = self.up(x)

return x

class SkipUpSample(nn.Module):

def __init__(self, in_channels,s_factor):

super(SkipUpSample, self).__init__()

self.up = nn.Sequential(nn.Upsample(scale_factor=2, mode='bilinear', align_corners=False),

nn.Conv2d(in_channels+s_factor, in_channels, 1, stride=1, padding=0, bias=False))

def forward(self, x, y):

x = self.up(x)

x = x + y

return x

##########################################################################

## Original Resolution Block (ORB)

class ORB(nn.Module):

def __init__(self, n_feat, kernel_size, reduction, act, bias, num_cab):

super(ORB, self).__init__()

modules_body = []

modules_body = [CAB(n_feat, kernel_size, reduction, bias=bias, act=act) for _ in range(num_cab)]

modules_body.append(conv(n_feat, n_feat, kernel_size))

self.body = nn.Sequential(*modules_body)

def forward(self, x):

res = self.body(x)

res += x

return res

##########################################################################

class ORSNet(nn.Module):

def __init__(self, n_feat, scale_orsnetfeats, kernel_size, reduction, act, bias, scale_unetfeats, num_cab):

super(ORSNet, self).__init__()

self.orb1 = ORB(n_feat+scale_orsnetfeats, kernel_size, reduction, act, bias, num_cab)

self.orb2 = ORB(n_feat+scale_orsnetfeats, kernel_size, reduction, act, bias, num_cab)

self.orb3 = ORB(n_feat+scale_orsnetfeats, kernel_size, reduction, act, bias, num_cab)

self.up_enc1 = UpSample(n_feat, scale_unetfeats)

self.up_dec1 = UpSample(n_feat, scale_unetfeats)

self.up_enc2 = nn.Sequential(UpSample(n_feat+scale_unetfeats, scale_unetfeats), UpSample(n_feat, scale_unetfeats))

self.up_dec2 = nn.Sequential(UpSample(n_feat+scale_unetfeats, scale_unetfeats), UpSample(n_feat, scale_unetfeats))

self.conv_enc1 = nn.Conv2d(n_feat, n_feat+scale_orsnetfeats, kernel_size=1, bias=bias)

self.conv_enc2 = nn.Conv2d(n_feat, n_feat+scale_orsnetfeats, kernel_size=1, bias=bias)

self.conv_enc3 = nn.Conv2d(n_feat, n_feat+scale_orsnetfeats, kernel_size=1, bias=bias)

self.conv_dec1 = nn.Conv2d(n_feat, n_feat+scale_orsnetfeats, kernel_size=1, bias=bias)

self.conv_dec2 = nn.Conv2d(n_feat, n_feat+scale_orsnetfeats, kernel_size=1, bias=bias)

self.conv_dec3 = nn.Conv2d(n_feat, n_feat+scale_orsnetfeats, kernel_size=1, bias=bias)

def forward(self, x, encoder_outs, decoder_outs):

x = self.orb1(x)

x = x + self.conv_enc1(encoder_outs[0]) + self.conv_dec1(decoder_outs[0])

x = self.orb2(x)

x = x + self.conv_enc2(self.up_enc1(encoder_outs[1])) + self.conv_dec2(self.up_dec1(decoder_outs[1]))

x = self.orb3(x)

x = x + self.conv_enc3(self.up_enc2(encoder_outs[2])) + self.conv_dec3(self.up_dec2(decoder_outs[2]))

return x

##########################################################################

class MPRNet(nn.Module):

def __init__(self, in_c=3, out_c=3, n_feat=80, scale_unetfeats=48, scale_orsnetfeats=32, num_cab=8, kernel_size=3, reduction=4, bias=False):

super(MPRNet, self).__init__()

act=nn.PReLU()

self.shallow_feat1 = nn.Sequential(conv(in_c, n_feat, kernel_size, bias=bias), CAB(n_feat,kernel_size, reduction, bias=bias, act=act))

self.shallow_feat2 = nn.Sequential(conv(in_c, n_feat, kernel_size, bias=bias), CAB(n_feat,kernel_size, reduction, bias=bias, act=act))

self.shallow_feat3 = nn.Sequential(conv(in_c, n_feat, kernel_size, bias=bias), CAB(n_feat,kernel_size, reduction, bias=bias, act=act))

# Cross Stage Feature Fusion (CSFF)

self.stage1_encoder = Encoder(n_feat, kernel_size, reduction, act, bias, scale_unetfeats, csff=False)

self.stage1_decoder = Decoder(n_feat, kernel_size, reduction, act, bias, scale_unetfeats)

self.stage2_encoder = Encoder(n_feat, kernel_size, reduction, act, bias, scale_unetfeats, csff=True)

self.stage2_decoder = Decoder(n_feat, kernel_size, reduction, act, bias, scale_unetfeats)

self.stage3_orsnet = ORSNet(n_feat, scale_orsnetfeats, kernel_size, reduction, act, bias, scale_unetfeats, num_cab)

self.sam12 = SAM(n_feat, kernel_size=1, bias=bias)

self.sam23 = SAM(n_feat, kernel_size=1, bias=bias)

self.concat12 = conv(n_feat*2, n_feat, kernel_size, bias=bias)

self.concat23 = conv(n_feat*2, n_feat+scale_orsnetfeats, kernel_size, bias=bias)

self.tail = conv(n_feat+scale_orsnetfeats, out_c, kernel_size, bias=bias)

def forward(self, x3_img):

# Original-resolution Image for Stage 3

H = x3_img.size(2)

W = x3_img.size(3)

# Multi-Patch Hierarchy: Split Image into four non-overlapping patches

# Two Patches for Stage 2

x2top_img = x3_img[:,:,0:int(H/2),:]

x2bot_img = x3_img[:,:,int(H/2):H,:]

# Four Patches for Stage 1

x1ltop_img = x2top_img[:,:,:,0:int(W/2)]

x1rtop_img = x2top_img[:,:,:,int(W/2):W]

x1lbot_img = x2bot_img[:,:,:,0:int(W/2)]

x1rbot_img = x2bot_img[:,:,:,int(W/2):W]

##-------------------------------------------

##-------------- Stage 1---------------------

##-------------------------------------------

## Compute Shallow Features

x1ltop = self.shallow_feat1(x1ltop_img)

x1rtop = self.shallow_feat1(x1rtop_img)

x1lbot = self.shallow_feat1(x1lbot_img)

x1rbot = self.shallow_feat1(x1rbot_img)

## Process features of all 4 patches with Encoder of Stage 1

feat1_ltop = self.stage1_encoder(x1ltop)

feat1_rtop = self.stage1_encoder(x1rtop)

feat1_lbot = self.stage1_encoder(x1lbot)

feat1_rbot = self.stage1_encoder(x1rbot)

## Concat deep features

feat1_top = [torch.cat((k,v), 3) for k,v in zip(feat1_ltop,feat1_rtop)]

feat1_bot = [torch.cat((k,v), 3) for k,v in zip(feat1_lbot,feat1_rbot)]

## Pass features through Decoder of Stage 1

res1_top = self.stage1_decoder(feat1_top)

res1_bot = self.stage1_decoder(feat1_bot)

## Apply Supervised Attention Module (SAM)

x2top_samfeats, stage1_img_top = self.sam12(res1_top[0], x2top_img)

x2bot_samfeats, stage1_img_bot = self.sam12(res1_bot[0], x2bot_img)

## Output image at Stage 1

stage1_img = torch.cat([stage1_img_top, stage1_img_bot],2)

##-------------------------------------------

##-------------- Stage 2---------------------

##-------------------------------------------

## Compute Shallow Features

x2top = self.shallow_feat2(x2top_img)

x2bot = self.shallow_feat2(x2bot_img)

## Concatenate SAM features of Stage 1 with shallow features of Stage 2

x2top_cat = self.concat12(torch.cat([x2top, x2top_samfeats], 1))

x2bot_cat = self.concat12(torch.cat([x2bot, x2bot_samfeats], 1))

## Process features of both patches with Encoder of Stage 2

feat2_top = self.stage2_encoder(x2top_cat, feat1_top, res1_top)

feat2_bot = self.stage2_encoder(x2bot_cat, feat1_bot, res1_bot)

## Concat deep features

feat2 = [torch.cat((k,v), 2) for k,v in zip(feat2_top,feat2_bot)]

## Pass features through Decoder of Stage 2

res2 = self.stage2_decoder(feat2)

## Apply SAM

x3_samfeats, stage2_img = self.sam23(res2[0], x3_img)

##-------------------------------------------

##-------------- Stage 3---------------------

##-------------------------------------------

## Compute Shallow Features

x3 = self.shallow_feat3(x3_img)

## Concatenate SAM features of Stage 2 with shallow features of Stage 3

x3_cat = self.concat23(torch.cat([x3, x3_samfeats], 1))

x3_cat = self.stage3_orsnet(x3_cat, feat2, res2)

stage3_img = self.tail(x3_cat)

return [stage3_img+x3_img, stage2_img, stage1_img]

实验与分析

数据集

使用PSNR和SSIM进行定量比较。