Hadoop (Part 5)

Contents

Starting the Whole Cluster

1. Starting the cluster

Hadoop Cluster Start/Stop Script

LZO Compression Configuration

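Starting the Whole Cluster

1. Starting the cluster

If this is the first time the cluster is started, the NameNode must be formatted first.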

[doudou@hadoop101 hadoop-3.1.3]$ bin/hdfs namenode -format

[doudou@hadoop101 hadoop-3.1.3]$ sbin/start-dfs.sh

[doudou@hadoop101 hadoop-3.1.3]$ xcall.sh jps

On hadoop102, start YARN:

[doudou@hadoop102 hadoop-3.1.3]$ sbin/start-yarn.sh

Hadoop Cluster Start/Stop Script

Write the script:

[doudou@hadoop101 bin]$ vim hdp.sh

#!/bin/bash

if [ $# -lt 1 ]

then

echo "No Args Input..."

exit 1

fi

case $1 in

"start")

echo " =================== Starting the Hadoop cluster ==================="

echo " --------------- Starting HDFS ---------------"

ssh hadoop102 "/opt/module/hadoop-3.1.3/sbin/start-dfs.sh"

echo " --------------- Starting YARN ---------------"

ssh hadoop103 "/opt/module/hadoop-3.1.3/sbin/start-yarn.sh"

echo " --------------- Starting historyserver ---------------"

ssh hadoop102 "/opt/module/hadoop-3.1.3/bin/mapred --daemon start historyserver"

;;

"stop")

echo " =================== Stopping the Hadoop cluster ==================="

echo " --------------- Stopping historyserver ---------------"

ssh hadoop102 "/opt/module/hadoop-3.1.3/bin/mapred --daemon stop historyserver"

echo " --------------- Stopping YARN ---------------"

ssh hadoop103 "/opt/module/hadoop-3.1.3/sbin/stop-yarn.sh"

echo " --------------- Stopping HDFS ---------------"

ssh hadoop102 "/opt/module/hadoop-3.1.3/sbin/stop-dfs.sh"

;;

*)

echo "Input Args Error..."

;;

esac
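Note that the script stops the services in the reverse order in which it starts them. The same logic can be sketched as two small functions, which makes that ordering explicit and allows a dry run. This is only an illustration: the names `hdp_plan`, `hdp_run`, `HDP_HOME` and the `DRY_RUN` switch are assumptions and are not part of the original hdp.sh.

```shell
#!/bin/bash
HDP_HOME=/opt/module/hadoop-3.1.3

# Print one "host command" pair per line, in execution order.
hdp_plan() {
    case "$1" in
        start)
            echo "hadoop102 $HDP_HOME/sbin/start-dfs.sh"
            echo "hadoop103 $HDP_HOME/sbin/start-yarn.sh"
            echo "hadoop102 $HDP_HOME/bin/mapred --daemon start historyserver"
            ;;
        stop)
            # Reverse of the start order.
            echo "hadoop102 $HDP_HOME/bin/mapred --daemon stop historyserver"
            echo "hadoop103 $HDP_HOME/sbin/stop-yarn.sh"
            echo "hadoop102 $HDP_HOME/sbin/stop-dfs.sh"
            ;;
        *)
            echo "Input Args Error..." >&2
            return 1
            ;;
    esac
}

# Run the plan over ssh, or only print it when DRY_RUN=1.
hdp_run() {
    plan=$(hdp_plan "$1") || return 1
    printf '%s\n' "$plan" | while read -r host cmd; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            printf '%s\n' "[dry-run] ssh $host \"$cmd\""
        else
            # -n keeps ssh from swallowing the remaining plan lines on stdin.
            ssh -n "$host" "$cmd"
        fi
    done
}
```

Calling `DRY_RUN=1 hdp_run stop` prints the three ssh commands without touching the cluster, which is a cheap way to check host names and paths before a real run.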

Check which daemons are running:

[doudou@hadoop101 bin]$ xcall.sh jps

---------- 192.168.149.129 ---------

2552 DataNode

2430 NameNode

2895 NodeManager

3183 Jps

---------- 192.168.149.132 ---------

2261 DataNode

3224 Jps

2617 NodeManager

2442 ResourceManager

---------- 192.168.149.133 ---------

2571 Jps

2254 DataNode

2350 SecondaryNameNode
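The xcall.sh helper itself is not shown in this post. A minimal sketch that would produce output in the format above, assuming passwordless ssh to every node; the function name `xcall`, the `XCALL_HOSTS` variable and its default host list (taken from the addresses in the listing) are assumptions:

```shell
#!/bin/bash
# Space-separated list of nodes; override it in the environment if needed.
XCALL_HOSTS=${XCALL_HOSTS:-"192.168.149.129 192.168.149.132 192.168.149.133"}

# Run the given command on every node, printing a header line per host.
xcall() {
    for xcall_host in $XCALL_HOSTS; do
        echo "---------- $xcall_host ---------"
        # -n detaches stdin so ssh cannot consume input meant for the caller.
        ssh -n "$xcall_host" "$@"
    done
}

# Usage sketch:
# xcall jps
```

For `xcall jps` to work, `jps` has to be on the PATH of non-interactive ssh sessions on each node.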

Stop the cluster:

[doudou@hadoop101 bin]$ hdp.sh stop

Check the processes after stopping:

[doudou@hadoop101 bin]$ xcall.sh jps

---------- 192.168.149.129 ---------

3825 Jps

---------- 192.168.149.132 ---------

2617 NodeManager

3674 Jps

---------- 192.168.149.133 ---------

2758 Jps
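After a clean stop, only Jps should remain on each node. A small sketch of a filter that lists anything else still running, assuming the `xcall.sh jps` output format shown above; the function name `leftover_daemons` is hypothetical:

```shell
#!/bin/bash
# Read "xcall.sh jps" style output on stdin and print "host daemon"
# for every process other than Jps itself.
leftover_daemons() {
    awk '
        $1 ~ /^-+$/            { host = $2; next }   # header: ---------- host ---------
        NF == 2 && $2 != "Jps" { print host, $2 }    # "pid name" lines
    '
}

# Usage sketch:
# xcall.sh jps | leftover_daemons
```

Applied to the listing above, it would report the NodeManager that is still running on 192.168.149.132.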

Start the cluster:

[doudou@hadoop101 bin]$ hdp.sh start

Check the processes after starting:

[doudou@hadoop101 bin]$ xcall.sh jps

---------- 192.168.149.129 ---------

4051 NameNode

4181 DataNode

4762 Jps

4523 NodeManager

4699 JobHistoryServer

---------- 192.168.149.132 ---------

3778 DataNode

3974 ResourceManager

2617 NodeManager

4621 Jps

---------- 192.168.149.133 ---------

3040 Jps

2861 DataNode

2974 SecondaryNameNode

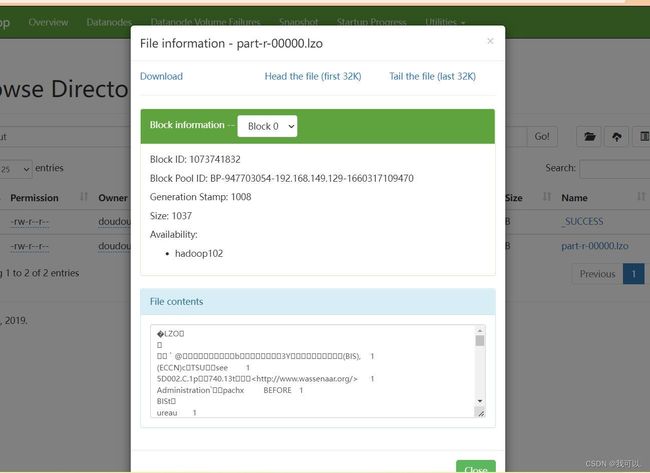

LZO Compression Configuration

Move the compiled jar into Hadoop's common directory:

[doudou@hadoop101 software]$ mv hadoop-lzo-0.4.20.jar /opt/module/hadoop-3.1.3/share/hadoop/common/

[doudou@hadoop101 software]$ cd /opt/module/hadoop-3.1.3/share/hadoop/common/

[doudou@hadoop101 common]$ ll

total 7348

-rw-r--r--. 1 doudou root 4096811 Sep 11 2019 hadoop-common-3.1.3.jar

-rw-r--r--. 1 doudou root 2878235 Sep 11 2019 hadoop-common-3.1.3-tests.jar

-rw-r--r--. 1 doudou root 129977 Sep 11 2019 hadoop-kms-3.1.3.jar

-rw-r--r--. 1 doudou root 193831 Aug 7 06:56 hadoop-lzo-0.4.20.jar

-rw-r--r--. 1 doudou root 201616 Sep 11 2019 hadoop-nfs-3.1.3.jar

drwxr-xr-x. 2 doudou root 4096 Sep 11 2019 jdiff

drwxr-xr-x. 2 doudou root 4096 Sep 11 2019 lib

drwxr-xr-x. 2 doudou root 89 Sep 11 2019 sources

drwxr-xr-x. 3 doudou root 20 Sep 11 2019 webapps

Distribute the jar to the other nodes:

[doudou@hadoop101 common]$ xsync hadoop-lzo-0.4.20.jar

Add the following configuration to core-site.xml to enable LZO compression:

[doudou@hadoop101 hadoop]$ vim core-site.xml

<property>
    <name>io.compression.codecs</name>
    <value>
        org.apache.hadoop.io.compress.GzipCodec,
        org.apache.hadoop.io.compress.DefaultCodec,
        org.apache.hadoop.io.compress.BZip2Codec,
        org.apache.hadoop.io.compress.SnappyCodec,
        com.hadoop.compression.lzo.LzoCodec,
        com.hadoop.compression.lzo.LzopCodec
    </value>
</property>

<property>
    <name>io.compression.codec.lzo.class</name>
    <value>com.hadoop.compression.lzo.LzoCodec</value>
</property>
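Before distributing the file, it can be worth checking that both LZO codec classes actually made it into the configuration. A minimal sketch using plain grep; the function name `has_lzo_codecs` and the path in the usage comment are assumptions:

```shell
#!/bin/bash
# Succeeds when the given file mentions both LZO codec classes.
has_lzo_codecs() {
    grep -q 'com\.hadoop\.compression\.lzo\.LzoCodec' "$1" &&
    grep -q 'com\.hadoop\.compression\.lzo\.LzopCodec' "$1"
}

# Usage sketch (path assumed from the install directory used in this post):
# has_lzo_codecs /opt/module/hadoop-3.1.3/etc/hadoop/core-site.xml && echo "LZO codecs configured"
```

On a running installation, `hdfs getconf -confKey io.compression.codecs` should print the value Hadoop actually resolves, which is a stricter check than a text search.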

Distribute the updated core-site.xml:

[doudou@hadoop101 hadoop]$ xsync core-site.xml

Restart the cluster:

[doudou@hadoop101 hadoop]$ hdp.sh start

Test

[doudou@hadoop101 hadoop-3.1.3]$ hadoop fs -mkdir /input

[doudou@hadoop101 hadoop-3.1.3]$ hadoop fs -put README.txt /input

2022-08-13 04:02:46,435 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

[doudou@hadoop101 hadoop-3.1.3]$ hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount -Dmapreduce.output.fileoutputformat.compress=true -Dmapreduce.output.fileoutputformat.compress.codec=com.hadoop.compression.lzo.LzopCodec /input /output

Upload bigtable.lzo:

[doudou@hadoop101 software]$ hadoop fs -put bigtable.lzo /input

Run the wordcount program:

[doudou@hadoop101 software]$ hadoop jar /opt/module/hadoop-3.1.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount -Dmapreduce.job.inputformat.class=com.hadoop.mapreduce.LzoTextInputFormat /input /output1

Build an index for the uploaded LZO file:

[doudou@hadoop101 software]$ hadoop jar /opt/module/hadoop-3.1.3/share/hadoop/common/hadoop-lzo-0.4.20.jar com.hadoop.compression.lzo.DistributedLzoIndexer /input/bigtable.lzo
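To my knowledge, the indexer writes the index as a sibling file with an `.index` suffix (for example `bigtable.lzo.index`). A small sketch that reads a list of paths and prints the `.lzo` files that have no such companion; the function name `unindexed_lzo` is hypothetical:

```shell
#!/bin/bash
# Read one path per line on stdin and print every *.lzo path
# for which no matching "<path>.index" was listed.
unindexed_lzo() {
    awk '
        { seen[$0] = 1 }
        END {
            for (p in seen) {
                idx = p ".index"
                if (p ~ /\.lzo$/ && !(idx in seen))
                    print p
            }
        }
    ' | sort
}

# Usage sketch (-C makes "hadoop fs -ls" print paths only):
# hadoop fs -ls -C /input | unindexed_lzo
```

An empty result would suggest that every LZO file under the directory has been indexed.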

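Run the wordcount program again. With the index in place, LzoTextInputFormat can split the file across several map tasks: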

[doudou@hadoop101 software]$ hadoop jar /opt/module/hadoop-3.1.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount -Dmapreduce.job.inputformat.class=com.hadoop.mapreduce.LzoTextInputFormat /input /output2