Day 6: Prometheus Components for Container Cloud Monitoring (1)

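Recap

* Configuration is the set of adjustable variables kept separate from the program; the same program behaves differently under different configurations

* Characteristics of cloud native programs (three ways to make a containerized program cloud native)

* * Pass the program's configuration into the container through environment variables

* * Apply the program's configuration through startup arguments

* * Manage the program's configuration centrally (CRUD) in a configuration center

* What a DevOps engineer should do

* * Containerize the company's in-house applications (repackage them with Docker)

* * Drive the move from containerized applications to cloud native applications (build once, run anywhere)

* * Use a container orchestration framework (Kubernetes) to orchestrate business containers in a sound, consistent, and professional way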

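Hands-on: a new generation container cloud monitoring system

Chapter 1: Overview of Prometheus, the new generation container cloud monitoring system

Chapter 2: Deploying the exporters required for container cloud monitoring

Chapter 3: Deploying Prometheus and its configuration in detail

Chapter 4: Deploying Grafana, the dashboard platform for container cloud monitoring

Chapter 5: Grafana plugins and dashboards needed to monitor the container cloud

Chapter 6: How microservice business containers are connected to the monitoring system

Chapter 7: Alerting with the Alertmanager component

Chapter 8: Summary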

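Prometheus is a monitoring system originally built at SoundCloud.

It has been a community open source project since 2012 and has a very active developer and user community. To emphasize that it is open source and independently maintained, Prometheus joined the CNCF in 2016 as the second hosted project after Kubernetes.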

https://Prometheus.io

https://github.com/prometheus

https://github.com/prometheus/prometheus

https://github.com/prometheus/prometheus/releases

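Features of Prometheus

** Multi-dimensional data model: time series identified by a metric name and key/value pairs

** Built-in time series database: TSDB

** PromQL: a flexible query language that uses the multi-dimensional data to express complex queries

** Collects time series data over HTTP with a pull model (exporters)

** Also supports collecting data through the Pushgateway component

** Discovers targets through service discovery or static configuration

** Multiple graphing modes and dashboard support

** Can be plugged into Grafana as a data source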

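Note: most queries against monitoring data are time-based, and this is one area where Prometheus does better than Zabbix. In Zabbix, an agent in active mode sends data to the server on its own, while an agent in passive mode waits for the server to poll it.

Prometheus can only collect time series data over HTTP or HTTPS. If a piece of software has no HTTP endpoint of its own, you have to install an exporter that exposes its metrics.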
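To make the exporter idea concrete, here is a minimal sketch of the text an exporter serves over HTTP for Prometheus to scrape. The function name `render_gauge` and the metric `demo_queue_length` are made up for illustration; a real exporter would serve this text on an endpoint such as `/metrics`.

```shell
# render_gauge NAME HELP VALUE
# Print a single gauge in the Prometheus text exposition format:
# a HELP comment, a TYPE comment, then the sample line itself.
render_gauge() {
  printf '# HELP %s %s\n' "$1" "$2"
  printf '# TYPE %s gauge\n' "$1"
  printf '%s %s\n' "$1" "$3"
}

# Example (hypothetical metric):
#   render_gauge demo_queue_length 'Jobs waiting in the queue.' 7
```

The same `# HELP` / `# TYPE` / sample layout is what the node-exporter output later in these notes looks like.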

Grafana is a tool dedicated to building polished graphical dashboards. This is a key point.

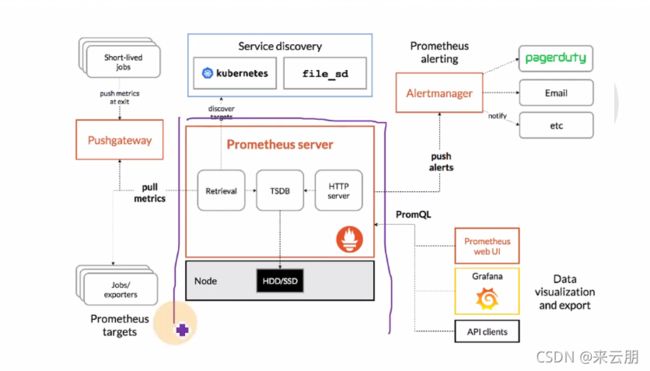

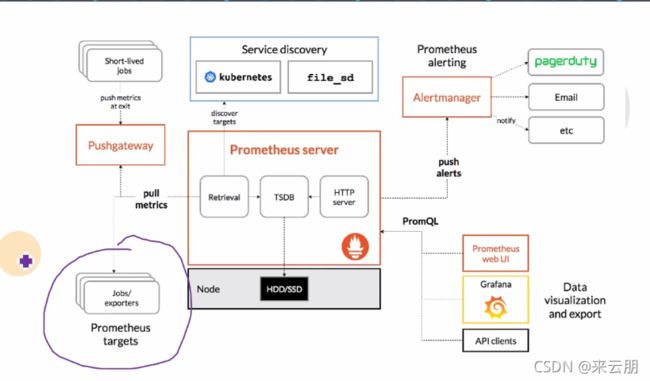

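Prometheus architecture

Architecture diagram

exporters: the monitoring targets

Prometheus pulls data from the exporters

The bottom right of the diagram is where Grafana displays the data; the two components above and below it are not used much

The most important part at the top is service discovery: automatic discovery based on metadata, or file-based service discovery (file sd). This is also a key point

Prometheus pulls from two places: service discovery and the exporters

Prometheus vs. Zabbix

Comparison of Prometheus, the new generation container cloud monitoring system, with Zabbix, the traditional monitoring system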

Delivering kube-state-metrics

On the ops host (hdss7-200)

Prepare the kube-state-metrics image

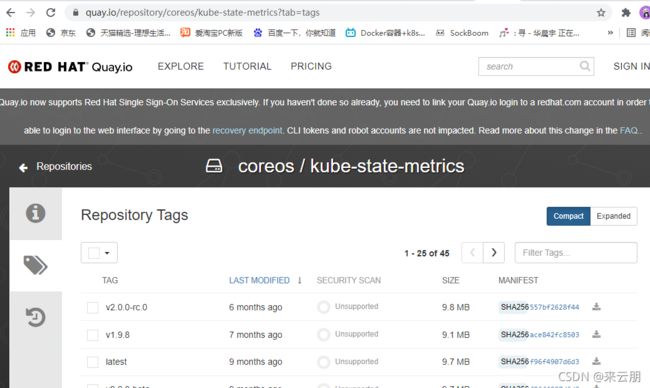

Official repository: https://quay.io/repository/coreos/kube-state-metrics?tab=tags

[root@hdss7-200 ~]# docker pull quay.io/coreos/kube-state-metrics:v1.5.0

v1.5.0: Pulling from coreos/kube-state-metrics

cd784148e348: Pull complete

f622528a393e: Pull complete

Digest: sha256:b7a3143bd1eb7130759c9259073b9f239d0eeda09f5210f1cd31f1a530599ea1

Status: Downloaded newer image for quay.io/coreos/kube-state-metrics:v1.5.0

quay.io/coreos/kube-state-metrics:v1.5.0

[root@hdss7-200 ~]# docker images |grep v1.5.0

quay.io/coreos/kube-state-metrics v1.5.0 91599517197a 2 years ago 31.8MB

[root@hdss7-200 ~]# docker tag 91599517197a harbor.od.com/public/kube-state-metrics:v1.5.0

[root@hdss7-200 ~]# docker push harbor.od.com/public/kube-state-metrics:v1.5.0

The push refers to repository [harbor.od.com/public/kube-state-metrics]

5b3c36501a0a: Pushed

7bff100f35cb: Pushed

v1.5.0: digest: sha256:16e9a1d63e80c19859fc1e2727ab7819f89aeae5f8ab5c3380860c2f88fe0a58 size: 739

[root@hdss7-200 ~]#
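The pull, tag, push sequence above is repeated for every image in these notes. As a rough sketch, it can be wrapped in two small helpers. The names `mirror_ref` and `mirror_image` are hypothetical, and the default destination `harbor.od.com/public` is simply the project used in the transcript; the sketch also tags by image name rather than by image ID.

```shell
# mirror_ref SOURCE_IMAGE [DEST_PREFIX]
# Print the name the image gets in the private registry:
# keep only "name:tag" from the source and prepend DEST_PREFIX.
mirror_ref() (
  src=$1
  dest=${2:-harbor.od.com/public}
  printf '%s/%s\n' "$dest" "${src##*/}"
)

# mirror_image SOURCE_IMAGE [DEST_PREFIX]
# Pull the image, retag it for the private registry, and push it.
mirror_image() (
  target=$(mirror_ref "$1" "$2") || exit 1
  docker pull "$1" && docker tag "$1" "$target" && docker push "$target"
)

# Example:
#   mirror_image quay.io/coreos/kube-state-metrics:v1.5.0
```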

Prepare the resource manifests

[root@hdss7-200 kube-state-metrics]# pwd

/data/k8s-yaml/kube-state-metrics

[root@hdss7-200 kube-state-metrics]# ll

total 8

-rw-r--r-- 1 root root 1243 Sep 9 00:17 dp.yaml

-rw-r--r-- 1 root root 1429 Sep 9 00:15 rbac.yaml

[root@hdss7-200 kube-state-metrics]# cat rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - secrets
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs:
  - list
  - watch
- apiGroups:
  - extensions
  resources:
  - daemonsets
  - deployments
  - replicasets
  verbs:
  - list
  - watch
- apiGroups:
  - apps
  resources:
  - statefulsets
  verbs:
  - list
  - watch
- apiGroups:
  - batch
  resources:
  - cronjobs
  - jobs
  verbs:
  - list
  - watch
- apiGroups:
  - autoscaling
  resources:
  - horizontalpodautoscalers
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system

[root@hdss7-200 kube-state-metrics]# cat dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "2"
  labels:
    grafanak8sapp: "true"
    app: kube-state-metrics
  name: kube-state-metrics
  namespace: kube-system
spec:
  selector:
    matchLabels:
      grafanak8sapp: "true"
      app: kube-state-metrics
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        grafanak8sapp: "true"
        app: kube-state-metrics
    spec:
      containers:
      - image: harbor.od.com/public/kube-state-metrics:v1.5.0
        name: kube-state-metrics
        ports:
        - containerPort: 8080
          name: http-metrics
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 5
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      serviceAccount: kube-state-metrics
      serviceAccountName: kube-state-metrics

[root@hdss7-200 kube-state-metrics]#

Apply the resource manifests and check that the pod started successfully

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/kube-state-metrics/rbac.yaml

serviceaccount/kube-state-metrics created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/kube-state-metrics/dp.yaml

deployment.extensions/kube-state-metrics created

[root@hdss7-21 ~]#

[root@hdss7-21 ~]# curl 172.7.21.7:8080/healthz

ok[root@hdss7-21 ~]#
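A single `curl` against `/healthz` can fail if the pod is still starting. The sketch below retries a command a fixed number of times; `retry` is a hypothetical helper, and the pod IP in the usage comment is the one from the transcript, which will differ in another cluster.

```shell
# retry TRIES DELAY_SECONDS COMMAND [ARGS...]
# Run COMMAND until it succeeds or TRIES attempts have been made.
# Exit status is 0 on success and 1 if every attempt failed.
retry() (
  tries=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$tries" ]; do
    "$@" && exit 0
    # Sleep only between attempts, not after the last one.
    [ "$i" -lt "$tries" ] && sleep "$delay"
    i=$((i + 1))
  done
  exit 1
)

# Example: poll the health endpoint up to 10 times, 3 seconds apart.
#   retry 10 3 curl -fsS 172.7.21.7:8080/healthz
```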

Deploying node-exporter

On the ops host (hdss7-200)

node-exporter official Docker Hub page

https://hub.docker.com/r/prom/node-exporter/tags

node-exporter official GitHub repository

Download

[root@hdss7-200 kube-state-metrics]# docker pull prom/node-exporter:v0.15.0

v0.15.0: Pulling from prom/node-exporter

Image docker.io/prom/node-exporter:v0.15.0 uses outdated schema1 manifest format. Please upgrade to a schema2 image for better future compatibility. More information at https://docs.docker.com/registry/spec/deprecated-schema-v1/

aa3e9481fcae: Pull complete

a3ed95caeb02: Pull complete

afc308b02dc6: Pull complete

4cafbffc9d4f: Pull complete

Digest: sha256:a59d1f22610da43490532d5398b3911c90bfa915951d3b3e5c12d3c0bf8771c3

Status: Downloaded newer image for prom/node-exporter:v0.15.0

docker.io/prom/node-exporter:v0.15.0

[root@hdss7-200 kube-state-metrics]# docker images |grep node-exporter

prom/node-exporter v0.15.0 12d51ffa2b22 3 years ago 22.8MB

[root@hdss7-200 kube-state-metrics]# docker tag 12d51ffa2b22 harbor.od.com/public/node-exporter:v0.15.0

[root@hdss7-200 kube-state-metrics]# docker push !$

docker push harbor.od.com/public/node-exporter:v0.15.0

The push refers to repository [harbor.od.com/public/node-exporter]

5f70bf18a086: Mounted from public/pause

1c7f6350717e: Pushed

a349adf62fe1: Pushed

c7300f623e77: Pushed

v0.15.0: digest: sha256:57d9b335b593e4d0da1477d7c5c05f23d9c3dc6023b3e733deb627076d4596ed size: 1979

[root@hdss7-200 kube-state-metrics]#

Prepare the resource manifest

[root@hdss7-200 kube-state-metrics]# cd ..

[root@hdss7-200 k8s-yaml]# mkdir node-exporter

[root@hdss7-200 k8s-yaml]# cd node-exporter/

[root@hdss7-200 node-exporter]# vi ds.yaml

[root@hdss7-200 node-exporter]# cat ds.yaml

kind: DaemonSet
apiVersion: extensions/v1beta1
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    daemon: "node-exporter"
    grafanak8sapp: "true"
spec:
  selector:
    matchLabels:
      daemon: "node-exporter"
      grafanak8sapp: "true"
  template:
    metadata:
      name: node-exporter
      labels:
        daemon: "node-exporter"
        grafanak8sapp: "true"
    spec:
      volumes:
      - name: proc
        hostPath:
          path: /proc
          type: ""
      - name: sys
        hostPath:
          path: /sys
          type: ""
      containers:
      - name: node-exporter
        image: harbor.od.com/public/node-exporter:v0.15.0
        args:
        - --path.procfs=/host_proc
        - --path.sysfs=/host_sys
        ports:
        - name: node-exporter
          hostPort: 9100
          containerPort: 9100
          protocol: TCP
        volumeMounts:
        - name: sys
          readOnly: true
          mountPath: /host_sys
        - name: proc
          readOnly: true
          mountPath: /host_proc
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      hostNetwork: true

[root@hdss7-200 node-exporter]#

Apply the resource manifest and verify the result

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/node-exporter/ds.yaml

daemonset.extensions/node-exporter created

[root@hdss7-21 ~]# netstat -lntup|grep 9100

tcp6 0 0 :::9100 :::* LISTEN 98829/node_exporter

[root@hdss7-21 ~]# kubectl get pods -n kube-system -l daemon="node-exporter" -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

node-exporter-s2hzm 1/1 Running 0 60s 10.4.7.21 hdss7-21.host.com <none> <none>

node-exporter-zr68n 1/1 Running 0 60s 10.4.7.22 hdss7-22.host.com <none> <none>

[root@hdss7-21 ~]# curl -s 10.4.7.21:9100/metrics |head ## inspect a sample of the exported metrics

# HELP go_gc_duration_seconds A summary of the GC invocation durations.

# TYPE go_gc_duration_seconds summary

go_gc_duration_seconds{quantile="0"} 0

go_gc_duration_seconds{quantile="0.25"} 0

go_gc_duration_seconds{quantile="0.5"} 0

go_gc_duration_seconds{quantile="0.75"} 0

go_gc_duration_seconds{quantile="1"} 0

go_gc_duration_seconds_sum 0

go_gc_duration_seconds_count 0

# HELP go_goroutines Number of goroutines that currently exist.

[root@hdss7-21 ~]#
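The output above is plain text, so a single value can be pulled out with standard tools. The helper below is only a sketch: `metric_value` is a hypothetical name, and it assumes the sample name (including any `{...}` labels) contains no spaces.

```shell
# metric_value NAME
# Read Prometheus text-format metrics on stdin and print the value of the
# first sample whose name (including labels) equals NAME.
# Returns non-zero if no such sample exists.
metric_value() {
  awk -v want="$1" '
    /^#/ { next }                              # skip HELP and TYPE comments
    $1 == want { print $2; found = 1; exit }   # first matching sample wins
    END { exit found ? 0 : 1 }
  '
}

# Example:
#   curl -s 10.4.7.21:9100/metrics | metric_value go_goroutines
```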

Deploying cAdvisor

On the ops host (hdss7-200)

Prepare the cAdvisor image

https://hub.docker.com/r/google/cadvisor/tags

[root@hdss7-200 node-exporter]# docker pull google/cadvisor:v0.28.3

v0.28.3: Pulling from google/cadvisor

ab7e51e37a18: Pull complete

a2dc2f1bce51: Pull complete

3b017de60d4f: Pull complete

Digest: sha256:9e347affc725efd3bfe95aa69362cf833aa810f84e6cb9eed1cb65c35216632a

Status: Downloaded newer image for google/cadvisor:v0.28.3

docker.io/google/cadvisor:v0.28.3

[root@hdss7-200 node-exporter]# docker images |grep cadvisor

google/cadvisor v0.28.3 75f88e3ec333 3 years ago 62.2MB

[root@hdss7-200 node-exporter]# docker tag 75f88e3ec333 harbor.od.com/public/cadvisor:v0.28.3

[root@hdss7-200 node-exporter]# docker push harbor.od.com/public/cadvisor:v0.28.3

The push refers to repository [harbor.od.com/public/cadvisor]

f60e27acaccf: Pushed

f04a25da66bf: Pushed

52a5560f4ca0: Pushed

v0.28.3: digest: sha256:34d9d683086d7f3b9bbdab0d1df4518b230448896fa823f7a6cf75f66d64ebe1 size: 951

[root@hdss7-200 node-exporter]#

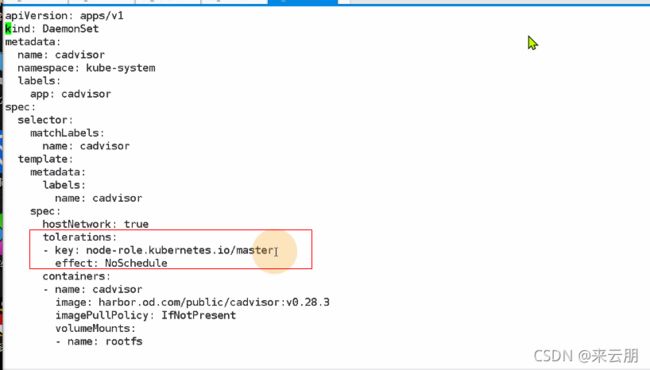

Resource manifest

[root@hdss7-200 node-exporter]# cd ..

[root@hdss7-200 k8s-yaml]# mkdir cadvisor

[root@hdss7-200 k8s-yaml]# cd cadvisor/

[root@hdss7-200 cadvisor]# vi ds.yaml

[root@hdss7-200 cadvisor]# cat ds.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: cadvisor
  namespace: kube-system
  labels:
    app: cadvisor
spec:
  selector:
    matchLabels:
      name: cadvisor
  template:
    metadata:
      labels:
        name: cadvisor
    spec:
      hostNetwork: true
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: cadvisor
        image: harbor.od.com/public/cadvisor:v0.28.3
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: rootfs
          mountPath: /rootfs
          readOnly: true
        - name: var-run
          mountPath: /var/run
        - name: sys
          mountPath: /sys
          readOnly: true
        - name: docker
          mountPath: /var/lib/docker
          readOnly: true
        ports:
        - name: http
          containerPort: 4194
          protocol: TCP
        readinessProbe:
          tcpSocket:
            port: 4194
          initialDelaySeconds: 5
          periodSeconds: 10
        args:
        - --housekeeping_interval=10s
        - --port=4194
      terminationGracePeriodSeconds: 30
      volumes:
      - name: rootfs
        hostPath:
          path: /
      - name: var-run
        hostPath:
          path: /var/run
      - name: sys
        hostPath:
          path: /sys
      - name: docker
        hostPath:
          path: /data/docker

[root@hdss7-200 cadvisor]#

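Setting a taint

Key points:

Three ways to manually influence Kubernetes scheduling

Taints and tolerations

Taint: a mark placed on a compute node

Toleration: whether a pod can tolerate that taint

nodeName: run the Pod on one specific node

nodeSelector: use a label selector to run the Pod on a class of nodes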

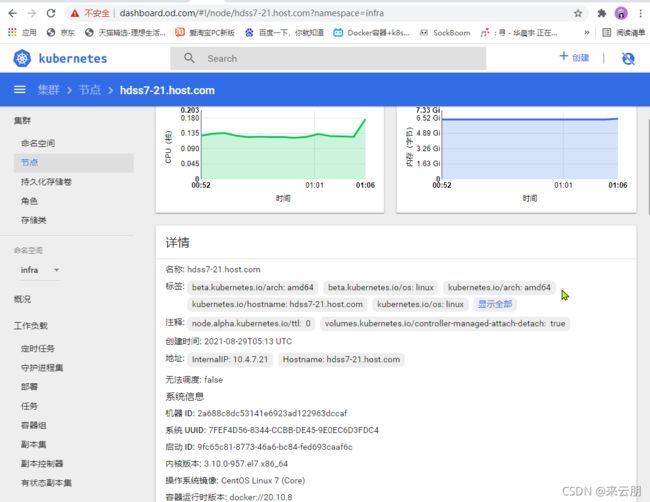

[root@hdss7-21 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

hdss7-21.host.com Ready master,node 10d v1.15.2

hdss7-22.host.com Ready master,node 10d v1.15.2

[root@hdss7-21 ~]# kubectl taint node hdss7-21.host.com node-role.kubernetes.io/master=master:NoSchedule

node/hdss7-21.host.com tainted
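The argument passed to `kubectl taint` above has the shape `key=value:effect`. As an illustrative sketch, the hypothetical helper `taint_spec` below builds that argument and rejects anything other than the three effects Kubernetes defines (`NoSchedule`, `PreferNoSchedule`, `NoExecute`).

```shell
# taint_spec KEY VALUE EFFECT
# Print a taint in the "key=value:effect" form that kubectl taint expects.
# Fails if EFFECT is not one of the three effects Kubernetes defines.
taint_spec() (
  key=$1
  value=$2
  effect=$3
  case $effect in
    NoSchedule|PreferNoSchedule|NoExecute) ;;
    *) echo "unknown taint effect: $effect" >&2; exit 1 ;;
  esac
  printf '%s=%s:%s\n' "$key" "$value" "$effect"
)

# Example:
#   kubectl taint node hdss7-21.host.com \
#     "$(taint_spec node-role.kubernetes.io/master master NoSchedule)"
```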

Create the cgroup symlink on the compute nodes

[root@hdss7-22 ~]# mount -o remount,rw /sys/fs/cgroup/

[root@hdss7-22 ~]# ln -s /sys/fs/cgroup/cpu,cpuacct /sys/fs/cgroup/cpuacct,cpu

[root@hdss7-22 ~]# ls -l /sys/fs/cgroup/

total 0

drwxr-xr-x 8 root root 0 Sep 4 11:38 blkio

lrwxrwxrwx 1 root root 11 Sep 4 11:38 cpu -> cpu,cpuacct

lrwxrwxrwx 1 root root 11 Sep 4 11:38 cpuacct -> cpu,cpuacct

lrwxrwxrwx 1 root root 26 Sep 9 00:59 cpuacct,cpu -> /sys/fs/cgroup/cpu,cpuacct

drwxr-xr-x 8 root root 0 Sep 4 11:38 cpu,cpuacct

drwxr-xr-x 5 root root 0 Sep 4 11:38 cpuset

drwxr-xr-x 8 root root 0 Sep 4 11:38 devices

drwxr-xr-x 5 root root 0 Sep 4 11:38 freezer

drwxr-xr-x 5 root root 0 Sep 4 11:38 hugetlb

drwxr-xr-x 8 root root 0 Sep 4 11:38 memory

lrwxrwxrwx 1 root root 16 Sep 4 11:38 net_cls -> net_cls,net_prio

drwxr-xr-x 5 root root 0 Sep 4 11:38 net_cls,net_prio

lrwxrwxrwx 1 root root 16 Sep 4 11:38 net_prio -> net_cls,net_prio

drwxr-xr-x 5 root root 0 Sep 4 11:38 perf_event

drwxr-xr-x 8 root root 0 Sep 4 11:38 pids

drwxr-xr-x 8 root root 0 Sep 4 11:38 systemd
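Running `ln -s` a second time fails because the link already exists. A small guard makes the step safe to repeat on each node. This is only a sketch, and `ensure_link` is a hypothetical helper name.

```shell
# ensure_link TARGET LINK
# Create the symlink LINK -> TARGET unless LINK is already a symlink.
ensure_link() {
  [ -L "$2" ] || ln -s "$1" "$2"
}

# Usage on a compute node (needs root and a writable /sys/fs/cgroup):
#   mount -o remount,rw /sys/fs/cgroup/
#   ensure_link /sys/fs/cgroup/cpu,cpuacct /sys/fs/cgroup/cpuacct,cpu
```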

Repeat the symlink step on hdss7-21, then apply the resource manifest

[root@hdss7-21 ~]# mount -o remount,rw /sys/fs/cgroup/

[root@hdss7-21 ~]# ln -s /sys/fs/cgroup/cpu,cpuacct /sys/fs/cgroup/cpuacct,cpu

[root@hdss7-21 ~]# ls -l /sys/fs/cgroup/

total 0

drwxr-xr-x 8 root root 0 Aug 29 13:43 blkio

lrwxrwxrwx 1 root root 11 Aug 29 13:43 cpu -> cpu,cpuacct

lrwxrwxrwx 1 root root 11 Aug 29 13:43 cpuacct -> cpu,cpuacct

lrwxrwxrwx 1 root root 26 Sep 9 01:00 cpuacct,cpu -> /sys/fs/cgroup/cpu,cpuacct

drwxr-xr-x 8 root root 0 Aug 29 13:43 cpu,cpuacct

drwxr-xr-x 5 root root 0 Aug 29 13:43 cpuset

drwxr-xr-x 8 root root 0 Aug 29 13:43 devices

drwxr-xr-x 5 root root 0 Aug 29 13:43 freezer

drwxr-xr-x 5 root root 0 Aug 29 13:43 hugetlb

drwxr-xr-x 8 root root 0 Aug 29 13:43 memory

lrwxrwxrwx 1 root root 16 Aug 29 13:43 net_cls -> net_cls,net_prio

drwxr-xr-x 5 root root 0 Aug 29 13:43 net_cls,net_prio

lrwxrwxrwx 1 root root 16 Aug 29 13:43 net_prio -> net_cls,net_prio

drwxr-xr-x 5 root root 0 Aug 29 13:43 perf_event

drwxr-xr-x 8 root root 0 Aug 29 13:43 pids

drwxr-xr-x 8 root root 0 Aug 29 13:43 systemd

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/cadvisor/ds.yaml

daemonset.apps/cadvisor created

[root@hdss7-21 ~]# kubectl get po -n kube-system -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

cadvisor-6r7gq 1/1 Running 0 2m13s 10.4.7.22 hdss7-22.host.com <none> <none>

Remove the taint

[root@hdss7-21 ~]# kubectl taint node hdss7-21.host.com node-role.kubernetes.io/master-

node/hdss7-21.host.com untainted

[root@hdss7-21 ~]#

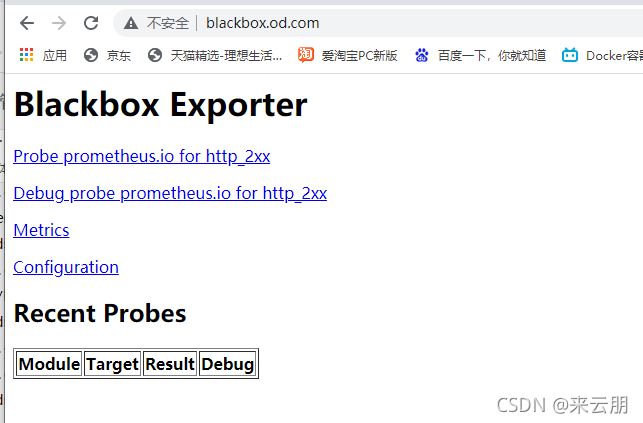

Delivering blackbox-exporter

Download

https://hub.docker.com/r/prom/blackbox-exporter

https://github.com/prometheus/blackbox_exporter

[root@hdss7-200 cadvisor]# docker pull prom/blackbox-exporter:v0.15.1

v0.15.1: Pulling from prom/blackbox-exporter

8e674ad76dce: Pull complete

e77d2419d1c2: Pull complete

969c24328c68: Pull complete

d9df4d63dd8a: Pull complete

Digest: sha256:0ccbb0bb08bbc00f1c765572545e9372a4e4e4dc9bafffb1a962024f61d6d996

Status: Downloaded newer image for prom/blackbox-exporter:v0.15.1

docker.io/prom/blackbox-exporter:v0.15.1

[root@hdss7-200 cadvisor]# docker images |grep blackbox

prom/blackbox-exporter v0.15.1 81b70b6158be 24 months ago 19.7MB

[root@hdss7-200 cadvisor]# docker tag 81b70b6158be harbor.od.com/public/blackbox-exporter:v0.15.1

[root@hdss7-200 cadvisor]# docker push harbor.od.com/public/blackbox-exporter:v0.15.1

The push refers to repository [harbor.od.com/public/blackbox-exporter]

2e93bab0c159: Pushed

4f2b5ab68d7f: Pushed

3163e6173fcc: Pushed

6194458b07fc: Pushed

v0.15.1: digest: sha256:f7c335cc7898c6023346a0d5fba8566aca4703b69d63be8dc5367476c77cf2c4 size: 1155

[root@hdss7-200 cadvisor]#

Prepare the resource manifests

[root@hdss7-200 cadvisor]# cd ..

[root@hdss7-200 k8s-yaml]# mkdir blackbox-exporter

[root@hdss7-200 k8s-yaml]# cd blackbox-exporter/

[root@hdss7-200 blackbox-exporter]# vi cm.yaml

[root@hdss7-200 blackbox-exporter]# mv cm.yaml dp.yaml

[root@hdss7-200 blackbox-exporter]# vi cm.yaml

[root@hdss7-200 blackbox-exporter]# vi dp.yaml

[root@hdss7-200 blackbox-exporter]# vi svc.yaml

[root@hdss7-200 blackbox-exporter]# vi ingress.yaml

[root@hdss7-200 blackbox-exporter]# cat cm.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: kube-system
data:
  blackbox.yml: |-
    modules:
      http_2xx:
        prober: http
        timeout: 2s
        http:
          valid_http_versions: ["HTTP/1.1", "HTTP/2"]
          valid_status_codes: [200,301,302]
          method: GET
          preferred_ip_protocol: "ip4"
      tcp_connect:
        prober: tcp
        timeout: 2s

[root@hdss7-200 blackbox-exporter]# cat dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: blackbox-exporter
  namespace: kube-system
  labels:
    app: blackbox-exporter
  annotations:
    deployment.kubernetes.io/revision: "1"
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blackbox-exporter
  template:
    metadata:
      labels:
        app: blackbox-exporter
    spec:
      volumes:
      - name: config
        configMap:
          name: blackbox-exporter
          defaultMode: 420
      containers:
      - name: blackbox-exporter
        image: harbor.od.com/public/blackbox-exporter:v0.15.1
        imagePullPolicy: IfNotPresent
        args:
        - --config.file=/etc/blackbox_exporter/blackbox.yml
        - --log.level=info
        - --web.listen-address=:9115
        ports:
        - name: blackbox-port
          containerPort: 9115
          protocol: TCP
        resources:
          limits:
            cpu: 200m
            memory: 256Mi
          requests:
            cpu: 100m
            memory: 50Mi
        volumeMounts:
        - name: config
          mountPath: /etc/blackbox_exporter
        readinessProbe:
          tcpSocket:
            port: 9115
          initialDelaySeconds: 5
          timeoutSeconds: 5
          periodSeconds: 10
          successThreshold: 1
          failureThreshold: 3

[root@hdss7-200 blackbox-exporter]# cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: blackbox-exporter
  namespace: kube-system
spec:
  selector:
    app: blackbox-exporter
  ports:
  - name: blackbox-port
    protocol: TCP
    port: 9115

[root@hdss7-200 blackbox-exporter]# cat ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: blackbox-exporter
  namespace: kube-system
spec:
  rules:
  - host: blackbox.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: blackbox-exporter
          servicePort: blackbox-port

[root@hdss7-200 blackbox-exporter]#

DNS record

[root@hdss7-11 ~]# vi /var/named/od.com.zone

[root@hdss7-11 ~]# cat /var/named/od.com.zone

$ORIGIN od.com.
$TTL 600    ; 10 minutes
@           IN SOA  dns.od.com. dnsadmin.od.com. (
                    2021052317 ; serial
                    10800      ; refresh (3 hours)
                    900        ; retry (15 minutes)
                    604800     ; expire (1 week)
                    86400      ; minimum (1 day)
                    )
            NS      dns.od.com.
$TTL 60     ; 1 minute
dns                A    10.4.7.11
harbor             A    10.4.7.200
k8s-yaml           A    10.4.7.200
traefik            A    10.4.7.10
dashboard          A    10.4.7.10
zk1                A    10.4.7.11
zk2                A    10.4.7.12
zk3                A    10.4.7.21
jenkins            A    10.4.7.10
dubbo-monitor      A    10.4.7.10
demo               A    10.4.7.10
config             A    10.4.7.10
mysql              A    10.4.7.11
portal             A    10.4.7.10
zk-test            A    10.4.7.11
zk-prod            A    10.4.7.12
config-test        A    10.4.7.10
config-prod        A    10.4.7.10
demo-test          A    10.4.7.10
demo-prod          A    10.4.7.10
blackbox           A    10.4.7.10

[root@hdss7-11 ~]# systemctl restart named

[root@hdss7-11 ~]#

[root@hdss7-11 ~]# dig -t A blackbox.od.com @10.4.7.11 +short

10.4.7.10
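Each edit to the zone file adds a record and, by convention, increments the SOA serial so that any secondary servers notice the change. The filter below is a rough sketch of that second step; `bump_serial` is a hypothetical name, and it assumes the serial sits on a line carrying a `; serial` comment, as in the file above.

```shell
# bump_serial
# Read a zone file on stdin and write it to stdout with the SOA serial
# (the first number on the line commented "; serial") increased by one.
# All other lines, and the indentation of the serial line, are kept as-is.
bump_serial() {
  awk '
    /; *serial/ && match($0, /[0-9]+/) {
      $0 = substr($0, 1, RSTART - 1) \
           (substr($0, RSTART, RLENGTH) + 1) \
           substr($0, RSTART + RLENGTH)
    }
    { print }
  '
}

# Example (writes to a new file instead of editing in place):
#   bump_serial < /var/named/od.com.zone > /tmp/od.com.zone.new
```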

Apply the resource manifests

[root@hdss7-21 ~]# kubectl taint node hdss7-21.host.com node-role.kubernetes.io/master-

node/hdss7-21.host.com untainted

[root@hdss7-21 ~]# ^C

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/blackbox-exporter/cm.yaml

configmap/blackbox-exporter created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/blackbox-exporter/dp.yaml

deployment.apps/blackbox-exporter created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/blackbox-exporter/svc.yaml

service/blackbox-exporter created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/blackbox-exporter/ingress.yaml

ingress.extensions/blackbox-exporter created

[root@hdss7-21 ~]#
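Once the ingress is in place, blackbox-exporter can be asked to probe a target through its `/probe` endpoint, which takes `module` and `target` query parameters. The sketch below only builds that URL; `probe_url` is a hypothetical helper, it does no URL encoding, and the targets in the examples are hosts from these notes chosen for illustration.

```shell
# probe_url BLACKBOX_HOST MODULE TARGET
# Print the blackbox-exporter probe URL for TARGET using MODULE
# (one of the modules defined in blackbox.yml, e.g. http_2xx or tcp_connect).
# TARGET is inserted as-is, so it must not contain characters such as & or #.
probe_url() {
  printf 'http://%s/probe?module=%s&target=%s\n' "$1" "$2" "$3"
}

# Examples:
#   curl -s "$(probe_url blackbox.od.com http_2xx harbor.od.com)" | grep '^probe_success'
#   curl -s "$(probe_url blackbox.od.com tcp_connect 10.4.7.11:53)" | grep '^probe_success'
```

A `probe_success` value of 1 means the probe passed and 0 means it failed.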