August 13 TensorFlow Study Notes: Convolutional Neural Networks, CIFAR100, ResNet

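Contents

- Preface

- I. Reducing Overfitting

  - 1. More data

  - 2. Constrain model complexity

  - 3. Regularization

  - 4. Momentum

  - 5. Learning rate decay

  - 6. Dropout

- II. Convolutional Neural Networks

  - 1. Pooling and Sampling

    - (1) Pooling

    - (2) Upsampling with UpSampling2D

- III. CIFAR100 in Practice

- IV. BatchNorm

- V. ResNet in Practice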

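Preface

These are my TensorFlow study notes for August 13, organized into five chapters:

- Reducing overfitting;

- Convolutional neural networks;

- CIFAR100 in practice;

- BatchNorm;

- ResNet in practice.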

I. Reducing Overfitting

1. More data

2. Constrain model complexity

3. Regularization

- L2 regularization:

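import tensorflow as tf
from tensorflow import keras

NUM_WORDS = 10000  # assumed input dimension; the original notes do not define it

l2_model = keras.models.Sequential([
    # kernel_regularizer adds 0.001 * sum(w ** 2) of this layer's weights to the loss
    keras.layers.Dense(16, kernel_regularizer=keras.regularizers.L2(0.001),
                       activation=tf.nn.relu, input_shape=(NUM_WORDS,)),
    keras.layers.Dense(16, kernel_regularizer=keras.regularizers.L2(0.001),
                       activation=tf.nn.relu),
    # pass the activation function itself, not the result of calling it
    keras.layers.Dense(1, activation=tf.nn.sigmoid)
])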
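To make the penalty term concrete, here is a minimal NumPy sketch of what an L2 kernel regularizer contributes to the loss: the coefficient times the sum of squared weights. The weight matrix and the unregularized loss value are made-up numbers for illustration, and `l2_penalty` is a hypothetical helper, not a Keras function.

```python
import numpy as np


def l2_penalty(weights, l2=0.001):
    """Return l2 * sum(w ** 2), the term an L2 kernel regularizer adds to the loss."""
    return l2 * float(np.sum(np.square(weights)))


# Hypothetical weight matrix and unregularized loss, for illustration only.
w = np.array([[1.0, -2.0], [3.0, 0.5]])
data_loss = 0.25

# The optimizer minimizes the sum, so large weights are discouraged.
total_loss = data_loss + l2_penalty(w)
print(total_loss)
```

Because the penalty grows with the square of each weight, the optimizer is pushed towards smaller weights, which is what constrains the model.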

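4. Momentum

from tensorflow.keras.optimizers import SGD, RMSprop, Adam

# SGD and RMSprop take an explicit momentum coefficient
optimizer = SGD(learning_rate=0.02, momentum=0.9)
optimizer = RMSprop(learning_rate=0.02, momentum=0.9)

# beta_1 and beta_2 are Adam parameters; SGD does not accept them
optimizer = Adam(learning_rate=0.02,
                 beta_1=0.9,
                 beta_2=0.999)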
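The effect of the `momentum` argument can be sketched in plain Python. The update rule below (velocity = momentum * velocity - learning_rate * gradient, then w = w + velocity) is the one documented for Keras SGD with momentum; the quadratic objective f(w) = w² and the helper name `sgd_momentum` are assumptions chosen only for illustration.

```python
def sgd_momentum(grad_fn, w, learning_rate=0.02, momentum=0.9, steps=300):
    """Minimize a 1-D function with SGD plus momentum, given its gradient."""
    velocity = 0.0
    for _ in range(steps):
        g = grad_fn(w)
        # Accumulate an exponentially decaying sum of past gradients.
        velocity = momentum * velocity - learning_rate * g
        w = w + velocity
    return w


# Toy objective f(w) = w ** 2 with gradient 2 * w, starting from w = 5.
w_final = sgd_momentum(lambda w: 2.0 * w, w=5.0)
print(w_final)
```

With `momentum=0.0` the loop reduces to plain gradient descent, which is a convenient way to compare the two.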

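5. Learning rate decay

optimizer = SGD(learning_rate=0.02)

for epoch in range(100):
    # linearly decay the learning rate from its initial value 0.02 towards 0
    optimizer.learning_rate = 0.02 * (100 - epoch) / 100
    # ... run one epoch of training with this optimizer ...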
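The linear schedule used in the loop can be written as a small standalone function, which makes it easy to inspect the values before training. This is a sketch; `linear_decay` is a hypothetical helper name, and the initial rate 0.02 and the 100 epochs are taken from the optimizer line and the loop above.

```python
def linear_decay(initial_lr, epoch, total_epochs):
    """Learning rate that falls linearly from initial_lr towards 0."""
    return initial_lr * (total_epochs - epoch) / total_epochs


# Learning rate at the first, middle and last epoch of a 100-epoch run.
schedule = [linear_decay(0.02, epoch, 100) for epoch in (0, 50, 99)]
print(schedule)
```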

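6. Dropout

- tf.nn.dropout(x, rate): in TensorFlow 2 the argument is the drop probability, rate = 1 - keep_prob.

At test time nothing is dropped (keep_prob = 1, i.e. rate = 0). A layers.Dropout layer does this automatically when it is called with training=False.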

network = Sequential([
    layers.Dense(256, activation='relu'),
    layers.Dropout(0.5),  # drop 50% of the units during training
    layers.Dense(128, activation='relu'),
    layers.Dropout(0.5),
    layers.Dense(64, activation='relu'),
    layers.Dense(32, activation='relu'),
    layers.Dense(10),
])
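The difference between training and test behaviour can be illustrated with a minimal NumPy sketch of inverted dropout, the scheme in which kept activations are scaled by 1 / keep_prob so that their expected value is unchanged. The function name `dropout` and the input of ones are assumptions for illustration; this is not the Keras implementation.

```python
import numpy as np


def dropout(x, rate, training, rng):
    """Inverted dropout: zero units with probability `rate`, rescale the rest."""
    if not training or rate == 0.0:
        return x  # test time: keep_prob = 1, the input passes through unchanged
    keep_prob = 1.0 - rate
    mask = rng.random(x.shape) < keep_prob
    return np.where(mask, x / keep_prob, 0.0)


rng = np.random.default_rng(0)
x = np.ones((4, 1000))
train_out = dropout(x, rate=0.5, training=True, rng=rng)
test_out = dropout(x, rate=0.5, training=False, rng=rng)

# During training roughly half the entries are 0 and the rest are 2,
# so the mean stays close to 1; at test time the output equals the input.
print(train_out.mean(), test_out.mean())
```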

II. Convolutional Neural Networks

- Image input: $[b, h, w, c]$.

- One kernel: $[c, 3, 3]$.

- Multiple kernels: $[N, c, 3, 3]$.

- Output: $[b, h_{out}, w_{out}, N]$.

layers.Conv2D:

x = tf.random.normal([1, 28, 28, 4])

layer = layers.Conv2D(4, kernel_size=5, strides=1, padding='valid')

out = layer(x)

Inspect the weight and bias:

layer.kernel

layer.bias

tf.nn.conv2d:

x = tf.random.normal([1, 32, 32, 3])

w = tf.random.normal([5, 5, 3, 4])

b = tf.zeros([4])

print('w_shape', w.shape)

out = tf.nn.conv2d(x, w, strides=1, padding='VALID')

print('out_shape', out.shape)

out = out + b

print('out+b_shape', out.shape)

out = tf.nn.conv2d(x, w, strides=2, padding='VALID')

print('out_02_shape', out.shape)
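The output shapes printed by the convolution calls above follow from the usual size formulas: with 'VALID' padding the output length is floor((n - k) / s) + 1, and with 'SAME' padding it is ceil(n / s). The helper below is a hypothetical sketch of that arithmetic, not a TensorFlow function.

```python
import math


def conv_output_size(n, kernel_size, stride, padding):
    """Spatial output length of a convolution or pooling window along one axis."""
    if padding == 'VALID':
        return (n - kernel_size) // stride + 1
    if padding == 'SAME':
        return math.ceil(n / stride)
    raise ValueError("padding must be 'VALID' or 'SAME'")


# 32x32 input, 5x5 kernel: stride 1 and stride 2 with VALID padding.
print(conv_output_size(32, 5, 1, 'VALID'))
print(conv_output_size(32, 5, 2, 'VALID'))
# 28x28 input, 5x5 kernel, stride 1, as in the layers.Conv2D example.
print(conv_output_size(28, 5, 1, 'VALID'))
```

The number of output channels is not part of this calculation; it is simply the number of kernels.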

1. Pooling and Sampling

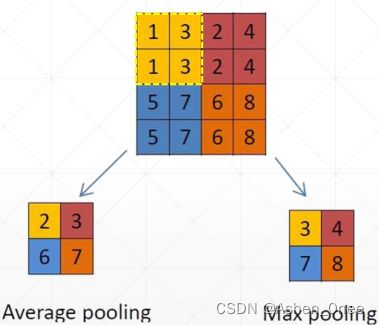

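(1) Pooling

# input shape inferred from the printed output below: a 14x14 feature map with 4 channels
x = tf.random.normal([1, 14, 14, 4])

pool = layers.MaxPool2D(2, strides=2)
out = pool(x)
print(out.shape)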

>>> (1, 7, 7, 4)

out = tf.nn.max_pool2d(x, 2, strides=2, padding='VALID')

print(out.shape)

>>> (1, 7, 7, 4)
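What a 2x2 max pooling with stride 2 computes can be sketched in NumPy by splitting the height and width axes into non-overlapping 2x2 windows and taking the maximum of each. `max_pool_2x2` is a hypothetical helper that assumes the [b, h, w, c] layout used in these notes.

```python
import numpy as np


def max_pool_2x2(x):
    """2x2 max pooling with stride 2 and VALID padding on a [b, h, w, c] array."""
    b, h, w, c = x.shape
    # Drop a trailing odd row or column, as VALID padding does.
    x = x[:, :h // 2 * 2, :w // 2 * 2, :]
    # Split h into (h // 2, 2) and w into (w // 2, 2), then reduce each window.
    windows = x.reshape(b, h // 2, 2, w // 2, 2, c)
    return windows.max(axis=(2, 4))


x = np.arange(16, dtype=np.float32).reshape(1, 4, 4, 1)
out = max_pool_2x2(x)
print(out[0, :, :, 0])
# [[ 5.  7.]
#  [13. 15.]]
```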

(2) Upsampling with UpSampling2D

x = tf.random.normal([1, 7, 7, 4])

layer = layers.UpSampling2D(size=3)

out=layer(x)

print(out.shape)

>>> (1, 21, 21, 4)

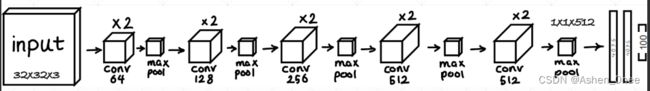
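With its default nearest-neighbour interpolation, UpSampling2D simply repeats every pixel `size` times along the height and width. A minimal NumPy sketch of that behaviour, with `upsample_nearest` as a hypothetical helper name:

```python
import numpy as np


def upsample_nearest(x, size):
    """Nearest-neighbour upsampling of a [b, h, w, c] array by an integer factor."""
    x = np.repeat(x, size, axis=1)     # repeat rows
    return np.repeat(x, size, axis=2)  # repeat columns


x = np.array([[1.0, 2.0], [3.0, 4.0]]).reshape(1, 2, 2, 1)
out = upsample_nearest(x, size=2)
print(out[0, :, :, 0])
# [[1. 1. 2. 2.]
#  [1. 1. 2. 2.]
#  [3. 3. 4. 4.]
#  [3. 3. 4. 4.]]
```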

III. CIFAR100 in Practice

import tensorflow as tf
from tensorflow.keras import layers, optimizers, datasets, Sequential
import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
tf.random.set_seed(2345)

# Layer list for the 13-layer network: the 10 conv layers here plus 3 dense layers in main()
con_layers = [  # 5 units of conv + max pooling
    # unit 1
    layers.Conv2D(64, kernel_size=[3, 3], padding='same', activation=tf.nn.relu),
    layers.Conv2D(64, kernel_size=[3, 3], padding='same', activation=tf.nn.relu),
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),

    # unit 2
    layers.Conv2D(128, kernel_size=[3, 3], padding='same', activation=tf.nn.relu),
    layers.Conv2D(128, kernel_size=[3, 3], padding='same', activation=tf.nn.relu),
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),

    # unit 3
    layers.Conv2D(256, kernel_size=[3, 3], padding='same', activation=tf.nn.relu),
    layers.Conv2D(256, kernel_size=[3, 3], padding='same', activation=tf.nn.relu),
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),

    # unit 4
    layers.Conv2D(512, kernel_size=[3, 3], padding='same', activation=tf.nn.relu),
    layers.Conv2D(512, kernel_size=[3, 3], padding='same', activation=tf.nn.relu),
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),

    # unit 5
    layers.Conv2D(512, kernel_size=[3, 3], padding='same', activation=tf.nn.relu),
    layers.Conv2D(512, kernel_size=[3, 3], padding='same', activation=tf.nn.relu),
    layers.MaxPool2D(pool_size=[2, 2], strides=2, padding='same'),
]

def preprocess(x, y):
    # scale pixels to [0, 1]
    x = tf.cast(x, dtype=tf.float32) / 255.
    y = tf.cast(y, dtype=tf.int32)
    return x, y


# Load the data
(x, y), (x_test, y_test) = datasets.cifar100.load_data()
# labels come as [n, 1]; squeeze them to [n]
y = tf.squeeze(y, axis=1)
y_test = tf.squeeze(y_test, axis=1)
print(x.shape, y.shape, x_test.shape, y_test.shape)

train_db = tf.data.Dataset.from_tensor_slices((x, y))
train_db = train_db.shuffle(1000).map(preprocess).batch(128)

test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test))
test_db = test_db.map(preprocess).batch(64)

sample = next(iter(train_db))
print('sample: ', sample[0].shape, sample[1].shape,
      tf.reduce_min(sample[0]), tf.reduce_max(sample[0]))

def main():
    # [b, 32, 32, 3] ==> [b, 1, 1, 512]
    conv_net = Sequential(con_layers)

    # x = tf.random.normal([4, 32, 32, 3])
    # out = conv_net(x)
    # print(out.shape)

    fc_net = Sequential([
        layers.Dense(256, activation=tf.nn.relu),
        layers.Dense(128, activation=tf.nn.relu),
        layers.Dense(100, activation=None),
    ])

    conv_net.build(input_shape=[None, 32, 32, 3])
    fc_net.build(input_shape=[None, 512])
    optimizer = optimizers.Adam(learning_rate=1e-4)

    # the two networks are trained jointly, so collect the variables of both
    variables = conv_net.trainable_variables + fc_net.trainable_variables

    for epoch in range(50):
        for step, (x, y) in enumerate(train_db):
            with tf.GradientTape() as tape:
                # [b, 32, 32, 3] ==> [b, 1, 1, 512]
                out = conv_net(x)
                # flatten to [b, 512]
                out = tf.reshape(out, [-1, 512])
                # fc_net: [b, 512] ==> [b, 100]
                logits = fc_net(out)
                # [b] ==> [b, 100]
                y_onehot = tf.one_hot(y, depth=100)
                loss = tf.losses.categorical_crossentropy(y_onehot, logits, from_logits=True)
                loss = tf.reduce_mean(loss)

            grads = tape.gradient(loss, variables)
            optimizer.apply_gradients(zip(grads, variables))

            if step % 100 == 0:
                print(epoch, step, 'loss: ', float(loss))

        # evaluate on the test set after every epoch
        total_num = 0
        total_correct = 0
        for x, y in test_db:
            out = conv_net(x)
            out = tf.reshape(out, [-1, 512])
            logits = fc_net(out)
            prob = tf.nn.softmax(logits, axis=1)
            pred = tf.argmax(prob, axis=1)
            pred = tf.cast(pred, dtype=tf.int32)

            correct = tf.cast(tf.equal(pred, y), dtype=tf.int32)
            correct = tf.reduce_sum(correct)

            total_num += x.shape[0]
            total_correct += int(correct)

        acc = total_correct / total_num
        print(epoch, 'acc: ', acc)


if __name__ == '__main__':
    main()

>>> (50000, 32, 32, 3) (50000,) (10000, 32, 32, 3) (10000,)

sample: (128, 32, 32, 3) (128,) tf.Tensor(0.0, shape=(), dtype=float32) tf.Tensor(1.0, shape=(), dtype=float32)

……

9 acc: 0.3281

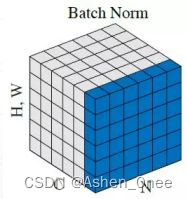
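The comment `[b, 32, 32, 3] ==> [b, 1, 1, 512]` in the code can be checked by hand: the 'same' convolutions keep the spatial size, and each of the five 'same' max-pooling layers with stride 2 maps a length n to ceil(n / 2). The short sketch below traces that arithmetic; `same_pool_output` is a hypothetical helper name.

```python
import math


def same_pool_output(n, stride=2):
    """Output length of a pooling layer with padding='same'."""
    return math.ceil(n / stride)


size = 32
sizes = [size]
for _ in range(5):  # five conv + max-pooling units
    size = same_pool_output(size)
    sizes.append(size)

print(sizes)  # [32, 16, 8, 4, 2, 1]
```

With 512 channels in the last unit, the final feature map holds 1 * 1 * 512 values per image, which is why the code reshapes to `[-1, 512]` before the dense layers.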

IV. BatchNorm

Normalization is done along the channel dimension: each channel gets its own mean and variance, computed over the batch and spatial dimensions.

- Forward update:

# 3 channels (last axis)
x = tf.random.normal([2, 4, 4, 3], mean=1., stddev=0.5)
net = layers.BatchNormalization(axis=3)
# called without training=True, so moving_mean and moving_variance keep their initial values
out = net(x)
print(net.variables)

>>> [<tf.Variable 'batch_normalization/gamma:0' shape=(3,) dtype=float32, numpy=array([1., 1., 1.], dtype=float32)>, <tf.Variable 'batch_normalization/beta:0' shape=(3,) dtype=float32, numpy=array([0., 0., 0.], dtype=float32)>, <tf.Variable 'batch_normalization/moving_mean:0' shape=(3,) dtype=float32, numpy=array([0., 0., 0.], dtype=float32)>, <tf.Variable 'batch_normalization/moving_variance:0' shape=(3,) dtype=float32, numpy=array([1., 1., 1.], dtype=float32)>]

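- Backward update:

# the original notes do not define the optimizer; plain SGD is assumed here
optimizer = optimizers.SGD(learning_rate=1e-2)

for i in range(10):
    with tf.GradientTape() as tape:
        out = net(x, training=True)
        loss = tf.reduce_mean(tf.pow(out, 2)) - 1

    # gradients flow to gamma and beta only; the moving statistics are not trainable
    grads = tape.gradient(loss, net.trainable_variables)
    optimizer.apply_gradients(zip(grads, net.trainable_variables))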

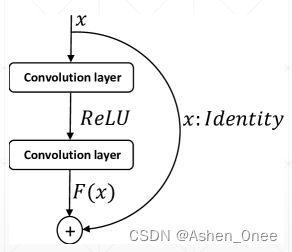
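The training-mode forward pass can be sketched in NumPy to show what is normalized and how the moving statistics are updated. The defaults `momentum=0.99` and `eps=1e-3` mirror the Keras BatchNormalization defaults; the function name `batch_norm_train` and the random input are assumptions for illustration, and the sketch is not the Keras implementation.

```python
import numpy as np


def batch_norm_train(x, gamma, beta, moving_mean, moving_var, momentum=0.99, eps=1e-3):
    """Training-mode batch norm on a [b, h, w, c] array, normalizing per channel."""
    # One mean and variance per channel, computed over batch, height and width.
    mean = x.mean(axis=(0, 1, 2))
    var = x.var(axis=(0, 1, 2))
    x_hat = (x - mean) / np.sqrt(var + eps)
    out = gamma * x_hat + beta
    # Exponential moving averages, used instead of batch statistics at test time.
    moving_mean = momentum * moving_mean + (1.0 - momentum) * mean
    moving_var = momentum * moving_var + (1.0 - momentum) * var
    return out, moving_mean, moving_var


rng = np.random.default_rng(0)
x = rng.normal(loc=1.0, scale=0.5, size=(2, 4, 4, 3))
out, moving_mean, moving_var = batch_norm_train(
    x, gamma=np.ones(3), beta=np.zeros(3),
    moving_mean=np.zeros(3), moving_var=np.ones(3))

print(out.mean(axis=(0, 1, 2)))  # close to 0 for every channel
print(moving_mean)               # moved 1% of the way towards the batch mean
```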

V. ResNet in Practice

import tensorflow as tf
from tensorflow.keras import layers, optimizers, datasets, Sequential
import os
from shizhan_ResNet import resnet18

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
tf.random.set_seed(2345)


def preprocess(x, y):
    # scale pixels to [-1, 1]
    x = 2 * tf.cast(x, dtype=tf.float32) / 255. - 1
    y = tf.cast(y, dtype=tf.int32)
    return x, y


# Load the data
(x, y), (x_test, y_test) = datasets.cifar100.load_data()
# labels come as [n, 1]; squeeze them to [n]
y = tf.squeeze(y, axis=1)
y_test = tf.squeeze(y_test, axis=1)
print(x.shape, y.shape, x_test.shape, y_test.shape)

train_db = tf.data.Dataset.from_tensor_slices((x, y))
train_db = train_db.shuffle(1000).map(preprocess).batch(256)

test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test))
test_db = test_db.map(preprocess).batch(256)

sample = next(iter(train_db))
print('sample: ', sample[0].shape, sample[1].shape,
      tf.reduce_min(sample[0]), tf.reduce_max(sample[0]))

def main():
    # [b, 32, 32, 3] ==> [b, 100]
    model = resnet18()
    model.build(input_shape=[None, 32, 32, 3])
    model.summary()
    optimizer = optimizers.Adam(learning_rate=1e-4)

    for epoch in range(50):
        for step, (x, y) in enumerate(train_db):
            with tf.GradientTape() as tape:
                # [b, 32, 32, 3] ==> [b, 100]; the model already ends in global average
                # pooling and a Dense(100) layer, so no reshape or second call is needed.
                # training=True lets BatchNorm use batch statistics (this assumes that
                # resnet18's call() forwards the `training` argument)
                logits = model(x, training=True)
                # [b] ==> [b, 100]
                y_onehot = tf.one_hot(y, depth=100)
                loss = tf.losses.categorical_crossentropy(y_onehot, logits, from_logits=True)
                loss = tf.reduce_mean(loss)

            grads = tape.gradient(loss, model.trainable_variables)
            optimizer.apply_gradients(zip(grads, model.trainable_variables))

            if step % 100 == 0:
                print(epoch, step, 'loss: ', float(loss))

        # evaluate on the test set after every epoch
        total_num = 0
        total_correct = 0
        for x, y in test_db:
            # feed the test images, not a leftover tensor from the training loop
            logits = model(x, training=False)
            prob = tf.nn.softmax(logits, axis=1)
            pred = tf.argmax(prob, axis=1)
            pred = tf.cast(pred, dtype=tf.int32)

            correct = tf.cast(tf.equal(pred, y), dtype=tf.int32)
            correct = tf.reduce_sum(correct)

            total_num += x.shape[0]
            total_correct += int(correct)

        acc = total_correct / total_num
        print(epoch, 'acc: ', acc)


if __name__ == '__main__':
    main()

Note on the recorded output: the log reports a batch of 512 samples, inputs in [-0.5, 0.5] and a loss line every 50 steps, so it appears to come from a run with settings that differ from the code listed here (batch size 256, inputs in [-1, 1], a loss line every 100 steps).

>>> (50000, 32, 32, 3) (50000,) (10000, 32, 32, 3) (10000,)

sample: (512, 32, 32, 3) (512,) tf.Tensor(-0.5, shape=(), dtype=float32) tf.Tensor(0.5, shape=(), dtype=float32)

Model: "res_net"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 sequential (Sequential)     (None, 30, 30, 64)        2048
 sequential_1 (Sequential)   (None, 30, 30, 64)        148736
 sequential_2 (Sequential)   (None, 15, 15, 128)       526976
 sequential_4 (Sequential)   (None, 8, 8, 256)         2102528
 sequential_6 (Sequential)   (None, 4, 4, 512)         8399360
 global_average_pooling2d (G  multiple                 0
 lobalAveragePooling2D)
 dense (Dense)               multiple                  51300
=================================================================
Total params: 11,230,948
Trainable params: 11,223,140
Non-trainable params: 7,808
_________________________________________________________________

0 0 loss: 4.605584144592285

0 50 loss: 4.490157127380371

0 acc: 0.054

……

9 0 loss: 1.8639005422592163

9 50 loss: 1.5815012454986572

9 acc: 0.3417
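The `resnet18` function is imported from `shizhan_ResNet`, which is not shown in these notes. As a reminder of the idea behind it, here is a minimal NumPy sketch of a residual connection, y = relu(F(x) + x). It uses two small dense matrices for the residual branch F instead of the convolutions and BatchNorm layers of a real ResNet block, and every name in it is hypothetical.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2):
    """y = relu(F(x) + x), where F is two linear maps with a ReLU in between."""
    fx = relu(x @ w1) @ w2   # residual branch F(x)
    return relu(fx + x)      # identity shortcut, added before the final ReLU


rng = np.random.default_rng(0)
x = relu(rng.normal(size=(4, 8)))  # non-negative features, as after an earlier ReLU

# With all-zero weights F(x) = 0, so the block passes its input through unchanged.
zeros = np.zeros((8, 8))
y_identity = residual_block(x, zeros, zeros)

# With small random weights the block learns a correction on top of the identity.
y_random = residual_block(x, 0.1 * rng.normal(size=(8, 8)), 0.1 * rng.normal(size=(8, 8)))
print(np.allclose(y_identity, x), y_random.shape)
```

The identity case is what makes deep residual networks easier to optimize: a block that has nothing useful to add can fall back to passing its input along.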