1D DenseNet Reproduction in PyTorch

Reproducing DenseNet

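DenseNet, proposed in 2017, is a densely connected network that draws on both the Inception structure of GoogLeNet and the residual structure of ResNet. It uses residual-style shortcut connections to avoid network degradation and, like Inception, combines the output features of multiple layers. It delivers excellent accuracy at the cost of a frightening memory footprint, but for tasks where accuracy is the priority it is well worth trying.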

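LeNet-AlexNet-ZFNet: 1D reproduction of LeNet-AlexNet-ZFNet in PyTorch

VGG: 1D reproduction of VGG in PyTorch

GoogLeNet: 1D reproduction of GoogLeNet in PyTorch

ResNet: 1D reproduction of the ResNet residual network in PyTorch, with an analysis of how to reproduce the residual block

DenseNet: 1D reproduction of DenseNet in PyTorch

Original DenseNet paper: Densely Connected Convolutional Networks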

Dense Block

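If you are reproducing the network yourself, this is usually the part that is a bit harder to understand.

For a conventional network with input $x$, the output after one layer (or one group of layers) is given by the expression below. In other words, the input $x$ is passed through a function $H$:

$$x_l = H_l(x_{l-1})$$

For ResNet's residual structure, the output equals the output of a group of convolutional layers plus the input, added element-wise:

$$x_l = H_l(x_{l-1}) + x_{l-1}$$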

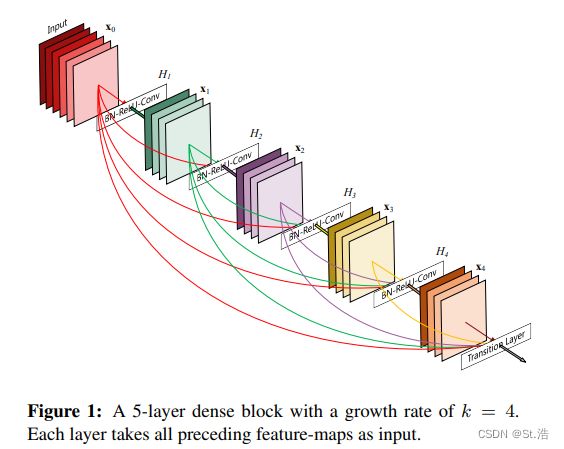

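The key point is the relationship between DenseNet's output and its input. Many articles simply drop the formula in front of you, and honestly it would be a surprise if anyone understood it that way. The most important concept here is concatenating tensors along one dimension, which will be familiar to anyone who knows GoogLeNet. A simple example: if $x_0$ has shape (Batch × channel1 × Sample) and $x_1$ has shape (Batch × channel2 × Sample), then $[x_0, x_1]$ in the formula below has shape (Batch × (channel1 + channel2) × Sample), i.e. the two are concatenated along the channel dimension.

$$x_l = H_l([x_0, x_1, x_2, \ldots, x_{l-1}])$$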
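To make the concatenation concrete, here is a minimal sketch that contrasts it with the residual addition. The tensor sizes (batch 2, 3 and 5 channels, 16 samples) and the `Conv1d` standing in for $H_l$ are made-up values for illustration only, not taken from the paper.

```python
import torch

batch, samples = 2, 16
x0 = torch.randn(batch, 3, samples)  # (Batch, channel1, Sample)
x1 = torch.randn(batch, 5, samples)  # (Batch, channel2, Sample)

# Dense connectivity: concatenate along the channel dimension (dim=1).
dense_input = torch.cat([x0, x1], dim=1)
print(dense_input.shape)  # torch.Size([2, 8, 16])

# Residual connectivity, for contrast: element-wise addition needs equal
# shapes and leaves the channel count unchanged.
h = torch.nn.Conv1d(3, 3, kernel_size=3, padding=1)  # hypothetical stand-in for H_l
residual_out = h(x0) + x0
print(residual_out.shape)  # torch.Size([2, 3, 16])
```

Concatenation only requires the batch and sample dimensions to match, which is why the channel counts of $x_0$ and $x_1$ are free to differ.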

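As a result, the input grows larger with every concatenation. How many channels does each such operation add? The paper gives this number, which is a hyperparameter, a name: the growth rate.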
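The channel growth can be worked out with plain arithmetic. The sketch below uses a hypothetical helper, `dense_block_channels`, and plugs in the values this post later uses for its first block (64 input channels, growth rate 32, 6 layers).

```python
def dense_block_channels(in_channels, growth_rate, num_layers):
    """Return the input channel count seen by each layer, plus the block output."""
    # Layer i sees the block input plus the features appended by the i layers before it.
    layer_inputs = [in_channels + i * growth_rate for i in range(num_layers)]
    block_output = in_channels + num_layers * growth_rate
    return layer_inputs, block_output


layer_inputs, block_output = dense_block_channels(64, 32, 6)
print(layer_inputs)  # [64, 96, 128, 160, 192, 224]
print(block_output)  # 256
```

Each layer contributes only `growth_rate` new channels, so the block output is the block input plus `num_layers * growth_rate`.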

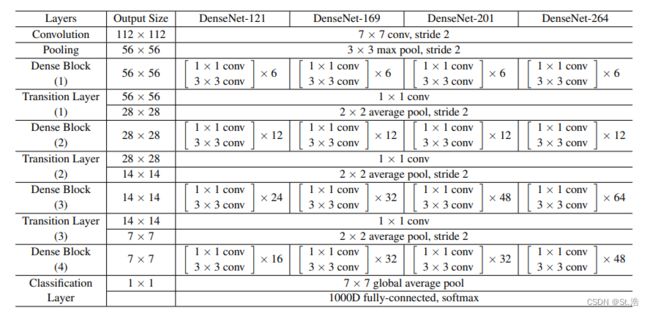

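Looking at the official parameter table, the model reproduced here is DenseNet-121. Each Dense Block contains a different number of DenseLayers, so the plan is to first write a DenseLayer, then a DenseBlock whose number of DenseLayers is configurable, then a Transition Layer that connects consecutive DenseBlocks, and finally assemble everything like building blocks.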

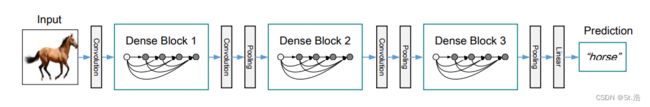

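The end result is a network like the one given in the original paper.

Start with the DenseLayer, which is not hard: a convolution with kernel size 1 followed by a convolution with kernel size 3, with the input and the output concatenated along the channel dimension at the end. In the code below, each convolution is preceded by batch normalization and an activation function (BN, ReLU, then Conv). Three parameters are needed:

1. The number of input channels

2. The number of intermediate (bottleneck) channels

3. The number of output channels. This parameter is really the growth rate: the DenseLayer's final output has as many channels as the input channels plus the output channels, i.e. the input channels plus the growth rate.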

import torch


class DenseLayer(torch.nn.Module):
    def __init__(self,in_channels,middle_channels=128,out_channels=32):
        super(DenseLayer, self).__init__()
        self.layer = torch.nn.Sequential(
            # 1x1 bottleneck convolution, preceded by BN and ReLU
            torch.nn.BatchNorm1d(in_channels),
            torch.nn.ReLU(inplace=True),
            torch.nn.Conv1d(in_channels,middle_channels,1),
            # kernel-size-3 convolution producing out_channels (the growth rate) new channels
            torch.nn.BatchNorm1d(middle_channels),
            torch.nn.ReLU(inplace=True),
            torch.nn.Conv1d(middle_channels,out_channels,3,padding=1)
        )

    def forward(self,x):
        # Concatenate the input and the new features along the channel dimension
        return torch.cat([x,self.layer(x)],dim=1)
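As a sanity check on the layer sizes, the parameter counts can be computed by hand. This is a small sketch with hypothetical helper names. It assumes the PyTorch defaults used above (convolutions with bias, batch normalization with a learnable weight and bias per channel).

```python
def conv1d_params(in_channels, out_channels, kernel_size, bias=True):
    # One weight per (input channel, output channel, kernel tap), plus one bias per output channel.
    return in_channels * out_channels * kernel_size + (out_channels if bias else 0)


def batchnorm1d_params(channels):
    # Learnable scale and shift for every channel.
    return 2 * channels


def dense_layer_params(in_channels, middle_channels=128, out_channels=32):
    return (batchnorm1d_params(in_channels)
            + conv1d_params(in_channels, middle_channels, 1)
            + batchnorm1d_params(middle_channels)
            + conv1d_params(middle_channels, out_channels, 3))


print(conv1d_params(64, 128, 1))  # 8320
print(conv1d_params(128, 32, 3))  # 12320
print(dense_layer_params(64))     # 21024
```

The first two numbers agree with the `Conv1d-7` and `Conv1d-10` rows of the torchsummary table at the end of this post.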

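Next comes the DenseBlock, which takes surprisingly little code. Because the number of layers it contains is not fixed, it inherits from torch.nn.Sequential rather than from torch.nn.Module, the parent class normally used when building a model. The parameters needed are:

1. The number of layers, i.e. how many DenseLayers this DenseBlock contains

2. The growth rate, i.e. how many channels each DenseLayer adds

3. The number of input channels, meaning the channels entering this DenseBlock

4. The number of intermediate channels, which is the same for every DenseLayer, so this parameter is of little practical use

A for loop then builds the DenseBlock by calling self.add_module(name, layer). Layer i takes in_channels + i * growth_rate input channels, because each DenseLayer before it has appended growth_rate channels. As an aside, 'denselayer%d'%(i) is Python's C-style string formatting: the %d is replaced by the value of the variable i.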

class DenseBlock(torch.nn.Sequential):
    def __init__(self,layer_num,growth_rate,in_channels,middele_channels=128):
        super(DenseBlock, self).__init__()
        for i in range(layer_num):
            layer = DenseLayer(in_channels+i*growth_rate,middele_channels,growth_rate)
            self.add_module('denselayer%d'%(i),layer)
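The same pattern can be tried in isolation. The sketch below is a toy example, not part of the DenseNet code: `TinyStack` and its `Linear` layers are hypothetical stand-ins chosen only to show how a `torch.nn.Sequential` subclass registers a variable number of named children with `add_module`.

```python
import torch


class TinyStack(torch.nn.Sequential):
    """Hypothetical stack of equally sized Linear layers, built in a loop."""

    def __init__(self, num_layers, width):
        super().__init__()
        for i in range(num_layers):
            # 'linear%d' % i yields 'linear0', 'linear1', ...
            self.add_module('linear%d' % i, torch.nn.Linear(width, width))


stack = TinyStack(3, 4)
names = [name for name, _ in stack.named_children()]
print(names)  # ['linear0', 'linear1', 'linear2']

# Sequential supplies forward(): children run in the order they were added.
out = stack(torch.randn(2, 4))
print(out.shape)  # torch.Size([2, 4])
```

Because `Sequential` already defines `forward`, the subclass only needs an `__init__`, which is why the DenseBlock above is so short.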

Transition

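The Transition layer halves the number of channels output by a DenseBlock, which keeps the channel count from accumulating and the parameter count from exploding. It ends with an average pooling that also halves the sequence length. Note that this implementation uses a convolution with kernel size 3, whereas the original paper uses a 1×1 convolution in its transition layers.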

class Transition(torch.nn.Sequential):
    def __init__(self,channels):
        super(Transition, self).__init__()
        self.add_module('norm',torch.nn.BatchNorm1d(channels))
        self.add_module('relu',torch.nn.ReLU(inplace=True))
        self.add_module('conv',torch.nn.Conv1d(channels,channels//2,3,padding=1))
        self.add_module('Avgpool',torch.nn.AvgPool1d(2))
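With the DenseBlock and Transition in place, the channel count and sequence length can be traced through the whole network on paper. The sketch below uses hypothetical helper names. The stem settings (a kernel-size-7, stride-2, padding-3 convolution followed by a kernel-size-3, stride-2, padding-1 max pooling) and the 224-sample input are taken from the complete code in this post.

```python
def conv_out_length(length, kernel_size, stride=1, padding=0):
    # Standard output-length formula for 1D convolution and pooling (dilation 1).
    return (length + 2 * padding - kernel_size) // stride + 1


def trace_densenet(length=224, layer_num=(6, 12, 24, 16), growth_rate=32, init_features=64):
    """Return (stage, channels, length) after the stem, each DenseBlock and each Transition."""
    length = conv_out_length(length, 7, 2, 3)  # stem convolution
    length = conv_out_length(length, 3, 2, 1)  # stem max pooling
    channels = init_features
    trace = [('stem', channels, length)]
    for i, n in enumerate(layer_num):
        channels += n * growth_rate            # every DenseLayer appends growth_rate channels
        trace.append(('block%d' % (i + 1), channels, length))
        if i < len(layer_num) - 1:             # no Transition after the last block
            channels //= 2                     # the Transition convolution halves the channels
            length = conv_out_length(length, 2, 2, 0)  # AvgPool1d(2) halves the length
            trace.append(('transition%d' % (i + 1), channels, length))
    return trace


for stage in trace_densenet():
    print(stage)
# ('stem', 64, 56)
# ('block1', 256, 56)
# ('transition1', 128, 28)
# ('block2', 512, 28)
# ('transition2', 256, 14)
# ('block3', 1024, 14)
# ('transition3', 512, 7)
# ('block4', 1024, 7)
```

The final 1024 channels match the `in_features=1024` of the first Linear layer in the printed model.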

Full code

import torch
from torchsummary import summary  # third-party package, used only for the layer summary


class DenseLayer(torch.nn.Module):
    def __init__(self,in_channels,middle_channels=128,out_channels=32):
        super(DenseLayer, self).__init__()
        self.layer = torch.nn.Sequential(
            torch.nn.BatchNorm1d(in_channels),
            torch.nn.ReLU(inplace=True),
            torch.nn.Conv1d(in_channels,middle_channels,1),
            torch.nn.BatchNorm1d(middle_channels),
            torch.nn.ReLU(inplace=True),
            torch.nn.Conv1d(middle_channels,out_channels,3,padding=1)
        )

    def forward(self,x):
        # Concatenate the input and the new features along the channel dimension
        return torch.cat([x,self.layer(x)],dim=1)


class DenseBlock(torch.nn.Sequential):
    def __init__(self,layer_num,growth_rate,in_channels,middele_channels=128):
        super(DenseBlock, self).__init__()
        for i in range(layer_num):
            # Layer i sees the block input plus i * growth_rate appended channels
            layer = DenseLayer(in_channels+i*growth_rate,middele_channels,growth_rate)
            self.add_module('denselayer%d'%(i),layer)


class Transition(torch.nn.Sequential):
    def __init__(self,channels):
        super(Transition, self).__init__()
        self.add_module('norm',torch.nn.BatchNorm1d(channels))
        self.add_module('relu',torch.nn.ReLU(inplace=True))
        # Halve the channels, then halve the sequence length
        self.add_module('conv',torch.nn.Conv1d(channels,channels//2,3,padding=1))
        self.add_module('Avgpool',torch.nn.AvgPool1d(2))


class DenseNet(torch.nn.Module):
    def __init__(self,layer_num=(6,12,24,16),growth_rate=32,init_features=64,in_channels=1,middele_channels=128,classes=5):
        super(DenseNet, self).__init__()
        # feature_channel_num tracks the running channel count while the network is assembled
        self.feature_channel_num=init_features
        # Stem: convolution, normalization, activation, max pooling
        self.conv=torch.nn.Conv1d(in_channels,self.feature_channel_num,7,2,3)
        self.norm=torch.nn.BatchNorm1d(self.feature_channel_num)
        self.relu=torch.nn.ReLU()
        self.maxpool=torch.nn.MaxPool1d(3,2,1)

        self.DenseBlock1=DenseBlock(layer_num[0],growth_rate,self.feature_channel_num,middele_channels)
        self.feature_channel_num=self.feature_channel_num+layer_num[0]*growth_rate
        self.Transition1=Transition(self.feature_channel_num)

        # Each Transition halves the channels, so the next block starts from feature_channel_num//2
        self.DenseBlock2=DenseBlock(layer_num[1],growth_rate,self.feature_channel_num//2,middele_channels)
        self.feature_channel_num=self.feature_channel_num//2+layer_num[1]*growth_rate
        self.Transition2 = Transition(self.feature_channel_num)

        self.DenseBlock3 = DenseBlock(layer_num[2],growth_rate,self.feature_channel_num//2,middele_channels)
        self.feature_channel_num=self.feature_channel_num//2+layer_num[2]*growth_rate
        self.Transition3 = Transition(self.feature_channel_num)

        self.DenseBlock4 = DenseBlock(layer_num[3],growth_rate,self.feature_channel_num//2,middele_channels)
        self.feature_channel_num=self.feature_channel_num//2+layer_num[3]*growth_rate

        # Global average pooling followed by a two-layer classifier
        self.avgpool=torch.nn.AdaptiveAvgPool1d(1)
        self.classifer = torch.nn.Sequential(
            torch.nn.Linear(self.feature_channel_num, self.feature_channel_num//2),
            torch.nn.ReLU(),
            torch.nn.Dropout(0.5),
            torch.nn.Linear(self.feature_channel_num//2, classes),
        )

    def forward(self,x):
        x = self.conv(x)
        x = self.norm(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.DenseBlock1(x)
        x = self.Transition1(x)
        x = self.DenseBlock2(x)
        x = self.Transition2(x)
        x = self.DenseBlock3(x)
        x = self.Transition3(x)
        x = self.DenseBlock4(x)

        x = self.avgpool(x)
        # Flatten (Batch, channels, 1) to (Batch, channels)
        x = x.view(-1,self.feature_channel_num)
        x = self.classifer(x)
        return x


if __name__ == '__main__':
    # One single-channel signal with 224 samples: (Batch, channel, Sample)
    inputs = torch.randn(size=(1,1,224))
    model = DenseNet(layer_num=(6,12,24,16),growth_rate=32,in_channels=1,classes=5)
    output = model(inputs)
    print(output.shape)
    print(model)
    summary(model=model, input_size=(1, 224), device='cpu')

Output

Output shape: torch.Size([1, 5])

DenseNet(

(conv): Conv1d(1, 64, kernel_size=(7,), stride=(2,), padding=(3,))

(norm): BatchNorm1d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU()

(maxpool): MaxPool1d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(DenseBlock1): DenseBlock(

(denselayer0): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(64, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer1): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(96, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer2): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(128, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer3): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(160, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer4): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(192, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer5): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(224, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(224, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

)

(Transition1): Transition(

(norm): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv): Conv1d(256, 128, kernel_size=(3,), stride=(1,), padding=(1,))

(Avgpool): AvgPool1d(kernel_size=(2,), stride=(2,), padding=(0,))

)

(DenseBlock2): DenseBlock(

(denselayer0): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(128, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer1): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(160, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer2): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(192, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer3): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(224, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(224, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer4): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(256, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer5): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(288, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(288, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer6): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(320, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer7): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(352, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(352, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer8): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(384, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer9): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(416, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer10): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(448, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(448, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer11): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(480, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(480, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

)

(Transition2): Transition(

(norm): BatchNorm1d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv): Conv1d(512, 256, kernel_size=(3,), stride=(1,), padding=(1,))

(Avgpool): AvgPool1d(kernel_size=(2,), stride=(2,), padding=(0,))

)

(DenseBlock3): DenseBlock(

(denselayer0): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(256, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer1): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(288, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(288, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer2): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(320, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer3): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(352, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(352, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer4): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(384, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer5): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(416, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer6): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(448, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(448, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer7): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(480, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(480, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer8): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(512, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer9): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(544, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(544, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer10): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(576, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer11): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(608, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(608, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer12): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(640, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(640, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer13): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(672, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(672, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer14): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(704, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(704, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer15): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(736, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(736, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer16): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(768, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(768, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer17): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(800, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(800, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer18): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(832, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(832, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer19): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(864, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(864, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer20): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(896, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(896, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer21): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(928, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(928, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer22): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(960, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer23): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(992, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(992, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

)

(Transition3): Transition(

(norm): BatchNorm1d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv): Conv1d(1024, 512, kernel_size=(3,), stride=(1,), padding=(1,))

(Avgpool): AvgPool1d(kernel_size=(2,), stride=(2,), padding=(0,))

)

(DenseBlock4): DenseBlock(

(denselayer0): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(512, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer1): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(544, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(544, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer2): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(576, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer3): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(608, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(608, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer4): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(640, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(640, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer5): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(672, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(672, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer6): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(704, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(704, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer7): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(736, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(736, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer8): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(768, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(768, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer9): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(800, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(800, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer10): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(832, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(832, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer11): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(864, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(864, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer12): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(896, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(896, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer13): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(928, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(928, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer14): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(960, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

(denselayer15): DenseLayer(

(layer): Sequential(

(0): BatchNorm1d(992, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): ReLU(inplace=True)

(2): Conv1d(992, 128, kernel_size=(1,), stride=(1,))

(3): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

(5): Conv1d(128, 32, kernel_size=(3,), stride=(1,), padding=(1,))

)

)

)

(avgpool): AdaptiveAvgPool1d(output_size=1)

(classifer): Sequential(

(0): Linear(in_features=1024, out_features=512, bias=True)

(1): ReLU()

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=512, out_features=5, bias=True)

)

)

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv1d-1 [-1, 64, 112] 512

BatchNorm1d-2 [-1, 64, 112] 128

ReLU-3 [-1, 64, 112] 0

MaxPool1d-4 [-1, 64, 56] 0

BatchNorm1d-5 [-1, 64, 56] 128

ReLU-6 [-1, 64, 56] 0

Conv1d-7 [-1, 128, 56] 8,320

BatchNorm1d-8 [-1, 128, 56] 256

ReLU-9 [-1, 128, 56] 0

Conv1d-10 [-1, 32, 56] 12,320

DenseLayer-11 [-1, 96, 56] 0

BatchNorm1d-12 [-1, 96, 56] 192

ReLU-13 [-1, 96, 56] 0

Conv1d-14 [-1, 128, 56] 12,416

BatchNorm1d-15 [-1, 128, 56] 256

ReLU-16 [-1, 128, 56] 0

Conv1d-17 [-1, 32, 56] 12,320

DenseLayer-18 [-1, 128, 56] 0

BatchNorm1d-19 [-1, 128, 56] 256

ReLU-20 [-1, 128, 56] 0

Conv1d-21 [-1, 128, 56] 16,512

BatchNorm1d-22 [-1, 128, 56] 256

ReLU-23 [-1, 128, 56] 0

Conv1d-24 [-1, 32, 56] 12,320

DenseLayer-25 [-1, 160, 56] 0

BatchNorm1d-26 [-1, 160, 56] 320

ReLU-27 [-1, 160, 56] 0

Conv1d-28 [-1, 128, 56] 20,608

BatchNorm1d-29 [-1, 128, 56] 256

ReLU-30 [-1, 128, 56] 0

Conv1d-31 [-1, 32, 56] 12,320

DenseLayer-32 [-1, 192, 56] 0

BatchNorm1d-33 [-1, 192, 56] 384

ReLU-34 [-1, 192, 56] 0

Conv1d-35 [-1, 128, 56] 24,704

BatchNorm1d-36 [-1, 128, 56] 256

ReLU-37 [-1, 128, 56] 0

Conv1d-38 [-1, 32, 56] 12,320

DenseLayer-39 [-1, 224, 56] 0

BatchNorm1d-40 [-1, 224, 56] 448

ReLU-41 [-1, 224, 56] 0

Conv1d-42 [-1, 128, 56] 28,800

BatchNorm1d-43 [-1, 128, 56] 256

ReLU-44 [-1, 128, 56] 0

Conv1d-45 [-1, 32, 56] 12,320

DenseLayer-46 [-1, 256, 56] 0

BatchNorm1d-47 [-1, 256, 56] 512

ReLU-48 [-1, 256, 56] 0

Conv1d-49 [-1, 128, 56] 98,432

AvgPool1d-50 [-1, 128, 28] 0

BatchNorm1d-51 [-1, 128, 28] 256

ReLU-52 [-1, 128, 28] 0

Conv1d-53 [-1, 128, 28] 16,512

BatchNorm1d-54 [-1, 128, 28] 256

ReLU-55 [-1, 128, 28] 0

Conv1d-56 [-1, 32, 28] 12,320

DenseLayer-57 [-1, 160, 28] 0

BatchNorm1d-58 [-1, 160, 28] 320

ReLU-59 [-1, 160, 28] 0

Conv1d-60 [-1, 128, 28] 20,608

BatchNorm1d-61 [-1, 128, 28] 256

ReLU-62 [-1, 128, 28] 0

Conv1d-63 [-1, 32, 28] 12,320

DenseLayer-64 [-1, 192, 28] 0

BatchNorm1d-65 [-1, 192, 28] 384

ReLU-66 [-1, 192, 28] 0

Conv1d-67 [-1, 128, 28] 24,704

BatchNorm1d-68 [-1, 128, 28] 256

ReLU-69 [-1, 128, 28] 0

Conv1d-70 [-1, 32, 28] 12,320

DenseLayer-71 [-1, 224, 28] 0

BatchNorm1d-72 [-1, 224, 28] 448

ReLU-73 [-1, 224, 28] 0

Conv1d-74 [-1, 128, 28] 28,800

BatchNorm1d-75 [-1, 128, 28] 256

ReLU-76 [-1, 128, 28] 0

Conv1d-77 [-1, 32, 28] 12,320

DenseLayer-78 [-1, 256, 28] 0

BatchNorm1d-79 [-1, 256, 28] 512

ReLU-80 [-1, 256, 28] 0

Conv1d-81 [-1, 128, 28] 32,896

BatchNorm1d-82 [-1, 128, 28] 256

ReLU-83 [-1, 128, 28] 0

Conv1d-84 [-1, 32, 28] 12,320

DenseLayer-85 [-1, 288, 28] 0

BatchNorm1d-86 [-1, 288, 28] 576

ReLU-87 [-1, 288, 28] 0

Conv1d-88 [-1, 128, 28] 36,992

BatchNorm1d-89 [-1, 128, 28] 256

ReLU-90 [-1, 128, 28] 0

Conv1d-91 [-1, 32, 28] 12,320

DenseLayer-92 [-1, 320, 28] 0

BatchNorm1d-93 [-1, 320, 28] 640

ReLU-94 [-1, 320, 28] 0

Conv1d-95 [-1, 128, 28] 41,088

BatchNorm1d-96 [-1, 128, 28] 256

ReLU-97 [-1, 128, 28] 0

Conv1d-98 [-1, 32, 28] 12,320

DenseLayer-99 [-1, 352, 28] 0

BatchNorm1d-100 [-1, 352, 28] 704

ReLU-101 [-1, 352, 28] 0

Conv1d-102 [-1, 128, 28] 45,184

BatchNorm1d-103 [-1, 128, 28] 256

ReLU-104 [-1, 128, 28] 0

Conv1d-105 [-1, 32, 28] 12,320

DenseLayer-106 [-1, 384, 28] 0

BatchNorm1d-107 [-1, 384, 28] 768

ReLU-108 [-1, 384, 28] 0

Conv1d-109 [-1, 128, 28] 49,280

BatchNorm1d-110 [-1, 128, 28] 256

ReLU-111 [-1, 128, 28] 0

Conv1d-112 [-1, 32, 28] 12,320

DenseLayer-113 [-1, 416, 28] 0

BatchNorm1d-114 [-1, 416, 28] 832

ReLU-115 [-1, 416, 28] 0

Conv1d-116 [-1, 128, 28] 53,376

BatchNorm1d-117 [-1, 128, 28] 256

ReLU-118 [-1, 128, 28] 0

Conv1d-119 [-1, 32, 28] 12,320

DenseLayer-120 [-1, 448, 28] 0

BatchNorm1d-121 [-1, 448, 28] 896

ReLU-122 [-1, 448, 28] 0

Conv1d-123 [-1, 128, 28] 57,472

BatchNorm1d-124 [-1, 128, 28] 256

ReLU-125 [-1, 128, 28] 0

Conv1d-126 [-1, 32, 28] 12,320

DenseLayer-127 [-1, 480, 28] 0

BatchNorm1d-128 [-1, 480, 28] 960

ReLU-129 [-1, 480, 28] 0

Conv1d-130 [-1, 128, 28] 61,568

BatchNorm1d-131 [-1, 128, 28] 256

ReLU-132 [-1, 128, 28] 0

Conv1d-133 [-1, 32, 28] 12,320

DenseLayer-134 [-1, 512, 28] 0

BatchNorm1d-135 [-1, 512, 28] 1,024

ReLU-136 [-1, 512, 28] 0

Conv1d-137 [-1, 256, 28] 393,472

AvgPool1d-138 [-1, 256, 14] 0

BatchNorm1d-139 [-1, 256, 14] 512

ReLU-140 [-1, 256, 14] 0

Conv1d-141 [-1, 128, 14] 32,896

BatchNorm1d-142 [-1, 128, 14] 256

ReLU-143 [-1, 128, 14] 0

Conv1d-144 [-1, 32, 14] 12,320

DenseLayer-145 [-1, 288, 14] 0

BatchNorm1d-146 [-1, 288, 14] 576

ReLU-147 [-1, 288, 14] 0

Conv1d-148 [-1, 128, 14] 36,992

BatchNorm1d-149 [-1, 128, 14] 256

ReLU-150 [-1, 128, 14] 0

Conv1d-151 [-1, 32, 14] 12,320

DenseLayer-152 [-1, 320, 14] 0

BatchNorm1d-153 [-1, 320, 14] 640

ReLU-154 [-1, 320, 14] 0

Conv1d-155 [-1, 128, 14] 41,088

BatchNorm1d-156 [-1, 128, 14] 256

ReLU-157 [-1, 128, 14] 0

Conv1d-158 [-1, 32, 14] 12,320

DenseLayer-159 [-1, 352, 14] 0

BatchNorm1d-160 [-1, 352, 14] 704

ReLU-161 [-1, 352, 14] 0

Conv1d-162 [-1, 128, 14] 45,184

BatchNorm1d-163 [-1, 128, 14] 256

ReLU-164 [-1, 128, 14] 0

Conv1d-165 [-1, 32, 14] 12,320

DenseLayer-166 [-1, 384, 14] 0

BatchNorm1d-167 [-1, 384, 14] 768

ReLU-168 [-1, 384, 14] 0

Conv1d-169 [-1, 128, 14] 49,280

BatchNorm1d-170 [-1, 128, 14] 256

ReLU-171 [-1, 128, 14] 0

Conv1d-172 [-1, 32, 14] 12,320

DenseLayer-173 [-1, 416, 14] 0

BatchNorm1d-174 [-1, 416, 14] 832

ReLU-175 [-1, 416, 14] 0

Conv1d-176 [-1, 128, 14] 53,376

BatchNorm1d-177 [-1, 128, 14] 256

ReLU-178 [-1, 128, 14] 0

Conv1d-179 [-1, 32, 14] 12,320

DenseLayer-180 [-1, 448, 14] 0

BatchNorm1d-181 [-1, 448, 14] 896

ReLU-182 [-1, 448, 14] 0

Conv1d-183 [-1, 128, 14] 57,472

BatchNorm1d-184 [-1, 128, 14] 256

ReLU-185 [-1, 128, 14] 0

Conv1d-186 [-1, 32, 14] 12,320

DenseLayer-187 [-1, 480, 14] 0

BatchNorm1d-188 [-1, 480, 14] 960

ReLU-189 [-1, 480, 14] 0

Conv1d-190 [-1, 128, 14] 61,568

BatchNorm1d-191 [-1, 128, 14] 256

ReLU-192 [-1, 128, 14] 0

Conv1d-193 [-1, 32, 14] 12,320

DenseLayer-194 [-1, 512, 14] 0

BatchNorm1d-195 [-1, 512, 14] 1,024

ReLU-196 [-1, 512, 14] 0

Conv1d-197 [-1, 128, 14] 65,664

BatchNorm1d-198 [-1, 128, 14] 256

ReLU-199 [-1, 128, 14] 0

Conv1d-200 [-1, 32, 14] 12,320

DenseLayer-201 [-1, 544, 14] 0

BatchNorm1d-202 [-1, 544, 14] 1,088

ReLU-203 [-1, 544, 14] 0

Conv1d-204 [-1, 128, 14] 69,760

BatchNorm1d-205 [-1, 128, 14] 256

ReLU-206 [-1, 128, 14] 0

Conv1d-207 [-1, 32, 14] 12,320

DenseLayer-208 [-1, 576, 14] 0

BatchNorm1d-209 [-1, 576, 14] 1,152

ReLU-210 [-1, 576, 14] 0

Conv1d-211 [-1, 128, 14] 73,856

BatchNorm1d-212 [-1, 128, 14] 256

ReLU-213 [-1, 128, 14] 0

Conv1d-214 [-1, 32, 14] 12,320

DenseLayer-215 [-1, 608, 14] 0

BatchNorm1d-216 [-1, 608, 14] 1,216

ReLU-217 [-1, 608, 14] 0

Conv1d-218 [-1, 128, 14] 77,952

BatchNorm1d-219 [-1, 128, 14] 256

ReLU-220 [-1, 128, 14] 0

Conv1d-221 [-1, 32, 14] 12,320

DenseLayer-222 [-1, 640, 14] 0

BatchNorm1d-223 [-1, 640, 14] 1,280

ReLU-224 [-1, 640, 14] 0

Conv1d-225 [-1, 128, 14] 82,048

BatchNorm1d-226 [-1, 128, 14] 256

ReLU-227 [-1, 128, 14] 0

Conv1d-228 [-1, 32, 14] 12,320

DenseLayer-229 [-1, 672, 14] 0

BatchNorm1d-230 [-1, 672, 14] 1,344

ReLU-231 [-1, 672, 14] 0

Conv1d-232 [-1, 128, 14] 86,144

BatchNorm1d-233 [-1, 128, 14] 256

ReLU-234 [-1, 128, 14] 0

Conv1d-235 [-1, 32, 14] 12,320

DenseLayer-236 [-1, 704, 14] 0

BatchNorm1d-237 [-1, 704, 14] 1,408

ReLU-238 [-1, 704, 14] 0

Conv1d-239 [-1, 128, 14] 90,240

BatchNorm1d-240 [-1, 128, 14] 256

ReLU-241 [-1, 128, 14] 0

Conv1d-242 [-1, 32, 14] 12,320

DenseLayer-243 [-1, 736, 14] 0

BatchNorm1d-244 [-1, 736, 14] 1,472

ReLU-245 [-1, 736, 14] 0

Conv1d-246 [-1, 128, 14] 94,336

BatchNorm1d-247 [-1, 128, 14] 256

ReLU-248 [-1, 128, 14] 0

Conv1d-249 [-1, 32, 14] 12,320

DenseLayer-250 [-1, 768, 14] 0

BatchNorm1d-251 [-1, 768, 14] 1,536

ReLU-252 [-1, 768, 14] 0

Conv1d-253 [-1, 128, 14] 98,432

BatchNorm1d-254 [-1, 128, 14] 256

ReLU-255 [-1, 128, 14] 0

Conv1d-256 [-1, 32, 14] 12,320

DenseLayer-257 [-1, 800, 14] 0

BatchNorm1d-258 [-1, 800, 14] 1,600

ReLU-259 [-1, 800, 14] 0

Conv1d-260 [-1, 128, 14] 102,528

BatchNorm1d-261 [-1, 128, 14] 256

ReLU-262 [-1, 128, 14] 0

Conv1d-263 [-1, 32, 14] 12,320

DenseLayer-264 [-1, 832, 14] 0

BatchNorm1d-265 [-1, 832, 14] 1,664

ReLU-266 [-1, 832, 14] 0

Conv1d-267 [-1, 128, 14] 106,624

BatchNorm1d-268 [-1, 128, 14] 256

ReLU-269 [-1, 128, 14] 0

Conv1d-270 [-1, 32, 14] 12,320

DenseLayer-271 [-1, 864, 14] 0

BatchNorm1d-272 [-1, 864, 14] 1,728

ReLU-273 [-1, 864, 14] 0

Conv1d-274 [-1, 128, 14] 110,720

BatchNorm1d-275 [-1, 128, 14] 256

ReLU-276 [-1, 128, 14] 0

Conv1d-277 [-1, 32, 14] 12,320

DenseLayer-278 [-1, 896, 14] 0

BatchNorm1d-279 [-1, 896, 14] 1,792

ReLU-280 [-1, 896, 14] 0

Conv1d-281 [-1, 128, 14] 114,816

BatchNorm1d-282 [-1, 128, 14] 256

ReLU-283 [-1, 128, 14] 0

Conv1d-284 [-1, 32, 14] 12,320

DenseLayer-285 [-1, 928, 14] 0

BatchNorm1d-286 [-1, 928, 14] 1,856

ReLU-287 [-1, 928, 14] 0

Conv1d-288 [-1, 128, 14] 118,912

BatchNorm1d-289 [-1, 128, 14] 256

ReLU-290 [-1, 128, 14] 0

Conv1d-291 [-1, 32, 14] 12,320

DenseLayer-292 [-1, 960, 14] 0

BatchNorm1d-293 [-1, 960, 14] 1,920

ReLU-294 [-1, 960, 14] 0

Conv1d-295 [-1, 128, 14] 123,008

BatchNorm1d-296 [-1, 128, 14] 256

ReLU-297 [-1, 128, 14] 0

Conv1d-298 [-1, 32, 14] 12,320

DenseLayer-299 [-1, 992, 14] 0

BatchNorm1d-300 [-1, 992, 14] 1,984

ReLU-301 [-1, 992, 14] 0

Conv1d-302 [-1, 128, 14] 127,104

BatchNorm1d-303 [-1, 128, 14] 256

ReLU-304 [-1, 128, 14] 0

Conv1d-305 [-1, 32, 14] 12,320

DenseLayer-306 [-1, 1024, 14] 0

BatchNorm1d-307 [-1, 1024, 14] 2,048

ReLU-308 [-1, 1024, 14] 0

Conv1d-309 [-1, 512, 14] 1,573,376

AvgPool1d-310 [-1, 512, 7] 0

BatchNorm1d-311 [-1, 512, 7] 1,024

ReLU-312 [-1, 512, 7] 0

Conv1d-313 [-1, 128, 7] 65,664

BatchNorm1d-314 [-1, 128, 7] 256

ReLU-315 [-1, 128, 7] 0

Conv1d-316 [-1, 32, 7] 12,320

DenseLayer-317 [-1, 544, 7] 0

BatchNorm1d-318 [-1, 544, 7] 1,088

ReLU-319 [-1, 544, 7] 0

Conv1d-320 [-1, 128, 7] 69,760

BatchNorm1d-321 [-1, 128, 7] 256

ReLU-322 [-1, 128, 7] 0

Conv1d-323 [-1, 32, 7] 12,320

DenseLayer-324 [-1, 576, 7] 0

BatchNorm1d-325 [-1, 576, 7] 1,152

ReLU-326 [-1, 576, 7] 0

Conv1d-327 [-1, 128, 7] 73,856

BatchNorm1d-328 [-1, 128, 7] 256

ReLU-329 [-1, 128, 7] 0

Conv1d-330 [-1, 32, 7] 12,320

DenseLayer-331 [-1, 608, 7] 0

BatchNorm1d-332 [-1, 608, 7] 1,216

ReLU-333 [-1, 608, 7] 0

Conv1d-334 [-1, 128, 7] 77,952

BatchNorm1d-335 [-1, 128, 7] 256

ReLU-336 [-1, 128, 7] 0

Conv1d-337 [-1, 32, 7] 12,320

DenseLayer-338 [-1, 640, 7] 0

BatchNorm1d-339 [-1, 640, 7] 1,280

ReLU-340 [-1, 640, 7] 0

Conv1d-341 [-1, 128, 7] 82,048

BatchNorm1d-342 [-1, 128, 7] 256

ReLU-343 [-1, 128, 7] 0

Conv1d-344 [-1, 32, 7] 12,320

DenseLayer-345 [-1, 672, 7] 0

BatchNorm1d-346 [-1, 672, 7] 1,344

ReLU-347 [-1, 672, 7] 0

Conv1d-348 [-1, 128, 7] 86,144

BatchNorm1d-349 [-1, 128, 7] 256

ReLU-350 [-1, 128, 7] 0

Conv1d-351 [-1, 32, 7] 12,320

DenseLayer-352 [-1, 704, 7] 0

BatchNorm1d-353 [-1, 704, 7] 1,408

ReLU-354 [-1, 704, 7] 0

Conv1d-355 [-1, 128, 7] 90,240

BatchNorm1d-356 [-1, 128, 7] 256

ReLU-357 [-1, 128, 7] 0

Conv1d-358 [-1, 32, 7] 12,320

DenseLayer-359 [-1, 736, 7] 0

BatchNorm1d-360 [-1, 736, 7] 1,472

ReLU-361 [-1, 736, 7] 0

Conv1d-362 [-1, 128, 7] 94,336

BatchNorm1d-363 [-1, 128, 7] 256

ReLU-364 [-1, 128, 7] 0

Conv1d-365 [-1, 32, 7] 12,320

DenseLayer-366 [-1, 768, 7] 0

BatchNorm1d-367 [-1, 768, 7] 1,536

ReLU-368 [-1, 768, 7] 0

Conv1d-369 [-1, 128, 7] 98,432

BatchNorm1d-370 [-1, 128, 7] 256

ReLU-371 [-1, 128, 7] 0

Conv1d-372 [-1, 32, 7] 12,320

DenseLayer-373 [-1, 800, 7] 0

BatchNorm1d-374 [-1, 800, 7] 1,600

ReLU-375 [-1, 800, 7] 0

Conv1d-376 [-1, 128, 7] 102,528

BatchNorm1d-377 [-1, 128, 7] 256

ReLU-378 [-1, 128, 7] 0

Conv1d-379 [-1, 32, 7] 12,320

DenseLayer-380 [-1, 832, 7] 0

BatchNorm1d-381 [-1, 832, 7] 1,664

ReLU-382 [-1, 832, 7] 0

Conv1d-383 [-1, 128, 7] 106,624

BatchNorm1d-384 [-1, 128, 7] 256

ReLU-385 [-1, 128, 7] 0

Conv1d-386 [-1, 32, 7] 12,320

DenseLayer-387 [-1, 864, 7] 0

BatchNorm1d-388 [-1, 864, 7] 1,728

ReLU-389 [-1, 864, 7] 0

Conv1d-390 [-1, 128, 7] 110,720

BatchNorm1d-391 [-1, 128, 7] 256

ReLU-392 [-1, 128, 7] 0

Conv1d-393 [-1, 32, 7] 12,320

DenseLayer-394 [-1, 896, 7] 0

BatchNorm1d-395 [-1, 896, 7] 1,792

ReLU-396 [-1, 896, 7] 0

Conv1d-397 [-1, 128, 7] 114,816

BatchNorm1d-398 [-1, 128, 7] 256

ReLU-399 [-1, 128, 7] 0

Conv1d-400 [-1, 32, 7] 12,320

DenseLayer-401 [-1, 928, 7] 0

BatchNorm1d-402 [-1, 928, 7] 1,856

ReLU-403 [-1, 928, 7] 0

Conv1d-404 [-1, 128, 7] 118,912

BatchNorm1d-405 [-1, 128, 7] 256

ReLU-406 [-1, 128, 7] 0

Conv1d-407 [-1, 32, 7] 12,320

DenseLayer-408 [-1, 960, 7] 0

BatchNorm1d-409 [-1, 960, 7] 1,920

ReLU-410 [-1, 960, 7] 0

Conv1d-411 [-1, 128, 7] 123,008

BatchNorm1d-412 [-1, 128, 7] 256

ReLU-413 [-1, 128, 7] 0

Conv1d-414 [-1, 32, 7] 12,320

DenseLayer-415 [-1, 992, 7] 0

BatchNorm1d-416 [-1, 992, 7] 1,984

ReLU-417 [-1, 992, 7] 0

Conv1d-418 [-1, 128, 7] 127,104

BatchNorm1d-419 [-1, 128, 7] 256

ReLU-420 [-1, 128, 7] 0

Conv1d-421 [-1, 32, 7] 12,320

DenseLayer-422 [-1, 1024, 7] 0

AdaptiveAvgPool1d-423 [-1, 1024, 1] 0

Linear-424 [-1, 512] 524,800

ReLU-425 [-1, 512] 0

Dropout-426 [-1, 512] 0

Linear-427 [-1, 5] 2,565

================================================================

Total params: 7,431,301

Trainable params: 7,431,301

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.00

Forward/backward pass size (MB): 15.11

Params size (MB): 28.35

Estimated Total Size (MB): 43.46

----------------------------------------------------------------

Process finished with exit code 0
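The parameter counts in the summary table can be checked by hand. A `Conv1d` layer has `in × out × kernel + out` parameters, an affine `BatchNorm1d` has two per channel, and a `Linear` layer has `in × out + out`. The sketch below adds these up for the whole network and converts the total to megabytes at 4 bytes per float32 parameter. All function names are hypothetical helpers. The kernel size of 3 for the transition convolution is inferred from the table (for example, 256 × 128 × 3 + 128 = 98,432 for `Conv1d-49`), and the stem's 512 + 128 parameters are copied from the first two rows rather than derived.

```python
def conv1d_params(in_ch, out_ch, kernel_size):
    """Weights plus one bias per output channel."""
    return in_ch * out_ch * kernel_size + out_ch


def batchnorm1d_params(num_features):
    """Affine BatchNorm1d learns one scale and one shift per channel."""
    return 2 * num_features


def linear_params(in_features, out_features):
    """Weight matrix plus bias vector."""
    return in_features * out_features + out_features


def dense_layer_params(in_ch, middle=128, growth=32):
    """BN -> ReLU -> 1x1 conv -> BN -> ReLU -> 3-wide conv, as in the printed DenseLayer."""
    return (batchnorm1d_params(in_ch) + conv1d_params(in_ch, middle, 1)
            + batchnorm1d_params(middle) + conv1d_params(middle, growth, 3))


def transition_params(in_ch, kernel_size=3):
    """BN plus a conv that halves the channels; kernel_size=3 is inferred from the table."""
    return batchnorm1d_params(in_ch) + conv1d_params(in_ch, in_ch // 2, kernel_size)


def total_params(block_config=(6, 12, 24, 16), growth=32, num_classes=5):
    total = 512 + 128  # stem Conv1d-1 and BatchNorm1d-2, copied from the table
    channels = 64
    for idx, num_layers in enumerate(block_config):
        for _ in range(num_layers):
            total += dense_layer_params(channels, growth=growth)
            channels += growth  # concatenation widens the input of the next layer
        if idx != len(block_config) - 1:
            total += transition_params(channels)
            channels //= 2
    total += linear_params(channels, 512) + linear_params(512, num_classes)
    return total


def size_mb(num_params, bytes_per_param=4):
    """Memory taken by the parameters, assuming float32 storage."""
    return num_params * bytes_per_param / 1024 ** 2


print(total_params())                     # compare with "Total params" in the table
print(round(size_mb(total_params()), 2))  # compare with "Params size (MB)"
```

Under these assumptions the total comes out to 7,431,301 parameters and about 28.35 MB, matching the summary; adding the reported 15.11 MB for the forward/backward pass gives the 43.46 MB estimated total.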

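If you need a training template, you can purchase it from the paid materials in 浩浩的科研笔记 (Haohao's Research Notes). It comes with classic code for all of the one-dimensional neural network models, and you can switch freely between them inside the template.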