MapReduce Case Studies: Combiner, Serialization, Partitioning, and ReduceTask

I. Combiner

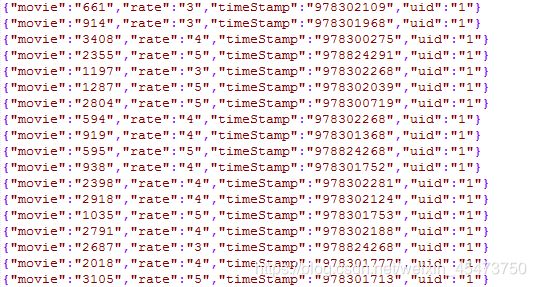

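Raw data

The data consists of movie ratings. Each record contains the movie name, the rating, the time, and the reviewer ID. Each line is one JSON record, which the mapper below parses with Jackson's ObjectMapper.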

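Requirement: find the 10 most popular movies

That is, find the 10 movies with the most ratings: count first, then sort, then take the top 10.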
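Before writing the job, the count, sort, take-top-N plan can be tried out in plain Java. This is a single-JVM illustration with my own hypothetical names (TopNSketch, topN), not part of the MapReduce code. It keeps a list of movies per count, so movies that tie on their count are not lost. A TreeMap keyed only by the count would silently drop all but one of them.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class TopNSketch {

    // Returns up to n movies, ordered by how many ratings each one received (most first)
    static List<String> topN(List<String> ratedMovies, int n) {
        // Step 1 (count): number of ratings per movie
        Map<String, Integer> counts = new HashMap<>();
        for (String movie : ratedMovies) {
            counts.merge(movie, 1, Integer::sum);
        }

        // Step 2 (sort): count -> movies, highest count first.
        // A list per count keeps tied movies instead of overwriting them.
        TreeMap<Integer, List<String>> byCount = new TreeMap<>(Collections.reverseOrder());
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            byCount.computeIfAbsent(e.getValue(), c -> new ArrayList<>()).add(e.getKey());
        }

        // Step 3 (take): walk from the highest count down until n movies are collected
        List<String> result = new ArrayList<>();
        for (List<String> movies : byCount.values()) {
            for (String movie : movies) {
                if (result.size() == n) {
                    return result;
                }
                result.add(movie);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> ratings = Arrays.asList("A", "B", "A", "C", "B", "A", "D");
        System.out.println(topN(ratings, 2)); // prints [A, B]
    }
}
```

The reducer in RateHotN applies the same idea. There, the counting happens in reduce() and the sorting and taking happen in cleanup().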

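Steps to implement a custom Combiner:

<1> Define a combiner class that extends Reducer and override its reduce method.

<2> Register it in the job: job.setCombinerClass(WordcountCombiner.class); (in this example: RateHotNCombiner.class)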
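You can see without Hadoop why the combiner must add up its input values instead of counting them. Hadoop treats the combiner as an optimization. It may run the combiner zero or more times on a map task's output, for example again when several spill files are merged. So the values a combiner receives may already be partial counts. The sketch below uses made-up names (CombinerContractSketch, sumCombine, countCombine). It applies the combine step to each spill and then once more. Summing gives the right total. Counting does not.

```java
import java.util.Arrays;
import java.util.List;

class CombinerContractSketch {

    // Correct combiner logic: add the values, because each one may already be a partial count
    static int sumCombine(List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // Buggy combiner logic: counts how many values arrived and ignores what they hold
    static int countCombine(List<Integer> values) {
        return values.size();
    }

    public static void main(String[] args) {
        // One map task sees the same movie five times, written out in three spills
        List<Integer> spill1 = Arrays.asList(1, 1);
        List<Integer> spill2 = Arrays.asList(1, 1);
        List<Integer> spill3 = Arrays.asList(1);

        // Combiner applied per spill, then once more on the already-combined values
        int good = sumCombine(Arrays.asList(sumCombine(spill1), sumCombine(spill2), sumCombine(spill3)));
        int bad = countCombine(Arrays.asList(countCombine(spill1), countCombine(spill2), countCombine(spill3)));

        System.out.println("sum-based combiner:   " + good); // 5
        System.out.println("count-based combiner: " + bad);  // 3
    }
}
```

On raw mapper output, where every value is 1, both versions agree. That is why a counting combiner can look correct in small tests.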

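The code is as follows.

Because we use a Combiner, the map output is merged locally once before it enters the reduce phase. We therefore need two classes: a driver class, which also contains the Mapper and Reducer, and a Combiner class.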

(1) RateHotN.java

```java
import com.Top.UidTopBean; // the author's bean for one JSON rating record (not shown in this post)

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
import org.codehaus.jackson.map.ObjectMapper;

import java.io.IOException;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class RateHotN {

    // Emits (movie, 1) for every rating record
    public static class RateHotNMap extends Mapper<LongWritable, Text, Text, IntWritable> {

        private final ObjectMapper objectMapper = new ObjectMapper();
        private final IntWritable one = new IntWritable(1);

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Each input line is one JSON record
            UidTopBean uidTopBean = objectMapper.readValue(value.toString(), UidTopBean.class);
            context.write(new Text(uidTopBean.getMovie()), one);
        }
    }

    public static class RateHotNReduce extends Reducer<Text, IntWritable, Text, IntWritable> {

        // Sorted container: rating count (descending) -> movies with that count.
        // A list is used as the value so that movies with the same count do not overwrite each other.
        private TreeMap<Integer, List<String>> map;

        @Override
        protected void setup(Context context) throws IOException, InterruptedException {
            map = new TreeMap<>(Collections.reverseOrder());
        }

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
            int count = 0;
            for (IntWritable value : values) {
                count += value.get(); // values may already be partial sums produced by the combiner
            }
            // Hadoop reuses the key object, so store a copy of it (toString() creates a new String)
            map.computeIfAbsent(count, c -> new ArrayList<>()).add(key.toString());
        }

        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            int num = context.getConfiguration().getInt("num", 10);
            int written = 0;
            // Walk from the highest count down; stop after num movies or when there are no more
            for (Map.Entry<Integer, List<String>> entry : map.entrySet()) {
                for (String movie : entry.getValue()) {
                    if (written == num) {
                        return;
                    }
                    context.write(new Text(movie), new IntWritable(entry.getKey()));
                    written++;
                }
            }
        }
    }

    // Usage: hadoop jar <jar> RateHotN <input path> <output path> [N]
    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        // N = number of movies to output (defaults to 10); args[0] and args[1] are the paths
        conf.setInt("num", args.length > 2 ? Integer.parseInt(args[2]) : 10);
        conf.set("yarn.resourcemanager.hostname", "jasmine01");
        conf.set("fs.defaultFS", "hdfs://jasmine01:9000/");

        Job job = Job.getInstance(conf);
        job.setJarByClass(RateHotN.class);
        job.setMapperClass(RateHotNMap.class);
        job.setCombinerClass(RateHotNCombiner.class); // use the custom Combiner
        job.setReducerClass(RateHotNReduce.class);
        // The top-N in cleanup() is global only with a single reduce task (also the default)
        job.setNumReduceTasks(1);

        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // Input component: the source files are text files on HDFS, so use TextInputFormat
        job.setInputFormatClass(TextInputFormat.class);
        // Output component: we write text files to HDFS, so use TextOutputFormat
        job.setOutputFormatClass(TextOutputFormat.class);

        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        boolean b = job.waitForCompletion(true);
        System.exit(b ? 0 : 1);
    }
}
```

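(2) RateHotNCombiner.java

```java
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

// A combiner is a component of an MR program in addition to the Mapper and the Reducer.
// Its parent class is Reducer.
// Difference from the Reducer: each map task runs its own combiner on that task's local output,
// whereas the Reducer runs on the merged output of all map tasks.
// Hadoop may run the combiner zero or more times, so its input values can already be
// partial counts; it must therefore sum the values instead of counting them.
public class RateHotNCombiner extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        int count = 0;
        for (IntWritable value : values) {
            count += value.get(); // add partial counts (count++ would undercount once a value exceeds 1)
        }
        context.write(key, new IntWritable(count));
    }
}
```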

II. Partitioning, Grouping, and Setting the Number of ReduceTasks

Raw data

The fields are: timestamp, phone number, network segment, IP, …, upstream traffic, downstream traffic, and so on.

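Our requirements:

Requirement 1: for each phone number, compute the total upstream traffic, downstream traffic, and total traffic (serialization).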

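Basic approach:

Map phase:

(1) Read a line and split it into fields.

(2) Extract the phone number, the upstream traffic, and the downstream traffic.

(3) Emit the phone number as the key and a bean object as the value, i.e. context.write(phoneNumber, bean);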
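The map-side parsing can be checked on its own. This sketch uses a hypothetical class, FlowLineParser. It takes the same column positions as the mapper below:

- the phone number in column 1,
- upstream traffic third from the end,
- downstream traffic second from the end.

It assumes tab-separated lines where one more column follows the downstream traffic, as the `fields.length - 3` / `fields.length - 2` indexing implies. The sample line is made up.

```java
class FlowLineParser {

    // Fields the map phase extracts from one log line
    static final class Flow {
        final String phone;
        final long upFlow;
        final long downFlow;

        Flow(String phone, long upFlow, long downFlow) {
            this.phone = phone;
            this.upFlow = upFlow;
            this.downFlow = downFlow;
        }
    }

    // Same column positions as the mapper: phone number in column 1,
    // upstream traffic third from the end, downstream traffic second from the end.
    // Counting from the end still works when the number of middle columns varies.
    static Flow parse(String line) {
        String[] fields = line.split("\t");
        long up = Long.parseLong(fields[fields.length - 3]);
        long down = Long.parseLong(fields[fields.length - 2]);
        return new Flow(fields[1], up, down);
    }

    public static void main(String[] args) {
        // Hypothetical line: timestamp, phone, IP, upstream, downstream, status
        Flow f = parse("1000\t13500000000\t192.168.0.1\t100\t200\t200");
        System.out.println(f.phone + " up=" + f.upFlow + " down=" + f.downFlow);
        // prints: 13500000000 up=100 down=200
    }
}
```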

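Reduce phase:

(1) Sum the upstream and downstream traffic to obtain the total traffic.

(2) Implement a custom bean to encapsulate the traffic information. In this job the bean travels as the map output value. To sort by traffic, you would instead transmit it as the map output key.

(3) While processing data, the MR framework sorts it: map output kv pairs are sorted before they are transferred to reduce. The sort is based on the map output key.

So, to apply a sort order of our own, we can put the sort criterion into the key. Make the key class implement the WritableComparable interface and override its compareTo method.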
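As a rough illustration of putting the sort criterion into the key, here is a Hadoop-free stand-in with a hypothetical name, SortableFlowBean. A real key class would implement org.apache.hadoop.io.WritableComparable<SortableFlowBean> and also carry write/readFields like FlowBean. Only the compareTo half is shown here. It orders beans by total traffic, largest first.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hadoop-free stand-in for a sortable key; a real one would implement
// WritableComparable and add write/readFields exactly like FlowBean
class SortableFlowBean implements Comparable<SortableFlowBean> {
    long upFlow;
    long downFlow;
    long sumFlow;

    SortableFlowBean(long upFlow, long downFlow) {
        this.upFlow = upFlow;
        this.downFlow = downFlow;
        this.sumFlow = upFlow + downFlow;
    }

    // Larger total traffic sorts first (descending order)
    @Override
    public int compareTo(SortableFlowBean other) {
        return Long.compare(other.sumFlow, this.sumFlow);
    }

    // Sorts keys with compareTo, as the shuffle orders map output keys before reduce()
    static List<Long> sortedTotals(List<SortableFlowBean> keys) {
        List<SortableFlowBean> copy = new ArrayList<>(keys);
        Collections.sort(copy);
        List<Long> totals = new ArrayList<>();
        for (SortableFlowBean k : copy) {
            totals.add(k.sumFlow);
        }
        return totals;
    }

    public static void main(String[] args) {
        List<SortableFlowBean> keys = new ArrayList<>();
        keys.add(new SortableFlowBean(10, 20));  // total 30
        keys.add(new SortableFlowBean(100, 5));  // total 105
        keys.add(new SortableFlowBean(1, 1));    // total 2
        System.out.println(sortedTotals(keys)); // prints [105, 30, 2]
    }
}
```

Keep in mind that, by default, keys that compare as equal are handed to the same reduce() call. A reducer using such a key should therefore iterate over all values rather than assume one value per key.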

Again there are two classes: a bean class and a driver class.

(1) FlowBean.java

```java
package com.jasmine;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.Writable;

// The bean must implement Writable so that Hadoop can serialize it
public class FlowBean implements Writable {

    private long upFlow;
    private long downFlow;
    private long sumFlow;

    // Deserialization instantiates the bean by reflection through the no-arg constructor, so it is required
    public FlowBean() {
        super();
    }

    public FlowBean(long upFlow, long downFlow) {
        super();
        this.upFlow = upFlow;
        this.downFlow = downFlow;
        this.sumFlow = upFlow + downFlow;
    }

    public long getSumFlow() {
        return sumFlow;
    }

    public void setSumFlow(long sumFlow) {
        this.sumFlow = sumFlow;
    }

    public long getUpFlow() {
        return upFlow;
    }

    public void setUpFlow(long upFlow) {
        this.upFlow = upFlow;
    }

    public long getDownFlow() {
        return downFlow;
    }

    public void setDownFlow(long downFlow) {
        this.downFlow = downFlow;
    }

    /**
     * Serialization method.
     *
     * @param out the output to write the fields to
     * @throws IOException if writing fails
     */
    @Override
    public void write(DataOutput out) throws IOException {
        out.writeLong(upFlow);
        out.writeLong(downFlow);
        out.writeLong(sumFlow);
    }

    /**
     * Deserialization method.
     * Note: the fields must be read in exactly the same order in which write() wrote them.
     *
     * @param in the input to read the fields from
     * @throws IOException if reading fails
     */
    @Override
    public void readFields(DataInput in) throws IOException {
        upFlow = in.readLong();
        downFlow = in.readLong();
        sumFlow = in.readLong();
    }

    // Text form written to the output file: up \t down \t sum
    @Override
    public String toString() {
        return upFlow + "\t" + downFlow + "\t" + sumFlow;
    }
}
```
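To see what write() and readFields() actually do, you can exercise the same logic outside Hadoop with java.io.DataOutputStream and DataInputStream. FlowBeanRoundTrip is a hypothetical plain-Java copy of FlowBean, not the class Hadoop uses. It serializes three longs (24 bytes) and reads them back into an object built with the no-arg constructor, which roughly mirrors what happens between map and reduce. If readFields() read the longs in a different order, the fields would silently swap. That is why the two methods must match.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;

// Plain-Java copy of FlowBean's serialization logic (no Hadoop dependency)
class FlowBeanRoundTrip {
    long upFlow;
    long downFlow;
    long sumFlow;

    FlowBeanRoundTrip() { }  // used when reading back, like Hadoop's reflective no-arg construction

    FlowBeanRoundTrip(long upFlow, long downFlow) {
        this.upFlow = upFlow;
        this.downFlow = downFlow;
        this.sumFlow = upFlow + downFlow;
    }

    void write(DataOutput out) throws IOException {
        out.writeLong(upFlow);
        out.writeLong(downFlow);
        out.writeLong(sumFlow);
    }

    // Must read in the same order write() wrote
    void readFields(DataInput in) throws IOException {
        upFlow = in.readLong();
        downFlow = in.readLong();
        sumFlow = in.readLong();
    }

    // Serializes a bean into a byte array
    static byte[] toBytes(FlowBeanRoundTrip bean) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            bean.write(out);
        }
        return bytes.toByteArray();
    }

    // Reads the bytes back into a fresh instance
    static FlowBeanRoundTrip fromBytes(byte[] data) throws IOException {
        FlowBeanRoundTrip copy = new FlowBeanRoundTrip();
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(data))) {
            copy.readFields(in);
        }
        return copy;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = toBytes(new FlowBeanRoundTrip(100, 200));
        FlowBeanRoundTrip copy = fromBytes(data);
        System.out.println(data.length + " bytes -> " + copy.upFlow + "\t" + copy.downFlow + "\t" + copy.sumFlow);
        // prints: 24 bytes -> 100	200	300
    }
}
```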

(2) FlowCount.java

```java
package com.jasmine;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class FlowCount {

    // Output key: phone number; output value: FlowBean
    static class FlowCountMapper extends Mapper<LongWritable, Text, Text, FlowBean> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // 1 convert the line to a String
            String line = value.toString();
            // 2 split it into fields (\t is the tab character)
            String[] fields = line.split("\t");
            // 3 take the phone number
            String phoneNum = fields[1];
            // 4 take the upstream and downstream traffic (counted from the end of the line)
            long upFlow = Long.parseLong(fields[fields.length - 3]);
            long downFlow = Long.parseLong(fields[fields.length - 2]);
            // 5 write the output
            context.write(new Text(phoneNum), new FlowBean(upFlow, downFlow));
        }
    }

    static class FlowCountReducer extends Reducer<Text, FlowBean, Text, FlowBean> {

        @Override
        protected void reduce(Text key, Iterable<FlowBean> values, Context context)
                throws IOException, InterruptedException {
            long sum_upFlow = 0;
            long sum_downFlow = 0;
            // 1 iterate over all beans and accumulate the upstream and downstream traffic
            for (FlowBean bean : values) {
                sum_upFlow += bean.getUpFlow();
                sum_downFlow += bean.getDownFlow();
            }
            // 2 wrap the totals in a new bean and write it
            FlowBean resultBean = new FlowBean(sum_upFlow, sum_downFlow);
            context.write(key, resultBean);
        }
    }

    public static void main(String[] args) throws Exception {
        // 1 get the configuration and a Job instance
        Configuration configuration = new Configuration();
        Job job = Job.getInstance(configuration);

        // 2 locate the program's jar through the driver class
        job.setJarByClass(FlowCount.class);

        // 3 set the Mapper and Reducer classes for this job
        job.setMapperClass(FlowCountMapper.class);
        job.setReducerClass(FlowCountReducer.class);

        // 4 set the kv types of the mapper output
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(FlowBean.class);

        // 5 set the kv types of the final output
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(FlowBean.class);

        // 6 set the input directory and the output directory
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // 7 submit the job configuration and the jar with its classes to YARN, then wait
        boolean result = job.waitForCompletion(true);
        System.exit(result ? 0 : 1);
    }
}
```
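What the framework does between FlowCountMapper and FlowCountReducer can be simulated in a few lines. First group the map output by key (the shuffle), then sum each group (the reduce). This is a simplified single-JVM sketch with hypothetical names (ShuffleReduceSketch, Record) and made-up input. The real shuffle also partitions, spills, and merges sorted files.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class ShuffleReduceSketch {

    // One map output record: key = phone number, value = (upFlow, downFlow)
    static final class Record {
        final String phone;
        final long up;
        final long down;

        Record(String phone, long up, long down) {
            this.phone = phone;
            this.up = up;
            this.down = down;
        }
    }

    // Shuffle: group values by key; a TreeMap hands the keys over in sorted order
    static Map<String, List<Record>> shuffle(List<Record> mapOutput) {
        Map<String, List<Record>> groups = new TreeMap<>();
        for (Record r : mapOutput) {
            groups.computeIfAbsent(r.phone, k -> new ArrayList<>()).add(r);
        }
        return groups;
    }

    // Reduce: the same accumulation as FlowCountReducer, formatted like FlowBean.toString()
    static String reduce(List<Record> values) {
        long sumUp = 0;
        long sumDown = 0;
        for (Record r : values) {
            sumUp += r.up;
            sumDown += r.down;
        }
        return sumUp + "\t" + sumDown + "\t" + (sumUp + sumDown);
    }

    public static void main(String[] args) {
        // Hypothetical map output for two phone numbers
        List<Record> mapOutput = Arrays.asList(
                new Record("13600000001", 10, 20),
                new Record("13900000002", 1, 2),
                new Record("13600000001", 5, 5));
        for (Map.Entry<String, List<Record>> e : shuffle(mapOutput).entrySet()) {
            System.out.println(e.getKey() + "\t" + reduce(e.getValue()));
        }
        // prints:
        // 13600000001	15	25	40
        // 13900000002	1	2	3
    }
}
```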

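Requirement 2: write the results into different files according to the province the phone number belongs to (Partitioner).

Analysis: to group the data our own way, we need to customize the Partitioner. This is the component that distributes (partitions) map output among the reducers.

(1) Define a CustomPartitioner that extends the abstract class Partitioner.

(2) In the job driver, register the custom partitioner: job.setPartitionerClass(CustomPartitioner.class)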
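For comparison, when no partitioner is set, Hadoop uses HashPartitioner, which computes `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`. The sketch below reproduces only that formula on plain Java objects. HashPartitionSketch is a hypothetical name. Hadoop's Text computes its own hashCode from its bytes, so real Text keys will get different partition numbers than the String keys here. The point is that hash partitioning spreads keys without regard to province, which is why this requirement needs a custom Partitioner.

```java
class HashPartitionSketch {

    // Same formula as Hadoop's default HashPartitioner.
    // "& Integer.MAX_VALUE" clears the sign bit, so a negative hashCode() still gives a
    // partition in [0, numReduceTasks); Math.abs would not work for Integer.MIN_VALUE.
    static int defaultPartition(Object key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        String[] phones = {"13512345678", "13612345678", "13712345678", "18012345678"};
        for (String phone : phones) {
            // The result depends only on the hash, not on which province the prefix belongs to
            System.out.println(phone + " -> reducer " + defaultPartition(phone, 6));
        }
    }
}
```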

The code is as follows. There are two classes: a driver class and a custom partitioner class.

(1) Custom partitioner: PrivatePatition.java

```java
package com.Patitioner;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Partitioner;

import java.util.HashMap;

public class PrivatePatition extends Partitioner<Text, IntWritable> {

    // Phone-number prefix -> partition number
    private static final HashMap<String, Integer> privateMap = new HashMap<>();

    static {
        privateMap.put("135", 0);
        privateMap.put("136", 1);
        privateMap.put("137", 2);
        privateMap.put("138", 3);
        privateMap.put("139", 4);
    }

    @Override
    public int getPartition(Text text, IntWritable intWritable, int numPartitions) {
        // Take the first three digits of the phone number
        String tellNum = text.toString().substring(0, 3);
        // Look up which partition this prefix belongs to
        Integer partitionCode = privateMap.get(tellNum);
        // Unknown prefix -> partition 5; otherwise the matched partition (6 partitions in total: 0-5)
        return partitionCode == null ? 5 : partitionCode;
    }
}
```

(2) Driver class: TelePatition.java

```java
package com.Patitioner;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import java.io.IOException;

public class TelePatition {

    // Emits (phone number, upstream + downstream traffic) for each line
    public static class TelePatitionMap extends Mapper<LongWritable, Text, Text, IntWritable> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            String[] split = value.toString().split("\t");
            String tellNum = split[1];
            int upFlow = Integer.parseInt(split[8]);
            int downFlow = Integer.parseInt(split[9]);
            int sumFlow = upFlow + downFlow;
            context.write(new Text(tellNum), new IntWritable(sumFlow));
        }
    }

    // Sums the total traffic per phone number
    public static class TelePatitionReduce extends Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
            int totalFlow = 0;
            for (IntWritable value : values) {
                totalFlow += value.get();
            }
            context.write(key, new IntWritable(totalFlow));
        }
    }

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);
        job.setJarByClass(TelePatition.class);

        // Number of reduce tasks. PrivatePatition returns partitions 0-5, i.e. 6 partitions:
        // job.setNumReduceTasks(1); runs normally, but the custom partitioner is not used and one output file is produced
        // job.setNumReduceTasks(2); fails: keys mapped to partitions 2-5 are out of range (any value from 2 to 5 has this problem)
        // job.setNumReduceTasks(7); runs normally (more than 6), but the extra reduce task writes an empty file
        job.setNumReduceTasks(6);

        // Which Partitioner to use: the custom one
        job.setPartitionerClass(PrivatePatition.class);

        job.setMapperClass(TelePatitionMap.class);
        job.setReducerClass(TelePatitionReduce.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // Input component: the source files are text files on HDFS, so use TextInputFormat
        job.setInputFormatClass(TextInputFormat.class);
        // Output component: we write text files to HDFS, so use TextOutputFormat
        job.setOutputFormatClass(TextOutputFormat.class);

        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Instead of taking the output path from args[1], you can hardcode it and delete it
        // first if it already exists (needs import org.apache.hadoop.fs.FileSystem):
        // Path outputPath = new Path("E:\\mrtest\\flow\\output-partition");
        // FileSystem fs = FileSystem.get(conf);
        // if (fs.exists(outputPath)) {
        //     fs.delete(outputPath, true);
        // }
        // FileOutputFormat.setOutputPath(job, outputPath);

        boolean b = job.waitForCompletion(true);
        System.exit(b ? 0 : 1);
    }
}
```
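A simplified model reproduces the numReduceTasks comments above. As I understand Hadoop's MapTask:

- With a single reduce task, the configured partitioner is not consulted at all.
- With more than one, the partition number must fall in [0, numReduceTasks), or the map task fails.

ReduceTaskCountSketch (hypothetical) copies PrivatePatition's prefix mapping and counts records per reducer for made-up phone numbers. A 0 in the result corresponds to an empty output file. In Hadoop the failure surfaces as an error inside the map task, not as the IllegalStateException used here.

```java
import java.util.Arrays;

class ReduceTaskCountSketch {

    // Same mapping as PrivatePatition: prefixes 135-139 -> partitions 0-4, anything else -> 5
    static int customPartition(String phone) {
        switch (phone.substring(0, 3)) {
            case "135": return 0;
            case "136": return 1;
            case "137": return 2;
            case "138": return 3;
            case "139": return 4;
            default:    return 5;
        }
    }

    // Simplified model of the map side choosing a reduce task:
    // one reduce task -> custom partitioner not consulted, everything goes to 0;
    // otherwise the partition must lie in [0, numReduceTasks) or the task fails.
    static int chooseReducer(String phone, int numReduceTasks) {
        if (numReduceTasks == 1) {
            return 0;
        }
        int p = customPartition(phone);
        if (p < 0 || p >= numReduceTasks) {
            throw new IllegalStateException("illegal partition " + p + " for " + numReduceTasks + " reduce tasks");
        }
        return p;
    }

    // Records per reduce task; a 0 means that reducer writes an empty part file
    static int[] recordsPerReducer(String[] phones, int numReduceTasks) {
        int[] counts = new int[numReduceTasks];
        for (String phone : phones) {
            counts[chooseReducer(phone, numReduceTasks)]++;
        }
        return counts;
    }

    public static void main(String[] args) {
        String[] phones = {"13512345678", "13912345678", "18012345678"};
        System.out.println(Arrays.toString(recordsPerReducer(phones, 1))); // [3]
        System.out.println(Arrays.toString(recordsPerReducer(phones, 6))); // [1, 0, 0, 0, 1, 1]
        System.out.println(Arrays.toString(recordsPerReducer(phones, 7))); // [1, 0, 0, 0, 1, 1, 0]
        try {
            recordsPerReducer(phones, 2);
        } catch (IllegalStateException e) {
            System.out.println("2 reduce tasks: " + e.getMessage());
        }
    }
}
```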

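I also found another way to write a custom partitioner class. The code is as follows:

```java
// Assumed to live in the same package as FlowBean (the original listing shows no package line)
package com.jasmine;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Partitioner;

// Used with the FlowCount job (key = phone number, value = FlowBean).
// It returns partitions 0-4, so the driver needs job.setNumReduceTasks(5)
// and job.setPartitionerClass(ProvincePartitioner.class).
public class ProvincePartitioner extends Partitioner<Text, FlowBean> {

    @Override
    public int getPartition(Text key, FlowBean value, int numPartitions) {
        // 1 take the first three digits of the phone number
        String preNum = key.toString().substring(0, 3);
        int partition = 4; // any other prefix goes to partition 4

        // 2 decide which province it belongs to
        if ("136".equals(preNum)) {
            partition = 0;
        } else if ("137".equals(preNum)) {
            partition = 1;
        } else if ("138".equals(preNum)) {
            partition = 2;
        } else if ("139".equals(preNum)) {
            partition = 3;
        }
        return partition;
    }
}
```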

That wraps up the MapReduce part of my study.