Chainer Semantic Segmentation: Building a Training and Prediction Framework

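Contents

- Preface

- I. Data Annotation

- II. Building the Framework

  - 1. Data Loading

  - 2. Data Iterator

  - 3. Main Training Class Design

    - 1. CPU/GPU Configuration

    - 2. Model Initialization

    - 3. Model Training

    - 4. Model Prediction

- III. Training and Prediction Code

- Summary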

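Preface

As with image classification, this article first builds a complete, general framework for semantic segmentation, so that afterwards only the model architecture needs to be swapped.

I. Data Annotation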

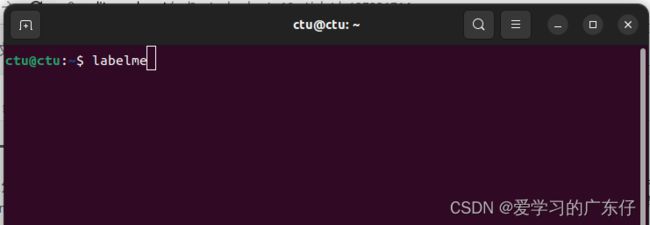

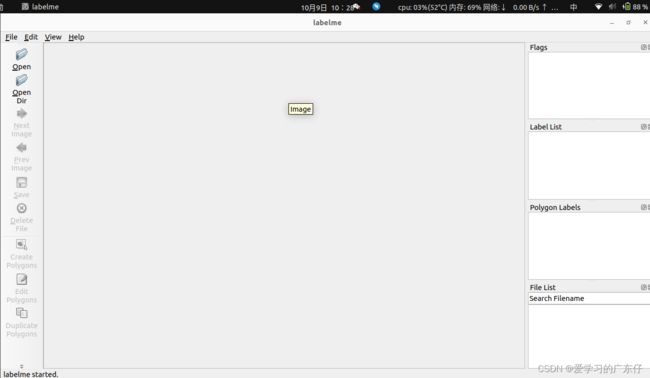

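Semantic segmentation data is annotated mainly with the labelme tool. In Python, install it with pip install labelme, then type labelme at the command prompt to open the window shown below: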

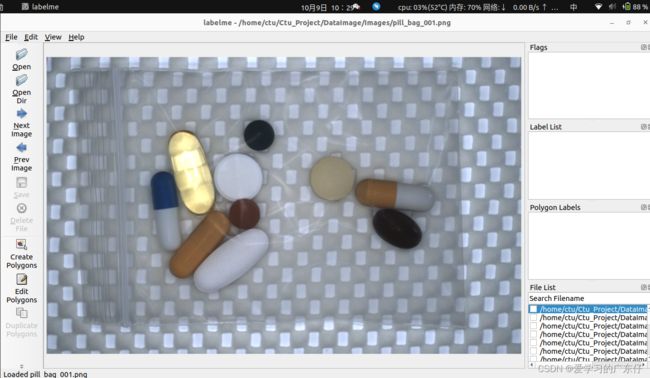

Here you only need to use "OpenDir", which selects the folder containing the images to annotate.

Once the folder is selected, you can start drawing:

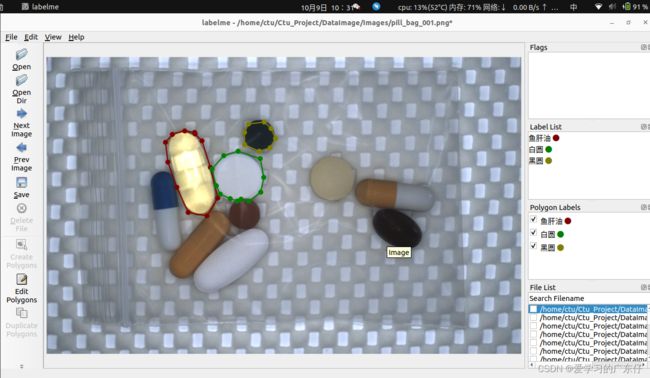

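The only shortcuts I normally use while annotating are:

Create Polygons (Ctrl+N): start drawing

A: previous image

D: next image

When drawing, simply outline each target completely in a single pass.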

II. Building the Framework

1. Data Loading

    import json
    import os
    import random
    import re

    def CreateDataList(DataDir, train_split=0.9):
        # Collect every labelme annotation file (*.json) in DataDir.
        DataList = [os.path.join(DataDir, each) for each in os.listdir(DataDir) if re.match(r'.*\.json$', each)]

        # Class 0 is always the background, drawn in black.
        All_label_name = ['_background_']
        voc_colormap = [[0, 0, 0]]
        for each_json in DataList:
            with open(each_json, encoding='utf-8', errors='ignore') as f:
                data = json.load(f)
            for shape in sorted(data['shapes'], key=lambda x: x['label']):
                label_name = shape['label']
                if label_name not in All_label_name:
                    All_label_name.append(label_name)
                    # The gray level equals the class index, e.g. class 1 -> [1, 1, 1].
                    voc_colormap.append([len(voc_colormap)] * 3)

        random.shuffle(DataList)
        if train_split <= 0 or train_split >= 1:
            # No valid ratio: use the full set for both training and validation.
            train_list = DataList
            val_list = DataList
        else:
            split = int(len(DataList) * train_split)
            train_list = DataList[:split]
            val_list = DataList[split:]
        return train_list, val_list, All_label_name, voc_colormap
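To make the input of CreateDataList more concrete, here is a small sketch of the parts of a labelme annotation file that the function reads, followed by the same label-collection logic run on it. The label names "pill" and "capsule", the file name, and the helper name collect_labels are made up for illustration, and only a subset of the fields labelme writes is shown.

```python
import json
import os
import tempfile

# A trimmed-down, hypothetical labelme annotation (real files contain more fields).
sample = {
    "shapes": [
        {"label": "pill", "points": [[10, 10], [60, 10], [60, 40]], "shape_type": "polygon"},
        {"label": "capsule", "points": [[5, 50], [30, 50], [30, 70]], "shape_type": "polygon"},
    ],
    "imagePath": "0001.jpg",
    "imageHeight": 80,
    "imageWidth": 80,
}


def collect_labels(json_paths):
    """Gather class names in first-seen order; index 0 is the background."""
    names = ["_background_"]
    for path in json_paths:
        with open(path, encoding="utf-8") as f:
            data = json.load(f)
        for shape in sorted(data["shapes"], key=lambda s: s["label"]):
            if shape["label"] not in names:
                names.append(shape["label"])
    # One gray color per class, equal to the class index.
    colormap = [[i, i, i] for i in range(len(names))]
    return names, colormap


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "0001.json")
    with open(path, "w", encoding="utf-8") as f:
        json.dump(sample, f)
    names, colormap = collect_labels([path])

print(names)
print(colormap)
```

Because the shapes of each file are sorted by label before being scanned, the class index of a label depends on the order in which files are listed, so the returned class-name list should be saved together with the trained model.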

2. Data Iterator

    import numpy as np
    from chainercv.chainer_experimental.datasets.sliceable import GetterDataset

    class SegmentationDataset(GetterDataset):
        def __init__(self, data_list, classes_names):
            super(SegmentationDataset, self).__init__()
            self.data_list = data_list
            self.classes_names = classes_names
            # Register one getter per key; a sample is the tuple (img, label).
            self.add_getter('img', self._get_image)
            self.add_getter('label', self._get_label)

        def __len__(self):
            return len(self.data_list)

        def _get_image(self, i):
            # JsonToLabel returns the BGR image and its label map for one JSON file.
            img, _ = JsonToLabel(self.data_list[i], self.classes_names)
            img = img[:, :, ::-1]  # BGR -> RGB
            img = img.transpose((2, 0, 1))  # HWC -> CHW
            return img.astype(np.float32)

        def _get_label(self, i):
            _, img = JsonToLabel(self.data_list[i], self.classes_names)
            if img.ndim == 2:
                return img
            elif img.shape[2] == 1:
                return img[:, :, 0]
            raise ValueError('label map must have shape (H, W) or (H, W, 1)')
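JsonToLabel is used above but not listed in this article. The following is only a rough sketch of how the label half of such a helper could work, assuming labelme polygon annotations with imageHeight and imageWidth fields. The names polygon_mask and json_to_label_mask, the even-odd fill rule, and the pixel-center convention are my assumptions and not the author's implementation. Image decoding is left out.

```python
import numpy as np


def polygon_mask(points, height, width):
    """Boolean (height, width) mask of pixels whose centers lie inside the polygon."""
    ys, xs = np.mgrid[0:height, 0:width]
    px = xs + 0.5  # x coordinate of each pixel center
    py = ys + 0.5  # y coordinate of each pixel center
    inside = np.zeros((height, width), dtype=bool)
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        if y1 == y2:
            continue  # a horizontal edge never crosses a horizontal ray
        # Even-odd rule: toggle every pixel whose rightward ray crosses this edge.
        crosses = (y1 > py) != (y2 > py)
        x_at = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
        inside ^= crosses & (px < x_at)
    return inside


def json_to_label_mask(annotation, classes_names):
    """Rasterize every polygon of a parsed labelme dict into a class-index map."""
    height = annotation["imageHeight"]
    width = annotation["imageWidth"]
    label = np.zeros((height, width), dtype=np.int32)  # 0 = background
    for shape in annotation["shapes"]:
        class_id = classes_names.index(shape["label"])
        label[polygon_mask(shape["points"], height, width)] = class_id
    return label


annotation = {
    "imageHeight": 4,
    "imageWidth": 4,
    "shapes": [{"label": "pill", "points": [[1, 1], [3, 1], [3, 3], [1, 3]]}],
}
label = json_to_label_mask(annotation, ["_background_", "pill"])
print(label)
```

Later shapes overwrite earlier ones where polygons overlap, so the drawing order in the annotation decides which class wins in this sketch.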

3. Main Training Class Design

1. CPU/GPU Configuration

chainer.cuda.get_device_from_id(self.gpu_devices).use()

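Note that when a GPU is configured, every array must be moved to GPU memory before the model uses it, and moved back to the CPU before it is handed to NumPy or OpenCV.

For example:

    pre_img = chainer.cuda.to_gpu(pre_img)  # CPU -> GPU
    pre_img = chainer.cuda.to_cpu(pre_img)  # GPU -> CPU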

2. Model Initialization

    # Build the file lists, the class names, and the colormap.
    self.train_datalist, self.val_datalist, self.classes_names, self.colormap = CreateDataList(DataDir, train_split)

    train_dataset = SegmentationDataset(self.train_datalist, self.classes_names)
    val_dataset = SegmentationDataset(self.val_datalist, self.classes_names)
    # Apply the same ValTransform (mean and target size) to both splits.
    train_dataset = TransformDataset(train_dataset, ('img', 'label'), ValTransform(self.mean, (self.image_size, self.image_size)))
    val_dataset = TransformDataset(val_dataset, ('img', 'label'), ValTransform(self.mean, (self.image_size, self.image_size)))

    self.train_iter = chainer.iterators.MultiprocessIterator(train_dataset, batch_size=self.batch_size, n_processes=num_workers)
    self.val_iter = chainer.iterators.SerialIterator(val_dataset, batch_size=self.batch_size, repeat=False, shuffle=False)

    # Placeholder: the actual network is plugged in here later.
    model = None
    self.model = ModifiedClassifier(model, lossfun=F.softmax_cross_entropy)
    if self.gpu_devices >= 0:
        chainer.cuda.get_device_from_id(self.gpu_devices).use()
        self.model.to_gpu()

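The variable model holds the network architecture of whichever model is used. Since we are only building the framework here, it is not needed yet and can be left empty for now.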
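ValTransform is also not listed in this article. As a minimal sketch of what a transform of this kind might do, assume it resizes the image and label to the target size and subtracts the per-channel mean. The class name SketchValTransform and the helper resize_nearest are hypothetical, and nearest-neighbor resizing is used for the image too only to keep the example dependency-free. For the label map, nearest-neighbor is the important part, because interpolating class indices would invent classes that do not exist.

```python
import numpy as np


def resize_nearest(array, out_h, out_w):
    """Nearest-neighbor resize of the last two axes (CHW images or HW label maps)."""
    in_h, in_w = array.shape[-2:]
    rows = np.arange(out_h) * in_h // out_h  # source row for each output row
    cols = np.arange(out_w) * in_w // out_w  # source column for each output column
    return array[..., rows[:, None], cols[None, :]]


class SketchValTransform:
    """Hypothetical stand-in: resize both arrays, then subtract the channel mean."""

    def __init__(self, mean, size):
        self.mean = np.asarray(mean, dtype=np.float32).reshape(3, 1, 1)
        self.size = size

    def __call__(self, in_data):
        img, label = in_data  # img: (3, H, W) float32, label: (H, W) int
        out_h, out_w = self.size
        img = resize_nearest(img, out_h, out_w) - self.mean
        label = resize_nearest(label, out_h, out_w)
        return img.astype(np.float32), label.astype(np.int32)


img = np.full((3, 2, 2), 10, dtype=np.float32)
label = np.array([[0, 2], [2, 0]])
out_img, out_label = SketchValTransform([1, 2, 3], (4, 4))((img, label))
print(out_img.shape, out_label.shape)
print(out_label)
```

A training-time transform would typically add augmentation such as random flips on top of this, applied identically to the image and the label.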

3. Model Training

First, let's look at how training is structured in Chainer:

The first step is to create a Trainer, which can be thought of as the overall training block. Next comes the Updater, which ties the training data iterator and the optimizer together and runs the forward and backward passes to update the model parameters. Finally there are Extensions: callbacks that the Trainer invokes at chosen points during training, for example to evaluate the model, adjust the learning rate, or visualize results on the validation set.

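As long as we build the training steps strictly according to this structure, there should be no major problems. Let's set them up one step at a time.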
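Before moving to the real Chainer calls, the division of labor described above can be mimicked in a few lines of plain Python. This is a conceptual toy and not Chainer's implementation: the class ToyTrainer, its extend(every=...) argument, and the two helper functions are invented for illustration.

```python
class ToyTrainer:
    """Conceptual stand-in for the Trainer / Updater / Extension split."""

    def __init__(self, updater, stop_iteration):
        self.updater = updater  # performs one parameter update per call
        self.stop_iteration = stop_iteration
        self.iteration = 0
        self.extensions = []

    def extend(self, extension, every):
        # Register a callback that fires every `every` iterations.
        self.extensions.append((every, extension))

    def run(self):
        while self.iteration < self.stop_iteration:
            self.updater()
            self.iteration += 1
            for every, extension in self.extensions:
                if self.iteration % every == 0:
                    extension(self)


state = {"weight": 10.0}
log = []


def toy_updater():
    # Stands in for "forward pass, backward pass, optimizer step".
    state["weight"] *= 0.5


def toy_logger(trainer):
    # Stands in for an extension such as logging or evaluation.
    log.append((trainer.iteration, state["weight"]))


trainer = ToyTrainer(toy_updater, stop_iteration=4)
trainer.extend(toy_logger, every=2)
trainer.run()
print(log)
```

The real Trainer works on the same principle, with triggers expressed in iterations or epochs instead of a plain integer interval.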

Set up the optimizer:

    optimizer = optimizers.MomentumSGD(lr=learning_rate, momentum=0.9)
    optimizer.setup(self.model)

Set up the updater and the trainer:

    updater = chainer.training.StandardUpdater(self.train_iter, optimizer, device=self.gpu_devices)
    trainer = chainer.training.Trainer(updater, (TrainNum, 'epoch'), out=ModelPath)

Configure the extensions:

    # Adjust the learning rate: multiply it by 0.9 at each listed epoch.
    trainer.extend(
        extensions.ExponentialShift('lr', 0.9, init=learning_rate),
        trigger=chainer.training.triggers.ManualScheduleTrigger([50, 80, 150, 200, 280, 350], 'epoch'))

    # Run the validation set once per epoch (including the last one).
    trainer.extend(
        extensions.Evaluator(self.val_iter, self.model, device=self.gpu_devices),
        trigger=(1, 'epoch'))

    # Save the model once per epoch, overwriting the previous file.
    trainer.extend(
        extensions.snapshot_object(self.extractor, 'Ctu_final_Model.npz'),
        trigger=(1, 'epoch'))

    # Logging and file output.
    log_interval = 0.1, 'epoch'
    trainer.extend(extensions.LogReport(filename='ctu_log.json', trigger=log_interval))
    trainer.extend(extensions.observe_lr(), trigger=log_interval)
    trainer.extend(extensions.dump_graph("main/loss", filename='ctu_net.net'))
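To see what the learning-rate extension amounts to, the schedule can be worked out by hand: each time a milestone epoch is reached, the rate is multiplied by 0.9 once more. The helper below is a plain-Python sketch of that arithmetic (the function name lr_at_epoch is made up, and it does not call Chainer).

```python
def lr_at_epoch(epoch, init_lr, rate=0.9, milestones=(50, 80, 150, 200, 280, 350)):
    """Learning rate in effect once `epoch` epochs have been completed."""
    # Count how many milestones have already fired.
    shifts = sum(1 for m in milestones if m <= epoch)
    return init_lr * rate ** shifts


for epoch in (1, 50, 80, 120):
    print(epoch, lr_at_epoch(epoch, init_lr=0.0001))
```

With TrainNum=120, as in the training call later in this article, only the milestones at epochs 50 and 80 are reached, so training ends at 0.81 times the initial learning rate.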

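Once everything is configured, a single line starts the training:

    trainer.run()

The training part is essentially the same for classification, localization, and segmentation.

4. Model Prediction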

    def predict(self, img_cv):
        start_time = time.time()

        # Preprocess every BGR input image the same way as during training.
        pre_img = []
        for each_img in img_cv:
            img = each_img[:, :, ::-1]  # BGR -> RGB
            img = img.transpose((2, 0, 1))  # HWC -> CHW
            img = img.astype(np.float32)
            img = resize(img, (self.image_size, self.image_size), Image.BICUBIC)
            img = img - self.mean
            pre_img.append(img)
        pre_img = np.array(pre_img)
        if self.gpu_devices >= 0:
            pre_img = chainer.cuda.to_gpu(pre_img)

        # Inference mode: layers run in test mode and no backprop graph is built.
        with chainer.using_config('train', False), chainer.no_backprop_mode():
            pred_img = self.model.predictor(pre_img)
        # The class of each pixel is the channel with the highest score.
        pred_img = self.model.predictor.xp.argmax(pred_img.data, axis=1).astype("i")
        pred_img = chainer.cuda.to_cpu(pred_img)

        result_value = {
            "classes_names": self.classes_names,
            "origin_img": [],
            "image_result": [],
            "image_result_label": [],
            "img_add": [],
            "time": 0,
            'num_image': len(img_cv)
        }

        for each_id in range(len(img_cv)):
            origin_img = img_cv[each_id]
            base_imageSize = origin_img.shape
            pred = pred_img[each_id].astype("uint8")

            # Paint each class with its display color.
            seg_img = np.zeros((np.shape(pred)[0], np.shape(pred)[1], 3))
            for c in range(len(self.classes_names)):
                seg_img[:, :, 0] += ((pred == c) * self.colors[c][0]).astype('uint8')
                seg_img[:, :, 1] += ((pred == c) * self.colors[c][1]).astype('uint8')
                seg_img[:, :, 2] += ((pred == c) * self.colors[c][2]).astype('uint8')

            # Scale the results back to the original image size.
            image_result = cv2.resize(seg_img, (base_imageSize[1], base_imageSize[0]))
            image_result = image_result.astype("uint8")
            # Nearest-neighbor keeps class indices intact (no blended label values).
            image_result_label = cv2.resize(pred, (base_imageSize[1], base_imageSize[0]), interpolation=cv2.INTER_NEAREST)
            image_result_label = image_result_label.astype("uint8")
            img_add = cv2.addWeighted(origin_img, 1.0, image_result, 0.7, 0)

            result_value['origin_img'].append(origin_img)
            result_value['image_result'].append(image_result)
            result_value['image_result_label'].append(image_result_label)
            result_value['img_add'].append(img_add)

        result_value['time'] = (time.time() - start_time) * 1000  # milliseconds
        return result_value
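The per-class coloring loop in predict can also be written as a single lookup-table index in NumPy. The sketch below shows that alternative together with a NumPy version of the weighted overlay. The function names colorize and blend and the example colors are my own, and blend only roughly mirrors what cv2.addWeighted does with weights 1.0 and 0.7.

```python
import numpy as np


def colorize(label_map, colors):
    """Map an (H, W) array of class indices to an (H, W, 3) uint8 color image."""
    lut = np.asarray(colors, dtype=np.uint8)  # one color row per class
    return lut[label_map]


def blend(image, overlay, alpha=0.7):
    """Add `alpha * overlay` onto `image`, saturating at 255."""
    mixed = image.astype(np.float32) + alpha * overlay.astype(np.float32)
    return np.clip(np.rint(mixed), 0, 255).astype(np.uint8)


pred = np.array([[0, 1], [2, 1]])
colors = [[0, 0, 0], [0, 255, 0], [0, 0, 255]]  # hypothetical display colors
seg = colorize(pred, colors)
image = np.full((2, 2, 3), 200, dtype=np.uint8)
out = blend(image, seg)
print(seg[0, 1], out[0, 1])
```

Indexing the lookup table touches each pixel once, whereas the loop builds three full-size masks per class, so the difference grows with the number of classes.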

III. Training and Prediction Code

Because the code is written as a class, calling it is straightforward, as shown below:

    ctu = Ctu_DeepLab(USEGPU='0', image_size=416)
    ctu.InitModel(r'D:/Ctu/Ctu_Project_DL\DataSet\DataSet_Segmentation_YaoPian\DataImage', train_split=0.9, alpha=1, batch_size=2, num_workers=1, backbone='xception', Pre_Model=None)
    ctu.train(TrainNum=120, learning_rate=0.0001, ModelPath='result_Model_xception')

    # ctu = Ctu_DeepLab(USEGPU='0')
    # ctu.LoadModel(ModelPath='./result')
    # predictNum = 1
    # predict_cvs = []
    # cv2.namedWindow("result", 0)
    # cv2.resizeWindow("result", 640, 480)
    # for root, dirs, files in os.walk(r'D:/Ctu/Ctu_Project_DL\DataSet\DataSet_Segmentation_YaoPian/test'):
    #     for f in files:
    #         if len(predict_cvs) >= predictNum:
    #             predict_cvs.clear()
    #         img_cv = ctu.read_image(os.path.join(root, f))
    #         if img_cv is None:
    #             continue
    #         print(os.path.join(root, f))
    #         predict_cvs.append(img_cv)
    #         if len(predict_cvs) == predictNum:
    #             result = ctu.predict(predict_cvs)
    #             print(result['time'])
    #             for each_id in range(result['num_image']):
    #                 htich = np.hstack((predict_cvs[each_id], result['img_add'][each_id]))
    #                 htich2 = np.hstack((result['image_result'][each_id], cv2.cvtColor(result['image_result_label'][each_id], cv2.COLOR_GRAY2RGB)))
    #                 vtich = np.vstack((htich, htich2))
    #                 cv2.imshow("result", vtich)
    #                 cv2.waitKey()

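Summary

This article presents a basic Chainer framework for semantic segmentation. The articles that follow show how to plug individual models into this framework and present their results.

If you have questions, feel free to message me privately.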