Getting Started with the ELFK Log Platform, Part 5: Logstash + Filebeat Cluster Setup

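Getting Started with the ELFK Log Platform, Part 1: Architecture Design

Getting Started with the ELFK Log Platform, Part 2: Elasticsearch Cluster Setup

Getting Started with the ELFK Log Platform, Part 3: Kibana Setup

Getting Started with the ELFK Log Platform, Part 4: Kafka Cluster Setup

Getting Started with the ELFK Log Platform, Part 5: Logstash + Filebeat Cluster Setup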

This part walks through setting up a Logstash + Filebeat cluster.

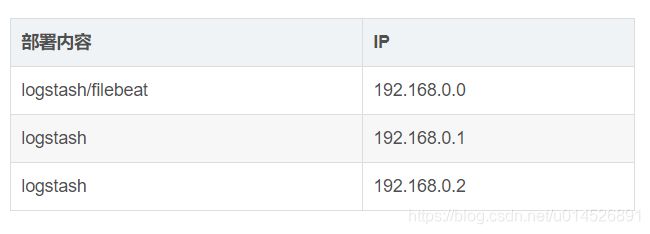

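1. Environment preparation

Resource planning:

2. Logstash cluster deployment

Perform the following steps on all three machines:

- Unpack the Logstash package: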

# tar zxf logstash-6.7.1.tar.gz && mv logstash-6.7.1/ /usr/local/logstash

# mkdir /usr/local/logstash/conf.d

- Edit the Logstash configuration:

# vim /usr/local/logstash/config/logstash.yml

http.host: "192.168.0.0" # set this to the local machine's IP

http.port: 9600

- Configure the Logstash service:

Create the service environment file:

# vim /etc/default/logstash

LS_HOME="/usr/local/logstash"

LS_SETTINGS_DIR="/usr/local/logstash/config"

LS_PIDFILE="/usr/local/logstash/run/logstash.pid"

LS_USER="elk"

LS_GROUP="elk"

LS_GC_LOG_FILE="/usr/local/logstash/logs/gc.log"

LS_OPEN_FILES="16384"

LS_NICE="19"

SERVICE_NAME="logstash"

SERVICE_DESCRIPTION="logstash"

Create the service unit file:

# vim /etc/systemd/system/logstash.service

[Unit]

Description=logstash

[Service]

Type=simple

User=elk

Group=elk

# Load env vars from /etc/default/ and /etc/sysconfig/ if they exist.

# Prefixing the path with '-' makes it try to load, but if the file doesn't

# exist, it continues onward.

EnvironmentFile=-/etc/default/logstash

EnvironmentFile=-/etc/sysconfig/logstash

ExecStart=/usr/local/logstash/bin/logstash "--path.settings" "/usr/local/logstash/config" "--path.config" "/usr/local/logstash/conf.d"

Restart=always

WorkingDirectory=/

Nice=19

LimitNOFILE=16384

[Install]

WantedBy=multi-user.target

Manage the service:

# mkdir /usr/local/logstash/{run,logs} && touch /usr/local/logstash/run/logstash.pid

# touch /usr/local/logstash/logs/gc.log && chown -R elk:elk /usr/local/logstash

# systemctl daemon-reload

# systemctl enable logstash
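Once the service is running (it is started later in this guide), one way to confirm that each node is up is to query the Logstash monitoring API on the `http.host` and `http.port` configured above. The following is a minimal sketch, assuming `curl` is installed; the function names `logstash_api_url` and `check_logstash_nodes` are made up for illustration, and the IPs are the placeholder addresses used throughout this article.

```shell
#!/bin/bash
# Build the monitoring API URL for one Logstash node (port defaults to 9600).
logstash_api_url() {
  local host="$1" port="${2:-9600}"
  printf 'http://%s:%s/?pretty\n' "$host" "$port"
}

# Query every node passed as an argument and report which ones answer.
check_logstash_nodes() {
  local host
  for host in "$@"; do
    if curl -sf --max-time 5 "$(logstash_api_url "$host")" >/dev/null; then
      echo "$host: up"
    else
      echo "$host: not reachable"
    fi
  done
}
```

For example, `check_logstash_nodes 192.168.0.0 192.168.0.1 192.168.0.2` prints one status line per node.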

3. Filebeat deployment

- Unpack the Filebeat package:

# tar zxf filebeat-6.2.4-linux-x86_64.tar.gz && mv filebeat-6.2.4-linux-x86_64 /usr/local/filebeat

- Configure the Filebeat service:

Create the service unit file:

# vim /usr/lib/systemd/system/filebeat.service

[Unit]

Description=Filebeat sends log files to Logstash or directly to Elasticsearch.

Documentation=https://www.elastic.co/products/beats/filebeat

Wants=network-online.target

After=network-online.target

[Service]

ExecStart=/usr/local/filebeat/filebeat -c /usr/local/filebeat/filebeat.yml -path.home /usr/local/filebeat -path.config /usr/local/filebeat -path.data /usr/local/filebeat/data -path.logs /usr/local/filebeat/logs

Restart=always

[Install]

WantedBy=multi-user.target

Manage the service:

# mkdir /usr/local/filebeat/{data,logs}

# systemctl daemon-reload

# systemctl enable filebeat

3. Integrating with Kafka

This example collects the log file /app/log/app.log:

- Configure Filebeat:

# vim /usr/local/filebeat/filebeat.yml

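# On Filebeat releases before 6.3 (such as the 6.2.4 package above), this key is filebeat.prospectors
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /app/log/app.log # log file path
  fields:
    log_topics: app-service-log

output.kafka:
  enabled: true
  hosts: ["192.168.0.0:9092","192.168.0.1:9092","192.168.0.2:9092"]
  topic: '%{[fields][log_topics]}'

- Create the topic on the Kafka cluster: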

# /usr/local/kafka/bin/kafka-topics.sh --create --zookeeper 192.168.0.0:2181 --replication-factor 3 --partitions 1 --topic app-service-log

Created topic app-service-log.

- Configure Logstash:

# vim /usr/local/logstash/conf.d/messages.conf

input {
  kafka {
    bootstrap_servers => "192.168.0.0:9092,192.168.0.1:9092,192.168.0.2:9092"
    group_id => "app-service" # consumer group; defaults to logstash
    topics => ["app-service-log"]
    auto_offset_reset => "latest" # start consuming from the latest offset
    consumer_threads => 5 # number of consumer threads
    decorate_events => true # add Kafka metadata to each event: message size, source topic, and consumer group
    type => "app-service"
  }
}

output {
  elasticsearch {
    hosts => ["192.168.0.0:9200","192.168.0.1:9200","192.168.0.2:9200"]
    index => "sys_messages.log-%{+YYYY.MM.dd}"
  }
}

Install the logstash-input-kafka plugin:

# chown -R elk:elk /usr/local/logstash

# /usr/local/logstash/bin/logstash-plugin install logstash-input-kafka

- Start the services:

# systemctl start filebeat # start Filebeat

# systemctl start logstash # start Logstash

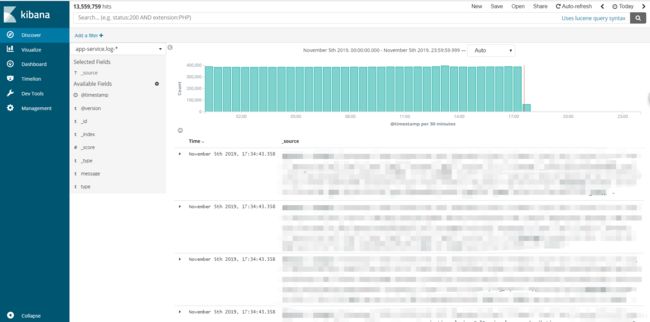
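If no data shows up downstream, it can help to check each hop separately. The sketch below wraps two checks: reading a few messages from the topic with Kafka's console consumer, and listing the matching indices through Elasticsearch's `_cat/indices` API. It assumes a Kafka release whose console consumer accepts `--bootstrap-server`, and that `curl` is installed. The helper names (`run`, `peek_topic`, `list_indices`) and the `DRY_RUN` switch are illustrative, not part of any of these tools.

```shell
#!/bin/bash
KAFKA_HOME="${KAFKA_HOME:-/usr/local/kafka}"

# Run a command, or only print it when DRY_RUN is set (illustrative helper).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Read a few messages from a topic to confirm Filebeat is publishing to Kafka.
peek_topic() {
  local brokers="$1" topic="$2" count="${3:-5}"
  run "$KAFKA_HOME/bin/kafka-console-consumer.sh" \
    --bootstrap-server "$brokers" --topic "$topic" \
    --from-beginning --max-messages "$count"
}

# List indices matching a prefix to confirm Logstash is writing to Elasticsearch.
list_indices() {
  local host="$1" prefix="$2"
  run curl -s "http://$host:9200/_cat/indices/$prefix*?v"
}
```

With the values from this article, that would be `peek_topic 192.168.0.0:9092 app-service-log` followed by `list_indices 192.168.0.0 sys_messages.log-`. Prefixing either call with `DRY_RUN=1` prints the command instead of running it.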

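- Open Kibana in a browser and add an index pattern to query the logs:

4. Scheduled cleanup of Elasticsearch data

Production log volume is large, and keeping logs in Elasticsearch indefinitely risks filling the disk. So how do we keep only the last two days of logs?

We use crontab for this. On Linux, crontab is the command for scheduling programs to run periodically.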
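A crontab entry consists of five time fields (minute, hour, day of month, month, day of week) followed by the command to run. To make that layout concrete, here is a small sketch that splits an entry into its named fields. `describe_cron_entry` is a made-up helper for illustration and is not part of cron.

```shell
#!/bin/bash
# Split a crontab entry into its five time fields and the command.
describe_cron_entry() {
  local minute hour dom month dow cmd
  # read assigns the first five words to the time fields and the rest to cmd.
  read -r minute hour dom month dow cmd <<EOF
$1
EOF
  printf 'minute=%s hour=%s day-of-month=%s month=%s day-of-week=%s command=%s\n' \
    "$minute" "$hour" "$dom" "$month" "$dow" "$cmd"
}
```

Calling it on the entry used in this section, `describe_cron_entry '10 00 * * * /usr/local/elasticsearch/es-index-clear.sh'`, shows that the job is scheduled for minute 10 of hour 00 on every day.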

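- Create an executable shell script:

# vi /usr/local/elasticsearch/es-index-clear.sh

#!/bin/bash

LAST_DATA=$(date -d "-2 days" "+%Y.%m.%d") # the date two days ago

curl -XDELETE "http://192.168.0.0:9200/*-${LAST_DATA}" # delete the Elasticsearch indices dated two days ago

# chmod 755 /usr/local/elasticsearch/es-index-clear.sh

- Configure the schedule and the script path: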

# crontab -e

10 00 * * * /usr/local/elasticsearch/es-index-clear.sh # runs every day at 00:10

- Restart the crond service:

# service crond restart

That completes the ELFK + Kafka cluster setup. You can now start using the log analysis platform.
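As a follow-up to the cleanup script in section 4: it deletes only the indices dated exactly two days ago, so a missed cron run leaves that day's indices behind. One possible variation, sketched below, walks back over a window of days and issues a delete for each date. It assumes GNU `date` (for `-d`), the same `*-YYYY.MM.dd` index naming, and the placeholder Elasticsearch address from this article. `ES_URL`, `KEEP_DAYS`, `LOOKBACK_DAYS`, `DRY_RUN`, and the function names are illustrative choices, not part of the original script.

```shell
#!/bin/bash
ES_URL="${ES_URL:-http://192.168.0.0:9200}"
KEEP_DAYS="${KEEP_DAYS:-2}"         # 2 keeps today and yesterday
LOOKBACK_DAYS="${LOOKBACK_DAYS:-7}" # how far back to look for leftovers

# Print the date N days before a reference date (default: now) as YYYY.MM.dd.
index_date() {
  local days_ago="$1" ref="${2:-}"
  date -d "${ref:+$ref }$days_ago days ago" '+%Y.%m.%d'
}

# Delete (or, with DRY_RUN set, only print) the indices for each expired day.
purge_old_indices() {
  local n day
  n="$KEEP_DAYS"
  while [ "$n" -le "$LOOKBACK_DAYS" ]; do
    day="$(index_date "$n")"
    if [ -n "${DRY_RUN:-}" ]; then
      echo "DELETE $ES_URL/*-$day"
    else
      curl -s -XDELETE "$ES_URL/*-$day"
    fi
    n=$((n + 1))
  done
}
```

Running `DRY_RUN=1 purge_old_indices` first prints the requests without sending them. Note that Elasticsearch rejects wildcard deletes like these when `action.destructive_requires_name` is enabled.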