深度学习8 GAN生成对抗网络

文章目录

- 前言

- 正文

-

- 1 GAN的原理介绍

- 2 GAN的训练过程体现出GAN原理的精髓

- 3 GAN无监督学习

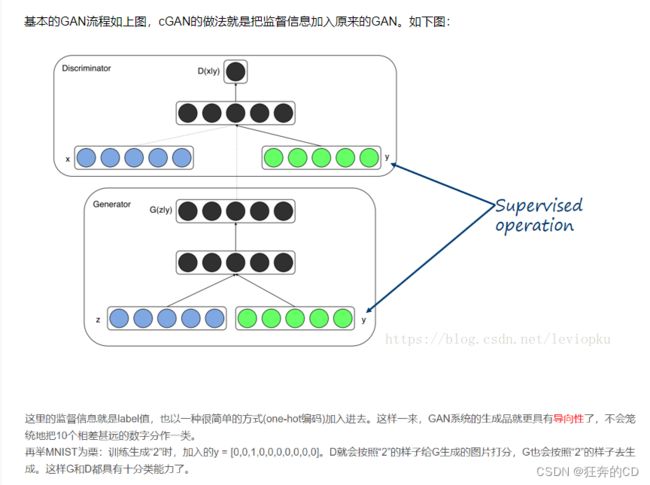

- 4 CGAN

前言

一直觉得GANs是个值得研究的方向,借着公司的项目终于有机会了。GANs的成名战是AlphaGo,后来被用到图像生成,AI换脸,AI作画等。核心是让AI天马行空的想象,同时它是无监督的,意味着不需要人工去标注它。对于长期标注矫正训练数据的我们来说,成本太大,这方面会不会是解决方案呢?这个话题有点深度了,且短期不易实现。我的实际需求是,用于训练模型的数据受限,小样本的情况下是否有机会用纯深度学习来解决问题呢,比如用GANs来生成补全对应的数据。

正文

1 GAN的原理介绍

参考:https://www.guyuehome.com/37692

理解GAN的两大护法G和D

G是generator,生成器: 负责凭空捏造数据出来

D是discriminator,判别器: 负责判断数据是不是真数据

这样可以简单的看作是两个网络的博弈过程。在最原始的GAN论文里面,G和D都是两个多层感知机网络。首先,注意一点,GAN操作的数据不一定非得是图像数据,不过为了更方便解释,我在这里用图像数据为例解释以下GAN:

上图中,z是随机噪声(就是随机生成的一些数,也就是GAN生成图像的源头)。D通过真图和假图的数据(相当于天然label),进行一个二分类神经网络训练(想各位必再熟悉不过了)。G根据一串随机数就可以捏造一个“假图像”出来,用这些假图去欺骗D,D负责辨别这是真图还是假图,会给出一个score。比如,G生成了一张图,在D这里得分很高,那证明G是很成功的;如果D能有效区分真假图,则G的效果还不太好,需要调整参数。GAN就是这么一个博弈的过程。

2 GAN的训练过程体现出GAN原理的精髓

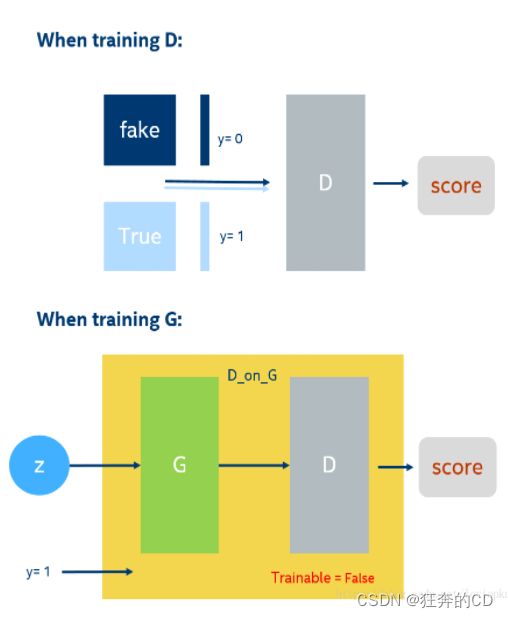

GAN的训练在同一轮梯度反传的过程中可以细分为2步,先训练D在训练G 。 为了更清楚的描述这过程,参考另一篇 https://36kr.com/p/1721743589377

第一阶段:训练鉴别器,冻结生成器(冻结意思是不训练,神经网络只向前传播,不进行Backpropagation反向传播)

第二阶段:训练生成器,冻结鉴别器。

这里面的冻结很形象了。

3 GAN无监督学习

需要注意的是,整个GAN的整个过程都是无监督的(后面会有监督性GAN比如cGAN),怎么理解这里的无监督呢?

这里,给的真图是没有经过人工标注的,你只知道这是真实的图片,比如全是人脸,而系统里的D并不知道来的图片是什么玩意儿,它只需要分辨真假。G也不知道自己生成的是什么玩意儿,反正就是学真图片的样子骗D。

正由于GAN的无监督,在生成过程中,G就会按照自己的意思天马行空生成一些“诡异”的图片,可怕的是D还能给一个很高的分数。比如,生成人脸极度扭曲的图片。这就是无监督目的性不强所导致的,所以在同年的NIPS大会上,有一篇论文conditional GAN就加入了监督性进去,将可控性增强,表现效果也好很多。

作者提供了Keras的实现代码也很简洁:

from __future__ import print_function, division

from keras.datasets import mnist

from keras.layers import Input, Dense, Reshape, Flatten, Dropout

from keras.layers import BatchNormalization, Activation, ZeroPadding2D

from keras.layers.advanced_activations import LeakyReLU

from keras.layers.convolutional import UpSampling2D, Conv2D

from keras.models import Sequential, Model

from keras.optimizers import Adam

import matplotlib.pyplot as plt

import sys

import numpy as np

class GAN():

def __init__(self):

self.img_rows = 28

self.img_cols = 28

self.channels = 1

self.img_shape = (self.img_rows, self.img_cols, self.channels)

self.latent_dim = 100

optimizer = Adam(0.0002, 0.5)

# Build and compile the discriminator

self.discriminator = self.build_discriminator()

self.discriminator.compile(loss='binary_crossentropy',

optimizer=optimizer,

metrics=['accuracy'])

# Build the generator

self.generator = self.build_generator()

# The generator takes noise as input and generates imgs

z = Input(shape=(self.latent_dim,))

img = self.generator(z)

# For the combined model we will only train the generator

self.discriminator.trainable = False

# The discriminator takes generated images as input and determines validity

validity = self.discriminator(img)

# The combined model (stacked generator and discriminator)

# Trains the generator to fool the discriminator

self.combined = Model(z, validity)

self.combined.compile(loss='binary_crossentropy', optimizer=optimizer)

def build_generator(self):

model = Sequential()

model.add(Dense(256, input_dim=self.latent_dim))

model.add(LeakyReLU(alpha=0.2))

model.add(BatchNormalization(momentum=0.8))

model.add(Dense(512))

model.add(LeakyReLU(alpha=0.2))

model.add(BatchNormalization(momentum=0.8))

model.add(Dense(1024))

model.add(LeakyReLU(alpha=0.2))

model.add(BatchNormalization(momentum=0.8))

model.add(Dense(np.prod(self.img_shape), activation='tanh'))

model.add(Reshape(self.img_shape))

model.summary()

noise = Input(shape=(self.latent_dim,))

img = model(noise)

return Model(noise, img)

def build_discriminator(self):

model = Sequential()

model.add(Flatten(input_shape=self.img_shape))

model.add(Dense(512))

model.add(LeakyReLU(alpha=0.2))

model.add(Dense(256))

model.add(LeakyReLU(alpha=0.2))

model.add(Dense(1, activation='sigmoid'))

model.summary()

img = Input(shape=self.img_shape)

validity = model(img)

return Model(img, validity)

def train(self, epochs, batch_size=128, sample_interval=50):

# Load the dataset

(X_train, _), (_, _) = mnist.load_data()

# Rescale -1 to 1

X_train = X_train / 127.5 - 1.

X_train = np.expand_dims(X_train, axis=3)

# Adversarial ground truths

valid = np.ones((batch_size, 1))

fake = np.zeros((batch_size, 1))

for epoch in range(epochs):

# ---------------------

# Train Discriminator

# ---------------------

# Select a random batch of images

idx = np.random.randint(0, X_train.shape[0], batch_size)

imgs = X_train[idx]

noise = np.random.normal(0, 1, (batch_size, self.latent_dim))

# Generate a batch of new images

gen_imgs = self.generator.predict(noise)

# Train the discriminator

d_loss_real = self.discriminator.train_on_batch(imgs, valid)

d_loss_fake = self.discriminator.train_on_batch(gen_imgs, fake)

d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

# ---------------------

# Train Generator

# ---------------------

noise = np.random.normal(0, 1, (batch_size, self.latent_dim))

# Train the generator (to have the discriminator label samples as valid)

g_loss = self.combined.train_on_batch(noise, valid)

# Plot the progress

print ("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100*d_loss[1], g_loss))

# If at save interval => save generated image samples

if epoch % sample_interval == 0:

self.sample_images(epoch)

def sample_images(self, epoch):

r, c = 5, 5

noise = np.random.normal(0, 1, (r * c, self.latent_dim))

gen_imgs = self.generator.predict(noise)

# Rescale images 0 - 1

gen_imgs = 0.5 * gen_imgs + 0.5

fig, axs = plt.subplots(r, c)

cnt = 0

for i in range(r):

for j in range(c):

axs[i,j].imshow(gen_imgs[cnt, :,:,0], cmap='gray')

axs[i,j].axis('off')

cnt += 1

fig.savefig("images/%d.png" % epoch)

plt.close()

if __name__ == '__main__':

gan = GAN()

gan.train(epochs=30000, batch_size=32, sample_interval=200)