YOLOv4 Paper Reading (with Translation of the Original Text)

- Paper reading

- Paper translation

- Abstract

- 1. Introduction

- 2. Related work

- 2.1. Object detection models

- 2.2. Bag of freebies

- 2.3. Bag of specials

- 3. Methodology

- 3.1. Selection of architecture

- 3.2. Selection of BoF and BoS

- 3.3. Additional improvements

- 3.4. YOLOv4

Paper reading

https://arxiv.org/abs/2004.10934

https://github.com/AlexeyAB/darknet

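YOLOv4 has been out for more than a year. Until now I had only read other people's translations and summaries, and I finally found time to read the original paper carefully.

By reproducing the authors' work I want to find out which parts are usable in my day-to-day work, and by reading further on each topic I want to strengthen my fundamentals.

The PDF is 17 pages long.

Page 1: Abstract and Introduction.

Pages 2-4: Related Work. This is a well-written survey in its own right. It introduces the basic architecture of an object detection network and the common choices of backbone, neck, and head.

It also covers techniques for improving performance, grouped into Bag of freebies and Bag of specials. The analogy is vivid and easy to grasp: anything that affects inference has a cost. Bag of freebies methods affect only training, not inference, while Bag of specials methods add a small inference cost.

This section covers a lot of ground and touches on each topic only briefly, so the cited references deserve a careful read.

Pages 5-7: Methodology. Describes the main work of YOLOv4. Besides choosing among the architectures, Bag of freebies, and Bag of specials introduced in the previous section, the authors also contribute innovations of their own.

Pages 7-10: Experiments and Results. Describes the experimental setup and the results.

Page 10: Conclusions and Acknowledgements.

Pages 11-13: Result tables.

Pages 14-17: References.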

Paper translation

Abstract

There are a huge number of features which are said to improve Convolutional Neural Network (CNN) accuracy. Practical testing of combinations of such features on large datasets, and theoretical justification of the result, is required. Some features operate on certain models exclusively and for certain problems exclusively, or only for small-scale datasets; while some features, such as batch-normalization and residual-connections, are applicable to the majority of models, tasks, and datasets. We assume that such universal features include Weighted-Residual-Connections (WRC), Cross-Stage-Partial-connections (CSP), Cross mini-Batch Normalization (CmBN), Self-adversarial-training (SAT) and Mish-activation. We use new features: WRC, CSP, CmBN, SAT, Mish activation, Mosaic data augmentation, CmBN, DropBlock regularization, and CIoU loss, and combine some of them to achieve state-of-the-art results: 43.5% AP (65.7% AP50) for the MS COCO dataset at a realtime speed of ~65 FPS on Tesla V100. Source code is at https://github.com/AlexeyAB/darknet.

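Many features are claimed to improve CNN accuracy, but such claims have to be backed by practical tests of feature combinations on large datasets and by theoretical justification. Some features work only for particular models, particular problems, or small datasets, whereas others, such as batch normalization and residual connections, apply to most models, tasks, and datasets. We assume that these universal features include Weighted-Residual-Connections (WRC), Cross-Stage-Partial-connections (CSP), Cross mini-Batch Normalization (CmBN), Self-adversarial-training (SAT), and Mish activation. We use the new features WRC, CSP, CmBN, SAT, Mish activation, Mosaic data augmentation, DropBlock regularization, and CIoU loss, and combine some of them to reach state-of-the-art results on MS COCO: 43.5% AP (65.7% $AP_{50}$) at a real-time speed of about 65 FPS on a Tesla V100. The source code is at https://github.com/AlexeyAB/darknet.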

1. Introduction

The majority of CNN-based object detectors are largely applicable only for recommendation systems. For example, searching for free parking spaces via urban video cameras is executed by slow accurate models, whereas car collision warning is related to fast inaccurate models. Improving the real-time object detector accuracy enables using them not only for hint generating recommendation systems, but also for stand-alone process management and human input reduction. Real-time object detector operation on conventional Graphics Processing Units (GPU) allows their mass usage at an affordable price. The most accurate modern neural networks do not operate in real time and require large number of GPUs for training with a large mini-batch-size. We address such problems through creating a CNN that operates in real-time on a conventional GPU, and for which training requires only one conventional GPU.

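Most CNN-based object detectors are suitable only for recommendation systems. For example, searching for vacant parking spaces with city cameras is done by slow, accurate models, whereas car collision warning relies on fast but inaccurate models. Improving the accuracy of real-time detectors lets them be used not only in recommendation systems but also for stand-alone process management with less human input. Real-time detectors that run on a conventional GPU can be deployed at scale at a reasonable price. The most accurate modern networks do not run in real time and need many GPUs to train with a large mini-batch size. We address these problems by building a CNN that trains on a single conventional GPU and runs in real time.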

The main goal of this work is designing a fast operating speed of an object detector in production systems and optimization for parallel computations, rather than the low computation volume theoretical indicator (BFLOP). We hope that the designed object can be easily trained and used. For example, anyone who uses a conventional GPU to train and test can achieve real-time, high quality, and convincing object detection results, as the YOLOv4 results shown in Figure 1. Our contributions are summarized as follows:

1. We develop an efficient and powerful object detection model. It makes everyone can use a 1080 Ti or 2080 Ti GPU to train a super fast and accurate object detector.

2. We verify the influence of state-of-the-art Bag-of-Freebies and Bag-of-Specials methods of object detection during the detector training.

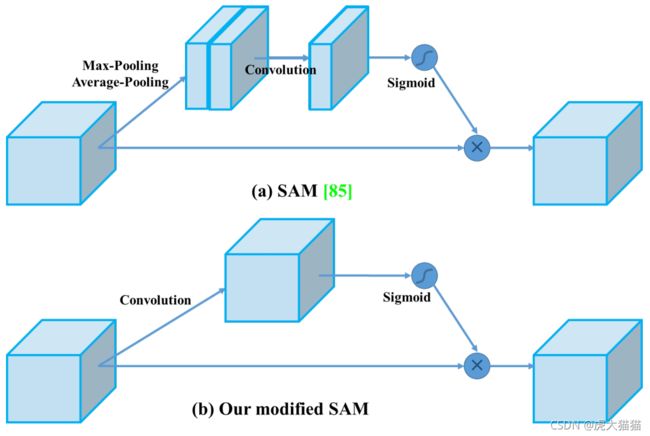

3. We modify state-of-the-art methods and make them more efficient and suitable for single GPU training, including CBN [89], PAN [49], SAM [85], etc.

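The main goal of this work is to design an object detector that runs fast in production systems and to optimize it for parallel computation, rather than to minimize the theoretical low-computation indicator (BFLOP). We want the resulting detector to be easy to train and use. For example, anyone who trains and tests on a conventional GPU can obtain real-time, high-quality, convincing detection results, as the YOLOv4 results in Figure 1 show. Our contributions are summarized as follows:

1. We develop an efficient and powerful object detection model. Anyone can use a 1080 Ti or 2080 Ti GPU to train a very fast and accurate object detector.

2. We verify the influence of state-of-the-art Bag-of-Freebies and Bag-of-Specials methods during detector training.

3. We modify state-of-the-art methods, including CBN [89], PAN [49], and SAM [85], to make them more efficient and better suited to single-GPU training.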

2. Related work

2.1. Object detection models

A modern detector is usually composed of two parts, a backbone which is pre-trained on ImageNet and a head which is used to predict classes and bounding boxes of objects. For those detectors running on GPU platform, their backbone could be VGG [68], ResNet [26], ResNeXt [86], or DenseNet [30]. For those detectors running on CPU platform, their backbone could be SqueezeNet [31], MobileNet [28, 66, 27, 74], or ShuffleNet [97, 53]. As to the head part, it is usually categorized into two kinds, i.e., one-stage object detector and two-stage object detector. The most representative two-stage object detector is the R-CNN [19] series, including fast R-CNN [18], faster R-CNN [64], R-FCN [9], and Libra R-CNN [58]. It is also possible to make a two-stage object detector an anchor-free object detector, such as RepPoints [87]. As for one-stage object detector, the most representative models are YOLO [61, 62, 63], SSD [50], and RetinaNet [45]. In recent years, anchor-free one-stage object detectors are developed. The detectors of this sort are CenterNet [13], CornerNet [37, 38], FCOS [78], etc. Object detectors developed in recent years often insert some layers between backbone and head, and these layers are usually used to collect feature maps from different stages. We can call it the neck of an object detector. Usually, a neck is composed of several bottom-up paths and several top-down paths. Networks equipped with this mechanism include Feature Pyramid Network (FPN) [44], Path Aggregation Network (PAN) [49], BiFPN [77], and NAS-FPN [17]. In addition to the above models, some researchers put their emphasis on directly building a new backbone (DetNet [43], DetNAS [7]) or a new whole model (SpineNet [12], HitDetector [20]) for object detection.

To sum up, an ordinary object detector is composed of several parts:

A modern detector usually has two parts: a backbone pre-trained on ImageNet and a head that predicts object classes and bounding boxes. For detectors running on GPUs, the backbone is typically VGG [68], ResNet [26], ResNeXt [86], or DenseNet [30]. For detectors running on CPUs, it is typically SqueezeNet [31], MobileNet [28, 66, 27, 74], or ShuffleNet [97, 53]. Heads fall into two kinds: one-stage and two-stage detectors. The representative two-stage detectors are the R-CNN [19] series, including fast R-CNN [18], faster R-CNN [64], R-FCN [9], and Libra R-CNN [58]. A two-stage detector can also be made anchor-free, as in RepPoints [87]. Representative one-stage detectors are YOLO [61, 62, 63], SSD [50], and RetinaNet [45]. Anchor-free one-stage detectors have appeared in recent years, such as CenterNet [13], CornerNet [37, 38], and FCOS [78]. Recent detectors also insert layers between the backbone and the head, usually to collect feature maps from different stages. This part is called the neck of the detector. A neck usually consists of several bottom-up paths and several top-down paths. Networks with this mechanism include Feature Pyramid Network (FPN) [44], Path Aggregation Network (PAN) [49], BiFPN [77], and NAS-FPN [17]. Beyond these models, some researchers focus on directly building a new backbone (DetNet [43], DetNAS [7]) or an entirely new model (SpineNet [12], HitDetector [20]).

In summary, a typical object detector consists of the following parts:

Input: Image, Patches, Image Pyramid

Backbones: VGG16 [68], ResNet-50 [26], SpineNet [12], EfficientNet-B0/B7 [75], CSPResNeXt50 [81], CSPDarknet53 [81]

Neck:

Additional blocks: SPP [25], ASPP [5], RFB [47], SAM [85]

Path-aggregation blocks: FPN [44], PAN [49], NAS-FPN [17], Fully-connected FPN, BiFPN [77], ASFF [48], SFAM [98]

Head:

Dense Prediction (one-stage):

anchor based: RPN [64], SSD [50], YOLO [61], RetinaNet [45]

anchor free: CornerNet [37], CenterNet [13], MatrixNet [60], FCOS [78]

Sparse Prediction (two-stage):

anchor based: Faster R-CNN [64], R-FCN [9], Mask R-CNN [23]

anchor free: RepPoints [87]

2.2. Bag of freebies

Usually, a conventional object detector is trained offline. Therefore, researchers always like to take this advantage and develop better training methods which can make the object detector receive better accuracy without increasing the inference cost. We call these methods that only change the training strategy or only increase the training cost as “bag of freebies.” What is often adopted by object detection methods and meets the definition of bag of freebies is data augmentation. The purpose of data augmentation is to increase the variability of the input images, so that the designed object detection model has higher robustness to the images obtained from different environments. For examples, photometric distortions and geometric distortions are two commonly used data augmentation method and they definitely benefit the object detection task. In dealing with photometric distortion, we adjust the brightness, contrast, hue, saturation, and noise of an image. For geometric distortion, we add random scaling, cropping, flipping, and rotating.

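A conventional object detector is usually trained offline. Researchers therefore exploit this to develop better training methods that raise accuracy without increasing inference cost. We call methods that change only the training strategy or increase only the training cost the "bag of freebies". A commonly used freebie is data augmentation, whose purpose is to increase the variability of the input images so that the detector is more robust to images from different environments. Photometric and geometric distortions are two common augmentation methods that benefit object detection. Photometric distortion adjusts the brightness, contrast, hue, saturation, and noise of an image. Geometric distortion applies random scaling, cropping, flipping, and rotation.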

The data augmentation methods mentioned above are all pixel-wise adjustments, and all original pixel information in the adjusted area is retained. In addition, some researchers engaged in data augmentation put their emphasis on simulating object occlusion issues. They have achieved good results in image classification and object detection. For example, random erase [100] and CutOut [11] can randomly select the rectangle region in an image and fill in a random or complementary value of zero. As for hide-and-seek [69] and grid mask [6], they randomly or evenly select multiple rectangle regions in an image and replace them to all zeros. If similar concepts are applied to feature maps, there are DropOut [71], DropConnect [80], and DropBlock [16] methods. In addition, some researchers have proposed the methods of using multiple images together to perform data augmentation. For example, MixUp [92] uses two images to multiply and superimpose with different coefficient ratios, and then adjusts the label with these superimposed ratios. As for CutMix [91], it is to cover the cropped image to rectangle region of other images, and adjusts the label according to the size of the mix area. In addition to the above mentioned methods, style transfer GAN [15] is also used for data augmentation, and such usage can effectively reduce the texture bias learned by CNN.

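The augmentation methods above are all pixel-wise adjustments, and all original pixel information in the adjusted area is retained. Other researchers focus on simulating object occlusion and have obtained good results in image classification and object detection. For example, random erase [100] and CutOut [11] randomly select a rectangular region of the image and fill it with a random value or with zeros. Hide-and-seek [69] and grid mask [6] randomly or evenly select several rectangular regions and set them to zero. The analogous operations on feature maps are DropOut [71], DropConnect [80], and DropBlock [16]. Some researchers also use several images together for augmentation. MixUp [92] multiplies two images by different coefficients and superimposes them, then adjusts the label by the same ratios. CutMix [91] pastes a cropped image onto a rectangular region of another image and adjusts the label according to the size of the mixed area. In addition, style transfer GAN [15] is used for data augmentation, which can effectively reduce the texture bias learned by the CNN.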
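The multi-image and occlusion augmentations described above can be sketched in a few lines. This is a minimal illustration on tiny single-channel images stored as nested lists, with classification-style one-hot labels; the function names, the fixed mixing weight `lam`, and the explicit `box` argument are assumptions made for the example (real pipelines sample them randomly and must also transform bounding boxes).

```python
def mixup(img_a, img_b, label_a, label_b, lam):
    """Blend two equally sized images and their labels with weight lam."""
    mixed_img = [[lam * a + (1 - lam) * b for a, b in zip(row_a, row_b)]
                 for row_a, row_b in zip(img_a, img_b)]
    mixed_label = [lam * a + (1 - lam) * b for a, b in zip(label_a, label_b)]
    return mixed_img, mixed_label


def cutmix(img_a, img_b, label_a, label_b, box):
    """Paste region box=(x1, y1, x2, y2) of img_b onto img_a.

    The labels are mixed by the fraction of img_a's area that survives.
    """
    x1, y1, x2, y2 = box
    h, w = len(img_a), len(img_a[0])
    out = [row[:] for row in img_a]
    for y in range(y1, y2):
        out[y][x1:x2] = img_b[y][x1:x2]
    lam = 1 - (x2 - x1) * (y2 - y1) / (h * w)
    label = [lam * a + (1 - lam) * b for a, b in zip(label_a, label_b)]
    return out, label


def cutout(img, box, fill=0.0):
    """Overwrite region box=(x1, y1, x2, y2) with a constant value."""
    x1, y1, x2, y2 = box
    out = [row[:] for row in img]
    for y in range(y1, y2):
        out[y][x1:x2] = [fill] * (x2 - x1)
    return out


ones = [[1.0] * 4 for _ in range(4)]
zeros = [[0.0] * 4 for _ in range(4)]
mix_img, mix_label = mixup(ones, zeros, [1, 0], [0, 1], lam=0.25)
cm_img, cm_label = cutmix(ones, zeros, [1, 0], [0, 1], box=(0, 0, 2, 2))
co_img = cutout(ones, box=(1, 1, 3, 3))
```

MixUp changes every pixel a little, while CutMix and CutOut change a rectangle completely, which is why the latter two are described as occlusion-style augmentations.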

Different from the various approaches proposed above, some other bag of freebies methods are dedicated to solving the problem that the semantic distribution in the dataset may have bias. In dealing with the problem of semantic distribution bias, a very important issue is that there is a problem of data imbalance between different classes, and this problem is often solved by hard negative example mining [72] or online hard example mining [67] in two-stage object detector. But the example mining method is not applicable to one-stage object detector, because this kind of detector belongs to the dense prediction architecture. Therefore Lin et al. [45] proposed focal loss to deal with the problem of data imbalance existing between various classes. Another very important issue is that it is difficult to express the relationship of the degree of association between different categories with the one-hot hard representation. This representation scheme is often used when executing labeling. The label smoothing proposed in [73] is to convert hard label into soft label for training, which can make model more robust. In order to obtain a better soft label, Islam et al. [33] introduced the concept of knowledge distillation to design the label refinement network.

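Unlike the approaches above, some other freebies address bias in the semantic distribution of the dataset. A key issue here is imbalance between classes, which two-stage detectors often handle with hard negative example mining [72] or online hard example mining [67]. Example mining does not apply to one-stage detectors, because they belong to the dense prediction architecture. Lin et al. [45] therefore proposed focal loss to handle class imbalance. Another key issue is that a one-hot hard representation, which is what labeling normally produces, cannot express the degree of association between categories. Label smoothing [73] converts hard labels into soft labels for training, which makes the model more robust. To obtain better soft labels, Islam et al. [33] introduced knowledge distillation to design a label refinement network.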
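Focal loss and label smoothing are both short formulas, so a small sketch may help. The version below assumes the binary form of focal loss, $-\alpha_t (1 - p_t)^{\gamma} \log p_t$, and uniform label smoothing; the function names and default values are illustrative choices, not settings taken from this paper.

```python
import math


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary focal loss for a single prediction.

    p: predicted probability of the positive class (0 < p < 1)
    y: ground-truth label, 1 or 0
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # (1 - p_t) ** gamma shrinks the loss of well-classified examples
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def smooth_labels(one_hot, eps=0.1):
    """Move eps of the probability mass from the hard label to all classes."""
    k = len(one_hot)
    return [v * (1.0 - eps) + eps / k for v in one_hot]


easy = focal_loss(0.9, 1)   # confident and correct: tiny loss
hard = focal_loss(0.6, 1)   # less confident: much larger loss
soft = smooth_labels([0, 1, 0, 0], eps=0.1)
```

With `gamma=0` the modulating factor disappears and the expression reduces to a weighted cross-entropy, which is a quick way to check an implementation.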

The last bag of freebies is the objective function of Bounding Box (BBox) regression. The traditional object detector usually uses Mean Square Error (MSE) to directly perform regression on the center point coordinates and height and width of the BBox, i.e., $\{x_{center}, y_{center}, w, h\}$, or the upper left point and the lower right point, i.e., $\{x_{top\_left}, y_{top\_left}, x_{bottom\_right}, y_{bottom\_right}\}$. As for anchor-based method, it is to estimate the corresponding offset, for example $\{x_{center\_offset}, y_{center\_offset}, w_{offset}, h_{offset}\}$ and $\{x_{top\_left\_offset}, y_{top\_left\_offset}, x_{bottom\_right\_offset}, y_{bottom\_right\_offset}\}$. However, to directly estimate the coordinate values of each point of the BBox is to treat these points as independent variables, but in fact does not consider the integrity of the object itself. In order to make this issue processed better, some researchers recently proposed IoU loss [90], which puts the coverage of predicted BBox area and ground truth BBox area into consideration. The IoU loss computing process will trigger the calculation of the four coordinate points of the BBox by executing IoU with the ground truth, and then connecting the generated results into a whole code. Because IoU is a scale invariant representation, it can solve the problem that when traditional methods calculate the $l_1$ or $l_2$ loss of $\{x, y, w, h\}$, the loss will increase with the scale. Recently, some researchers have continued to improve IoU loss. For example, GIoU loss [65] is to include the shape and orientation of object in addition to the coverage area. They proposed to find the smallest area BBox that can simultaneously cover the predicted BBox and ground truth BBox, and use this BBox as the denominator to replace the denominator originally used in IoU loss. As for DIoU loss [99], it additionally considers the distance of the center of an object, and CIoU loss [99], on the other hand simultaneously considers the overlapping area, the distance between center points, and the aspect ratio. CIoU can achieve better convergence speed and accuracy on the BBox regression problem.

The last freebie is the objective function for bounding box (BBox) regression. Traditional detectors usually apply Mean Square Error (MSE) directly to the center coordinates and the height and width of the BBox, i.e. $\{x_{center}, y_{center}, w, h\}$, or to the top-left and bottom-right points, i.e. $\{x_{top\_left}, y_{top\_left}, x_{bottom\_right}, y_{bottom\_right}\}$. Anchor-based methods estimate the corresponding offsets instead, e.g. $\{x_{center\_offset}, y_{center\_offset}, w_{offset}, h_{offset}\}$ and $\{x_{top\_left\_offset}, y_{top\_left\_offset}, x_{bottom\_right\_offset}, y_{bottom\_right\_offset}\}$. However, estimating each coordinate directly treats the points as independent variables and ignores the integrity of the object itself. To address this, IoU loss [90] takes into account the overlap between the predicted BBox and the ground-truth BBox: it computes the IoU with the ground truth from the four coordinate points of the BBox and treats the result as a single unit. Because IoU is scale invariant, it avoids the problem that the $l_1$ or $l_2$ loss on $\{x, y, w, h\}$ grows with the scale of the object. IoU loss has since been improved further. GIoU loss [65] accounts for the shape and orientation of the object in addition to the coverage area: it finds the smallest BBox that encloses both the predicted and the ground-truth BBox and uses it as the denominator in place of the one in IoU loss. DIoU loss [99] additionally considers the distance between the box centers. CIoU loss [99] considers the overlap area, the center distance, and the aspect ratio together, and achieves better convergence speed and accuracy on BBox regression.
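The IoU-based variants above differ only in the penalty subtracted from the plain IoU, which a short sketch makes concrete. It follows the commonly cited definitions (GIoU subtracts the empty share of the enclosing box, DIoU the normalized squared center distance, CIoU an extra aspect-ratio term); the function name and the corner format `(x1, y1, x2, y2)` are assumptions for this example, and boxes are assumed to have positive width and height. The corresponding loss is `1 - value` in each case.

```python
import math


def iou_family(box_a, box_b):
    """Return (IoU, GIoU, DIoU, CIoU) for two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    aw, ah = ax2 - ax1, ay2 - ay1
    bw, bh = bx2 - bx1, by2 - by1

    # Overlap area and union area
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    iou = inter / union

    # Smallest box enclosing both inputs
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    c_area = cw * ch
    giou = iou - (c_area - union) / c_area

    # Squared center distance over squared diagonal of the enclosing box
    rho2 = (((ax1 + ax2) - (bx1 + bx2)) ** 2
            + ((ay1 + ay2) - (by1 + by2)) ** 2) / 4.0
    diou = iou - rho2 / (cw ** 2 + ch ** 2)

    # Aspect-ratio consistency term
    v = (4.0 / math.pi ** 2) * (math.atan(bw / bh) - math.atan(aw / ah)) ** 2
    alpha = v / (1.0 - iou + v) if v > 0 else 0.0
    ciou = diou - alpha * v
    return iou, giou, diou, ciou


same = iou_family((0, 0, 2, 2), (0, 0, 2, 2))
shifted = iou_family((0, 0, 2, 2), (1, 1, 3, 3))
apart = iou_family((0, 0, 1, 1), (2, 0, 3, 1))
```

For the disjoint pair, plain IoU is 0 and gives no gradient signal about how far apart the boxes are, while GIoU and DIoU go negative, which is the motivation for these variants.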

2.3. Bag of specials

For those plugin modules and post-processing methods that only increase the inference cost by a small amount but can significantly improve the accuracy of object detection, we call them “bag of specials”. Generally speaking, these plugin modules are for enhancing certain attributes in a model, such as enlarging receptive field, introducing attention mechanism, or strengthening feature integration capability, etc., and post-processing is a method for screening model prediction results.

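We call plugin modules and post-processing methods that add only a small inference cost but significantly improve detection accuracy the "bag of specials". In general, the plugin modules enhance particular attributes of a model, such as enlarging the receptive field, introducing an attention mechanism, or strengthening feature integration, while post-processing screens the model's predictions.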

Common modules that can be used to enhance receptive field are SPP [25], ASPP [5], and RFB [47]. The SPP module was originated from Spatial Pyramid Matching (SPM) [39], and SPM's original method was to split feature map into several $d \times d$ equal blocks, where $d$ can be $\{1, 2, 3, \dots\}$, thus forming spatial pyramid, and then extracting bag-of-word features. SPP integrates SPM into CNN and uses max-pooling operation instead of bag-of-word operation. Since the SPP module proposed by He et al. [25] will output one dimensional feature vector, it is infeasible to be applied in Fully Convolutional Network (FCN). Thus in the design of YOLOv3 [63], Redmon and Farhadi improve SPP module to the concatenation of max-pooling outputs with kernel size $k \times k$, where $k = \{1, 5, 9, 13\}$, and stride equals to 1. Under this design, a relatively large $k \times k$ max-pooling effectively increases the receptive field of backbone feature. After adding the improved version of SPP module, YOLOv3-608 upgrades $AP_{50}$ by 2.7% on the MS COCO object detection task at the cost of 0.5% extra computation. The difference in operation between ASPP [5] module and improved SPP module is mainly from the original $k \times k$ kernel size, max-pooling of stride equals to 1 to several $3 \times 3$ kernel size, dilated ratio equals to $k$, and stride equals to 1 in dilated convolution operation. RFB module is to use several dilated convolutions of $k \times k$ kernel, dilated ratio equals to $k$, and stride equals to 1 to obtain a more comprehensive spatial coverage than ASPP. RFB [47] only costs 7% extra inference time to increase the $AP_{50}$ of SSD on MS COCO by 5.7%.

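Common modules for enlarging the receptive field are SPP [25], ASPP [5], and RFB [47]. SPP originates from Spatial Pyramid Matching (SPM) [39], which splits the feature map into $d \times d$ equal blocks, where $d$ can be $\{1, 2, 3, \dots\}$, forming a spatial pyramid from which bag-of-word features are extracted. SPP integrates SPM into a CNN and replaces the bag-of-word operation with max-pooling. Because the SPP module of He et al. [25] outputs a one-dimensional feature vector, it cannot be used in a Fully Convolutional Network (FCN). In YOLOv3 [63], Redmon and Farhadi therefore changed SPP into the concatenation of max-pooling outputs with kernel size $k \times k$, where $k = \{1, 5, 9, 13\}$ and the stride is 1. With this design a relatively large $k \times k$ max-pooling effectively enlarges the receptive field of the backbone features. With the improved SPP module, YOLOv3-608 gains 2.7% $AP_{50}$ on the MS COCO object detection task at the cost of 0.5% extra computation. The improved SPP uses $k \times k$ max-pooling with stride 1, whereas ASPP [5] uses several $3 \times 3$ dilated convolutions with dilation rate $k$ and stride 1. RFB uses several $k \times k$ dilated convolutions with dilation rate $k$ and stride 1 to obtain more comprehensive spatial coverage than ASPP. RFB [47] adds only 7% inference time while raising the $AP_{50}$ of SSD on MS COCO by 5.7%.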
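The improved SPP block is easy to picture on a single-channel feature map. The sketch below is a pure-Python illustration, not the Darknet implementation: it assumes the pooling window is clipped at the borders (equivalent to padding so that the output keeps the input size), and the function names are made up for the example. Each kernel size produces one map of the same spatial size, and stacking the maps corresponds to the channel-wise concatenation.

```python
def max_pool_same(fmap, k):
    """k x k max-pooling with stride 1; the window is clipped at the borders."""
    h, w = len(fmap), len(fmap[0])
    r = k // 2
    return [[max(fmap[yy][xx]
                 for yy in range(max(0, y - r), min(h, y + r + 1))
                 for xx in range(max(0, x - r), min(w, x + r + 1)))
             for x in range(w)]
            for y in range(h)]


def spp(fmap, kernel_sizes=(1, 5, 9, 13)):
    """Return one pooled map per kernel size (the maps to be concatenated)."""
    return [max_pool_same(fmap, k) for k in kernel_sizes]


# A 6x6 map with a single activation at row 3, column 3
fmap = [[0.0] * 6 for _ in range(6)]
fmap[3][3] = 1.0
pooled = spp(fmap)
```

The single activation spreads over a larger neighbourhood as `k` grows, which is the sense in which large stride-1 pooling enlarges the receptive field without changing the spatial resolution.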

The attention module that is often used in object detection is mainly divided into channel-wise attention and pointwise attention, and the representatives of these two attention models are Squeeze-and-Excitation (SE) [29] and Spatial Attention Module (SAM) [85], respectively. Although SE module can improve the power of ResNet50 in the ImageNet image classification task 1% top-1 accuracy at the cost of only increasing the computational effort by 2%, but on a GPU usually it will increase the inference time by about 10%, so it is more appropriate to be used in mobile devices. But for SAM, it only needs to pay 0.1% extra calculation and it can improve ResNet50-SE 0.5% top-1 accuracy on the ImageNet image classification task. Best of all, it does not affect the speed of inference on the GPU at all.

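The attention modules commonly used in object detection are divided into channel-wise attention and point-wise attention, represented by Squeeze-and-Excitation (SE) [29] and the Spatial Attention Module (SAM) [85] respectively. Although SE improves the ImageNet top-1 accuracy of ResNet50 by 1% for only 2% more computation, it usually increases inference time on a GPU by about 10%, so it is better suited to mobile devices. SAM needs only 0.1% extra computation to improve the ImageNet top-1 accuracy of ResNet50-SE by 0.5%, and it does not affect inference speed on the GPU at all.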

In terms of feature integration, the early practice is to use skip connection [51] or hyper-column [22] to integrate low-level physical feature to high-level semantic feature. Since multi-scale prediction methods such as FPN have become popular, many lightweight modules that integrate different feature pyramid have been proposed. The modules of this sort include SFAM [98], ASFF [48], and BiFPN [77]. The main idea of SFAM is to use SE module to execute channel-wise level re-weighting on multi-scale concatenated feature maps. As for ASFF, it uses softmax as point-wise level re-weighting and then adds feature maps of different scales. In BiFPN, the multi-input weighted residual connections is proposed to execute scale-wise level re-weighting, and then add feature maps of different scales.

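For feature integration, early practice used skip connections [51] or hyper-columns [22] to merge low-level physical features with high-level semantic features. As multi-scale prediction methods such as FPN became popular, many lightweight modules that integrate different feature pyramids were proposed, including SFAM [98], ASFF [48], and BiFPN [77]. SFAM uses an SE module to apply channel-wise re-weighting to concatenated multi-scale feature maps. ASFF uses softmax for point-wise re-weighting and then adds the feature maps of different scales. BiFPN uses multi-input weighted residual connections for scale-wise re-weighting and then adds the feature maps of different scales.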
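The difference between point-wise and scale-wise re-weighting is mostly a question of how many weights there are. The sketch below illustrates it on flattened feature maps that are assumed to be already resized to a common shape. The function names are hypothetical, and the normalization in `scale_weighted_fuse` (clamping weights at zero and dividing by their sum plus a small `eps`) is one common choice, used here as an assumption rather than as the exact formulation of any cited paper.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a short list."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def pointwise_fuse(features, logits):
    """Point-wise re-weighting: one softmax across levels per position.

    features[l][i]: value of level l at position i
    logits[l][i]:   score of level l at position i
    """
    fused = []
    for i in range(len(features[0])):
        weights = softmax([level[i] for level in logits])
        fused.append(sum(w * f[i] for w, f in zip(weights, features)))
    return fused


def scale_weighted_fuse(features, weights, eps=1e-4):
    """Scale-wise re-weighting: a single scalar weight per level."""
    w = [max(0.0, x) for x in weights]
    total = sum(w) + eps
    return [sum(wl * f[i] for wl, f in zip(w, features)) / total
            for i in range(len(features[0]))]


levels = [[1.0, 2.0], [3.0, 4.0]]
avg = pointwise_fuse(levels, [[0.0, 0.0], [0.0, 0.0]])
only_first = scale_weighted_fuse(levels, [1.0, 0.0], eps=0.0)
```

Equal scores reduce the point-wise fusion to a plain average, and a zero weight removes a level entirely in the scale-wise case.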

In the research of deep learning, some people put their focus on searching for good activation function. A good activation function can make the gradient more efficiently propagated, and at the same time it will not cause too much extra computational cost. In 2010, Nair and Hinton [56] propose ReLU to substantially solve the gradient vanish problem which is frequently encountered in traditional tanh and sigmoid activation function. Subsequently, LReLU [54], PReLU [24], ReLU6 [28], Scaled Exponential Linear Unit (SELU) [35], Swish [59], hard-Swish [27], and Mish [55], etc., which are also used to solve the gradient vanish problem, have been proposed. The main purpose of LReLU and PReLU is to solve the problem that the gradient of ReLU is zero when the output is less than zero. As for ReLU6 and hard-Swish, they are specially designed for quantization networks. For self-normalizing a neural network, the SELU activation function is proposed to satisfy the goal. One thing to be noted is that both Swish and Mish are continuously differentiable activation function.

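Some deep learning research focuses on finding good activation functions. A good activation function lets gradients propagate more efficiently without adding much computational cost. In 2010, Nair and Hinton [56] proposed ReLU, which largely solves the vanishing gradient problem often encountered with the traditional tanh and sigmoid activations. LReLU [54], PReLU [24], ReLU6 [28], Scaled Exponential Linear Unit (SELU) [35], Swish [59], hard-Swish [27], and Mish [55] followed, also targeting the vanishing gradient problem. LReLU and PReLU mainly address the fact that the gradient of ReLU is zero when the output is less than zero. ReLU6 and hard-Swish are designed specifically for quantized networks. SELU was proposed to make a neural network self-normalizing. Note that both Swish and Mish are continuously differentiable activation functions.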
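For reference, the activations named above are all one-line scalar functions. The sketch below writes them with the standard-library `math` module only; the leaky slope of 0.1 is an illustrative default, and the simple `sigmoid` is not protected against overflow for very large negative inputs.

```python
import math


def relu(x):
    return max(0.0, x)


def leaky_relu(x, slope=0.1):
    # Keeps a small gradient for negative inputs
    return x if x > 0 else slope * x


def relu6(x):
    # Bounded output, convenient for low-precision arithmetic
    return min(max(0.0, x), 6.0)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def swish(x):
    return x * sigmoid(x)


def hard_swish(x):
    # Piecewise approximation of swish built from relu6
    return x * relu6(x + 3.0) / 6.0


def softplus(x):
    # log(1 + e^x), written to avoid overflow for large |x|
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x):
    return x * math.tanh(softplus(x))


samples = [(x, relu(x), swish(x), mish(x)) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]
```

Unlike ReLU, both Swish and Mish return small negative values for negative inputs and have no kink at zero, which is what "continuously differentiable" refers to.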

The post-processing method commonly used in deep learning-based object detection is NMS, which can be used to filter those BBoxes that badly predict the same object, and only retain the candidate BBoxes with higher response. The way NMS tries to improve is consistent with the method of optimizing an objective function. The original method proposed by NMS does not consider the context information, so Girshick et al. [19] added classification confidence score in R-CNN as a reference, and according to the order of confidence score, greedy NMS was performed in the order of high score to low score. As for soft NMS [1], it considers the problem that the occlusion of an object may cause the degradation of confidence score in greedy NMS with IoU score. The DIoU NMS [99] developers' way of thinking is to add the information of the center point distance to the BBox screening process on the basis of soft NMS. It is worth mentioning that, since none of above post-processing methods directly refer to the captured image features, post-processing is no longer required in the subsequent development of an anchor-free method.

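The post-processing method commonly used in deep learning-based object detection is NMS, which filters out the BBoxes that poorly predict the same object and retains only the candidate BBoxes with a higher response. The way NMS is improved is consistent with optimizing an objective function. The original NMS does not consider context information, so Girshick et al. [19] added the classification confidence score in R-CNN as a reference and performed greedy NMS in order from high score to low score. Soft NMS [1] addresses the problem that object occlusion may degrade the confidence score in greedy NMS with IoU score. DIoU NMS [99] builds on soft NMS by adding the center point distance to the BBox screening process. It is worth mentioning that, since none of these post-processing methods refers directly to the captured image features, post-processing is no longer required in the subsequent development of anchor-free methods.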
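Greedy NMS and soft NMS differ in one step: greedy NMS discards overlapping boxes, while soft NMS only lowers their scores. The sketch below shows both on plain Python lists. The function names, the IoU threshold of 0.5, and the Gaussian decay with `sigma=0.5` are illustrative assumptions, not the settings used by YOLOv4.

```python
import math


def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_thresh=0.5):
    """Visit boxes from high to low score; keep a box unless it overlaps a kept one."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return keep


def soft_nms(boxes, scores, sigma=0.5, score_thresh=0.001):
    """Gaussian soft NMS: decay the scores of overlapping boxes instead of dropping them."""
    scores = list(scores)
    remaining = list(range(len(boxes)))
    keep = []
    while remaining:
        best = max(remaining, key=lambda i: scores[i])
        remaining.remove(best)
        if scores[best] < score_thresh:
            break
        keep.append(best)
        for i in remaining:
            scores[i] *= math.exp(-iou(boxes[best], boxes[i]) ** 2 / sigma)
    return keep, scores


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = greedy_nms(boxes, scores)
soft_kept, soft_scores = soft_nms(boxes, scores)
```

In this example the second box overlaps the first heavily, so greedy NMS removes it, whereas soft NMS keeps it with a reduced score and ranks it below the distant third box.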

3. Methodology

The basic aim is fast operating speed of neural network, in production systems and optimization for parallel computations, rather than the low computation volume theoretical indicator (BFLOP). We present two options of real-time neural networks:

• For GPU we use a small number of groups (1 - 8) in convolutional layers: CSPResNeXt50 / CSPDarknet53

• For VPU we use grouped-convolution, but we refrain from using Squeeze-and-Excitation(SE) blocks -specifically this includes the following models: EfficientNet-lite / MixNet [76] / GhostNet [21] / MobileNetV3

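The basic aim is a fast operating speed of the neural network in production systems and optimization for parallel computation, rather than a low theoretical computation indicator (BFLOP). We present two options for real-time neural networks:

• For GPU we use a small number of groups (1-8) in the convolutional layers: CSPResNeXt50 / CSPDarknet53

• For VPU we use grouped convolution but avoid Squeeze-and-Excitation (SE) blocks. This includes the following models: EfficientNet-lite / MixNet [76] / GhostNet [21] / MobileNetV3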

3.1. Selection of architecture

Our objective is to find the optimal balance among the input network resolution, the convolutional layer number, the parameter number ($filter\_size^2 \times filters \times channels / groups$), and the number of layer outputs (filters). For instance, our numerous studies demonstrate that the CSPResNeXt50 is considerably better compared to CSPDarknet53 in terms of object classification on the ILSVRC2012 (ImageNet) dataset [10]. However, conversely, the CSPDarknet53 is better compared to CSPResNeXt50 in terms of detecting objects on the MS COCO dataset [46].

The next objective is to select additional blocks for increasing the receptive field and the best method of parameter aggregation from different backbone levels for different detector levels: e.g. FPN, PAN, ASFF, BiFPN.

A reference model which is optimal for classification is not always optimal for a detector. In contrast to the classifier, the detector requires the following:

• Higher input network size (resolution) – for detecting multiple small-sized objects

• More layers – for a higher receptive field to cover the increased size of input network

• More parameters – for greater capacity of a model to detect multiple objects of different sizes in a single image

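Our first objective is to find the optimal balance among the input network resolution, the number of convolutional layers, the number of parameters ($filter\_size^2 \times filters \times channels / groups$), and the number of layer outputs ($filters$). For instance, our numerous studies show that CSPResNeXt50 is considerably better than CSPDarknet53 at object classification on the ILSVRC2012 (ImageNet) dataset [10]. Conversely, CSPDarknet53 is better than CSPResNeXt50 at detecting objects on the MS COCO dataset [46].

The second objective is to select additional blocks that increase the receptive field, and the best method of aggregating parameters from different backbone levels for different detector levels, e.g. FPN, PAN, ASFF, BiFPN.

A reference model that is optimal for classification is not always optimal for detection. Compared with a classifier, a detector requires:

• Higher network input size (resolution): to detect many small objects

• More layers: a larger receptive field to cover the larger network input

• More parameters: greater model capacity to detect multiple objects of different sizes in a single image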
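Two of the quantities traded off above can be computed by hand. The sketch below evaluates the parameter formula quoted in this section for a single convolutional layer and tracks how the receptive field grows as layers are stacked, using the usual recurrence (each layer adds `(kernel - 1) * jump`, and the jump is multiplied by the stride). The function names and the example layer configurations are assumptions for illustration; they do not describe the actual CSPDarknet53 layout.

```python
def conv_params(filter_size, filters, channels, groups=1):
    """Weights in one convolutional layer: filter_size^2 * filters * channels / groups.

    Bias and normalization parameters are not counted.
    """
    return filter_size ** 2 * filters * channels // groups


def receptive_field(layers):
    """Receptive field of a stack of layers given as (kernel_size, stride) pairs."""
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump   # growth measured in input pixels
        jump *= stride              # distance between adjacent outputs
    return rf


dense = conv_params(3, filters=64, channels=32)
grouped = conv_params(3, filters=64, channels=32, groups=8)
three_convs = receptive_field([(3, 1), (3, 1), (3, 1)])
with_stride = receptive_field([(3, 2), (3, 1)])
```

Grouped convolution divides the parameter count by the number of groups, and every additional 3 × 3 layer (especially after a strided one) widens the receptive field, which is why both the layer count and the group count appear in the balance the authors describe.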

Hypothetically speaking, we can assume that a model with a larger receptive field size (with a larger number of convolutional layers 3 × 3) and a larger number of parameters should be selected as the backbone. Table 1 shows the information of CSPResNeXt50, CSPDarknet53, and EfficientNet B3. The CSPResNext50 contains only 16 convolutional layers 3 × 3, a 425 × 425 receptive field and 20.6 M parameters, while CSPDarknet53 contains 29 convolutional layers 3 × 3, a 725 × 725 receptive field and 27.6 M parameters. This theoretical justification, together with our numerous experiments, show that CSPDarknet53 neural network is the optimal model of the two as the backbone for a detector.

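Hypothetically, a model with a larger receptive field (more 3 × 3 convolutional layers) and more parameters should be selected as the backbone. Table 1 lists the properties of CSPResNeXt50, CSPDarknet53, and EfficientNet-B3. CSPResNeXt50 contains only 16 3 × 3 convolutional layers, a 425 × 425 receptive field, and 20.6 M parameters, while CSPDarknet53 contains 29 3 × 3 convolutional layers, a 725 × 725 receptive field, and 27.6 M parameters. This theoretical justification, together with our numerous experiments, shows that CSPDarknet53 is the better of the two as the backbone of a detector.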

| Backbone model | Input network resolution | Receptive field size | Parameters | Average size of layer output (WxHxC) | BFLOPs (512x512 network resolution) | FPS (RTX 2070) |
|---|---|---|---|---|---|---|
| CSPResNeXt50 | 512x512 | 425x425 | 20.6M | 1058K | 31 (15.5 FMA) | 62 |
| CSPDarknet53 | 512x512 | 725x725 | 27.6M | 950K | 52 (26.0 FMA) | 66 |
| EfficientNet-B3 (ours) | 512x512 | 1311x1311 | 12.0M | 668K | 11 (5.5 FMA) | 26 |

The influence of the receptive field with different sizes is summarized as follows:

• Up to the object size - allows viewing the entire object

• Up to network size - allows viewing the context around the object

• Exceeding the network size - increases the number of connections between the image point and the final activation

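The influence of receptive fields of different sizes is summarized as follows:

• Up to the object size: the entire object can be seen

• Up to the network size: the context around the object can be seen

• Exceeding the network size: the number of connections between an image point and the final activation increases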

We add the SPP block over the CSPDarknet53, since it significantly increases the receptive field, separates out the most significant context features and causes almost no reduction of the network operation speed. We use PANet as the method of parameter aggregation from different backbone levels for different detector levels, instead of the FPN used in YOLOv3.

Finally, we choose CSPDarknet53 backbone, SPP additional module, PANet path-aggregation neck, and YOLOv3 (anchor based) head as the architecture of YOLOv4.

In the future we plan to expand significantly the content of Bag of Freebies (BoF) for the detector, which theoretically can address some problems and increase the detector accuracy, and sequentially check the influence of each feature in an experimental fashion.

We do not use Cross-GPU Batch Normalization (CGBN or SyncBN) or expensive specialized devices. This allows anyone to reproduce our state-of-the-art outcomes on a conventional graphic processor e.g. GTX 1080Ti or RTX 2080Ti.

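We add the SPP block on top of CSPDarknet53 because it significantly enlarges the receptive field, separates out the most significant context features, and causes almost no reduction in network speed. We use PANet, instead of the FPN used in YOLOv3, to aggregate parameters from different backbone levels for different detector levels.

Finally, we choose the CSPDarknet53 backbone, the SPP additional module, the PANet path-aggregation neck, and the YOLOv3 (anchor based) head as the architecture of YOLOv4.

In the future we plan to expand the Bag of Freebies (BoF) for the detector significantly, which in theory can address some problems and increase detector accuracy, and to check the influence of each feature one by one experimentally.

We do not use Cross-GPU Batch Normalization (CGBN or SyncBN) or expensive specialized devices. This allows anyone to reproduce our results on a conventional GPU such as a GTX 1080Ti or RTX 2080Ti.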

3.2. Selection of BoF and BoS

For improving the object detection training, a CNN usually uses the following:

• Activations: ReLU, leaky-ReLU, parametric-ReLU, ReLU6, SELU, Swish, or Mish

• Bounding box regression loss: MSE, IoU, GIoU, CIoU, DIoU

• Data augmentation: CutOut, MixUp, CutMix

• Regularization method: DropOut, DropPath [36], Spatial DropOut [79], or DropBlock

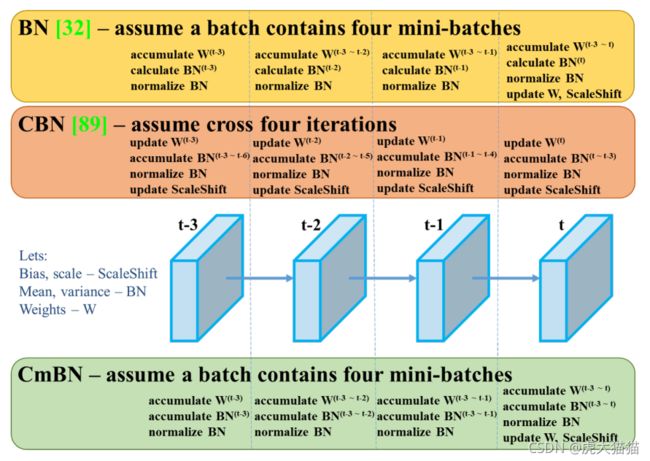

• Normalization of the network activations by their mean and variance: Batch Normalization (BN) [32], Cross-GPU Batch Normalization (CGBN or SyncBN)[93], Filter Response Normalization (FRN) [70], or Cross-Iteration Batch Normalization (CBN) [89]

• Skip-connections: Residual connections, Weighted residual connections, Multi-input weighted residual connections, or Cross stage partial connections (CSP)

为了改进目标检测的训练,CNN通常使用以下方法:

•激活函数:ReLU、leaky-ReLU、parametric-ReLU、ReLU6、SELU、Swish或Mish

•BBox回归损失:MSE、IoU、GIoU、CIoU、DIoU

•数据增广:CutOut、MixUp、CutMix

•正则方法:DropOut、DropPath、Spatial DropOut或DropBlock

•通过网络激活的平均值和方差进行归一化:Batch Normalization (BN) 、Cross-GPU Batch Normalization (CGBN or SyncBN)、Filter Response Normalization (FRN) 或Cross-Iteration Batch Normalization (CBN)

•跳跃连接:残差连接、加权残差连接、多输入加权残差连接或跨阶段部分连接
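The IoU-based regression losses in the list above differ only in the penalty term they subtract from plain IoU. The sketch below follows the published GIoU, DIoU and CIoU definitions for axis-aligned boxes given as `(x1, y1, x2, y2)`; the function names and the small epsilon are illustrative choices, and degenerate boxes with zero area are not handled. The corresponding loss is `1 - metric` in each case.

```python
import math


def _area(b):
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def _terms(a, b):
    """Return (iou, union area, smallest enclosing box)."""
    inter = _area((max(a[0], b[0]), max(a[1], b[1]),
                   min(a[2], b[2]), min(a[3], b[3])))
    union = _area(a) + _area(b) - inter
    hull = (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))
    return inter / union, union, hull


def iou(a, b):
    return _terms(a, b)[0]


def giou(a, b):
    # Penalize the empty part of the enclosing box.
    i, union, hull = _terms(a, b)
    return i - (_area(hull) - union) / _area(hull)


def diou(a, b):
    # Penalize the squared centre distance, normalized by the squared
    # diagonal of the enclosing box.
    i, _, hull = _terms(a, b)
    rho2 = ((a[0] + a[2] - b[0] - b[2]) ** 2 + (a[1] + a[3] - b[1] - b[3]) ** 2) / 4
    c2 = (hull[2] - hull[0]) ** 2 + (hull[3] - hull[1]) ** 2
    return i - rho2 / c2


def ciou(a, b):
    # DIoU plus an aspect-ratio consistency term.
    i = iou(a, b)
    wa, ha = a[2] - a[0], a[3] - a[1]
    wb, hb = b[2] - b[0], b[3] - b[1]
    v = 4 / math.pi ** 2 * (math.atan(wb / hb) - math.atan(wa / ha)) ** 2
    alpha = v / (1 - i + v + 1e-9)
    return diou(a, b) - alpha * v


pred, gt = (0, 0, 2, 2), (1, 1, 3, 3)
losses = {name: 1 - f(pred, gt) for name, f in
          [("IoU", iou), ("GIoU", giou), ("DIoU", diou), ("CIoU", ciou)]}
```

Unlike plain IoU, the GIoU, DIoU and CIoU variants still change when the two boxes do not overlap, so they provide a training signal in that case.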

As for training activation function, since PReLU and SELU are more difficult to train, and ReLU6 is specifically designed for quantization network, we therefore remove the above activation functions from the candidate list. In the method of regularization, the people who published DropBlock have compared their method with other methods in detail, and their regularization method has won a lot. Therefore, we did not hesitate to choose DropBlock as our regularization method. As for the selection of normalization method, since we focus on a training strategy that uses only one GPU, syncBN is not considered.

对于激活函数,由于PReLU和SELU更难训练,而ReLU6是专门为量化网络设计的,因此我们将这些激活函数从候选列表中删除。在正则化方面,提出DropBlock的学者将他们的方法和其他方法进行了详细对比,DropBlock大幅胜出。因此,我们毫不犹豫地选择DropBlock作为正则化方法。在归一化方法的选择上,由于我们只关注使用单GPU的训练策略,因此不考虑syncBN。
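For reference, the activations that remain on the candidate list are short to write down. The sketch below uses the standard definitions (Mish is `x * tanh(softplus(x))`, Swish is `x * sigmoid(x)`); the leaky-ReLU slope of 0.1 is an assumed value for illustration, not something stated in this section.

```python
import math


def softplus(x):
    # Numerically stable ln(1 + e^x).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x):
    return x * math.tanh(softplus(x))


def swish(x):
    # Sigmoid written as 0.5 * (1 + tanh(x / 2)) to avoid overflow.
    return x * 0.5 * (1.0 + math.tanh(0.5 * x))


def leaky_relu(x, slope=0.1):
    return x if x > 0 else slope * x


for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"x={x:+.1f}  leaky={leaky_relu(x):+.4f}  "
          f"swish={swish(x):+.4f}  mish={mish(x):+.4f}")
```

Mish and Swish are both smooth and slightly non-monotonic below zero, whereas leaky-ReLU has a kink at the origin.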

3.3. Additional improvements

In order to make the designed detector more suitable for training on single GPU, we made additional design and improvement as follows:

• We introduce a new method of data augmentation Mosaic, and Self-Adversarial Training (SAT)

• We select optimal hyper-parameters while applying genetic algorithms

• We modify some existing methods to make our design suitable for efficient training and detection - modified SAM, modified PAN, and Cross mini-Batch Normalization (CmBN)

为了让所设计的检测器更适合在单GPU上训练,我们做了如下额外的设计和改进:

•我们引入了全新的数据增广方法Mosaic,以及自对抗训练SAT(Self-Adversarial Training)

•我们利用遗传算法选择最优超参数

•我们修改了一些现有方法,使我们的设计适合高效的训练和检测:改进的SAM、改进的PAN和Cross mini-Batch Normalization(CmBN)
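The section only says that hyper-parameters are chosen with genetic algorithms, without giving details. The sketch below is therefore a generic illustration and not the authors' procedure: the search space, the mutation scheme, and `toy_fitness` (a stand-in for a real train-and-validate run) are all hypothetical.

```python
import math
import random

# Hypothetical search space: (low, high) bounds per hyper-parameter.
SPACE = {"lr": (1e-4, 1e-1), "momentum": (0.5, 0.99)}


def toy_fitness(h):
    """Stand-in for validation accuracy; the optimum (lr=0.01,
    momentum=0.9) is made up for the example."""
    return -((math.log10(h["lr"]) + 2) ** 2) - 10 * (h["momentum"] - 0.9) ** 2


def mutate(h, rng, scale=0.2):
    """Perturb every value by up to +/-20% and clamp to the bounds."""
    child = {}
    for key, value in h.items():
        lo, hi = SPACE[key]
        child[key] = min(hi, max(lo, value * (1 + rng.uniform(-scale, scale))))
    return child


def evolve(fitness, generations=20, pop_size=8, seed=0):
    rng = random.Random(seed)
    pop = [{k: rng.uniform(lo, hi) for k, (lo, hi) in SPACE.items()}
           for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        history.append(fitness(pop[0]))
        parents = pop[: pop_size // 2]  # elitism: the best half survives
        children = [mutate(rng.choice(parents), rng)
                    for _ in range(pop_size - len(parents))]
        pop = parents + children
    return max(pop, key=fitness), history


best, history = evolve(toy_fitness)
```

Because the best half of each generation is carried over unchanged, the best fitness can never get worse from one generation to the next.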

Mosaic represents a new data augmentation method that mixes 4 training images. Thus 4 different contexts are mixed, while CutMix mixes only 2 input images. This allows detection of objects outside their normal context. In addition, batch normalization calculates activation statistics from 4 different images on each layer. This significantly reduces the need for a large mini-batch size.

Mosaic是一种全新的数据增广方法,它将四张训练图像混合在一起。这样四种不同的上下文被混合,而CutMix只混合两张输入图像。这使得模型能够检测处于非常规上下文中的目标。另外,BN在每一层上都会从四张不同的图像中计算激活统计量,这显著地减少了对大mini-batch尺寸的需求。
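A stripped-down version of the Mosaic idea: pick a random centre point, fill the four resulting quadrants from four different images, and shift and clip each image's boxes into its quadrant. This is a simplified sketch under stated assumptions. Images are single-channel nested lists at least as large as the output, each quadrant is filled with the top-left crop of its image, and the function name and signature are hypothetical; real implementations also rescale, jitter and filter tiny boxes.

```python
import random


def mosaic(images, boxes_per_image, out_size, rng=random):
    """Combine four images and their (x1, y1, x2, y2) boxes into one sample."""
    cx = rng.randint(out_size // 4, 3 * out_size // 4)
    cy = rng.randint(out_size // 4, 3 * out_size // 4)
    # Quadrants as (x0, y0, x1, y1): top-left, top-right, bottom-left, bottom-right.
    regions = [(0, 0, cx, cy), (cx, 0, out_size, cy),
               (0, cy, cx, out_size), (cx, cy, out_size, out_size)]
    canvas = [[0] * out_size for _ in range(out_size)]
    out_boxes = []
    for img, boxes, (x0, y0, x1, y1) in zip(images, boxes_per_image, regions):
        # Copy the top-left crop of this image into its quadrant.
        for y in range(y0, y1):
            for x in range(x0, x1):
                canvas[y][x] = img[y - y0][x - x0]
        # Shift boxes into canvas coordinates and clip them to the quadrant.
        for bx1, by1, bx2, by2 in boxes:
            nx1, ny1 = max(bx1 + x0, x0), max(by1 + y0, y0)
            nx2, ny2 = min(bx2 + x0, x1), min(by2 + y0, y1)
            if nx2 > nx1 and ny2 > ny1:  # drop boxes cropped away entirely
                out_boxes.append((nx1, ny1, nx2, ny2))
    return canvas, out_boxes


# Four constant-valued 8x8 "images", each with one box in its top-left corner.
images = [[[v] * 8 for _ in range(8)] for v in (1, 2, 3, 4)]
boxes = [[(0, 0, 2, 2)] for _ in range(4)]
canvas, merged_boxes = mosaic(images, boxes, out_size=8, rng=random.Random(0))
```

Every mosaic sample carries pixels and labels from four source images, which is why the batch-normalization statistics see more variety per sample.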

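Self-Adversarial Training (SAT) also represents a new data augmentation technique that operates in 2 forward backward stages. In the 1st stage the neural network alters the original image instead of the network weights. In this way the neural network executes an adversarial attack on itself, altering the original image to create the deception that there is no desired object on the image. In the 2nd stage, the neural network is trained to detect an object on this modified image in the normal way.

自对抗训练(Self-Adversarial Training)也是一种全新的数据增广技术,分前向和反向传播两个阶段进行。在第一阶段,神经网络改变原始图像而不是网络权重。这样神经网络就对自身进行了一次对抗攻击,通过修改原始图像制造出图像上没有期望目标的假象。在第二阶段,训练神经网络以正常的方式检测出修改过的图像上的目标。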
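The two stages can be illustrated with a toy model. The text does not say how the image is altered, so this sketch makes several assumptions that are not from the paper: the "detector" is a single logistic objectness score over three pixels, stage 1 uses one FGSM-style signed-gradient step of size `eps`, and stage 2 is one plain gradient step on the weights. All names are hypothetical.

```python
import math


def score(w, b, x):
    """Toy objectness score: sigmoid(w . x + b)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sat_stage1(w, b, x, eps=0.1):
    """Stage 1: weights stay frozen, the image is changed.

    Step the pixels so that the loss toward the target "no object"
    (-log(1 - p)) decreases, i.e. the object is hidden.
    """
    p = score(w, b, x)
    grad_x = [p * wi for wi in w]  # gradient of -log(1 - p) w.r.t. x
    sign = lambda g: (g > 0) - (g < 0)
    return [min(1.0, max(0.0, xi - eps * sign(g))) for xi, g in zip(x, grad_x)]


def sat_stage2(w, b, x_adv, lr=0.5):
    """Stage 2: ordinary training step on the altered image, true label 1."""
    g = score(w, b, x_adv) - 1.0  # gradient of -log(p) w.r.t. the logit
    return [wi - lr * g * xi for wi, xi in zip(w, x_adv)], b - lr * g


w, b = [0.5, -0.3, 0.8], 0.0
x = [0.5, 0.5, 0.5]
x_adv = sat_stage1(w, b, x)        # image now looks less like an object
w_new, b_new = sat_stage2(w, b, x_adv)
```

After stage 1 the score on the altered image is lower than on the original; after stage 2 the updated weights score the altered image higher again, which is the intended training effect.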

CmBN represents a CBN modified version, as shown in Figure 4, defined as Cross mini-Batch Normalization (CmBN). This collects statistics only between mini-batches within a single batch.

CmBN是CBN的改进版本,如图4所示,定义为Cross mini-Batch Normalization(CmBN)。该方法只在单个batch内的各个mini-batch之间收集统计量。
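One way to read "collects statistics only between mini-batches within a single batch" is sketched below: running sums are accumulated across the mini-batches of the current batch and reset when the next batch starts. This is a simplified interpretation for a single scalar feature, not the Darknet implementation; the class and method names are hypothetical, and the learned scale and shift of batch normalization are omitted.

```python
import math


class CrossMiniBatchStats:
    """Accumulate mean and variance over the mini-batches of one batch."""

    def __init__(self, eps=1e-5):
        self.eps = eps
        self.start_batch()

    def start_batch(self):
        # Statistics never cross a batch boundary.
        self.count, self.total, self.total_sq = 0, 0.0, 0.0
        self.mean, self.var = 0.0, 0.0

    def normalize(self, mini_batch):
        # Fold this mini-batch into the sums gathered so far in the batch.
        self.count += len(mini_batch)
        self.total += sum(mini_batch)
        self.total_sq += sum(v * v for v in mini_batch)
        self.mean = self.total / self.count
        self.var = max(0.0, self.total_sq / self.count - self.mean ** 2)
        scale = math.sqrt(self.var + self.eps)
        return [(v - self.mean) / scale for v in mini_batch]


stats = CrossMiniBatchStats()
stats.start_batch()
first = stats.normalize([1.0, 2.0])    # uses statistics of 2 samples
second = stats.normalize([3.0, 4.0])   # uses statistics of all 4 samples
```

With this scheme the later mini-batches of a batch are normalized with statistics from more samples than they contain themselves, which helps when each mini-batch is small.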

3.4. YOLOv4

In this section, we shall elaborate the details of YOLOv4.

YOLOv4 consists of:

• Backbone: CSPDarknet53 [81]

• Neck: SPP [25], PAN [49]

• Head: YOLOv3 [63]

YOLOv4 uses:

• Bag of Freebies (BoF) for backbone: CutMix and Mosaic data augmentation, DropBlock regularization, Class label smoothing

• Bag of Specials (BoS) for backbone: Mish activation, Cross-stage partial connections (CSP), Multi-input weighted residual connections (MiWRC)

• Bag of Freebies (BoF) for detector: CIoU-loss, CmBN, DropBlock regularization, Mosaic data augmentation, Self-Adversarial Training, Eliminate grid sensitivity, Using multiple anchors for a single ground truth, Cosine annealing scheduler [52], Optimal hyperparameters, Random training shapes

• Bag of Specials (BoS) for detector: Mish activation, SPP-block, SAM-block, PAN path-aggregation block, DIoU-NMS
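Two of the freebies listed above, class label smoothing and the cosine annealing scheduler, are simple enough to state as formulas in code. The sketch uses the standard textbook forms; the smoothing factor 0.1, the learning-rate range, and the function names are assumed example values, not settings reported in this section.

```python
import math


def smooth_labels(one_hot, eps=0.1):
    """Class label smoothing: move eps of the probability mass from the
    true class to a uniform distribution over all K classes."""
    k = len(one_hot)
    return [y * (1.0 - eps) + eps / k for y in one_hot]


def cosine_annealing_lr(step, total_steps, lr_max, lr_min=0.0):
    """Learning rate that decays from lr_max to lr_min along half a cosine."""
    cos = math.cos(math.pi * step / total_steps)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + cos)


targets = smooth_labels([0.0, 1.0, 0.0])
schedule = [cosine_annealing_lr(s, 100, lr_max=0.01) for s in range(101)]
```

Both techniques only change how the network is trained, so they add nothing to the inference cost, which is what makes them "freebies".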

在本节中,我们将详细介绍YOLOv4。

YOLOv4的组成:

• Backbone主干网络: CSPDarknet53 [81]

• Neck脖子: SPP [25], PAN [49]

• Head检测头: YOLOv3 [63]

YOLOv4使用了:

• 主干网络BoF: CutMix和Mosaic数据增广、DropBlock正则化, 类别标签平滑

• 主干网络BoS: Mish激活函数、CSP、多输入加权残差连接(MiWRC)

• 检测器BoF: CIoU-loss, CmBN, DropBlock正则化、Mosaic数据增广、SAT自对抗训练、grid敏感性消除、单GT多锚点、余弦退火 [52]、最优超参数、随机训练尺寸

• 检测器BoS: Mish激活函数、SPP块、SAM块、PAN路径聚合块, DIoU非极大抑制
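DIoU-NMS, the last item in the detector's Bag of Specials, is greedy non-maximum suppression with DIoU in place of IoU as the suppression criterion. The sketch below follows that published idea for boxes given as `(x1, y1, x2, y2)`; the threshold of 0.5 and the function names are illustrative assumptions.

```python
def diou(a, b):
    """IoU minus the normalized squared distance between box centres."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    rho2 = ((a[0] + a[2] - b[0] - b[2]) ** 2 + (a[1] + a[3] - b[1] - b[3]) ** 2) / 4
    c2 = ((max(a[2], b[2]) - min(a[0], b[0])) ** 2
          + (max(a[3], b[3]) - min(a[1], b[1])) ** 2)
    return inter / union - rho2 / c2


def diou_nms(boxes, scores, threshold=0.5):
    """Return the indices of the boxes that survive, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep box i only if no already-kept box suppresses it.
        if all(diou(boxes[i], boxes[j]) < threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
kept = diou_nms(boxes, scores)
```

Because the centre-distance term lowers the score of boxes whose centres are far apart, two overlapping boxes that belong to different nearby objects are less likely to suppress each other than under plain IoU.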
