PyTorch Deep Learning Practice: Recurrent Neural Networks (Advanced) 0115

Bilibili, 刘二大人: Recurrent Neural Networks (Advanced)

Contents

1. Name classification data

2. Bi-directional recurrent neural networks

3. What pack_padded_sequence does

4. Implementing name classification in code

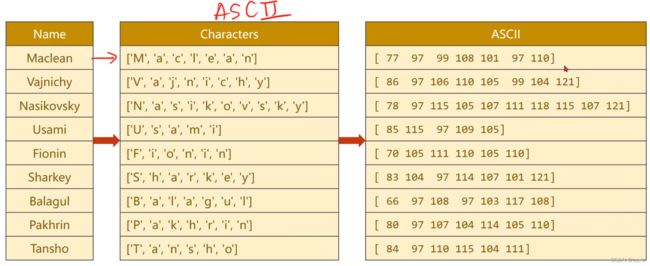

1. Name classification data

Data preparation (each name is converted into a list of ASCII codes):
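Below is a minimal sketch of the conversion step. It mirrors `name2list` in the full code, replacing each character with its `ord()` code. The embedding layer is built with `N_CHARS = 128` rows, so every code has to be below 128. The name "Maclean" is only an illustrative input.

```python
N_CHARS = 128  # same value as in the full code: size of the ASCII vocabulary


def name2list(name):
    """Return the character codes of `name` and the number of characters."""
    codes = [ord(c) for c in name]
    return codes, len(codes)


codes, length = name2list("Maclean")
print(codes, length)  # [77, 97, 99, 108, 101, 97, 110] 7

# every code must be a valid row index of an Embedding with N_CHARS rows
assert all(code < N_CHARS for code in codes)
```

A name containing a non-ASCII character, such as an accented letter, would produce a code of 128 or more, and the embedding lookup would then fail. This sketch therefore assumes ASCII-only names.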

Zero padding (shorter names are padded with 0 up to the longest length in the batch):
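Zero padding turns the variable-length code lists into a rectangular batch of shape batch_size x max_len. The full code does this with `torch.zeros` and then fills each row from the left. The plain-Python helper `pad_sequences` below is hypothetical and only illustrates the idea. Code 0 is the ASCII NUL character, so the padding never collides with a real letter.

```python
def pad_sequences(sequences, pad_value=0):
    """Right-pad every list with `pad_value` up to the length of the longest one."""
    max_len = max(len(seq) for seq in sequences)
    return [seq + [pad_value] * (max_len - len(seq)) for seq in sequences]


names = ["Maclean", "Lee", "Ng"]
codes = [[ord(c) for c in name] for name in names]
padded = pad_sequences(codes)
for row in padded:
    print(row)
# [77, 97, 99, 108, 101, 97, 110]
# [76, 101, 101, 0, 0, 0, 0]
# [78, 103, 0, 0, 0, 0, 0]
```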

Country mapping (each country name is mapped to an integer index):
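The mapping follows the same steps as `getCountryDict` in `NameDataset`. First, collect the unique country names and sort them so the order is fixed. Then number them and replace each label by its number. The sample labels below are made up for illustration.

```python
countries = ["Scottish", "Chinese", "English", "Chinese"]  # hypothetical sample labels

country_list = sorted(set(countries))  # unique names in a fixed (alphabetical) order
country_dict = {name: idx for idx, name in enumerate(country_list)}
labels = [country_dict[c] for c in countries]

print(country_list)             # ['Chinese', 'English', 'Scottish']
print(labels)                   # [2, 0, 1, 0]
print(country_list[labels[0]])  # Scottish  (idx2country is just a list lookup)
```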

2. Bi-directional recurrent neural networks

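The sequence is processed once in the forward direction and once more in the reverse direction. The final hidden states of the two directions are then concatenated.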
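Here is a small shape check for a two-layer bidirectional `torch.nn.GRU`, with arbitrary sizes chosen for illustration. It shows three things:

- The final hidden state `h_n` holds `n_layers * 2` entries.
- For the last layer, `h_n[-2]` is the forward state after the last time step, and `h_n[-1]` is the backward state after walking back to the first time step.
- Concatenating these two gives the `2 * hidden_size` vector that the classifier's `fc` layer expects.

```python
import torch

torch.manual_seed(0)
seq_len, batch_size, hidden_size, n_layers = 6, 4, 8, 2  # illustrative sizes

gru = torch.nn.GRU(hidden_size, hidden_size, n_layers, bidirectional=True)
x = torch.randn(seq_len, batch_size, hidden_size)       # (S, B, input_size), seq-first
h0 = torch.zeros(n_layers * 2, batch_size, hidden_size)

output, h_n = gru(x, h0)
print(output.shape)  # torch.Size([6, 4, 16]): forward and backward outputs side by side
print(h_n.shape)     # torch.Size([4, 4, 8]): (n_layers * n_directions, B, H)

# last layer: forward state = output at the last step, backward state = output at step 0
assert torch.allclose(output[-1, :, :hidden_size], h_n[-2])
assert torch.allclose(output[0, :, hidden_size:], h_n[-1])

hidden_cat = torch.cat([h_n[-1], h_n[-2]], dim=1)  # (B, 2H), input of the Linear layer
print(hidden_cat.shape)  # torch.Size([4, 16])
```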

3. What pack_padded_sequence does

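Packing the sequences without sorting them first does not work (by default, pack_padded_sequence expects the lengths in decreasing order):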
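The following sketch shows the sorting requirement directly. With the default `enforce_sorted=True`, unsorted lengths make `pack_padded_sequence` raise a `RuntimeError`. Passing `enforce_sorted=False` makes the function sort internally and record the permutation in `sorted_indices`. The toy batch is all zeros, since only the lengths matter here.

```python
import torch
from torch.nn.utils.rnn import pack_padded_sequence

padded = torch.zeros(4, 3, 2)            # (S=4, B=3, feature=2), seq-first like the classifier
unsorted_lengths = torch.tensor([2, 4, 3])

try:
    pack_padded_sequence(padded, unsorted_lengths)  # enforce_sorted=True by default
    failed = False
except RuntimeError as err:
    failed = True
    print("RuntimeError:", err)  # lengths must be sorted in decreasing order

packed = pack_padded_sequence(padded, unsorted_lengths, enforce_sorted=False)
print(packed.batch_sizes)     # tensor([3, 3, 2, 1]): sequences still active at each step
print(packed.sorted_indices)  # tensor([1, 2, 0])
print(packed.data.shape)      # torch.Size([9, 2]): 2 + 4 + 3 real steps, no padding
```

The lecture code keeps the default and sorts the batch itself in `make_tensors`. `enforce_sorted=False` is the built-in alternative.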

So first sort the batch by length:
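This mirrors the sorting step inside `make_tensors`. `Tensor.sort(descending=True)` returns the sorted lengths together with `perm_idx`. The same index tensor must then be applied to the padded names and to the labels so that each name keeps its country. The batch below is made up for illustration.

```python
import torch

# hypothetical padded batch (B x S) with its lengths and country labels
seq_tensor = torch.tensor([[76, 101, 101, 0, 0, 0, 0],
                           [77, 97, 99, 108, 101, 97, 110],
                           [78, 103, 0, 0, 0, 0, 0]])
seq_lengths = torch.tensor([3, 7, 2])
countries = torch.tensor([0, 2, 1])

seq_lengths, perm_idx = seq_lengths.sort(dim=0, descending=True)
seq_tensor = seq_tensor[perm_idx]  # rows reordered the same way
countries = countries[perm_idx]    # labels must follow their names

print(seq_lengths)  # tensor([7, 3, 2])
print(perm_idx)     # tensor([1, 0, 2])
print(countries)    # tensor([2, 0, 1])
```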

4. Implementing name classification in code

The code is as follows:

```python
import math
import time
import gzip
import csv

import matplotlib.pyplot as plt
import numpy as np
import torch
from torch.utils.data import Dataset, DataLoader

HIDDEN_SIZE = 100
BATCH_SIZE = 256
N_LAYER = 2
N_EPOCHS = 50
N_CHARS = 128    # size of the character (ASCII) vocabulary, used to build the embedding layer
USE_GPU = False  # set to True to train on cuda:0; seq_lengths is kept on the CPU (see make_tensors)


class NameDataset(Dataset):
    def __init__(self, is_train_set):
        filename = './names_train.csv.gz' if is_train_set else './names_test.csv.gz'
        with gzip.open(filename, 'rt') as f:  # 'r': read-only from the start of the file, 't': text mode
            reader = csv.reader(f)
            rows = list(reader)  # each row: name, country
        self.names = [row[0] for row in rows]
        self.len = len(self.names)
        self.countries = [row[1] for row in rows]
        self.country_list = list(sorted(set(self.countries)))  # unique countries, sorted
        self.country_dict = self.getCountryDict()  # country name -> index
        self.country_num = len(self.country_list)

    def __getitem__(self, index):  # returns the name and the index of its country
        return self.names[index], self.country_dict[self.countries[index]]

    def __len__(self):
        return self.len

    def getCountryDict(self):
        country_dict = dict()
        for idx, country_name in enumerate(self.country_list, 0):
            country_dict[country_name] = idx
        return country_dict

    def idx2country(self, index):
        return self.country_list[index]  # country string for a given index

    def getCountriesNum(self):
        return self.country_num  # number of countries


trainSet = NameDataset(is_train_set=True)
trainLoader = DataLoader(trainSet, batch_size=BATCH_SIZE, shuffle=True)
testSet = NameDataset(is_train_set=False)
testLoader = DataLoader(testSet, batch_size=BATCH_SIZE, shuffle=False)

N_COUNTRY = trainSet.getCountriesNum()


class RNNClassifier(torch.nn.Module):
    def __init__(self, input_size, hidden_size, output_size, n_layers=1, bidirectional=True):
        # bidirectional: True for a bidirectional GRU, False for a unidirectional one
        super(RNNClassifier, self).__init__()
        self.hidden_size = hidden_size
        self.n_layers = n_layers
        self.n_directions = 2 if bidirectional else 1

        # Embedding: (seqLen, batchSize) --> (seqLen, batchSize, hidden_size)
        self.embedding = torch.nn.Embedding(input_size, hidden_size)
        self.gru = torch.nn.GRU(hidden_size, hidden_size, n_layers, bidirectional=bidirectional)
        # the final hidden states of both directions are concatenated: hidden_size * n_directions
        self.fc = torch.nn.Linear(hidden_size * self.n_directions, output_size)

    def _init_hidden(self, batch_size):
        hidden = torch.zeros(self.n_layers * self.n_directions, batch_size, self.hidden_size)
        return create_tensor(hidden)

    def forward(self, input, seq_lengths):
        # input shape: B x S -> S x B
        input = input.t()  # transpose
        batch_size = input.size(1)
        hidden = self._init_hidden(batch_size)
        embedding = self.embedding(input)

        # pack them up: the GRU skips the padded positions, which speeds things up
        # (seq_lengths must be a 1D int64 CPU tensor in decreasing order)
        gru_input = torch.nn.utils.rnn.pack_padded_sequence(embedding, seq_lengths)

        output, hidden = self.gru(gru_input, hidden)
        if self.n_directions == 2:
            # last layer: hidden[-2] is the forward state, hidden[-1] the backward state
            hidden_cat = torch.cat([hidden[-1], hidden[-2]], dim=1)
        else:
            hidden_cat = hidden[-1]
        fc_output = self.fc(hidden_cat)
        return fc_output


def create_tensor(tensor):
    # move the tensor to the GPU when USE_GPU is set
    if USE_GPU:
        device = torch.device("cuda:0")
        tensor = tensor.to(device)
    return tensor


def name2list(name):
    arr = [ord(c) for c in name]  # each character -> its ASCII code
    return arr, len(arr)


def make_tensors(names, countries):
    sequences_and_lengths = [name2list(name) for name in names]  # each name -> (code list, length)
    name_sequences = [sl[0] for sl in sequences_and_lengths]  # the code lists
    seq_lengths = torch.LongTensor([sl[1] for sl in sequences_and_lengths])  # the lengths
    countries = countries.long()  # convert to long (int64)

    # make tensor of name, BatchSize x SeqLen (padding):
    # first create an all-zero tensor, then copy each name's codes into the start of its row
    seq_tensor = torch.zeros(len(name_sequences), seq_lengths.max()).long()
    for idx, (seq, seq_len) in enumerate(zip(name_sequences, seq_lengths), 0):
        seq_tensor[idx, :seq_len] = torch.LongTensor(seq)

    # sort by length to use pack_padded_sequence:
    # sort lengths in decreasing order; perm_idx gives each sorted entry's index in the original tensor
    seq_lengths, perm_idx = seq_lengths.sort(dim=0, descending=True)
    seq_tensor = seq_tensor[perm_idx]
    countries = countries[perm_idx]

    # return the sorted name tensor, the sorted lengths and the sorted country indices;
    # seq_lengths stays on the CPU because pack_padded_sequence requires CPU lengths
    return create_tensor(seq_tensor), \
        seq_lengths, \
        create_tensor(countries)


# model training
def trainModel():
    total_loss = 0
    for i, (names, countries) in enumerate(trainLoader, 1):
        inputs, seq_lengths, target = make_tensors(names, countries)
        output = classifier(inputs, seq_lengths)
        loss = criterion(output, target)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_loss += loss.item()
        print(f'[{time_since(start)}] Epoch {epoch}', end=' ')
        print(f'[{min(i * BATCH_SIZE, len(trainSet))}/{len(trainSet)}]', end=' ')
        # loss.item() is already the mean over a batch, so average over the i batches seen so far
        print(f'loss = {total_loss / i}')
    return total_loss


# model testing
def testModel():
    correct = 0
    total = len(testSet)
    print("evaluating trained model ...")
    with torch.no_grad():
        for i, (names, countries) in enumerate(testLoader, 1):
            inputs, seq_lengths, target = make_tensors(names, countries)
            output = classifier(inputs, seq_lengths)
            pred = output.max(dim=1, keepdim=True)[1]  # index of the highest score
            correct += pred.eq(target.view_as(pred)).sum().item()
        percent = '%.2f' % (100 * correct / total)
        print(f'Test set: Accuracy {correct} / {total} {percent}%', '\n')
    return correct / total


def time_since(since):
    s = time.time() - since
    m = math.floor(s / 60)
    s -= m * 60
    return '%dm %ds' % (m, s)


# main procedure
if __name__ == '__main__':
    # N_CHARS: size of the character vocabulary
    classifier = RNNClassifier(N_CHARS, HIDDEN_SIZE, N_COUNTRY, N_LAYER)
    if USE_GPU:
        device = torch.device("cuda:0")
        classifier.to(device)

    criterion = torch.nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(classifier.parameters(), lr=0.001)

    start = time.time()  # for timing
    print("Training for %d epochs..." % N_EPOCHS)
    acc_list = []
    for epoch in range(1, N_EPOCHS + 1):
        trainModel()
        acc = testModel()
        acc_list.append(acc)

    epochs = np.arange(1, len(acc_list) + 1)
    acc_list = np.array(acc_list)
    plt.plot(epochs, acc_list)
    plt.xlabel('Epoch')
    plt.ylabel('Accuracy')
    plt.grid()
    plt.show()
```

Setting USE_GPU = True raised an error, which I had not solved at the time of writing.
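One likely cause of the GPU error: since PyTorch 1.7, `pack_padded_sequence` requires its `lengths` argument to be a 1D int64 tensor on the CPU. Moving `seq_lengths` to the GPU along with the other tensors therefore makes the call fail. Below is a minimal sketch of the workaround, assuming a recent PyTorch. The data and the GRU live on the chosen device, while the lengths are passed with `.cpu()`. The sketch falls back to the CPU when no GPU is available, and the sizes are arbitrary.

```python
import torch
from torch.nn.utils.rnn import pack_padded_sequence

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

seq_len, batch_size, hidden_size = 5, 3, 8  # illustrative sizes
embedded = torch.randn(seq_len, batch_size, hidden_size, device=device)  # (S, B, H)
seq_lengths = torch.tensor([5, 3, 2], device=device)  # lengths that ended up on the device

# lengths must be a 1D int64 CPU tensor, whatever device the data is on
packed = pack_padded_sequence(embedded, seq_lengths.cpu())

gru = torch.nn.GRU(hidden_size, hidden_size).to(device)
output, hidden = gru(packed)
print(hidden.shape)  # torch.Size([1, 3, 8])
```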

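Results:

The results were not satisfactory: the accuracy differed greatly from the one shown in the lecture.

I then changed the learning rate from 0.001 to 0.01, which clearly improved the results:

All done!

However, there are still some parts I don't fully understand and need to study further.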