Ablation Study: Study Notes

[1] Paper: Entity and Evidence Guided Relation Extraction for DocRED

Kevin Huang†, Guangtao Wang†, Tengyu Ma‡, Jing Huang†

†JD AI Research, ‡Stanford University

Section 4.4 of paper [1] is titled "Ablation Study".

What is an ablation study?

Definition: An ablation study typically refers to removing some "feature" of the model or algorithm, and seeing how that affects performance.

Put simply: you delete one part of the model or algorithm and check how the results change.

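Research papers often propose several algorithms or modules that together form one system. An ablation study is the usual way to find out how much each algorithm or module contributes to that system.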

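For example, suppose a paper's method consists of algorithms A and B, and the method is an improvement on a baseline (BL). The ablation study would then compare experiments such as: ① BL; ② BL+A; ③ BL+B; ④ BL+A+B. Comparing these settings on the metrics chosen for the experiments shows whether each algorithm or module helps and by how much.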
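The comparison scheme described above can be sketched in a few lines of Python. This is only an illustrative sketch, not code from paper [1]: the helper names (`ablation_configs`, `config_name`, `run_ablation`) are hypothetical, and `toy_evaluate` is a stand-in with made-up numbers that takes the place of a real train-and-evaluate run.

```python
from itertools import combinations


def ablation_configs(components):
    """Return every subset of components, from the bare baseline to the full method."""
    configs = []
    for k in range(len(components) + 1):
        for subset in combinations(components, k):
            configs.append(subset)
    return configs


def config_name(subset, baseline="BL"):
    """Build a readable label such as 'BL+A+B' for one configuration."""
    return "+".join((baseline,) + tuple(subset))


def run_ablation(components, evaluate):
    """Score each configuration and pair the score with its gain over the baseline."""
    scores = {config_name(s): evaluate(s) for s in ablation_configs(components)}
    base = scores["BL"]
    return {name: (score, score - base) for name, score in scores.items()}


def toy_evaluate(subset):
    """Hypothetical stand-in for training and evaluating a model (toy numbers only)."""
    toy_gain = {"A": 2.0, "B": 1.0}
    return 50.0 + sum(toy_gain[c] for c in subset)


if __name__ == "__main__":
    for name, (score, delta) in run_ablation(["A", "B"], toy_evaluate).items():
        print(f"{name:8s} score={score:.1f} delta={delta:+.1f}")
```

In a real study, `evaluate` would train the model with only the listed components enabled and return the chosen metric. The number of configurations doubles with each added component, which is why papers often report only a subset of them.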

Example:

Below is Section 4.4 as it appears in paper [1]:

4.4 Ablation Study

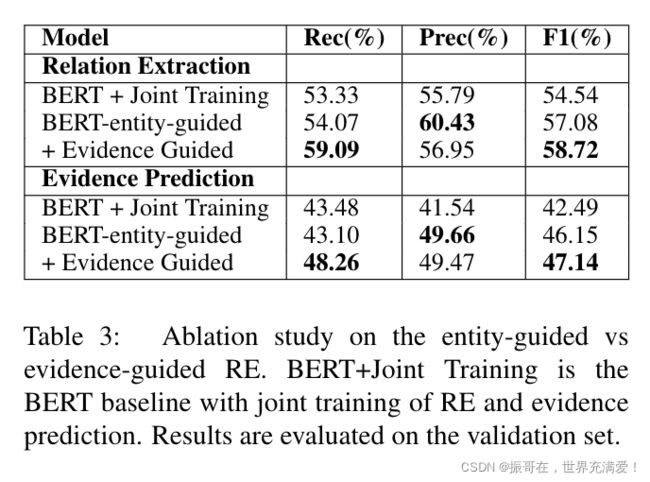

Analysis of Method Components. Table 3 shows the ablation study of our method on the effectiveness of entity-guided and evidence-guided training.

The baseline here is the joint training model of relation extraction and evidence prediction with BERT-base.

The table compares the following settings: BERT + Joint Training (the baseline); BERT-entity-guided; and + Evidence Guided.

We see that the entity-guided BERT improves over this baseline by 2.5%, and evidence-guided training further improves the method by 1.7%. This shows that both parts of our method are important to the overall E2GRE method. Our E2GRE method not only obtains improvement on the relation extraction F1, but it also obtains significant improvement on evidence prediction compared to this baseline. This further shows that our evidence-guided finetuning method is effective.
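As a quick arithmetic check on the gains quoted above, assume that both percentages are absolute F1 points measured on the same evaluation split (an assumption; the quoted passage does not state this). The symbols below are notation introduced in these notes for the three rows of Table 3, not the paper's own.

```latex
\begin{align*}
\mathrm{F1}_{\text{entity}} - \mathrm{F1}_{\text{base}} &= 2.5 \\
\mathrm{F1}_{\text{E2GRE}} - \mathrm{F1}_{\text{entity}} &= 1.7 \\
\mathrm{F1}_{\text{E2GRE}} - \mathrm{F1}_{\text{base}} &= 2.5 + 1.7 = 4.2
\end{align*}
```

Under that reading, the full method sits about 4.2 F1 points above the joint-training baseline, with roughly 60% of the gain coming from the entity-guided step.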

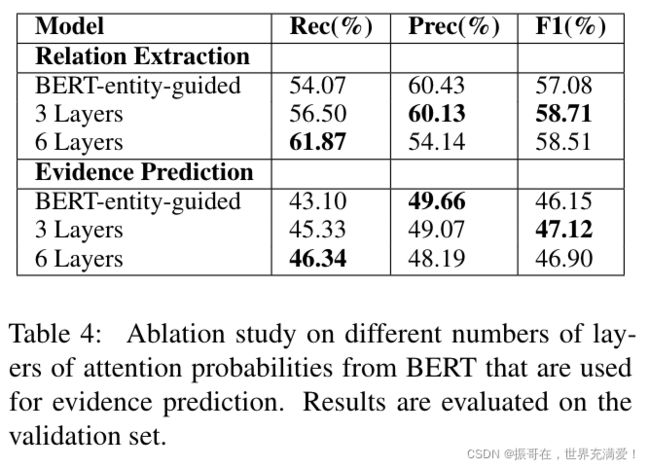

Three settings are compared: BERT-entity-guided, 3 Layers, and 6 Layers.

Analysis of Number of BERT Layers. We also conduct experiments to analyze the impact of the number of BERT layers used for obtaining attention probability values, see the results in Table 4. From this table, we observe that using more layers is not necessarily better for relation extraction. One possible reason may be that the BERT model encodes more syntactic information in the middle layers (Clark et al., 2019).