PyTorch Deep Learning: Linear Models (Part 1)

I. What Are the Steps?

1. Dataset
2. Model
3. Training
4. Inference

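P.S. The model may overfit the training data. Holding out a development (dev) set makes it possible to check how well the model generalizes and to improve it.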
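To make the dev-set idea concrete, here is a minimal sketch of a random hold-out split. The helper name `train_dev_split`, the 20% ratio, the seed, and the toy data are all illustrative assumptions, not part of the original post.

```python
import random

def train_dev_split(samples, dev_ratio=0.2, seed=0):
    """Hypothetical helper: shuffle the samples and hold out a fraction as a dev set."""
    rng = random.Random(seed)          # fixed seed so the split is reproducible
    shuffled = list(samples)           # copy, so the caller's list is not modified
    rng.shuffle(shuffled)
    n_dev = max(1, int(len(shuffled) * dev_ratio))
    return shuffled[n_dev:], shuffled[:n_dev]

# Toy (x, y) pairs following y = 2x (assumed data for illustration)
data = [(float(x), 2.0 * x) for x in range(1, 11)]
train_set, dev_set = train_dev_split(data)
print(len(train_set), len(dev_set))
```

The model is fitted on `train_set` only; the loss on `dev_set` then gives a rough estimate of how it behaves on data it has not seen.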

II. Linear Model Basics

A random guess: exhaustive search

2.1. Evaluate Model Error


2.2. MSE

Mean squared error: the average of the squared errors over all samples.
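Written out, and assuming the single-parameter model used in the code later in this post (prediction = x times w), the per-sample loss and the MSE can be sketched as follows. The symbols N (number of samples) and the index n are notation introduced here.

```latex
\begin{align}
\hat{y}_n &= x_n \cdot w \\
\text{loss}_n &= (\hat{y}_n - y_n)^2 = (x_n \cdot w - y_n)^2 \\
\text{MSE} &= \frac{1}{N} \sum_{n=1}^{N} (\hat{y}_n - y_n)^2
\end{align}
```

For instance, with the data used later in this post (x = 1, 2, 3 and y = 2, 4, 6) and w = 1, the per-sample losses are 1, 4, and 9, so the MSE is 14/3 ≈ 4.67.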

III. Code Walkthrough (Exhaustive Search)

1. f(x) = w * x

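    import numpy as np
    import matplotlib.pyplot as plt

    # Training data generated by y = 2x
    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    def forward(x):
        # Linear model; w is the global variable set by the search loop below
        return x * w

    def loss(x, y):
        # Squared error for a single sample
        y_pred = forward(x)
        return (y_pred - y) * (y_pred - y)

    w_list = []
    mse_list = []

    # Exhaustive search: try every w from 0.0 to 4.0 in steps of 0.1
    for w in np.arange(0.0, 4.1, 0.1):
        print("w=", w)
        l_sum = 0
        for x_val, y_val in zip(x_data, y_data):
            y_pred_val = forward(x_val)
            loss_val = loss(x_val, y_val)
            l_sum += loss_val
            print('\t', x_val, y_val, y_pred_val, loss_val)
        # Average the summed squared errors over the samples
        print('MSE=', l_sum / len(x_data))
        w_list.append(w)
        mse_list.append(l_sum / len(x_data))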

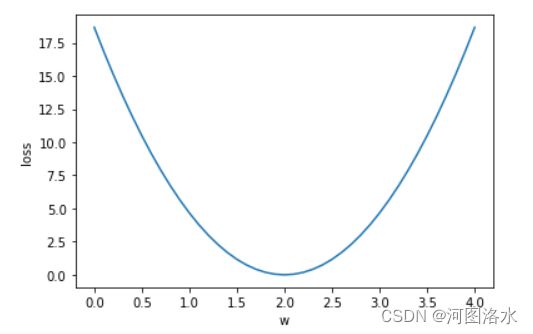

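    # Plot the MSE as a function of w
    plt.plot(w_list, mse_list)
    plt.ylabel('Loss')
    plt.xlabel('w')
    plt.show()

Result: running this plots the MSE against w, a parabola whose minimum lies at w = 2.0.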
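The loop above records the MSE for every candidate w but leaves reading off the best value to the plot. As a minimal sketch, the best candidate can also be picked programmatically. The function name `mse` and the variable `candidates` are illustrative choices, and the weights are built from integers rather than `np.arange` to avoid floating-point drift.

```python
# Same toy data as above (y = 2x)
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

def mse(w):
    """Mean squared error of the model y_pred = x * w on the toy data."""
    return sum((x * w - y) ** 2 for x, y in zip(x_data, y_data)) / len(x_data)

# Candidate weights 0.0, 0.1, ..., 4.0
candidates = [i / 10 for i in range(41)]

# Exhaustive search: keep the candidate with the smallest MSE
best_w = min(candidates, key=mse)
print("best w =", best_w, "MSE =", mse(best_w))
```

Exhaustive search only works here because there is a single parameter and a small range; the number of candidates grows quickly as parameters are added.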

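P.S. How zip() works

    >>> a = [1, 2, 3]
    >>> b = [4, 5, 6]
    >>> c = [4, 5, 6, 7, 8]
    >>> zipped = list(zip(a, b))  # zip() is lazy in Python 3; list() packs the pairs into a list of tuples
    >>> zipped
    [(1, 4), (2, 5), (3, 6)]
    >>> list(zip(a, c))  # the result is as long as the shortest input
    [(1, 4), (2, 5), (3, 6)]
    >>> list(zip(*zipped))  # the inverse of zip: *zipped unpacks the pairs, giving back the original rows
    [(1, 2, 3), (4, 5, 6)]

2. f(x) = w * x + b (two parameters)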

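    import numpy as np
    import matplotlib.pyplot as plt
    from mpl_toolkits.mplot3d import Axes3D  # enables 3D axes (required on older Matplotlib)

    # Data generated by y = 4x + 2
    x_data = [1.0, 2.0, 3.0]
    y_data = [6.0, 10.0, 14.0]

    def forward(x):
        # Linear model with two parameters; w and b are globals defined further down
        return x * w + b

    def loss(x, y):
        # Squared error for a single sample
        y_pred = forward(x)
        return (y_pred - y) * (y_pred - y)

    mse_list = []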

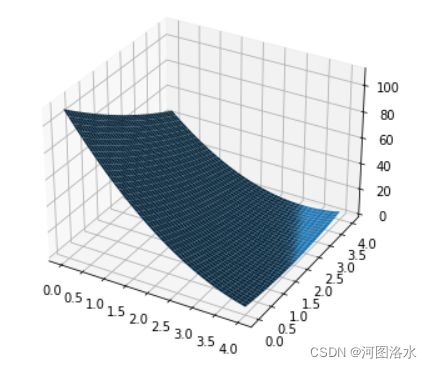

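    # Candidate values for w and b: 0.0 to 4.0 in steps of 0.1
    W = np.arange(0.0, 4.1, 0.1)
    B = np.arange(0.0, 4.1, 0.1)
    # w and b become 2-D grids, so forward() evaluates every (w, b) pair at once
    w, b = np.meshgrid(W, B)

    # l_sum ends up as a 2-D array: the summed squared error for each (w, b) pair
    l_sum = 0
    for x_val, y_val in zip(x_data, y_data):
        y_pred_val = forward(x_val)
        print(y_pred_val)
        loss_val = loss(x_val, y_val)
        l_sum += loss_val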

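    fig = plt.figure()
    # Axes3D(fig) no longer attaches itself to the figure in recent Matplotlib releases
    ax = fig.add_subplot(projection='3d')
    # Plot the MSE (summed error divided by the 3 samples) over the (w, b) grid
    ax.plot_surface(w, b, l_sum / 3)
    plt.show()

Result: running this draws the MSE as a surface over the (w, b) grid.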
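The surface shows where the loss is low, but the code does not report the best pair. A minimal sketch of the same two-parameter exhaustive search, written with plain loops over the grid instead of `np.meshgrid`, could look like this. The names `mse` and `grid` are illustrative assumptions.

```python
from itertools import product

# Same toy data as above (y = 4x + 2)
x_data = [1.0, 2.0, 3.0]
y_data = [6.0, 10.0, 14.0]

def mse(w, b):
    """Mean squared error of the model y_pred = x * w + b on the toy data."""
    return sum((x * w + b - y) ** 2 for x, y in zip(x_data, y_data)) / len(x_data)

# Candidate values 0.0, 0.1, ..., 4.0 for both w and b
grid = [i / 10 for i in range(41)]

# Try all 41 * 41 combinations and keep the pair with the smallest MSE
best_w, best_b = min(product(grid, grid), key=lambda wb: mse(*wb))
print("best w =", best_w, "best b =", best_b, "MSE =", mse(best_w, best_b))
```

Note that the true w = 4 sits on the very edge of the searched range; had the range stopped earlier, the search would have returned the best point inside the grid rather than the true parameters.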