【ROS】利用ROS将KITTI数据集点云数据投影到2D图像

课题涉及到感知融合,首先需要将点云投影到图像上,本文利用ROS实现投影。分为两个ROS节点,一个节点负责不断读取点云原始bin文件,并以 sensor_msgs::PointCloud2 的消息格式发布话题;另一个节点对接收到的点云消息进行处理,把点云投影到相机图像上。

目录

- 笔者环境

- 一、创建ROS工作空间

-

- 1. 创建工作空间目录

- 2. 编译工作空间

- 3. 设置环境变量

- 二、创建投影功能包

-

- 1. 统一格式

- 2. 创建点云投影功能包

- 3. 编写节点cpp文件

- 三、创建launch功能包

-

- 1. 创建launch包

- 2. 创建 run.launch 文件

- 3. 编写 run.launch 文件

- 四、在project功能包添加其他依赖

-

- 1. 修改project目录下的CMakeLists.txt

- 2. 修改project目录下的package.xml

- 五、编译project功能包

- 六、投影效果展示

笔者环境

Ubuntu18.04+ ROS Melodic + Opencv 3.2.0 + Eigen 3.3.7 + PCL 1.8.0

测试数据集是Kitti的raw_data的2011_09_26(如果使用其他天的数据,需要在lidtocamp.cpp中修改标定数据)

一、创建ROS工作空间

1. 创建工作空间目录

这步比较基础,目的是在src文件中创建了一个 CMakeLists.txt 的文件,告诉系统这个是ROS的工作空间

#创建catkin_ws和src目录

mkdir -p ~/catkin_ws/src

#进入src目录下,并进行文件夹初始化

cd ~/catkin_ws/src

catkin_init_workspace

2. 编译工作空间

在catkin_ws文件夹下进行编译:

cd ~/catkin_ws/

catkin_make

编译完成后,catkin_ws中新增build 和 devel

3. 设置环境变量

安装ROS的时候是将整个ros系统的环境变量设置到bash脚本中,现在我们需要把我们工作空间的环境变量设置到bash中。

#打开系统变量文件

gedit ~/.bashrc

#在文件末加入

source ~/catkin_ws/devel/setup.bash

#更新变量文件

source ~/.bashrc

如果想查看ros的环境变量: echo $ROS_PACKAGE_PATH

终端输出:/home/wdd/catkin_ws/src : /opt/ros/kinetic/share

第一个为我们刚创建工作空间的,第二个是ROS系统的

二、创建投影功能包

1. 统一格式

在src目录下创建

catkin_create_pkg package_name depend1 depend2 depend2

package_name:功能包名称

depend:依赖项

2. 创建点云投影功能包

在src目录下使用 catkin_create_pkg 创建一个 package 包并添加 roscpp 和 std_msgs 依赖项

catkin_create_pkg Lid_project_cam roscpp std_msgs

std_msgs:包含常见消息类型

roscpp:使用C++实现ROS各种功能

(如果使用python实现ROS各种功能,添加rospy)

3. 编写节点cpp文件

在功能包目录的src文件夹下创建节点文件:

cd Lid_project_cam/src

#发布节点

touch laserhandle.cpp

#接受节点

touch lidtocamp.cpp

感谢这位博主提供的代码:https://blog.csdn.net/qq_33287871/article/details/107587233

laserhandle.cpp:

#include lidtocamp.cpp:

#include 三、创建launch功能包

1. 创建launch包

在catkin_ws/src下用 catkin_create_pkg 创建 launch 包,不添加任何依赖项

catkin_create_pkg launch

2. 创建 run.launch 文件

进入 ~/catkin_ws/src/launch 目录,创建 run.launch 文件

cd launch

touch run.launch

3. 编写 run.launch 文件

分为两个ROS节点,一个节点负责不断读取点云原始bin文件,并以 sensor_msgs::PointCloud2 的消息格式发布话题;另一个节点对接收到的点云消息进行处理,把点云投影到相机图像上。

内容如下:

<launch>

<node pkg="Lid_project_cam" name = "laserhandle" type = "laserhandle" output="screen" />

<node pkg="Lid_project_cam" name = "lidtocamp" type = "lidtocamp" output="screen" />

</launch>

四、在project功能包添加其他依赖

因为投影过程中要用到PCL,可视化要用到Opencv,所以我们需要加入PCL和Opencv的依赖到投影功能包中

1. 修改project目录下的CMakeLists.txt

(1)修改find_package

find_package(catkin REQUIRED COMPONENTS

roscpp

rospy

std_msgs

sensor_msgs

cv_bridge

image_transport

pcl_conversions

pcl_ros

)

find_package(OpenCV REQUIRED)

(2)修改include_directories

include_directories(

# include

${catkin_INCLUDE_DIRS}

${OpenCV_INCLUDE_DIRS}

)

(3)生成可执行文件并连接到库

add_executable(laserhandle src/laserhandle.cpp)

target_link_libraries(laserhandle ${catkin_LIBRARIES} ${OpenCV_LIBRARIES})

add_executable(lidtocamp src/lidtocamp.cpp)

target_link_libraries(lidtocamp ${catkin_LIBRARIES} ${OpenCV_LIBRARIES})

2. 修改project目录下的package.xml

在package.xml添加:

<build_depend>libpcl-all-dev</build_depend>

<exec_depend>libpcl-all</exec_depend>

五、编译project功能包

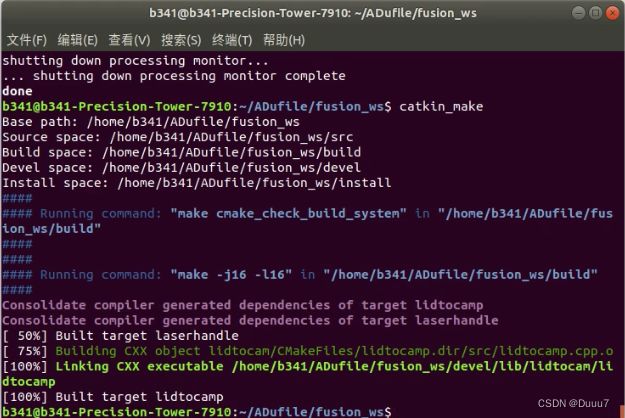

#cd到catkin_ws下,编译整个工程

cd ~/catkin_ws/

catkin_make

显示下图则编译成功,在/catkin_ws/devel/lib/project下有了两个可执行文件:laserhandle和lidtocamp

六、投影效果展示

启动run.launch文件,实现节点收发

roslaunch launch run.launch

投影效果如下图所示:

演示视频:https://www.bilibili.com/video/BV1XL4y1A7zN?spm_id_from=333.999.0.0&vd_source=85578835a77d53d45d756c48a7f801c6

参考致谢:

https://blog.csdn.net/qq_43944331/article/details/117470536

https://blog.csdn.net/EchoChou428/article/details/105257293

https://blog.csdn.net/qq_28306361/article/details/85142192

https://blog.csdn.net/qq_33287871/article/details/107587233